
Content Analysis | Guide, Methods & Examples

Published on July 18, 2019 by Amy Luo. Revised on June 22, 2023.

Content analysis is a research method used to identify patterns in recorded communication. To conduct content analysis, you systematically collect data from a set of texts, which can be written, oral, or visual:

- Books, newspapers and magazines

- Speeches and interviews

- Web content and social media posts

- Photographs and films

Content analysis can be both quantitative (focused on counting and measuring) and qualitative (focused on interpreting and understanding). In both types, you categorize or “code” words, themes, and concepts within the texts and then analyze the results.


What is content analysis used for?

Researchers use content analysis to find out about the purposes, messages, and effects of communication content. They can also make inferences about the producers and audience of the texts they analyze.

Content analysis can be used to quantify the occurrence of certain words, phrases, subjects or concepts in a set of historical or contemporary texts.

Quantitative content analysis example

To research the importance of employment issues in political campaigns, you could analyze campaign speeches for the frequency of terms such as unemployment, jobs, and work, and use statistical analysis to find differences over time or between candidates.
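As a rough illustration of the counting step in this example, the Python sketch below tallies the three terms in a hypothetical mini-corpus and normalizes them per 1,000 words so that speeches of different lengths can be compared. The corpus, the candidate names, and the per-1,000-word rate are assumptions made for the sketch, not part of any real study; a statistical test would follow this descriptive step.

```python
import re
from collections import Counter

# Hypothetical mini-corpus (candidate -> speech transcripts), for illustration only.
SPEECHES = {
    "Candidate A": [
        "We will create jobs. Unemployment is too high and work must pay.",
        "Jobs, jobs, jobs: that is our plan.",
    ],
    "Candidate B": [
        "Our economy is strong. We will protect work and family.",
    ],
}

# Terms of interest from the example above.
TERMS = ("unemployment", "jobs", "work")


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def term_rates(speeches: list[str], terms=TERMS, per: int = 1000) -> dict[str, float]:
    """Return how often each term occurs per `per` words across the given speeches."""
    counts = Counter()
    total_words = 0
    for speech in speeches:
        tokens = tokenize(speech)
        total_words += len(tokens)
        counts.update(t for t in tokens if t in terms)
    if total_words == 0:
        return {term: 0.0 for term in terms}
    # Normalizing by length lets long and short speeches be compared fairly.
    return {term: counts[term] * per / total_words for term in terms}


if __name__ == "__main__":
    for candidate, speeches in SPEECHES.items():
        rates = term_rates(speeches)
        print(candidate, {term: round(rate, 1) for term, rate in rates.items()})
```

Because the matching is exact, "job" and "jobs" are counted separately; deciding whether to merge such variants is itself a coding decision that should be recorded.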

In addition, content analysis can be used to make qualitative inferences by analyzing the meaning and semantic relationship of words and concepts.

Qualitative content analysis example

To gain a more qualitative understanding of employment issues in political campaigns, you could locate the word unemployment in speeches, identify what other words or phrases appear next to it (such as economy, inequality, or laziness), and analyze the meanings of these relationships to better understand the intentions and targets of different campaigns.
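One simple way to find which words appear next to a keyword is to count its collocates within a fixed window of tokens. The sketch below assumes a hypothetical two-sentence corpus, a four-token window, and a small stop-word list; all three are illustrative choices that a real study would need to justify. Counting is only the starting point: interpreting what each pairing means remains a qualitative judgment.

```python
import re
from collections import Counter

# Small, hypothetical stop-word list so function words don't dominate the counts.
STOPWORDS = {"a", "an", "and", "the", "on", "of", "is", "some", "to", "in"}


def collocates(texts, keyword="unemployment", window=4, stopwords=STOPWORDS):
    """Count the words that appear within `window` tokens either side of `keyword`."""
    counts = Counter()
    for text in texts:
        tokens = re.findall(r"[a-z]+", text.lower())
        for i, tok in enumerate(tokens):
            if tok != keyword:
                continue
            left = tokens[max(0, i - window):i]
            right = tokens[i + 1:i + 1 + window]
            counts.update(w for w in left + right if w not in stopwords)
    return counts


# Hypothetical speech excerpts.
speeches = [
    "High unemployment hurts the economy and deepens inequality.",
    "Some blame unemployment on laziness.",
]
print(collocates(speeches).most_common())
```

With the default window, "inequality" falls just outside the range in the first sentence; widening the window to six tokens picks it up, which shows how strongly the window size shapes the results.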

Because content analysis can be applied to a broad range of texts, it is used in a variety of fields, including marketing, media studies, anthropology, cognitive science, psychology, and many social science disciplines. It has various possible goals:

- Finding correlations and patterns in how concepts are communicated

- Understanding the intentions of an individual, group or institution

- Identifying propaganda and bias in communication

- Revealing differences in communication in different contexts

- Analyzing the consequences of communication content, such as the flow of information or audience responses

Advantages of content analysis

- Unobtrusive data collection

You can analyze communication and social interaction without the direct involvement of participants, so your presence as a researcher doesn’t influence the results.

- Transparent and replicable

When done well, content analysis follows a systematic procedure that can easily be replicated by other researchers, yielding results with high reliability.

- Highly flexible

You can conduct content analysis at any time, in any location, and at low cost – all you need is access to the appropriate sources.

Disadvantages of content analysis

- Can be reductive

Focusing on words or phrases in isolation can sometimes be overly reductive, disregarding context, nuance, and ambiguous meanings.

- May be subjective

Content analysis almost always involves some level of subjective interpretation, which can affect the reliability and validity of the results and conclusions, leading to various types of research bias and cognitive bias.

- Time intensive

Manually coding large volumes of text is extremely time-consuming, and it can be difficult to automate effectively.

How to conduct content analysis

If you want to use content analysis in your research, you need to start with a clear, direct research question.

Example research question for content analysis

Is there a difference in how the US media represents younger politicians compared to older ones in terms of trustworthiness?

Next, you follow these five steps.

1. Select the content you will analyze

Based on your research question, choose the texts that you will analyze. You need to decide:

- The medium (e.g. newspapers, speeches or websites) and genre (e.g. opinion pieces, political campaign speeches, or marketing copy)

- The inclusion and exclusion criteria (e.g. newspaper articles that mention a particular event, speeches by a certain politician, or websites selling a specific type of product)

- The parameters in terms of date range, location, etc.

If only a small number of texts meet your criteria, you might analyze all of them. If there is a large volume of texts, you can select a sample.
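The selection logic in this step (apply inclusion criteria, then analyze everything or draw a sample) might be sketched as follows. The `Article` record, the date-range and keyword criteria, the 200-text cutoff, and the seed are all hypothetical; simple random sampling is only one option, and a real study might use a stratified or other sampling design.

```python
import random
from dataclasses import dataclass
from datetime import date


@dataclass
class Article:
    """Hypothetical record for one candidate text."""
    outlet: str
    published: date
    text: str


def meets_criteria(article: Article, start: date, end: date, must_mention: str) -> bool:
    """Illustrative inclusion rule: inside the date range and mentions the given term."""
    in_range = start <= article.published <= end
    return in_range and must_mention.lower() in article.text.lower()


def select_texts(articles, start, end, must_mention, max_n=200, seed=42):
    """Keep every eligible text if there are few; otherwise draw a simple random sample."""
    eligible = [a for a in articles if meets_criteria(a, start, end, must_mention)]
    if len(eligible) <= max_n:
        return eligible
    rng = random.Random(seed)  # fixed seed so the same sample can be redrawn later
    return rng.sample(eligible, max_n)
```

The substring check is deliberately crude (it would also match "senatorial"), so real inclusion criteria should be spelled out more precisely.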

2. Define the units and categories of analysis

Next, you need to determine the level at which you will analyze your chosen texts. This means defining:

- The unit(s) of meaning that will be coded. For example, are you going to record the frequency of individual words and phrases, the characteristics of people who produced or appear in the texts, the presence and positioning of images, or the treatment of themes and concepts?

- The set of categories that you will use for coding. Categories can be objective characteristics (e.g. aged 30-40, lawyer, parent) or more conceptual (e.g. trustworthy, corrupt, conservative, family-oriented).

Your units of analysis are the politicians who appear in each article and the words and phrases used to describe them. Based on your research question, you need to categorize them by age and by the concept of trustworthiness. To get more detailed data, you also code for other categories, such as each politician’s political party and marital status.

3. Develop a set of rules for coding

Coding involves organizing the units of meaning into the previously defined categories. Especially with more conceptual categories, it’s important to clearly define the rules for what will and won’t be included to ensure that all texts are coded consistently.

Coding rules are especially important if multiple researchers are involved, but even if you’re coding all of the text by yourself, recording the rules makes your method more transparent and reliable.

In considering the category “younger politician,” you decide which titles will be coded with this category (senator, governor, councilor, mayor). With “trustworthy,” you decide which specific words or phrases related to trustworthiness (e.g. honest and reliable) will be coded in this category.
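Written coding rules can be made explicit as a codebook that maps each category to the words that count as evidence for it. In this sketch, "honest" and "reliable" come from the example above, while the other indicator words and the `code_segment` helper are hypothetical.

```python
import re

# Hypothetical codebook: each category lists the words that count as evidence for it.
# "honest" and "reliable" come from the example; the other entries are illustrative.
CODEBOOK = {
    "trustworthy": {"honest", "reliable", "trustworthy", "credible"},
    "untrustworthy": {"dishonest", "unreliable", "corrupt", "deceptive"},
}


def code_segment(segment: str, codebook=CODEBOOK) -> set[str]:
    """Return every category whose indicator words appear as whole words in the segment."""
    tokens = set(re.findall(r"[a-z]+", segment.lower()))
    return {category for category, words in codebook.items() if tokens & words}


print(code_segment("The mayor is honest and reliable."))
```

Matching whole words means "dishonest" is not coded as "honest". The sketch does not handle negation ("not honest"), so a real codebook would need an explicit rule for such cases.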

4. Code the text according to the rules

You go through each text and record all relevant data in the appropriate categories. This can be done manually or with the help of computer programs such as QSR NVivo, Atlas.ti, and Diction, which can speed up the process of counting and categorizing words and phrases.

Following your coding rules, you examine each newspaper article in your sample. You record the characteristics of each politician mentioned, along with all words and phrases related to trustworthiness that are used to describe them.

5. Analyze the results and draw conclusions

Once coding is complete, the collected data is examined to find patterns and draw conclusions in response to your research question. You might use statistical analysis to find correlations or trends, discuss your interpretations of what the results mean, and make inferences about the creators, context and audience of the texts.

Let’s say the results reveal that words and phrases related to trustworthiness appeared in the same sentence as an older politician more frequently than they did in the same sentence as a younger politician. From these results, you conclude that national newspapers present older politicians as more trustworthy than younger politicians, and infer that this might have an effect on readers’ perceptions of younger people in politics.
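For the analysis step, one option is to tabulate, for each age group, how many sentences contain a trust-related word, and then check whether the difference is larger than chance with a chi-square test of independence. The sketch assumes each sentence has already been coded by hand as referring to an older or younger politician; the trust vocabulary and the counts are hypothetical, and the chi-square test is one reasonable choice rather than a method prescribed by this guide.

```python
import re

# Illustrative trust vocabulary; in a real study it would come from the coding rules.
TRUST_WORDS = {"honest", "reliable", "trustworthy"}


def cooccurrence_table(coded_sentences):
    """Build a 2x2 table from (age_group, sentence) pairs already coded by hand.

    Each row is [sentences with a trust word, sentences without one].
    """
    table = {"older": [0, 0], "younger": [0, 0]}
    for group, sentence in coded_sentences:
        tokens = set(re.findall(r"[a-z]+", sentence.lower()))
        column = 0 if tokens & TRUST_WORDS else 1
        table[group][column] += 1
    return table


def chi_square_2x2(table):
    """Pearson chi-square statistic (no continuity correction) for the 2x2 table."""
    (a, b), (c, d) = table["older"], table["younger"]
    n = a + b + c + d
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    if denominator == 0:
        raise ValueError("every row and column total must be non-zero")
    return n * (a * d - b * c) ** 2 / denominator


# Hypothetical counts, not real results.
stat = chi_square_2x2({"older": [30, 70], "younger": [15, 85]})
# 3.841 is the chi-square critical value for 1 degree of freedom at alpha = 0.05.
print(round(stat, 2), stat > 3.841)  # 6.45 True
```

If SciPy is available, `scipy.stats.chi2_contingency` can serve as a cross-check; note that it applies Yates' continuity correction to 2x2 tables by default, so its statistic will be smaller than this uncorrected one.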


Cite this Scribbr article


Luo, A. (2023, June 22). Content Analysis | Guide, Methods & Examples. Scribbr. Retrieved July 16, 2024, from https://www.scribbr.com/methodology/content-analysis/



3 - Content Analysis of Social Media

from Part 1 - Analysis of the Societal Treatment of Language

Published online by Cambridge University Press: 25 June 2022

This chapter outlines how social media data, such as posts on Facebook and Twitter, can be used to study language attitudes. This comparatively recent method in language attitudes research benefits from the immediate accessibility of large amounts of data from a wide range of people, which can be collected quickly and with minimal effort – a point in common with attitude studies using print data. At the same time, this method collects people’s spontaneous thoughts, that is, unprompted attitudinal data – a characteristic usually attributed to methods drawing on speech data. The study of language attitudes in social media data can, however, yield insights wholly different from those of writing and speech data. The chapter discusses the advantages and pitfalls of different types of content analysis, as well as the general limitations of the method. It presents an overview of software programmes for collecting social media data and of geo-tagging, and it addresses data analysis and the general usefulness of the method (e.g. its applicability around the world or its potential for studying diachronic attitudinal change). The case study in this chapter uses examples from Twitter, focusing on attitudes towards the Welsh accent in English.


- Content Analysis of Social Media

- By Mercedes Durham

- Edited by Ruth Kircher (Mercator European Research Centre on Multilingualism and Language Learning, and Fryske Akademy, Netherlands) and Lena Zipp (Universität Zürich)

- Book: Research Methods in Language Attitudes

- Online publication: 25 June 2022

- Chapter DOI: https://doi.org/10.1017/9781108867788.005


Journal of Electronic Commerce Research

Content Analysis Of Social Media: A Grounded Theory Approach

Keywords:

- social media

- Content analysis

- Lexical and statistical approaches

- Concept formation


5 Tips for Content Analysis on Social Media

Content analysis on social media is a research tool for determining the presence of certain words or phrases within a data set. In the past decade, social media content has grown exponentially, making it a valuable medium for content marketing research. Content on social media platforms is analyzed to understand consumer behavior, access consumers’ unfiltered opinions, and uncover patterns. Businesses understand the value of social media data for marketing insights, but how do they tap this source of information?

What Is Content Analysis?

Content analysis is a technique for making inferences by systematically and objectively identifying specified characteristics of messages. On social media, it is a research methodology for identifying the words, concepts, and themes in a text. Using content analysis, researchers can perform quantitative and qualitative analyses of the presence, meanings, and relationships of certain words and phrases. The goal is to collect data from recorded communication, such as text, audio, and visual files, and to identify patterns in it. On social media, content is available in the form of:

- Blogs, YouTube videos, podcasts.

- Static and dynamic images.

- Interviews, newsletters, and PDFs.

- Graphs, statistics, and survey forms.

Qualitative analysis of social media content focuses on interpreting the meaning of the words under consideration. In contrast, quantitative analysis measures word counts and how frequently words are used in the content.

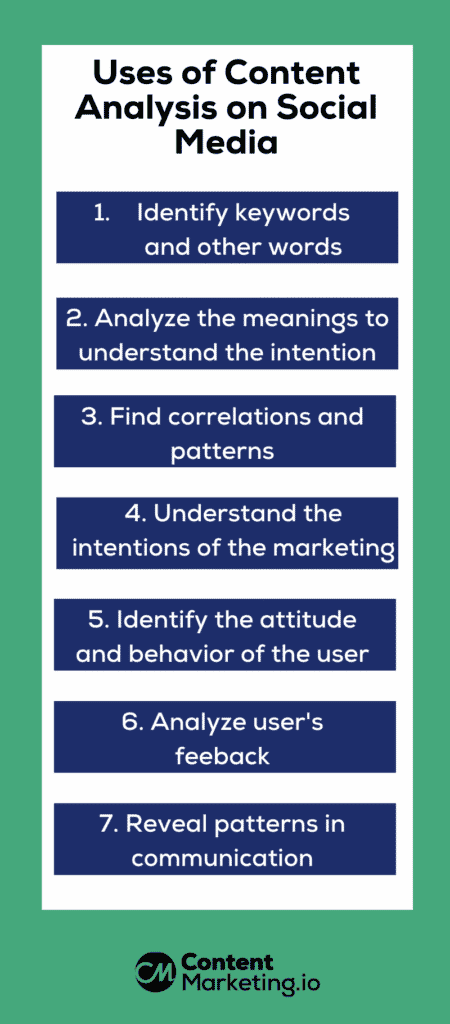

What Are the Uses of Content Analysis on Social Media?

Content analysis on social media is used to find out the purpose, message, and effects of social media content. It can be used to make inferences about the content providers and the users. It quantifies the occurrence of certain words, phrases, themes, or concepts in contemporary text. Content analysis research can be done in marketing, media studies, cognitive studies, psychology, and other social science disciplines.

- Identifies a keyword, other words related to the keyword, and phrases that appear next to it.

- Analyzes the meanings and relationships to understand the target and intention better.

- Finds correlations and patterns in how a marketing idea or strategy is communicated to the target audience.

- Understands the intentions and goals of the business, marketer, or user.

- Identifies the attitude, behavior, and psychological state of the user.

- Analyzes the feedback from the user, such as their comments, likes, and shares.

- Reveals patterns in communication content.

Types of Analysis of Social Media Content

Content analysis on social media can be broadly classified into conceptual and relational analysis. The conceptual analysis determines the existence and frequency of certain words or phrases in social media channels. The relational analysis extends the conceptual analysis by examining the relationship among these words in the channel. Each type of analysis may lead to different results, interpretations, and meanings.
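The difference between the two types can be seen in a small sketch: conceptual analysis counts how many posts mention each concept, while relational analysis counts how often pairs of concepts appear in the same post. The concept list and posts are hypothetical, and co-occurrence within a post is only one simple way to operationalize a "relationship".

```python
import re
from collections import Counter
from itertools import combinations

# Hypothetical concepts a brand might track in customer posts.
CONCEPTS = {"price", "quality", "delivery"}


def concepts_in(post, concepts=CONCEPTS):
    """Return the tracked concepts whose word appears in the post."""
    return set(re.findall(r"[a-z]+", post.lower())) & concepts


def conceptual_analysis(posts, concepts=CONCEPTS):
    """Existence and frequency: number of posts that mention each concept."""
    counts = Counter()
    for post in posts:
        counts.update(concepts_in(post, concepts))
    return counts


def relational_analysis(posts, concepts=CONCEPTS):
    """Relationships: number of posts in which each pair of concepts co-occurs."""
    pairs = Counter()
    for post in posts:
        # Sorting makes ("price", "quality") and ("quality", "price") the same key.
        pairs.update(combinations(sorted(concepts_in(post, concepts)), 2))
    return pairs


posts = [
    "Great quality but the price is high",
    "Delivery was slow",
    "Price and delivery both disappointing",
    "Quality is great",
]
print(conceptual_analysis(posts))
print(relational_analysis(posts))
```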

On social media, you can perform the following types of analysis:

- Audience analysis

- Performance analysis

- Competitor analysis

- Sentiment analysis

Audience Analysis

Knowing your target audience is crucial for building an effective, audience-oriented marketing strategy. It helps you nurture your leads and move them deeper into the content marketing funnel. Only when you understand what your customers want can you develop a suitable content marketing strategy. Using analytics tools, find out what type of information they want, in what form, and how often they would like to receive it.

Post your questions as surveys on different social media channels and prompt your target audience to answer them. Collect the responses and use them to build a picture of your audience’s personas, characteristics, interests, and behavior. You can leverage these insights to find new business opportunities.

Performance Analysis

It is good to measure your own performance and evaluate your strategy, especially because the sums involved are large: eMarketer projects that global digital ad spending will reach $571 billion in 2022 and rise to $800 billion by 2025.

It’s natural to expect a good ROI when you have invested heavily in social media content marketing. On a social media platform, the key metrics to track are:

- Whether the content you publish is making an impact, judged by how your audience interacts with it.

- Your cumulative audience and the number of interactions per 1,000 followers.

- The number of click-throughs and new followers. If you want new customers, you need new followers.

- Your new followers and the ROI they bring.

Competitor Analysis

To measure your success, compare your metrics with your competitors’. Aim to improve performance in two key areas: effectiveness and efficiency. Comparing your business’s social media performance with your competitors’ is an effective way to assess your strategy.

This comparison shows how your performance and ROI are improving. If you don’t wish to compare your performance with others, you can compare it with your own previous results. Benchmarking solutions can measure your performance and report competitors’ results by country, industry, and niche, which helps you learn about their strategy and get ahead of them.

Sentiment Analysis

Posts, reviews, and feedback are vital on social media, and failing to take remedial action on negative comments might put your business at risk. Use tools to separate satisfied from dissatisfied comments, and try to address negative sentiment at an early stage.
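A very simple way to separate satisfied from dissatisfied comments is lexicon-based scoring: count positive words, subtract negative ones, and sort comments by the sign of the result. The word lists below are hypothetical and far too small for real use; a real project would use a validated lexicon or a trained model.

```python
import re

# Hypothetical, tiny sentiment lexicon for illustration only.
POSITIVE = {"love", "great", "excellent", "happy", "fast"}
NEGATIVE = {"hate", "broken", "slow", "refund", "terrible"}


def score(comment):
    """Number of positive words minus number of negative words."""
    tokens = re.findall(r"[a-z]+", comment.lower())
    return sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)


def triage(comments):
    """Split comments into satisfied, dissatisfied, and neutral by lexicon score."""
    buckets = {"satisfied": [], "dissatisfied": [], "neutral": []}
    for comment in comments:
        s = score(comment)
        key = "satisfied" if s > 0 else "dissatisfied" if s < 0 else "neutral"
        buckets[key].append(comment)
    return buckets


print(triage(["I love it, great product", "Arrived broken, want a refund", "Ordered on Monday"]))
```

A known weakness of this approach is negation: `score("not great")` returns 1, so "not great" is treated as positive.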

Start with a Research Question

The first step is to decide what to analyze on social media. Set your objective: what decision will you make once you have gathered the information? Because social media offers so much data, narrowing your focus to a specific topic and objective makes the analysis more manageable. Example objectives include analyzing customer feedback after launching a new product, planning extensions for a product already on the market, or assessing the success of a promotional campaign.

Gather, Organize, and Analyze Content on Social Media

Social media platforms provide consumer-generated data that you can gather according to your research objectives, and there are tools to measure performance on social media and content marketing channels.

- Social media analytics tools that help you track your social presence include Sprout Social, HubSpot, and BuzzSumo.

- Video analytics tools that help improve your video content strategy include Unbox Social, Channel Meter, and Quintly.

- LinkedIn analytics tools that can help you improve your page performance include Socialinsider, Buffer, and Hootsuite.

- Instagram analytics tools that can help you become a power user include Sprout Social, Tap Influencer, and Bitly.

- Twitter analytics tools include Native Twitter Analytics, Socialbakers, and Hootsuite.

Social media data is not necessarily text; it can also be audio, video, GIFs, and various other formats. Free and commercial tools are available to organize such data systematically. When the data is presented to the analyst, make sure it is versatile and comprehensive. The output can be made available online, or the results can be presented as numbers in graphs. The data is then analyzed for ideas, concepts, behaviors, and patterns.

Automate the Process

Content analysis on social media is labor-intensive because there is an overwhelming amount of data to analyze. AI-powered content marketing automation tools can help: they automatically import data from social media pages and monitor your queries. Reactions to your posts, comments, and feedback are captured and then either measured or used to trigger the necessary action. Along with unstructured text, social media data comes with valuable metadata, such as the date of publication, your channel’s follower count, upvotes, downvotes, and retweets.

Identify Clusters for Mining Patterns

Different parts of the data hold different insights. After you have collected and analyzed the data, divide it into clusters; grouping the data this way lets themes emerge from a large dataset. Monitoring these clusters can give insight into your products, industry, customers, and competitors. Analyzing data from different perspectives helps you monitor your brand and check whether your content marketing strategy is on the right track.
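The tip does not name a clustering method, so the sketch below uses one of the simplest: single-pass "leader" clustering, where each post joins the first cluster whose leading post shares enough words with it (measured by Jaccard similarity) or else starts a new cluster. The stop-word list, the 0.3 threshold, and the posts are assumptions; methods such as k-means on TF-IDF vectors are often used for larger datasets.

```python
import re

# Hypothetical stop-word list; words this common carry little topical signal.
STOPWORDS = frozenset({"the", "a", "is", "and", "to", "my", "it", "was"})


def word_set(text, stopwords=STOPWORDS):
    """Lowercased set of content words in a post."""
    return set(re.findall(r"[a-z]+", text.lower())) - stopwords


def jaccard(a, b):
    """Share of words two sets have in common (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def leader_cluster(posts, threshold=0.3):
    """Group posts by Jaccard similarity to each cluster's first (leader) post."""
    clusters = []  # list of (leader_words, member_posts)
    for post in posts:
        words = word_set(post)
        for leader_words, members in clusters:
            if jaccard(words, leader_words) >= threshold:
                members.append(post)
                break
        else:  # no existing cluster was similar enough
            clusters.append((words, [post]))
    return [members for _, members in clusters]


posts = [
    "The battery drains fast",
    "My battery drains overnight",
    "Shipping was late",
    "Late shipping again",
    "Battery life is poor",
]
for cluster in leader_cluster(posts):
    print(cluster)
```

Because each post is compared only with cluster leaders, the result depends on the order of the posts: here "Battery life is poor" shares too few words with the first battery post and ends up in its own cluster.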

Eliminate Noise

Social media data collected for content analysis often includes content that is irrelevant to the context and provides no insight for the analysis. One way to deal with this is to aggregate data from selected media platforms and filter out the relevant content; another is to use a machine learning algorithm to remove the noise. This can require a statistician or data scientist to develop a customized model that you apply to the aggregated data to clean it up. The ML algorithm must identify patterns in context and classify the content as noise or clean data based on the model, not on keywords, URLs, or rules. It must also be retrained automatically to learn new patterns.
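In its most basic form, such a model-based filter could be a multinomial Naive Bayes classifier trained on posts labelled "noise" or "clean". This is a swapped-in illustration, not a model the tip specifies; the training examples and labels are hypothetical, and a real filter would need far more labelled data. Calling `fit` again on an updated labelled set is how this sketch would learn new patterns.

```python
import math
import re
from collections import Counter

# Hypothetical labelled examples; a real filter needs far more (and more varied) data.
TRAIN_TEXTS = [
    "win a free iphone click here",
    "follow for follow free followers",
    "click here for free crypto giveaway",
    "the new phone battery lasts all day",
    "customer service fixed my order quickly",
    "the camera quality on this phone is great",
]
TRAIN_LABELS = ["noise", "noise", "noise", "clean", "clean", "clean"]


def tokenize(text):
    """Lowercased alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


class NoiseFilter:
    """Minimal multinomial Naive Bayes classifier that labels posts 'noise' or 'clean'."""

    def fit(self, texts, labels):
        self.labels = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {label: Counter() for label in self.labels}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        self.totals = {label: sum(c.values()) for label, c in self.word_counts.items()}
        return self

    def predict(self, text):
        tokens = tokenize(text)
        n_docs = sum(self.doc_counts.values())
        vocab_size = len(self.vocab)
        best_label, best_score = None, -math.inf
        for label in self.labels:
            score = math.log(self.doc_counts[label] / n_docs)  # log prior
            denom = self.totals[label] + vocab_size
            for tok in tokens:
                # Add-one (Laplace) smoothing keeps unseen words from zeroing out a class.
                score += math.log((self.word_counts[label][tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


model = NoiseFilter().fit(TRAIN_TEXTS, TRAIN_LABELS)
print(model.predict("click here for free followers"))
```

For production use, libraries such as scikit-learn provide a tested implementation (`sklearn.naive_bayes.MultinomialNB`).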

1. What are the types of content analysis on social media?

- Conceptual analysis: establishes the existence and frequency of concepts represented by words or phrases in a text.

- Relational analysis: examines the relationships among concepts in the text.

2. Why is Content Analysis important?

Despite being time-consuming, content analysis is used by researchers to find out about the messages, purposes, and effects of communication content. It can reveal why the content owner posted the content and uncover patterns in audience behavior and preferences.

It is used in various fields, from psychology to media analysis and political science. Content analysis is closely related to socio-psycholinguistics and can be used in developing NLP algorithms.

3. What are the key elements in content analysis on social media data?

Both verbal and non-verbal methods can be used to collect social media data for analysis. Sources include surveys, interviews, podcasts, and social media engagement such as likes, shares, and comments. The major elements to consider are words, paragraphs, concepts, and semantics. It is necessary to capture enough relevant data to provide an adequate sample for the analysis. The sample is the content itself, collected over a period of time.

Closing Thoughts

Several excellent programs and services, both free and commercial, have been developed to analyze social media data. Yet most of these tools focus on providing summary statistics. Web analytics such as word counts, reach, word clouds, volume, and sentiment analysis can provide valuable, up-to-the-minute snapshots of web content. Still, no algorithm is an adequate replacement for the in-depth analysis of consumer-generated feedback that a skilled analyst with a deep understanding of a brand can conduct.


Daniel Martin


Content Analysis – Methods, Types and Examples


Content Analysis

Definition:

Content analysis is a research method used to analyze and interpret the characteristics of various forms of communication, such as text, images, or audio. It involves systematically analyzing the content of these materials, identifying patterns, themes, and other relevant features, and drawing inferences or conclusions based on the findings.

Content analysis can be used to study a wide range of topics, including media coverage of social issues, political speeches, advertising messages, and online discussions, among others. It is often used in qualitative research and can be combined with other methods to provide a more comprehensive understanding of a particular phenomenon.

Types of Content Analysis

There are generally two types of content analysis:

Quantitative Content Analysis

This type of content analysis involves the systematic and objective counting and categorization of the content of a particular form of communication, such as text or video. The data obtained is then subjected to statistical analysis to identify patterns, trends, and relationships between different variables. Quantitative content analysis is often used to study media content, advertising, and political speeches.

Qualitative Content Analysis

This type of content analysis is concerned with the interpretation and understanding of the meaning and context of the content. It involves the systematic analysis of the content to identify themes, patterns, and other relevant features, and to interpret the underlying meanings and implications of these features. Qualitative content analysis is often used to study interviews, focus groups, and other forms of qualitative data, where the researcher is interested in understanding the subjective experiences and perceptions of the participants.

Methods of Content Analysis

There are several methods of content analysis, including:

Conceptual Analysis

This method involves analyzing the meanings of key concepts used in the content being analyzed. The researcher identifies key concepts and analyzes how they are used, defining them and categorizing them into broader themes.

Content Analysis by Frequency

This method involves counting and categorizing the frequency of specific words, phrases, or themes that appear in the content being analyzed. The researcher identifies relevant keywords or phrases and systematically counts their frequency.

Comparative Analysis

This method involves comparing the content of two or more sources to identify similarities, differences, and patterns. The researcher selects relevant sources, identifies key themes or concepts, and compares how they are represented in each source.

Discourse Analysis

This method involves analyzing the structure and language of the content being analyzed to identify how the content constructs and represents social reality. The researcher analyzes the language used and the underlying assumptions, beliefs, and values reflected in the content.

Narrative Analysis

This method involves analyzing the content as a narrative, identifying the plot, characters, and themes, and analyzing how they relate to the broader social context. The researcher identifies the underlying messages conveyed by the narrative and their implications for the broader social context.

Content Analysis Conducting Guide

Here is a basic guide to conducting a content analysis:

- Define your research question or objective: Before starting your content analysis, you need to define your research question or objective clearly. This will help you to identify the content you need to analyze and the type of analysis you need to conduct.

- Select your sample: Select a representative sample of the content you want to analyze. This may involve selecting a random sample, a purposive sample, or a convenience sample, depending on the research question and the availability of the content.

- Develop a coding scheme: Develop a coding scheme or a set of categories to use for coding the content. The coding scheme should be based on your research question or objective and should be reliable, valid, and comprehensive.

- Train coders: Train coders to use the coding scheme and ensure that they have a clear understanding of the coding categories and procedures. You may also need to establish inter-coder reliability to ensure that different coders are coding the content consistently.

- Code the content: Code the content using the coding scheme. This may involve manually coding the content, using software, or a combination of both.

- Analyze the data: Once the content is coded, analyze the data using appropriate statistical or qualitative methods, depending on the research question and the type of data.

- Interpret the results: Interpret the results of the analysis in the context of your research question or objective. Draw conclusions based on the findings and relate them to the broader literature on the topic.

- Report your findings: Report your findings in a clear and concise manner, including the research question, methodology, results, and conclusions. Provide details about the coding scheme, inter-coder reliability, and any limitations of the study.
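The inter-coder reliability check mentioned in the guide above is often reported with Cohen's kappa, which compares the coders' observed agreement with the agreement expected by chance. The sketch assumes two coders who each assign exactly one category per unit; the category labels in the usage example are hypothetical. Other statistics, such as Krippendorff's alpha, handle more than two coders or missing codes.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each assigned one category per unit."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same, non-empty set of units")
    n = len(coder_a)
    # Observed agreement: share of units given the same category by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of each coder's marginal proportions, summed over categories.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / n**2
    if expected == 1:
        return 1.0  # kappa is undefined here; both coders used one identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes for ten units.
coder_1 = ["trust", "trust", "neutral", "distrust", "trust", "neutral", "neutral", "trust", "distrust", "trust"]
coder_2 = ["trust", "neutral", "neutral", "distrust", "trust", "neutral", "trust", "trust", "distrust", "trust"]
print(round(cohens_kappa(coder_1, coder_2), 3))
```

Here the coders agree on 8 of 10 units, but because chance alone would produce 38% agreement given their category frequencies, kappa is noticeably lower than 0.8. scikit-learn's `sklearn.metrics.cohen_kappa_score` can be used to cross-check the result.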

Applications of Content Analysis

Content analysis has numerous applications across different fields, including:

- Media Research: Content analysis is commonly used in media research to examine how different groups, defined for example by race, gender, or sexual orientation, are represented in media content. It can also be used to study media framing, media bias, and media effects.

- Political Communication: Content analysis can be used to study political communication, including political speeches, debates, and news coverage of political events. It can also be used to study political advertising and the impact of political communication on public opinion and voting behavior.

- Marketing Research: Content analysis can be used to study advertising messages, consumer reviews, and social media posts related to products or services. It can provide insights into consumer preferences, attitudes, and behaviors.

- Health Communication: Content analysis can be used to study health communication, including the representation of health issues in the media, the effectiveness of health campaigns, and the impact of health messages on behavior.

- Education Research: Content analysis can be used to study educational materials, including textbooks, curricula, and instructional materials. It can provide insights into the representation of different topics, perspectives, and values.

- Social Science Research: Content analysis can be used in a wide range of social science research, including studies of social media, online communities, and other forms of digital communication. It can also be used to study interviews, focus groups, and other qualitative data sources.

Examples of Content Analysis

Here are some examples of content analysis:

- Media Representation of Race and Gender: A content analysis could be conducted to examine the representation of different races and genders in popular media, such as movies, TV shows, and news coverage.

- Political Campaign Ads: A content analysis could be conducted to study political campaign ads and the themes and messages used by candidates.

- Social Media Posts: A content analysis could be conducted to study social media posts related to a particular topic, such as the COVID-19 pandemic, to examine the attitudes and beliefs of social media users.

- Instructional Materials: A content analysis could be conducted to study the representation of different topics and perspectives in educational materials, such as textbooks and curricula.

- Product Reviews: A content analysis could be conducted to study product reviews on e-commerce websites, such as Amazon, to identify common themes and issues mentioned by consumers.

- News Coverage of Health Issues: A content analysis could be conducted to study news coverage of health issues, such as vaccine hesitancy, to identify common themes and perspectives.

- Online Communities: A content analysis could be conducted to study online communities, such as discussion forums or social media groups, to understand the language, attitudes, and beliefs of the community members.

Purpose of Content Analysis

The purpose of content analysis is to systematically analyze and interpret the content of various forms of communication, such as written, oral, or visual, to identify patterns, themes, and meanings. Content analysis is used to study communication in a wide range of fields, including media studies, political science, psychology, education, sociology, and marketing research. The primary goals of content analysis include:

- Describing and summarizing communication: Content analysis can be used to describe and summarize the content of communication, such as the themes, topics, and messages conveyed in media content, political speeches, or social media posts.

- Identifying patterns and trends: Content analysis can be used to identify patterns and trends in communication, such as changes over time, differences between groups, or common themes or motifs.

- Exploring meanings and interpretations: Content analysis can be used to explore the meanings and interpretations of communication, such as the underlying values, beliefs, and assumptions that shape the content.

- Testing hypotheses and theories: Content analysis can be used to test hypotheses and theories about communication, such as the effects of media on attitudes and behaviors or the framing of political issues in the media.
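The following worked example illustrates testing for differences between groups. It computes a Pearson chi-square statistic, without Yates' continuity correction, for a 2×2 table of hypothetical counts. The table compares mentions of one coded term against all other words in two candidates' speeches. The value 3.841 is the critical chi-square value for one degree of freedom at the 0.05 level. In practice, a statistics package would also report an exact p-value and check that expected cell counts are not too small.

```python
def chi_square_2x2(table):
    """Pearson chi-square statistic for a 2x2 table [[a, b], [c, d]].

    Rows are groups, columns are categories. Assumes no row or column total is zero.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence: row total * column total / grand total.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat


CRITICAL_VALUE_DF1_P05 = 3.841  # chi-square critical value, 1 degree of freedom, alpha = 0.05

# Hypothetical counts: [mentions of "unemployment", all other words] per candidate.
counts = [[40, 960],   # candidate A
          [15, 985]]   # candidate B
stat = chi_square_2x2(counts)
print(f"chi-square = {stat:.2f}, significant at 0.05: {stat > CRITICAL_VALUE_DF1_P05}")
```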

When to use Content Analysis

Content analysis is a useful method when you want to analyze and interpret the content of various forms of communication, such as written, oral, or visual. Here are some specific situations where content analysis might be appropriate:

- When you want to study media content: Content analysis is commonly used in media studies to analyze the content of TV shows, movies, news coverage, and other forms of media.

- When you want to study political communication: Content analysis can be used to study political speeches, debates, news coverage, and advertising.

- When you want to study consumer attitudes and behaviors: Content analysis can be used to analyze product reviews, social media posts, and other forms of consumer feedback.

- When you want to study educational materials: Content analysis can be used to analyze textbooks, instructional materials, and curricula.

- When you want to study online communities: Content analysis can be used to analyze discussion forums, social media groups, and other forms of online communication.

- When you want to test hypotheses and theories: Content analysis can be used to test hypotheses and theories about communication, such as the framing of political issues in the media or the effects of media on attitudes and behaviors.

Characteristics of Content Analysis

Content analysis has several key characteristics that make it a useful research method. These include:

- Objectivity: Content analysis aims to be an objective method of research. The researcher tries not to introduce their own biases or interpretations into the analysis. This is pursued by using standardized and systematic coding procedures.

- Systematic: Content analysis involves the use of a systematic approach to analyze and interpret the content of communication. This involves defining the research question, selecting the sample of content to analyze, developing a coding scheme, and analyzing the data.

- Quantitative: Content analysis often involves counting and measuring the occurrence of specific themes or topics in the content, which gives it a quantitative character. This allows for statistical analysis and generalization of findings.

- Contextual: Content analysis considers the context in which the communication takes place, such as the time period, the audience, and the purpose of the communication.

- Iterative: Content analysis is an iterative process. The researcher may refine the coding scheme and analysis while analyzing the data, to ensure that the findings are valid and reliable.

- Reliability and validity: Content analysis aims to be a reliable and valid method of research, meaning that the findings are consistent and accurate. This is achieved through inter-coder reliability tests and other measures to ensure the quality of the data and analysis.
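The counting side of content analysis can be sketched with a dictionary-based coding scheme. In such a scheme, each category is signalled by a set of keywords. The categories, keywords and speech snippets below are hypothetical. Real coding schemes are usually richer and tested for reliability, and they rely on human judgment for ambiguous cases.

```python
import re
from collections import Counter

# Hypothetical coding scheme: category -> keywords that signal it.
CODING_SCHEME = {
    "employment": {"unemployment", "jobs", "work"},
    "economy": {"economy", "growth", "inflation"},
}


def code_text(text, scheme=CODING_SCHEME):
    """Count keyword hits per category in one text (case-insensitive, whole words)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter()
    for token in tokens:
        for category, keywords in scheme.items():
            if token in keywords:
                counts[category] += 1
    return counts


def code_corpus(texts, scheme=CODING_SCHEME):
    """Sum the category counts over a collection of texts."""
    total = Counter()
    for text in texts:
        total.update(code_text(text, scheme))
    return total


# Hypothetical speech excerpts.
speeches = [
    "Jobs, jobs, jobs: we will fight unemployment.",
    "A growing economy creates work.",
]
print(code_corpus(speeches))
```

Exact keyword matching misses inflected forms ("growing" is not counted as "growth"). This is one reason automated counts are usually checked against manual coding.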

Advantages of Content Analysis

There are several advantages to using content analysis as a research method, including:

- Objective and systematic: Content analysis aims to be an objective and systematic method of research, which reduces the likelihood of bias and subjectivity in the analysis.

- Large sample size: Content analysis allows for the analysis of a large sample of data. This increases the statistical power of the analysis and, if the sample is representative, the generalizability of the findings.

- Non-intrusive: Content analysis does not require the researcher to interact with the participants or disrupt their natural behavior, making it a non-intrusive research method.

- Accessible data: Content analysis can be used to analyze a wide range of data types, including written, oral, and visual communication, making it accessible to researchers across different fields.

- Versatile: Content analysis can be used to study communication in a wide range of contexts and fields, including media studies, political science, psychology, education, sociology, and marketing research.

- Cost-effective: Content analysis is a cost-effective research method, as it does not require expensive equipment or participant incentives.

Limitations of Content Analysis

While content analysis has many advantages, there are also some limitations to consider, including:

- Limited contextual information: Content analysis is focused on the content of communication, which means that contextual information may be limited. This can make it difficult to fully understand the meaning behind the communication.

- Limited ability to capture nonverbal communication: Content analysis is limited to analyzing the content of communication that can be captured in written or recorded form. It may miss nonverbal communication, such as body language or tone of voice.

- Subjectivity in coding: While content analysis aims to be objective, there may be subjectivity in the coding process. Different coders may interpret the content differently, which can lead to inconsistent results.

- Limited ability to establish causality: Content analysis is a correlational research method, meaning that it cannot establish causality between variables. It can only identify associations between variables.

- Limited generalizability: Content analysis is limited to the data that is analyzed, which means that the findings may not be generalizable to other contexts or populations.

- Time-consuming: Content analysis can be a time-consuming research method, especially when analyzing a large sample of data. This can be a disadvantage for researchers who need to complete their research in a short amount of time.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Social media analytics: a survey of techniques, tools and platforms

- Open access

- Published: 26 July 2014

- Volume 30, pages 89–116 (2015)


Bogdan Batrinca and Philip C. Treleaven


This paper is written for (social science) researchers seeking to analyze the wealth of social media now available. It presents a comprehensive review of software tools for social networking media, wikis, really simple syndication (RSS) feeds, blogs, newsgroups, chat and news feeds. For completeness, it also includes introductions to social media scraping, storage, data cleaning and sentiment analysis. Although principally a review, the paper also provides a methodology and a critique of social media tools. Analyzing social media, in particular Twitter feeds for sentiment analysis, has become a major research and business activity. This is due to the availability of web-based application programming interfaces (APIs) provided by Twitter, Facebook and news services. This has led to an ‘explosion’ of data services, software tools for scraping and analysis, and social media analytics platforms. It is also a research area undergoing rapid change and evolution. The drivers are commercial pressures and the potential for using social media data for computational (social science) research. Using a simple taxonomy, this paper reviews leading software tools and how to use them to scrape, cleanse and analyze the spectrum of social media. In addition, it discusses the requirement for an experimental computational environment for social media research. As an illustration, it presents the system architecture of a social media (analytics) platform built by University College London. The principal contribution of this paper is to provide an overview (including code fragments) for scientists seeking to utilize social media scraping and analytics in their research or business. The data retrieval techniques presented in this paper were valid at the time of writing (June 2014). However, they are subject to change, since social media data scraping APIs are rapidly changing.


1 Introduction

Social media is defined as web-based and mobile-based Internet applications that allow the creation, access and exchange of user-generated content that is ubiquitously accessible (Kaplan and Haenlein 2010). Besides social networking media (e.g., Twitter and Facebook), we will also use the term ‘social media’ for convenience. It encompasses really simple syndication (RSS) feeds, blogs, wikis and news, all typically yielding unstructured text and accessible through the web. Social media is especially important for research in computational social science. This field investigates questions (Lazer et al. 2009) using quantitative techniques (e.g., computational statistics, machine learning and complexity). It also uses so-called big data for data mining and simulation modeling (Cioffi-Revilla 2010).

This has led to numerous data services, tools and analytics platforms. However, this easy availability of social media data for academic research may change significantly due to commercial pressures. In addition, as discussed in Sect. 2, the tools available to researchers are far from ideal. They either give superficial access to the raw data or (for non-superficial access) require researchers to program analytics in a language such as Java.

1.1 Terminology

We start with definitions of some of the key techniques related to analyzing unstructured textual data:

Natural language processing (NLP) —a field of computer science, artificial intelligence and linguistics concerned with the interactions between computers and human (natural) languages. Specifically, it is the process of a computer extracting meaningful information from natural language input and/or producing natural language output.

News analytics —the measurement of the various qualitative and quantitative attributes of textual (unstructured data) news stories. Some of these attributes are: sentiment, relevance and novelty.

Opinion mining —opinion mining (sentiment mining, opinion/sentiment extraction) is the area of research that attempts to build automatic systems that determine human opinion from text written in natural language.

Scraping —collecting online data from social media and other Web sites in the form of unstructured text; also known as site scraping, web harvesting and web data extraction.

Sentiment analysis —sentiment analysis refers to the application of natural language processing, computational linguistics and text analytics to identify and extract subjective information in source materials.

Text analytics —involves information retrieval (IR), lexical analysis to study word frequency distributions, pattern recognition, tagging/annotation, information extraction, data mining techniques including link and association analysis, visualization and predictive analytics.
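Sentiment analysis ranges from machine-learning classifiers to simple lexicon lookups. The sketch below is a deliberately minimal illustration of the lexicon idea, not one of the tools reviewed in this paper. It scores text against a tiny hand-made word list. The lexicon weights, the negation rule and the function names are all hypothetical.

```python
import re

# Hypothetical mini-lexicon: word -> polarity weight.
LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "hate": -2}
NEGATORS = {"not", "no", "never"}


def sentiment_score(text):
    """Sum lexicon weights; a negator flips the sign of the next word found in the lexicon."""
    score = 0
    negate = False
    for token in re.findall(r"[a-z']+", text.lower()):
        if token in NEGATORS:
            negate = True
            continue
        weight = LEXICON.get(token, 0)
        if weight:
            score += -weight if negate else weight
            negate = False
    return score


def label(score):
    """Map a numeric score to a coarse polarity label."""
    return "positive" if score > 0 else "negative" if score < 0 else "neutral"


for post in ["I love this phone", "The service was not good", "Great camera, terrible battery"]:
    s = sentiment_score(post)
    print(post, "->", s, label(s))
```

Even this toy version shows why slang, spelling errors and negation make opinion mining hard. Any word missing from the lexicon simply contributes nothing.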

1.2 Research challenges

Social media scraping and analytics provide a rich source of academic research challenges for social scientists, computer scientists and funding bodies. Challenges include:

Scraping —although social media data is accessible through APIs, the data has commercial value. As a result, most of the major sources, such as Facebook and Google, are making it increasingly difficult for academics to obtain comprehensive access to their ‘raw’ data. Very few social data sources provide affordable data offerings to academia and researchers. News services such as Thomson Reuters and Bloomberg typically charge a premium for access to their data. In contrast, Twitter has recently announced the Twitter Data Grants program. Through this program, researchers can apply for access to Twitter’s public tweets and historical data in order to gain insights from its massive data set (Twitter handles more than 500 million tweets a day).

Data cleansing —cleaning unstructured textual data (e.g., normalizing text), especially high-frequency streamed real-time data, still presents numerous problems and research challenges.

Holistic data sources —researchers are increasingly bringing together and combining novel data sources: social media data, real-time market & customer data and geospatial data for analysis.

Data protection —once you have created a ‘big data’ resource, the data needs to be secured and ownership and IP issues resolved (for instance, storing scraped data is against most publishers’ terms of service). Users must also be provided with different levels of access; otherwise, they may attempt to ‘suck’ all the valuable data from the database.

Data analytics —sophisticated analysis of social media data for opinion mining (e.g., sentiment analysis) still raises a myriad of challenges due to foreign languages, foreign words, slang, spelling errors and the natural evolution of language.

Analytics dashboards —many social media platforms require users to write code against APIs to access feeds, or to program analytics models in a programming language such as Java. While reasonable for computer scientists, these skills are typically beyond most (social science) researchers. Non-programming interfaces are required for giving what might be referred to as ‘deep’ access to ‘raw’ data. Examples include configuring APIs, merging social media feeds, combining holistic sources and developing analytical models.

Data visualization —visual representation of data, whereby information that has been abstracted in some schematic form is presented graphically. The goal is to communicate information clearly and effectively. Given the magnitude of the data involved, visualization is becoming increasingly important.
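The following sketch illustrates the data cleansing challenge by normalizing a tweet-like string. It applies Unicode normalization and removes URLs and @-mentions. It keeps hashtag words, lower-cases the text, shortens elongated words and collapses whitespace. These steps and regular expressions are simplifying assumptions. Which ones are appropriate depends on the analysis; mentions, for example, can be signal rather than noise.

```python
import re
import unicodedata

URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
HASHTAG_RE = re.compile(r"#(\w+)")
REPEAT_RE = re.compile(r"(.)\1{2,}")  # any character repeated three or more times


def normalize_post(text):
    """Apply a few common (assumed) normalization steps to a short social media post."""
    text = unicodedata.normalize("NFKC", text)  # unify Unicode variants such as full-width forms
    text = URL_RE.sub(" ", text)                # drop links
    text = MENTION_RE.sub(" ", text)            # drop @user mentions
    text = HASHTAG_RE.sub(r"\1", text)          # keep the hashtag word, drop the '#'
    text = text.lower()
    text = REPEAT_RE.sub(r"\1\1", text)         # shorten elongations: "sooooo" -> "soo"
    return " ".join(text.split())               # collapse runs of whitespace


print(normalize_post("Sooooo happy with @UCLnews today!!! #UCL https://t.co/abc123"))
```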

1.3 Social media research and applications

Social media data is clearly the largest, richest and most dynamic evidence base of human behavior, bringing new opportunities to understand individuals, groups and society. Innovative scientists and industry professionals are increasingly finding novel ways of automatically collecting, combining and analyzing this wealth of data. Naturally, doing justice to these pioneering social media applications in a few paragraphs is challenging. Three illustrative areas are: business, bioscience and social science.

The early business adopters of social media analysis were typically companies in retail and finance. Retail companies use social media to support brand awareness, product/customer service improvement, advertising/marketing strategies, network structure analysis, news propagation and even fraud detection. In finance, social media is used for measuring market sentiment, and news data is used for trading. As an illustration, Bollen et al. (2011) measured the sentiment of a random sample of Twitter data. They found that Dow Jones Industrial Average (DJIA) price movements are correlated with Twitter sentiment 2–3 days earlier, with 87.6 percent accuracy in predicting the direction of daily changes. Wolfram (2010) used Twitter data to train a Support Vector Regression (SVR) model to predict prices of individual NASDAQ stocks. This work found a ‘significant advantage’ for forecasting prices 15 min in the future.

In the biosciences, social media is being used to collect data on large cohorts for behavioral change initiatives and impact monitoring, such as tackling smoking and obesity or monitoring diseases. An example is the work of Penn State University biologists (Salathé et al. 2012). They have developed innovative systems and techniques to track the spread of infectious diseases with the help of news Web sites, blogs and social media.

Computational social science applications include: monitoring public responses to announcements, speeches and events, especially political comments and initiatives; insights into community behavior; social media polling of (hard to contact) groups; and early detection of emerging events, as with Twitter. For example, Lerman et al. (2008) use computational linguistics to automatically predict the impact of news on the public perception of political candidates. Yessenov and Misailovic (2009) use movie review comments to study how various approaches to extracting text features affect the accuracy of four machine learning methods: Naive Bayes, Decision Trees, Maximum Entropy and K-Means clustering. Lastly, Karabulut (2013) found that Facebook’s Gross National Happiness (GNH) exhibits peaks and troughs in line with major public events in the USA.

1.4 Social media overview

For this paper, we group social media tools into:

Social media data —social media data types (e.g., social network media, wikis, blogs, RSS feeds and news, etc.) and formats (e.g., XML and JSON). This includes data sets and increasingly important real-time data feeds, such as financial data, customer transaction data, telecoms and spatial data.

Social media programmatic access —data services and tools for sourcing and scraping (textual) data from social networking media, wikis, RSS feeds, news, etc. These can be usefully subdivided into:

Data sources, services and tools —where data is accessed by tools which protect the raw data or provide simple analytics. Examples include: Google Trends, SocialMention, SocialPointer and SocialSeek, which provide a stream of information that aggregates various social media feeds.

Data feeds via APIs —where data sets and feeds are accessible via programmable HTTP-based APIs and return tagged data using XML or JSON, etc. Examples include Wikipedia, Twitter and Facebook.

Text cleaning and storage tools —tools for cleaning and storing textual data. Google Refine and DataWrangler are examples of data cleaning tools.

Text analysis tools —individual tools or libraries of tools for analyzing social media data once it has been scraped and cleaned. These are mainly natural language processing, analysis and classification tools, which are explained below.

Transformation tools —simple tools that can transform textual input data into tables, maps, charts (line, pie, scatter, bar, etc.), timeline or even motion (animation over timeline), such as Google Fusion Tables, Zoho Reports, Tableau Public or IBM’s Many Eyes.

Analysis tools —more advanced analytics tools for analyzing social data, identifying connections and building networks, such as Gephi (open source) or the Excel plug-in NodeXL.

Social media platforms —environments that provide comprehensive social media data and libraries of tools for analytics. Examples include: Thomson Reuters Machine Readable News, Radian 6 and Lexalytics.

Social network media platforms —platforms that provide data mining and analytics on Twitter, Facebook and a wide range of other social network media sources.

News platforms —platforms such as Thomson Reuters providing commercial news archives/feeds and associated analytics.

2 Social media methodology and critique

The two major impediments to using social media for academic research are firstly access to comprehensive data sets and secondly tools that allow ‘deep’ data analysis without the need to be able to program in a language such as Java. The majority of social media resources are commercial and companies are naturally trying to monetize their data. As discussed, it is important that researchers have access to open-source ‘big’ (social media) data sets and facilities for experimentation. Otherwise, social media research could become the exclusive domain of major companies, government agencies and a privileged set of academic researchers presiding over private data from which they produce papers that cannot be critiqued or replicated. Recently, there has been a modest response, as Twitter and Gnip are piloting a new program for data access, starting with 5 all-access data grants to select applicants.

2.1 Methodology

Research requirements can be grouped into: data, analytics and facilities.

2.1.1 Data

Researchers need online access to historic and real-time social media data, especially the principal sources, to conduct world-leading research:

Social network media —access to comprehensive historic data sets and also real-time access to sources, possibly with a (15 min) time delay, as with Thomson Reuters and Bloomberg financial data.

News data —access to historic data and real-time news data sets, possibly through the concept of ‘educational data licenses’ (cf. software license).

Public data —access to important public data, scraped and archived, that is available through RSS feeds, blogs or open government databases.

Programmable interfaces —researchers also need access to simple application programming interfaces (APIs) to scrape and store other available data sources that may not be automatically collected.

2.1.2 Analytics

Currently, social media data is typically either available via simple general routines or requires the researcher to program their analytics in a language such as MATLAB, Java or Python. As discussed above, researchers require:

Analytics dashboards —non-programming interfaces are required for giving what might be termed ‘deep’ access to ‘raw’ data.

Holistic data analysis —tools are required for combining (and conducting analytics across) multiple social media and other data sets.

Data visualization —researchers also require visualization tools whereby information that has been abstracted can be visualized in some schematic form with the goal of communicating information clearly and effectively through graphical means.

2.1.3 Facilities

Lastly, the sheer volume of social media data being generated argues for national and international facilities to be established to support social media research (cf. Wharton Research Data Services https://wrds-web.wharton.upenn.edu ):

Data storage —the volume of social media data, current and projected, is beyond most individual universities and hence needs to be addressed at a national science foundation level. Storage is required both for principal data sources (e.g., Twitter) and for sources collected by individual projects and archived for future use by other researchers.

Computational facility —remotely accessible computational facilities are also required for: a) protecting access to the stored data; b) hosting the analytics and visualization tools; and c) providing computational resources such as grids and GPUs required for processing the data at the facility rather than transmitting it across a network.

2.2 Critique

Much needs to be done to support social media research. As discussed, the majority of current social media resources are commercial and expensive, and it is difficult for academics to obtain full access to them.

2.2.1 Data

In general, access to important sources of social media data is frequently restricted and full commercial access is expensive.

Siloed data —most data sources (e.g., Twitter) hold inherently isolated information, making it difficult to combine with other data sources.

Holistic data —in contrast, researchers are increasingly interested in accessing, storing and combining novel data sources: social media data, real-time financial market & customer data and geospatial data for analysis. This is currently extremely difficult to do even for Computer Science departments.

2.2.2 Analytics

Analytical tools provided by vendors are often tied to a single data set and may be limited in analytical capability. They also carry data charges that make them expensive to use.

2.2.3 Facilities

There is an increasing number of powerful commercial platforms, such as those supplied by SAS and Thomson Reuters, but their charges are largely prohibitive for academic research. Either comparable facilities need to be provided by national science foundations or vendors need to be persuaded to introduce the concept of an ‘educational license.’

3 Social media data

Clearly, there is a large and increasing number of (commercial) services providing access to social networking media (e.g., Twitter, Facebook and Wikipedia) and news services (e.g., Thomson Reuters Machine Readable News). Equivalent major academic services are scarce. We start by discussing types of data and formats produced by these services.

3.1 Types of data

Although we focus on social media, as discussed, researchers are continually finding new and innovative sources of data to bring together and analyze. So when considering textual data analysis, we should consider multiple sources (e.g., social networking media, RSS feeds, blogs and news) supplemented by numeric (financial) data, telecoms data, geospatial data and potentially speech and video data. Using multiple data sources is certainly the future of analytics.

Broadly, data subdivides into:

Historic data sets —previously accumulated and stored social/news, financial and economic data.

Real-time feeds —live data feeds from streamed social media, news services, financial exchanges, telecoms services, GPS devices and speech.

Raw data —unprocessed computer data straight from source that may contain errors or may be unanalyzed.

Cleaned data —correction or removal of erroneous (dirty) data caused by disparities, keying mistakes, missing bits, outliers, etc.

Value-added data —data that has been cleaned, analyzed, tagged and augmented with knowledge.

3.2 Text data formats

The four most common formats used to markup text are: HTML, XML, JSON and CSV.

HTML —HyperText Markup Language (HTML) is the well-known markup language for web pages and other information that can be viewed in a web browser. HTML consists of HTML elements, which include tags enclosed in angle brackets (e.g., <div>), within the content of the web page.

XML —Extensible Markup Language (XML) is a markup language for structuring textual data, using <tag>…</tag> to define elements.

JSON —JavaScript Object Notation (JSON) is a text-based open standard designed for human-readable data interchange and is derived from JavaScript.

CSV —a comma-separated values (CSV) file contains the values in a table as a series of ASCII text lines organized such that each column value is separated by a comma from the next column’s value and each row starts a new line.

For completeness, HTML and XML are so-called markup languages (markup and content) that define a set of simple syntactic rules for encoding documents in a format both human readable and machine readable. A markup comprises start-tags (e.g., <tag>), content text and end-tags (e.g., </tag>).
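The sketch below makes the start-tag, content and end-tag structure concrete. It parses a small, hypothetical XML fragment with Python's standard-library `xml.etree.ElementTree` and extracts the content of selected elements. The element names are invented for illustration. Real HTML pages are often not well-formed XML and are usually handled with an HTML parser instead (for example, the standard-library `html.parser`).

```python
import xml.etree.ElementTree as ET

# Hypothetical XML fragment: start-tags, content text and end-tags.
DOC = """
<feed>
  <item><author>uclnews</author><text>First post</text></item>
  <item><author>uclnews</author><text>Second post</text></item>
</feed>
"""


def item_texts(xml_string):
    """Return the content of the <text> child of every <item> element."""
    root = ET.fromstring(xml_string.strip())
    return [item.findtext("text") for item in root.iter("item")]


print(item_texts(DOC))
```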

Many feeds use JavaScript Object Notation (JSON), the lightweight data-interchange format, based on a subset of the JavaScript Programming Language. JSON is a language-independent text format that uses conventions that are familiar to programmers of the C-family of languages, including C, C++, C#, Java, JavaScript, Perl, Python, and many others. JSON’s basic types are: Number, String, Boolean, Array (an ordered sequence of values, comma-separated and enclosed in square brackets) and Object (an unordered collection of key:value pairs). The JSON format is illustrated in Fig. 1 for a query on the Twitter API on the string ‘UCL,’ which returns two ‘text’ results from the Twitter user ‘uclnews.’

Fig. 1 JSON example
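When parsed with Python's standard-library `json` module, the JSON basic types map directly onto Python types. The document below is a hypothetical, simplified stand-in shaped loosely like a search response. It is not the actual Twitter API output shown in Fig. 1, and its field names are assumptions.

```python
import json

# Hypothetical search-style response (not a real API payload).
RAW = """
{
  "query": "UCL",
  "count": 2,
  "complete": true,
  "results": [
    {"user": "uclnews", "text": "First example post"},
    {"user": "uclnews", "text": "Second example post"}
  ]
}
"""

data = json.loads(RAW)

# Mapping: JSON Object -> dict, Array -> list, String -> str,
# Number -> int or float, Boolean -> bool (and null -> None).
texts = [result["text"] for result in data["results"] if result["user"] == "uclnews"]
print(data["count"], data["complete"], texts)
```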

Comma-separated values are not a single, well-defined format but rather refer to any text file that: (a) is plain text using a character set such as ASCII, Unicode or EBCDIC; (b) consists of text records (e.g., one record per line); (c) with records divided into fields separated by delimiters (e.g., comma, semicolon and tab); and (d) where every record has the same sequence of fields.
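Because CSV is a loose convention rather than a single format, the delimiter often has to be detected or specified. The following is a minimal sketch using Python's standard-library `csv` module. It calls `csv.Sniffer` to guess the delimiter of a small, hypothetical semicolon-separated sample. The column names and values are invented.

```python
import csv
import io

# Hypothetical semicolon-delimited export.
SAMPLE = "date;source;mentions\n2014-06-01;twitter;120\n2014-06-02;twitter;95\n"


def read_records(text, candidates=",;\t"):
    """Guess the delimiter among the candidates, then return one dict per record."""
    dialect = csv.Sniffer().sniff(text, delimiters=candidates)
    reader = csv.DictReader(io.StringIO(text), dialect=dialect)
    return list(reader)


rows = read_records(SAMPLE)
print(rows[0]["source"], sum(int(row["mentions"]) for row in rows))
```

Sniffing is a heuristic. When the delimiter is known in advance, passing it explicitly (e.g., `csv.reader(f, delimiter=";")`) is more robust.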

4 Social media providers

Social media data resources broadly subdivide into those providing:

Freely available databases —repositories that can be freely downloaded, e.g., Wikipedia ( http://dumps.wikimedia.org ) and the Enron e-mail data set available via http://www.cs.cmu.edu/~enron/ .

Data access via tools —sources that provide controlled access to their social media data via dedicated tools, both to facilitate easy interrogation and to stop users ‘sucking’ all the data from the repository. An example is Google’s Trends. These are further subdivided into:

Free sources —repositories that are freely accessible, but the tools protect or may limit access to the ‘raw’ data in the repository, such as the range of tools provided by Google.

Commercial sources —data resellers that charge for access to their social media data. Gnip and DataSift provide commercial access to Twitter data through a partnership, and Thomson Reuters to news data.

Data access via APIs —social media data repositories providing programmable HTTP-based access to the data via APIs (e.g., Twitter, Facebook and Wikipedia).

4.1 Open-source databases

A major open source of social media data is Wikipedia, which offers free copies of all available content to interested users (Wikimedia Foundation 2014). These databases can be used for mirroring, database queries and social media analytics. They include dumps from any Wikimedia Foundation project (http://dumps.wikimedia.org/), English Wikipedia dumps in SQL and XML (http://dumps.wikimedia.org/enwiki/), etc.
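Because the XML dumps are very large and compressed, they are usually processed as a stream rather than loaded into memory. The Python sketch below reads page titles from a bz2-compressed MediaWiki dump with `xml.etree.ElementTree.iterparse`. It assumes the dump's `<page>`/`<title>` layout, ignores the XML namespace (whose version varies between dumps) and, so that it runs offline, writes a tiny fake dump instead of downloading a real file (whose name, e.g. `enwiki-latest-pages-articles.xml.bz2`, is likewise an assumption here).

```python
import bz2
import os
import tempfile
import xml.etree.ElementTree as ET


def local_name(tag: str) -> str:
    """Drop the namespace part of a tag, e.g. '{http://...}page' -> 'page'."""
    return tag.rsplit("}", 1)[-1]


def iter_page_titles(path: str):
    """Yield page titles from a bz2-compressed MediaWiki XML dump, one page at a time."""
    with bz2.open(path, "rb") as fh:
        for _event, elem in ET.iterparse(fh, events=("end",)):
            if local_name(elem.tag) != "page":
                continue
            for child in elem:
                if local_name(child.tag) == "title":
                    yield child.text
                    break
            elem.clear()  # release the finished <page> subtree


def write_fake_dump(directory: str) -> str:
    """Write a tiny, hypothetical stand-in for a real dump file."""
    sample = (
        b'<mediawiki xmlns="http://www.mediawiki.org/xml/export-0.10/">'
        b"<page><title>Alpha</title></page>"
        b"<page><title>Beta</title></page>"
        b"</mediawiki>"
    )
    path = os.path.join(directory, "sample-pages-articles.xml.bz2")
    with bz2.open(path, "wb") as fh:
        fh.write(sample)
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(list(iter_page_titles(write_fake_dump(tmp))))  # ['Alpha', 'Beta']
```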

Another example of freely available data for research is the World Bank Databank (http://databank.worldbank.org/data/databases.aspx), which provides over 40 databases, such as Gender Statistics; Health Nutrition and Population Statistics; Global Economic Prospects; World Development Indicators; and Global Development Finance. Most of the databases can be filtered by country/region, series/topic or time (years and quarters). In addition, tools are provided that allow reports to be customized and displayed in table, chart or map formats.

4.2 Data access via tools

As discussed, most commercial services provide access to social media data via online tools, both to control access to the raw data and increasingly to monetize the data.

4.2.1 Freely accessible sources

Google, with tools such as Trends and Insights, is a good example of this category. Google is the largest ‘scraper’ in the world, but it does its best to ‘discourage’ scraping of its own pages. (For an introduction to how to surreptitiously scrape Google, and avoid being ‘banned,’ see http://google-scraper.squabbel.com.) Google’s strategy is to provide a wide range of packages, such as Google Analytics, rather than the programmable HTTP-based APIs that are more useful from a researcher’s viewpoint.

Figure 2 illustrates how Google Trends displays a particular search term, in this case ‘libor.’ Using Google Trends you can compare up to five topics at a time and also see how often those topics have been mentioned and in which geographic regions the topics have been searched for the most.

Google Trends

4.2.2 Commercial sources

There is an increasing number of commercial services that scrape social networking media and then provide paid-for access via simple analytics tools. (The more comprehensive platforms with extensive analytics are reviewed in Sect. 8.) In addition, companies such as Twitter are both restricting free access to their data and licensing their data to commercial data resellers, such as Gnip and DataSift.

Gnip is the world’s largest provider of social data. Gnip was the first to partner with Twitter to make its social data available and has since been the first to work with Tumblr, Foursquare, WordPress, Disqus, StockTwits and other leading social platforms. Gnip delivers social data to customers in more than 40 countries, and Gnip’s customers deliver social media analytics to more than 95 % of the Fortune 500. Real-time data from Gnip can be delivered either as a ‘Firehose’ of every single activity or via PowerTrack, a proprietary filtering tool that allows users to build queries around only the data they need. PowerTrack rules can filter data streams based on keywords, geo boundaries, phrase matches and even the type of content or media in the activity. The company also offers enrichments to these data streams, such as Profile Geo (which adds significantly more usable geo data for Twitter), URL expansion and language detection, to further enhance the value of the data delivered. In addition to real-time data access, the company offers Historical PowerTrack and Search API access for Twitter, which give customers the ability to pull any Tweet since the first message on March 21, 2006.
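To make rule-based filtering concrete, here is a small Python sketch of a filter that combines keywords, an exact phrase and a geographic bounding box. It is a hypothetical illustration of the idea only: the `Rule` class, its fields and the `coordinates` (longitude, latitude) field of each activity are invented here and do not reproduce PowerTrack’s actual rule syntax or Gnip’s data format.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    """Hypothetical filter rule; NOT Gnip's PowerTrack syntax."""
    keywords: tuple[str, ...] = ()       # all must occur as whole words (case-insensitive)
    phrase: Optional[str] = None         # exact phrase that must occur
    bbox: Optional[tuple[float, float, float, float]] = None  # (west, south, east, north)

    def matches(self, activity: dict) -> bool:
        text = activity.get("text", "").lower()
        words = set(re.findall(r"\w+", text))
        if any(k.lower() not in words for k in self.keywords):
            return False
        if self.phrase and self.phrase.lower() not in text:
            return False
        if self.bbox:
            coords = activity.get("coordinates")  # assumed (lon, lat) pair
            if coords is None:
                return False
            lon, lat = coords
            west, south, east, north = self.bbox
            if not (west <= lon <= east and south <= lat <= north):
                return False
        return True


def filter_stream(activities, rules):
    """Yield activities that match at least one rule (rules are OR-ed)."""
    for activity in activities:
        if any(rule.matches(activity) for rule in rules):
            yield activity


if __name__ == "__main__":
    stream = [
        {"text": "Bank fined over Libor rigging", "coordinates": (-0.12, 51.5)},
        {"text": "Libor reform debated in New York", "coordinates": (-74.0, 40.7)},
        {"text": "Weather update"},
    ]
    london = Rule(keywords=("libor",), bbox=(-0.51, 51.28, 0.33, 51.69))
    print([a["text"] for a in filter_stream(stream, [london])])
```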

Gnip provides access to premium feeds (Gnip’s ‘Complete Access’ sources are publishers that have an agreement with Gnip to resell their data) and free data feeds (Gnip’s ‘Managed Public API Access’ sources provide access to normalized and consolidated free data from their APIs, although this requires Gnip’s paid services for the Data Collectors) via its dashboard (see Fig. 3). The dashboard shows the user only the feeds that were paid for under a sales agreement. To select a feed, the user clicks on a publisher and then chooses a specific feed from that publisher, as shown in Fig. 3. Different types of feeds serve different use cases and correspond to different types of queries and API endpoints on the publisher’s source API. After the feed is selected, Gnip assists the user in configuring it with any required parameters before it begins collecting data; this includes adding at least one rule. Under ‘Get Data’ → ‘Advanced Settings,’ the user can also configure how often the feed queries the source API for data (the ‘query rate’) and choose between the publisher’s native data format and Gnip’s Activity Streams format (XML for Enterprise Data Collector feeds).

Gnip Dashboard, Publishers and Feeds

4.3 Data feed access via APIs

For researchers, arguably the most useful sources of social media data are those that provide programmable access via APIs, typically using HTTP-based protocols. Given their importance to academics, we review each of these sources in turn: wikis, social networking media, RSS feeds, news, etc.

4.3.1 Wiki media

Wikipedia (and wikis in general) provides academics with large open-source repositories of user-generated (crowd-sourced) content. What is not widely known is that Wikipedia provides HTTP-based APIs that allow programmable access and searching (i.e., scraping) and return data in a variety of formats, including XML. In fact, the API is not unique to Wikipedia but is part of MediaWiki’s (http://www.mediawiki.org/) open-source toolkit, and hence can be used with any MediaWiki-based wiki.

The wiki HTTP-based API works by accepting requests containing one or more input arguments and returning strings, often in XML format, that can be parsed and used by the requesting client. Other supported formats include JSON, WDDX, YAML and serialized PHP. An example request is http://en.wikipedia.org/w/api.php?action=query&list=allcategories&acprop=size&acprefix=hollywood&format=xml.

The HTTP request must contain: (a) the requested ‘action,’ such as a query, edit or delete operation; (b) authentication, for actions that require it (e.g., edits); and (c) any other parameters supported by that action. For example, the above request returns an XML string listing the first 10 Wikipedia categories with the prefix ‘hollywood.’ Vaswani (2011) provides a detailed description of how to scrape Wikipedia using an Apache/PHP development environment and an HTTP client capable of transmitting GET and POST requests and handling responses.
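A minimal Python sketch of the example request above, using only the standard library (and HTTPS). The read-only `query` action needs no authentication; `category_names` assumes that each category is returned as a `<c>` element in the XML response, and the User-Agent string in `fetch` is a placeholder.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET

API = "https://en.wikipedia.org/w/api.php"


def allcategories_url(prefix: str) -> str:
    """Build the read-only 'query' request listing categories with a given prefix."""
    params = {
        "action": "query",          # (a) the requested action
        "list": "allcategories",    # (c) parameters supported by that action
        "acprop": "size",
        "acprefix": prefix,
        "format": "xml",
    }
    return API + "?" + urllib.parse.urlencode(params)


def category_names(xml_data) -> list[str]:
    """Category names from the response, ASSUMING each is a <c> element."""
    return [c.text for c in ET.fromstring(xml_data).iter("c")]


def fetch(url: str) -> bytes:
    """HTTP GET; Wikimedia asks clients to identify themselves via User-Agent."""
    request = urllib.request.Request(
        url, headers={"User-Agent": "example-research-script/0.1 (placeholder contact)"}
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read()


if __name__ == "__main__":
    url = allcategories_url("hollywood")
    print(url)
    # Live call (needs network): print(category_names(fetch(url)))
    sample = '<api><query><allcategories><c size="3">Hollywood</c></allcategories></query></api>'
    print(category_names(sample))  # ['Hollywood']
```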

4.3.2 Social networking media

As with Wikipedia, popular social networks, such as Facebook, Twitter and Foursquare, make a proportion of their data accessible via APIs.

Although many social networking media sites provide APIs, not all sites (e.g., Bing, LinkedIn and Skype) provide API access for scraping data. While more and more social networks are shifting to publicly available content, many leading networks are restricting free access, even to academics. For example, Foursquare announced in December 2013 that it would no longer allow private check-ins on iOS 7, and it has since partnered with Gnip to provide a continuous stream of anonymized check-in data. The data is available in two packages: the full Firehose access level and a filtered version via Gnip’s PowerTrack service. Here, we briefly discuss the APIs provided by Twitter and Facebook.

4.3.2.1 Twitter

By default, users’ Tweets are public, although users can protect their Tweets and make them visible only to their approved followers. Fewer than 10 % of all Twitter accounts are private. Tweets from public accounts (including replies and mentions) are available in JSON format through Twitter’s Search API, for batch requests of past data, and through the Streaming API, for near-real-time data.

Search API: queries Twitter for recent Tweets containing specific keywords. It is part of the Twitter REST API v1.1 (REST, which stands for Representational State Transfer, is an architectural style whose design principles the API attempts to follow) and requires an authorized application (using OAuth, the open standard for authorization) before any results can be retrieved from the API.

Streaming API: a real-time stream of Tweets, filtered by user ID, keyword, geographic location or random sampling.

One may retrieve recent Tweets containing particular keywords through Twitter’s Search API (part of REST API v1.1) with the API call https://api.twitter.com/1.1/search/tweets.json?q=APPLE, and real-time data with the Streaming API call https://stream.twitter.com/1/statuses/sample.json.
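The Search API call can be sketched in Python as follows. The sketch assumes application-only authentication with an OAuth 2.0 bearer token (the token is a placeholder) and builds the request without sending it; the v1.1 endpoints quoted in the text may since have been retired or restricted, so this only illustrates the shape of the request.

```python
import urllib.parse
import urllib.request

SEARCH_URL = "https://api.twitter.com/1.1/search/tweets.json"


def build_search_request(query: str, bearer_token: str, count: int = 15) -> urllib.request.Request:
    """Build (but do not send) a Search API request for recent Tweets matching `query`."""
    params = urllib.parse.urlencode({"q": query, "count": count})
    return urllib.request.Request(
        f"{SEARCH_URL}?{params}",
        # Application-only OAuth 2.0 bearer token (placeholder value in the example).
        headers={"Authorization": f"Bearer {bearer_token}"},
    )


if __name__ == "__main__":
    req = build_search_request("APPLE", "YOUR_BEARER_TOKEN")
    print(req.full_url)
    # Sending it would look like: json.load(urllib.request.urlopen(req))
```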

Twitter’s Streaming API allows data to be accessed either by filtering (by keywords, user IDs or location) or by sampling all public updates. The default access level, ‘Spritzer,’ allows sampling of roughly 1 % of all public statuses, with the option to retrieve 10 % of all statuses via the ‘Gardenhose’ access level (more suitable for data mining and research applications). In social media, the complete, unfiltered stream is often called the Firehose: a syndication feed that publishes all public activities as they happen in one big stream. Twitter has recently announced the Twitter Data Grants program, through which researchers can apply for access to Twitter’s public Tweets and historical data in order to gain insights from its massive data set (more than 500 million Tweets are posted per day). Research institutions and academics will not get Firehose access; instead, they will get only the data set needed for their research project. Researchers can apply at https://engineering.twitter.com/research/data-grants.
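Streaming responses are typically consumed line by line. The Python sketch below assumes, as for Twitter’s streaming endpoints, that each message is one JSON object on its own line and that blank lines are sent as keep-alives; the `"text"` check used to separate Tweets from other messages (such as deletion notices) is likewise an assumption.

```python
import json
from typing import Iterable, Iterator


def iter_statuses(lines: Iterable[bytes]) -> Iterator[dict]:
    """Decode a newline-delimited JSON stream, skipping blank keep-alive lines."""
    for raw in lines:
        line = raw.strip()
        if not line:          # keep-alive: carries no data
            continue
        message = json.loads(line)
        if "text" in message:  # ignore non-Tweet messages, e.g. deletion notices
            yield message


if __name__ == "__main__":
    fake_stream = [
        b'{"id": 1, "text": "first"}\r\n',
        b"\r\n",
        b'{"delete": {"status": {"id": 2}}}\r\n',
        b'{"id": 3, "text": "second"}\r\n',
    ]
    print([s["id"] for s in iter_statuses(fake_stream)])  # [1, 3]
```

With a live connection, the generator could be fed the HTTP response object directly, since iterating over it yields lines, e.g. `for status in iter_statuses(urllib.request.urlopen(req)): ...`.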

Twitter results are stored in a JSON array of objects containing the fields shown in Fig. 4. The JSON array consists of a list of objects matching the supplied filters and search string, where each object is a Tweet whose structure is specified by the object’s fields, e.g., ‘created_at’ and ‘from_user’. The example in Fig. 4 is the output of calling Twitter’s GET search API via http://search.twitter.com/search.json?q=financial%20times&rpp=1&include_entities=true&result_type=mixed, where the parameters specify that the search query is ‘financial times,’ that there is one result per page, that each Tweet should have a node called ‘entities’ (i.e., metadata about the Tweet) and that ‘mixed’ result types should be returned, i.e., both popular and real-time results.

Example Output in JSON for Twitter REST API v1
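The request in Fig. 4 and the extraction of a few fields can be sketched in Python. Passing `quote_via=urllib.parse.quote` to `urlencode` encodes the space in ‘financial times’ as `%20`, reproducing the URL quoted above; `summarize` assumes the Tweets sit in a top-level `results` array, and the v1 endpoint itself has long been retired.

```python
import urllib.parse

# Retired v1 endpoint, as quoted in the text.
BASE = "http://search.twitter.com/search.json"


def v1_search_url(query: str, rpp: int = 1) -> str:
    params = {"q": query, "rpp": rpp, "include_entities": "true", "result_type": "mixed"}
    # quote_via=quote encodes spaces as %20 (urlencode's default would use '+').
    return BASE + "?" + urllib.parse.urlencode(params, quote_via=urllib.parse.quote)


def summarize(payload: dict) -> list[tuple[str, str, str]]:
    """(created_at, from_user, text) per Tweet; ASSUMES a top-level 'results' array."""
    return [(t["created_at"], t["from_user"], t["text"]) for t in payload.get("results", [])]


if __name__ == "__main__":
    print(v1_search_url("financial times"))
    # http://search.twitter.com/search.json?q=financial%20times&rpp=1&include_entities=true&result_type=mixed
```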

4.3.2.2 Facebook

Facebook’s privacy settings are more complex than Twitter’s, so many status messages are harder to obtain than Tweets, requiring users to grant ‘open authorization’ (OAuth) access. Facebook currently stores all data as objects (see Footnote 1) and has a series of APIs, ranging from the Graph and Public Feed APIs to the Keyword Insight API. To access the properties of an object, its unique ID must be known to make the API call. Facebook’s Search API (part of Facebook’s Graph API) can be accessed by calling https://graph.facebook.com/search?q=QUERY&type=page. The detailed API query format is shown in Fig. 5. Here, ‘QUERY’ can be replaced by any search term, and ‘page’ can be replaced with another object type: ‘post,’ ‘user,’ ‘event,’ ‘group,’ ‘place,’ ‘checkin,’ ‘location’ or ‘placetopic.’ The results of this search contain the unique ID of each object. Given the ID of a particular search result, one can call https://graph.facebook.com/ID to obtain further details, such as a page’s number of ‘likes.’ This kind of information is of interest to companies for brand awareness and competition monitoring.

Facebook Graph API Search Query Format

The Facebook Graph API search queries require an access token included in the request. Searching for pages and places requires an ‘app access token’, whereas searching for other types requires a user access token.
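The Graph API search URLs described above can be assembled in Python as follows. This is a sketch: the access token is a placeholder passed as the `access_token` query parameter, `ids_from_search` assumes results arrive in a `data` array of objects with an `id` field, and several of the search types listed above have since been restricted by Facebook, so current API versions may reject these requests.

```python
import urllib.parse

GRAPH = "https://graph.facebook.com"


def search_url(query: str, obj_type: str, access_token: str) -> str:
    """Search URL of the form /search?q=QUERY&type=TYPE, plus the access token."""
    params = {"q": query, "type": obj_type, "access_token": access_token}
    return f"{GRAPH}/search?{urllib.parse.urlencode(params)}"


def object_url(object_id: str, access_token: str) -> str:
    """URL for one object's details (e.g. a page's 'likes'), given its ID."""
    token = urllib.parse.urlencode({"access_token": access_token})
    return f"{GRAPH}/{urllib.parse.quote(object_id)}?{token}"


def ids_from_search(payload: dict) -> list[str]:
    """Object IDs from a search response; ASSUMES a 'data' array of {'id': ...} objects."""
    return [item["id"] for item in payload.get("data", [])]


if __name__ == "__main__":
    print(search_url("Centrica", "page", "APP_ACCESS_TOKEN"))
    for object_id in ids_from_search({"data": [{"id": "12345", "name": "Example"}]}):
        print(object_url(object_id, "APP_ACCESS_TOKEN"))
```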

Replacing ‘page’ with ‘post’ in the aforementioned search URL will return all public statuses containing the search term (see Footnote 2). Batch requests can be sent by following the procedure outlined at https://developers.facebook.com/docs/reference/api/batch/, and information on retrieving real-time updates can be found at https://developers.facebook.com/docs/reference/api/realtime/. Facebook also returns data in JSON format, so the data can be retrieved and stored using the same methods as for Twitter data, although the fields differ depending on the search type, as illustrated in Fig. 6.

Facebook Graph API Search Results for q=‘Centrica’ and type=‘page’
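A batch request bundles several Graph API calls into one HTTP POST to https://graph.facebook.com, with the individual calls passed as a JSON array in a `batch` parameter. A minimal Python sketch that only builds such a request; the token and the relative URLs are placeholders.

```python
import json
import urllib.parse
import urllib.request

GRAPH = "https://graph.facebook.com"


def batch_request(relative_urls: list[str], access_token: str) -> urllib.request.Request:
    """Build (but do not send) one POST that bundles several GET calls."""
    batch = [{"method": "GET", "relative_url": url} for url in relative_urls]
    body = urllib.parse.urlencode(
        {"access_token": access_token, "batch": json.dumps(batch)}
    ).encode("ascii")
    return urllib.request.Request(GRAPH, data=body)  # supplying data makes it a POST


if __name__ == "__main__":
    req = batch_request(["search?q=Centrica&type=page", "12345"], "ACCESS_TOKEN")
    print(req.get_method(), req.full_url)  # POST https://graph.facebook.com
```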

4.3.3 RSS feeds

A large number of Web sites already provide access to content via RSS feeds. RSS is a syndication standard for publishing regular updates to web-based content, based on a type of XML file that resides on an Internet server. For Web sites, RSS feeds can be created manually or automatically (with software).
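A minimal Python sketch of reading an RSS 2.0 feed with `xml.etree.ElementTree`. The feed is invented; real feeds may use Atom or extra XML namespaces, for which a dedicated library such as the third-party `feedparser` package is often more convenient.

```python
import xml.etree.ElementTree as ET

# Made-up RSS 2.0 feed: a <channel> containing <item> entries.
FEED = """<rss version="2.0">
  <channel>
    <title>Example News</title>
    <link>https://example.org/</link>
    <description>Made-up feed for illustration</description>
    <item>
      <title>First headline</title>
      <link>https://example.org/1</link>
      <pubDate>Mon, 06 Jan 2014 09:00:00 GMT</pubDate>
    </item>
    <item>
      <title>Second headline</title>
      <link>https://example.org/2</link>
    </item>
  </channel>
</rss>"""


def parse_rss(xml_text: str) -> list[dict]:
    """Return title, link and pubDate for each <item> in the feed's <channel>."""
    channel = ET.fromstring(xml_text).find("channel")
    return [
        {
            "title": item.findtext("title"),
            "link": item.findtext("link"),
            "pubDate": item.findtext("pubDate"),  # None when the element is absent
        }
        for item in channel.findall("item")
    ]


if __name__ == "__main__":
    for entry in parse_rss(FEED):
        print(entry["title"], entry["link"])
```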