Machine learning tasks in ML.NET

A machine learning task is the type of prediction or inference being made, based on the problem or question that is being asked, and the available data. For example, the classification task assigns data to categories, and the clustering task groups data according to similarity.

Machine learning tasks rely on patterns in the data rather than being explicitly programmed.

This article describes the different machine learning tasks that you can choose from in ML.NET and some common use cases.

Once you have decided which task fits your scenario, you need to choose the best algorithm to train your model. The available algorithms are listed in the section for each task.

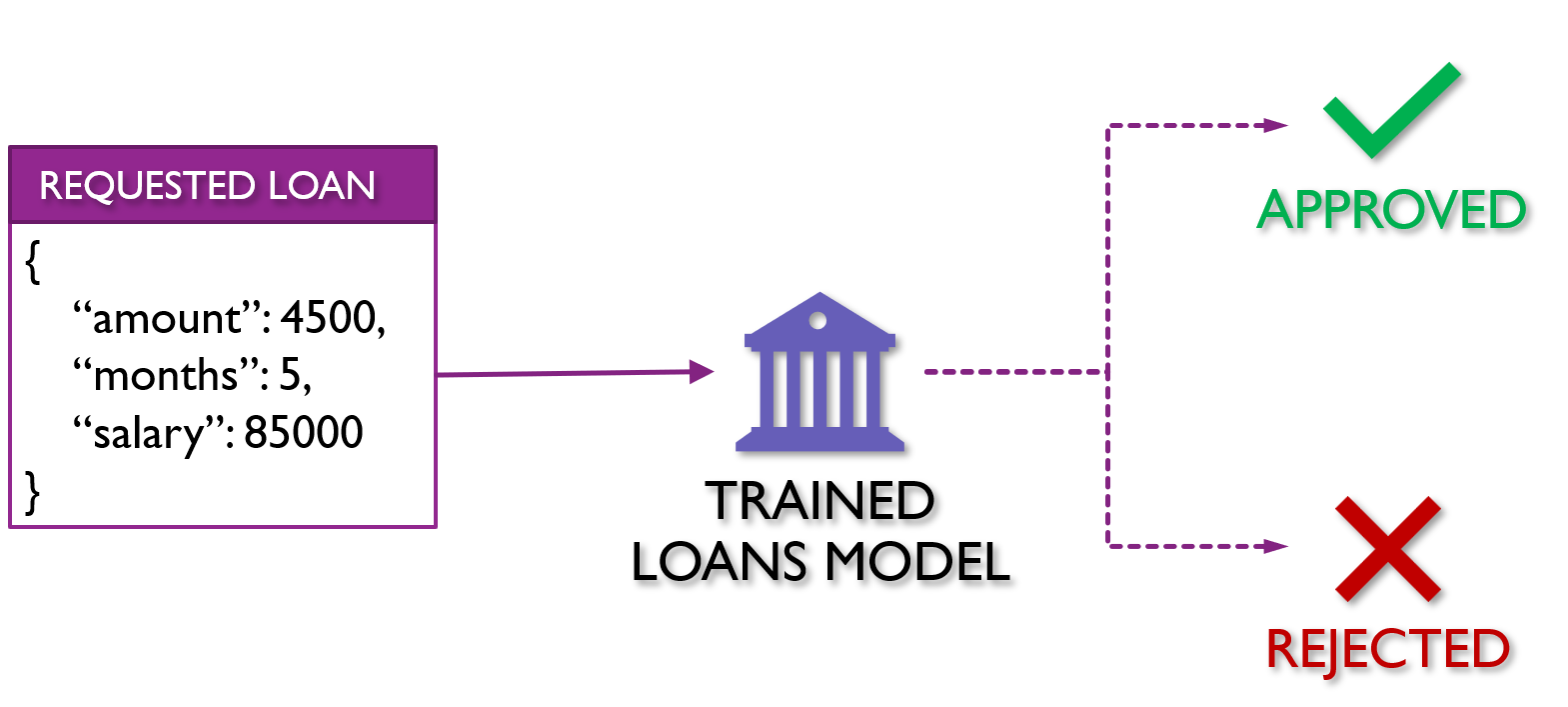

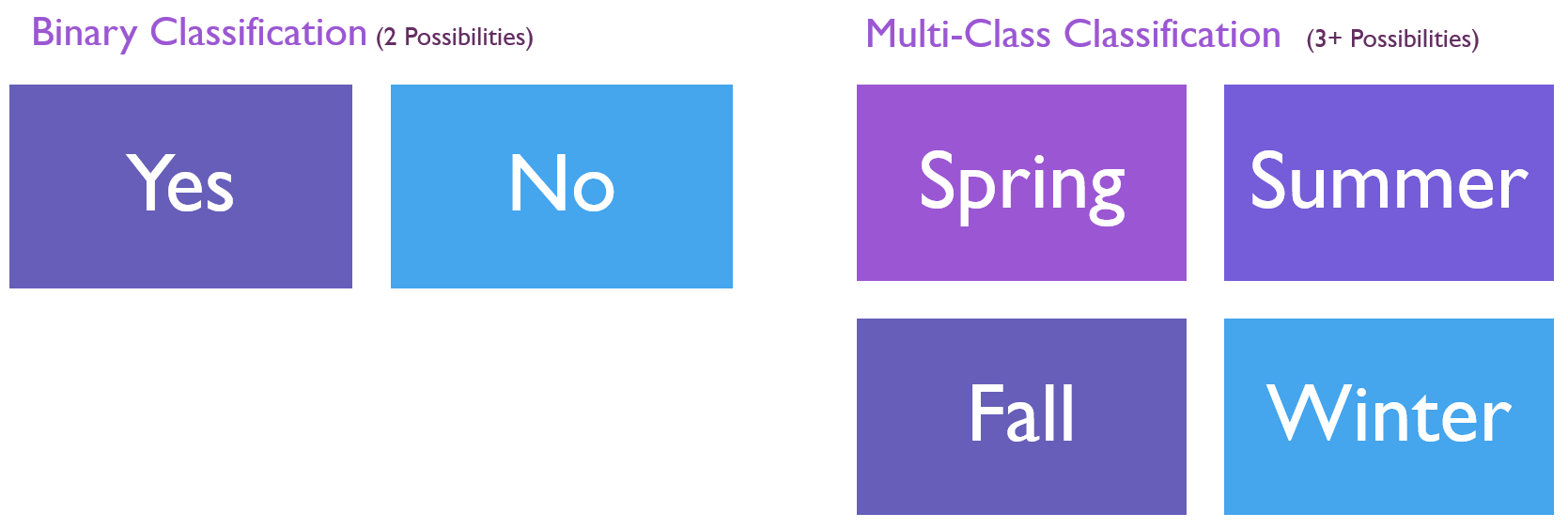

Binary classification

A supervised machine learning task that is used to predict which of two classes (categories) an instance of data belongs to. The input of a classification algorithm is a set of labeled examples, where each label is an integer of either 0 or 1. The output of a binary classification algorithm is a classifier, which you can use to predict the class of new unlabeled instances. Examples of binary classification scenarios include:

- Understanding sentiment of Twitter comments as either "positive" or "negative".

- Diagnosing whether a patient has a certain disease or not.

- Making a decision to mark an email as "spam" or not.

- Determining if a photo contains a particular item or not, such as a dog or fruit.

For more information, see the Binary classification article on Wikipedia.

Binary classification trainers

You can train a binary classification model using the following algorithms:

- AveragedPerceptronTrainer

- SdcaLogisticRegressionBinaryTrainer

- SdcaNonCalibratedBinaryTrainer

- SymbolicSgdLogisticRegressionBinaryTrainer

- LbfgsLogisticRegressionBinaryTrainer

- LightGbmBinaryTrainer

- FastTreeBinaryTrainer

- FastForestBinaryTrainer

- GamBinaryTrainer

- FieldAwareFactorizationMachineTrainer

- PriorTrainer

- LinearSvmTrainer

Binary classification inputs and outputs

For best results with binary classification, the training data should be balanced (that is, equal numbers of positive and negative training data). Missing values should be handled before training.

The input label column data must be Boolean. The input features column data must be a fixed-size vector of Single.

These trainers output the following columns:

| Output Column Name | Column Type | Description |
|---|---|---|
| Score | Single | The raw score that was calculated by the model. |
| PredictedLabel | Boolean | The predicted label, based on the sign of the score. A negative score maps to false and a positive score maps to true. |
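The mapping from raw score to predicted label can be sketched in a few lines. This is an illustrative Python sketch rather than ML.NET code: the function name `predicted_label` is hypothetical, and treating a score of exactly zero as negative is an assumption that the text does not settle.

```python
def predicted_label(score: float) -> bool:
    """Map a raw classifier score to a Boolean label by its sign."""
    # Assumption: a score of exactly 0.0 is treated as the negative class.
    return score > 0.0


scores = [-2.3, 0.7, 4.1, -0.05]
labels = [predicted_label(s) for s in scores]
print(labels)  # [False, True, True, False]
```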

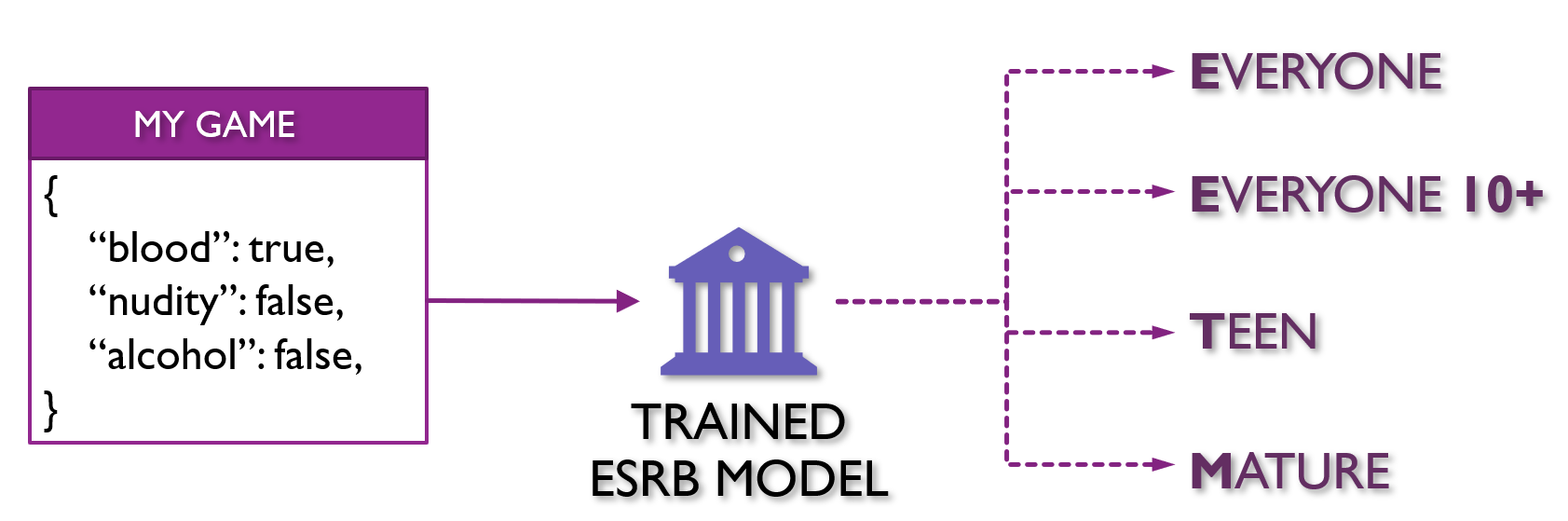

Multiclass classification

A supervised machine learning task that is used to predict the class (category) of an instance of data. The input of a classification algorithm is a set of labeled examples. Each label normally starts as text. It is then run through the TermTransform, which converts it to the Key (numeric) type. The output of a classification algorithm is a classifier, which you can use to predict the class of new unlabeled instances. Examples of multi-class classification scenarios include:

- Categorizing flights as "early", "on time", or "late".

- Understanding movie reviews as "positive", "neutral", or "negative".

- Categorizing hotel reviews as "location", "price", "cleanliness", etc.

For more information, see the Multiclass classification article on Wikipedia.

One vs all upgrades any binary classification learner to act on multiclass datasets. More information on Wikipedia.
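The one-vs-all idea can be sketched as follows: train one binary scorer per class (that class against everything else) and predict the class whose scorer is most confident. This is a plain-Python illustration, not the ML.NET OneVersusAllTrainer; `train_binary` is a hypothetical toy learner on a single numeric feature (minutes of delay), chosen only to keep the sketch short.

```python
from statistics import mean


def train_binary(xs, is_positive):
    """Toy binary learner on 1-D features (hypothetical, for illustration)."""
    pos = mean([x for x, p in zip(xs, is_positive) if p])
    neg = mean([x for x, p in zip(xs, is_positive) if not p])
    # Score is positive when x is closer to the positive mean than the negative mean.
    return lambda x: abs(x - neg) - abs(x - pos)


def train_one_vs_all(xs, ys):
    """Train one binary scorer per class: that class against all the others."""
    classes = sorted(set(ys))
    scorers = {c: train_binary(xs, [y == c for y in ys]) for c in classes}
    # Predict the class whose binary scorer returns the highest score.
    return lambda x: max(classes, key=lambda c: scorers[c](x))


# Feature: minutes of delay (made-up values for the flight example).
xs = [-10.0, -8.0, 0.0, 2.0, 30.0, 35.0]
ys = ["early", "early", "on time", "on time", "late", "late"]

predict = train_one_vs_all(xs, ys)
print(predict(-9.0), predict(1.0), predict(33.0))
```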

Multiclass classification trainers

You can train a multiclass classification model using the following training algorithms:

- LightGbmMulticlassTrainer

- SdcaMaximumEntropyMulticlassTrainer

- SdcaNonCalibratedMulticlassTrainer

- LbfgsMaximumEntropyMulticlassTrainer

- NaiveBayesMulticlassTrainer

- OneVersusAllTrainer

- PairwiseCouplingTrainer

Multiclass classification inputs and outputs

The input label column data must be key type. The feature column must be a fixed-size vector of Single.

This trainer outputs the following:

| Output Name | Type | Description |
|---|---|---|
| Score | Vector of Single | The scores of all classes. A higher value means a higher probability of falling into the associated class. If the i-th element has the largest value, the predicted label index is i. Note that i is a zero-based index. |
| PredictedLabel | key type | The predicted label's index. If its value is i, the actual label is the i-th category in the key-valued input label type. |
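How the score vector relates to the predicted label can be sketched like this. The helper `predict_from_scores` and the category list are hypothetical; the sketch only illustrates the zero-based argmax rule described in the table, not how ML.NET stores key types internally.

```python
def predict_from_scores(scores, categories):
    """Return (index, category) of the highest-scoring class."""
    # Zero-based index of the largest score.
    index = max(range(len(scores)), key=lambda i: scores[i])
    return index, categories[index]


categories = ["early", "on time", "late"]  # hypothetical key-valued labels
scores = [0.12, 0.71, 0.17]                # one score per class
print(predict_from_scores(scores, categories))  # (1, 'on time')
```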

Regression

A supervised machine learning task that is used to predict the value of the label from a set of related features. The label can be of any real value and is not from a finite set of values as in classification tasks. Regression algorithms model the dependency of the label on its related features to determine how the label will change as the values of the features are varied. The input of a regression algorithm is a set of examples with labels of known values. The output of a regression algorithm is a function, which you can use to predict the label value for any new set of input features. Examples of regression scenarios include:

- Predicting house prices based on house attributes such as number of bedrooms, location, or size.

- Predicting future stock prices based on historical data and current market trends.

- Predicting sales of a product based on advertising budgets.

Regression trainers

You can train a regression model using the following algorithms:

- LbfgsPoissonRegressionTrainer

- LightGbmRegressionTrainer

- SdcaRegressionTrainer

- OnlineGradientDescentTrainer

- FastTreeRegressionTrainer

- FastTreeTweedieTrainer

- FastForestRegressionTrainer

- GamRegressionTrainer

Regression inputs and outputs

The input label column data must be Single.

The trainers for this task output the following:

| Output Name | Type | Description |
|---|---|---|
| Score | Single | The raw score that was predicted by the model. |

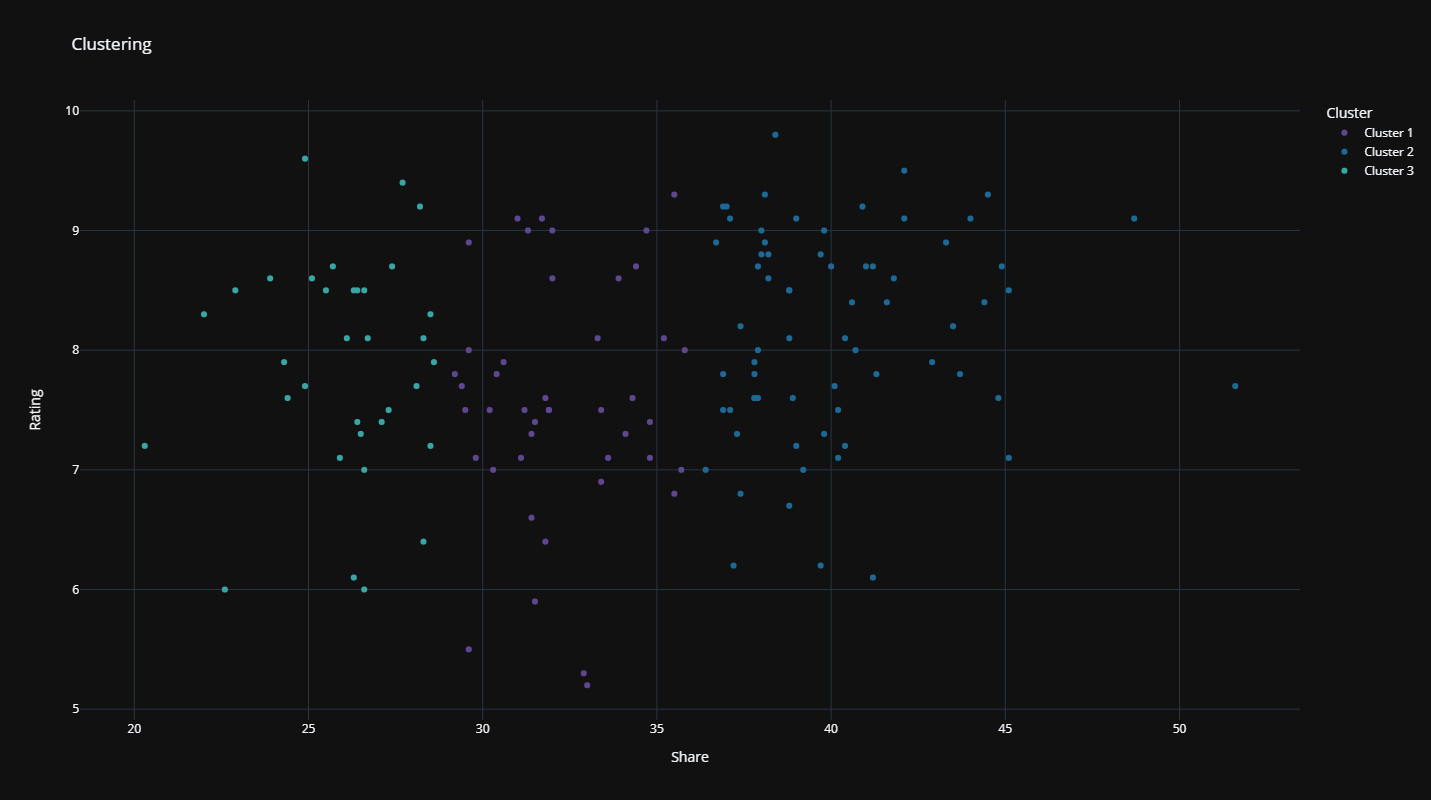

Clustering

An unsupervised machine learning task that is used to group instances of data into clusters that contain similar characteristics. Clustering can also be used to identify relationships in a dataset that you might not logically derive by browsing or simple observation. The inputs and outputs of a clustering algorithm depend on the methodology chosen. You can take a distribution, centroid, connectivity, or density-based approach. ML.NET currently supports a centroid-based approach using K-Means clustering. Examples of clustering scenarios include:

- Understanding segments of hotel guests based on habits and characteristics of hotel choices.

- Identifying customer segments and demographics to help build targeted advertising campaigns.

- Categorizing inventory based on manufacturing metrics.

Clustering trainer

You can train a clustering model using the following algorithm:

- KMeansTrainer

Clustering inputs and outputs

The input features data must be Single. No labels are needed.

| Output Name | Type | Description |
|---|---|---|
| Score | Vector of Single | The distances of the given data point to all clusters' centroids. |
| PredictedLabel | key type | The closest cluster's index predicted by the model. |
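The two outputs of a centroid-based clusterer can be sketched as below. This is plain Python, not the KMeansTrainer; the function name `cluster_outputs`, the example centroids, and the use of Euclidean distance are assumptions for illustration.

```python
import math


def cluster_outputs(point, centroids):
    """Return (distances to every centroid, index of the closest centroid)."""
    # Assumption: Euclidean distance between the point and each centroid.
    distances = [math.dist(point, c) for c in centroids]
    closest = min(range(len(centroids)), key=lambda i: distances[i])
    return distances, closest


centroids = [(0.0, 0.0), (5.0, 5.0), (10.0, 0.0)]  # hypothetical trained centroids
distances, closest = cluster_outputs((4.0, 4.0), centroids)
print([round(d, 3) for d in distances], closest)
```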

Anomaly detection

This task creates an anomaly detection model by using Principal Component Analysis (PCA). PCA-Based Anomaly Detection helps you build a model in scenarios where it is easy to obtain training data from one class, such as valid transactions, but difficult to obtain sufficient samples of the targeted anomalies.

An established technique in machine learning, PCA is frequently used in exploratory data analysis because it reveals the inner structure of the data and explains the variance in the data. PCA works by analyzing data that contains multiple variables. It looks for correlations among the variables and determines the combination of values that best captures differences in outcomes. These combined feature values are used to create a more compact feature space called the principal components.

Anomaly detection encompasses many important tasks in machine learning:

- Identifying transactions that are potentially fraudulent.

- Learning patterns that indicate that a network intrusion has occurred.

- Finding abnormal clusters of patients.

- Checking values entered into a system.

Because anomalies are rare events by definition, it can be difficult to collect a representative sample of data to use for modeling. The algorithms included in this category have been especially designed to address the core challenges of building and training models by using imbalanced data sets.

Anomaly detection trainer

You can train an anomaly detection model using the following algorithm:

- RandomizedPcaTrainer

Anomaly detection inputs and outputs

The input features must be a fixed-size vector of Single.

| Output Name | Type | Description |
|---|---|---|
| Score | Single | The non-negative, unbounded score that was calculated by the anomaly detection model. |
| PredictedLabel | Boolean | A true/false value representing whether the input is an anomaly (PredictedLabel=true) or not (PredictedLabel=false). |

Ranking

A ranking task constructs a ranker from a set of labeled examples. This example set consists of instance groups that can be scored with given criteria. The ranking labels are { 0, 1, 2, 3, 4 } for each instance. The ranker is trained to rank new instance groups with unknown scores for each instance. ML.NET ranking learners are based on machine-learned ranking.

Ranking training algorithms

You can train a ranking model with the following algorithms:

- LightGbmRankingTrainer

- FastTreeRankingTrainer

Ranking inputs and outputs

The input label data type must be key type or Single. The value of the label determines relevance, where higher values indicate higher relevance. If the label is a key type, then the key index is the relevance value, where the smallest index is the least relevant. If the label is a Single, larger values indicate higher relevance.

The feature data must be a fixed-size vector of Single, and the input row group column must be key type.

| Output Name | Type | Description |
|---|---|---|
| Score | Single | The unbounded score that was calculated by the model to determine the prediction. |
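Turning per-instance scores into a ranking within each group can be sketched as follows. The row layout `(group_id, item, score)` and the function name `rank_groups` are hypothetical; the sketch simply sorts each group by score, highest first, which is how an unbounded relevance score is typically consumed.

```python
from collections import defaultdict


def rank_groups(rows):
    """Group (group_id, item, score) rows and order each group by score, best first."""
    groups = defaultdict(list)
    for group_id, item, score in rows:
        groups[group_id].append((score, item))
    return {
        group_id: [item for _, item in sorted(pairs, key=lambda p: p[0], reverse=True)]
        for group_id, pairs in groups.items()
    }


rows = [
    ("q1", "a", 0.2),
    ("q1", "b", 1.5),
    ("q2", "c", -0.3),
    ("q1", "d", 0.9),
    ("q2", "e", 0.4),
]
ranking = rank_groups(rows)
print(ranking)  # {'q1': ['b', 'd', 'a'], 'q2': ['e', 'c']}
```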

Recommendation

A recommendation task enables producing a list of recommended products or services. ML.NET uses Matrix factorization (MF), a collaborative filtering algorithm, for recommendations when you have historical product rating data in your catalog. For example, you have historical movie rating data for your users and want to recommend other movies they are likely to watch next.

Recommendation training algorithms

You can train a recommendation model with the following algorithm:

- MatrixFactorizationTrainer

Forecasting

The forecasting task uses past time-series data to make predictions about future behavior. Scenarios applicable to forecasting include weather forecasting, seasonal sales predictions, and predictive maintenance.

Forecasting trainers

You can train a forecasting model with the following algorithm:

- ForecastBySsa

Image classification

A supervised machine learning task that is used to predict the class (category) of an image. The input is a set of labeled examples. Each label normally starts as text. It is then run through the TermTransform, which converts it to the Key (numeric) type. The output of the image classification algorithm is a classifier, which you can use to predict the class of new images. The image classification task is a type of multiclass classification. Examples of image classification scenarios include:

- Determining the breed of a dog as a "Siberian Husky", "Golden Retriever", "Poodle", etc.

- Determining if a manufacturing product is defective or not.

- Determining the type of a flower as "Rose", "Sunflower", etc.

Image classification trainers

You can train an image classification model using the following training algorithms:

- ImageClassificationTrainer

Image classification inputs and outputs

The input label column data must be key type. The feature column must be a variable-sized vector of Byte.

This trainer outputs the following columns:

| Output Name | Type | Description |
|---|---|---|
| Score | Vector of Single | The scores of all classes. A higher value means a higher probability of falling into the associated class. If the i-th element has the largest value, the predicted label index is i. Note that i is a zero-based index. |
| PredictedLabel | key type | The predicted label's index. If its value is i, the actual label is the i-th category in the key-valued input label type. |

Object detection

A supervised machine learning task that is used to predict the class (category) of an image but also gives a bounding box to where that category is within the image. Instead of classifying a single object in an image, object detection can detect multiple objects within an image. Examples of object detection include:

- Detecting cars, signs, or people on images of a road.

- Detecting defects on images of products.

- Detecting areas of concern on X-Ray images.

Object detection model training is currently only available in Model Builder using Azure Machine Learning.

An introduction to machine learning with scikit-learn

Machine learning: the problem setting

In general, a learning problem considers a set of n samples of data and then tries to predict properties of unknown data. If each sample is more than a single number and, for instance, a multi-dimensional entry (aka multivariate data), it is said to have several attributes or features.

Learning problems fall into a few categories:

supervised learning , in which the data comes with additional attributes that we want to predict ( Click here to go to the scikit-learn supervised learning page).This problem can be either:

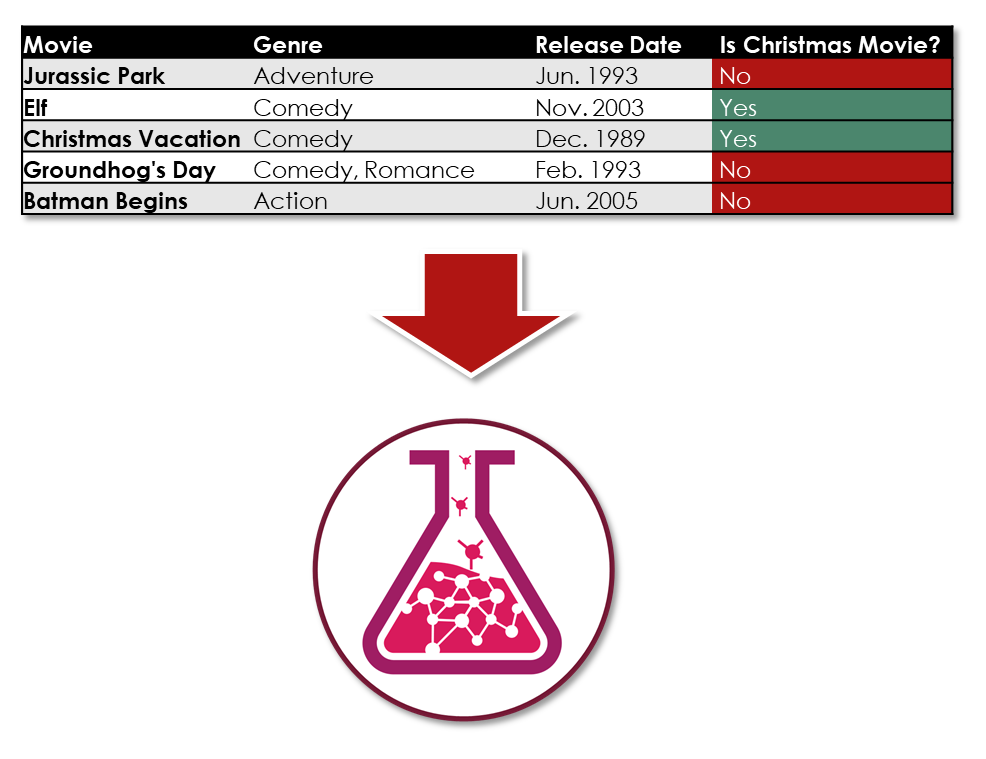

classification : samples belong to two or more classes and we want to learn from already labeled data how to predict the class of unlabeled data. An example of a classification problem would be handwritten digit recognition, in which the aim is to assign each input vector to one of a finite number of discrete categories. Another way to think of classification is as a discrete (as opposed to continuous) form of supervised learning where one has a limited number of categories and for each of the n samples provided, one is to try to label them with the correct category or class.

regression : if the desired output consists of one or more continuous variables, then the task is called regression . An example of a regression problem would be the prediction of the length of a salmon as a function of its age and weight.

unsupervised learning , in which the training data consists of a set of input vectors x without any corresponding target values. The goal in such problems may be to discover groups of similar examples within the data, where it is called clustering , or to determine the distribution of data within the input space, known as density estimation , or to project the data from a high-dimensional space down to two or three dimensions for the purpose of visualization ( Click here to go to the Scikit-Learn unsupervised learning page).

Loading an example dataset

scikit-learn comes with a few standard datasets, for instance the iris and digits datasets for classification and the diabetes dataset for regression.

In the following, we start a Python interpreter from our shell and then load the iris and digits datasets. Our notational convention is that $ denotes the shell prompt while >>> denotes the Python interpreter prompt:

A dataset is a dictionary-like object that holds all the data and some metadata about the data. This data is stored in the .data member, which is an (n_samples, n_features) array. In the case of supervised problems, one or more response variables are stored in the .target member. More details on the different datasets can be found in the dedicated section.

For instance, in the case of the digits dataset, digits.data gives access to the features that can be used to classify the digits samples:

and digits.target gives the ground truth for the digit dataset, that is the number corresponding to each digit image that we are trying to learn:
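Loading the two datasets and inspecting the members described above might look like the following. This is a minimal sketch written as a script rather than an interpreter session, and it assumes scikit-learn is installed; the variable names `iris` and `digits` follow the text.

```python
from sklearn import datasets

# Load the bundled example datasets.
iris = datasets.load_iris()
digits = datasets.load_digits()

# .data holds the features: one row per sample, one column per feature.
print(digits.data.shape)   # (1797, 64)

# .target holds the ground truth: the digit each image represents.
print(digits.target[:10])
```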

Learning and predicting

In the case of the digits dataset, the task is to predict, given an image, which digit it represents. We are given samples of each of the 10 possible classes (the digits zero through nine) on which we fit an estimator to be able to predict the classes to which unseen samples belong.

In scikit-learn, an estimator for classification is a Python object that implements the methods fit(X, y) and predict(T).

An example of an estimator is the class sklearn.svm.SVC, which implements support vector classification. The estimator’s constructor takes as arguments the model’s parameters.

For now, we will consider the estimator as a black box:

The clf (for classifier) estimator instance is first fitted to the data; that is, it must learn from the training data. This is done by passing our training set to the fit method. For the training set, we’ll use all the images from our dataset, except for the last image, which we’ll reserve for our predicting. We select the training set with the [:-1] Python syntax, which produces a new array that contains all but the last item from digits.data:

Now you can predict new values. In this case, you’ll predict using the last image from digits.data. By predicting, you’ll determine which digit class the classifier assigns to the last image.
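The fit-then-predict sequence described above can be sketched as follows. The hyper-parameter values `gamma=0.001` and `C=100.0` are assumptions chosen for illustration (the text does not state them), and scikit-learn is assumed to be installed.

```python
from sklearn import datasets, svm

digits = datasets.load_digits()

# Construct the estimator; the parameter values here are illustrative assumptions.
clf = svm.SVC(gamma=0.001, C=100.0)

# Fit on every image except the last one.
clf.fit(digits.data[:-1], digits.target[:-1])

# Predict the class of the held-out last image (kept 2-D with a slice).
prediction = clf.predict(digits.data[-1:])
print(prediction)
```

Slicing with `[-1:]` rather than indexing with `[-1]` keeps the input two-dimensional, which is what `predict` expects.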

The corresponding image is:

As you can see, it is a challenging task: after all, the images are of poor resolution. Do you agree with the classifier?

A complete example of this classification problem is available as an example that you can run and study: Recognizing hand-written digits.

Conventions

scikit-learn estimators follow certain rules to make their behavior more predictable. These are described in more detail in the Glossary of Common Terms and API Elements.

Type casting

Where possible, input of type float32 will maintain its data type. Otherwise input will be cast to float64:

In this example, X is float32, and is unchanged by fit_transform(X).
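A way to check the casting behaviour yourself is sketched below. The choice of `RBFSampler` as the transformer is an assumption (the text does not name one), and whether the output dtype stays float32 can depend on the transformer and on the installed scikit-learn version, so the sketch prints the dtypes instead of asserting them.

```python
import numpy as np
from sklearn import kernel_approximation

rng = np.random.RandomState(0)
X = rng.rand(10, 2000).astype(np.float32)  # float32 input data

# RBFSampler is an illustrative choice of transformer, not taken from the text.
transformer = kernel_approximation.RBFSampler()
X_new = transformer.fit_transform(X)

print(X.dtype)      # float32
print(X_new.dtype)  # dtype chosen by the transformer
```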

Using float32-typed training (or testing) data is often more efficient than using the usual float64 dtype: it reduces memory usage and sometimes also reduces processing time by leveraging the vector instructions of the CPU. However, it can sometimes lead to numerical stability problems, causing the algorithm to be more sensitive to the scale of the values and to require adequate preprocessing.

Keep in mind however that not all scikit-learn estimators attempt to work in float32 mode. For instance, some transformers will always cast their input to float64 and return float64 transformed values as a result.

Regression targets are cast to float64 and classification targets are maintained:

Here, the first predict() returns an integer array, since iris.target (an integer array) was used in fit. The second predict() returns a string array, since iris.target_names was used for fitting.
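The two fits described above can be sketched like this, assuming scikit-learn is installed. The variable names `int_pred` and `str_pred` are hypothetical; the sketch checks only the kind of dtype returned, not particular predicted values.

```python
from sklearn import datasets
from sklearn.svm import SVC

iris = datasets.load_iris()
clf = SVC()

# Fit on integer targets: predictions come back as integers.
clf.fit(iris.data, iris.target)
int_pred = clf.predict(iris.data[:3])

# Fit on string targets: predictions come back as strings.
clf.fit(iris.data, iris.target_names[iris.target])
str_pred = clf.predict(iris.data[:3])

print(int_pred.dtype.kind, str_pred.dtype.kind)  # i U
```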

Refitting and updating parameters

Hyper-parameters of an estimator can be updated after it has been constructed via the set_params() method. Calling fit() more than once will overwrite what was learned by any previous fit():

Here, the default kernel rbf is first changed to linear via SVC.set_params() after the estimator has been constructed, and changed back to rbf to refit the estimator and to make a second prediction.
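The kernel switch described above can be sketched as follows. Using the iris dataset here is an assumption made to keep the example self-contained; the text does not say which data was used.

```python
from sklearn.datasets import load_iris
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)  # dataset choice is an illustrative assumption

clf = SVC()  # default kernel is "rbf"

# Switch to a linear kernel, fit, and predict.
clf.set_params(kernel="linear").fit(X, y)
first = clf.predict(X[:5])

# Switch back to rbf; this second fit overwrites what the first one learned.
clf.set_params(kernel="rbf").fit(X, y)
second = clf.predict(X[:5])

print(first, second)
```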

Multiclass vs. multilabel fitting

When using multiclass classifiers, the learning and prediction task that is performed is dependent on the format of the target data fit upon:

In the above case, the classifier is fit on a 1d array of multiclass labels and the predict() method therefore provides corresponding multiclass predictions. It is also possible to fit upon a 2d array of binary label indicators:

Here, the classifier is fit() on a 2d binary label representation of y, using the LabelBinarizer. In this case predict() returns a 2d array representing the corresponding multilabel predictions.
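The difference between fitting on a 1d label array and on a 2d indicator array can be sketched as below. The five toy samples in `X` and `y` are assumptions for illustration, and the wrapped estimator (an SVC inside OneVsRestClassifier) is one reasonable choice rather than something the text specifies.

```python
from sklearn.multiclass import OneVsRestClassifier
from sklearn.preprocessing import LabelBinarizer
from sklearn.svm import SVC

# Toy data, assumed for illustration.
X = [[1, 2], [2, 4], [4, 5], [3, 2], [3, 1]]
y = [0, 0, 1, 1, 2]

classif = OneVsRestClassifier(estimator=SVC(random_state=0))

# 1d multiclass labels in, 1d multiclass predictions out.
pred_1d = classif.fit(X, y).predict(X)

# 2d binary indicator labels in, 2d indicator predictions out.
y_bin = LabelBinarizer().fit_transform(y)
pred_2d = classif.fit(X, y_bin).predict(X)

print(pred_1d.shape, pred_2d.shape)  # (5,) (5, 3)
```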

Note that the fourth and fifth instances returned all zeroes, indicating that they matched none of the three labels fit upon. With multilabel outputs, it is similarly possible for an instance to be assigned multiple labels:

In this case, the classifier is fit upon instances each assigned multiple labels. The MultiLabelBinarizer is used to binarize the 2d array of multilabels to fit upon. As a result, predict() returns a 2d array with multiple predicted labels for each instance.
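Fitting on instances that each carry several labels can be sketched as follows. As before, the toy data and the choice of an SVC wrapped in OneVsRestClassifier are assumptions for illustration.

```python
from sklearn.multiclass import OneVsRestClassifier
from sklearn.preprocessing import MultiLabelBinarizer
from sklearn.svm import SVC

# Toy data, assumed for illustration: each instance has a list of labels.
X = [[1, 2], [2, 4], [4, 5], [3, 2], [3, 1]]
y = [[0, 1], [0, 2], [1, 3], [0, 2, 3], [2, 4]]

# Binarize the label lists into a 2d indicator array, one column per label.
y_multi = MultiLabelBinarizer().fit_transform(y)

classif = OneVsRestClassifier(estimator=SVC(random_state=0))
pred_multi = classif.fit(X, y_multi).predict(X)

print(y_multi.shape, pred_multi.shape)  # (5, 5) (5, 5)
```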

A Machine Learning Tutorial With Examples: An Introduction to ML Theory and Its Applications

This Machine Learning tutorial introduces the basics of ML theory, laying down the common themes and concepts, making it easy to follow the logic and get comfortable with the topic.

By Nick McCrea

Nicholas is a professional software engineer with a passion for quality craftsmanship. He loves architecting and writing top-notch code.

Editor’s note: This article was updated on 09/12/22 by our editorial team. It has been modified to include recent sources and to align with our current editorial standards.

Machine learning (ML) is coming into its own, with a growing recognition that ML can play a key role in a wide range of critical applications, such as data mining, natural language processing, image recognition, and expert systems. ML provides potential solutions in all these domains and more, and likely will become a pillar of our future civilization.

The supply of expert ML designers has yet to catch up to this demand. A major reason for this is that ML is just plain tricky. This machine learning tutorial introduces the basic theory, laying out the common themes and concepts, and making it easy to follow the logic and get comfortable with machine learning basics.

Machine Learning Basics: What Is Machine Learning?

So what exactly is “machine learning” anyway? ML is a lot of things. The field is vast and is expanding rapidly, being continually partitioned and sub-partitioned into different sub-specialties and types of machine learning.

There are some basic common threads, however, and the overarching theme is best summed up by this oft-quoted statement made by Arthur Samuel way back in 1959: “[Machine Learning is the] field of study that gives computers the ability to learn without being explicitly programmed.”

In 1997, Tom Mitchell offered a “well-posed” definition that has proven more useful to engineering types: “A computer program is said to learn from experience E with respect to some task T and some performance measure P, if its performance on T, as measured by P, improves with experience E.”

So if you want your program to predict, for example, traffic patterns at a busy intersection (task T), you can run it through a machine learning algorithm with data about past traffic patterns (experience E) and, if it has successfully “learned,” it will then do better at predicting future traffic patterns (performance measure P).

The highly complex nature of many real-world problems, though, often means that inventing specialized algorithms that will solve them perfectly every time is impractical, if not impossible.

Real-world examples of machine learning problems include “Is this cancer?”, “What is the market value of this house?”, “Which of these people are good friends with each other?”, “Will this rocket engine explode on take off?”, “Will this person like this movie?”, “Who is this?”, “What did you say?”, and “How do you fly this thing?” All of these problems are excellent targets for an ML project; in fact ML has been applied to each of them with great success.

Among the different types of ML tasks, a crucial distinction is drawn between supervised and unsupervised learning:

- Supervised machine learning is when the program is “trained” on a predefined set of “training examples,” which then facilitate its ability to reach an accurate conclusion when given new data.

- Unsupervised machine learning is when the program is given a bunch of data and must find patterns and relationships therein.

We will focus primarily on supervised learning here, but the last part of the article includes a brief discussion of unsupervised learning with some links for those who are interested in pursuing the topic.

Supervised Machine Learning

In the majority of supervised learning applications, the ultimate goal is to develop a finely tuned predictor function h(x) (sometimes called the “hypothesis”). “Learning” consists of using sophisticated mathematical algorithms to optimize this function so that, given input data x about a certain domain (say, square footage of a house), it will accurately predict some interesting value h(x) (say, market price for said house).

In practice, x almost always represents multiple data points. So, for example, a housing price predictor might consider not only square footage (x1) but also number of bedrooms (x2), number of bathrooms (x3), number of floors (x4), year built (x5), ZIP code (x6), and so forth. Determining which inputs to use is an important part of ML design. However, for the sake of explanation, it is easiest to assume a single input value.

Let’s say our simple predictor has this form:
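A common single-input choice, assumed here because the text does not spell the form out, is a straight line with two coefficients. The names θ0 (intercept) and θ1 (slope) are conventional rather than taken from the text:

```latex
h(x) = \theta_0 + \theta_1 x
```

Under this assumption, training the predictor means finding values of θ0 and θ1 that make h(x) match the training data as closely as possible.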

Machine Learning Examples

We’re using simple problems for the sake of illustration, but the reason ML exists is because, in the real world, problems are much more complex. On this flat screen, we can present a picture of, at most, a three-dimensional dataset, but ML problems often deal with data with millions of dimensions and very complex predictor functions. ML solves problems that cannot be solved by numerical means alone.

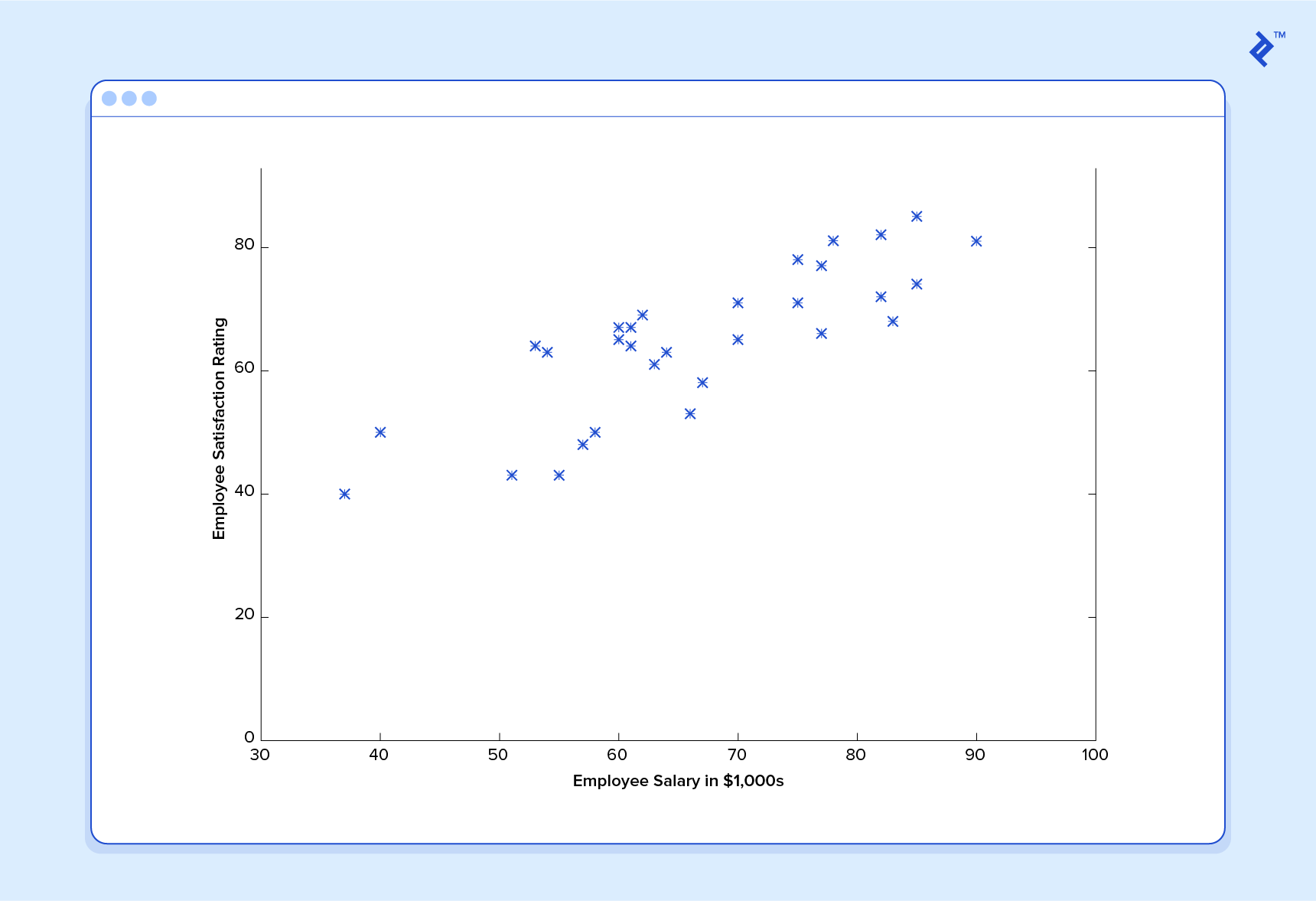

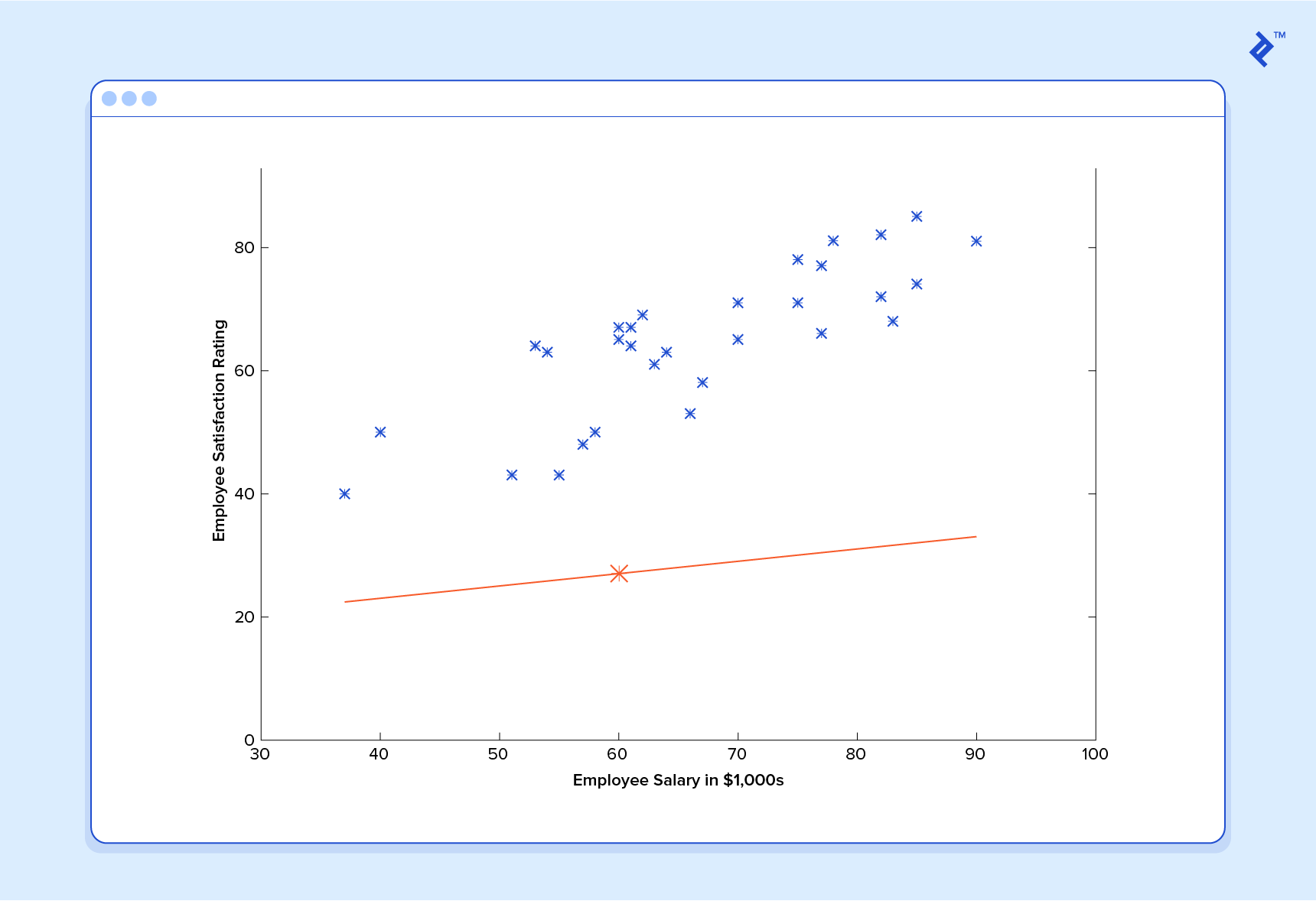

With that in mind, let’s look at another simple example. Say we have the following training data, wherein company employees have rated their satisfaction on a scale of 1 to 100:

First, notice that the data is a little noisy. That is, while we can see that there is a pattern to it (i.e., employee satisfaction tends to go up as salary goes up), it does not all fit neatly on a straight line. This will always be the case with real-world data (and we absolutely want to train our machine using real-world data). How can we train a machine to perfectly predict an employee’s level of satisfaction? The answer, of course, is that we can’t. The goal of ML is never to make “perfect” guesses because ML deals in domains where there is no such thing. The goal is to make guesses that are good enough to be useful.

It is somewhat reminiscent of the famous statement by George E. P. Box, the British mathematician and professor of statistics: “All models are wrong, but some are useful.”

Machine learning builds heavily on statistics. For example, when we train our machine to learn, we have to give it a statistically significant random sample as training data. If the training set is not random, we run the risk of the machine learning patterns that aren’t actually there. And if the training set is too small (see the law of large numbers), we won’t learn enough and may even reach inaccurate conclusions. For example, attempting to predict companywide satisfaction patterns based on data from upper management alone would likely be error-prone.

If we ask this predictor for the satisfaction of an employee making $60,000, it would predict a rating of 27:

It’s obvious that this is a terrible guess and that this machine doesn’t know very much.

And if we repeat this process, say 1,500 times, our predictor will end up looking like this:

Now we’re getting somewhere.

Machine Learning Regression: A Note on Complexity

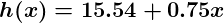

The above example is technically a simple problem of univariate linear regression, which in reality can be solved by deriving a simple normal equation and skipping this “tuning” process altogether. However, consider a predictor that looks like this:

This function takes input in four dimensions and has a variety of polynomial terms. Deriving a normal equation for this function is a significant challenge. Many modern machine learning problems take thousands or even millions of dimensions of data to build predictions using hundreds of coefficients. Predicting how an organism’s genome will be expressed or what the climate will be like in 50 years are examples of such complex problems.

Fortunately, the iterative approach taken by ML systems is much more resilient in the face of such complexity. Instead of using brute force, a machine learning system “feels” its way to the answer. For big problems, this works much better. While this doesn’t mean that ML can solve all arbitrarily complex problems—it can’t—it does make for an incredibly flexible and powerful tool.

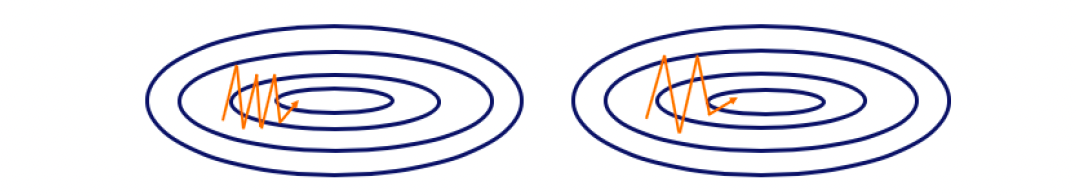

Gradient Descent: Minimizing “Wrongness”

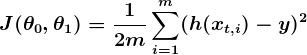

The choice of the cost function is another important piece of an ML program. In different contexts, being “wrong” can mean very different things. In our employee satisfaction example, the well-established standard is the linear least squares function:

With least squares, the penalty for a bad guess goes up quadratically with the difference between the guess and the correct answer, so it acts as a very “strict” measurement of wrongness. The cost function computes an average penalty across all the training examples.
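A least squares cost and the gradient descent loop that minimizes it can be sketched as follows. Several things here are assumptions for illustration: the linear predictor h(x) = θ0 + θ1·x, the conventional 1/(2m) scaling of the cost, the learning rate `alpha`, and the toy data. The 1,500 steps mirror the number of repetitions mentioned earlier in the article.

```python
def cost(theta0, theta1, xs, ys):
    """Average squared error, with the conventional 1/2 factor (an assumption)."""
    m = len(xs)
    return sum((theta0 + theta1 * x - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


def gradient_descent(xs, ys, alpha=0.05, steps=1500):
    """Repeatedly nudge theta0 and theta1 downhill on the cost surface."""
    theta0, theta1 = 0.0, 0.0
    m = len(xs)
    for _ in range(steps):
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]
        # Partial derivatives of the cost with respect to each coefficient.
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Update both coefficients simultaneously.
        theta0 -= alpha * grad0
        theta1 -= alpha * grad1
    return theta0, theta1


# Toy, slightly noisy data (made up for this sketch).
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]

theta0, theta1 = gradient_descent(xs, ys)
print(round(theta0, 2), round(theta1, 2))
print(cost(0.0, 0.0, xs, ys), cost(theta0, theta1, xs, ys))
```

Because each error is squared, a guess that is twice as far off contributes four times the penalty, which is the "strict" behavior the paragraph above describes.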

Consider the following plot of a cost function for some particular machine learning problem:

That covers the basic theory underlying the majority of supervised machine learning systems. But the basic concepts can be applied in a variety of ways, depending on the problem at hand.

Classification Problems in Machine Learning

Under supervised ML, two major subcategories are:

- Regression machine learning systems – Systems where the value being predicted falls somewhere on a continuous spectrum. These systems help us with questions of “How much?” or “How many?”

- Classification machine learning systems – Systems where we seek a yes-or-no prediction, such as “Is this tumor cancerous?”, “Does this cookie meet our quality standards?”, and so on.

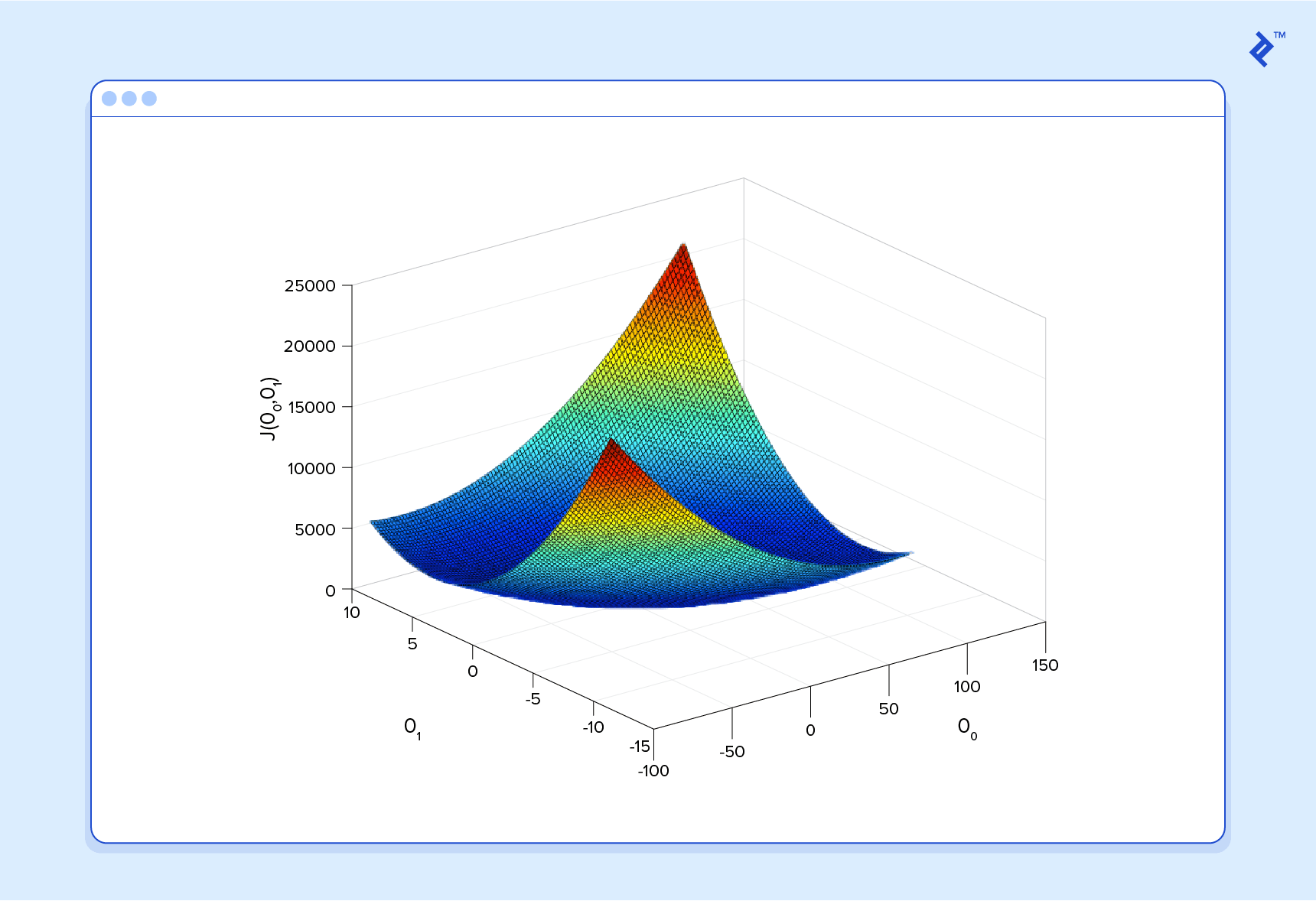

Our examples so far have focused on regression problems, so now let’s take a look at a classification example.

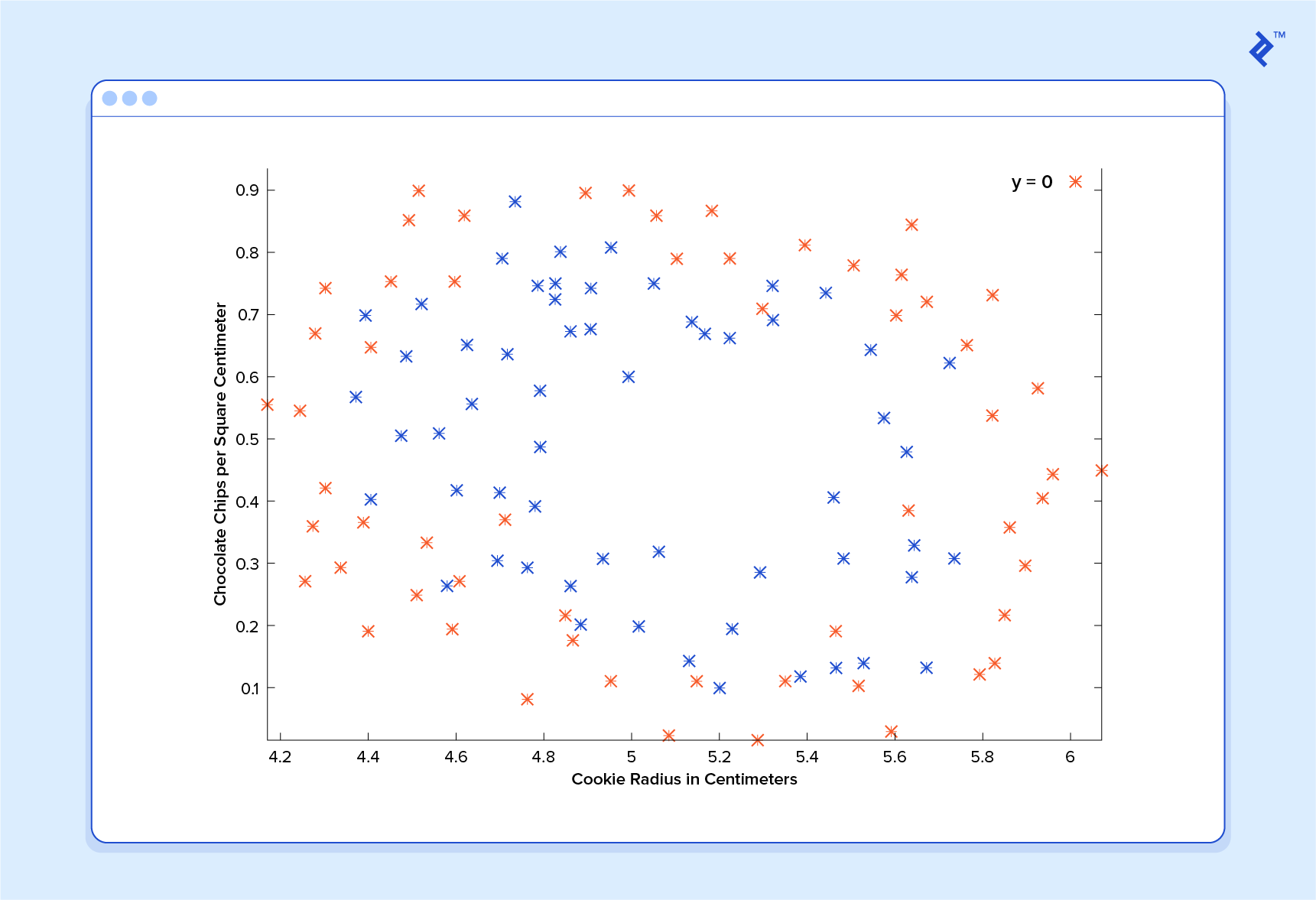

Here are the results of a cookie quality testing study, where the training examples have all been labeled as either “good cookie” (y = 1) in blue or “bad cookie” (y = 0) in red.

In classification, a regression predictor is not very useful. What we usually want is a predictor that makes a guess somewhere between 0 and 1. In a cookie quality classifier, a prediction of 1 would represent a very confident guess that the cookie is perfect and utterly mouthwatering. A prediction of 0 represents high confidence that the cookie is an embarrassment to the cookie industry. Values falling within this range represent less confidence, so we might design our system such that a prediction of 0.6 means “Man, that’s a tough call, but I’m gonna go with yes, you can sell that cookie,” while a value exactly in the middle, at 0.5, might represent complete uncertainty. This isn’t always how confidence is distributed in a classifier but it’s a very common design and works for the purposes of our illustration.

It turns out there’s a nice function that captures this behavior well. It’s called the sigmoid function, g(z), and it is defined as:

$$g(z) = \frac{1}{1 + e^{-z}}$$

z is some representation of our inputs and coefficients, such as:

$$z = \theta_0 + \theta_1 x$$

so that our predictor becomes:

$$h(x) = g(\theta_0 + \theta_1 x)$$

Notice that the sigmoid function transforms our output into the range between 0 and 1.
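
To make the squashing behavior concrete, here is a minimal Python sketch of a sigmoid-based predictor. The coefficient names `theta0` and `theta1` and the sample inputs are illustrative assumptions, not values from a trained model.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid: maps any real z into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(x: float, theta0: float, theta1: float) -> float:
    """Predictor h(x) = g(theta0 + theta1 * x); coefficient names are hypothetical."""
    return sigmoid(theta0 + theta1 * x)


# Large negative inputs approach 0, large positive inputs approach 1.
for z in (-6.0, -1.0, 0.0, 1.0, 6.0):
    print(f"g({z:+.1f}) = {sigmoid(z):.4f}")
```

An input of exactly zero maps to 0.5, the "complete uncertainty" point described earlier.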

The logic behind the design of the cost function is also different in classification. Again we ask “What does it mean for a guess to be wrong?” and this time a very good rule of thumb is that if the correct guess was 0 and we guessed 1, then we were completely wrong—and vice-versa. Since you can’t be more wrong than completely wrong, the penalty in this case is enormous. Alternatively, if the correct guess was 0 and we guessed 0, our cost function should not add any cost for each time this happens. If the guess was right, but we weren’t completely confident (e.g., y = 1 , but h(x) = 0.8 ), this should come with a small cost, and if our guess was wrong but we weren’t completely confident (e.g., y = 1 but h(x) = 0.3 ), this should come with some significant cost but not as much as if we were completely wrong.

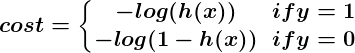

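This behavior is captured by the log function, such that:

$$\text{cost} = \begin{cases} -\log(h(x)) & \text{if } y = 1 \\ -\log(1 - h(x)) & \text{if } y = 0 \end{cases}$$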
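
A minimal Python sketch of this penalty for a single training example, using the standard negative-log cost. The function name `example_cost` is an illustrative assumption; the probabilities are the ones from the discussion above plus one near-certain wrong guess.

```python
import math


def example_cost(y: int, h: float) -> float:
    """Negative-log penalty for one example: label y in {0, 1}, prediction h in (0, 1)."""
    return -math.log(h) if y == 1 else -math.log(1.0 - h)


print(example_cost(1, 0.8))    # right but not fully confident: small cost
print(example_cost(1, 0.3))    # wrong but not fully confident: larger cost
print(example_cost(1, 0.001))  # almost completely wrong: very large cost
```

The cost grows without bound as a confident guess gets closer to being completely wrong, which matches the "enormous penalty" described above.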

A classification predictor can be visualized by drawing the boundary line; i.e., the barrier where the prediction changes from a “yes” (a prediction greater than 0.5) to a “no” (a prediction less than 0.5). With a well-designed system, our cookie data can generate a classification boundary that looks like this:

Now that’s a machine that knows a thing or two about cookies!

An Introduction to Neural Networks

No discussion of Machine Learning would be complete without at least mentioning neural networks . Not only do neural networks offer an extremely powerful tool to solve very tough problems, they also offer fascinating hints at the workings of our own brains and intriguing possibilities for one day creating truly intelligent machines.

Neural networks are well suited to machine learning models where the number of inputs is gigantic. The computational cost of handling such a problem is just too overwhelming for the types of systems we’ve discussed. As it turns out, however, neural networks can be effectively tuned using techniques that are strikingly similar to gradient descent in principle.

A thorough discussion of neural networks is beyond the scope of this tutorial, but I recommend checking out our previous post on the subject.

Unsupervised Machine Learning

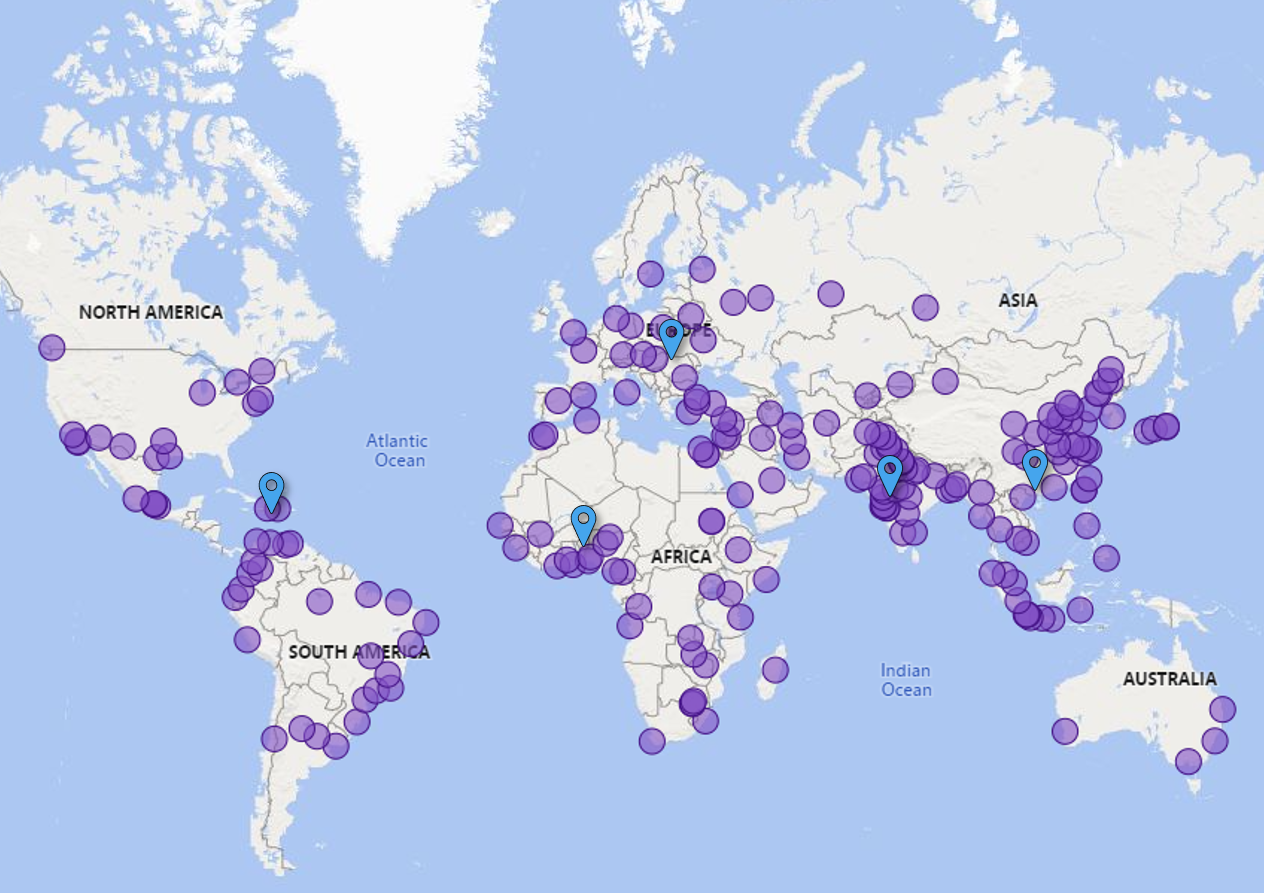

Unsupervised machine learning is typically tasked with finding relationships within data. There are no training examples used in this process. Instead, the system is given a set of data and tasked with finding patterns and correlations therein. A good example is identifying close-knit groups of friends in social network data.

The machine learning algorithms used to do this are very different from those used for supervised learning, and the topic merits its own post. However, for something to chew on in the meantime, take a look at clustering algorithms such as k-means, and also look into dimensionality reduction systems such as principal component analysis. You can also read our article on semi-supervised image classification.

Putting Theory Into Practice

We’ve covered much of the basic theory underlying the field of machine learning but, of course, we have only scratched the surface.

Keep in mind that to really apply the theories contained in this introduction to real-life machine learning examples, a much deeper understanding of these topics is necessary. There are many subtleties and pitfalls in ML and many ways to be led astray by what appears to be a perfectly well-tuned thinking machine. Almost every part of the basic theory can be played with and altered endlessly, and the results are often fascinating. Many grow into whole new fields of study that are better suited to particular problems.

Clearly, machine learning is an incredibly powerful tool. In the coming years, it promises to help solve some of our most pressing problems, as well as open up whole new worlds of opportunity for data science firms . The demand for machine learning engineers is only going to grow, offering incredible chances to be a part of something big. I hope you will consider getting in on the action!

Acknowledgement

This article draws heavily on material taught by Stanford professor Dr. Andrew Ng in his free and open “Supervised Machine Learning” course . It covers everything discussed in this article in great depth, and gives tons of practical advice to ML practitioners. I cannot recommend it highly enough for those interested in further exploring this fascinating field.


Understanding the basics

What is deep learning?

Deep learning is a machine learning method that relies on artificial neural networks, allowing computer systems to learn by example. In most cases, deep learning algorithms are based on information patterns found in biological nervous systems.

What is Machine Learning?

As described by Arthur Samuel, Machine Learning is the “field of study that gives computers the ability to learn without being explicitly programmed.”

Machine Learning vs Artificial Intelligence: What’s the difference?

Artificial Intelligence (AI) is a broad term used to describe systems capable of making certain decisions on their own. Machine Learning (ML) is a specific subject within the broader AI arena, describing the ability for a machine to improve its ability by practicing a task or being exposed to large data sets.

How to learn Machine Learning?

Machine Learning requires a great deal of dedication and practice to learn, due to the many subtle complexities involved in ensuring your machine learns the right thing and not the wrong thing. An excellent online course for Machine Learning is Andrew Ng’s Coursera course.

What is overfitting in Machine Learning?

Overfitting is the result of focussing a Machine Learning algorithm too closely on the training data, so that it is not generalized enough to correctly process new data. It is an example of a machine “learning the wrong thing” and becoming less capable of correctly interpreting new data.

What is a Machine Learning model?

A Machine Learning model is a set of assumptions about the underlying nature of the data to be trained on. The model is used as the basis for determining what a Machine Learning algorithm should learn. A good model, which makes accurate assumptions about the data, is necessary for the machine to give good results.


Nick McCrea

Denver, CO, United States

Member since July 8, 2014


Machine Learning Fundamentals Handbook – Key Concepts, Algorithms, and Python Code Examples

If you're planning to become a Machine Learning Engineer, Data Scientist, or you want to refresh your memory before your interviews, this handbook is for you.

In it, we'll cover the key Machine Learning algorithms you'll need to know as a Data Scientist, Machine Learning Engineer, Machine Learning Researcher, and AI Engineer.

Throughout this handbook, I'll include examples for each Machine Learning algorithm with its Python code to help you understand what you're learning.

Whether you're a beginner or have some experience with Machine Learning or AI, this guide is designed to help you understand the fundamentals of Machine Learning algorithms at a high level.

As an experienced machine learning practitioner, I'm excited to share my knowledge and insights with you.

What You'll Learn

- Chapter 1: What is Machine Learning?

- Chapter 2: Most popular Machine Learning algorithms

- 2.1 Linear Regression and Ordinary Least Squares (OLS)

- 2.2 Logistic Regression and MLE

- 2.3 Linear Discriminant Analysis (LDA)

- 2.4 Logistic Regression vs LDA

- 2.5 Naïve Bayes

- 2.6 Naïve Bayes vs Logistic Regression

- 2.7 Decision Trees

- 2.8 Bagging

- 2.9 Random Forest

- 2.10 Boosting or Ensemble Techniques (AdaBoost, GBM, XGBoost)

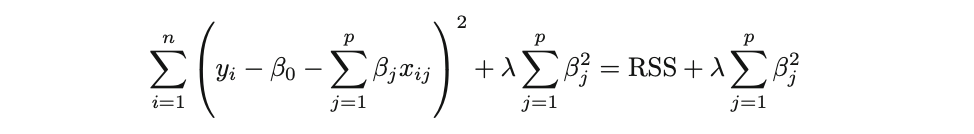

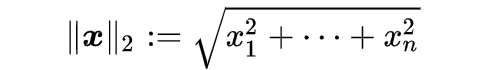

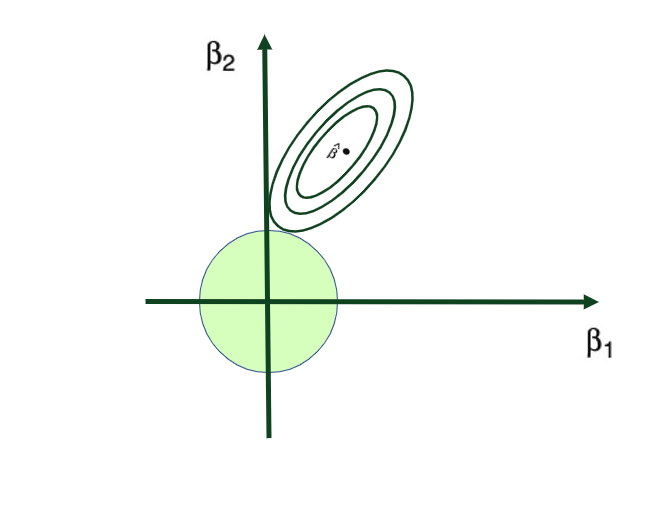

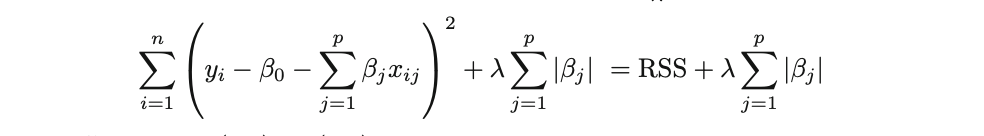

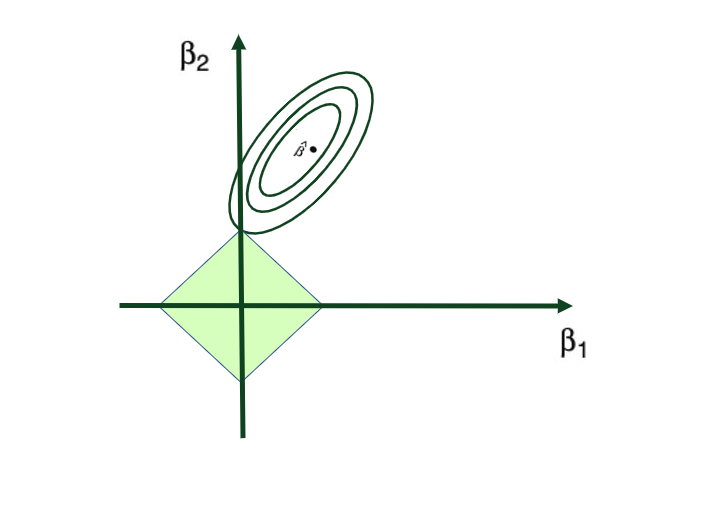

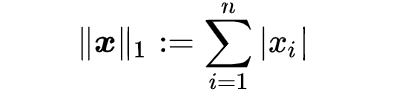

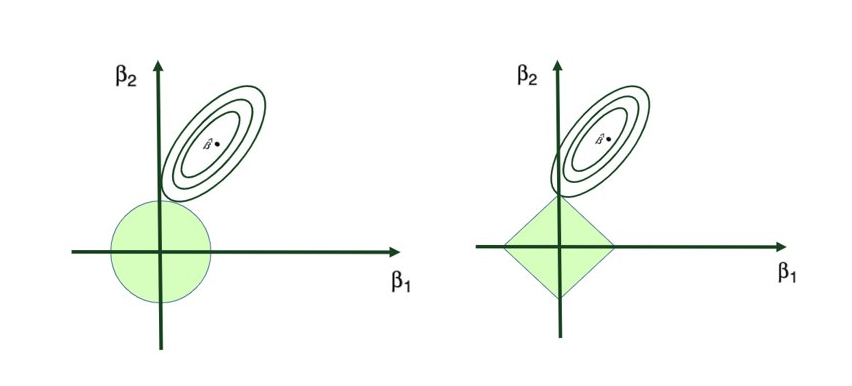

- Chapter 3: Feature Selection

- 3.1 Subset Selection

- 3.2 Regularization (Ridge and Lasso)

- 3.3 Dimensionality Reduction (PCA)

- Chapter 4: Resampling Technique

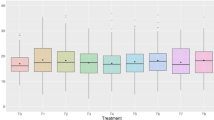

- 4.1 Cross Validation: (Validation Set, LOOCV, K-Fold CV)

- 4.2 Optimal k in K-Fold CV

- 4.5 Bootstrapping

- Chapter 5: Optimization Techniques

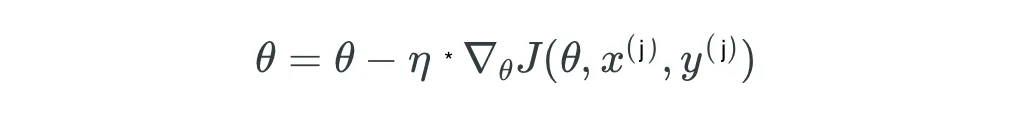

- 5.1 Optimization Techniques: Batch Gradient Descent (GD)

- 5.2 Optimization Techniques: Stochastic Gradient Descent (SGD)

- 5.3 Optimization Techniques: SGD with Momentum

- 5.4 Optimization Techniques: Adam Optimiser

- 6.1 Key Takeaways & What Comes Next

- 6.2 About the Author — That’s Me!

- 6.3 How Can You Dive Deeper?

- 6.4 Connect with Me

Prerequisites

To make the most out of this handbook, it'll be helpful if you're familiar with some core ML concepts:

Basic Terminology:

- Training Data & Test Data: Datasets used to train and evaluate models.

- Features: Variables used to make predictions, also called independent variables.

- Target Variable: The predicted outcome, also called the dependent variable or response variable.

Overfitting Problem in Machine Learning

Understanding Overfitting, how it's related to Bias-Variance Tradeoff, and how you can fix it is very important. We will look at regularization techniques in detail in this guide, too. For a detailed understanding, refer to:

Foundational Readings for Beginners

If you have no prior statistical knowledge and wish to learn or refresh your understanding of essential statistical concepts, I'd recommend this article: Fundamental Statistical Concepts for Data Science

For a comprehensive guide on kickstarting a career in Data Science and AI, and insights on securing a Data Science job, you can delve into my previous handbook: Launching Your Data Science & AI Career

Tools/Languages to use in Machine Learning

As a Machine Learning Researcher or Machine Learning Engineer, there are many technical tools and programming languages you might use in your day-to-day job. But for today and for this handbook, we'll use the following programming language and tools:

- Python Basics: Variables, data types, structures, and control mechanisms.

- Essential Libraries: numpy , pandas , matplotlib , scikit-learn , xgboost

- Environment: Familiarity with Jupyter Notebooks or PyCharm as IDE.

Embarking on this Machine Learning journey with a solid foundation ensures a more profound and enlightening experience.

Now, shall we?

Chapter 1: What is Machine Learning?

Machine Learning (ML), a branch of artificial intelligence (AI), refers to a computer's ability to autonomously learn from data patterns and make decisions without explicit programming. Machines use statistical algorithms to enhance system decision-making and task performance.

At its core, ML is a method where computers improve at tasks by learning from data. Think of it like teaching computers to make decisions by providing them examples, much like showing pictures to teach a child to recognize animals.

For instance, by analyzing buying patterns, ML algorithms can help online shopping platforms recommend products (like how Amazon suggests items you might like).

Or consider email platforms that learn to flag spam through recognizing patterns in unwanted mails. Using ML techniques, computers quietly enhance our daily digital experiences, making recommendations more accurate and safeguarding our inboxes.

On this journey, you'll unravel the fascinating world of ML, one where technology learns and grows from the information it encounters. But before doing so, let's look into some basics you must know to understand any sort of Machine Learning model.

Types of Learning in Machine Learning:

There are three main ways models can learn:

- Supervised Learning: Models predict from labeled data (you have both features and labels, X and Y).

- Unsupervised Learning: Models identify patterns autonomously, where you don't have labeled data (you only have features and no response variable, only X).

- Reinforcement Learning: Algorithms learn via action feedback.

Model Evaluation Metrics:

In Machine Learning, whenever you train a model you must also evaluate it, and the right evaluation metrics depend on the nature of your problem.

Here are the most common ML model evaluation metrics per model type:

1. Regression Metrics:

- MAE, MSE, RMSE: Measure differences between predicted and actual values.

- R-Squared: Indicates variance explained by the model.

2. Classification Metrics:

- Accuracy: Percentage of correct predictions.

- Precision, Recall, F1-Score: Assess prediction quality.

- ROC Curve, AUC: Gauge model's discriminatory power.

- Confusion Matrix: Compares actual vs. predicted classifications.

3. Clustering Metrics:

- Silhouette Score: Gauges object similarity within clusters.

- Davies-Bouldin Index: Assesses cluster separation.
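
As a rough illustration of the regression and classification metrics listed above, here is a small pure-Python sketch. The function names and the toy labels are assumptions made for this example; in practice you would typically use a library implementation, and the clustering metrics are not covered here.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MAE, MSE, RMSE and R-squared for two equal-length sequences."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mean_true = sum(y_true) / n
    ss_total = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - (mse * n) / ss_total  # 1 - SS_residual / SS_total
    return {"mae": mae, "mse": mse, "rmse": math.sqrt(mse), "r2": r2}


def classification_metrics(y_true, y_pred):
    """Return confusion-matrix counts, accuracy, precision, recall and F1 (positive class = 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {"tp": tp, "tn": tn, "fp": fp, "fn": fn,
            "accuracy": (tp + tn) / len(pairs),
            "precision": precision, "recall": recall, "f1": f1}


# Toy values, made up for illustration only.
reg = regression_metrics([3.0, 5.0, 7.0, 9.0], [2.0, 5.0, 8.0, 9.0])
clf = classification_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0])
print(reg)
print(clf)
```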

Chapter 2: Most Popular Machine Learning Algorithms

In this chapter, we'll simplify the complexity of essential Machine Learning (ML) algorithms. This will be a valuable resource for roles ranging from Data Scientists and Machine Learning Engineers to AI Researchers.

We'll start with basics in 2.1 with Linear Regression and Ordinary Least Squares (OLS), then go into 2.2 which explores Logistic Regression and Maximum Likelihood Estimation (MLE).

Section 2.3 explores Linear Discriminant Analysis (LDA), which is contrasted with Logistic Regression in 2.4. We get into Naïve Bayes in 2.5, offering a comparative analysis with Logistic Regression in 2.6.

In 2.7, we go through Decision Trees, subsequently exploring ensemble methods: Bagging in 2.8, and Random Forest in 2.9. Various and popular Boosting techniques unfold in the following segments, discussing AdaBoost in 2.10, Gradient Boosting Model (GBM) in 2.11, and concluding with Extreme Gradient Boosting (XGBoost) in 2.12.

All the algorithms we'll discuss here are fundamental and popular in the field, and every Data Scientist, Machine Learning Engineer, and AI researcher must know them at least at this high level.

Note that we will not delve into unsupervised learning techniques here, or enter into granular details of each algorithm.

2.1 Linear Regression

When the relationship between two variables is linear, you can use the Linear Regression statistical method. It can help you model the impact of a unit change in one variable, the independent variable, on the values of another variable, the dependent variable.

Dependent variables are often referred to as response variables or explained variables, whereas independent variables are often referred to as regressors or explanatory variables.

When the Linear Regression model is based on a single independent variable, then the model is called Simple Linear Regression . But when the model is based on multiple independent variables, it’s referred to as Multiple Linear Regression .

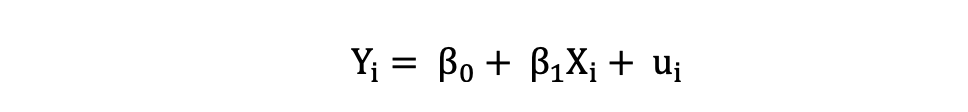

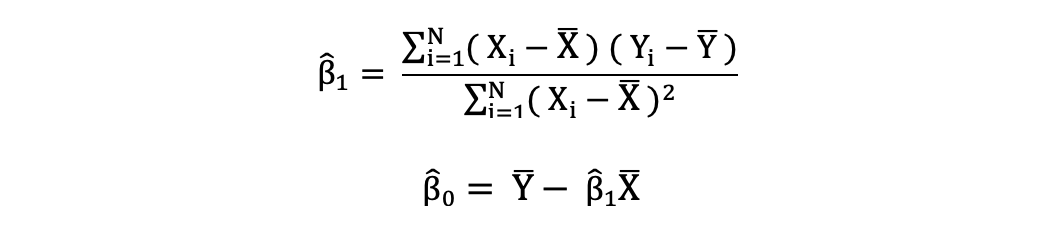

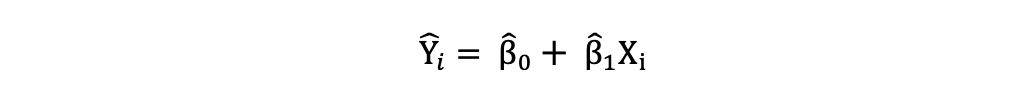

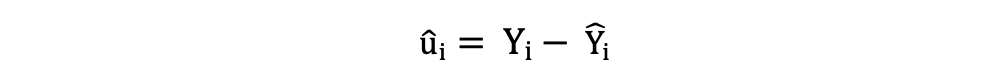

Simple Linear Regression can be described by the following expression:

$$Y = \beta_0 + \beta_1 X + u$$

where Y is the dependent variable, X is the independent variable which is part of the data, β0 is the intercept which is unknown and constant, and β1 is the slope coefficient or a parameter corresponding to the variable X which is unknown and constant as well. Finally, u is the error term that the model makes when estimating the Y values.

The main idea behind linear regression is to find the best-fitting straight line, the regression line, through a set of paired ( X, Y ) data. One example of the Linear Regression application is modeling the impact of flipper length on penguins’ body mass , which is visualized below:

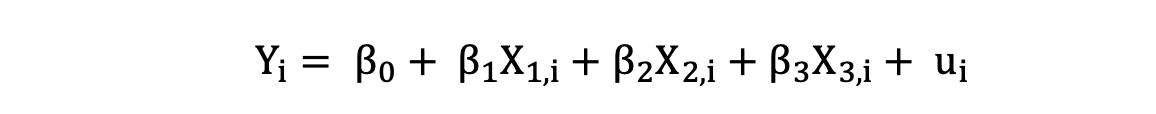

Multiple Linear Regression with three independent variables can be described by the following expression:

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3 + u$$

where Y is the dependent variable, X1, X2, X3 are the independent variables which are part of the data, β0 is the intercept which is unknown and constant, and β1, β2, β3 are the slope coefficients, or parameters, corresponding to the variables X1, X2, X3, which are unknown and constant as well. Finally, u is the error term that the model makes when estimating the Y values.

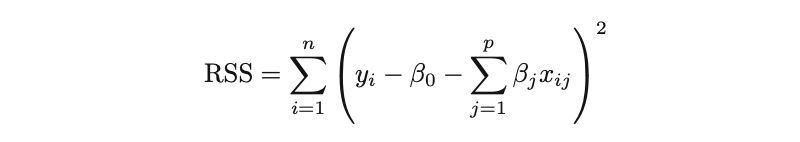

2.1.1 Ordinary Least Squares

The ordinary least squares (OLS) is a method for estimating the unknown parameters such as β0 and β1 in a linear regression model. The model is based on the principle of least squares that minimizes the sum of squares of the differences between the observed dependent variable and its values predicted by the linear function of the independent variable, often referred to as fitted values .

This difference between the real and predicted values of the dependent variable Y is referred to as the residual. What OLS does is minimize the sum of squared residuals. This optimization problem results in the following OLS estimates for the unknown parameters β0 and β1, which are also known as coefficient estimates:

$$\hat{\beta}_1 = \frac{\sum_{i=1}^{N} (X_i - \bar{X})(Y_i - \bar{Y})}{\sum_{i=1}^{N} (X_i - \bar{X})^2}, \qquad \hat{\beta}_0 = \bar{Y} - \hat{\beta}_1 \bar{X}$$

Once these parameters of the Simple Linear Regression model are estimated, the fitted values of the response variable can be computed as follows:

$$\hat{Y}_i = \hat{\beta}_0 + \hat{\beta}_1 X_i$$

Standard Error

The residuals or the estimated error terms can be determined as follows:

$$\hat{u}_i = Y_i - \hat{Y}_i$$

It is important to keep in mind the difference between the error terms and residuals. Error terms are never observed, while the residuals are calculated from the data. The OLS estimates the error terms for each observation but not the actual error term. So, the true error variance is still unknown.

Also, these estimates are subject to sampling uncertainty. What this means is that we will never be able to determine the exact, true value of these parameters from sample data in an empirical application. But we can estimate the error variance by calculating the sample residual variance:

$$\hat{\sigma}^2 = \frac{\sum_{i=1}^{N} \hat{u}_i^2}{N - 2}$$
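
The closed-form OLS estimates for Simple Linear Regression (slope as the ratio of the sample covariance of X and Y to the sample variance of X, intercept from the two means) can be sketched with NumPy. The data values below are made up for illustration, and the variable names are assumptions of this sketch.

```python
import numpy as np

# Illustrative data (made up): one predictor X and a response Y.
X = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
Y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])

x_bar, y_bar = X.mean(), Y.mean()

# Coefficient estimates that minimize the sum of squared residuals.
beta1_hat = np.sum((X - x_bar) * (Y - y_bar)) / np.sum((X - x_bar) ** 2)
beta0_hat = y_bar - beta1_hat * x_bar

# Fitted values, residuals, and the sample residual variance (N - 2 degrees of freedom).
fitted = beta0_hat + beta1_hat * X
residuals = Y - fitted
sigma2_hat = np.sum(residuals ** 2) / (len(X) - 2)

print(f"intercept: {beta0_hat:.4f}, slope: {beta1_hat:.4f}")
print(f"sample residual variance: {sigma2_hat:.4f}")
```

With an intercept in the model, the residuals sum to (numerically) zero, which is a quick sanity check on the fit.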

2.1.2 OLS Assumptions

The OLS estimation method makes the following assumptions which need to be satisfied to get reliable prediction results:

- Assumption 1 (A1): the Linearity assumption states that the model is linear in parameters.

- A2: the Random Sample assumption states that all observations in the sample are randomly selected.

- A3: the Exogeneity assumption states that independent variables are uncorrelated with the error terms.

- A4: the Homoskedasticity assumption states that the variance of all error terms is constant.

- A5: the No Perfect Multi-Collinearity assumption states that none of the independent variables is constant and there are no exact linear relationships between the independent variables.

Note that the above description of Linear Regression is from my article named Complete Guide to Linear Regression.

For a detailed article on Linear Regression, check out this post:

2.1.3 Linear Regression in Python

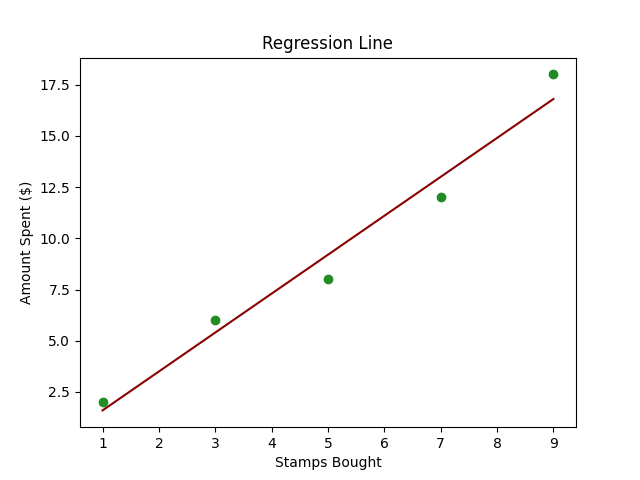

Imagine you have a friend, Alex, who collects stamps. Every month, Alex buys a certain number of stamps, and you notice that the amount Alex spends seems to depend on the number of stamps bought.

Now, you want to create a little tool that can predict how much Alex will spend next month based on the number of stamps bought. This is where Linear Regression comes into play.

In technical terms, we're trying to predict the dependent variable (amount spent) based on the independent variable (number of stamps bought).

Below is some simple Python code using scikit-learn to perform Linear Regression on a created dataset.

- Sample Data : stamps_bought represents the number of stamps Alex bought each month and amount_spent represents the corresponding money spent.

- Creating and Training Model : Using LinearRegression() from scikit-learn to create and train our model using .fit() .

- Predictions : Use the trained model to predict the amount Alex will spend for a given number of stamps. In the code, we predict the amount for 10 stamps.

- Plotting : We plot the original data points (in blue) and the predicted line (in red) to visually understand our model’s prediction capability.

- Displaying Prediction : Finally, we print out the predicted spending for a specific number of stamps (10 in this case).
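
A minimal sketch of the steps listed above, assuming made-up values for `stamps_bought` and `amount_spent` (the numbers are for demonstration only). The plotting step is left out to keep the sketch short.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Sample data (illustrative values): stamps bought per month and money spent.
stamps_bought = np.array([1, 3, 5, 7, 9]).reshape(-1, 1)  # 2D: one column per feature
amount_spent = np.array([2, 6, 8, 12, 18])

# Creating and training the model.
model = LinearRegression()
model.fit(stamps_bought, amount_spent)

# Prediction for a month in which Alex buys 10 stamps.
next_month_stamps = 10
predicted_spend = model.predict(np.array([[next_month_stamps]]))[0]

# Displaying the fitted line and the prediction.
print(f"Slope: {model.coef_[0]:.2f}, intercept: {model.intercept_:.2f}")
print(f"Predicted spend for {next_month_stamps} stamps: {predicted_spend:.2f}")
```

Note that scikit-learn expects the features as a 2D array, which is why the single feature is reshaped into one column.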

2.2 Logistic Regression

Another very popular Machine Learning technique is Logistic Regression which, though named regression, is actually a supervised classification technique .

Logistic regression is a Machine Learning method that models conditional probability of an event occurring or observation belonging to a certain class, based on a given dataset of independent variables.

When the relationship between two variables is linear and the dependent variable is a categorical variable, you may want to predict a variable in the form of a probability (number between 0 and 1). In these cases, Logistic Regression comes in handy.

This is because during the prediction process in Logistic Regression, the classifier predicts the probability (a value between 0 and 1) of each observation belonging to a certain class, usually one of the two classes of the dependent variable.

For instance, if you want to predict the probability or likelihood that a candidate will be elected or not during an election given the candidate's popularity score, past successes, and other descriptive variables about that candidate, you can use Logistic Regression to model this probability.

So, rather than predicting the response variable, Logistic Regression models the probability that Y belongs to a particular category.

It's similar to Linear Regression, with the difference being that instead of Y it predicts the log odds. In statistical terminology, we model the conditional distribution of the response Y, given the predictor(s) X. So Logistic Regression helps to predict the probability of Y belonging to a certain class (0 or 1) given the features, P(Y|X=x).

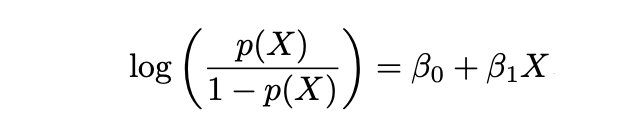

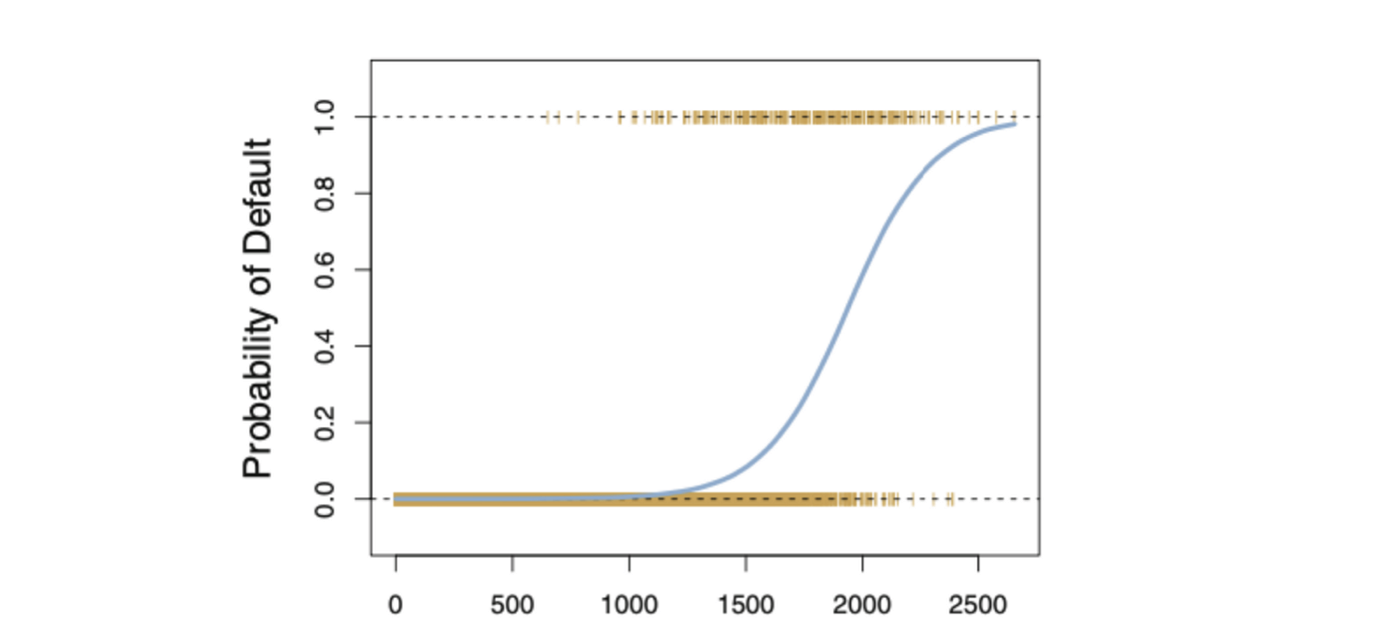

The name Logistic in Logistic Regression comes from the function this approach is based upon, which is the Logistic Function. The Logistic Function makes sure that even for very large and very small input values, the corresponding probability stays within the [0,1] bounds:

$$P(X) = \frac{e^{\beta_0 + \beta_1 X}}{1 + e^{\beta_0 + \beta_1 X}}$$

In the equation above, P(X) stands for the probability of Y belonging to a certain class (0 or 1) given the features, P(Y|X=x). X stands for the independent variable, β0 is the intercept which is unknown and constant, and β1 is the slope coefficient, or the parameter corresponding to the variable X, which is unknown and constant as well, similar to Linear Regression. e stands for the exp() function.

Odds and Log Odds

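Logistic Regression and its estimation technique, MLE, are based on the terms Odds and Log Odds. Odds is defined as follows:

$$\frac{P(X)}{1 - P(X)} = e^{\beta_0 + \beta_1 X}$$

and Log Odds is defined as follows:

$$\log\left(\frac{P(X)}{1 - P(X)}\right) = \beta_0 + \beta_1 X$$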
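
To get a feel for how probabilities, odds, and log odds relate, here is a small Python sketch. The helper names are assumptions made for this example.

```python
import math


def odds(p: float) -> float:
    """Odds of an event with probability p (0 < p < 1)."""
    return p / (1.0 - p)


def log_odds(p: float) -> float:
    """Natural log of the odds, also called the logit."""
    return math.log(odds(p))


def prob_from_log_odds(z: float) -> float:
    """Inverse transformation: the logistic function applied to a log-odds value."""
    return 1.0 / (1.0 + math.exp(-z))


for p in (0.1, 0.5, 0.8):
    print(f"p = {p:.1f}  odds = {odds(p):.3f}  log odds = {log_odds(p):+.3f}")
```

A probability of 0.5 corresponds to odds of 1 and log odds of 0, so the sign of the log odds tells you which class is more likely.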

2.2.1 Maximum Likelihood Estimation (MLE)

While for Linear Regression, we use OLS (Ordinary Least Squares) or LS (Least Squares) as an estimation technique, for Logistic Regression we should use another estimation technique.

We can’t use LS in Logistic Regression to find the best fitting line (to perform estimation) because the errors can then become very large or very small (even negative) while in case of Logistic Regression we aim for a predicted value in [0,1].

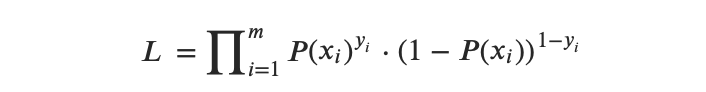

So for Logistic Regression we use the MLE technique, where the likelihood function calculates the probability of observing the outcome given the input data and the model. This function is then optimised to find the set of parameters that results in the largest overall likelihood (equivalently, the largest sum of log-likelihoods) over the training dataset.

The logistic function will always produce an S-shaped curve like above, regardless of the value of independent variable X resulting in sensible estimation most of the time.

2.2.2 Logistic Regression Likelihood Function(s)

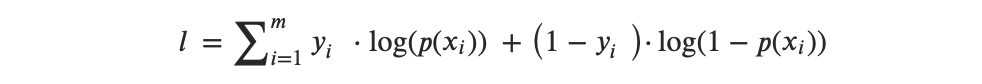

The Likelihood function can be expressed as follows:

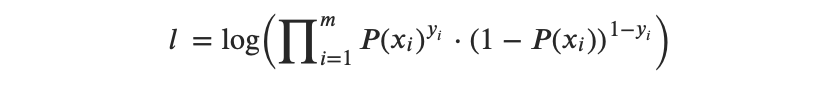

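So the Log Likelihood function can be expressed as follows:

$$\ell(\beta_0, \beta_1) = \log \prod_{i=1}^{N} P(x_i)^{y_i}\,\bigl(1 - P(x_i)\bigr)^{1 - y_i}$$

or, after transformation from multipliers to summation, we get:

$$\ell(\beta_0, \beta_1) = \sum_{i=1}^{N} \Bigl[ y_i \log P(x_i) + (1 - y_i) \log\bigl(1 - P(x_i)\bigr) \Bigr]$$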

Then the idea behind the MLE is to find a set of estimates that would maximize this likelihood function.

- Step 1: Project the data points into a candidate line that produces a sample log (odds) value.

- Step 2: Transform sample log (odds) to sample probabilities by using the following formula: $p = \frac{e^{\log(\text{odds})}}{1 + e^{\log(\text{odds})}}$

- Step 3: Obtain the overall likelihood or overall log likelihood.

- Step 4: Rotate the log (odds) line again and again, until you find the optimal log (odds) maximizing the overall likelihood.
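
The four steps above can be sketched as a simple loop. This is a minimal illustration that uses plain gradient ascent on the log-likelihood as a stand-in for "rotating the line again and again"; library implementations typically use more sophisticated optimizers. The data, learning rate, and iteration count are assumptions chosen for the example.

```python
import numpy as np

# Illustrative, non-separable data (made up): one feature x and binary labels y.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0])
y = np.array([0.0, 0.0, 1.0, 0.0, 1.0, 1.0, 0.0, 1.0])


def log_likelihood(beta0: float, beta1: float) -> float:
    """Sum over observations of y*log(p) + (1 - y)*log(1 - p)."""
    p = 1.0 / (1.0 + np.exp(-(beta0 + beta1 * x)))
    return float(np.sum(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))


beta0, beta1 = 0.0, 0.0          # initial candidate line
initial_ll = log_likelihood(beta0, beta1)
learning_rate = 0.05             # assumed step size, small enough for this data

for _ in range(20000):
    sample_log_odds = beta0 + beta1 * x                 # Step 1: candidate line
    p = 1.0 / (1.0 + np.exp(-sample_log_odds))          # Step 2: log odds -> probabilities
    # Steps 3 and 4: move the line in the direction that increases the log-likelihood.
    beta0 += learning_rate * np.mean(y - p)
    beta1 += learning_rate * np.mean((y - p) * x)

final_ll = log_likelihood(beta0, beta1)
print(f"beta0 = {beta0:.3f}, beta1 = {beta1:.3f}")
print(f"log-likelihood: {initial_ll:.3f} -> {final_ll:.3f}")
```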

2.2.3 Cut off value in Logistic Regression

If you plan to use Logistic Regression to obtain a binary {0,1} value in the end, then you need a cut-off point to transform the estimated value per observation from the range [0,1] to a value of 0 or 1.

Depending on your individual case you can choose a corresponding cut-off point, but a popular cut-off point is 0.5. In this case, all observations with a predicted value smaller than 0.5 will be assigned to class 0 and observations with a predicted value greater than or equal to 0.5 will be assigned to class 1.
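
A short sketch of applying a cut-off to predicted probabilities. The function name `classify` and the probabilities are illustrative assumptions.

```python
def classify(probabilities, cutoff=0.5):
    """Assign class 1 when the predicted probability is >= cutoff, else class 0."""
    return [1 if p >= cutoff else 0 for p in probabilities]


predicted_probabilities = [0.10, 0.49, 0.50, 0.73]  # illustrative values

default_labels = classify(predicted_probabilities)       # cut-off of 0.5
strict_labels = classify(predicted_probabilities, 0.7)   # stricter cut-off
print(default_labels)
print(strict_labels)
```

Raising the cut-off makes the classifier more conservative about predicting class 1, which trades recall for precision.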

2.2.4 Performance Metrics in Logistic Regression

Since Logistic Regression is a classification method, common classification metrics such as recall, precision, and the F1 score can all be used. There is also another metric commonly used for assessing the performance of a Logistic Regression model, called Deviance.

2.2.5 Logistic Regression in Python

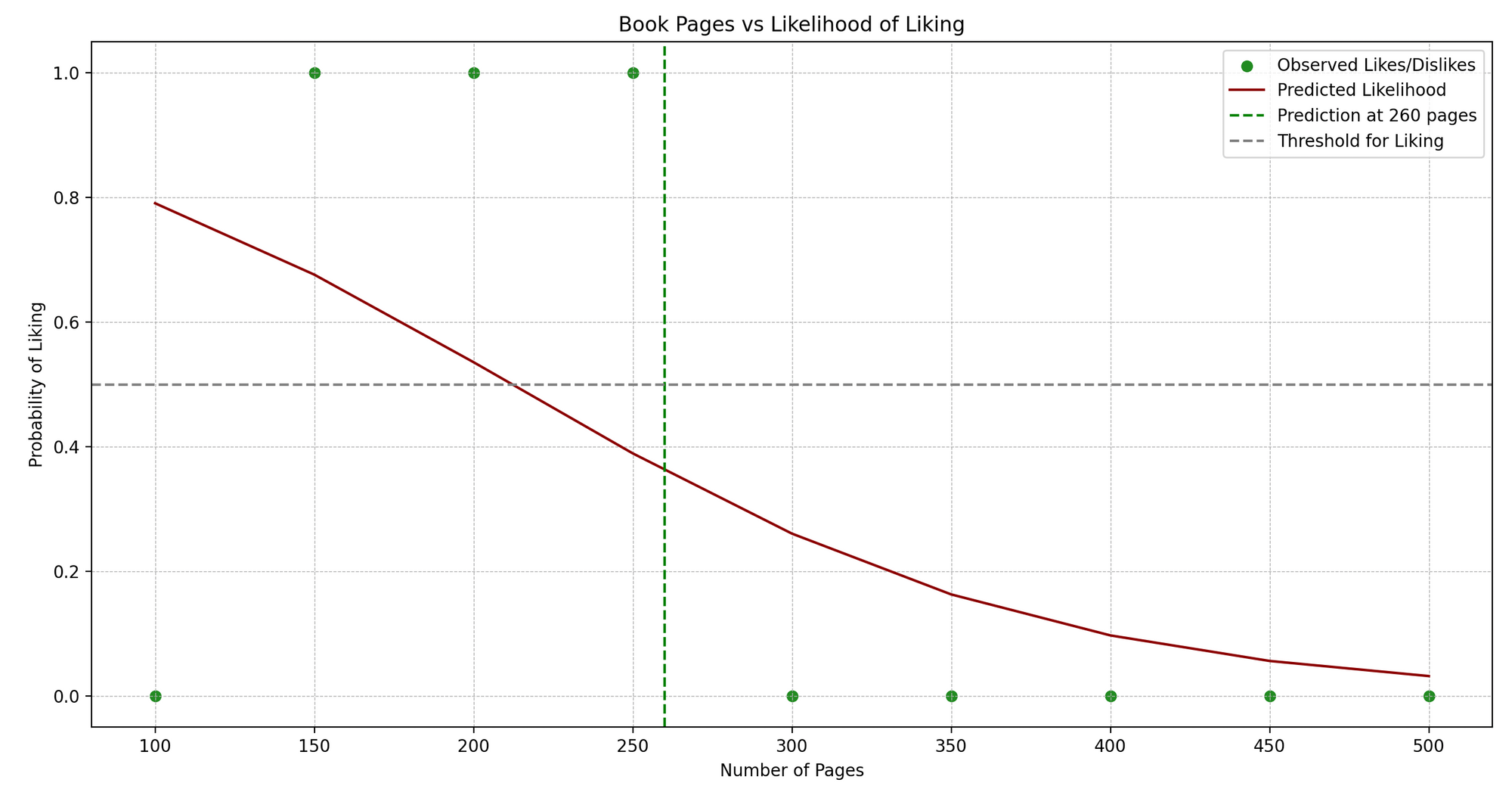

Jenny is an avid book reader. Jenny reads books of different genres and maintains a little journal where she notes down the number of pages and whether she liked the book (Yes or No).

We see a pattern: Jenny typically enjoys books that are neither too short nor too long. Now, can we predict whether Jenny will like a book based on its number of pages? This is where Logistic Regression can help us!

In technical terms, we're trying to predict a binary outcome (like/dislike) based on one independent variable (number of pages).

Here's a simplified Python example using scikit-learn to implement Logistic Regression:

- Sample Data : pages represents the number of pages in the books Jenny has read, and likes represents whether she liked them (1 for like, 0 for dislike).

- Creating and Training Model : We instantiate LogisticRegression() and train the model using .fit() with our data.

- Predictions : We predict whether Jenny will like a book with a particular number of pages (260 in this example).

- Plotting : We visualize the original data points (in blue) and the predicted probability curve (in red). The green dashed line represents the page number we’re predicting for, and the grey dashed line indicates the threshold (0.5) above which we predict a "like".

- Displaying Prediction : We output whether Jenny will like a book of the given page number based on our model's prediction.
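
A minimal sketch of the steps above, with made-up values for `pages` and `likes`. One assumption to flag: a single-feature logistic model can only capture a monotone relationship, so the sample data here simply has Jenny liking shorter books more often than longer ones. The plotting step is left out to keep the sketch short.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Sample data (illustrative values): page counts and whether Jenny liked the book.
pages = np.array([100, 150, 200, 250, 300, 350, 400, 450, 500]).reshape(-1, 1)
likes = np.array([1, 1, 1, 1, 0, 1, 0, 0, 0])  # 1 = like, 0 = dislike

# Creating and training the model (max_iter raised because the feature is unscaled).
model = LogisticRegression(max_iter=1000)
model.fit(pages, likes)

# Prediction for a book with 260 pages.
new_book_pages = 260
new_book = np.array([[new_book_pages]])
prob_like = model.predict_proba(new_book)[0, 1]  # probability of class 1 ("like")
prediction = model.predict(new_book)[0]

print(f"Probability Jenny likes a {new_book_pages}-page book: {prob_like:.2f}")
print("Predicted:", "like" if prediction == 1 else "dislike")
```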

2.3 Linear Discriminant Analysis (LDA)

Another classification technique, closely related to Logistic Regression, is Linear Discriminant Analysis (LDA). Where Logistic Regression is usually used to model the probability of an observation belonging to a class of the outcome variable with 2 categories, LDA is usually used to model the probability of an observation belonging to a class of the outcome variable with 3 or more categories.

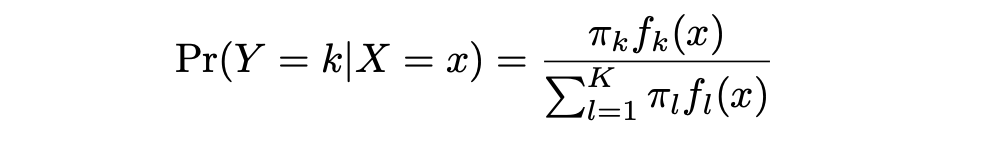

LDA offers an alternative approach to model the conditional likelihood of the outcome variable given that set of predictors that addresses the issues of Logistic Regression. It models the distribution of the predictors X separately in each of the response classes (that is, given Y ), and then uses Bayes’ theorem to flip these two around into estimates for Pr(Y = k|X = x).

Note that in the case of LDA these distributions are assumed to be normal. It turns out that the model is very similar in form to logistic regression. In the equation here:

$$\Pr(Y = k \mid X = x) = \frac{\pi_k f_k(x)}{\sum_{l=1}^{K} \pi_l f_l(x)}$$

π_k represents the overall prior probability that a randomly chosen observation comes from the k th class. f_k(x), which is equal to Pr(X = x|Y = k), is the density function of X for an observation that comes from the k th class (density function of the predictors).

This is the probability of X = x given that the observation is from a certain class. The left-hand side, Pr(Y = k|X = x), is the posterior probability: the probability that the observation belongs to the k th class, given the predictor value for that observation.

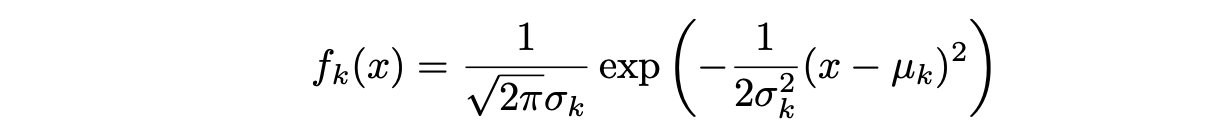

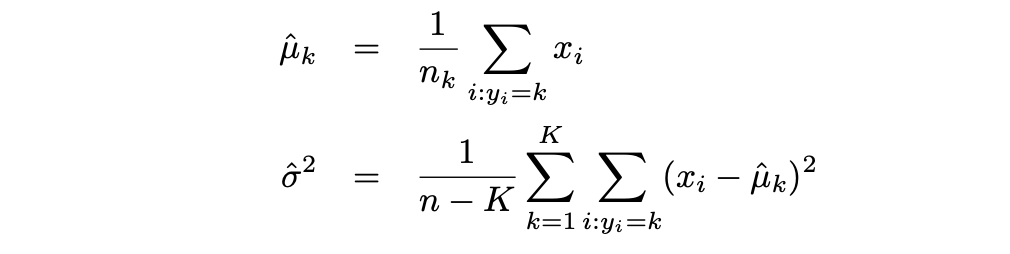

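Assuming that f_k(x) is Normal or Gaussian, the normal density takes the following form (this is the one-dimensional setting):

$$f_k(x) = \frac{1}{\sqrt{2\pi}\,\sigma_k} \exp\left(-\frac{(x - \mu_k)^2}{2\sigma_k^2}\right)$$

where μ_k and σ_k² are the mean and variance parameters for the k th class. We further assume that σ_1² = · · · = σ_K², that is, there is a shared variance term across all K classes, which we denote by σ².

Then the LDA approximates the Bayes classifier by using the following estimates for π_k, μ_k, and σ²:

$$\hat{\mu}_k = \frac{1}{n_k} \sum_{i:\, y_i = k} x_i, \qquad \hat{\sigma}^2 = \frac{1}{n - K} \sum_{k=1}^{K} \sum_{i:\, y_i = k} (x_i - \hat{\mu}_k)^2, \qquad \hat{\pi}_k = \frac{n_k}{n}$$

where n is the total number of training observations and n_k is the number of training observations in the k th class.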
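
For the one-dimensional setting, the estimation and classification steps can be sketched with NumPy as follows. The data is made up for illustration, and the variable names (`priors`, `means`, `shared_var`) are assumptions of this sketch: class priors are class frequencies, class means are per-class averages, and the shared variance pools squared deviations over all classes with n - K degrees of freedom.

```python
import numpy as np

# Illustrative one-dimensional data (made up) with two classes.
x = np.array([1.0, 2.0, 3.0, 5.0, 6.0, 7.0])
y = np.array([0, 0, 0, 1, 1, 1])

classes = np.unique(y)
n, K = len(x), len(classes)

# Plug-in estimates: class priors, class means, pooled (shared) variance.
priors = np.array([np.mean(y == k) for k in classes])
means = np.array([x[y == k].mean() for k in classes])
shared_var = sum(np.sum((x[y == k] - means[i]) ** 2) for i, k in enumerate(classes)) / (n - K)


def posterior(x_new: float) -> np.ndarray:
    """Pr(Y = k | X = x_new) for each class, via Bayes' theorem with Gaussian densities."""
    densities = np.exp(-(x_new - means) ** 2 / (2 * shared_var)) / np.sqrt(2 * np.pi * shared_var)
    weighted = priors * densities
    return weighted / weighted.sum()


print("means:", means, "shared variance:", shared_var)
print("posterior at x = 4.0:", posterior(4.0))
print("posterior at x = 2.0:", posterior(2.0))
```

A new observation is assigned to the class with the largest posterior probability; a point halfway between the two class means with equal priors gets equal posteriors.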


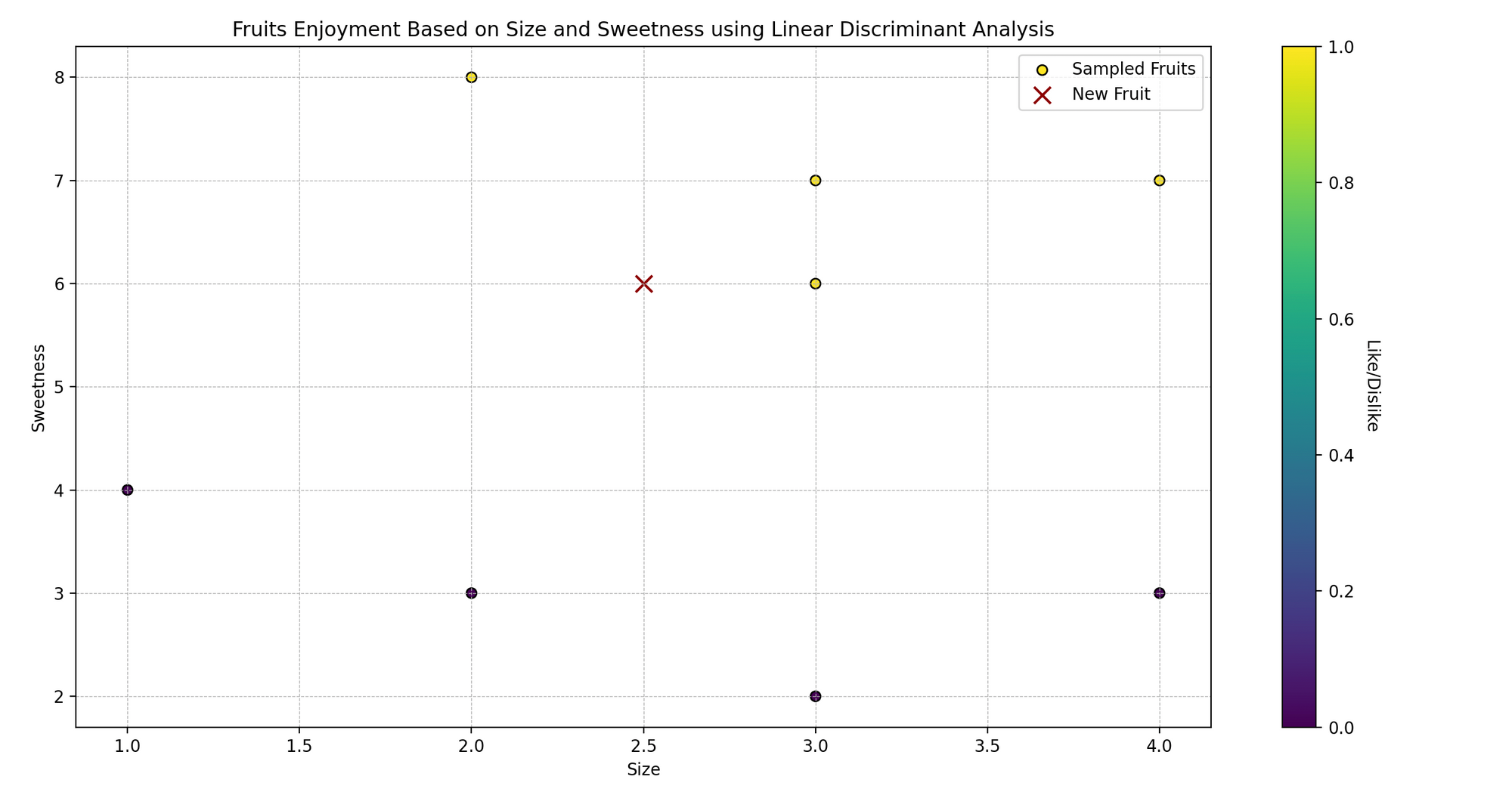

2.3.1 Linear Discriminant Analysis in Python

Imagine Sarah, who loves cooking and trying various fruits. She sees that the fruits she likes are typically of specific sizes and sweetness levels.

Now, Sarah is curious: can she predict whether she will like a fruit based on its size and sweetness? Let's use Linear Discriminant Analysis (LDA) to help her predict whether she'll like certain fruits or not.

In technical language, we are trying to classify the fruits (like/dislike) based on two predictor variables (size and sweetness).

- Sample Data : fruits_features contains two features – size and sweetness of fruits, and fruits_likes represents whether Sarah likes them (1 for like, 0 for dislike).

- Creating and Training Model : We instantiate LinearDiscriminantAnalysis() and train it using .fit() with our sample data.

- Prediction : We predict whether Sarah will like a fruit with a particular size and sweetness level ([2.5, 6] in this example).

- Plotting : We visualize the original data points, color-coded based on Sarah’s like (yellow) and dislike (purple), and mark the new fruit with a red 'x'.

- Displaying Prediction : We output whether Sarah will like a fruit with the given size and sweetness level based on our model's prediction.
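
A minimal sketch of the steps above, assuming made-up values for `fruits_features` (size, sweetness) and `fruits_likes`. The plotting step is left out to keep the sketch short.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

# Sample data (illustrative values): [size, sweetness] per fruit.
fruits_features = np.array([
    [2.0, 6.0], [2.5, 6.5], [3.0, 7.0], [2.2, 5.8], [2.8, 6.2],   # fruits Sarah likes
    [5.0, 2.0], [5.5, 2.5], [6.0, 3.0], [5.2, 1.8], [5.8, 2.2],   # fruits Sarah dislikes
])
fruits_likes = np.array([1, 1, 1, 1, 1, 0, 0, 0, 0, 0])  # 1 = like, 0 = dislike

# Creating and training the model.
model = LinearDiscriminantAnalysis()
model.fit(fruits_features, fruits_likes)

# Prediction for a new fruit with size 2.5 and sweetness 6.
new_fruit = np.array([[2.5, 6.0]])
prediction = model.predict(new_fruit)[0]

print("Sarah will", "like" if prediction == 1 else "not like", "this fruit.")
```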

2.4 Logistic Regression vs LDA

Logistic regression is a popular approach for performing classification when there are two classes. But when the classes are well-separated or the number of classes exceeds 2, the parameter estimates for the logistic regression model are surprisingly unstable.

Unlike Logistic Regression, LDA does not suffer from this instability problem when the number of classes is more than 2. If n is small and the distribution of the predictors X is approximately normal in each of the classes, LDA is again more stable than the Logistic Regression model.

2.5 Naïve Bayes

Another classification method that, like LDA, relies on Bayes' Rule is the Naïve Bayes classification approach. For more about Bayes' Theorem, Bayes' Rule, and a corresponding example, you can read these articles.

Like Logistic Regression, you can use the Naïve Bayes approach to classify an observation into one of two classes (0 or 1).

The idea behind this method is to calculate the probability of an observation belonging to a class, given the prior probability of that class and the conditional probability of each feature value given that class. That is:
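Written out with Bayes' Rule, in the notation defined just below, a standard form of this expression (offered as a reconstruction rather than the original formula) is:

```latex
\Pr(Y = k \mid x_1, \dots, x_n)
  = \frac{\Pr(Y = k)\, \Pr(x_1, \dots, x_n \mid Y = k)}{\Pr(x_1, \dots, x_n)}
```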

where Y stands for the class of the observation, k is the kth class, and x1, …, xn stand for feature 1 through feature n, respectively. f_k(x) = Pr(X = x|Y = k) represents the class-conditional density (the likelihood, not the posterior), which, as in the case of LDA, is the density function of X for an observation that comes from the kth class (the density function of the predictors).

If you compare the above expression with the one you saw for LDA, you will see some similarities.

In LDA, we make a very important and strong assumption for simplification purposes: namely, that f_k is the density function for a multivariate normal random variable with class-specific mean μ_k and shared covariance matrix Σ.

This assumption replaces the very challenging problem of estimating K p-dimensional density functions with a much simpler one: estimating K p-dimensional mean vectors and one (p × p)-dimensional covariance matrix.

The Naïve Bayes Classifier takes a different approach to estimating f_1(x), . . . , f_K(x). Instead of assuming that these functions belong to a particular family of distributions (for example normal or multivariate normal), we make a single assumption: within the kth class, the p predictors are independent. That is:
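In symbols, the independence assumption is usually written as a product of one-dimensional densities. The notation f_{kj}, for the density of the jth predictor among observations in the kth class, is an assumption introduced here for illustration.

```latex
f_k(x) = f_{k1}(x_1) \times f_{k2}(x_2) \times \cdots \times f_{kp}(x_p)
```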

So the Naïve Bayes classifier assumes that the value of a particular variable or feature is independent of the value of any other variable, given the class/label variable. Note that independence is a stronger condition than being uncorrelated.

For instance, a fruit may be considered to be a banana if it is yellow, oval shaped, and about 5–10 cm long. The Naïve Bayes classifier considers that each of these features contributes independently to the probability that this fruit is a banana, regardless of any possible correlation between the colour, shape, and length features.

Naïve Bayes Estimation

Like Logistic Regression, the Naïve Bayes classification approach uses Maximum Likelihood Estimation (MLE) as its estimation technique. There is a great article providing a detailed, concise summary of this approach with a corresponding example, which you can find here.

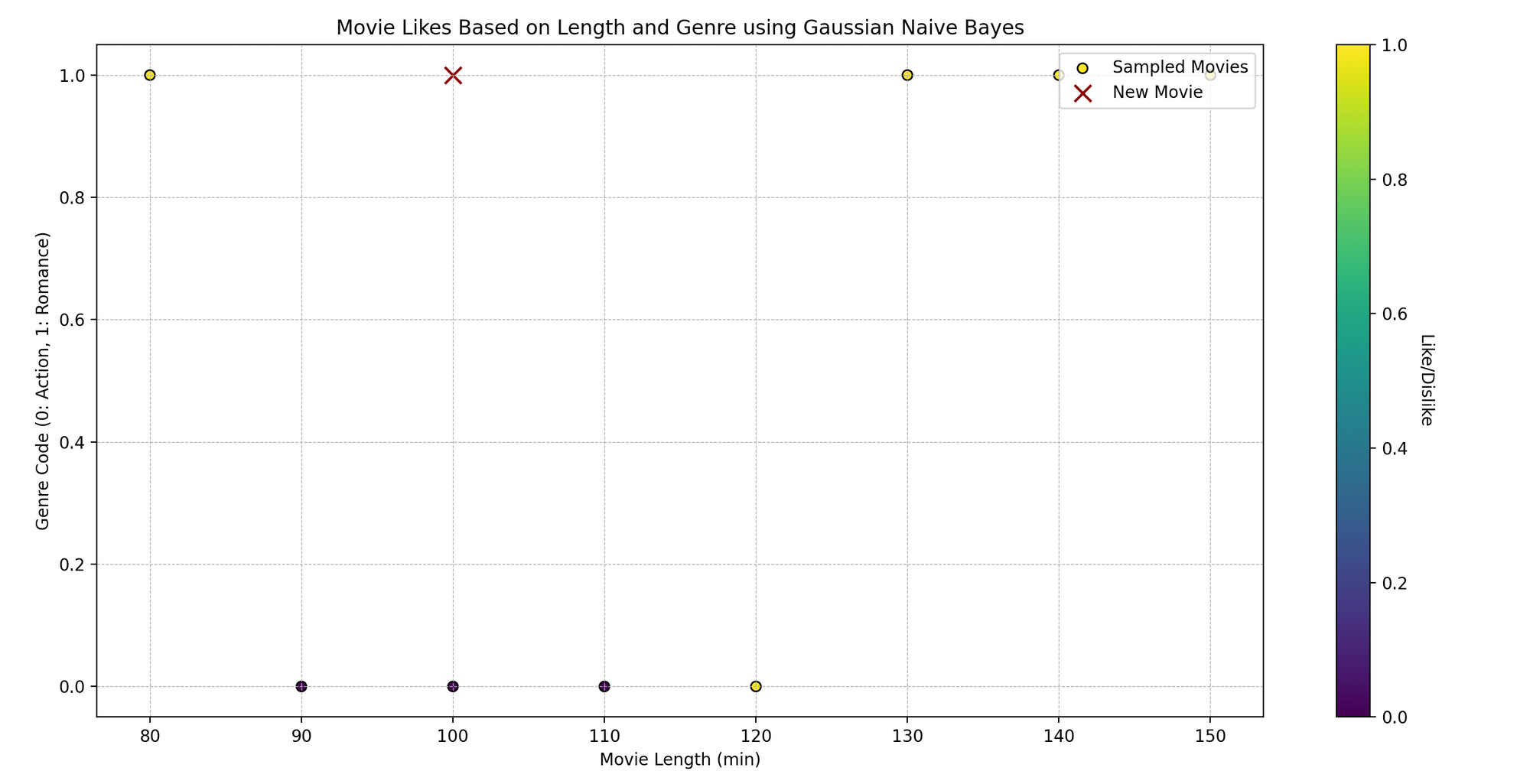

2.5.1 Naïve Bayes in Python

Tom is a movie enthusiast who watches films across different genres and records his feedback—whether he liked them or not. He has noticed that whether he likes a film might depend on two aspects: the movie's length and its genre. Can we predict whether Tom will like a movie based on these two characteristics using Naïve Bayes?

Technically, we want to predict a binary outcome (like/dislike) based on the independent variables (movie length and genre).

- Sample Data: movies_features contains two features: movie length and genre (encoded as numbers), while movies_likes indicates whether Tom likes them (1 for like, 0 for dislike).

- Creating and Training Model: We instantiate GaussianNB() (a Naïve Bayes classifier assuming Gaussian distribution of data) and train it with .fit() using our data.

- Prediction: We predict whether Tom will like a new movie, given its length and genre code ([100, 1] in this case).

- Plotting: We visualize the original data points, color-coded based on Tom’s like (yellow) and dislike (purple). The red 'x' represents the new movie.

- Displaying Prediction: We print whether Tom will like a movie of the given length and genre code, as per our model's prediction.
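A minimal sketch of these steps might look like the following. The eight movies are hypothetical values invented for illustration, and the plotting step is omitted. Only the names movies_features, movies_likes, GaussianNB, and the query point [100, 1] come from the description above.

```python
import numpy as np
from sklearn.naive_bayes import GaussianNB

# Hypothetical sample data: each row is [length in minutes, genre code].
movies_features = np.array([
    [120, 1], [150, 2], [90, 1], [140, 3],
    [100, 2], [80, 1], [110, 2], [130, 3],
])
# 1 = Tom liked the movie, 0 = he did not (same order as the rows above).
movies_likes = np.array([1, 1, 0, 1, 0, 0, 0, 1])

# Gaussian Naive Bayes models each feature, within each class,
# as an independent normal distribution.
model = GaussianNB()
model.fit(movies_features, movies_likes)

# Predict for a new 100-minute movie with genre code 1.
new_movie = np.array([[100, 1]])
predicted_like = int(model.predict(new_movie)[0])
class_probabilities = model.predict_proba(new_movie)[0]

verdict = "like" if predicted_like == 1 else "not like"
print(f"Tom will {verdict} this movie")
```

One caveat on the design: treating an arbitrary genre code as a Gaussian-distributed number is a simplification carried over from the example's setup. For genuinely categorical features, a categorical variant of Naïve Bayes or one-hot encoding would usually be a better fit.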

The Naïve Bayes Classifier tends to be faster to train and typically has higher bias and lower variance than Logistic Regression, which has lower bias and higher variance. Depending on your individual case and the bias-variance trade-off, you can pick the corresponding approach.

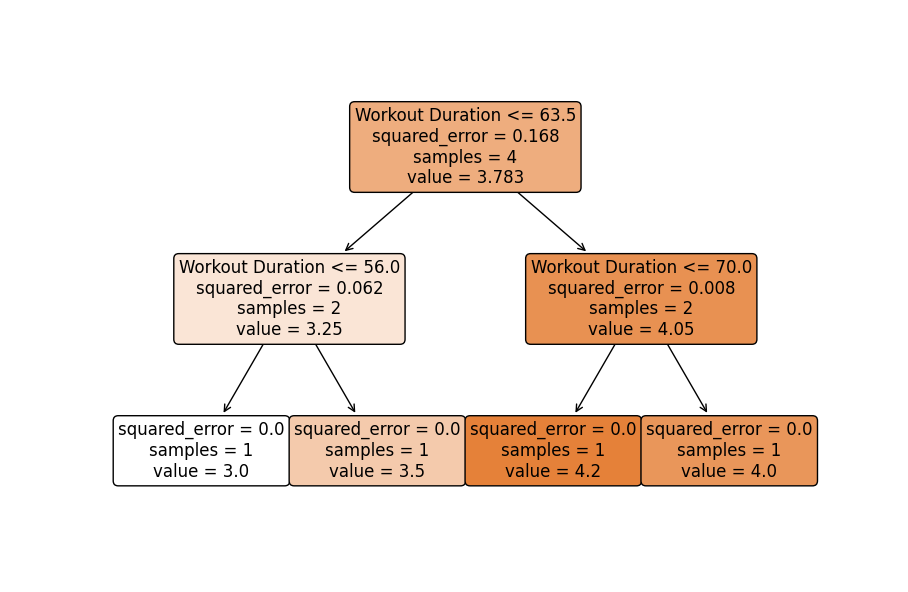

Decision Trees are a supervised and non-parametric Machine Learning method used for both classification and regression purposes. The idea is to create a model that predicts the value of a target variable by learning simple decision rules from the data predictors.

Unlike Linear Regression, or Logistic Regression, Decision Trees are simple and useful model alternatives when the relationship between independent variables and dependent variable is suspected to be non-linear.

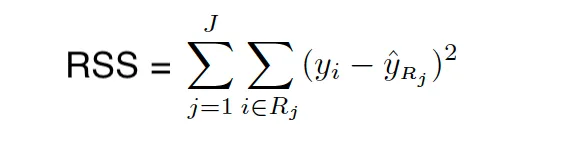

Tree-based methods stratify or segment the predictor space into smaller regions. The idea behind building Decision Trees is to divide the predictor space, that is, the set of possible values of X_1, X_2, …, X_p, into distinct and mutually exclusive regions R_1, R_2, …, R_N, where the regions take the form of boxes or rectangles. Because it is computationally infeasible to consider every possible partition of the predictor space when minimizing the RSS, these regions are found by recursive binary splitting. This approach is often referred to as a greedy approach.

Decision trees are built by top-down splitting. So, in the beginning, all observations belong to a single region. Then, the model successively splits the predictor space. Each split is indicated via two new branches further down on the tree.

This approach is sometimes called greedy because at each step of the tree-building process, the best split is made at that particular step, rather than looking ahead and picking a split that will lead to a better tree in some future step.
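To make one greedy step concrete, here is a small sketch of how a single best split could be chosen for one predictor by minimizing the RSS. The function name best_split and the toy arrays are hypothetical; real implementations repeat this search over every predictor and then recurse into each resulting region.

```python
import numpy as np

def best_split(x, y):
    """Return (threshold, rss) for the single split on x that minimises total RSS."""
    order = np.argsort(x)
    x_sorted, y_sorted = x[order], y[order]
    best_threshold, best_rss = None, np.inf
    for i in range(1, len(x_sorted)):
        if x_sorted[i] == x_sorted[i - 1]:
            continue  # no threshold can separate two equal values
        left, right = y_sorted[:i], y_sorted[i:]
        # RSS of predicting each side by its own mean.
        rss = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if rss < best_rss:
            # Place the threshold halfway between the two neighbouring values.
            best_threshold = (x_sorted[i - 1] + x_sorted[i]) / 2
            best_rss = rss
    return best_threshold, best_rss

# Toy data with an obvious jump between x = 3 and x = 10.
x = np.array([1.0, 2.0, 3.0, 10.0, 11.0, 12.0])
y = np.array([5.0, 6.0, 5.5, 20.0, 21.0, 19.0])

threshold, rss = best_split(x, y)
print(f"best threshold: {threshold}, RSS after split: {rss}")
```

The search only looks at the immediate reduction in RSS, which is exactly what makes the procedure greedy: it never checks whether a slightly worse split now would enable a better tree later.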

Stopping Criteria

There are some common stopping criteria used when building Decision Trees:

- Minimum number of observations in the leaf.

- Minimum number of samples for a node split.

- Maximum depth of tree (vertical depth).

- Maximum number of terminal nodes.

- Maximum features to consider for the split.

For example, you might repeat the splitting process until no region contains more than 100 observations. Let's look at each criterion in more detail:

1. Minimum number of observations in the leaf: If a proposed split results in a leaf node with fewer than a defined number of observations, that split might be discarded. This prevents the tree from becoming overly complex.

2. Minimum number of samples for a node split: To proceed with a node split, the node must have at least this many samples. This ensures that there's a significant amount of data to justify the split.

3. Maximum depth of tree (vertical depth): This limits how many times a tree can split. It's like telling the tree how many questions it can ask about the data before making a decision.

4. Maximum number of terminal nodes: This is the total number of end nodes (or leaves) the tree can have.

5. Maximum features to consider for the split: For each split, the algorithm considers only a subset of features. This can speed up training and help in generalization.
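In scikit-learn, each of the five criteria above corresponds to a constructor parameter of the tree estimators. The sketch below uses synthetic data from make_classification, and the specific values (10, 20, 4, 8, 3) are arbitrary choices for illustration, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

# Synthetic data, used only so the example is self-contained.
X, y = make_classification(
    n_samples=300, n_features=6, n_informative=4, random_state=0
)

tree = DecisionTreeClassifier(
    min_samples_leaf=10,    # 1. minimum number of observations in a leaf
    min_samples_split=20,   # 2. minimum number of samples needed to split a node
    max_depth=4,            # 3. maximum depth of the tree
    max_leaf_nodes=8,       # 4. maximum number of terminal nodes
    max_features=3,         # 5. number of features considered at each split
    random_state=0,
)
tree.fit(X, y)

# Inspect the fitted tree to confirm the limits were respected.
is_leaf = tree.tree_.children_left == -1
smallest_leaf = int(tree.tree_.n_node_samples[is_leaf].min())
print("depth:", tree.get_depth())
print("leaves:", tree.get_n_leaves())
print("smallest leaf size:", smallest_leaf)
```

In practice these values are usually tuned with cross-validation rather than set by hand.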

When building a decision tree, especially when dealing with a large number of features, the tree can become too big, with too many leaves. This will affect the interpretability of the model and might result in overfitting. Therefore, picking good stopping criteria is essential for both the interpretability and the performance of the model.

RSS/Gini Index/Entropy/Node Purity

When building the tree, we use RSS (for Regression Trees) and the Gini Index or Entropy (for Classification Trees) to pick the predictor and the value for splitting the regions. Both the Gini Index and Entropy are often called Node Purity measures because they describe how pure the leaves of the tree are.

The Gini index measures the total variance across the K classes. It takes a small value when, within a node, every class proportion is close to either 0 or 1. This is why it is called a measure of node purity: the Gini index is small when a node contains predominantly observations from a single class.

The Gini index is defined as follows:
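In its standard form (given here as a reconstruction, with G as an assumed name for the index and K the number of classes):

```latex
G = \sum_{k=1}^{K} \hat{p}_{mk} \left( 1 - \hat{p}_{mk} \right)
```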

where p̂_mk represents the proportion of training observations in the mth region that are from the kth class.

Entropy is another node purity measure, and like the Gini index, the entropy will take on a small value if the mth node is pure. In fact, the Gini index and the entropy are numerically quite similar. The entropy can be expressed as follows:
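A standard way to write the entropy, in the same notation as the Gini index (D is an assumed symbol for it), is:

```latex
D = - \sum_{k=1}^{K} \hat{p}_{mk} \log \hat{p}_{mk}
```

Each term is close to zero when the corresponding class proportion is close to 0 or 1, so the sum is small for a pure node.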

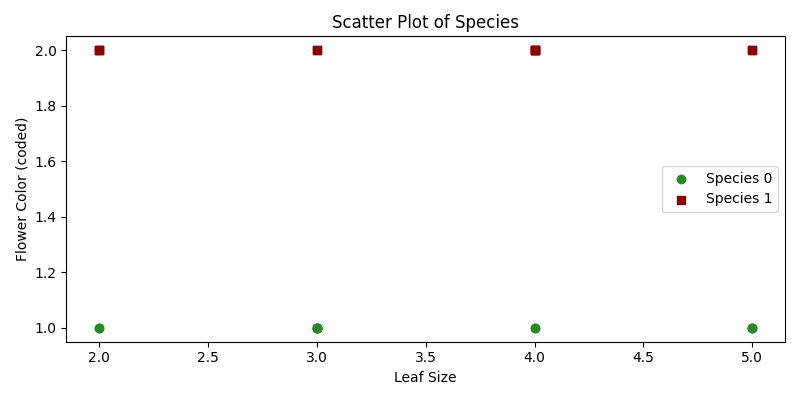
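As a quick numerical check of the two purity measures, the following sketch computes both for a perfectly pure node and for a node split evenly across three classes. The function names gini and entropy and the example proportions are hypothetical, and the natural logarithm is an assumption (other bases only rescale the result).

```python
import numpy as np

def gini(proportions):
    """Gini index: sum over classes of p * (1 - p)."""
    p = np.asarray(proportions, dtype=float)
    return float(np.sum(p * (1.0 - p)))

def entropy(proportions):
    """Entropy: sum over classes of p * log(1 / p), with 0 * log(1/0) taken as 0."""
    p = np.asarray(proportions, dtype=float)
    p = p[p > 0]  # drop empty classes so the logarithm is defined
    return float(np.sum(p * np.log(1.0 / p)))

pure_node = [1.0, 0.0, 0.0]      # every observation is from one class
mixed_node = [1 / 3, 1 / 3, 1 / 3]  # classes are evenly mixed

print("pure node:  gini =", gini(pure_node), " entropy =", entropy(pure_node))
print("mixed node: gini =", round(gini(mixed_node), 4),
      " entropy =", round(entropy(mixed_node), 4))
```

Both measures are zero for the pure node and reach their maximum for the evenly mixed one, which is why either can be used to score candidate splits.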

Decision Tree Classification Example

Let’s look at an example where we have three features describing consumers' past behaviour:

- Recency (How recent was the customer’s last purchase?)

- Monetary (How much money did the customer spend in a given period?)

- Frequency (How often did this customer make a purchase in a given period?)

We will use the classification version of the Decision Tree to classify customers to 1 of the 3 classes (Good: 1, Better: 2 and Best: 3), given the features describing the customer's behaviour.

In the following tree, where we use the Gini Index as a purity measure, we see that the first feature, which seems to be the most important one, is Recency. Let's look at the tree and then interpret it:

For customers with a Recency of 202 or larger (their last purchase was more than 202 days ago), the chance of being assigned to class 1 is 93% (basically, we can label those customers as Good Class customers).

For customers with Recency less than 202 (they made a purchase recently), we look at their Monetary value, and if it's smaller than 1394, we then look at their Frequency. If the Frequency is smaller than 44, we can label the customer's class as Better (class 2). And so on.

Decision Trees Python Implementation

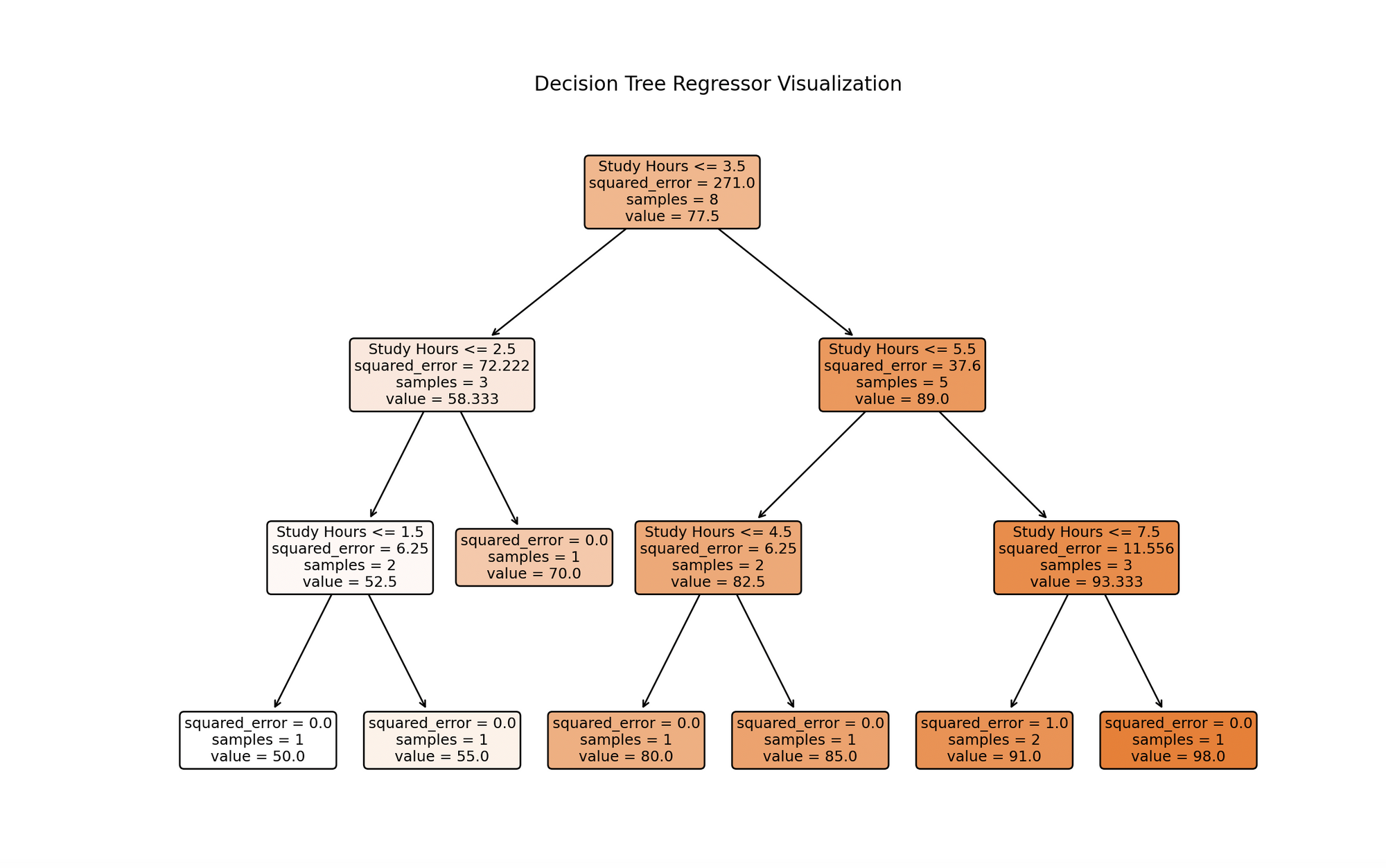

Alex is intrigued by the relationship between the number of hours studied and the scores obtained by students. Alex collected data from his peers about their study hours and respective test scores.

He wonders: can we predict a student's score based on the number of hours they study? Let's leverage Decision Tree Regression to uncover this.

Technically, we're predicting a continuous outcome (test score) based on an independent variable (study hours).

- Sample Data: study_hours contains hours studied, and test_scores contains the corresponding test scores.

- Creating and Training Model: We create a DecisionTreeRegressor with a specified maximum depth (to prevent overfitting) and train it with .fit() using our data.

- Plotting the Decision Tree: plot_tree helps visualize the decision-making process of the model, representing splits based on study hours.

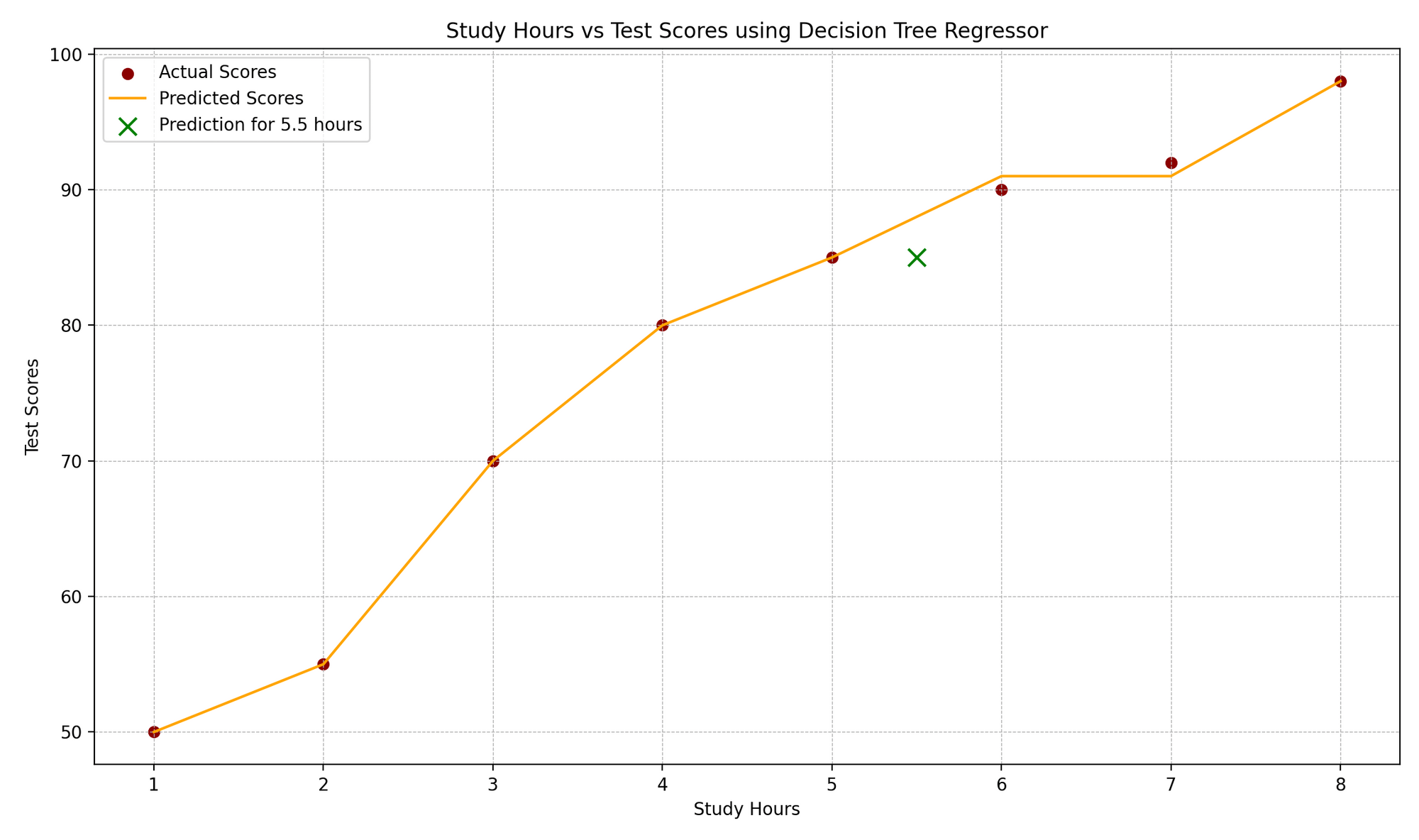

- Prediction & Plotting: We predict the test score for a new study hour value (5.5 in this example), visualize the original data points, the decision tree’s predicted scores, and the new prediction.
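A minimal sketch of these steps is shown below. The ten (hours, score) pairs are hypothetical values invented for illustration, and max_depth=3 is an assumed setting. To keep the block free of plotting dependencies, it prints the tree with export_text instead of drawing it with plot_tree.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor, export_text

# Hypothetical sample data: hours studied and the matching test scores.
study_hours = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10], dtype=float).reshape(-1, 1)
test_scores = np.array([50, 55, 70, 80, 85, 90, 92, 98, 99, 100], dtype=float)

# Limit the depth so the tree cannot simply memorise every point.
model = DecisionTreeRegressor(max_depth=3, random_state=0)
model.fit(study_hours, test_scores)

# Text rendering of the learned splits (a stand-in for plot_tree).
print(export_text(model, feature_names=["study_hours"]))

# Predict the score for a student who studies 5.5 hours.
new_hours = np.array([[5.5]])
predicted_score = float(model.predict(new_hours)[0])
print(f"Predicted score for 5.5 hours of study: {predicted_score:.1f}")
```

Because a regression tree predicts the average target value of the training points in a leaf, the prediction always lies between the smallest and largest observed scores.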

The visualization depicts a decision tree model trained on study hours data. Each node represents a decision based on study hours, branching from the top root based on conditions that best forecast test scores. The process continues until reaching a maximum depth or no further meaningful splits. Leaf nodes at the bottom give final predictions, which for regression trees, are the average of target values for training instances reaching that leaf. This visualization highlights the model's predictive approach and the significant influence of study hours on test scores.

The "Study Hours vs. Test Scores" plot illustrates the correlation between study hours and corresponding test scores. Actual data points are denoted by red dots, while the model's predictions are shown as an orange step function, characteristic of regression trees. A green "x" marker highlights a prediction for a new data point, here representing a 5.5-hour study duration. The plot's design elements, such as gridlines, labels, and legends, enhance comprehension of the real versus anticipated values.

One of the biggest disadvantages of Decision Trees is their high variance. You might end up with a model and predictions that are easy to explain but misleading, which can lead you to draw incorrect conclusions and make poor business decisions.

So to reduce the variance of the Decision trees, you can use a method called Bagging. To understand what Bagging is, there are two terms you need to know:

- Bootstrapping

- Central Limit Theorem (CLT)

You can find more about Bootstrapping, which is a resampling technique, later in this handbook. For now, you can think of Bootstrapping as a technique that samples from the original data with replacement, which creates a copy of the data that is very similar to, but not exactly the same as, the original data.

Bagging is also based on the same ideas as the CLT, which is one of the most important, if not the most important, theorems in Statistics. You can read in more detail about the CLT here.

The idea that Bagging borrows is that if you average many samples, the variance of that average is significantly lower than the variance of each individual sample-based model.

So, given a set of n independent observations Z_1, …, Z_n, each with variance σ², the variance of the mean Z̄ of the observations is given by σ²/n. In other words, averaging a set of observations reduces variance.
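The σ²/n result can be checked with a short simulation. The values below (σ = 2, n = 25, 20,000 repetitions, normally distributed observations) are arbitrary assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

sigma = 2.0          # standard deviation of each observation
n = 25               # observations averaged together in each sample
repetitions = 20000  # number of independent samples to draw

# Each row is one sample of n independent observations.
samples = rng.normal(loc=0.0, scale=sigma, size=(repetitions, n))
sample_means = samples.mean(axis=1)

empirical_variance = float(sample_means.var())
theoretical_variance = sigma**2 / n  # sigma^2 / n

print(f"variance of a single observation: {sigma**2:.3f}")
print(f"theoretical variance of the mean: {theoretical_variance:.3f}")
print(f"empirical variance of the mean:   {empirical_variance:.3f}")
```

The empirical variance of the sample means should land close to σ²/n and far below the variance of a single observation.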

For more Statistical details, check out the following tutorial:

Bagging is basically Bootstrap aggregation: it builds B trees using Bootstrapped samples. Bagging can be used to improve the precision of many approaches (by lowering their variance) through taking repeated samples from a single training data set.
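As a rough sketch of the idea, the block below bags regression trees by hand: it draws B bootstrapped samples, fits one tree to each, and averages their predictions. The noisy sine data, B = 50, and the query points are assumptions made up for the example.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)

# Hypothetical training data: a noisy sine curve.
X = np.linspace(0, 10, 200).reshape(-1, 1)
y = np.sin(X).ravel() + rng.normal(scale=0.3, size=200)

B = 50  # number of bootstrapped trees
trees = []
for _ in range(B):
    # Bootstrap: draw row indices with replacement, same size as the data.
    idx = rng.integers(0, len(X), size=len(X))
    tree = DecisionTreeRegressor(random_state=0).fit(X[idx], y[idx])
    trees.append(tree)

# Aggregate: average the B individual predictions for each query point.
X_new = np.array([[2.5], [7.0]])
per_tree_predictions = np.stack([t.predict(X_new) for t in trees])  # shape (B, 2)
bagged_prediction = per_tree_predictions.mean(axis=0)

print("spread of single-tree predictions:", per_tree_predictions.std(axis=0))
print("bagged predictions:", bagged_prediction)
```

scikit-learn also ships ready-made ensemble estimators such as BaggingRegressor in sklearn.ensemble that wrap this loop; the manual version is shown only to make the bootstrap and averaging steps visible.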