Case Study – Methods, Examples and Guide

A case study is a research method that involves an in-depth examination and analysis of a particular phenomenon or case, such as an individual, organization, community, event, or situation.

It is a qualitative research approach that aims to provide a detailed and comprehensive understanding of the case being studied. Case studies typically involve multiple sources of data, including interviews, observations, documents, and artifacts, which are analyzed using various techniques, such as content analysis, thematic analysis, and grounded theory. The findings of a case study are often used to develop theories, inform policy or practice, or generate new research questions.

Types of Case Study

The main types of case study are as follows:

Single-Case Study

A single-case study is an in-depth analysis of a single case. This type of case study is useful when the researcher wants to understand a specific phenomenon in detail.

For example, a researcher might conduct a single-case study on a particular individual to understand their experiences with a particular health condition, or on a specific organization to explore its management practices. The researcher collects data from multiple sources, such as interviews, observations, and documents, and uses various techniques to analyze the data, such as content analysis or thematic analysis. The findings of a single-case study are often used to generate new research questions, develop theories, or inform policy or practice.

Multiple-Case Study

A multiple-case study involves the analysis of several cases that are similar in nature. This type of case study is useful when the researcher wants to identify similarities and differences between the cases.

For example, a researcher might conduct a multiple-case study on several companies to explore the factors that contribute to their success or failure. The researcher collects data from each case, compares and contrasts the findings, and uses various techniques to analyze the data, such as comparative analysis or pattern-matching. The findings of a multiple-case study can be used to develop theories, inform policy or practice, or generate new research questions.
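The compare-and-contrast step can be illustrated with a minimal Python sketch. It assumes the researcher has already coded each case into a set of contributing factors. The company names, the factors, and the `cross_case_summary` helper are all hypothetical, and real cross-case analysis involves far more interpretation than set arithmetic.

```python
# Hypothetical coded findings: each case maps to the set of factors
# the researcher identified in that case's data.
case_findings = {
    "Company A": {"strong leadership", "early funding", "niche market"},
    "Company B": {"strong leadership", "niche market", "high staff turnover"},
    "Company C": {"strong leadership", "early funding", "export focus"},
}


def cross_case_summary(findings):
    """Return the factors shared by every case and the factors unique to each case."""
    shared = set.intersection(*findings.values())
    unique = {}
    for case, factors in findings.items():
        # Union of the factors found in all *other* cases.
        others = set().union(*(f for c, f in findings.items() if c != case))
        unique[case] = factors - others
    return shared, unique


shared, unique = cross_case_summary(case_findings)
print("Shared by all cases:", sorted(shared))
for case, factors in unique.items():
    print(f"Unique to {case}:", sorted(factors))
```

Shared factors point to possible similarities across the cases, while unique factors flag differences to investigate in the underlying data.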

Exploratory Case Study

An exploratory case study is used to explore a new or understudied phenomenon. This type of case study is useful when the researcher wants to generate hypotheses or theories about the phenomenon.

For example, a researcher might conduct an exploratory case study on a new technology to understand its potential impact on society. The researcher collects data from multiple sources, such as interviews, observations, and documents, and uses various techniques to analyze the data, such as grounded theory or content analysis. The findings of an exploratory case study can be used to generate new research questions, develop theories, or inform policy or practice.

Descriptive Case Study

A descriptive case study is used to describe a particular phenomenon in detail. This type of case study is useful when the researcher wants to provide a comprehensive account of the phenomenon.

For example, a researcher might conduct a descriptive case study on a particular community to understand its social and economic characteristics. The researcher collects data from multiple sources, such as interviews, observations, and documents, and uses various techniques to analyze the data, such as content analysis or thematic analysis. The findings of a descriptive case study can be used to inform policy or practice or generate new research questions.

Instrumental Case Study

An instrumental case study is used to understand a particular phenomenon that is instrumental in achieving a particular goal. This type of case study is useful when the researcher wants to understand the role of the phenomenon in achieving the goal.

For example, a researcher might conduct an instrumental case study on a particular policy to understand its impact on achieving a particular goal, such as reducing poverty. The researcher collects data from multiple sources, such as interviews, observations, and documents, and uses various techniques to analyze the data, such as content analysis or thematic analysis. The findings of an instrumental case study can be used to inform policy or practice or generate new research questions.

Case Study Data Collection Methods

Here are some common data collection methods for case studies:

Interviews

Interviews involve asking questions to individuals who have knowledge or experience relevant to the case study. Interviews can be structured (where the same questions are asked to all participants) or unstructured (where the interviewer follows up on the responses with further questions). Interviews can be conducted in person, over the phone, or through video conferencing.

Observations

Observations involve watching and recording the behavior and activities of individuals or groups relevant to the case study. Observations can be participant (where the researcher actively participates in the activities) or non-participant (where the researcher observes from a distance). Observations can be recorded using notes, audio or video recordings, or photographs.

Documents

Documents can be used as a source of information for case studies. Documents can include reports, memos, emails, letters, and other written materials related to the case study. Documents can be collected from the case study participants or from public sources.

Surveys

Surveys involve asking a set of questions to a sample of individuals relevant to the case study. Surveys can be administered in person, over the phone, through mail or email, or online. Surveys can be used to gather information on attitudes, opinions, or behaviors related to the case study.

Artifacts

Artifacts are physical objects relevant to the case study. Artifacts can include tools, equipment, products, or other objects that provide insights into the case study phenomenon.

How to Conduct Case Study Research

Conducting case study research involves several steps that need to be followed to ensure the quality and rigor of the study. Here are the steps to conduct case study research:

- Define the research questions: The first step in conducting case study research is to define the research questions. The research questions should be specific, measurable, and relevant to the case study phenomenon under investigation.

- Select the case: The next step is to select the case or cases to be studied. The case should be relevant to the research questions and should provide rich and diverse data that can be used to answer the research questions.

- Collect data: Data can be collected using various methods, such as interviews, observations, documents, surveys, and artifacts. The data collection method should be selected based on the research questions and the nature of the case study phenomenon.

- Analyze the data: The data collected from the case study should be analyzed using various techniques, such as content analysis, thematic analysis, or grounded theory. The analysis should be guided by the research questions and should aim to provide insights and conclusions relevant to the research questions.

- Draw conclusions: The conclusions drawn from the case study should be based on the data analysis and should be relevant to the research questions. The conclusions should be supported by evidence and should be clearly stated.

- Validate the findings: The findings of the case study should be validated by reviewing the data and the analysis with participants or other experts in the field. This helps to ensure the validity and reliability of the findings.

- Write the report: The final step is to write the report of the case study research. The report should provide a clear description of the case study phenomenon, the research questions, the data collection methods, the data analysis, the findings, and the conclusions. The report should be written in a clear and concise manner and should follow the guidelines for academic writing.
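As one illustration of the analysis step, the following minimal Python sketch tallies coded segments by theme, a simple bookkeeping aid for content or thematic analysis. The source names, the themes, and the `summarize_themes` helper are hypothetical. Assigning themes to segments remains the researcher's interpretive work and is assumed to have been done already.

```python
from collections import Counter, defaultdict

# Hypothetical coded segments: (data source, theme assigned by the researcher).
coded_segments = [
    ("interview_01", "workload"),
    ("interview_01", "management support"),
    ("interview_02", "workload"),
    ("observation_01", "workload"),
    ("document_01", "management support"),
    ("interview_02", "training"),
]


def summarize_themes(segments):
    """Count segments per theme and record which sources mention each theme."""
    counts = Counter(theme for _, theme in segments)
    sources = defaultdict(set)
    for source, theme in segments:
        sources[theme].add(source)
    return counts, dict(sources)


theme_counts, theme_sources = summarize_themes(coded_segments)
for theme, n in theme_counts.most_common():
    print(f"{theme}: {n} segments from {len(theme_sources[theme])} sources")
```

A theme that recurs across several independent sources is usually a stronger candidate for a reported finding than one that appears in a single source.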

Examples of Case Study

Here are some examples of case study research:

- The Hawthorne Studies: Conducted between 1924 and 1932, the Hawthorne Studies were a series of studies conducted by Elton Mayo and his colleagues to examine the impact of the work environment on employee productivity. The studies were conducted at the Hawthorne Works plant of the Western Electric Company in Cicero, near Chicago, and included interviews, observations, and experiments.

- The Stanford Prison Experiment: Conducted in 1971, the Stanford Prison Experiment was a case study conducted by Philip Zimbardo to examine the psychological effects of power and authority. The study involved simulating a prison environment and assigning participants to the role of guards or prisoners. The study was controversial due to the ethical issues it raised.

- The Challenger Disaster: The Space Shuttle Challenger explosion in 1986 has been studied as a case to examine its causes. Such studies have included interviews, observations, and analysis of data to identify the technical, organizational, and cultural factors that contributed to the disaster.

- The Enron Scandal: The Enron Corporation’s bankruptcy in 2001 has been studied as a case to examine its causes. Such studies have included interviews, analysis of financial data, and review of documents to identify the accounting practices, corporate culture, and ethical issues that led to the company’s downfall.

- The Fukushima Nuclear Disaster: The nuclear accident at the Fukushima Daiichi Nuclear Power Plant in Japan in 2011 has been studied as a case to examine its causes. Such studies have included interviews, analysis of data, and review of documents to identify the technical, organizational, and cultural factors that contributed to the disaster.

Application of Case Study

Case studies have a wide range of applications across various fields and industries. Here are some examples:

Business and Management

Case studies are widely used in business and management to examine real-life situations and develop problem-solving skills. Case studies can help students and professionals to develop a deep understanding of business concepts, theories, and best practices.

Healthcare

Case studies are used in healthcare to examine patient care, treatment options, and outcomes. Case studies can help healthcare professionals to develop critical thinking skills, diagnose complex medical conditions, and develop effective treatment plans.

Education

Case studies are used in education to examine teaching and learning practices. Case studies can help educators to develop effective teaching strategies, evaluate student progress, and identify areas for improvement.

Social Sciences

Case studies are widely used in social sciences to examine human behavior, social phenomena, and cultural practices. Case studies can help researchers to develop theories, test hypotheses, and gain insights into complex social issues.

Law and Ethics

Case studies are used in law and ethics to examine legal and ethical dilemmas. Case studies can help lawyers, policymakers, and ethical professionals to develop critical thinking skills, analyze complex cases, and make informed decisions.

Purpose of Case Study

The purpose of a case study is to provide a detailed analysis of a specific phenomenon, issue, or problem in its real-life context. A case study is a qualitative research method that involves the in-depth exploration and analysis of a particular case, which can be an individual, group, organization, event, or community.

The primary purpose of a case study is to generate a comprehensive and nuanced understanding of the case, including its history, context, and dynamics. Case studies can help researchers to identify and examine the underlying factors, processes, and mechanisms that contribute to the case and its outcomes. This can help to develop a more accurate and detailed understanding of the case, which can inform future research, practice, or policy.

Case studies can also serve other purposes, including:

- Illustrating a theory or concept: Case studies can be used to illustrate and explain theoretical concepts and frameworks, providing concrete examples of how they can be applied in real-life situations.

- Developing hypotheses: Case studies can help to generate hypotheses about the causal relationships between different factors and outcomes, which can be tested through further research.

- Providing insight into complex issues: Case studies can provide insights into complex and multifaceted issues, which may be difficult to understand through other research methods.

- Informing practice or policy: Case studies can be used to inform practice or policy by identifying best practices, lessons learned, or areas for improvement.

Advantages of Case Study Research

There are several advantages of case study research, including:

- In-depth exploration: Case study research allows for a detailed exploration and analysis of a specific phenomenon, issue, or problem in its real-life context. This can provide a comprehensive understanding of the case and its dynamics, which may not be possible through other research methods.

- Rich data: Case study research can generate rich and detailed data, including qualitative data such as interviews, observations, and documents. This can provide a nuanced understanding of the case and its complexity.

- Holistic perspective: Case study research allows for a holistic perspective of the case, taking into account the various factors, processes, and mechanisms that contribute to the case and its outcomes. This can help to develop a more accurate and comprehensive understanding of the case.

- Theory development: Case study research can help to develop and refine theories and concepts by providing empirical evidence and concrete examples of how they can be applied in real-life situations.

- Practical application: Case study research can inform practice or policy by identifying best practices, lessons learned, or areas for improvement.

- Contextualization: Case study research takes into account the specific context in which the case is situated, which can help to understand how the case is influenced by the social, cultural, and historical factors of its environment.

Limitations of Case Study Research

There are several limitations of case study research, including:

- Limited generalizability: Case studies are typically focused on a single case or a small number of cases, which limits the generalizability of the findings. The unique characteristics of the case may not be applicable to other contexts or populations, which may limit the external validity of the research.

- Biased sampling: Case studies may rely on purposive or convenience sampling, which can introduce bias into the sample selection process. This may limit the representativeness of the sample and the generalizability of the findings.

- Subjectivity: Case studies rely on the interpretation of the researcher, which can introduce subjectivity into the analysis. The researcher’s own biases, assumptions, and perspectives may influence the findings, which may limit the objectivity of the research.

- Limited control: Case studies are typically conducted in naturalistic settings, which limits the control that the researcher has over the environment and the variables being studied. This may limit the ability to establish causal relationships between variables.

- Time-consuming: Case studies can be time-consuming to conduct, as they typically involve a detailed exploration and analysis of a specific case. This may limit the feasibility of conducting multiple case studies or conducting case studies in a timely manner.

- Resource-intensive: Case studies may require significant resources, including time, funding, and expertise. This may limit the ability of researchers to conduct case studies in resource-constrained settings.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Case Study Research Design

The case study research design has evolved over the past few years as a useful tool for investigating trends and specific situations in many scientific disciplines.

The case study has been used especially in social science, psychology, anthropology and ecology.

This method of study is especially useful for trying to test theoretical models by using them in real world situations. For example, if an anthropologist were to live amongst a remote tribe, whilst their observations might produce no quantitative data, they are still useful to science.

What is a Case Study?

Basically, a case study is an in-depth study of a particular situation rather than a sweeping statistical survey. It is a method used to narrow down a very broad field of research into one easily researchable topic.

Whilst it will not answer a question completely, it will give some indications and allow further elaboration and hypothesis creation on a subject.

The case study research design is also useful for testing whether scientific theories and models actually work in the real world. You may come up with a great computer model for describing how the ecosystem of a rock pool works, but it is only by trying it out on a real-life pool that you can see if it is a realistic simulation.

Psychologists, anthropologists and social scientists have regarded case studies as a valid method of research for many years. Scientists are sometimes guilty of becoming bogged down in the general picture, and it is sometimes important to understand specific cases and ensure a more holistic approach to research.

H.M.: An example of a study using the case study research design.

The Argument for and Against the Case Study Research Design

Some argue that because a case study covers such a narrow field, its results cannot be extrapolated to fit an entire question and show only one narrow example. On the other hand, it is argued that a case study provides more realistic responses than a purely statistical survey.

The truth probably lies between the two and it is probably best to try and synergize the two approaches. It is valid to conduct case studies but they should be tied in with more general statistical processes.

For example, a statistical survey might show how much time people spend talking on mobile phones, but it is case studies of a narrow group that will determine why this is so.

The other main thing to remember during case studies is their flexibility. Whilst a pure scientist is trying to prove or disprove a hypothesis, a case study might introduce new and unexpected results during its course, and lead to research taking new directions.

The argument between case study and statistical method also appears to be one of scale. Whilst many 'physical' scientists avoid case studies, for psychology, anthropology and ecology they are an essential tool. It is important to realize that a case study cannot be generalized to fit a whole population or ecosystem.

Finally, one peripheral point is that, when informing others of your results, case studies make more interesting topics than purely statistical surveys, something that has been realized by teachers and magazine editors for many years. The general public has little interest in pages of statistical calculations but some well placed case studies can have a strong impact.

How to Design and Conduct a Case Study

The advantage of the case study research design is that you can focus on specific and interesting cases. This may be an attempt to test a theory with a typical case or it can be a specific topic that is of interest. Research should be thorough and note taking should be meticulous and systematic.

The first foundation of the case study is the subject and relevance. In a case study, you are deliberately trying to isolate a small study group, one individual case or one particular population.

For example, statistical analysis may have shown that birthrates in African countries are increasing. A case study on one or two specific countries becomes a powerful and focused tool for determining the social and economic pressures driving this.

In the design of a case study, it is important to plan and design how you are going to address the study and make sure that all collected data is relevant. Unlike a scientific report, there is no strict set of rules so the most important part is making sure that the study is focused and concise; otherwise you will end up having to wade through a lot of irrelevant information.

It is best if you make yourself a short list of 4 or 5 bullet points that you are going to try and address during the study. If you make sure that all research refers back to these then you will not be far wrong.

With a case study, even more than a questionnaire or survey, it is important to be passive in your research. You are much more of an observer than an experimenter and you must remember that, even in a multi-subject case, each case must be treated individually before cross-case conclusions can be drawn.

How to Analyze the Results

Analyzing results for a case study tends to be more opinion-based than analysis by statistical methods. The usual idea is to collate your data into a manageable form and construct a narrative around it.

Use examples in your narrative whilst keeping things concise and interesting. It is useful to show some numerical data but remember that you are only trying to judge trends and not analyze every last piece of data. Constantly refer back to your bullet points so that you do not lose focus.

It is always a good idea to assume that a person reading your research may not possess a lot of knowledge of the subject so try to write accordingly.

In addition, unlike a scientific study which deals with facts, a case study is based on opinion and is very much designed to provoke reasoned debate. There really is no right or wrong answer in a case study.

Martyn Shuttleworth (Apr 1, 2008). Case Study Research Design. Retrieved May 01, 2024 from Explorable.com: https://explorable.com/case-study-research-design

You Are Allowed To Copy The Text

The text in this article is licensed under the Creative Commons-License Attribution 4.0 International (CC BY 4.0) .

Writing a Case Study

What is a case study?

A case study is:

- An in-depth research design that primarily uses a qualitative methodology but sometimes includes quantitative methodology.

- Used to examine an identifiable problem confirmed through research.

- Used to investigate an individual, group of people, organization, or event.

- Used mostly to answer "how" and "why" questions.

What are the different types of case studies?

Note: These are the primary case studies. As you continue to research and learn about case studies you will begin to find a robust list of different types.

Who are your case study participants?

What is triangulation?

Validity and credibility are an essential part of the case study. Therefore, the researcher should include triangulation to ensure trustworthiness while accurately reflecting what the researcher seeks to investigate.
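One common form is data-source triangulation, in which a finding is checked against more than one kind of evidence. The minimal Python sketch below assumes that interpretation. The findings, the source types, the two-source threshold, and the `triangulated` helper are hypothetical illustrations, not a prescribed procedure.

```python
# Hypothetical evidence log: each finding lists the kinds of data that support it.
evidence = {
    "Staff feel under-resourced": ["interview", "observation", "document"],
    "Training is inconsistent": ["interview", "interview"],
    "Turnover rose after the merger": ["document", "survey"],
}


def triangulated(evidence_log, min_source_types=2):
    """Map each finding to True if it is backed by enough distinct source types."""
    return {
        finding: len(set(source_types)) >= min_source_types
        for finding, source_types in evidence_log.items()
    }


status = triangulated(evidence)
for finding, ok in status.items():
    label = "corroborated" if ok else "single source type"
    print(f"{label}: {finding}")
```

A finding supported by only one type of source is not necessarily wrong, but it is a candidate for further data collection before it is reported.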

How to write a Case Study?

When developing a case study, there are different ways you could present the information, but remember to include the five parts for your case study.

- Last Updated: Apr 29, 2024 1:16 PM

- URL: https://resources.nu.edu/researchtools

Case Study | Definition, Examples & Methods

Published on 5 May 2022 by Shona McCombes. Revised on 30 January 2023.

A case study is a detailed study of a specific subject, such as a person, group, place, event, organisation, or phenomenon. Case studies are commonly used in social, educational, clinical, and business research.

A case study research design usually involves qualitative methods, but quantitative methods are sometimes also used. Case studies are good for describing, comparing, evaluating, and understanding different aspects of a research problem.

Table of contents

- When to do a case study

- Step 1: Select a case

- Step 2: Build a theoretical framework

- Step 3: Collect your data

- Step 4: Describe and analyse the case

When to do a case study

A case study is an appropriate research design when you want to gain concrete, contextual, in-depth knowledge about a specific real-world subject. It allows you to explore the key characteristics, meanings, and implications of the case.

Case studies are often a good choice in a thesis or dissertation. They keep your project focused and manageable when you don’t have the time or resources to do large-scale research.

You might use just one complex case study where you explore a single subject in depth, or conduct multiple case studies to compare and illuminate different aspects of your research problem.

Step 1: Select a case

Once you have developed your problem statement and research questions, you should be ready to choose the specific case that you want to focus on. A good case study should have the potential to:

- Provide new or unexpected insights into the subject

- Challenge or complicate existing assumptions and theories

- Propose practical courses of action to resolve a problem

- Open up new directions for future research

Unlike quantitative or experimental research, a strong case study does not require a random or representative sample. In fact, case studies often deliberately focus on unusual, neglected, or outlying cases which may shed new light on the research problem.

If you find yourself aiming to simultaneously investigate and solve an issue, consider conducting action research. As its name suggests, action research conducts research and takes action at the same time, and is highly iterative and flexible.

However, you can also choose a more common or representative case to exemplify a particular category, experience, or phenomenon.

Step 2: Build a theoretical framework

While case studies focus more on concrete details than general theories, they should usually have some connection with theory in the field. This way the case study is not just an isolated description, but is integrated into existing knowledge about the topic. It might aim to:

- Exemplify a theory by showing how it explains the case under investigation

- Expand on a theory by uncovering new concepts and ideas that need to be incorporated

- Challenge a theory by exploring an outlier case that doesn’t fit with established assumptions

To ensure that your analysis of the case has a solid academic grounding, you should conduct a literature review of sources related to the topic and develop a theoretical framework. This means identifying key concepts and theories to guide your analysis and interpretation.

Step 3: Collect your data

There are many different research methods you can use to collect data on your subject. Case studies tend to focus on qualitative data using methods such as interviews, observations, and analysis of primary and secondary sources (e.g., newspaper articles, photographs, official records). Sometimes a case study will also collect quantitative data.

The aim is to gain as thorough an understanding as possible of the case and its context.

Step 4: Describe and analyse the case

In writing up the case study, you need to bring together all the relevant aspects to give as complete a picture as possible of the subject.

How you report your findings depends on the type of research you are doing. Some case studies are structured like a standard scientific paper or thesis, with separate sections or chapters for the methods, results, and discussion.

Others are written in a more narrative style, aiming to explore the case from various angles and analyse its meanings and implications (for example, by using textual analysis or discourse analysis).

In all cases, though, make sure to give contextual details about the case, connect it back to the literature and theory, and discuss how it fits into wider patterns or debates.


McCombes, S. (2023, January 30). Case Study | Definition, Examples & Methods. Scribbr. Retrieved 29 April 2024, from https://www.scribbr.co.uk/research-methods/case-studies/


Shona McCombes



- Open access

- Published: 01 May 2024

A critical assessment of using ChatGPT for extracting structured data from clinical notes

- Jingwei Huang ORCID: orcid.org/0000-0003-2155-6107 1 ,

- Donghan M. Yang 1 ,

- Ruichen Rong 1 ,

- Kuroush Nezafati ORCID: orcid.org/0000-0002-6785-7362 1 ,

- Colin Treager 1 ,

- Zhikai Chi ORCID: orcid.org/0000-0002-3601-3351 2 ,

- Shidan Wang ORCID: orcid.org/0000-0002-0001-3261 1 ,

- Xian Cheng 1 ,

- Yujia Guo 1 ,

- Laura J. Klesse 3 ,

- Guanghua Xiao 1 ,

- Eric D. Peterson 4 ,

- Xiaowei Zhan 1 &

- Yang Xie ORCID: orcid.org/0000-0001-9456-1762 1

npj Digital Medicine volume 7, Article number: 106 (2024)


- Non-small-cell lung cancer

Existing natural language processing (NLP) methods to convert free-text clinical notes into structured data often require problem-specific annotations and model training. This study aims to evaluate ChatGPT’s capacity to extract information from free-text medical notes efficiently and comprehensively. We developed a large language model (LLM)-based workflow, using a systems engineering methodology and a spiral “prompt engineering” process, and leveraging OpenAI’s API for batch querying ChatGPT. We evaluated the effectiveness of this method using a dataset of more than 1000 lung cancer pathology reports and a dataset of 191 pediatric osteosarcoma pathology reports, comparing the ChatGPT-3.5 (gpt-3.5-turbo-16k) outputs with expert-curated structured data. ChatGPT-3.5 extracted pathological classifications with an overall accuracy of 89% in the lung cancer dataset, outperforming two traditional NLP methods. The performance is influenced by the design of the instructive prompt. Our case analysis shows that most misclassifications were due to a lack of highly specialized pathology terminology and erroneous interpretation of TNM staging rules. Reproducibility testing shows relatively stable performance of ChatGPT-3.5 over time. In the pediatric osteosarcoma dataset, ChatGPT-3.5 classified grades and margin status with accuracies of 98.6% and 100%, respectively. Our study shows the feasibility of using ChatGPT to process large volumes of clinical notes for structured information extraction without requiring extensive task-specific human annotation and model training. The results underscore the potential role of LLMs in transforming unstructured healthcare data into structured formats, thereby supporting research and aiding clinical decision-making.


Introduction

Large Language Models (LLMs) 1 , 2 , 3 , 4 , 5 , 6 , such as Generative Pre-trained Transformer (GPT) models represented by ChatGPT, are being utilized for diverse applications across various sectors. In the healthcare industry, early applications of LLMs are being used to facilitate patient-clinician communication 7 , 8 . To date, few studies have examined the potential of LLMs in reading and interpreting clinical notes, turning unstructured texts into structured, analyzable data.

Traditionally, the automated extraction of structured data elements from medical notes has relied on medical natural language processing (NLP) using rule-based or machine-learning approaches or a combination of both 9 , 10 . Machine learning methods 11 , 12 , 13 , 14 , particularly deep learning, typically employ neural networks and the first generation of transformer-based large language models (e.g., BERT). Medical domain knowledge needs to be integrated into model designs to enhance performance. However, a significant obstacle to developing these traditional medical NLP algorithms is the limited existence of human-annotated datasets and the costs associated with new human annotation 15 . Despite meticulous ground-truth labeling, the relatively small corpus sizes often result in models with poor generalizability or make evaluations of generalizability impossible. For decades, conventional artificial intelligence (AI) systems (symbolic and neural networks) have suffered from a lack of general knowledge and commonsense reasoning. LLMs, like GPT, offer a promising alternative, potentially using commonsense reasoning and broad general knowledge to facilitate language processing.

ChatGPT is the application interface of the GPT model family. This study explores an approach to using ChatGPT to extract structured data elements from unstructured clinical notes. In this study, we selected lung cancer pathology reports as the corpus for extracting detailed diagnosis information for lung cancer. To accomplish this, we developed and improved a prompt engineering process. We then evaluated the effectiveness of this method by comparing the ChatGPT output with expert-curated structured data and used case studies to provide insights into how ChatGPT read and interpreted notes and why it made mistakes in some cases.

Data and endpoints

The primary objective of this study was to develop an algorithm and assess the capabilities of ChatGPT in processing and interpreting a large volume of free-text clinical notes. To evaluate this, we utilized unstructured lung cancer pathology notes, which provide diagnostic information essential for developing treatment plans and play vital roles in clinical and translational research. We accessed a total of 1026 lung cancer pathology reports from two web portals: the Cancer Digital Slide Archive (CDSA data) ( https://cancer.digitalslidearchive.org/ ) and The Cancer Genome Atlas (TCGA data) ( https://cBioPortal.org ). These platforms serve as public data repositories for de-identified patient information, facilitating cancer research. The CDSA dataset was utilized as the “training” data for prompt development, while the TCGA dataset, after removing the overlapping cases with CDSA, served as the test data for evaluating the ChatGPT model performance.

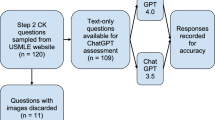

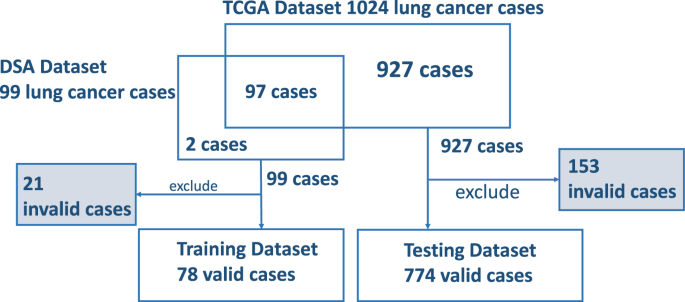

Of the 99 pathology reports downloaded from CDSA for the training data, we excluded 21 invalid reports due to near-empty content, poor scanning quality, or missing report forms. The remaining 78 valid pathology reports were used as the training data to optimize the prompt. To evaluate the model performance, 1024 pathology reports were downloaded from cBioPortal. Among them, 97 overlapped with the training data and were excluded from the evaluation. We further excluded 153 invalid reports due to near-empty content, poor scanning quality, or missing report forms. The invalid reports were preserved to evaluate ChatGPT’s handling of irregular inputs separately, and were not included in the testing data for accuracy performance assessment. As a result, 774 valid pathology reports were included as the testing data for performance evaluation. These valid reports still contain typos, missing words, random characters, incomplete contents, and other quality issues that challenge human reading. The numbers of reports used at each step of the process are detailed in Fig. 1.

Exclusions are accounted for due to reasons such as empty reports, poor scanning quality, and other factors, including reports of stage IV or unknown conditions.
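The report counts described above can be checked with a few lines of arithmetic. This is only a bookkeeping sketch: the numbers are the ones quoted in the text, and the variable names are illustrative rather than taken from the study's code.

```python
# Counts quoted in the text; variable names are illustrative assumptions.
cdsa_downloaded = 99
cdsa_invalid = 21          # near-empty, poorly scanned, or missing report forms
training_reports = cdsa_downloaded - cdsa_invalid

tcga_downloaded = 1024
tcga_overlap_with_training = 97
tcga_invalid = 153         # kept aside for the separate irregular-input analysis
testing_reports = tcga_downloaded - tcga_overlap_with_training - tcga_invalid

print(training_reports, testing_reports)
```

Both results agree with the 78 training and 774 testing reports stated above.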

The specific task of this study was to identify tumor staging and histology types, which are important for clinical care and research, from pathology reports. The TNM staging system 16, outlining the primary tumor features (T), regional lymph node involvement (N), and distant metastases (M), is commonly used to define the disease extent, assign prognosis, and guide lung cancer treatment. The American Joint Committee on Cancer (AJCC) has periodically released various editions 16 of TNM classification/staging for lung cancers based on recommendations from extensive database analyses. Following the AJCC guideline, individual pathologic T, N, and M stage components can be summarized into an overall pathologic staging score of Stage I, II, III, or IV. For this project, we instructed ChatGPT to use the AJCC 7th edition Cancer Staging Manual 17 as the reference for staging lung cancer cases. As the lung cancer cases in our dataset are predominantly non-metastatic, the pathologic metastasis (pM) stage was not extracted. The data elements we chose to extract and evaluate for this study are pathologic primary tumor (pT) and pathologic lymph node (pN) stage components, overall pathologic tumor stage, and histology type.

Overall Performance

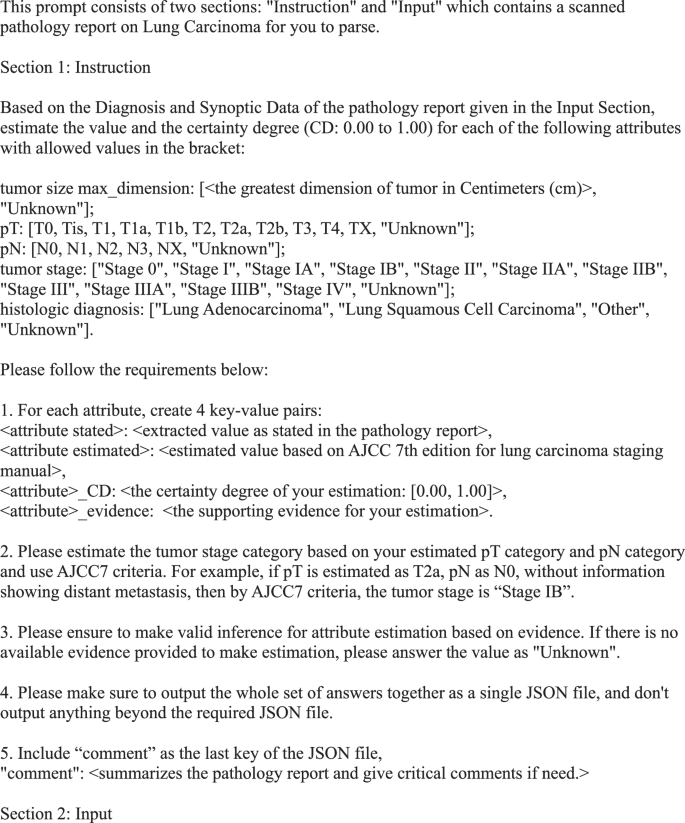

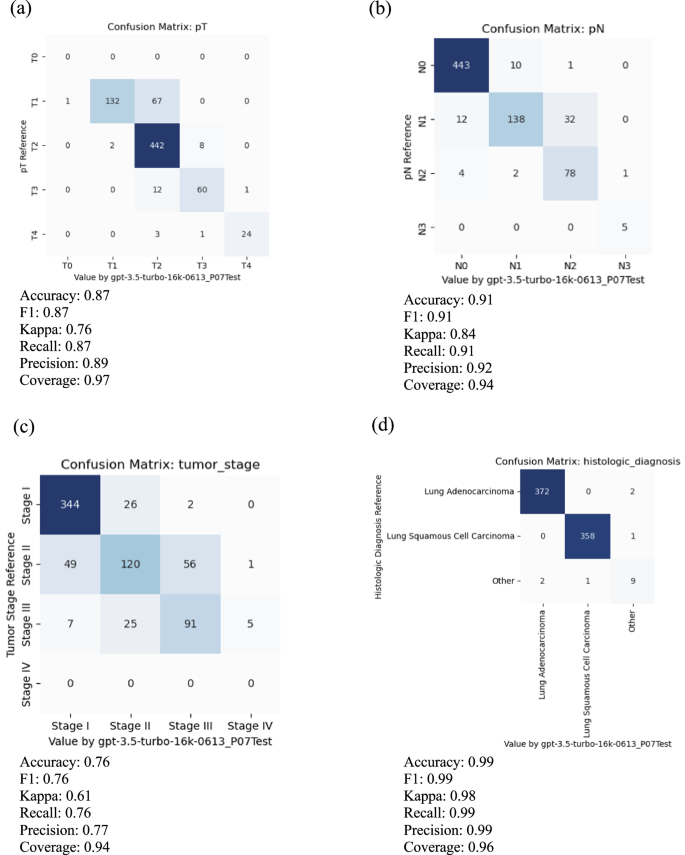

Using the training data in the CDSA dataset (n = 78), we experimented with and improved prompts iteratively; the final prompt is presented in Fig. 2. The overall performance of ChatGPT (gpt-3.5-turbo-16k model) was evaluated on the TCGA dataset (n = 774), and the results are summarized in Table 1. The accuracies for primary tumor features (pT), regional lymph node involvement (pN), overall tumor stage, and histological diagnosis are 0.87, 0.91, 0.76, and 0.99, respectively. The average accuracy across all attributes is 0.89. The coverage rates for pT, pN, overall stage, and histological diagnosis are 0.97, 0.94, 0.94, and 0.96, respectively. Further details of the accuracy evaluation, F1, Kappa, recall, and precision for each attribute are summarized as confusion matrices in Fig. 3.

Final prompt for information extraction and estimation from pathology reports.

For meaningful evaluation, cases with uncertain values such as “Not Available”, “Not Specified”, “Cannot be determined”, or “Unknown” in the reference and prediction have been removed. a Primary tumor features (pT), b regional lymph node involvement (pN), c overall tumor stage, and d histological diagnosis.
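A minimal sketch of how coverage and accuracy for one attribute might be computed is shown here. The exact definitions used in the study are not spelled out in this section, so the treatment of uncertain values, the function names, and the toy data are all assumptions made for illustration.

```python
# Values treated as "no answer"; the list follows the caption above (assumed to be exhaustive).
UNCERTAIN = {"", "not available", "not specified", "cannot be determined", "unknown"}


def is_uncertain(value):
    """True when a value carries no usable classification."""
    return value is None or value.strip().lower() in UNCERTAIN


def coverage_and_accuracy(references, predictions):
    """Return (coverage, accuracy) for one attribute.

    coverage: share of cases with a known reference for which the model
              returned a definite value.
    accuracy: share of exact matches among those answered cases.
    """
    pairs = [(r, p) for r, p in zip(references, predictions) if not is_uncertain(r)]
    answered = [(r, p) for r, p in pairs if not is_uncertain(p)]
    coverage = len(answered) / len(pairs) if pairs else 0.0
    correct = sum(1 for r, p in answered if r.strip().lower() == p.strip().lower())
    accuracy = correct / len(answered) if answered else 0.0
    return coverage, accuracy


# Toy data, not taken from the study.
refs = ["T1a", "T2a", "T3", "Unknown", "T2b"]
preds = ["T1a", "T2", "T3", "T1", "Unknown"]
coverage, accuracy = coverage_and_accuracy(refs, preds)
print(coverage, accuracy)
```

Separating the two numbers makes it visible when a model looks accurate only because it declined to answer the hard cases.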

Inference and Interpretation

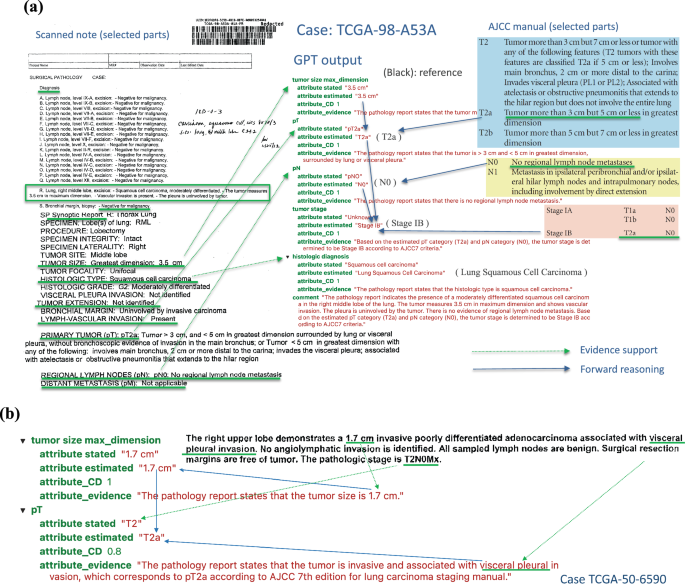

To understand how ChatGPT reads and makes inferences from pathology reports, we demonstrated a case study using a typical pathology report in this cohort (TCGA-98-A53A) in Fig. 4a . The left panel shows part of the original pathology report, and the right panel shows the ChatGPT output with estimated pT, pN, overall stage, and histology diagnosis. For each estimate, ChatGPT gives the confidence level and the corresponding evidence it used for the estimation. In this case, ChatGPT correctly extracted information related to tumor size, tumor features, lymph node involvement, and histology information and used the AJCC staging guidelines to estimate tumor stage correctly. In addition, the confidence level, evidence interpretation, and case summary align well with the report and pathologists’ evaluations. For example, the evidence for the pT category was described as “The pathology report states that the tumor is > 3 cm and < 5 cm in greatest dimension, surrounded by lung or visceral pleura.” The evidence for tumor stage was described as “Based on the estimated pT category (T2a) and pN category (N0), the tumor stage is determined to be Stage IB according to AJCC7 criteria.” It shows that ChatGPT extracted relevant information from the note and correctly inferred the pT category based on the AJCC guideline (Supplementary Fig. 1 ) and the extracted information.

a TCGA-98-A53A. An example of a scanned pathological report (left panel) and ChatGPT output and interpretation (right panel). All estimations and support evidence are consistent with the pathologist’s evaluations. b The GPT model correctly inferred pT as T2a based on the tumor’s size and involvement according to AJCC guidelines.

In another more complex case, TCGA-50-6590 (Fig. 4b ), ChatGPT correctly inferred pT as T2a based on both the tumor’s size and location according to AJCC guidelines. Case TCGA-44-2656 demonstrates a more challenging scenario (Supplementary Fig. 2 ), where the report only contains some factual data without specifying pT, pN, and tumor stage. However, ChatGPT was able to infer the correct classifications based on the reported facts and provide proper supporting evidence.

Error analysis

To understand the types and potential reasons for misclassifications, we performed a detailed error analysis by looking into individual attributes and cases where ChatGPT made mistakes, the results of which are summarized below.

Primary tumor feature (pT) classification

In total, 768 cases with valid reports and reference values in the testing data were used to evaluate the classification performance of pT. Among them, 15 cases were reported with unknown or empty output by ChatGPT, making the coverage rate 0.97. For the remaining 753 cases, 12.6% of pT was misclassified. Among these misclassification cases, the majority were T1 misclassified as T2 (67 out of 753 or 8.9%) or T3 misclassified as T2 (12 out of 753, or 1.6%).

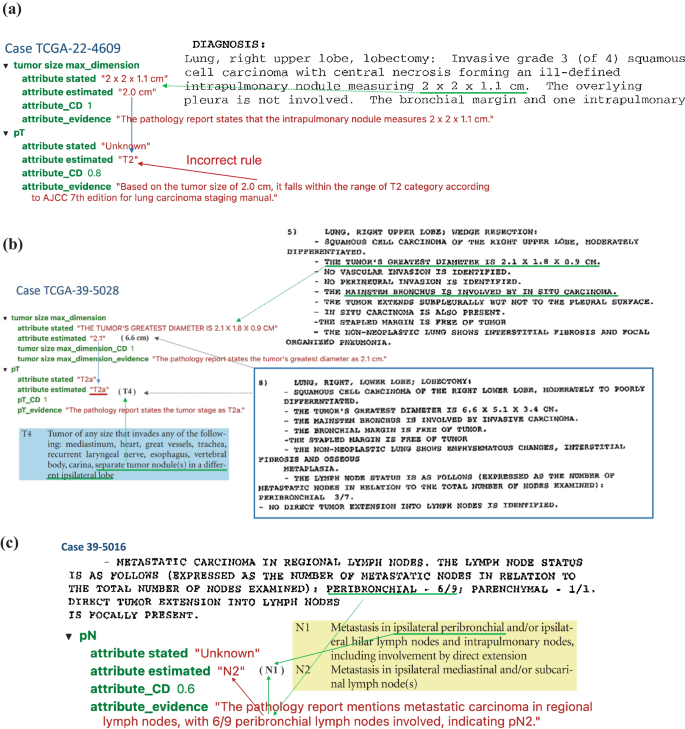

In most cases, ChatGPT extracted the correct tumor size information but used an incorrect rule to distinguish pT categories. For example, in the case TCGA-22-4609 (Fig. 5a), ChatGPT stated, “Based on the tumor size of 2.0 cm, it falls within the range of T2 category according to AJCC 7th edition for lung carcinoma staging manual.” However, according to the AJCC 7th edition staging guidelines for lung cancer, if the tumor is more than 2 cm but less than 3 cm in greatest dimension and does not invade nearby structures, pT should be classified as T1b. Therefore, ChatGPT correctly extracted the maximum tumor dimension of 2 cm but incorrectly interpreted this as meeting the criteria for classification as T2. Similarly, for case TCGA-85-A4JB, ChatGPT incorrectly claimed, “Based on the tumor size of 10 cm, the estimated pT category is T2 according to AJCC 7th edition for lung carcinoma staging manual.” According to the AJCC 7th edition staging guidelines, a tumor more than 7 cm in greatest dimension should be classified as T3.

a Case TCGA-22-4609 illustrates a typical case where the GPT model applies a false rule that contradicts the AJCC guideline. b Case TCGA-39-5028 shows a complex case with two tumors, of which the GPT model captured only one. c Case TCGA-39-5016 shows a case where the GPT model made a mistake because it confused domain terminology.
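The size-based part of the pT rule that ChatGPT misapplied can be written as a short lookup. This is a sketch under stated assumptions: the 2, 3, and 7 cm cut-offs come from the text, the 5 cm split between T2a and T2b and the handling of exact boundary values are assumptions, and invasion, location, and multiple nodules (which can raise the category) are deliberately ignored. The function name is hypothetical.

```python
def pt_from_size_cm(size_cm):
    """Size-only pT category (AJCC 7th edition style cut-offs, simplified).

    Ignores invasion of nearby structures, tumor location, and separate
    nodules, all of which can change the real category.
    """
    if size_cm <= 0:
        raise ValueError("tumor size must be positive")
    if size_cm <= 2:
        return "T1a"
    if size_cm <= 3:
        return "T1b"
    if size_cm <= 5:
        return "T2a"
    if size_cm <= 7:   # assumed T2a/T2b split, not stated in the text
        return "T2b"
    return "T3"


for size in (2.5, 4.0, 10.0):
    print(size, pt_from_size_cm(size))
```

Under these cut-offs a 10 cm tumor maps to T3, not the T2 that ChatGPT reported for case TCGA-85-A4JB.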

Another challenging situation arose when multiple tumor nodules were identified within the lung. In the case of TCGA-39-5028 (Fig. 5b), two separate tumor nodules were identified: one in the right upper lobe measuring 2.1 cm in greatest dimension and one in the right lower lobe measuring 6.6 cm in greatest dimension. According to the AJCC 7th edition guidelines, the presence of separate tumor nodules in a different ipsilateral lobe results in a classification of T4. However, ChatGPT classified this case as T2a, stating, “The pathology report states the tumor’s greatest diameter as 2.1 cm”. This classification would be appropriate if the right upper lobe nodule were a single isolated tumor. However, ChatGPT failed to consider the presence of the second, larger nodule in the right lower lobe when determining the pT classification.

Regional lymph node involvement (pN)

The classification performance of pN was evaluated using 753 cases with valid reports and reference values in the testing data. Among them, 27 cases were reported with unknown or empty output by ChatGPT, making the coverage rate 0.94. For the remaining 726 cases, 8.5% of pN was misclassified. Most of these misclassification cases were N1 misclassified as N2 (32 cases). The AJCC 7th edition staging guidelines use the anatomic locations of positive lymph nodes to determine N1 vs. N2. However, most of the misclassification cases were caused by ChatGPT interpreting the number of positive nodes rather than the locations of the positive nodes. One such example is the case TCGA-85-6798. The report states, “Lymph nodes: 2/16 positive for metastasis (Hilar 2/16)”. Positive hilar lymph nodes correspond to N1 classification according to AJCC 7th edition guidelines. However, ChatGPT misclassified this case as N2, stating, “The pathology report states that 2 out of 16 lymph nodes are positive for metastasis. Based on this information, the pN category can be estimated as N2 according to AJCC 7th edition for lung carcinoma staging manual.” This interpretation is incorrect, as the number of positive lymph nodes is not part of the criteria used to determine pN status according to AJCC 7th edition guidelines. The model made incorrect N2 predictions in 22 cases due to similar false assertions.

In some cases, the ChatGPT model made classification mistakes by misunderstanding the terminology for node locations. Figure 5c shows a case (TCGA-39-5016) where the ChatGPT model recognized that “6/9 peribronchial lymph nodes involved,” which corresponds to classification as N1, but misclassified the case as N2. By AJCC 7th edition guidelines, N2 is defined as “Metastasis in ipsilateral mediastinal and/or subcarinal lymph node(s)”. The ChatGPT model did not fully understand that terminology and made misclassifications.
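The point that pN depends on where the positive nodes are, not on how many there are, can be illustrated with a small location-based lookup. This is a simplified sketch: the keyword lists cover only the stations named in the text, N3 and laterality are ignored, and the function and constant names are hypothetical.

```python
# Station keywords taken from the examples in the text; not a complete list.
N1_KEYWORDS = ("hilar", "peribronchial")
N2_KEYWORDS = ("mediastinal", "subcarinal")


def pn_from_positive_stations(stations):
    """Estimate pN from the anatomic locations of positive lymph nodes.

    The number of positive nodes is deliberately not an input.
    N3 disease and ipsilateral/contralateral distinctions are not handled.
    """
    names = [s.lower() for s in stations]
    if not names:
        return "N0"
    if any(key in name for name in names for key in N2_KEYWORDS):
        return "N2"
    if any(key in name for name in names for key in N1_KEYWORDS):
        return "N1"
    return "Unknown"


print(pn_from_positive_stations(["Hilar"]))          # TCGA-85-6798 style input
print(pn_from_positive_stations(["peribronchial"]))  # TCGA-39-5016 style input
```

Both example inputs resolve to N1 regardless of whether 2 of 16 or 6 of 9 nodes are positive.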

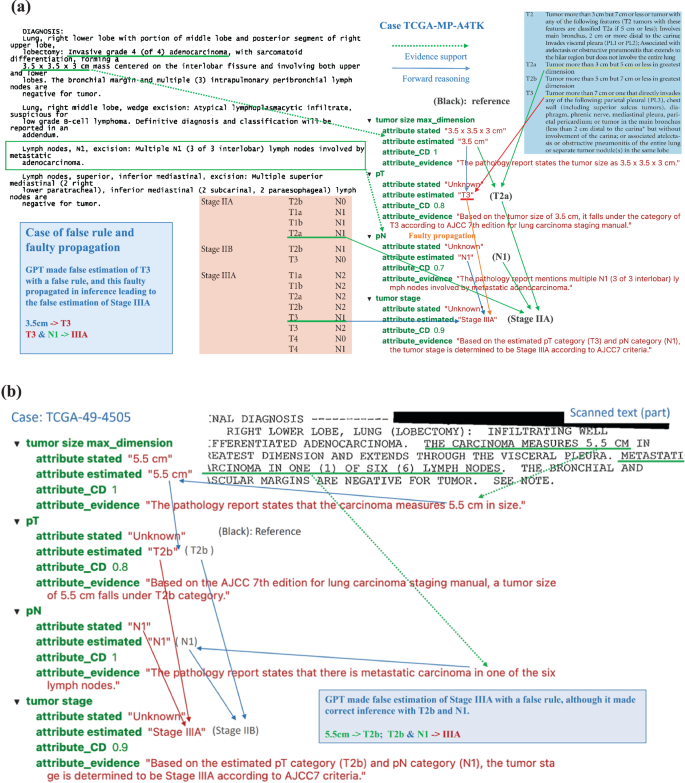

Pathology tumor stage

The overall tumor stage classification performance was evaluated using 744 cases with valid reports and reference values of stage I, II, or III in the testing data. Among them, 18 cases were reported as unknown or empty output by ChatGPT, making the coverage rate 0.94. For the remaining 726 cases, 23.6% of the overall stage was misclassified. Since the overall stage depends on the individual pT and pN stages, the mistakes could come from misclassification of pT or pN (error propagation) or from applying incorrect inference rules to determine the overall stage from pT and pN (incorrect rules). Looking into the 56 cases where ChatGPT misclassified stage II as stage III, 22 cases were due to error propagation, and 34 were due to incorrect rules. Figure 6a shows an example of error propagation (TCGA-MP-A4TK). ChatGPT misclassified the pT stage as T3 instead of T2a, and this mistake led to an incorrect classification of stage IIIA instead of stage IIA. Figure 6b illustrates a case (TCGA-49-4505) where ChatGPT estimated pT and pN correctly but made a false prediction of the tumor stage by using a false rule. Among the 34 cases affected by incorrect rules, ChatGPT mistakenly inferred the tumor stage as stage III for 26 cases where pT is T3 and pN is N0. For example, for case TCGA-55-7994, ChatGPT provided the evidence as “Based on the estimated pT category (T3) and pN category (N0), the tumor stage is determined to be Stage IIIA according to AJCC7 criteria”. According to AJCC7, tumors with T3 and N0 should be classified as stage IIB. Similarly, error analysis for other tumor stages shows that misclassifications come from both error propagation and applying false rules.

a Case TCGA-MP-A4TK: an example of a typical error in the experiments, where GPT applied a false rule and the error then propagated to the overall stage. b Case TCGA-49-4505: the GPT model made a false estimation of Stage IIIA using a false rule, although it inferred T2b and N1 correctly.
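The distinction between error propagation and incorrect rules can be made mechanical with a small stage table. The sketch below is illustrative only: the table is deliberately partial, two entries are stated in the text, the other two are assumptions chosen to be consistent with case TCGA-MP-A4TK, and all names are hypothetical.

```python
# Partial (pT, pN) -> stage table; not a full AJCC7 staging table.
STAGE_BY_T_AND_N = {
    ("T2a", "N0"): "Stage IB",    # example quoted in the text
    ("T3", "N0"): "Stage IIB",    # stated in the error analysis
    ("T2a", "N1"): "Stage IIA",   # assumption, consistent with TCGA-MP-A4TK
    ("T3", "N1"): "Stage IIIA",   # assumption, consistent with TCGA-MP-A4TK
}


def stage_error_cause(ref_pt, ref_pn, pred_pt, pred_pn, pred_stage):
    """Label a predicted stage as correct, error propagation, or incorrect rule."""
    if (pred_pt, pred_pn) != (ref_pt, ref_pn):
        # The stage was derived from a wrong pT or pN estimate.
        return "error propagation"
    expected = STAGE_BY_T_AND_N.get((pred_pt, pred_pn))
    if expected is None:
        return "not in table"
    return "correct" if pred_stage == expected else "incorrect rule"


# TCGA-55-7994 style: pT and pN are right, the staging rule is wrong.
print(stage_error_cause("T3", "N0", "T3", "N0", "Stage IIIA"))
# TCGA-MP-A4TK style: a wrong pT carries through to the stage.
print(stage_error_cause("T2a", "N1", "T3", "N1", "Stage IIIA"))
```

A deterministic table of this kind could also be applied after extraction, so that the model only needs to supply pT and pN.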

Histological diagnosis

The classification performance of histology diagnosis was evaluated using 762 cases with valid reports and reference values in the testing data. Among them, 17 cases were reported as either unknown or empty output by ChatGPT, making the coverage rate 0.96. For the remaining 745 cases, 6 (<1%) of histology types were misclassified. Among the mistakes that ChatGPT made for histology diagnosis, ChatGPT misclassified 3 of them as “other” type and 3 cases of actual “other” type (neither adenocarcinomas nor squamous cell carcinomas) as 2 adenocarcinomas and 1 squamous cell carcinoma. In TCGA-22-5485, two tumors exist: one squamous cell carcinoma and another adenocarcinoma, which should be classified as the “other” type. However, ChatGPT only identified and extracted information for one tumor. In the case TCGA-33-AASB, which is the “other” type of histology, ChatGPT captured the key information and gave it as evidence: “The pathology report states the histologic diagnosis as infiltrating poorly differentiated non-small cell carcinoma with both squamous and glandular features”. However, it mistakenly estimated this case as “adenocarcinoma”. In another case (TCGA-86-8668) of adenocarcinoma, ChatGPT again captured key information and stated as evidence, “The pathology report states the histologic diagnosis as Bronchiolo-alveolar carcinoma, mucinous” but could not tell that it is a subtype of adenocarcinoma. Both cases reveal that ChatGPT still has limitations in the specific domain knowledge of lung cancer pathology and in correctly understanding its terminology.

Analyzing irregularities

The initial model evaluation and prompt-response review uncovered irregular scenarios: the original pathology reports may be blank, poorly scanned, or simply missing report forms. We reviewed how ChatGPT responded to these anomalies. First, when a report was blank, the prompt contained only the instruction part. ChatGPT failed to recognize this situation in most cases and inappropriately generated a fabricated case. Our experiments showed that, with the temperature set at 0 for blank reports, ChatGPT converged to a consistent, hallucinated response. Second, for nearly blank reports with a few random characters and poorly scanned reports, ChatGPT consistently converged to the same response with increased variance as noise increased. In some cases, ChatGPT responded appropriately to all required attributes but with unknown values for missing information. Last, among the 15 missing report forms in a small dataset, ChatGPT responded “unknown” as expected in only 5 cases, with the remaining 10 still converging to the hallucinated response.
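Because blank or near-blank inputs led to hallucinated cases, one possible safeguard is to screen reports before they are sent to the model. The check below is a hypothetical heuristic, not part of the described workflow; the function name and the word threshold are assumptions that would need tuning on real data.

```python
import re


def looks_blank(report_text, min_words=20):
    """Heuristic: True when the text has too few alphabetic words to be a report.

    min_words is an arbitrary illustrative threshold.
    """
    words = re.findall(r"[A-Za-z]{2,}", report_text or "")
    return len(words) < min_words


sample = "Right upper lobe lobectomy specimen shows squamous cell carcinoma. " * 5
print(looks_blank(""), looks_blank(" . , 3 \n"), looks_blank(sample))
```

Reports flagged this way could be assigned “Unknown” for every attribute without querying the model at all.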

Reproducibility evaluation

Since ChatGPT models (even with the same version) evolve over time, it is important to evaluate the stability and reproducibility of ChatGPT. For this purpose, we conducted experiments with the same model (“gpt-3.5-turbo-0301”), the same data, prompt, and settings (e.g., temperature = 0) twice in early April and the middle of May of 2023. The rate of equivalence between ChatGPT estimations in April and May on key attributes of interest (pT, pN, tumor stage, and histological diagnosis) is 0.913. The mean absolute error between certainty degrees in the two experiments is 0.051. Considering the evolutionary nature of ChatGPT models, we regard an output difference to a certain extent as reasonable and the overall ChatGPT 3.5 model as stable.
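The two reproducibility measures can be sketched as follows. The study does not give its exact formulas in this section, so this assumes the equivalence rate is the share of identical paired answers and the error is the mean absolute difference between paired certainty degrees; the function names and toy values are illustrative.

```python
def equivalence_rate(run_a, run_b):
    """Share of paired answers that are identical across two runs."""
    if len(run_a) != len(run_b) or not run_a:
        raise ValueError("runs must be non-empty and of equal length")
    return sum(a == b for a, b in zip(run_a, run_b)) / len(run_a)


def mean_absolute_error(xs, ys):
    """Mean absolute difference between paired certainty degrees."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(x - y) for x, y in zip(xs, ys)) / len(xs)


# Toy values, not taken from the study.
april = ["T1", "T2", "N0", "Stage IB"]
may = ["T1", "T2", "N1", "Stage IB"]
print(equivalence_rate(april, may))
print(mean_absolute_error([0.9, 0.8], [0.8, 0.8]))
```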

Comparison with other NLP methods

In order to have a clear perspective on how ChatGPT’s performance stands relative to established methods, we conducted a comparative analysis of the results generated by ChatGPT with two established methods: a keyword search algorithm and a deep learning-based Named Entity Recognition (NER) method.

Data selection and annotation

Since the keyword search and NER methods do not support zero-shot learning and require human annotations on the entity level, we carefully annotated our dataset for these traditional NLP methods. We used the same training and testing datasets as in the prompt engineering for ChatGPT. The training dataset underwent meticulous annotation by experienced medical professionals, adhering to the AJCC7 standards. This annotation process involved identifying and highlighting all relevant entities and text spans related to stage, histology, pN, and pT attributes. The detailed annotation process for the 78 cases required a few weeks of full-time work from medical professionals.

Keyword search algorithm using wordpiece tokenizer

For the keyword search algorithm, we employed the WordPiece tokenizer to segment words into subwords. We compiled an annotated entity dictionary from the training dataset. To assess the performance of this method, we calculated span similarities between the extracted spans in the validation and testing datasets and the entries in the dictionary.

Named Entity Recognition (NER) classification algorithm

For the NER classification algorithm, we designed a multi-label span classification model. This model utilized the pre-trained Bio_ClinicalBERT as its backbone. To adapt it for multi-label classification, we introduced an additional linear layer. The model underwent fine-tuning for 1000 epochs using the stochastic gradient descent (SGD) optimizer. The model exhibiting the highest overall F1 score on the validation dataset was selected as the final model for further evaluation in the testing dataset.

Performance evaluation

We evaluated the performance of both the keyword search and NER methods on the testing dataset. We summarized the predicted entities/spans and their corresponding labels. In cases where multiple related entities were identified for a specific category, we selected the most severe entity as the final prediction. Moreover, we inferred the stage information for corpora lacking explicit staging information by aggregating details from pN, pT, and diagnosis, aligning with the AJCC7 protocol. The overall predictions for stage, diagnosis, pN, and pT were compared against the ground truth table to gauge the accuracy and effectiveness of our methods. The results (Supplementary Table S1) show that ChatGPT outperforms the WordPiece tokenizer and the NER classifier. The average accuracies for ChatGPT, the WordPiece tokenizer, and the NER classifier are 0.89, 0.51, and 0.76, respectively.
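The rule of keeping the most severe entity when several are found for one category can be sketched as a ranked lookup. The ordering below is an assumption that reuses the pT values listed elsewhere in the article, and the function name is hypothetical; the study's actual ranking is not given.

```python
# Assumed severity order for pT, from least to most severe.
PT_SEVERITY = ["T0", "Tis", "T1", "T1a", "T1b", "T2", "T2a", "T2b", "T3", "T4"]


def most_severe(candidates, order=PT_SEVERITY):
    """Return the highest-ranked candidate, or 'Unknown' if none is recognized."""
    ranked = [c for c in candidates if c in order]
    return max(ranked, key=order.index) if ranked else "Unknown"


print(most_severe(["T1b", "T3", "T2a"]))
print(most_severe(["n/a"]))
```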

Prompt engineering process and results

Prompt design is a heuristic search process with many elements to consider, thus having a significantly large design space. We conducted many experiments to explore better prompts. Here, we share a few typical prompts and the performance of these prompts in the training data set to demonstrate our prompt engineering process.

Output format

The most straightforward prompt without special design would be: “read the pathology report and answer what are pT, pN, tumor stage, and histological diagnosis”. However, this simple prompt would make ChatGPT produce unstructured answers varying in format, terminology, and granularity across the large number of pathology reports. For example, ChatGPT may output pT as “T2” or “pT2NOMx”, and it outputs histological diagnosis as “Multifocal invasive moderately differentiated non-keratinizing squamous cell carcinoma”. The free-text answers will require a significant human workload to clean and process the output from ChatGPT. To solve this problem, we used a multiple choice answer format to force ChatGPT to pick standardized values for some attributes. For example, for pT, ChatGPT could only provide the following outputs: “T0, Tis, T1, T1a, T1b, T2, T2a, T2b, T3, T4, TX, Unknown”. For the histologic diagnosis, ChatGPT could provide output in one of these categories: Lung Adenocarcinoma, Lung Squamous Cell Carcinoma, Other, Unknown. In addition, we added the instruction, “Please make sure to output the whole set of answers together as a single JSON file, and don’t output anything beyond the required JSON file,” to emphasize the requirement for the output format. These requests in the prompt make the downstream analysis of ChatGPT output much more efficient. In order to know the certainty degree of ChatGPT’s estimate and the evidence, we asked ChatGPT to provide the following 4 outputs for each attribute/variable: extracted value as stated in the pathology report, estimated value based on AJCC 7th edition for lung carcinoma staging manual, the certainty degree of the estimation, and the supporting evidence for the estimation. The classification accuracy of this prompt with multiple choice output format (prompt v1) in our training data could achieve 0.854.
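One benefit of the multiple-choice JSON format is that the output can be validated mechanically. The sketch below parses a response and coerces any off-menu value to “Unknown”. The allowed values are the ones listed above, but the key names `pT` and `histology` and the function name are assumptions, since the actual JSON schema appears only in the prompt figure.

```python
import json

ALLOWED_PT = {"T0", "Tis", "T1", "T1a", "T1b", "T2", "T2a", "T2b", "T3", "T4", "TX", "Unknown"}
ALLOWED_HISTOLOGY = {"Lung Adenocarcinoma", "Lung Squamous Cell Carcinoma", "Other", "Unknown"}


def _pick(value, allowed):
    """Keep a value only if it is a string on the allowed menu."""
    return value if isinstance(value, str) and value in allowed else "Unknown"


def parse_answer(raw_text):
    """Parse model output; anything unparseable or off-menu becomes 'Unknown'."""
    try:
        data = json.loads(raw_text)
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):
        data = {}
    return {
        "pT": _pick(data.get("pT"), ALLOWED_PT),                        # hypothetical key
        "histology": _pick(data.get("histology"), ALLOWED_HISTOLOGY),   # hypothetical key
    }


print(parse_answer('{"pT": "T2a", "histology": "Other"}'))
print(parse_answer('{"pT": "pT2NOMx", "histology": "Lung Adenocarcinoma"}'))
```

Counting how often values are coerced gives a quick signal of how well a prompt version enforces the format.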

Evidence-based inference

One of the major concerns with LLMs is that the results from the model may not be supported by any evidence, especially when there is not enough information for specific questions. To reduce this problem, we emphasized the use of evidence for inference in the prompt by adding this instruction to ChatGPT: “Please ensure to make valid inferences for attribute estimation based on evidence. If there is no available evidence provided to make an estimation, please answer the value as ‘Unknown.’” In addition, we asked ChatGPT to “Include ‘comment’ as the last key of the JSON file.” After adding these two instructions (prompt v2), the performance of the classification in the training data increased to 0.865.

Chain of thought prompting by asking intermediate questions

Although tumor size is not a primary interest for diagnosis and clinical research, it plays a critical role in classifying the pT stage. We hypothesize that if ChatGPT pays closer attention to tumor size, it will have better classification performance. Therefore, we added an instruction in the prompt (prompt v3) to ask ChatGPT to estimate: “tumor size max_dimension: [<the greatest dimension of tumor in Centimeters (cm)>, ‘Unknown’]” as one of the attributes. After this modification, the performance of the classification in the training data increased to 0.90.

Providing examples

Providing examples is an effective way for humans to learn, and it should have similar effects for ChatGPT. We provided a specific example to infer the overall stage based on pT and pN by adding this instruction: “Please estimate the tumor stage category based on your estimated pT category and pN category and use AJCC7 criteria. For example, if pT is estimated as T2a and pN as N0, without information showing distant metastasis, then by AJCC7 criteria, the tumor stage is “Stage IB”.” After this modification (prompt v4), the performance of the classification in the training data increased to 0.936.

Although the prompts could be refined further, we decided to use prompt v4 as the final version. Applied to the testing data, it gave a final classification accuracy of 0.89.
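The incremental prompt versions can be pictured as instructions stacked one on top of another. The sketch below assembles only the instruction fragments quoted in this section; it is not the full final prompt, the way the fragments are joined is an assumption, and `build_prompt` is a hypothetical helper. The training accuracies are the ones reported above.

```python
# Instruction fragments quoted in the text, keyed by the prompt version that introduced them.
INSTRUCTIONS = {
    1: "Please make sure to output the whole set of answers together as a single JSON file, "
       "and don't output anything beyond the required JSON file.",
    2: 'Please ensure to make valid inferences for attribute estimation based on evidence. '
       'If there is no available evidence provided to make an estimation, '
       'please answer the value as "Unknown."',
    3: "tumor size max_dimension: [<the greatest dimension of tumor in Centimeters (cm)>, 'Unknown']",
    4: 'Please estimate the tumor stage category based on your estimated pT category and '
       'pN category and use AJCC7 criteria. For example, if pT is estimated as T2a and pN as N0, '
       'without information showing distant metastasis, then by AJCC7 criteria, '
       'the tumor stage is "Stage IB".',
}

# Training-set accuracy reported for each version.
TRAINING_ACCURACY = {1: 0.854, 2: 0.865, 3: 0.90, 4: 0.936}


def build_prompt(version, report_text):
    """Concatenate the instructions up to `version`, then append the report."""
    if version not in INSTRUCTIONS:
        raise ValueError("version must be 1, 2, 3, or 4")
    parts = [INSTRUCTIONS[v] for v in range(1, version + 1)]
    parts.append("Pathology report:\n" + report_text)
    return "\n\n".join(parts)


print(build_prompt(4, "<report text here>"))
```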

ChatGPT-4 performance

LLMs evolve rapidly, and OpenAI released the newest GPT-4 Turbo model (GPT-4-1106-preview) in November 2023. To compare this new model with GPT-3.5-Turbo, we applied GPT-4-1106 to analyze all the lung cancer pathology notes in the testing data. The classification results and the comparison with GPT-3.5-Turbo-16k are summarized in Supplementary Table 1. The results show that GPT-4-Turbo performs better in almost every aspect; overall, the GPT-4-Turbo model increases performance by over 5%. However, GPT-4-Turbo is much more expensive than GPT-3.5-Turbo. The performance of GPT-3.5-Turbo-16k is still comparable and acceptable. As such, this study mainly focuses on assessing GPT-3.5-Turbo-16k, but highlights the fast development and promise of using LLMs to extract structured data from clinical notes.

Analyzing osteosarcoma data

To demonstrate the broader application of this method beyond lung cancer, we collected and analyzed clinical notes from pediatric osteosarcoma patients. Osteosarcoma, the most common type of bone cancer in children and adolescents, has seen no substantial improvement in patient outcomes for the past few decades 18. Histology grades and margin status are among the most important prognostic factors for osteosarcoma. We collected pathology reports from 191 osteosarcoma cases (approved by UTSW IRB #STU 012018-061). Out of these, 148 cases had histology grade information, and 81 had margin status information; these cases were used to evaluate the performance of the GPT-3.5-Turbo-16K model and our prompt engineering strategy. Final diagnoses on grade and margin were manually reviewed and curated by human experts, and these diagnoses were used to assess ChatGPT’s performance. All notes were de-identified prior to analysis. We applied the same prompt engineering strategy to extract grade and margin information from these osteosarcoma pathology reports. This analysis was conducted on our institution’s private Azure OpenAI platform, using the GPT-3.5-Turbo-16K model (version 0613), the same model used for lung cancer cases. ChatGPT accurately classified both grades (with a 98.6% accuracy rate) and margin status (100% accuracy), as shown in Supplementary Fig. 3. In addition, Supplementary Fig. 4 details a specific case, illustrating how ChatGPT identifies grades and margin status from osteosarcoma pathology reports.

ChatGPT has spurred many potential innovative applications in healthcare since its release in November 2022 19, 20, 21, 22, 23. To our knowledge, this is among the first reports of an end-to-end data science workflow for prompt engineering, applying, and rigorously evaluating ChatGPT on batch-processing information extraction tasks over large-scale clinical report data.

The main obstacle to developing traditional medical NLP algorithms is the limited availability of annotated data and the cost of new human annotations. To overcome these hurdles, particularly in integrating problem-specific information and domain knowledge with LLMs’ task-agnostic general knowledge, Augmented Language Models (ALMs) 24, which incorporate reasoning and external tools for interaction with the environment, are emerging. Research shows that in-context learning (most influentially, few-shot prompting) can complement LLMs with task-specific knowledge to perform downstream tasks effectively 24, 25. In-context learning teaches the model through instructions or a light tutorial with a few examples (so-called few-shot prompting; instruction without any example is called zero-shot prompting) rather than through fine-tuning or compute-intensive training, which adjusts model weights. This approach has become a dominant method for using LLMs in real-world problem-solving 24, 25, 26. The advent of ALMs promises to revolutionize almost every aspect of human society, including the medical and healthcare domains, altering how we live, work, and communicate. Our study shows the feasibility of using ChatGPT to extract data from free text without extensive task-specific human annotation and model training.
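The difference between zero-shot and few-shot prompting can be made concrete with a short sketch. It uses the role-tagged message list common to chat-style LLM APIs; the function name `build_messages` and the choice to encode examples as prior user/assistant turns are assumptions for illustration. An example can equally be embedded in the instruction text itself.

```python
from typing import Dict, List, Sequence, Tuple


def build_messages(
    instruction: str,
    query: str,
    examples: Sequence[Tuple[str, str]] = (),
) -> List[Dict[str, str]]:
    """Build a chat message list; with no examples this is zero-shot."""
    messages = [{"role": "system", "content": instruction}]
    # Each (input, output) pair becomes a demonstration turn. The model
    # weights are untouched; the examples only extend the context.
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": query})
    return messages
```

Calling `build_messages(instruction, report)` yields a zero-shot request, while passing one or more `(report, expected_answer)` pairs yields a few-shot request for the same task.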

In medical data extraction, our study has demonstrated the advantages of ChatGPT over traditional methods in cost-effectiveness and efficiency. Traditional approaches often require labor-intensive annotation by medical professionals that may take weeks or months, while ChatGPT models can be adapted for data extraction within days through prompt engineering, significantly reducing the time required for implementation. Moreover, our economic analysis revealed cost savings: processing over 900 pathology reports incurred a minimal monetary cost (less than $10 using GPT-3.5-Turbo and less than $30 using GPT-4-Turbo). These findings make ChatGPT a compelling option for medical institutions and researchers seeking to streamline their data extraction processes without compromising accuracy or quality.

A critical requirement for effectively utilizing an LLM is crafting a high-quality “prompt” to instruct the LLM, which has led to the emergence of an important methodology referred to as “prompt engineering.” Two fundamental principles guide this process: first, providing appropriate context, and second, delivering clear instructions about the subtasks, the requirements for the desired response, and how it should be presented. For a single query for one-time use, the user can experiment with and revise the prompt within the conversation session until a satisfactory answer is obtained. However, prompt design becomes more complex when handling repetitive tasks over many input data files using the OpenAI API. In these instances, a prompt must be designed for a given data feed while maintaining generality and coverage across the varied features of the input data. In this study, we found that providing clear guidance on the output format, emphasizing evidence-based inference, using chain-of-thought prompting by asking for tumor size information, and providing specific examples are critical in improving the efficiency and accuracy of extracting structured data from free-text pathology reports. The approach employed in this study effectively leverages the OpenAI API for batch queries of ChatGPT services across a large set of tasks with similar input data structures, including but not limited to pathology reports and EHRs.

Our evaluation results show that ChatGPT (gpt-3.5-turbo-16k) achieved an overall average accuracy of 89% in extracting and estimating lung cancer staging information and histology subtypes compared to pathologist-curated data. This performance is very promising because some scanned pathology reports included in this study contained random characters, missing parts, typos, varied formats, and divergent information sections. ChatGPT also outperformed traditional NLP methods. Our case analysis shows that most misclassifications were due to a lack of knowledge of detailed pathology terminology or very specialized information in the current versions of ChatGPT models, which could be avoided with future model training or fine-tuning with more domain-specific knowledge.
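As a rough illustration of how an accuracy figure of this kind can be computed against curated labels, the sketch below scores each attribute separately and then averages the per-attribute scores. Treating the overall figure as an unweighted mean across attributes is an assumption, as are the function names and the case-insensitive matching rule; the labels in the usage note are toy values, not study data.

```python
from typing import Dict, List


def attribute_accuracy(predicted: List[str], curated: List[str]) -> float:
    """Fraction of cases where the prediction matches the curated label."""
    if len(predicted) != len(curated) or not curated:
        raise ValueError("need two non-empty label lists of equal length")
    # Assumed matching rule: ignore case and surrounding whitespace.
    matches = sum(
        p.strip().lower() == c.strip().lower()
        for p, c in zip(predicted, curated)
    )
    return matches / len(curated)


def average_accuracy(per_attribute: Dict[str, float]) -> float:
    """Unweighted mean of the per-attribute accuracies."""
    return sum(per_attribute.values()) / len(per_attribute)
```

For example, scoring toy pT predictions `["T2a", "T1b", "T3", "T2a"]` against `["T2a", "T1a", "T3", "t2a"]` gives 0.75, and averaging that with a perfect pN score of 1.0 gives 0.875.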

While our experiments reveal ChatGPT’s strengths, they also underscore its limitations and potential risks, the most significant being the occasional “hallucination” phenomenon 27, 28, where the generated content is not faithful to the provided source content. For example, the responses to blank or near-blank reports reflect this issue, though these instances can be detected and corrected due to convergence towards an “attractor”.

The phenomenon of ‘hallucination’ in LLMs presents a significant challenge in the field. It is important to consider several key factors to effectively address the challenges and risks associated with ChatGPT’s application in medicine. Since the output of an LLM depends on both the model and the prompt, hallucination can be mitigated through improvements in both GPT models and prompting strategies. From a model perspective, improved model architecture, robust training, and fine-tuning on a diverse and comprehensive medical dataset, with emphasis on accurate labeling and classification, can reduce misclassifications. Additionally, enhancing LLMs’ comprehension of medical terminology and guidelines by incorporating feedback from healthcare professionals during training and through Reinforcement Learning from Human Feedback (RLHF) can further diminish hallucinations. Regarding prompt engineering strategies, a crucial method is to prompt the GPT model with a ‘chain of thought’ and request an explanation with the evidence used in the reasoning. Further improvements could include explicitly requesting evidence from input data (e.g., the pathology report) and inference rules (e.g., AJCC rules). Prompting GPT models to respond with ‘Unknown’ when information is insufficient for making assertions, providing relevant context in the prompt, or using ‘embedding’ of relevant text to narrow down the semantic subspace can also be effective. Controlling hallucination is an ongoing challenge in AI research, with various methods being explored 5, 27. For example, a recent study proposed the “SelfCheckGPT” approach to fact-check black-box models 29. Developing real-time error detection mechanisms is crucial for enhancing the reliability and trustworthiness of AI models. More research is needed to evaluate the extent, impacts, and potential solutions of using LLMs in clinical research and care.
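One simple way to act on the idea of requesting evidence from the input data is to check, after the fact, that the evidence a model quotes actually appears in the report, and to fall back to ‘Unknown’ otherwise. The sketch below is an illustrative post-processing check, not a procedure described in the study; the function names and the verbatim-match rule are assumptions.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so OCR line breaks do not block a match."""
    return re.sub(r"\s+", " ", text).strip().lower()


def evidence_is_grounded(evidence: str, report_text: str) -> bool:
    """True only if the quoted evidence occurs verbatim in the report."""
    quote = normalize(evidence)
    return bool(quote) and quote in normalize(report_text)


def accept_or_unknown(value: str, evidence: str, report_text: str) -> str:
    """Keep the model's value only when its evidence is found in the report."""
    if evidence_is_grounded(evidence, report_text):
        return value
    return "Unknown"
```

A verbatim match is deliberately strict: paraphrased but correct evidence would be rejected, so a check like this is better suited to flagging cases for human review than to automatic correction.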

When considering ChatGPT and similar LLMs for use in healthcare, it is important to weigh the privacy implications carefully. The sensitivity of medical data, governed by rigorous regulations like HIPAA, naturally raises concerns when integrating technologies like LLMs. Although analyzing publicly available de-identified data, such as the lung cancer pathology notes used in this study, is of less concern, careful consideration is needed for protected healthcare data. More secure services are available: OpenAI, through its security portal, claims compliance with multiple regulatory standards, and Microsoft claims that Azure OpenAI can be used in a HIPAA-compliant manner. For example, in this study the de-identified osteosarcoma pathology notes were analyzed with Microsoft Azure OpenAI under a Business Associate Agreement. In addition, exploring options such as private versions of these APIs, or even developing LLMs within a secure healthcare IT environment, might offer good alternatives. Moreover, implementing strong data anonymization protocols and conducting regular security checks could further protect patient information. As we navigate these advancements, it is crucial to continuously reassess and adapt privacy strategies, ensuring that the integration of AI into healthcare is both beneficial and responsible.

Despite these challenges, this study demonstrates our effective methodology in “prompt engineering”. It presents a general framework for using ChatGPT’s API in batch queries to process large volumes of pathology reports for structured information extraction and estimation. The application of ChatGPT in interpreting clinical notes holds substantial promise in transforming how healthcare professionals and patients utilize these crucial documents. By generating concise, accurate, and comprehensible summaries, ChatGPT could significantly enhance the effectiveness and efficiency of extracting structured information from unstructured clinical texts, ultimately leading to more efficient clinical research and improved patient care.

In conclusion, ChatGPT and other LLMs are powerful tools, not just for pathology report processing but also for the broader digital transformation of healthcare documents. These models can catalyze the utilization of the rich historical archives of medical practice, thereby creating robust resources for future research.

Data processing, workflow, and prompt engineering

The lung cancer data used in this study are de-identified and publicly accessible via CDSA (https://cancer.digitalslidearchive.org/) and TCGA (https://cBioPortal.org). The institutional review board at the University of Texas Southwestern Medical Center approved this study, and patient consent was waived for the use of retrospective, de-identified electronic health record data.

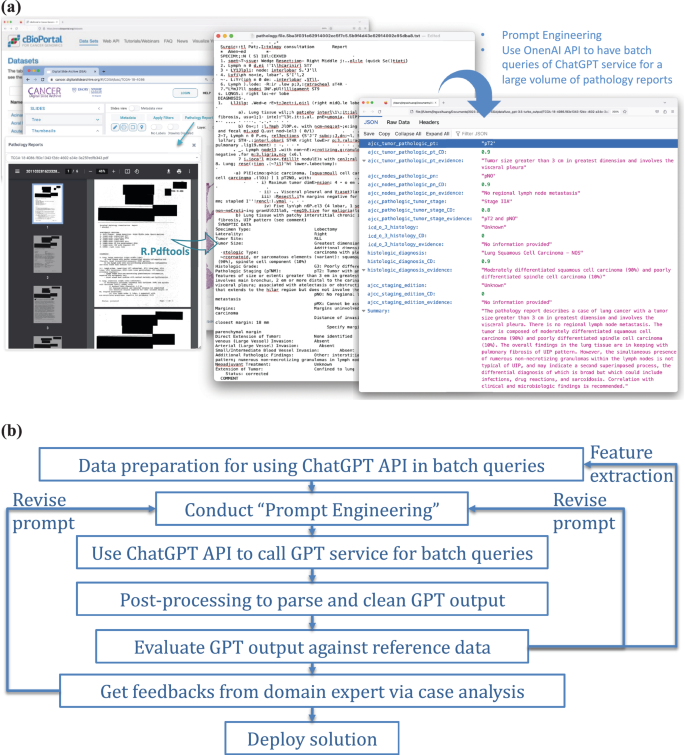

We aimed to leverage ChatGPT to extract and estimate structured data from these notes. Figure 7a displays our process. First, scanned pathology reports in PDF format were downloaded from the TCGA and CDSA databases. Second, the R package pdftools, an optical character recognition tool, was employed to convert the scanned PDF files into text. After this conversion, we identified reports with near-empty content, poor scanning quality, or missing report forms and excluded them from the study. Third, the OpenAI API was used to analyze the text data and extract structured data elements based on specific prompts. In addition, we extracted case identifiers and metadata items from the TCGA metadata file, which were used to evaluate the model performance.
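The exclusion and batch-query steps of this process can be sketched as a small loop. This is an illustration under stated assumptions rather than the study's code: the character-count cutoff `MIN_CHARS` is a hypothetical stand-in for the manual screening of near-empty or poorly scanned reports, and `query_fn` is a placeholder for whatever function sends one prompt to the LLM API and returns its text response.

```python
from typing import Callable, Dict, List, Tuple

MIN_CHARS = 200  # assumed cutoff for "near-empty" OCR text; not from the study


def is_usable(report_text: str, min_chars: int = MIN_CHARS) -> bool:
    """Crude proxy for screening out near-empty OCR output."""
    return len(report_text.strip()) >= min_chars


def run_batch(
    reports: Dict[str, str],
    query_fn: Callable[[str], str],
    min_chars: int = MIN_CHARS,
) -> Tuple[Dict[str, str], List[str]]:
    """Query the model once per usable report, keyed by case identifier."""
    results: Dict[str, str] = {}
    excluded: List[str] = []
    for case_id, text in reports.items():
        if not is_usable(text, min_chars):
            excluded.append(case_id)  # recorded so exclusions can be audited
            continue
        results[case_id] = query_fn(text)
    return results, excluded
```

Passing the query function as an argument keeps the loop independent of any particular API client and lets it be exercised with a stub before any paid calls are made.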

Fig. 7: a, Illustration of the use of the OpenAI API for batch queries of the ChatGPT service, applied to a substantial volume of clinical notes (pathology reports in our study). b, A general framework for integrating ChatGPT into real-world applications.

In this study, we implemented a problem-solving framework rooted in data science workflow and systems engineering principles, as depicted in Fig. 7b. An important step is the spiral approach 30 to ‘prompt engineering’, which involves experimenting with subtasks, different phrasings, contexts, format specifications, and example outputs to improve the quality and relevance of the model’s responses. It was an iterative process to achieve the desired results. For the prompt engineering, we first defined the objective: to extract information on TNM staging and histology type as structured attributes from the unstructured pathology reports. Second, we assigned specific tasks to ChatGPT, including estimating the targeted attributes, evaluating certainty levels, identifying key evidence of each attribute estimation, and generating a summary as output. The output was compiled into a JSON file. In this process, clinicians were actively formulating questions and evaluating the results.
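Because the output is compiled as JSON with an estimate, a certainty level, and supporting evidence per attribute, a response can be validated before it enters downstream analysis. The sketch below shows one way to do this; the field names (`value`, `certainty`, `evidence`), the attribute keys, and the function name `parse_response` are hypothetical, since the text does not specify the exact schema.

```python
import json
from typing import Any, Dict, Sequence

REQUIRED_FIELDS = ("value", "certainty", "evidence")  # hypothetical field names


def parse_response(
    raw: str,
    attributes: Sequence[str] = ("pT", "pN", "tumor_stage", "histology"),
) -> Dict[str, Dict[str, Any]]:
    """Parse one JSON response, filling malformed attributes with 'Unknown'."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        data = {}
    parsed: Dict[str, Dict[str, Any]] = {}
    for name in attributes:
        entry = data.get(name)
        complete = isinstance(entry, dict) and all(
            field in entry for field in REQUIRED_FIELDS
        )
        if complete:
            parsed[name] = {field: entry[field] for field in REQUIRED_FIELDS}
        else:
            # Missing or partial entries are made explicit instead of guessed.
            parsed[name] = {"value": "Unknown", "certainty": None, "evidence": ""}
    return parsed
```

Normalizing every response to the same set of keys keeps the per-case records uniform, which simplifies comparison against the curated metadata.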