- Behav Sci (Basel)

Assessing Cognitive Factors of Modular Distance Learning of K-12 Students Amidst the COVID-19 Pandemic towards Academic Achievements and Satisfaction

Yung-Tsan Jou

1 Department of Industrial and Systems Engineering, Chung Yuan Christian University, Taoyuan 320, Taiwan; ytjou@cycu.edu.tw (Y.-T.J.); saflorcharmine@yahoo.com (C.S.S.)

Klint Allen Mariñas

2 School of Industrial Engineering and Engineering Management, Mapua University, Manila 1002, Philippines

3 Department of Industrial Engineering, Occidental Mindoro State College, San Jose 5100, Philippines

Charmine Sheena Saflor

Associated Data

Not applicable.

The COVID-19 pandemic brought extraordinary challenges to K-12 students using modular distance learning. The current study constructs a theoretical framework that uses Transactional Distance Theory (TDT), which addresses the effects of distance learning in the cognitive domain, to measure student satisfaction, and Bloom’s Taxonomy Theory (BTT) to measure students’ academic achievements. This study aims to evaluate and identify the possible cognitive capacity influencing K-12 students’ academic achievements and satisfaction with modular distance learning during the pandemic. A survey questionnaire was completed through an online form by 252 K-12 students from different institutions in Occidental Mindoro. Using Structural Equation Modeling (SEM), the researcher analyzed the relationships between the dependent and independent variables. The model used in this research illustrates cognitive factors associated with adopting modular distance learning based on students’ academic achievements and satisfaction. The study revealed that students’ background, experience, behavior, and instructor interaction positively affected their satisfaction, while the effects of students’ performance, understanding, and perceived effectiveness were wholly aligned with their academic achievements. The findings of the model, with solid support for the integrative association between TDT and BTT, could guide decision-makers in institutions to implement, evaluate, and utilize modular distance learning in their education systems.

1. Introduction

The 2019 coronavirus is the latest infectious disease to develop rapidly worldwide [ 1 ], affecting economic stability, global health, and education. Most countries have suspended face-to-face classes in order to curb the spread of the virus and reduce infections [ 2 ], making education one of the sectors most affected. The Department of Education (DepEd) has introduced modular distance learning for K-12 students to ensure continuity of learning during the COVID-19 pandemic. According to Malipot (2020), modular learning is one of the most popular distance learning alternatives to traditional face-to-face learning [ 3 ]. As per DepEd’s Learner Enrolment and Survey Forms, 7.2 million enrollees preferred “modular” remote learning, TV and radio-based practice, and other modalities, while two million enrollees preferred online learning. Modular learning is currently in use because it was the distance learning mode preferred by students and parents in the survey conducted by DepEd; it is mainly delivered through printed and digital modules [ 4 ]. It also concerns first-year students in rural areas, where internet access is not available for online learning. Consistent with the findings of Ambayon (2020), modular teaching within the teaching-learning process is more practical than traditional educational methods because students learn at their own pace. This educational platform allows K-12 students to work through self-paced printed or digital modules. With the COVID-19 outbreak, issues have emerged concerning students’ academic performance and the factors associated with students’ psychological status during the COVID-19 lockdown [ 5 ].

Additionally, this new learning platform, modular distance learning, seems to have affected students’ ability to learn and challenged their learning skills. Scholars have also paid close attention to learner satisfaction and academic achievement in distance learning studies and have used a range of theoretical frameworks to assess learner satisfaction and educational outcomes [ 6 , 7 ]. Because this study aimed to improve academic achievement and satisfaction in K-12 students, the researcher applied transactional distance theory (TDT) to understand the consequences of distance in educational relationships. TDT was used because it can establish the psychological and communication factors between learners and instructors in distance education, which could help researchers identify the variables that might affect students’ academic achievement and satisfaction [ 8 ]. In this view, distance learning is primarily determined by the amount of dialogue between student and teacher and the degree of structure in the course design. It contributes to the core objective of improving students’ modular learning experiences in terms of satisfaction. On the other hand, Bloom’s Taxonomy Theory (BTT) was applied to investigate students’ academic achievements through modular distance learning [ 6 ]. Bloom’s theory was employed in addition to TDT in this study to enhance students’ modular educational experiences. Moreover, TDT was used to examine students’ modular learning experiences in conjunction with enhancing students’ achievements.

This study aimed to determine the impact of modular distance learning on K-12 students during the COVID-19 pandemic and to assess the cognitive factors affecting academic achievement and student satisfaction. Despite the challenges of the COVID-19 outbreak, the researcher anticipated relevant results concerning modular distance learning and pedagogical changes in students, with the cognitive factors identified in this paper treated as latent variables and possible predictors of K-12 students’ academic achievement and satisfaction.

1.1. Theoretical Research Framework

This study used TDT to assess student satisfaction and Bloom’s theory to quantify academic achievement. It aimed to assess the impact of modular distance learning on academic achievement and student satisfaction among K-12 students. The Transactional Distance Theory (TDT) was selected for this study since it addresses student-instructor distance learning. Moore (1993) states that distance education is “the universe of teacher-learner connections when learners and teachers are separated by place and time.” Moore’s (1990) concept of “transactional distance” encompasses the distance that occurs in all relationships in education. Transactional distance theory is theoretically critical because it states that the most important distance in distance education is transactional, rather than geographical or temporal [ 9 , 10 ]. According to Garrison (2000), transactional distance theory is essential in directing the complicated experience of a cognitive process such as distance teaching and learning. TDT evaluates the role of each of its factors (student perception, discourse, and class organization), which can help with student satisfaction research [ 11 ]. Bloom’s Taxonomy is a theoretical framework for learning created by Benjamin Bloom that distinguishes three learning domains; cognitive domain skills center on knowledge, comprehension, and critical thinking on a particular subject. Bloom recognized three components of educational activities: cognitive knowledge (or mental abilities), affective attitude (or emotions), and psychomotor skills (or physical skills), all of which can be used to assess K-12 students’ academic achievement. According to Jung (2001), “Transactional distance theory provides a significant conceptual framework for defining and comprehending distance education in general and a source of research hypotheses in particular” [ 12 ]. The theoretical research framework is shown in Figure 1.

Figure 1. Theoretical Research Framework.

1.2. Hypothesis Developments and Literature Review

This section discusses the study hypotheses and relates each hypothesis to studies from the literature.

Hypothesis 1 (H1). There is a significant relationship between students’ background and students’ behavior.

The teacher’s guidance is essential for students’ preparedness and readiness to adapt to a new educational environment. Most students opt for the Department of Education’s “modular” distance learning options [ 3 ]. Analyzing students’ study time is critical for behavioral engagement because it establishes if academic performance is the product of student choice or historical factors [ 13 ].

Hypothesis 2 (H2). There is a significant relationship between students’ background and students’ experience.

Modules provide goals, experiences, and educational activities that help students gain self-sufficiency at their own pace. They also boost brain activity, encourage motivation, consolidate self-satisfaction, and enable students to remember what they have learned [ 14 ]. Despite the success of modules, many families face difficulties because parents lack the skills and time to support their children’s learning [ 15 ].

Hypothesis 3 (H3). There is a significant relationship between students’ behavior and students’ instructor interaction.

Students’ capacity to answer problems reflects their overall information awareness [ 5 ]. Learning outcomes can either cause or result from students’ and instructors’ behavior. Students’ reading issues are due to the success of online courses [ 16 ].

Hypothesis 4 (H4). There is a significant relationship between students’ experience and students’ instructor interaction.

The words “student experience” relate to classroom participation. They establish a connection between students and their school, teachers, classmates, curriculum, and teaching methods [ 17 ]. The three types of student engagement are behavioral, emotional, and cognitive. Behavioral engagement refers to a student’s enthusiasm for academic and extracurricular activities. On the other hand, emotional participation is linked to how children react to their peers, teachers, and school. Motivational engagement refers to a learner’s desire to learn new abilities [ 18 ].

Hypothesis 5 (H5). There is a significant relationship between students’ behavior and students’ understanding.

Individualized learning connections, outstanding training, and learning culture are all priorities at the Institute [ 19 , 20 ]. The modular technique of online learning offers additional flexibility. The use of modules allows students to investigate alternatives to the professor’s session [ 21 ].

Hypothesis 6 (H6). There is a significant relationship between students’ experience and students’ performance.

Student conduct is also vital in academic accomplishment since it may affect a student’s capacity to study as well as the learning environment for other students. Students are self-assured because they understand what is expected [ 22 ]. They are more aware of their actions and take greater responsibility for their learning.

Hypothesis 7 (H7). There is a significant relationship between students’ instructor interaction and students’ understanding.

Modular learning benefits students by enabling them to absorb and study material independently and on different courses. Students are more likely to give favorable reviews to courses and instructors if they believe their professors communicated effectively and facilitated or supported their learning [ 23 ].

Hypothesis 8 (H8). There is a significant relationship between students’ instructor interaction and students’ performance.

Students are more engaged and active in their studies when they feel in command and protected in the classroom. Teachers play an essential role in influencing student academic motivation, school commitment, and disengagement. In studies on K-12 education, teacher-student relationships have been identified [ 24 ]. Positive teacher-student connections improve both teacher attitudes and academic performance.

Hypothesis 9 (H9). There is a significant relationship between students’ understanding and students’ satisfaction.

Instructors must create well-structured courses, regularly present in their classes, and encourage student participation. When learning objectives are completed, students better understand the course’s success and learning expectations. “Constructing meaning from verbal, written, and graphic signals by interpreting, exemplifying, classifying, summarizing, inferring, comparing, and explaining” is how understanding is characterized [ 25 ].

Hypothesis 10 (H10). There is a significant relationship between students’ performance and students’ academic achievement.

Academic emotions are linked to students’ performance, academic success, personality, and classroom background [ 26 ]. Understanding the elements that may influence student performance has long been a goal for educational institutions, students, and teachers.

Hypothesis 11 (H11). There is a significant relationship between students’ understanding and students’ academic achievement.

Modular education views each student as an individual with distinct abilities and interests. To provide an excellent education, a teacher must adapt and individualize the educational curriculum for each student. Individual learning may aid in developing a variety of exceptional and self-reliant attributes [ 27 ]. Academic achievement is the current level of learning in the Philippines [ 28 ].

Hypothesis 12 (H12). There is a significant relationship between students’ performance and students’ satisfaction.

Academic success is defined as a student’s intellectual development, including formative and summative assessment data, coursework, teacher observations, student interaction, and time on a task [ 29 ]. Students were happier with course technology, the promptness with which content was shared with the teacher, and their overall wellbeing [ 30 ].

Hypothesis 13 (H13). There is a significant relationship between students’ academic achievement and students’ perceived effectiveness.

Student satisfaction is a short-term mindset based on assessing students’ educational experiences [ 29 ]. The link between student satisfaction and academic achievement is crucial in today’s higher education: we discovered that student satisfaction with course technical components was linked to a higher relative performance level [ 31 ].

Hypothesis 14 (H14). There is a significant relationship between students’ satisfaction and students’ perceived effectiveness.

There is a strong link between student satisfaction and their overall perception of learning. A satisfied student is a direct effect of a positive learning experience. Perceived learning results had a favorable impact on student satisfaction in the classroom [ 32 ].

2. Materials and Methods

2.1. Participants

The principal area under study was San Jose, Occidental Mindoro, although respondents from other locations were also accepted. The survey took place between February and March 2022. The target population was K-12 students in Junior and Senior High Schools, from grades 7 to 12 and aged 12 to 20, who were using the Modular Approach in their studies during the COVID-19 pandemic. A 45-item questionnaire was created and circulated online to collect the information. A total of 300 online surveys were sent out and 252 online forms were received, a response rate of 84% [ 33 ]. According to several experts, the sample size for Structural Equation Modeling (SEM) should be between 200 and 500 [ 34 ].
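The response-rate arithmetic and the sample-size guideline above can be checked with a few lines of Python. This is only an illustrative sketch; the variable names are hypothetical, and the numbers are the ones quoted in the paragraph.

```python
# Figures quoted in the text: 300 surveys sent, 252 forms returned.
surveys_sent = 300
surveys_returned = 252

# Response rate as a fraction of the surveys sent.
response_rate = surveys_returned / surveys_sent

# Sample-size range for SEM quoted in the text (200 to 500 respondents).
SEM_MIN_N, SEM_MAX_N = 200, 500
within_recommended_range = SEM_MIN_N <= surveys_returned <= SEM_MAX_N

print(f"Response rate: {response_rate:.0%}")
print(f"Sample size within the 200-500 range: {within_recommended_range}")
```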

2.2. Questionnaire

A self-administered questionnaire was developed from the theoretical framework. The researcher created the questionnaire to examine the probable cognitive capacity influencing K-12 students’ academic achievement in different parts of Occidental Mindoro during the pandemic, as well as their satisfaction with modular distance learning. The questionnaire was designed through Google Drive because in-person interaction was limited by the COVID-19 pandemic. The questionnaire’s link was sent via email, Facebook, and other popular social media platforms.

The respondents had to complete two sections of the questionnaire. The first covers their demographic information, including their age, gender, and grade level. The second covers their perceptions of modular learning. The questionnaire is divided into nine variables: (1) Student’s Background, (2) Student’s Experience, (3) Student’s Behavior, (4) Student’s Instructor Interaction, (5) Student’s Performance, (6) Student’s Understanding, (7) Student’s Satisfaction, (8) Student’s Academic Achievement, and (9) Student’s Perceived Effectiveness. A 5-point Likert scale was used to assess all latent components contained in the SEM, as shown in Table 1 .

Table 1. The construct and measurement items.

2.3. Structural Equation Modeling (SEM)

All the variables were adapted from a variety of research in the literature. The observable factors were scored on a Likert scale of 1–5, with one indicating “strongly disagree” and five indicating “strongly agree”, and the data were analyzed using AMOS software. The theoretical model was tested using Structural Equation Modeling (SEM). SEM is more suitable for testing the hypotheses than other methods [ 53 ]. There are many fit indices in the literature, of which the most commonly used are CMIN/DF, the Comparative Fit Index (CFI), AGFI, GFI, and the Root Mean Square Error of Approximation (RMSEA). Table 2 lists the good-fit and acceptable-fit values of the fit indices. AGFI and GFI are based on residuals; as the sample size increases, the value of the AGFI also increases. The AGFI takes a value between 0 and 1, and the fit is good if the value is greater than 0.80. GFI likewise ranges from 0 to 1, with values above 0.80 deemed acceptable. An RMSEA of 0.08 or less suggests a good fit [ 54 ], and a value of 0.05 to 0.08 indicates an adequate fit [ 55 ].

Table 2. Acceptable Fit Values.

3. Results and Discussion

Figure 2 shows the initial SEM for the cognitive factors of modular distance learning towards academic achievements and satisfaction of K-12 students during the COVID-19 pandemic. According to the figure, three hypotheses were not significant: Students’ Behavior to Students’ Instructor Interaction (Hypothesis 3), Students’ Understanding to Students’ Academic Achievement (Hypothesis 11), and Students’ Performance to Students’ Satisfaction (Hypothesis 12). Therefore, a revised SEM was derived by removing these hypotheses, as shown in Figure 3 . We modified some indices to enhance the model fit based on previous studies using the SEM approach [ 47 ]. Figure 3 shows the final SEM for evaluating cognitive factors affecting academic achievements and satisfaction and the perceived effectiveness of K-12 students’ response to modular learning during COVID-19, and Table 3 summarizes the results. Moreover, Table 4 presents the descriptive statistics of each indicator.

Figure 2. Initial SEM with indicators for evaluating the cognitive factors of modular distance learning towards academic achievements and satisfaction of K-12 students during the COVID-19 pandemic.

Figure 3. Revised SEM with indicators for evaluating the cognitive factors of modular distance learning towards academic achievements and satisfaction of K-12 students during the COVID-19 pandemic.

Table 3. Summary of the Results.

Table 4. Descriptive statistic results.

The current study drew on Moore’s transactional distance theory (TDT) and Bloom’s taxonomy theory (BTT) to evaluate cognitive factors affecting academic achievements and satisfaction and the perceived effectiveness of K-12 students’ response toward modular learning during COVID-19. SEM was used to analyze the correlation between Student Background (SB), Student Experience (SE), Student Behavior (SBE), Student Instructor Interaction (SI), Student Performance (SP), Student Understanding (SAU), Student Satisfaction (SS), Student’s Academic Achievement (SAA), and Student’s Perceived Effectiveness (SPE). A total of 252 data samples were acquired through an online questionnaire.

According to the findings of the SEM, the students’ background in modular learning had a favorable and significant direct effect on SE (β: 0.848, p = 0.009). K-12 students should have a background and knowledge in modular systems to better experience this new education platform. Such a background would help them overcome the difficulties that arise from the limitations of the modular platforms. Furthermore, SEM revealed that SE had a significant positive effect on SI (β: 0.843, p = 0.009). The study shows that students who had previous experience with modular education had more positive perceptions of modular platforms. Additionally, students’ experience with modular distance learning offers various benefits to them and their instructors in enhancing students’ learning experiences, particularly for isolated learners.

Student-Instructor Interaction (SI) positively impacts SAU (β: 0.873, p = 0.007). Communication helps students experience positive emotions such as comfort, satisfaction, and excitement, which enhance their understanding and help them attain their educational goals [ 62 ]. The results also revealed that SI substantially impacted SP (β: 0.765; p = 0.005). A student becomes more academically motivated and engaged by creating and maintaining strong teacher-student connections, which leads to successful academic performance.

Regarding Students’ Understanding, the results revealed that SAU had a substantial impact on SS (β: 0.699; p = 0.008), while its effect on SAA (β: 0.307; p = 0.052) fell just above the conventional 0.05 threshold. Modular teaching is concerned with each student as an individual and with their specific capability and interest, so as to assist each K-12 student in learning and provide quality education by allowing individuality to each learner. According to the Department of Education, academic achievement is the new level for student learning [ 63 ]. Meanwhile, SAA was significantly affected by Students’ Performance (β: 0.754; p = 0.014). This implies that positive performance can lead to positive results in students’ academic achievement, and that negative performance can lead to negative results [ 64 ]. Pekrun et al. (2010) discovered that students’ academic emotions are linked to their performance, academic achievement, personality, and classroom circumstances [ 26 ].

Results showed that students’ academic achievement significantly and positively affects SPE (β: 0.237; p = 0.024). Prior knowledge has an indirect effect on academic accomplishment. It influences the amount and type of current learning in a system where students must obtain a high degree of mastery [ 65 ]. In the students’ opinion, modular distance learning is an alternative solution for providing adequate education for all learners at all levels in the current scenario under the new education policy [ 66 ]. In addition, the SEM revealed that SS significantly affected SPE (β: 0.868; p = 0.009). Students’ perceptions of learning and satisfaction, when combined, can provide a better understanding of learning achievement [ 44 ]. Students’ perceptions of learning outcomes are an excellent predictor of student satisfaction.

Since the p -values and the indicators in Students’ Behavior are below 0.5, the two paths connecting SBE to student-instructor interaction (0.155) and students’ understanding (0.212) are not significant; thus, the latent variable Students’ Behavior has no effect on the latent variables Students’ Satisfaction, Academic Achievement, and Perceived Effectiveness in modular distance learning of K-12 students. This result is supported by Samsen-Bronsveld et al. (2022), who revealed that the environment has no direct influence on the student’s satisfaction, behavior engagement, and motivation to study [ 67 ]. On the other hand, the results also showed no significant relationship between Students’ Performance and Students’ Satisfaction (0.602) because the correlation p -values are greater than 0.5. Interestingly, this result contradicts other related studies. According to Bossman & Agyei (2022), satisfaction significantly affects performance or learning outcomes [ 68 ]. In addition, it was discovered that students’ satisfaction is the main driver of students’ performance [ 64 , 69 ].
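The significance screening described in this section can be sketched as a simple filter over the reported p-values. The sketch below assumes a conventional significance level of 0.05, which the text does not state explicitly, and the path directions are this example's reading of the hypotheses; the p-values themselves are the ones quoted above.

```python
ALPHA = 0.05  # assumed conventional significance level; not stated in the text

# (path, p-value) pairs quoted in the discussion. The arrow directions are
# an assumption based on the hypotheses, not taken from the AMOS output.
reported_paths = [
    ("SB -> SE", 0.009),
    ("SE -> SI", 0.009),
    ("SI -> SAU", 0.007),
    ("SI -> SP", 0.005),
    ("SAU -> SAA", 0.052),
    ("SAU -> SS", 0.008),
    ("SP -> SAA", 0.014),
    ("SAA -> SPE", 0.024),
    ("SS -> SPE", 0.009),
    ("SBE -> SI", 0.155),
    ("SBE -> SAU", 0.212),
    ("SP -> SS", 0.602),
]

def is_significant(p_value, alpha=ALPHA):
    """A path is treated as significant when its p-value is below alpha."""
    return p_value < alpha

significant = [name for name, p in reported_paths if is_significant(p)]
not_significant = [name for name, p in reported_paths if not is_significant(p)]

print("Significant paths:", significant)
print("Non-significant paths:", not_significant)
```

Under this assumed threshold the path from SAU to SAA (p = 0.052) is screened out together with the two SBE paths and the SP to SS path.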

The result of the study implies that the students’ satisfaction serves as the mediator between the students’ performance and the student-instructor interaction in modular distance learning for K-12 students [ 70 ].

As shown in Table 5 , the reliabilities of the scales used, i.e., Cronbach’s alphas, ranged from 0.568 to 0.745, which were in line with those found in other studies [ 71 ]. As presented in Table 6 , the IFI, TLI, and CFI values were greater than the suggested cutoff of 0.80, indicating that the specified model’s hypothesized construct accurately represented the observed data. In addition, the GFI and AGFI values were 0.828 and 0.801, respectively, indicating that the model fit was also good. The RMSEA value was 0.074, lower than the recommended value of 0.08. Finally, the direct, indirect, and total effects are presented in Table 7 .

Table 5. Construct Validity Model.
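For readers unfamiliar with the reliability statistic, Cronbach's alpha for a scale of k items is k/(k-1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. The following is a minimal sketch of that formula; the function name and the response data are hypothetical and are not taken from the study.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    # Sample variance of each item across respondents.
    item_variances = [variance([r[i] for r in responses]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(r) for r in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)

# Hypothetical 5-point Likert answers: five respondents, three items.
example_responses = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]

alpha = cronbach_alpha(example_responses)
print(f"Cronbach's alpha: {alpha:.3f}")
```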

Table 7. Direct effect, indirect effect, and total effect.
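In path analysis, an indirect effect along a chain of variables is commonly computed as the product of the path coefficients on that chain, and the total effect is the sum of the direct and indirect effects. The sketch below applies that rule to coefficients quoted in the discussion; it assumes those coefficients are standardized and that no direct SB to SI path exists in the model, so its output is an illustration and may not match the values reported in the table.

```python
from math import prod

# Direct path coefficients quoted in the text (assumed standardized).
direct_effects = {
    ("SB", "SE"): 0.848,
    ("SE", "SI"): 0.843,
    ("SI", "SAU"): 0.873,
}

def indirect_effect(chain):
    """Product of the coefficients along a chain, e.g. ["SB", "SE", "SI"]."""
    return prod(direct_effects[(a, b)] for a, b in zip(chain, chain[1:]))

# Indirect effect of SB on SI through SE.
sb_on_si_indirect = indirect_effect(["SB", "SE", "SI"])

# Total effect = direct effect (taken as 0 when no direct path is listed) + indirect effect.
sb_on_si_total = direct_effects.get(("SB", "SI"), 0.0) + sb_on_si_indirect

print(f"Indirect effect of SB on SI: {sb_on_si_indirect:.3f}")
print(f"Total effect of SB on SI: {sb_on_si_total:.3f}")
```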

Table 6 shows that the five parameters, namely the Incremental Fit Index, the Tucker-Lewis Index, the Comparative Fit Index, the Goodness of Fit Index, and the Adjusted Goodness of Fit Index, are all acceptable with parameter estimates greater than 0.8, whereas the Root Mean Square Error of Approximation is excellent with a parameter estimate less than 0.08.
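The fit assessment amounts to comparing each reported index with its cutoff. A short sketch using the GFI, AGFI, and RMSEA values and cutoffs quoted in the text follows; the dictionary layout and names are hypothetical, and the IFI, TLI, and CFI values are omitted because the text does not quote them.

```python
# Reported value and acceptance rule for each index, as quoted in the text.
fit_checks = {
    "GFI": (0.828, lambda v: v > 0.80),
    "AGFI": (0.801, lambda v: v > 0.80),
    "RMSEA": (0.074, lambda v: v <= 0.08),
}

# True when the reported value satisfies the stated cutoff.
fit_results = {name: rule(value) for name, (value, rule) in fit_checks.items()}

for name, passed in fit_results.items():
    print(f"{name}: {'acceptable' if passed else 'outside cutoff'}")
```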

4. Conclusions

The education system has been affected by the 2019 coronavirus disease; face-to-face classes were suspended to control and reduce the spread of the virus and infections [ 2 ]. The suspension of face-to-face classes resulted in the application of modular distance learning for K-12 students to ensure continuity of learning during the COVID-19 pandemic. With the outbreak of COVID-19, issues concerning students’ academic performance and factors associated with students’ psychological status have started to emerge, which affected students’ ability to learn. This study aimed to determine the impact of modular distance learning on K-12 students amid the COVID-19 pandemic and to assess cognitive factors affecting students’ academic achievement and satisfaction.

This study applied Transactional Distance Theory (TDT) and Bloom Taxonomy Theory (BTT) to evaluate cognitive factors affecting students’ academic achievements and satisfaction and evaluate the perceived effectiveness of K-12 students in response to modular learning. This study applied Structural Equation Modeling (SEM) to test hypotheses. The application of SEM analyzed the correlation among students’ background, experience, behavior, instructor interaction, performance, understanding, satisfaction, academic achievement, and student perceived effectiveness.

A total of 252 data samples were gathered through an online questionnaire. Based on the findings, this study concludes that students’ background in modular distance learning affects their behavior and experience. Students’ experiences had significant effects on the performance and understanding of students in modular distance learning. Student-instructor interaction had a substantial impact on performance and learning, which shows how vital interaction with the instructor is: a student who interacts with the instructor may receive feedback and guidance. Understanding has a significant influence on students’ satisfaction and academic achievement. Student performance has a substantial impact on students’ academic achievement and satisfaction. Perceived effectiveness was significantly influenced by students’ academic achievement and student satisfaction. However, students’ behavior had no considerable effect on student-instructor interaction or students’ understanding, while student performance likewise had no significant impact on student satisfaction. From this study, students are likely to show good performance, behavior, and cognition when they have prior knowledge of modular distance learning. This study will help the government, teachers, and students take the necessary steps to improve modular distance learning so that it supports effective learning.

The modular learning system has been in place since its inception. One of its founding metaphoric pillars is student satisfaction with modular learning. The organization demonstrated its dedication to the student’s voice as a component of understanding effective teaching and learning. Student satisfaction research has been transformed by modular learning. It has caused the education research community to rethink long-held assumptions that learning occurs primarily within a metaphorical container known as a “course.” When reviewing studies on student satisfaction from a factor analytic perspective, one thing becomes clear: this is a complex system with little consensus. Even the most recent factor analytical studies have done little to address the lack of understanding of the dimensions underlying satisfaction with modular learning. Items about student satisfaction with modular distance learning correspond to forming a psychological contract in factor analytic studies. The survey responses are reconfigured into a smaller number of latent (non-observable) dimensions that the students never really articulate but are fully expected to satisfy. Of course, instructors have contracts with their students. Studies such as this one identify the student’s psychological contract after the fact, rather than before the class. The most important aspect is the rapid adoption of this teaching and learning mode in Senior High School. Another balancing factor is the growing sense of student agency in the educational process. Students can express their opinions about their educational experiences in formats ranging from end-of-course evaluation protocols to various social networks, making their voices more critical.

Furthermore, they all agreed with latent trait theory, which holds that the critical dimensions that students differentiate when expressing their opinions about modular learning are formed by the combination of the original items that cannot be directly observed—which underpins student satisfaction. As stated in the literature, the relationship between student satisfaction and the characteristic of a psychological contract is illustrated. Each element is translated into how it might be expressed in the student’s voice, and then a contract feature and an assessment strategy are added. The most significant contributor to the factor pattern, engaged learning, indicates that students expect instructors to play a facilitative role in their teaching. This dimension corresponds to the relational contract, in which the learning environment is stable and well organized, with a clear path to success.

5. Limitations and Future Work

This study focused on the cognitive capacity of modular distance learning towards academic achievements and satisfaction of K-12 students during the COVID-19 pandemic. The sample size in this study was small, at only 252; repeating the study with a larger sample could improve the results. The study was restricted to the province of Occidental Mindoro, and Structural Equation Modeling (SEM) was used to measure all the variables. Thus, this will give an adequate solution to the problem in the study.

The current study underlines that combining TDT and BTT can positively impact the research outcome. The contribution the current study might make to the field of modular distance learning has been discussed and explained. Based on this research model, the nine (9) factors could broadly clarify the students’ adoption of new learning environment platform features. Thus, the current research suggests that more investigation be carried out to examine the relationships underlying the complexity of modular distance learning.

Funding Statement

This research received no external funding.

Author Contributions

Data collection, methodology, writing and editing, K.A.M.; data collection, writing—review and editing, Y.-T.J. and C.S.S. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Open Access

Peer-reviewed

Research Article

Neural Modularity Helps Organisms Evolve to Learn New Skills without Forgetting Old Skills

Affiliation Department of Computer and Information Science, Norwegian University of Science and Technology, Trondheim, Norway

Affiliations Sorbonne Université UPMC Univ Paris 06, UMR 7222, ISIR, Paris, France, CNRS, UMR 7222, ISIR, Paris, France

* E-mail: [email protected]

Affiliation Computer Science Department, University of Wyoming, Laramie, Wyoming, United States of America

- Kai Olav Ellefsen,

- Jean-Baptiste Mouret,

- Published: April 2, 2015

- https://doi.org/10.1371/journal.pcbi.1004128

A long-standing goal in artificial intelligence is creating agents that can learn a variety of different skills for different problems. In the artificial intelligence subfield of neural networks, a barrier to that goal is that when agents learn a new skill they typically do so by losing previously acquired skills, a problem called catastrophic forgetting . That occurs because, to learn the new task, neural learning algorithms change connections that encode previously acquired skills. How networks are organized critically affects their learning dynamics. In this paper, we test whether catastrophic forgetting can be reduced by evolving modular neural networks. Modularity intuitively should reduce learning interference between tasks by separating functionality into physically distinct modules in which learning can be selectively turned on or off. Modularity can further improve learning by having a reinforcement learning module separate from sensory processing modules, allowing learning to happen only in response to a positive or negative reward. In this paper, learning takes place via neuromodulation, which allows agents to selectively change the rate of learning for each neural connection based on environmental stimuli (e.g. to alter learning in specific locations based on the task at hand). To produce modularity, we evolve neural networks with a cost for neural connections. We show that this connection cost technique causes modularity, confirming a previous result, and that such sparsely connected, modular networks have higher overall performance because they learn new skills faster while retaining old skills more and because they have a separate reinforcement learning module. 
Our results suggest (1) that encouraging modularity in neural networks may help us overcome the long-standing barrier of networks that cannot learn new skills without forgetting old ones, and (2) that one benefit of the modularity ubiquitous in the brains of natural animals might be to alleviate the problem of catastrophic forgetting.

Author Summary

A long-standing goal in artificial intelligence (AI) is creating computational brain models (neural networks) that learn what to do in new situations. An obstacle is that agents typically learn new skills only by losing previously acquired skills. Here we test whether such forgetting is reduced by evolving modular neural networks, meaning networks with many distinct subgroups of neurons. Modularity intuitively should help because learning can be selectively turned on only in the module learning the new task. We confirm this hypothesis: modular networks have higher overall performance because they learn new skills faster while retaining old skills more. Our results suggest that one benefit of modularity in natural animal brains may be allowing learning without forgetting.

Citation: Ellefsen KO, Mouret J-B, Clune J (2015) Neural Modularity Helps Organisms Evolve to Learn New Skills without Forgetting Old Skills. PLoS Comput Biol 11(4): e1004128. https://doi.org/10.1371/journal.pcbi.1004128

Editor: Josh C. Bongard, University of Vermont, UNITED STATES

Received: September 17, 2014; Accepted: January 14, 2015; Published: April 2, 2015

Copyright: © 2015 Ellefsen et al. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited

Data Availability: The exact source code and experimental configuration files used in our experiments, along with data from all our experiments, are freely available in the online Dryad scientific archive at http://dx.doi.org/10.5061/dryad.s38n5 .

Funding: KOE and JC have no specific financial support for this work. JBM is supported by an ANR young researchers grant (Creadapt, ANR-12-JS03-0009). URL: http://www.agence-nationale-recherche.fr/en/funding-opportunities/documents/aap-en/generic-call-for-proposals-2015-2015/nc/ . The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

Introduction

A long-standing scientific challenge is to create agents that can learn , meaning they can adapt to novel situations and environments within their lifetime. The world is too complex, dynamic, and unpredictable to program all beneficial strategies ahead of time, which is why robots, like natural animals, need to be able to continuously learn new skills on the fly.

Having robots learn a large set of skills, however, has been an elusive challenge because they need to learn new skills without forgetting previously acquired skills [ 1 – 3 ]. Such forgetting is especially problematic in fields that attempt to create artificial intelligence in brain models called artificial neural networks [ 1 , 4 , 5 ]. To learn new skills, neural network learning algorithms change the weights of neural connections [ 6 – 8 ], but old skills are lost because the weights that encoded old skills are changed to improve performance on new tasks. This problem is known as catastrophic forgetting [ 9 , 10 ] to emphasize that it contrasts with biological animals (including humans), where there is gradual forgetting of old skills as new skills are learned [ 11 ]. While robots and artificially intelligent software agents have the potential to significantly help society [ 12 – 14 ], their benefits will be extremely limited until we can solve the problem of catastrophic forgetting [ 1 , 15 ]. To advance our goal of producing sophisticated, functional artificial intelligence in neural networks and make progress in our long-term quest to create general artificial intelligence with them, we need to develop algorithms that can learn how to handle more than a few different problems. Additionally, the difference between computational brain models and natural brains with respect to catastrophic forgetting limits the usefulness of such models as tools to study neurological pathologies [ 16 ].

In this paper, we investigate the hypothesis that modularity, which is widespread in biological neural networks [ 17 – 21 ], helps reduce catastrophic forgetting in artificial neural networks. Modular networks are those that have many clusters (modules) of highly connected neurons that are only sparsely connected to neurons in other modules [ 19 , 22 , 23 ]. The intuition behind this hypothesis is that modularity could allow learning new skills without forgetting old skills because learning can be selectively turned on only in modules learning a new task ( Fig. 1 , top). Selective regulation of learning occurs in natural brains via neuromodulation [ 24 ], and we incorporate an abstraction of it in our model [ 25 ]. We also investigate a second hypothesis: that modularity can improve skill learning by separating networks into a skill module and a reward module , resulting in more precise control of learning ( Fig. 1 , bottom).

Hypothesis 1: Evolving non-modular networks leads to the forgetting of old skills as new skills are learned. Evolving networks with a pressure to minimize connection costs leads to modular solutions that can retain old skills as new skills are learned. Hypothesis 2: Evolving modular networks makes reward-based learning easier, because it allows a clear separation of reward signals and learned skills. We present evidence for both hypotheses in this paper.

https://doi.org/10.1371/journal.pcbi.1004128.g001

To evolve modular networks, we add another natural phenomenon: costs for neural connections. In nature, there are many costs associated with neural connections (e.g. building them, maintaining them, and housing them) [ 26 – 28 ] and it was recently demonstrated that incorporating a cost for such connections encourages the evolution of modularity in networks [ 23 ]. Our results support the hypothesis that modularity does mitigate catastrophic forgetting: modular networks have higher overall performance because they learn new skills faster while retaining old skills more. Additional research into this area, including investigating the generality of our results, will catalyze research on creating artificial intelligence, improve models of neural learning, and shed light on whether one benefit of modularity in natural animal brains is an improved ability to learn without forgetting.

Catastrophic forgetting.

Catastrophic forgetting (also called catastrophic interference) has been identified as a problem for artificial neural networks (ANNs) for over two decades: When learning multiple tasks in a sequence, previous skills are forgotten rapidly as new information is learned [ 9 , 10 ]. The problem occurs because learning algorithms only focus on solving the current problem and change any connections that will help solve that problem, even if those connections encoded skills appropriate to previously encountered problems [ 9 ].

Many attempts have been made to mitigate catastrophic forgetting. Novelty vectors modify the backpropagation learning algorithm [ 7 ] to limit the number of connections that are changed in the network based on how novel, or unexpected, the input pattern is [ 29 ]. This technique is only applicable for auto-encoder networks (networks whose target output is identical to their input), thus limiting its value as a general solution to catastrophic forgetting [ 1 ]. Orthogonalization techniques mitigate interference between tasks by reducing their representational overlap in input neurons (via manually designed preprocessing) and by encouraging sparse hidden-neuron activations [ 30 – 32 ]. Interleaved learning avoids catastrophic forgetting by training on both old and new data when learning [ 10 ], although this method cannot scale and does not work for realistic environments because in the real world not all challenges are faced concurrently [ 33 , 34 ]. This problem with interleaved learning can be reduced with pseudo rehearsal , wherein input-output associations from old tasks are remembered and rehearsed [ 34 ]. However, scaling remains an issue with pseudo rehearsal because such associations still must be stored and choosing which associations to store is an unsolved problem [ 15 ]. These techniques are all engineered approaches to reducing the problem of catastrophic forgetting and are not proposed as methods by which natural evolution solved the problem of catastrophic forgetting [ 1 , 10 , 29 – 32 , 34 ].

Dual-net architectures , on the other hand, present a biologically plausible [ 35 ] mechanism for limiting catastrophic forgetting [ 33 , 36 ]. The technique, inspired by theories on how human brains separate and subsequently integrate old and new knowledge, partitions early processing and long-term storage into different subnetworks. Similar to interleaved learning techniques, dual-net architectures enable both new knowledge and input history (in the form of current network state) to affect learning.

Although these methods have been suggested for reducing catastrophic forgetting, many questions remain about how animals avoid this problem [ 1 ] and which mechanisms can help avoid it in neural networks [ 1 , 15 ]. In this paper, we study a new hypothesis, which is that modularity can help avoid catastrophic forgetting. Unlike the techniques mentioned so far, our solution does not require human design, but is automatically generated by evolution. Evolving our solution under biologically realistic constraints has the added benefit of suggesting how such a mechanism may have originated in nature.

Evolving neural networks that learn.

One method for setting the connection weights of neural networks is to evolve them, meaning that an evolutionary algorithm specifies each weight, and the weight does not change within an organism’s “lifetime” [ 5 , 37 – 39 ]. Evolutionary algorithms abstract Darwinian evolution: in each generation a population of “organisms” is subjected to selection (for high performance) and then mutation (and possibly crossover) [ 5 , 38 ]. These algorithms have shown impressive performance—often outperforming human engineers [ 40 , 41 ]—on a range of tasks, such as measuring properties in quantum physics [ 12 ], dynamic rocket guidance [ 42 ], and robot locomotion [ 43 , 44 ].

Another approach to determining the weights of neural networks is to initialize them randomly and then allow them to change via a learning algorithm [ 5 , 7 , 45 ]. Some learning algorithms, such as backpropagation [ 6 , 7 ], require a correct output (e.g. action) for each input. Other learning algorithms are considered more biologically plausible in that they involve only information local to each neuron (e.g. Hebb’s rule [ 45 ]) or infrequent reward signals [ 8 , 46 , 47 ].

Evolution and learning can be combined, wherein evolution creates an initial neural network and then a learning algorithm modifies its connections within the lifetime of the organism [ 5 , 37 , 47 – 49 ]. Compared to behaviors defined solely by evolution, evolving agents that learn leads to better solutions in fewer generations [ 48 , 50 , 51 ], improves adaptability to changing environments [ 48 , 49 ], and enables evolving solutions for larger neural networks [ 48 ]. Computational studies of evolving agents that learn have also shed light on open biological questions regarding the interactions between evolution and learning [ 50 , 52 , 53 ].

The idea of using evolutionary computation to reduce catastrophic forgetting has not been widely explored. In one relevant paper, evolution optimized certain parameters of a neural network to mitigate catastrophic forgetting [ 15 ]. Such parameters included the number of hidden (internal) neurons, learning rates, patterns of connectivity, initial weights, and output error tolerances. That paper did show that there is a potential for evolution to generate a stronger resistance to catastrophic forgetting, but did not investigate the role of modularity in helping produce such a resistance.

Neuromodulatory learning in neural networks.

Evolutionary experiments on artificial neural networks typically model only the classic excitatory and inhibitory actions of neurons in the brain [ 5 ]. In addition to these processes, biological brains employ a number of different neuromodulators , which are chemical signals that can locally modify learning [ 24 , 54 , 55 ]. By allowing evolution to design neuromodulatory dynamics, learning rates for particular synapses can be upregulated and downregulated in response to certain inputs from the environment. These additional degrees of freedom greatly increase the possible complexity of reward-based learning strategies. This type of plasticity-controlling neuromodulation has been successfully applied when evolving neural networks that solve reinforcement learning problems [ 25 , 46 ], and a comparison found that evolution was able to solve more complex tasks with neuromodulated Hebbian learning than with Hebbian learning alone [ 25 ]. Our experiments include this form of neuromodulation ( Methods ).
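As a rough illustration of plasticity-controlling neuromodulation, the sketch below scales a plain Hebbian weight change by a modulatory signal, so that a connection stops learning when the signal is zero and can be pushed in either direction by its sign. The update form (learning rate times modulation times pre- and post-synaptic activity), the default `eta`, the weight bound, and the function name are assumptions made for illustration; the rule actually used in the experiments is the one specified in Methods.

```python
def modulated_hebbian_update(weight, pre, post, modulation, eta=0.1, bound=1.0):
    """Return the weight after one neuromodulated Hebbian step (illustrative only).

    weight     -- current connection weight
    pre, post  -- activations of the pre- and post-synaptic neurons
    modulation -- summed neuromodulatory signal reaching the post-synaptic neuron
    eta, bound -- hypothetical learning rate and weight limit (assumptions)
    """
    # Plain Hebbian term (pre * post), gated and signed by the modulatory signal.
    delta = eta * modulation * pre * post
    new_weight = weight + delta
    # Keep the weight inside [-bound, bound].
    return max(-bound, min(bound, new_weight))
```

With `modulation=0.0` the weight is returned unchanged, which is the property that lets learning be switched off selectively for some connections.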

Evolved modularity in neural networks.

Modularity is ubiquitous in biological networks, including neural networks, genetic regulatory networks, and protein interaction networks [ 17 – 21 ]. Why modularity evolved in such networks has been a long-standing area of research [ 18 – 20 , 56 – 59 ]. Researchers have also long studied how to encourage the evolution of modularity in artificial neural networks, usually by creating the conditions that are thought to promote modularity in natural evolution [ 19 , 57 – 61 ]. Several different hypotheses have been suggested for the evolutionary origins of modularity.

A leading hypothesis has been that modularity emerges when evolution occurs in rapidly changing environments that have common subproblems, but different overall problems [ 57 ]. These environments are said to have modularly varying goals . While such environments can promote modularity [ 57 ], the effect only appears for certain frequencies of environmental change [ 23 ] and can fail to appear with different types of networks [ 58 , 60 , 61 ]. Moreover, it is unclear how many natural environments change modularly and how to design training problems for artificial neural networks that have modularly varying goals. Other experiments have shown that modularity may arise from gene duplication and differentiation [ 19 ], or that it may evolve to make networks more robust to noise in the genotype-phenotype mapping [ 58 ] or to reduce interference between network activity patterns [ 59 ].

Recently, a different cause of module evolution was documented: that modularity evolves when there are costs for connections in networks [ 23 ]. This explanation for the evolutionary origins of modularity is biologically plausible because biological networks have connection costs (e.g. to build connections, maintain them, and house them) and there is evidence that natural selection optimally arranges neurons to minimize these connection costs [ 26 , 27 ]. Moreover, the modularity-inducing effects of adding a connection cost were shown to occur in a wide range of environments, suggesting that adding a selection pressure to reduce connection costs is a robust, general way to encourage modularity [ 23 ]. We apply this technique in our paper because of its efficacy and because it may be a main reason that modularity evolves in natural networks.

Experimental Setup

To test our hypotheses, we set up an environment in which there is a potential for catastrophic forgetting and where individuals able to avoid this forgetting receive a higher evolutionary fitness , meaning they are more likely to reproduce. The environment is an abstraction of a world in which an organism performs a daily routine of trying to eat nutritious food while avoiding eating poisonous food. Every day the organism observes every food item one time: half of the food items are nutritious and half are poisonous. To achieve maximum fitness, the individual needs to eat all the nutritious items and avoid eating the poisonous ones. After a number of days, the season changes abruptly from a summer season to a winter season. In the new season, there is a new set of food sources, half of them nutritious and half poisonous, and the organism has to learn which is which. After this winter season, the environment changes back to the summer season and the food items and their nutritious/poisonous statuses are the same as in the previous summer. The environment switches back and forth between these two seasons multiple times in the organism’s lifetime. Individuals that remember each season’s food associations perform better by avoiding poisonous items without having to try them first.

We consider each pair of a summer and winter season a year . Every season lasts for five days , and in each day an individual encounters all four food items for that season in a random order. A lifetime is three years ( Fig. 2 ). To ensure that individuals must learn associations within their lifetimes instead of having genetically hardcoded associations [ 47 , 62 ], in each lifetime two food items are randomly assigned as nutritious and the other two food items are assigned as poisonous ( Fig. 3 ). To select for general learners rather than individuals that by chance do well in a specific environment, performance is averaged over four random environments (lifetimes) for each individual during evolution, and over 80 random environments (lifetimes) when assessing the performance of final, end-of-experiment individuals ( Methods ).
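The lifetime structure described above can be sketched as a small schedule generator. This is a minimal sketch using the numbers given in the text (three years, two seasons per year, five days per season, four food items per season, two of them nutritious); the function name, the tuple layout, and the choice to start each year with summer are assumptions for illustration, not the authors' implementation.

```python
import random

YEARS, DAYS_PER_SEASON, ITEMS_PER_SEASON = 3, 5, 4
SEASONS = ("summer", "winter")  # assumed order: each year starts with summer


def make_lifetime(rng):
    """Build one random lifetime: food labels plus the full encounter schedule."""
    # Labels are drawn once per lifetime, so they stay the same every time
    # a season returns; half of each season's items are nutritious.
    nutritious = {}
    for season in SEASONS:
        good = set(rng.sample(range(ITEMS_PER_SEASON), ITEMS_PER_SEASON // 2))
        for item in range(ITEMS_PER_SEASON):
            nutritious[(season, item)] = item in good

    # Every day, all items of the current season are seen once in random order.
    schedule = []
    for year in range(YEARS):
        for season in SEASONS:
            for day in range(DAYS_PER_SEASON):
                order = list(range(ITEMS_PER_SEASON))
                rng.shuffle(order)
                schedule.extend((year, season, day, item) for item in order)
    return nutritious, schedule
```

Calling `make_lifetime(random.Random(seed))` with several seeds gives the independent random environments over which performance is averaged.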

A lifetime lasts 3 years. Each year has 2 seasons: winter and summer. Each season consists of 5 days. In each day, each individual sees all food items available in that season (only two are shown) in a random order.

https://doi.org/10.1371/journal.pcbi.1004128.g002

To ensure that agents learn associations within their lifetimes instead of genetically hardcoding associations, whether each food item is nutritious or poisonous is randomized each generation. There are four food items per season (two are depicted).

https://doi.org/10.1371/journal.pcbi.1004128.g003

This environment selects for agents that can avoid forgetting old information as they learn new, unrelated information. For instance, if an agent is able to avoid forgetting the summer associations during the winter season, it will immediately perform well when summer returns, thus outcompeting agents that have to relearn summer associations. Agents that forget, especially catastrophically, are therefore at a selective disadvantage.

Our main results were found to be robust to variations in several of our experimental parameters, including changes to the number of years in the organism’s lifetime, the number of different seasons per year, the number of different edible items, and different representations of the inputs (the presence of items being represented either by a single input or distributed across all inputs for a season). We also observed that our results are robust to lengthening the number of days per season: networks in the experimental treatment (called “P&CC” for reasons described below) significantly outperform the networks in the control (“PA”) treatment ( p < 0.05) even when doubling or quadrupling the number of days per season, although the size of the difference diminished in longer seasons.

Neural network model.

The model of the organism’s brain is a neural network with 10 input neurons (Supp. S1 Fig ). From left to right, inputs 1-4 and 5-8 encode which summer and winter food item is present, respectively. During summer, the winter inputs are never active and vice versa. Catastrophic forgetting may appear in these networks because a non-modular neural network is likely to use the same hidden neurons for both seasons ( Fig. 1 , top). We segmented the summer and winter items into separate input neurons to abstract a neural network responsible for an intermediate phase of cognition, where early visual processing and object recognition have already occurred, but before decisions have been made about what to do in response to the recognized visual stimuli. Such disentangled representations of objects have been identified in animal brains [ 63 ] and are common at intermediate layers of neural network models [ 64 ]. The final two inputs are for reinforcement learning: inputs 9 and 10 are reward and punishment signals that fire when a nutritious or poisonous food item is eaten, respectively. The network has a single output that determines if the agent will eat (output > 0) or ignore (output ≤ 0) the presented food item.
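The input layout just described can be written down as a small encoding helper. This is a sketch under the assumption that each food item activates exactly one input with value 1.0 (the single-input representation) and that reward and punishment are also binary; the function names and activation values are hypothetical.

```python
def encode_inputs(season, item, reward=False, punishment=False):
    """Return the 10-element input vector; `item` is 0-3 within its season."""
    if season not in ("summer", "winter"):
        raise ValueError("season must be 'summer' or 'winter'")
    if not 0 <= item < 4:
        raise ValueError("item must be in 0..3")
    inputs = [0.0] * 10
    # Inputs 1-4 (indices 0-3) are summer items, inputs 5-8 (indices 4-7) winter items.
    offset = 0 if season == "summer" else 4
    inputs[offset + item] = 1.0
    # Inputs 9 and 10 (indices 8 and 9) carry the reward and punishment signals.
    inputs[8] = 1.0 if reward else 0.0
    inputs[9] = 1.0 if punishment else 0.0
    return inputs


def decide(output):
    """Map the single network output to an action: eat if output > 0, else ignore."""
    return "eat" if output > 0 else "ignore"
```

Because summer and winter items never share an input, any interference between seasons has to arise in the hidden neurons, which is where modularity is expected to matter.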

Associations can be learned by properly connecting reward signals through neuromodulatory neurons to non-modulatory neurons that determine which actions to take in response to food items ( Methods ). Evolution determines the neural wiring that produces learning dynamics, as described next.

Evolutionary algorithm.

Evolution begins with a randomly generated population of neural networks. The performance of each network is evaluated as described above. More fit networks tend to have more offspring, with fitness being determined differently in each treatment, as explained below. Offspring are generated by copying a parent genome and mutating it by adding or removing connections, changing the strength of connections, and switching neurons from being modulatory to non-modulatory or vice versa. The process repeats for 20,000 generations.

To evolve modular neural networks, we followed a recently demonstrated procedure where modularity evolves as a byproduct of a selection pressure to reduce neural connectivity [ 23 ]. We compared a treatment where the fitness of individuals was based on performance alone (PA) to one based on both maximizing performance and minimizing connection costs (P&CC). Specifically, evolution proceeds according to a multi-objective evolutionary algorithm with one (PA) or two (P&CC) primary objectives. A network’s connection cost equals its number of connections, following [ 23 ]. More details on the evolutionary algorithm can be found in Methods.
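To make the two-objective comparison concrete, the sketch below treats each network as a (performance, connection cost) pair and applies ordinary Pareto dominance, with connection cost taken as the number of connections as stated above. It is a simplification: the helper names are hypothetical, and the full multi-objective algorithm (see Methods) may weight or combine the objectives differently than this plain dominance test.

```python
def connection_cost(connections):
    """Connection cost of a network: its number of connections."""
    return len(connections)


def dominates(a, b):
    """True if a = (performance, cost) Pareto-dominates b.

    Higher performance is better; lower connection cost is better.
    """
    no_worse = a[0] >= b[0] and a[1] <= b[1]
    strictly_better = a[0] > b[0] or a[1] < b[1]
    return no_worse and strictly_better


def pareto_front(population):
    """Return the individuals that no other individual dominates."""
    return [p for p in population if not any(dominates(q, p) for q in population)]
```

Under performance alone (PA), only the first element of each pair would matter; adding the cost objective (P&CC) keeps sparse networks on the front even when a denser network performs equally well.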

A Connection Cost Increases Performance and Modularity

The addition of a cost for connections (the P&CC treatment) leads to a rapid, sustained, and statistically significant fitness advantage versus not having a connection cost (the PA treatment) ( Fig. 4 ). In addition to overall performance across generations, we looked at the day-to-day performance of final, evolved individuals ( Fig. 5 ). P&CC networks learn associations faster in their first summer and winter, and maintain higher performance over multiple years (pairs of seasons).

Modularity is measured via a widely used approximation of the standard Q modularity score [ 23 , 57 , 65 , 67 ] ( Methods ). For each treatment, the median from 100 independent evolution experiments is shown ± 95% bootstrapped confidence intervals of the median ( Methods ). Asterisks below each plot indicate statistically significant differences at p < 0.01 according to the Mann-Whitney U test, which is the default statistical test throughout this paper unless otherwise specified.

https://doi.org/10.1371/journal.pcbi.1004128.g004
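A bootstrapped confidence interval of the median, as reported in the figures, can be approximated with the percentile method sketched here. The number of resamples, the fixed seed, and the function name are assumptions; the exact resampling procedure used for the paper is the one given in Methods.

```python
import random
import statistics


def bootstrap_median_ci(values, n_resamples=2000, confidence=0.95, seed=0):
    """Return (median, lower, upper) using a percentile bootstrap of the median."""
    rng = random.Random(seed)
    n = len(values)
    # Resample with replacement and record the median of each resample.
    medians = sorted(
        statistics.median(rng.choices(values, k=n)) for _ in range(n_resamples)
    )
    tail = (1.0 - confidence) / 2.0
    lower = medians[int(tail * n_resamples)]
    upper = medians[min(n_resamples - 1, int((1.0 - tail) * n_resamples))]
    return statistics.median(values), lower, upper
```

Applied to the per-experiment performance values of one treatment, this yields the kind of median and interval plotted for each generation.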

Plotted is median performance per day (± 95% bootstrapped confidence intervals of the median) measured across 100 organisms (the highest-performing organism from each experiment per treatment) tested in 80 new environments (lifetimes) with random associations ( Methods ). P&CC networks significantly outperform PA networks on every day (asterisks). Eating no items or all items produces a score of 0.5; eating all and only nutritious food items achieves the maximum score of 1.0.

https://doi.org/10.1371/journal.pcbi.1004128.g005
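One scoring rule that reproduces the reference values in the caption (0.5 for eating nothing or everything, 1.0 for eating all and only nutritious items) is the fraction of correct eat/ignore decisions. This is an assumed rule chosen to match those values when half the items are nutritious; the fitness function actually used is defined in Methods.

```python
def day_score(decisions):
    """Fraction of correct choices in one day.

    decisions -- list of (ate, is_nutritious) boolean pairs, one per food item.
    A choice is correct when a nutritious item is eaten or a poisonous one ignored.
    """
    if not decisions:
        raise ValueError("decisions must not be empty")
    correct = sum(1 for ate, nutritious in decisions if ate == nutritious)
    return correct / len(decisions)
```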

The presence of a connection cost also significantly increases network modularity ( Fig. 4 ), confirming the finding of Clune et al. [ 23 ] in this different context of networks with within-life learning. Networks evolved in the P&CC treatment tend to create a separate reinforcement learning module that contains the reward and punishment inputs and most or all neuromodulatory neurons ( Fig. 6 ). One of our hypotheses ( Fig. 1 , bottom) suggested that such a separation could improve the efficiency of learning, by regulating learning (via neuromodulatory neurons) in response to whether the network performed a correct or incorrect action, and applying that learning to downstream neurons that determine which action should be taken in response to input stimuli.

Dark blue nodes are inputs that encode which type of food has been encountered. Light blue nodes indicate internal, non-modulatory neurons. Red nodes are reward or punishment inputs that indicate if a nutritious or poisonous item has been eaten. Orange neurons are neuromodulatory neurons that regulate learning. P&CC networks tend to separate the reward/punishment inputs and neuromodulatory neurons into a separate module that applies learning to downstream neurons that determine which actions to take. For each treatment, the highest-performing network from each of the nine highest-performing evolution experiments is shown (all are shown in the Supporting Information). In each panel, the left number reports performance and the right number reports modularity. We follow the convention from [ 23 ] of placing nodes in the way that minimizes the total connection length.

https://doi.org/10.1371/journal.pcbi.1004128.g006

To quantify whether learning is separated into its own module, we adopted a technique from [ 23 ], which splits a network into the most modular decomposition according to the modularity Q score [ 65 ]. We then measured the frequency with which the reinforcement inputs (reward/punishment signals) were placed into a different module from the remaining food-item inputs. This measure reveals that P&CC networks have a separate module for learning in 31% of evolutionary trials, whereas only 4% of the PA trials do, which is a significant difference ( p = 2.71 × 10⁻⁷ ), in agreement with our hypothesis ( Fig. 1 , bottom). Analyses also reveal that the networks from both treatments that have a separate module for learning perform significantly better than networks without this decomposition (median performance of modular networks in 80 randomly generated environments ( Methods ): 0.87 [95% CI: 0.83, 0.88] vs. non-modular networks: 0.80 [0.71, 0.84], p = 0.02). Even though only 31% of the P&CC networks are deemed modular in this particular way, the remaining P&CC networks are still significantly more modular on average than PA networks (median Q scores are 0.25 [0.23, 0.28] and 0.2 [0.19, 0.22] respectively, p = 4.37 × 10⁻⁶ ), suggesting additional ways in which modularity improves the performance of P&CC networks.
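For readers unfamiliar with the Q score, the sketch below computes it for a given split of a network into modules, using the standard undirected Newman formulation: for each module, the fraction of edges inside it minus the fraction expected from its share of edge endpoints. Treating connections as undirected and taking the partition as given are simplifying assumptions; the analysis in the paper additionally searches for the most modular decomposition and follows the variant described in Methods.

```python
def modularity_q(edges, community):
    """Newman modularity Q of a partition of an undirected graph.

    edges     -- list of (u, v) node pairs, each edge listed once
    community -- dict mapping every node to its module label
    """
    m = len(edges)
    if m == 0:
        raise ValueError("graph has no edges")
    internal = {}    # number of edges with both ends inside each module
    degree_sum = {}  # number of edge endpoints falling in each module
    for u, v in edges:
        cu, cv = community[u], community[v]
        degree_sum[cu] = degree_sum.get(cu, 0) + 1
        degree_sum[cv] = degree_sum.get(cv, 0) + 1
        if cu == cv:
            internal[cu] = internal.get(cu, 0) + 1
    # Q = sum over modules of (internal edge fraction - expected fraction).
    return sum(
        internal.get(c, 0) / m - (d / (2 * m)) ** 2 for c, d in degree_sum.items()
    )
```

Two disconnected triangles split into their natural modules give Q = 0.5, while placing all six nodes in a single module gives Q = 0.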

After observing that a connection cost significantly improves performance and modularity, we analyzed whether this increased performance can be explained by the increased modularity, or whether it may better correlate with network sparsity , since P&CC networks also have fewer connections (P&CC median number of connections is 35.5 [95% CI: 31.0, 40.0] vs. PA 82.0 [74.0, 97.1], p = 7.97 × 10⁻¹⁹ ). Both sparsity and modularity are correlated with the performance of networks ( Fig. 7 ). Sparsity also correlates with modularity ( p = 5.15 × 10⁻⁴⁰ as calculated by a t-test of the hypothesis that the correlation is zero), as previously shown [ 23 , 66 ]. Our interpretation of the data is that the pressure for both functionality and sparsity causes modularity, which in turn helps evolve learners that are more resistant to catastrophic forgetting. However, it cannot be ruled out that sparsity itself mitigates catastrophic forgetting [ 1 ], or that the general learning abilities of the network have been improved due to the separation into a skill module and a learning module. Either way, the data support our hypothesis that a connection cost promotes the evolution of sparsity, modularity, and increased performance on learning tasks.
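The t-test of the hypothesis that a correlation is zero can be sketched as follows, assuming a Pearson correlation coefficient (the text does not name the coefficient, so this is an assumption). The statistic is t = r · sqrt((n − 2) / (1 − r²)) with n − 2 degrees of freedom; converting it to a p-value requires the t-distribution, which is left out here to keep the example within the standard library.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def correlation_t_statistic(r, n):
    """t statistic (n - 2 degrees of freedom) for H0: the correlation is zero.

    Undefined for |r| = 1, where the denominator vanishes.
    """
    return r * math.sqrt((n - 2) / (1.0 - r * r))
```

For a fixed sample size, a larger |r| gives a larger |t| and therefore a smaller p-value.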

Black dots represent the highest-performing network from each of the 100 experiments from both the PA and P&CC treatments. Both the sparsity (p = 1.08 × 10⁻¹⁶) and modularity (p = 1.19 × 10⁻⁵) of networks correlate significantly with their performance. Performance was measured in 80 randomly generated environments (Methods). Significance was calculated by a t-test of the hypothesis that the correlation is zero. Notice that many of the lowest-performing networks are close to the maximum of 150 connections.

https://doi.org/10.1371/journal.pcbi.1004128.g007

Modular P&CC Networks Learn More and Forget Less

We next investigated whether the improved performance of P&CC individuals is because they forget less. Measuring the percent of information a network retains can be misleading, because networks that never learn anything are reported as never forgetting anything. In many PA experiments, networks did not learn in one or both seasons, which looks like perfect retention, but for the wrong reason: they do not forget anything because they never knew anything to begin with. To prevent such pathological, non-learning networks from clouding this analysis, we compared only the 50 highest-performing experiments from each treatment, instead of all 100 experiments. For both treatments, we then measured retention and forgetting in the highest-performing network from each of these 50 experiments.

To illuminate how old associations are forgotten and new ones are formed, we performed an experiment from studies of association forgetting in humans [11]: already evolved individuals learned one task and then began training on a new task, during which we measured how their performance on the original task degraded. Specifically, we allowed individuals to learn for 50 winter days (to allow even poor learners time to learn the winter associations) before exposing them to 20 summer days, during which we measured how rapidly they forgot winter associations and learned summer associations (Methods). Notice that individuals were evolved in seasons lasting only 5 days, but we measure learning and forgetting for 20 days in this analysis to study the longer-term consequences of the evolved learning architectures. Thus, the key result relevant to catastrophic forgetting is what occurs during the first five days. We included the remaining 15 days to show that the differences in performance persist if the seasons are extended.

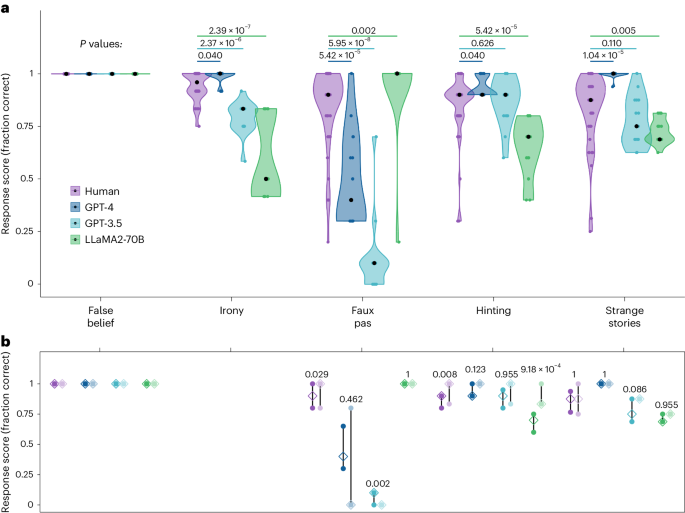

P&CC networks retain higher performance on the original task when learning a new task (Fig. 8, left). They also learn the new task better (Fig. 8, center). The combined effect significantly improves performance (Fig. 8, right), meaning P&CC networks are significantly better at learning associations in a new season while retaining associations from a previous one.

P&CC networks, which are more modular, are better at retaining associations learned on a previous task (winter associations) while learning a new task (summer associations), better at learning new (summer) associations, and significantly better when measuring performance on both the associations for the original task (winter) and the new task (summer). Note that networks were evolved with five days per season, so the results during those first five days are the most informative regarding the evolutionary mitigation of catastrophic forgetting: we show additional days to reveal longer-term consequences of the evolved architectures. Solid lines show median performance and shaded areas indicate 95% bootstrapped confidence intervals of the median. The retention scores (left panel) are normalized relative to the original performance before training on the new task (an unnormalized version is provided as Supp. S6 Fig). During all performance measurements, learning was disabled to prevent such measurements from changing an individual’s known associations (Methods).

https://doi.org/10.1371/journal.pcbi.1004128.g008
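
The medians in this section are reported with 95% bootstrapped confidence intervals. A minimal sketch of one common way to obtain such an interval, the percentile bootstrap, is given here. The article does not state which bootstrap variant, resample count, or random seed was used, so those choices and the function name are assumptions.

```python
import random
import statistics


def bootstrap_median_ci(values, n_resamples=5000, confidence=0.95, seed=0):
    """Percentile-bootstrap confidence interval for the median.

    Returns (sample_median, lower_bound, upper_bound).
    """
    rng = random.Random(seed)
    n = len(values)
    # Resample with replacement and record the median of each resample.
    medians = sorted(
        statistics.median(rng.choices(values, k=n))
        for _ in range(n_resamples)
    )
    alpha = (1.0 - confidence) / 2.0
    lower = medians[int(alpha * n_resamples)]
    upper = medians[min(n_resamples - 1, int((1.0 - alpha) * n_resamples))]
    return statistics.median(values), lower, upper


# Hypothetical per-experiment performance scores, not data from the study.
scores = [0.78, 0.80, 0.81, 0.79, 0.94, 0.92, 0.90, 0.85]
print(bootstrap_median_ci(scores))
```

Because the interval is built from resampled medians, it can be asymmetric around the sample median, which matches the form of intervals such as 0.87 [0.83, 0.88] reported in this section.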

To further understand whether the increased performance of the P&CC individuals is because they learn more, retain more, or both, we counted the number of retained and learned associations for individuals in 80 randomly generated environments (lifetimes). If we regard performance in each season as a skill, this experiment measures whether the individuals can retain a previously learned skill (perfect summer performance) after learning a new skill (perfect winter performance). We tested the knowledge of the individuals in the following way: at the end of each season, we counted the number of sets of associations (summer or winter) that individuals knew perfectly, which required them to know the correct response for each food item in that season. We formulated four metrics that quantify how well individuals knew and retained associations.

The first metric (“Perfect”) measures the number of seasons an individual knew both sets of associations (summer and winter). Doing well on this metric indicates reduced catastrophic forgetting because it requires retaining an old skill even after a new one is learned. P&CC individuals learned significantly more Perfect associations (Fig. 9, Perfect).

P&CC individuals learn significantly more associations, whether counting only when the associations for both seasons are known (“Perfect” knowledge) or separately counting knowledge of either season’s association (total “Known”). P&CC networks also forget fewer associations, defined as associations known in one season and then forgotten in the next, which is significant when looking at the percent of known associations forgotten (“% Forgotten”). P&CC networks also retain significantly more associations, meaning they did not forget one season’s association when learning the next season’s association. See text for more information about the “Perfect”, “Known”, “Forgotten”, and “Retained” metrics. During all performance measurements, learning was disabled to prevent such measurements from changing an individual’s known associations (Methods). Bars show median performance, and whiskers show the 95% bootstrapped confidence interval of the median. Two asterisks indicate p < 0.01, three asterisks indicate p < 0.001.

https://doi.org/10.1371/journal.pcbi.1004128.g009

The second metric (“Known”) is the sum of the number of seasons that summer associations were known and the number of seasons that winter associations were known. In other words, it counts knowing either season in a year and doubly counts knowing both. P&CC individuals learned significantly more of these Known associations (Fig. 9, Known).

The third metric counts the number of seasons in which an association was “Forgotten”, meaning an association was completely known in one season, but was not in the following season. There is no significant difference between treatments on this metric when measured in absolute numbers (Fig. 9, Forgotten). However, measured as a percentage of Known items, P&CC individuals forgot significantly fewer associations (Fig. 9, % Forgotten). The modular P&CC networks thus learned more and forgot less, leading to a significantly lower percentage of forgotten associations.

The final metric counts the number of seasons in which an association was “Retained”, meaning an association was completely known in one season and in the following season. P&CC individuals retained significantly more than PA individuals, both in absolute numbers (Fig. 9, Retained) and as a percentage of the total number of known items (Fig. 9, % Retained).

In each season, an agent can know two associations (summer and winter), leading to a maximum score of 6 × 80 × 2 = 960 for the Known metric (6 seasons per lifetime (Fig. 2), 80 random environments). The agent can retain or forget two associations each season except the first, making the maximum score for these metrics 5 × 80 × 2 = 800. However, the agent can only score one Perfect association (meaning both summer and winter are known) each season, leading to a maximum score of 6 × 80 = 480 for that metric.
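
The four metrics and their maximum scores can be made concrete with a short sketch. It assumes that a lifetime is recorded as six (knows_summer, knows_winter) pairs, one per season, measured at the end of each season; that data layout and the function name are assumptions for illustration only.

```python
def score_lifetime(seasons):
    """Count Perfect, Known, Forgotten and Retained for one lifetime.

    seasons: list of (knows_summer, knows_winter) booleans, one entry
    per season, in chronological order.
    """
    # Perfect: seasons in which both sets of associations were known.
    perfect = sum(1 for summer, winter in seasons if summer and winter)
    # Known: each known set counts once, so knowing both counts twice.
    known = sum(int(summer) + int(winter) for summer, winter in seasons)
    forgotten = 0
    retained = 0
    # Forgotten and Retained compare each season with the previous one,
    # so the first season contributes nothing to either count.
    for previous, current in zip(seasons, seasons[1:]):
        for before, after in zip(previous, current):
            if before and after:
                retained += 1
            elif before and not after:
                forgotten += 1
    return {"perfect": perfect, "known": known,
            "forgotten": forgotten, "retained": retained}


# An individual that always knows everything reaches the per-lifetime maxima.
ideal = score_lifetime([(True, True)] * 6)
lifetimes = 80
print(ideal["known"] * lifetimes)     # 960
print(ideal["retained"] * lifetimes)  # 800
print(ideal["perfect"] * lifetimes)   # 480
```

Dividing the Forgotten or Retained count by the Known count gives percentage versions of those metrics; the exact denominator used for the reported percentages is not spelled out in this section, so that step is left out of the sketch.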

In summary, this analysis reveals that a connection cost caused evolution to find individuals that are better at gaining new knowledge without forgetting old knowledge. In other words, adding a connection cost mitigated catastrophic forgetting. That, in turn, enabled an increase in the total number of associations P&CC individuals learned in their lifetimes.

Removing the Ability of Evolution to Improve Retention

To further test whether the improved performance in the P&CC treatment results from it mitigating catastrophic forgetting, we conducted experiments in a regime where retaining skills between tasks is impossible. Under such a regime, if the P&CC treatment does not outperform the PA treatment, that is evidence for our hypothesis that P&CC networks outperform PA networks in the normal regime because they retain previously learned skills better when learning new skills.

To create a regime similar to the original problem, but without the potential to improve performance by minimizing catastrophic forgetting, we forced individuals to forget everything they learned at the end of every season. This forced forgetting was implemented by resetting all neuromodulated weights in the network to random values at each season change. The experimental setup was otherwise identical to the main experiment. In this treatment, evolution cannot evolve individuals to handle forgetting better, and can focus only on evolving good learning abilities for each season. With forced forgetting, the P&CC treatment no longer significantly outperforms the PA treatment (Fig. 10).
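
The forced-forgetting manipulation can be pictured with the following sketch, which re-randomizes only the neuromodulated (plastic) weights and leaves fixed weights untouched. The weight range of [-1, 1], the uniform distribution, the flat list representation, and the function name are all assumptions; this section does not specify them.

```python
import random


def reset_plastic_weights(weights, is_neuromodulated, rng, low=-1.0, high=1.0):
    """Return a copy of `weights` with every neuromodulated weight re-drawn.

    weights: list of connection weights.
    is_neuromodulated: list of booleans, True where learning can change
        the weight during the lifetime.
    rng: a random.Random instance.
    low, high: assumed bounds for the random values.
    """
    return [
        rng.uniform(low, high) if plastic else weight
        for weight, plastic in zip(weights, is_neuromodulated)
    ]


# Hypothetical network with one fixed and two plastic connections.
rng = random.Random(42)
weights = [0.5, -0.3, 0.9]
plastic_mask = [False, True, True]
for season in range(6):
    # ... the individual would learn during the season here ...
    weights = reset_plastic_weights(weights, plastic_mask, rng)
print(weights[0])  # the fixed weight is still 0.5
```

Because everything learned within a season is stored in the plastic weights, wiping them at each season change removes any possible benefit from retention while leaving within-season learning intact.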

With forced forgetting, P&CC does not significantly outperform PA: P&CC 0.91 [95% CI: 0.91, 0.91] vs. PA 0.91 [0.90, 0.91], p > 0.05. In the default treatment where remembering is possible, P&CC significantly outperforms PA: P&CC 0.94 [0.92, 0.94] vs. PA 0.78 [0.78, 0.81], p = 8.08 × 10⁻⁶.

https://doi.org/10.1371/journal.pcbi.1004128.g010

This result indicates that the connection cost specifically helps evolution in optimizing the parts of learning related to resistance against forgetting old associations while learning new ones.

Interestingly, without the connection cost (the PA treatment), forced forgetting significantly improves performance (Fig. 10, p = 2.5 × 10⁻⁵ via bootstrap sampling with randomization [68]). Forcing forgetting likely removes some of the interference between learning the two separate tasks. With the connection cost, however, forced forgetting leads to worse results, indicating that the modular networks in the P&CC treatment have found solutions that benefit from remembering what they have learned in the past, and thus are worse off when not allowed to remember that information.

The Importance of Neuromodulation

We hypothesized that a key factor that causes modularity to help minimize catastrophic forgetting is neuromodulation, which is the ability for learning to be selectively turned on and off in specific neural connections in specific situations. To test whether neuromodulation is essential to evolving a resistance to forgetting in our experiments, we evolved neural networks with and without neuromodulation. When we evolve without neuromodulation, the Hebbian learning dynamics of each connection are constant throughout the lifetime of the organism: this is accomplished by disallowing neuromodulatory neurons from being included in the networks (Methods).
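
To make the contrast concrete, here is a generic sketch of a Hebbian update gated by a modulatory signal. It is not the update rule from the Methods, which is not reproduced in this section; the multiplicative form, the learning rate, the weight bounds, and the function name are assumptions chosen for illustration.

```python
def hebbian_update(weight, pre, post, modulation=1.0,
                   learning_rate=0.1, w_min=-1.0, w_max=1.0):
    """One gated Hebbian step for a single connection.

    pre, post: activities of the presynaptic and postsynaptic neurons.
    modulation: gating signal; 0 switches learning off for this step,
        and a negative value reverses the direction of the change.
        Leaving it at a constant 1.0 gives purely Hebbian learning.
    """
    new_weight = weight + learning_rate * modulation * pre * post
    # Keep the weight inside the assumed bounds.
    return max(w_min, min(w_max, new_weight))


# With modulation at zero the connection is protected from change.
print(hebbian_update(0.2, pre=1.0, post=1.0, modulation=0.0))  # 0.2
# Purely Hebbian learning changes the weight whenever both neurons fire.
print(hebbian_update(0.2, pre=1.0, post=1.0))
```

In this picture, a network without neuromodulatory neurons has no way to set `modulation` to zero for connections that store an old skill, so every co-activation keeps rewriting them.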

Comparing the performance of networks evolved with and without neuromodulation demonstrates that with purely Hebbian learning (i.e. without neuromodulation) evolution never produces a network that performs even moderately well (Fig. 11). This finding is in line with previous work demonstrating that neuromodulation allows evolution to solve more complex reinforcement learning problems than purely Hebbian learning [25]. The non-modulatory P&CC networks perform slightly better than non-modulatory PA networks; the difference is significant but small (P&CC performance 0.72 [95% CI: 0.71, 0.72] vs. PA 0.70 [0.69, 0.71], p = 0.003). Because networks in neither treatment learn much, studying whether they suffer from catastrophic forgetting is uninformative. These results reveal that neuromodulation is essential to perform well in these environments, and its presence is effectively a prerequisite for testing the hypothesis that modularity mitigates catastrophic forgetting. Moreover, neuromodulation is ubiquitous in animal brains, justifying its inclusion in our default model. One can think of neuromodulation, like the presence of neurons, as a necessary, but not sufficient, ingredient for learning without forgetting. Including it in the experimental backdrop allows us to isolate whether modularity further improves learning and helps mitigate catastrophic forgetting.

Connection costs and neuromodulatory dynamics interact to evolve forgetting-resistant solutions. Without neuromodulation, neither treatment performs well, suggesting that neuromodulation is a prerequisite for solving these types of problems, a result that is consistent with previous research showing that neuromodulation is required to solve challenging learning tasks [25]. However, even in the non-neuromodulatory (pure Hebbian) experiments, P&CC is more modular (0.33 [95% CI: 0.33, 0.33] vs. PA 0.26 [0.22, 0.31], p = 1.16 × 10⁻¹²) and performs significantly better (0.72 [95% CI: 0.71, 0.72] vs. PA 0.70 [0.69, 0.71], p = 0.003). That said, because both treatments perform poorly without neuromodulation, and because natural animal brains contain neuromodulated learning [28], it is most interesting to see the additional impact of modularity against the backdrop of neuromodulation. Against that backdrop, neural modularity improves performance to a much larger degree (P&CC 0.94 [0.92, 0.94] vs. PA 0.78 [0.78, 0.81], p = 8.08 × 10⁻⁶), in part by reducing catastrophic forgetting (see text).

https://doi.org/10.1371/journal.pcbi.1004128.g011