Thank you for visiting nature.com. You are using a browser version with limited support for CSS. To obtain the best experience, we recommend you use a more up to date browser (or turn off compatibility mode in Internet Explorer). In the meantime, to ensure continued support, we are displaying the site without styles and JavaScript.

- View all journals

- Explore content

- About the journal

- Publish with us

- Sign up for alerts

- Technology Feature

- Published: 11 January 2022

Method of the Year: protein structure prediction

- Vivien Marx 1

Nature Methods volume 19 , pages 5–10 ( 2022 ) Cite this article

38k Accesses

33 Citations

41 Altmetric

Metrics details

- Machine learning

- Protein folding

- Structure determination

Nature Methods has named protein structure prediction the Method of the Year 2021.

You have full access to this article via your institution.

If the Earth moves for you, among other reasons, the causes can be geologic or romantic. In science, in the context of predicting protein structure, you might have felt the ground tremble in late 2020 as you perused the results of the 14th Critical Assessment of Protein Structure Prediction (CASP). In this competition, scientists regularly test the prowess of their methods that computationally predict the intricate twirly-curly three-dimensional (3D) structure of a protein from a sequence of amino acids.

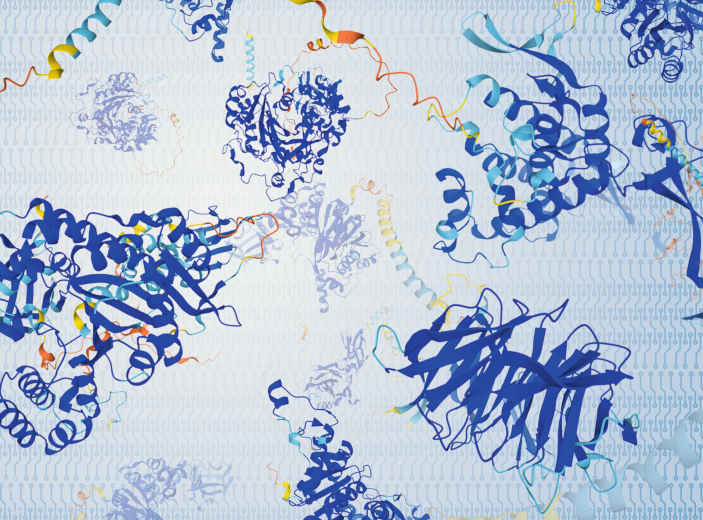

A pleasant frisson may have set in more recently as you browsed the new and rapidly growing AlphaFold Protein Structure Database or perused papers 1 , 2 , 3 about a method called AlphaFold and its application to the entire human proteome, or when you dug into the code that drives this inference engine, with its neural network architecture that yields the 3D structure of proteins from a given amino acid sequence. The team behind AlphaFold is DeepMind Technologies, launched as an AI startup in 2010 by Demis Hassabis, Shane Legg and Mustafa Suleyman and now part of Alphabet after being acquired by Google in 2014. DeepMind has presented AlphaFold1 4 and AlphaFold2 and, more recently, AlphaFold-Multimer 5 for predicting the structures of known protein complexes.

AlphaFold has received much attention, but there are many other recent tools from academic labs, such as RoseTTAFold 6 , a method with a ‘three-track’ network architecture developed in the lab of David Baker and colleagues at the University of Washington along with academic teams around the world. It can be used to, for example, predict protein structures and generate models of protein-protein complexes, too. In their paper, the authors note that they had been “intrigued” by the DeepMind results and sought to increase the accuracy of protein structure prediction as they worked on their architecture.

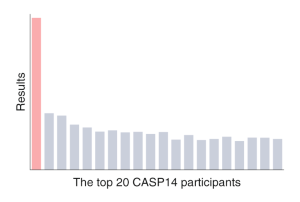

At CASP14 in 2020, AlphaFold2 blew away its competitors. The difference between the DeepMind team results and those of the group in second place “was a bit of a shock,” says University College London researcher David Jones. “I’m still processing that a bit, really.” Only some months later, when DeepMind gave a glimpse of its method and shared the code, were scientists able to begin looking under the hood. No new information was used to transition AlphaFold1 to AlphaFold2; there was no “clever trick,” says Jones. The team used what academics had been doing for years but applied it in a more principled way, he says.

In the lead-up to the 2018 CASP13 competition, which the DeepMind team won quite handily with AlphaFold1, Jones had consulted for DeepMind. Especially after machine-learning-based methods were introduced in 2016, CASP results had been steadily improving, says Dame Janet Thornton, DBE, from the European Bioinformatics Institute (EBI). Thornton is the former EBI director and has long worked on the challenges of protein structure determination. She was interviewed jointly with Jones. At CASP13, she had been delighted to see progress taking place with protein structure prediction methods. Now, as Thornton considers the possibilities AlphaFold2 opens up, by having solved a big methods puzzle in science, “it gives me a spring in my step.” She says she hadn’t thought “we’d get quite this far in my lifetime.”

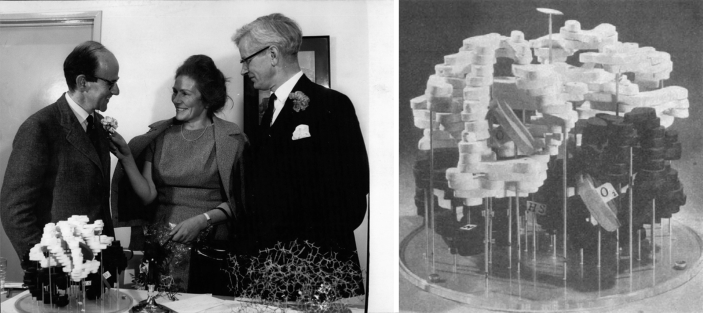

Historical build

The way AlphaFold2 can predict a protein structure is the culmination of a scientific journey that began with the work 7 , 8 of Max Perutz and John Kendrew of the Cavendish Laboratory at the University of Cambridge, says Aled Edwards of the University of Toronto and the Structural Genomics Consortium, a public–private venture. Perutz and Kendrew received the Nobel Prize in 1962 for the way they used X-rays passing through crystallized protein and onto film to painstakingly decipher the structures of proteins such as hemoglobin and myoglobin.

Structural biologists have since followed in their footsteps to experimentally determine structures of many proteins. The research community has deposited structures and accompanying data in the Protein Data Bank (PDB) 9 , an open resource founded in 1971 that holds 185,541 structures as Nature Methods goes to press.

The PDB’s holdings stem from labs around the world that toiled with X-ray crystallography, nuclear magnetic resonance spectroscopy (NMR) or electron microscopy to determine the complex structure of a 3D protein. AlphaFold2’s machine-learning algorithm was trained on the PDB’s data to assess the patterns with which amino acids become the many combinations of helices, sheets and folds that enable a protein to do its specific tasks in a cell.

Converting experimental signals into structures has been the realm of physicists and mathematicians who devoted time, perseverance and sweat to determine protein structures, says Edwards. In the early days, this work involved assessing measurements on photographic film. The fact that they, and those who followed, have been so committed to data quality enabled the continued work in protein structure determination. Speaking more generally, he says, experimentally solving protein structures is “a pain in the (expletive).” It’s why he applauded the foresight of University of Maryland researcher John Moult, who launched CASP in 1994 to highlight and advance community activity related to methods for computationally predicting protein structure. Edwards and many others were part of the NIH-funded Protein Structure Initiative that ran from 2000 to 2015. The project set out to systematically add to PDB’s experimentally determined structures and has certainly contributed to AlphaFold’s success, says Edwards. When the project’s funding ceased, many labs were dismayed. The PSI had been sampling the still-unexplored “structure space,” he says. After the PSI ended, the PDB kept growing as labs continued to add their structures.

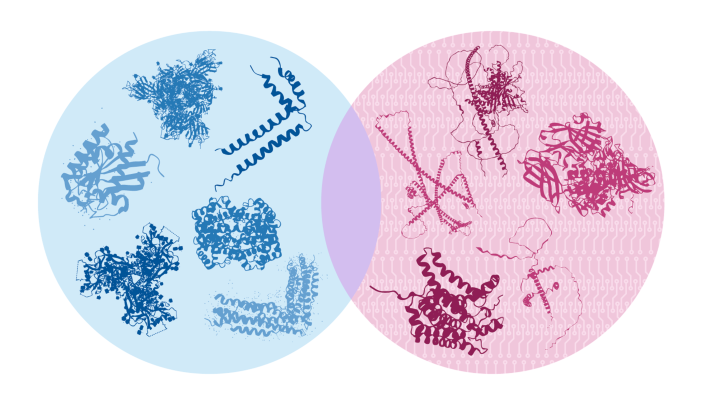

The PDB’s main database has been reserved for structures resolved experimentally and by single methods such as X-ray crystallography, NMR or cryo-electron microscopy (cryo-EM), says Helen Berman, who co-founded the Protein Data Bank. Over time, computational models emerged that used multiple sequence alignments and, later, also machine learning to predict structures. PDB-Dev was set up as a digital home for structures determined with “integrative methods,” which means it’s for structures generated using experimental methods combined with computational ones. The strictly in silico structures are held in the ModelArchive .

“AlphaFold is a triumph,” says Berman. But it “would never ever have succeeded, ever,” she says, if models had been improperly mixed with experimentally determined structures. The training set for AlphaFold’s neural network has been PDB’s well-curated experimental data. DeepMind, in collaboration with EBI, is now filling the AlphaFold Protein Structure Database with hundreds of thousands of computationally generated human protein structures and those from many other organisms, including the ‘classic’ research organisms maize, yeast, rat, mouse, fruit fly and zebrafish.

Every day, the PDB sees around 2.5 million downloads of protein coordinates, says Berman. Biotech and pharma companies regularly download the database for research performed behind their firewalls. Around the time of CASP13 in 2018, Berman noticed massive downloads that seemed unlike the typical downloads from the structural biology community. Usage is not monitored in detail, and all of it, be it from academia or companies, has made her happy about the resource. “If you don’t have people use it, then why have it?” she says. As a child of the 1960s, her personal commitment has been to the “public good” that the resource provides. Over time, the PDB team has navigated expanding its global reach and managing structural data generated by emerging methods. “Now we have to make a new kind of decision,” says Berman, whose workload belies the fact that she recently retired from PDB and her position on the Rutgers University faculty. She has daily calls about how the existing data—the data in the PDB, data generated by AlphaFold2, data generated by RoseTTAFold and other platforms, and other computationally generated data—should be stored and, separately, how they should be served to the community.

Rather than make a centralized behemoth of a database, says Janos Hajdu, who splits his time between the European Extreme Light Infrastructure at the Academy of Sciences of the Czech Republic and the Laboratory of Molecular Biophysics at Uppsala University and who is not involved in these discussions, he would like to see “independent databases that talk to each other.”

The actual database plan is still emerging, and details are under wraps until it’s worked out, says Berman. It will take six months to a year to hammer out these details and find a solution that works for all.

Confidence measures

Each AlphaFold2 structure is accompanied by a “confidence score,” which, as Janet Thornton says, will help and guide users, be they structural biologists or scientists working in other areas. The per-residue confidence score (pLDDT) is between 0 and 100.

Indeed, says Aled Edwards, confidence scores are important pieces of information, but they likely matter more to structural biologists than to other biologists. A diabetes researcher with a hypothesis about a protein who has downloaded a structure with a confidence score of 82% will not be deterred from an experiment he or she is planning, he says. The confidence score could be a point that a reviewer might critically note: the paper authors had chosen to use a “maybe structure,” one with a lower confidence score.

Janos Hajdu sees value in confidence scores. Just as one dresses differently for a weather forecast of a 5% or a 95% probability of rain, confidence scores are important and need to be sufficiently well developed. After all, different parts of a predicted structure can have different quality and reliability. The reliability of interpretation also has a human factor to contend with, says Hajdu: even though a lottery win and a lightning strike of a person walking in a storm have similar probabilities, people generally feel less fearful about lightning strikes and more hopeful about their chances of hitting the jackpot.

Into the machine

Compared to other software developed in the academic community, says Thornton, AlphaFold’s advances include more accurate placement of side chains in the protein models and an improved approach to integrating machine learning with homology modeling, which looks at protein structure in the context of evolutionarily related proteins. The software uses homology modeling at an “ultrafine” level, says Jones. “It’s taking little pieces of everything it needs from the whole of PDB.” Instead of taking an homologous 3D structure, building a model from that and then including the side chains and loops, the system finds all the right pieces in high-dimensional space. In a way, he says, it’s solving “the worst jigsaw puzzle in history made up of tiny little pieces.”

In CASP13, DeepMind entered its AlphaFold1, and then in CASP14 the team entered AlphaFold2. A big difference between CASP13 and CASP14, says Jones, was the way the DeepMind team applied language modeling, specifically the self-attention model, to reduce the need for computing steps run sequentially. The leap made the academic community look like “we’d all been spending 30 years staring at the wall doing nothing,” says Jones, which, of course, is not the case. DeepMind’s computational approach is based on one that Google Brain scientists presented at the 2017 Conference on Neural Information Processing Systems called ‘Attention is all you need’ 10 . It’s had great impact on AlphaFold, bioinformatics and the computer science community, he says.

Applying this approach in AlphaFold pares back the recurrent layers that the encoder–decoder architectures in machine learning apply and replaces them with “multi-headed self-attention,” which interconnects many operations at the same time. These attention models “can just mix data across all the data you feed in,” says Jones. Such data-mixing on a scale larger than previously accomplished, lifted constraints that academic groups had faced. Removing computational constraints gives AlphaFold its power to juggle data. “They can mix it up in any way necessary to solve the problem,” he says. At the time of CASP14, bioinformaticians were not yet applying this technology, but since then, says Jones, in machine-learning circles he encounters many scientists who work on variations of attention models.

‘Attention’ is indeed part of a big change in this field, says Burkhard Rost, a computational biologist at the Technical University of Munich who was previously at Columbia University. Both AlphaFold1 and AlphaFold2 rely on multiple sequence alignment and on machine learning. When combining these techniques, academics have used standard feedforward networks with a network of processing units, or nodes, that are arranged in layers with outputs from one layer leading to the next. Training weights the nodes. By including natural-language processing techniques in AlphaFold and in academic labs such as his and others, researchers have enabled machines to ‘learn’ the grammar of a given protein sequence, says Rost, and the grammar gives context. Based on sentences from Wikipedia, a neural network can extract grammar rules for general language. In the same way, the network can extract the grammar of “a protein language,” he says, one that is learned from input amino acid sequence and the corresponding 3D output.

CASP14 felt like being “hit by a truck or a freight train,” says Burkhard Rost. “I’m utterly impressed by what they did.”

A platform can learn, for example, that the amino acid alanine might be both at position 42 and 81 in a protein. But it’s the 3D environment around these amino acids that affects the protein in different ways. Even though this computational approach does not teach 3D structure or evolutionary constraints, systems can learn rules such as physical constraints that shape protein structure. Rost says that never before has there been a CASP winner from outside the field of protein structure prediction. CASP14 felt like being “hit by a truck or a freight train,” he says. He found AlphaFold1’s predictions to be “amazingly accurate.” AlphaFold2 is “a completely different product” in which he sees “so much novelty” he says. “I’m utterly impressed by what they did.”

To train the system, the DeepMind approach used tensor processing units (TPUs), which are Google’s proprietary processors. They are not for sale; academics can only access them through the Google Cloud . Indeed, DeepMind has “great hardware,” says Juan Restrepo-López, a physicist who has turned to biology as a PhD student in the lab of Jürgen Cox at the Max Planck Institute of Biochemistry. AlphaFold2 is likely inconceivable without that hardware, says Restrepo-López. AlphaFold1, with its convolutional neural networks (CNNs), is “for sure much easier to understand due to its simpler architecture.” Both AlphaFold1 and AlphaFold2 were trained on TPUs. AlphaFold1 could be run on graphics processing units (GPUs), and this has also eventually become true for AlphaFold2, he says. In AlphaFold2, DeepMind no longer used CNNs but rather transformers, says Restrepo-López. The main advantage for AlphaFold2 came from Google’s huge computing clusters, which made it possible to run many types of models. “You can go crazy and run 200,000 experiments because you have unlimited resources,” he says. To generate structures, DeepMind first uses multiple sequence analysis, which originated in academia. The core of the algorithm uses transformers, developed at Google. Transformers originated in the field of natural-language processing and are now being applied in many areas. “They are particularly interesting because they can detect long correlations,” he says.

This AlphaFold2 architecture with transformers makes it possible, as previously mentioned, to process many aspects of the sequence in parallel and figure out long-term dependencies very well,says Restrepo-López. For example, residues far apart in a sequence can be very close in a folded protein, and this concept has to be introduced into a model.

For decades, academic groups around the world have been predicting structures using the millions of amino acid sequences in databases and integrating evolutionary information as part of homology modeling. But DeepMind has used many more sequences plus a different way of scaling computation, says Rost.

When he saw CASP13 results, Konstantin Weissenow, now a PhD student in the Rost lab, was a master’s degree student working on a protein structure prediction method. It seemed to him that DeepMind was taking a “traditional” deep learning approach not unlike his. At the time, DeepMind was not sharing the code, but Weissenow felt he could reverse engineer the method and “this is essentially what I tried to do,” he says. He incorporated what he gleaned into his method. But CASP14 and AlphaFold2 “was a different story.” A few months later, Deep Mind made the AlphaFold2 code public. Michael Heinzinger, another graduate student in the Rost lab, was wrapping up protein language modeling as he watched the livestream of CASP14, which the Rost lab was competing in with Weissenow’s tool. When experimentalists began saying that this computational system was reaching close to the quality of experimentally generated results and structures, Heinzinger felt like it was a moment that “people might actually then read in the history books years or decades after this point,” he says. “This was just mind blowing.”

“The big impact came with CASP14,” says Weissenow. By then he had started his PhD work in the Rost lab. He and others had entered their software tool, called EMBedding-based inter-residue distance predictor (EMBER), for CASP14. It’s geared toward predicting protein structures for which there are few evolutionary relatives, and computationally it uses a many-layered convolutional network similar to that of AlphaFold1. EMBER allows the team to predict structures on a large scale, and it can predict the human proteome on a typical computer. It was not going to be as good as AlphaFold2, says Rost, but it has a lower carbon footprint. After CASP14, says Weissenow, some participants got together to consider reverse engineering AlphaFold2, but they soon realized that was not going to work. Then, DeepMind published predictions for 98.5% of the human proteome 2 . This was a few weeks before Weissenow had planned to present his tool at a conference and show how it could generate structures of the human proteome. “Scooped again,” says Rost, who was interviewed jointly with Heinzinger, Weissenow and postdoctoral fellow Maria Littmann, who works on ways to predict, from amino acid sequence, which residues bind DNA, metal or small molecules.

One issue Littmann faced around 2018 and 2019, says Rost, was the lack of experimental data. It will now be interesting, says Litmann, to see how she and others can integrate the availability of these models into their work and extend it. When predicting residues only from sequence but without a structure, “you don’t know what the actual binding site looks like,” she says. In the folded structure, residues may be close together or far apart, and it’s impossible to know, for example, if two residues are part of the same or a different DNA-binding site. “For that she needs a model,” says Rost. Now, given AlphaFold2, Littmann feels she can move beyond the task of predicting which residues bind to being able to predict binding sites.

“This is a game-changer for several applications we are pursuing in the lab,” says Jürgen Cox.

AlphaFold has immense value for work in his lab, says the MPI’s Cox. He finds AlphaFold2 is enabling for proteomics more generally. His team integrates structure information into the lab’s computational-mass-spectrometry-based proteomics workflows, and Restrepo-López is integrating AlphaFold2 predictions into the Cox lab’s MaxQuant algorithms. AlphaFold has trumped a number of existing tools in the protein prediction space, but many of them had been close to retirement age, says Cox. The best way to predict structural information along the protein sequence such as secondary structure or solvent accessibility “is to just do the 3D structure prediction and project these properties from the structure onto the sequence.” With the advent of AlphaFold2, says Cox, it’s become possible to assume that a structure—either a computationally generated or an experimentally deciphered one—is at researchers’ fingertips for nearly every protein and organism and that a computationally generated structure is similar in quality to one determined by X-ray crystallography. “This is a game-changer for several applications we are pursuing in the lab,” he says.

Science or engineering?

To some, AlphaFold’s achievement is more an engineering feat than a scientific one. AlphaFold2’s utility is indisputable, says Jürgen Cox. Every achievement in the development of algorithms and computational tools runs into the issue of being perceived as ‘just’ engineering as opposed to ‘real’ science,” he says. But it’s not justified in this case or in other aspects of computational biology. “Think of the BLAST algorithm. Is it science or engineering?” he asks. Bioinformatics supports life science research and, in so doing, enables findings not achievable through other means. Advances in machine-learning methods are science unto themselves, he says. Differentiating between science and engineering doesn’t matter, says Hajdu, given that tool-making is an integral part of science. “A drill, an XFEL, various algorithms, mathematical breakthroughs” can all turn into tools in some fields, he says, referring to X-ray free electron lasers. “Someone’s science today is someone’s tool tomorrow.”

“You can’t do the science without the engineering,” says Jones. He is essentially ‘split’ across engineering and science in that he holds a double appointment at University College London: in computer science and in structural and molecular biology. “If the science is wrong, it doesn’t matter how good your engineering is,” he says. And if there is bad engineering, no correct answers are to be had. “Engineering makes things a reality,” he says. “And the science builds the foundations on which that happens.”

When a company does it

Some researchers have been irked that a commercial venture achieved this goal of large-scale protein prediction, as opposed to an academic lab or consortium. “I was just pleased overall,” says Thornton, who feels the achievement will benefit the entire field. “In a way it was quite disappointing,” she says, but it’s a company with access to “a lot of compute” and one positioned at the forefront of machine learning.

To Hajdu, it makes no difference that a company and not an academic group reached this goal, he says. Going forward, scientists now have access to many more protein structures, most of them computationally generated. The situation is comparable to one with the sequencing of the human genome, which both a company and an academic consortium worked on. “The important thing is that it is done,” he says. And it matters that the results and tools are or will be available to all. That, he hopes, is an aspect the research community will be able to shape.

When Littmann first saw the CASP14 results, she assumed that because a company had developed the method, it would be kept “behind closed doors,” which would prevent the academic community from ever figuring out how the team had achieved what they had. She also assumed one would have to pay to obtain structures, meaning that the academic community would still have needed other methods to predict structure. Her eye-opener moment was when the DeepMind team announced that they are publishing for the research community’s benefit the structures from UniProt , which is the database of protein sequences, for the entire human proteome. “That’s something that I never expected,” she says. Gone was the situation of a lack of high-resolution structures for most proteins. Now, she says, researchers can revisit projects done with sequences and see if they can improve them by adding a structure to their analysis.

Isabell Bludau, a postdoctoral fellow and computational biologist in the lab of Matthias Mann at the Max Planck Institute of Biochemistry, picked up on the excitement in the research community about AlphaFold, but its “real impact” on her and for her work, she says, also occurred when DeepMind published structures for the entire human proteome, dramatically expanding the structures available. “This information can now be easily integrated into any systems biology analysis that I do,” says Bludau. As she explores patterns in proteomic data, she can now complement information about the presence and quantity of proteins with structural information, meaning that her analysis can provide a more complete picture. “This is, for me, probably the most exciting part of it,” she says.

Local muscle

AlphaFold was trained on the Protein Data Bank, and the DeepMind team used tensor processing units (TPUs), which are Google’s proprietary processors, to do so. Academics can access them through the Google Cloud . As of the end of 2021, AlphaFold could not only be run locally, any TPU constraint was removed, says Burkhard Rost. There is AlphaFold Colab , with which users can predict protein structures using, as the team indicates, a “slightly simplified version of AlphaFold v2.1.0.” This sets up an AlphaFold2 Jupyter Notebook in Google Colaboratory, which is a proprietary version of Jupyter Notebook hosted by Google that offers access to powerful GPUs. A user can ‘execute’ the Python code from a browser on a local computer. AlphaFold2 will run on Google hardware, which might be CPUs, GPUs or TPUs depending on a researcher’s needs. Separately, researchers have developed a Colab notebook called ColabFold AlphaFold2 for predicting protein structures with AlphaFold2 or RoseTTAFold.

The developers include Martin Steinegger at Seoul National Laboratory, who is one of the co-authors of the AlphaFold2 1 paper, Sergey Ovchinnikov and his team at Harvard University, and colleagues at other institutions. Graduate student Konstantin Schütze is part of the developer team; he’s been a member of the Rost lab and has been working in the Steinegger lab as part of his master’s degree research. As the Rost lab’s Michael Heinzinger explains, ColabFold speeds up AlphaFold2 protein prediction many times over, mainly by accelerating the way multiple sequence alignments are generated with Steinegger’s MMseqs2 , which is software for iterative protein sequence searching. Users can install ColabFold locally by following the tips on Konstantin Schütze’s section of the ColabFold github page . The ability to run AlphaFold2 on GPUs can remove dependency on Google infrastructure, says Heinzinger, because one can choose to install AlphaFold2 on one’s own machine. Multiple sequence alignments can be generated on Steinegger’s servers, he says, “so you do not even have to compute your MSAs locally.”

A landscape of change

AlphaFold is poised to change the structural biology community in a number of ways. The AlphaFold–EBI database gives scientists around the world a “global picture” of the data, says Jones, and this might change the discipline of biology itself.

Early in Jones’s career, when he interacted with biologists, he heard them say that protein structure mattered little to their work. Proteins are, he says as he recalls their words, “just blobs that do things and they stick to other blobs.” As a PhD student in Thornton’s lab, he felt differently about protein structures and began working on computational tools for predicting and analyzing them. Labs these days that use cryo-electron tomography (cryo-ET) and cryo-EM are revealing ever more about the structure of ‘blobs’, says Thornton. Resolution with cryo-ET is improving and can reach 1.2 Å, she says, although it’s still generally “relatively low.” For some biological questions, “a blob is enough,” she says. But she and Jones both believe the computationally generated models can help many labs to assess proteins, for instance by fitting the computational structure onto the ‘blob’ they captured with cryo-ET or cryo-EM experiments. What will change overall because of the wealth of computationally generated structures that are becoming available, says Jones, is that the field of structural biology will need to spend less time on technology and thus have more time for assessing why solving structures matters. It will be possible, he says, to appreciate the power of models and the predicted protein structure coordinates for exploring deeper questions.

As Janet Thornton considers the possibilities AlphaFold2 opens up by having solved a big methods puzzle in science, “it gives me a spring in my step.”

Jones and Thornton have many entries on their to-do list of things they wish to understand: the protein folding pathway, protein–protein and protein–DNA interactions, intrinsically disordered proteins, the interactions of proteins with small molecules, questions of drug design, protein complexes, molecular machines and the overarching question of what proteins do. Having a complete proteome of structures opens entirely new avenues for research questions involving the complexity of protein function. When trying to, for instance, explore and understand protein–protein interactions, it’s “quite difficult if you don’t have protein structures,” says Thornton. “It’s not easy when you have them,” says Jones, and, says Thornton, “it’s impossible when you don’t have them.” They both laughed as they said this.

Among the problems Cox and his team want to tackle is predicting the effect of post-translational modifications on the structure of proteins and complexes. Speaking more generally, Hajdu says, the next chapter of research in this area “has just turned absolutely wonderful.” Not only is there much room to improve the methodology, there are tremendous new opportunities to explore using the new tools. “The scale of possibilities is huge,” he says.

AlphaFold2 does not show, says Thornton, how the path of protein folding occurs, how flexibility shapes protein function or what happens to a structure once it’s stabilized with a ligand. At the moment, machine learning struggles with such problems, she says. AlphaFold cannot predict how a mutation affects a protein such that it folds differently or becomes less stable, an effect that lies at the core of many diseases and disorders. “It hasn’t seen all the variants,” says Jones, so it cannot extrapolate how changes affect a protein’s flexibility or stability. In the wake of AlphaFold, some scientists will likely shift their focus. Thornton has observed that “the crystallographers were the most crushed” by AlphaFold and have privately expressed concern that their skills are no longer needed. In the near future, says Cox, he does not see crystallographers as endangered. “Structural information of whole complex structures still requires experiments,” he says. But “the combination of cryo-EM with AlphaFold2 predictions will pose a threat to crystallographers soon.”

In 1970, Walter Hamilton, a chemist and crystallographer at Brookhaven National Laboratory, published a paper 11 in which he stated that determining a molecular structure by crystallography is routine and that “we have reached the day when such a determination is an essential part of the arsenal of any chemist interested in molecular configuration—and what chemist is not?” Hamilton worked on the molecular and crystal structure of amino acids. “The professional crystallographers really got on his case,” says Berman, for saying it had become routine to experimentally determine the structure of small molecules. They were concerned, she says, that he was putting them out of a job, which didn’t happen. And, says Thornton, it’s not happening now.

AlphaFold is shifting the research landscape, though, says Thornton, given that protein structures will be available for most any amino acid sequence. Over time, X-ray crystallographers have become electron microscopists, she says. “They’re looking at bigger complexes, bigger sets or they’re doing electron tomography.” As such, they are colleagues needed for the next phase in structural biology.

The research community is now in the same place with protein structures as it was with small-molecule structure, says Berman. Back in the day, Berman and her merry band of like-minded junior scientists petitioned Hamilton and others to set up the PDB 7 . “We were very young, we talked a lot, we were so excited about looking at the structures,” she says. Hamilton and others did finally agree, but he unfortunately passed away at age 41.

“Ever since I was a postdoc, I’ve really started to appreciate how enabling cryo-EM was for structural biology,” says Bastian Bräuning, who leads a project group in the lab of Brenda Schulman at the Max Planck Institute of Biochemistry. He completed his PhD research in protein crystallography and dabbled, as he says, in cryo-EM. Now he sees how AlphaFold can help with cryo-ET, which produces lower-confidence data than single-particle cryo-EM, but is leading to ever better predictions for parts of bigger protein complexes. Thus, he says, AlphaFold2 “will really enable cryo-electron tomography, too.” Says Bräuning, “I’ve gone from one revolution to the next between my PhD and my postdoc.” Once the big shock and surprise to structural biologists settles in and “you really start looking at the opportunities it gives to you, it becomes less worrying,” he says. “There’s still so much to be done, and not one method or one revolution is going to solve everything.” To a large extent, he says, to characterize proteins bound to small ligands one still needs crystallographic data, which these days are generated at large synchrotrons. This approach is high throughput and is used to screen ligands in a way that cryo-EM cannot yet deliver.

AlphaFold2 is likely to affect a small subset of researchers in negative ways, in that this platform has leapfrogged over their works in progress, says Edwards. He mainly interacts with structural biologists and, to them, solving a structure enables their thinking about a biological problem and guides the design of their next experiment. Traditionally, he says, the “big paper” in the academic world has gone to the scientists who solved the structure, not the person who explained the science of that structure. But he hopes a shift can now take place such that more emphasis will be placed on creative scientific insights about the functions of structures. The academic literature contains fewer than 10 papers on half of the proteins that the human genome generates, says Edwards. Understanding more proteins and more about function is going to help to understand disease. Having structures enables the “‘what is life?’ question,” he says, and the questions about what these proteins do. “The vastness of what we don’t know is the coolest thing in biology.”

Jumper, J. et al. Nature 596 , 583–589 (2021).

Article CAS Google Scholar

Tunyasuvunakool, K. et al. Nature 596 , 590–596 (2021).

AlQuraishi, M. Nature 596 , 487–488 (2021).

Senior, A. W. et al. Nature 577 , 706–710 (2020).

Evans, R. et al. Preprint at https://doi.org/10.1101/2021.10.04.463034 (2021).

Baek, M. et al. Science https://doi.org/10.1126/science.abj8754 (2021).

Perutz, M. F. et al. Nature 185 , 416–422 (1960).

Kendrew, J. C. et al. Nature 185 , 422–427 (1960).

Anonymous Nat. New Biol. 233 , 223 (1971).

Google Scholar

Vaswani, A. et al. Preprint at https://arxiv.org/pdf/1706.03762.pdf (2017).

Hamilton, W. Science 169 , 133–141 (1970).

Download references

Author information

Authors and affiliations.

Nature Methods http://www.nature.com/nmeth

Vivien Marx

You can also search for this author in PubMed Google Scholar

Corresponding author

Correspondence to Vivien Marx .

Rights and permissions

Reprints and permissions

About this article

Cite this article.

Marx, V. Method of the Year: protein structure prediction. Nat Methods 19 , 5–10 (2022). https://doi.org/10.1038/s41592-021-01359-1

Download citation

Published : 11 January 2022

Issue Date : January 2022

DOI : https://doi.org/10.1038/s41592-021-01359-1

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

This article is cited by

Packaging monoamine neurotransmitters.

- Cornelius Gati

Cell Research (2024)

Can AlphaFold’s breakthrough in protein structure help decode the fundamental principles of adaptive cellular immunity?

- Benjamin McMaster

- Christopher Thorpe

- Hashem Koohy

Nature Methods (2024)

AlphaFold2 reveals commonalities and novelties in protein structure space for 21 model organisms

- Nicola Bordin

- Ian Sillitoe

- Christine Orengo

Communications Biology (2023)

Protein structure prediction from the complementary science perspective

- Jorge A. Vila

Biophysical Reviews (2023)

Rethinking the protein folding problem from a new perspective

European Biophysics Journal (2023)

Quick links

- Explore articles by subject

- Guide to authors

- Editorial policies

Sign up for the Nature Briefing newsletter — what matters in science, free to your inbox daily.

An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

Preview improvements coming to the PMC website in October 2024. Learn More or Try it out now .

- Advanced Search

- Journal List

- Comput Struct Biotechnol J

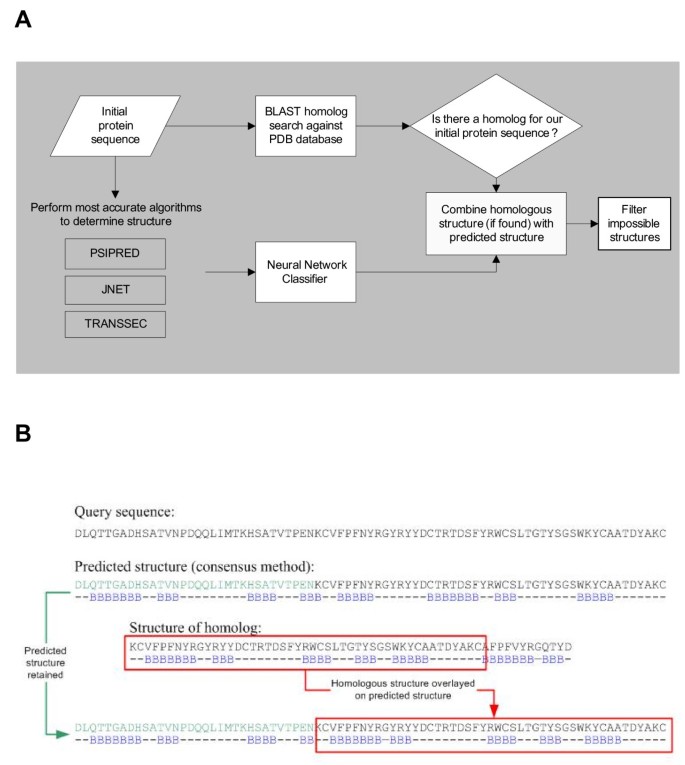

Deep learning for protein secondary structure prediction: Pre and post-AlphaFold

Dewi pramudi ismi.

a Department of Computer Science and Electronics, Faculty of Mathematics and Natural Sciences, Universitas Gadjah Mada, Yogyakarta, Indonesia

b Department of Infomatics, Faculty of Industrial Technology, Universitas Ahmad Dahlan, Yogyakarta, Indonesia

Reza Pulungan

Graphical abstract.

- • Deep neural networks-based predictors have enhanced the PSSP accuracy.

- • There is still an accuracy gap in PSSP, the PSSP theoretical limit expands to 94%.

- • The use of pre-trained LMs as input has successfully improved PSSP performance.

- • PSSP without using evolutionary information is a potential future work.

This paper aims to provide a comprehensive review of the trends and challenges of deep neural networks for protein secondary structure prediction (PSSP). In recent years, deep neural networks have become the primary method for protein secondary structure prediction. Previous studies showed that deep neural networks had uplifted the accuracy of three-state secondary structure prediction to more than 80%. Favored deep learning methods, such as convolutional neural networks, recurrent neural networks, inception networks, and graph neural networks, have been implemented in protein secondary structure prediction. Methods adapted from natural language processing (NLP) and computer vision are also employed, including attention mechanism, ResNet, and U-shape networks. In the post-AlphaFold era, PSSP studies focus on different objectives, such as enhancing the quality of evolutionary information and exploiting protein language models as the PSSP input. The recent trend to utilize pre-trained language models as input features for secondary structure prediction provides a new direction for PSSP studies. Moreover, the state-of-the-art accuracy achieved by previous PSSP models is still below its theoretical limit. There are still rooms for improvement to be made in the field.

1. Introduction

Proteins play important roles for living organisms due to their diverse functions, for example, acting as a catalyst in cell metabolism, playing an essential role in DNA replication, forming cell structures, forming living tissues, and constructing antibodies for the immune system. Protein has four different levels of structure: primary structure, secondary structure, tertiary structure, and quaternary structure. The protein’s primary structure is the amino acid sequence composing its polypeptide chain. The protein’s secondary structure is a local conformation formed within the polypeptide chain due to the hydrogen bonds between atoms in the backbone. The tertiary structure of protein exposes the three-dimensional structure of the protein in the physical space. The quaternary structure of protein is made up of multiple polypeptide chains that come together.

Proteins function differently from one another due to variations in their structures, mainly due to the folds that make up varying tertiary structures [1] , [2] . Since the function of a particular protein is influenced by its tertiary structure, understanding the protein’s tertiary structure is necessary to reveal its functionality. Hence, protein tertiary structure prediction is a crucial task in structural bioinformatics. Prior to the invention of AlphaFold [3] , predicting protein tertiary structure directly from its primary structure was a challenging problem [4] , [5] , [6] , [7] . Thus, protein structure prediction was divided into subsidiary tasks that are easier to accomplish and beneficial for ultimate protein tertiary structure prediction. Researchers attempted to build models that solve these subsidiary tasks.

Protein secondary structure prediction (PSSP) is one of the subsidiary tasks of protein structure prediction and is regarded as an intermediary step in predicting protein tertiary structure. If protein secondary structure can be determined precisely, it helps to predict various structural properties useful for tertiary structure prediction. For example, secondary structures affect structural folds in the polypeptide chain [8] , [9] , and the same protein secondary structures define similar folds in the protein’s polypeptide chain [10] . PSSP started when Pauling et al. [11] suggested α -helices and β -sheets as the dominant conformations even before the first protein structure was revealed. The first protein structure was revealed using an X-ray by Kendrew et al. [12] . PSSP has gone through five generations since then [13] . Table 1 shows the generations and their respective traits.

The history of PSSP studies.

Leveraging evolutionary information as an input feature and utilizing deep neural networks (DNN)-based architectures has become the primary approach done by PSSP researchers in recent years. Three main reasons that warrant the use of DNN in PSSP are: (1) the increasing number of protein sequences in the Protein Data Bank (PDB), (2) the need to understand and capture long-range interactions in protein sequences, and (3) the capability of DNN to observe the underlying characteristics and hidden patterns in protein sequences.

Considering the immense usage of DNN in PSSP recently, we aim to provide a comprehensive literature review on PSSP studies that leverage DNN. Although there have been several publications on the topic, such as [39] , [40] , [41] , [42] , several key points differentiate this review from previous reviews, namely:

- 1. This review focuses on the implementation and architecture details of DNN methods utilized in PSSP;

- 2. This review discusses various approaches that have been applied to improve PSSP accuracy, such as multi-task learning, ensemble learning, iterative learning, attention mechanism, and language modeling;

- 3. This review discusses the more recent and advanced methods employed in PSSP, such as the involvement of pre-trained language models (LMs) and knowledge distillation; and

- 4. This review discusses the progress and perspectives of PSSP in two different time plots, namely before and after the invention of AlphaFold.

This review is organized as follows: general description, data, PSSP in pre-AlphaFold publication, new approaches for feature generation, AlphaFold and its impacts, PSSP in post-AlphaFold publication, and summary and outlook.

2. General description

PSSP can be seen as a classification problem that categorizes an amino acid residue to the type of secondary structure in which it is located. A typical PSSP model takes a sequence of amino acids as input and returns a sequence of the corresponding secondary structure (see Fig. 1 ). There are two different types of PSSP: three-state PSSP and eight-state PSSP. In three-state PSSP, the secondary structure elements consist of helix (H), sheet (E), and coil (C). Helix and sheet are the two main conformations suggested by Pauling et al. [11] . Coil (C) denotes an amino acid that does not fit both H and E. In eight-state PSSP, proposed by Kabsch and Sander [43] , the secondary structure elements consist of α -helix (H), 3 10 -helix (G), parallel/anti-parallel β -sheet conformation (E), isolated β -bridge (B), bend (S), turn (T), π -helix (I), and coil (C). The three-state PSSP is a coarse-grained classification of secondary structure elements, whereas the eight-state PSSP provides more specific traits of the secondary structure elements. Several transformation rules to map the eight-state secondary structure to the three-state secondary structure are proposed by researchers, including DSSP [43] , DEFINE [44] , and Rost and Sander [45] .

A PSSP model takes sequences of amino acids as an input and produces the sequences of corresponding secondary structure elements.

In 2020, four-state and five-state PSSP alternatives were proposed by Shapovalov et al. [13] . The reason for proposing four-state and five-state PSSP was because isolated β -bridge (B) and bend (S) have a small number of samples and low true-positive rates. In five-state PSSP, B and S are considered as C, whereas in four-state PSSP, B, S, and G are considered as C. Moreover, 75% of π -helix (I) was located at the beginning or the end of an α -helix structure (H), so it was categorized as H.

A PSSP model takes a sequence of amino acids as input. Features representing the sequence of amino acids are generated and used to train the PSSP model. Like other machine learning-based systems, DNN-based PSSP models are developed through the training and evaluation phase. The general framework of DNN-based PSSP is shown in Fig. 2 . Based on the framework depicted in the figure, three aspects play essential roles in producing high prediction accuracy: the training data, the features representing the sequence of amino acids, and the model’s architecture. A typical PSSP study usually tackles one or more aspects.

The general framework of PSSP models: two phases in model development, namely training and evaluation. The training dataset is used to build the model, while the test dataset is used to confirm the performance of the trained model.

A DNN-based PSSP model incorporates one or more deep learning methods in its architecture, such as recurrent neural networks (RNN), including long short-term memory (LSTM) and gated recurrent unit (GRU), convolutional neural networks (CNN), inception networks, and other networks. Although most previous PSSP studies focused on developing novel architectures, several PSSP studies focused on different objectives. Several studies focused on proposing new feature representations, such as new amino acid encoding schemes [46] , [47] , [48] , and other features such as protein language models [49] , [50] . Several studies focused on evaluating the evolutionary information used as input of the PSSP model, for example [51] , [52] , [53] , [54] , [55] . Other studies proposed novel architectures as well as input features, for example [36] , [56] . Besides the model’s architecture and features, several studies also proposed new training and test datasets that eliminate data redundancy such that the models trained using these datasets can achieve a good performance [13] , [57] , [58] .

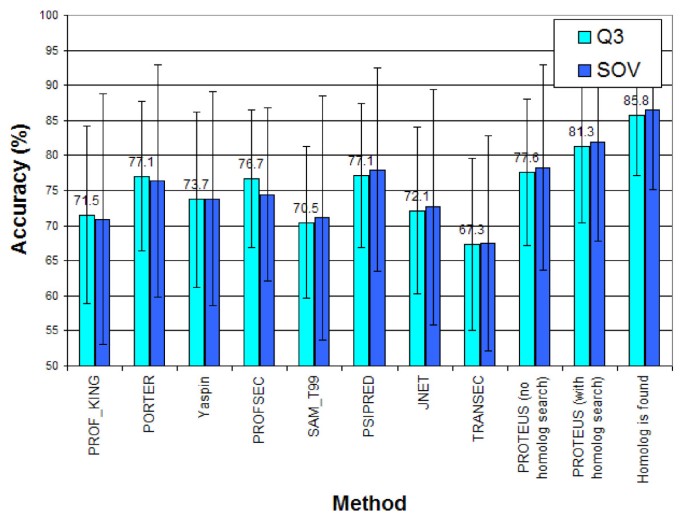

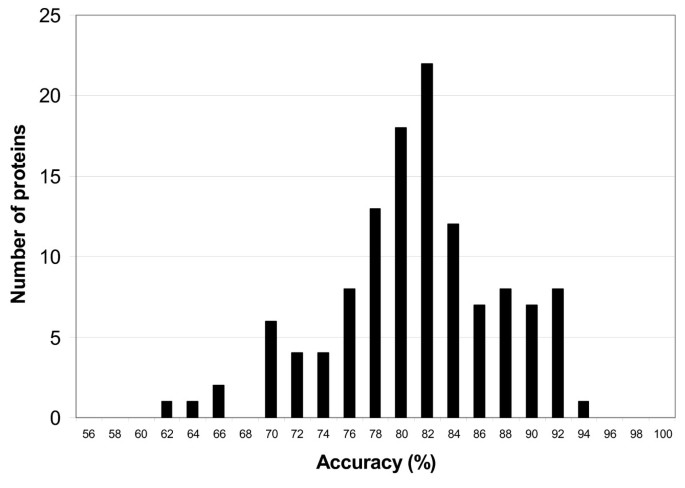

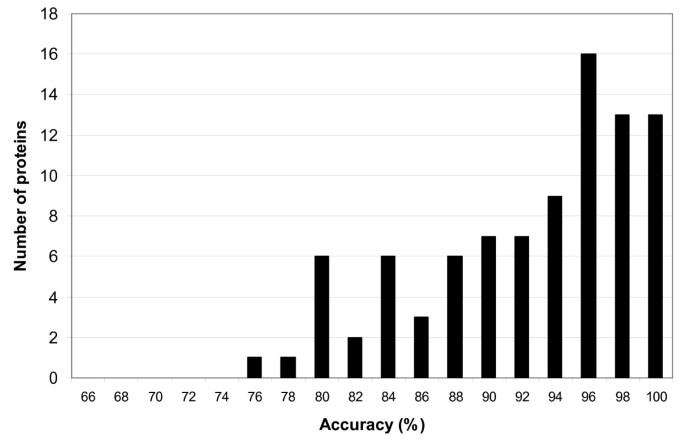

The performance of PSSP models is usually assessed by predicting the secondary structures of benchmark test datasets. It is measured by the prediction accuracy, namely the percentage of correctly classified amino acid residues in the test datasets. Q3 denotes the accuracy of three-state PSSP, whereas Q8 denotes the accuracy of eight-state PSSP. In addition to accuracy, several PSSP studies also used other standard classification performance metrics, such as precision, recall, and F1 score. PSSP model performance is also measured by segment overlap (SOV). SOV calculates segment-based accuracy that tolerates false prediction at the segment’s boundary but penalizes false prediction in the middle of the segment [59] . In 1999, Zemla et al. [60] proposed SOV’99, which normalizes the SOV score such that the final SOV score is on a percentage scale. SOV is unable to extend allowance when more residues can be predicted correctly in a particular segment. This issue becomes the drawback of SOV and SOV_Refine was proposed by Liu and Wang [61] to tackle the issue. Although less prevalent in PSSP, Kappa Performance was used to measure the performance of the PSSP model proposed by [62] , and Matthew’s correlation coefficient [63] was used in [64] .

3.1. Training data

The development of DNN-based PSSP models requires two different datasets: training and test datasets. There are two different fashions with which the researchers obtain training and test datasets. The first is to collect the datasets from the protein database by themselves. The second is to use the benchmark datasets previously collected by other researchers. Researchers self-collected datasets from the protein data bank (PDB) [65] to build their PSSP models [ [13] , [34] , [35] , [37] , [47] , [53] , [56] , [66] , [67] , [68] , [69] , [70] , [71] , [72] , [73] , [74] , [75] ]. In this case, the size of culled datasets varies depending on the date when the data collecting is performed.

Protein data used to train the DNN-based PSSP models are typically obtained using the PISCES CullPDB server [76] . A sequence identity cutoff of 25–30% usually applies. Besides using the PISCES CullPDB server, protein sequences are also obtained from ASTRAL [77] . Several PSSP studies used datasets extracted from ASTRAL including [78] , [68] , [79] . Proteins from the Genbank database [80] were used as training data to build the PSSP model developed by Xavier and Thirunavukarasu [81] . Li et al. [82] predicted the secondary structure of transmembrane proteins and took the protein sequences from the OPM database [83] .

Instead of performing self data assembly, some researchers prefer to use existing datasets from previous researchers. Several well-known benchmark datasets have been used to train DNN-based PSSP (see Table 2 ).

PSSP training datasets. The dataset name usually indicates the number of data included in the dataset. CullPDB dataset usually refers to the dataset collected by [84] .

AlQuraishi [57] published the ProteinNet dataset, a standardized dataset for training and evaluating PSSP models. It was motivated by the availability of standard datasets in computer vision enabling researchers to build models and evaluate their performances using standardized data. The ProteinNet dataset consists of protein sequences, MSAs, position-specific scoring matrices (PSSMs), and training-validation-test splits. The dataset was designed to support the critical assessment of protein structure prediction (CASP) [87] . AlQuraishi [57] collected all protein sequences available in the protein databases before the date of each CASP challenge commencing. The dataset is designed to provide the complete set of protein sequences needed to build PSSP models to solve the respective CASP challenge. For example, the dataset provides protein sequences and structures available in the databases before the date of the CASP10 challenge to train the PSSP models to be tested on CASP10.

Although there are various benchmark datasets for PSSP model training, previous PSSP researchers tend to use different training datasets to maximize the performance of their PSSP models, i.e., using a combination of several benchmark datasets or culling the training dataset from the protein database by themselves.

3.2. Test data

Although PSSP researchers may take the test data from the same source as the training data by culling a portion of the training data as the test dataset, several independent benchmark test datasets are widely used to compare the performance among PSSP models. CASP datasets from the biennial CASP events [87] are notable benchmark test datasets for PSSP studies. There have been 14 CASP events conducted to date; hence there are 14 CASP datasets publicly available [87] . Besides CASP datasets, several benchmark test datasets used to evaluate PSSP performances are shown in Table 3 .

PSSP benchmark test datasets.

4. PSSP in pre-AlphaFold publication

This section focuses on the DNN-based PSSP models released within five years before the publication of AlphaFold (2016–2021). The discussion covers the features representing amino acid sequences, the architecture of PSSP models, and the performance achieved by those models.

4.1. Features

The features representing amino acid sequences can usually be divided into three types: standard features, single-sequence, and other features.

Standard features. The standard input features representing an amino acid sequence in DNN-based PSSP models are the one-hot encoding of amino acids, sequence profiles, and physicochemical properties of amino acids. A one-hot encoding of amino acids is a matrix of size n × 20 , where n denotes the number of amino acids in the sequence, and 20 denotes the number of types of amino acids. Matrix entries corresponding to the amino acid types are filled with 1, while the remaining entries are filled with 0.

The sequence profile is derived from MSA and represents evolutionary information of the sequence. Two different sequence profiles are commonly used in previous PSSP works: position-specific scoring matrix (PSSM) and hidden Markov model (HMM) profiles. PSSM exhibits the probability of each amino acid residue at each position in the sequence based on the MSA. On the other hand, HMM profile offers state transition probability and emission probability as additional information in the sequence profile. PSSM is usually obtained using PSI-BLAST [92] , while the HMM profile is usually obtained using HHBlits [93] . Besides PSI-BLAST and HHBlits, MMSeq2 [94] , an optimized search for massive datasets, is also used to generate sequence profiles. The details of one-hot encoding of amino acids and PSSM are depicted in Fig. 3 .

One hot encoding of amino acids and position specific scoring matrix (PSSM).

Sequence profiles have been used as fundamental features for PSSP models. A sequence profile is generated based on the known protein sequences in the target dataset, i.e., UniRef90. With the significant growth of the number of known protein sequences in the target dataset, the computational resources and time needed to perform PSSP, especially for generating sequence profiles, also increase. Juan et al. [95] proposed an approach to increase the speed of PSSP without compromising accuracy. The approach was carried out by reducing the size of the target dataset by taking a random sampling and reducing the sequence homology of the target dataset to 25% of the sequence identity. This study showed that reducing the size of the target dataset decreased the time cost of PSSP, whereas reducing the sequence homology of the target dataset improved the complexity of the generated PSSM and enhanced the PSSP accuracy.

Several studies performed critical appraisals on the use of sequence profiles in PSSP. Urban et al. [55] performed a study to critically evaluate the role of sequence profiles in the performance of PSSP models. Sequence profiles have shown the ability to increase the performance of PSSP models. A significant accuracy gap exists between the PSSP models using sequence profiles and those using only amino acid sequences. However, its underlying reason has yet to be clearly explained because only amino acid sequences play a role when protein folds are constructed. Moreover, the study was also based on the observation that although training and test datasets used in the prediction have less than 25% of sequence identity, sequences in training and test datasets are likely to come from the same family [96] , [97] , [98] . In that case, a high profile similarity between training and test datasets occurs, resulting in performance evaluation bias. The prediction accuracy of models utilizing sequence profiles is enhanced by the redundancy found in the sequence profiles of the training and test datasets which lead to an invalid evaluation result. Urban et al. [55] also proposed an evaluation protocol, named EVALPro, to measure the performance of the PSSP models with the assessment of profile similarity between training and test proteins. EVALPro employed Gaussian process regression (GPR) to portray the relationship between the profile similarity of training and test proteins and the accuracy gained.

In addition to the one-hot encoding of amino acids and the sequence profiles, the physicochemical properties of amino acids are commonly used as an input feature for the PSSP model. The physicochemical properties include the steric parameter (graph shape index), polarizability, normalized Van der Waals volume, hydrophobicity, isoelectric point, helix probability, and sheet probability. The values of those parameters usually are those specified in Table 1 of [28] .

Single-sequence prediction. Several studies have begun to develop PSSP models that only accept one-hot encoding of amino acids and do not use sequence profiles. It is motivated by the argument that protein structures are only influenced by the amino acid sequences and not by evolutionary information. The PSSP models in this category include SPIDER3-Single [74] , ProteinUnet [99] , SPOT-1D-Single [100] , and S4PRED [70] . With only using amino acid encoding, the highest reported Q3 accuracy is 75.3%, achieved by S4PRED on the CB513 dataset [70] .

Non-standard features. Besides the standard and sequence-only features, previous PSSP studies also used non-standard features and evaluated their impacts on the performance of the PSSP model. Hanson et al. [36] proposed a predicted contact map [101] as an additional input feature in the PSSP model and showed that using a contact map successfully increases accuracy. A conservation score was proposed as an input feature for eCRRNN [38] . However, the effect of this conservation score on eCRRNN accuracy was not mentioned.

4.2. Model architectures

Various deep learning methods have been employed in the architecture of previous PSSP models. We collected all the PSSP publications, including journal papers and conference proceedings during 2016–2021 and we summarized the usage of popular deep learning methods in Fig. 4 . The chart shows that CNN and RNN (including LSTM and GRU) are the two most dominant methods implemented in DNN-based PSSP models.

Deep learning methods and the number of PSSP works employing them during 2016–2021.

Extracting local contexts using convolutional networks. A protein secondary structure element is a local conformation formed in the polypeptide chain of the protein, composed of several amino acids. In physical space, the amino acids that make up a single secondary structure element interact with each other. From the computational point of view, these interactions, in the form of hydrogen bonds, are often invisible in the input features fed to PSSP models. Therefore, identification of local contexts/interactions on amino acid sequences needs to be done by PSSP models, and CNN has been extensively used in previous PSSP models for this purpose.

Both one-dimensional (CNN-1D) and two-dimensional CNN (CNN-2D) have been employed in previous PSSP models. CNN-1D is used more frequently since the input of a PSSP model is in the form of sequences. However, several models used CNN-2D in their architectures. CNN-2D is used in PSSP model architecture to extract temporal and spatial features of the input sequences better. Feature vectors (PSSM and one-hot encoding) of a fixed length residue window were employed in [102] , [103] , [104] , [82] as input to the two-dimensional CNN.

DeepACLSTM [105] used asymmetric convolutional filters, including two different filters, namely 1 × 2 d and k × 1 , to extract the local dependency feature of the amino acid sequence. These asymmetric convolutional filters are implemented using CNN-1D and CNN-2D. Shapovalov et al. [13] proposed a PSSP model that employed four stacked convolutional layers in its architecture. Jalal et al. [106] used multi-input CNN layers and merged the convolution outputs of each input channel.

Several studies proposed modified CNN architectures. Lin et al. [107] implemented multilayer shift and stitch in deep convolutional networks for their PSSP model to accelerate training and testing time. CNNH_PSS [108] used a highway to connect two adjacent convolutional layers to send information from the first layer to the second layer. Long and Tian [73] proposed a PSSP model with context convolutional networks, in which standard convolutional and dilated convolutional operations were joined. OCLSTM [68] optimized the parameters used in CNN using Bayesian optimization. IGPRED [66] combined CNN and graph convolutional networks.

Various architectures derived from standard CNN, such as ResNet, inception networks, U-shape networks, and fractal networks, were also employed in previous PSSP models. SPOT-1D [36] and SPOT-1D-Single [100] exploited residual networks in their architectures. Residual networks were also used in [109] . Inception networks have been utilized in several PSSP models, such as MUFOLD-SS [109] and SAINT [110] . DNSS2 [67] developed several model architectures and used various types of convolutional networks, including standard CNN, ResNet (residual block), InceptionNet (inception block), convolutional residual memory (CRM), and FractalNet. The U-shape networks have been utilized in ProteinUNet [99] .

The kernel size used in convolutional layers in a PSSP model varies, i.e., 3, 5, 7, and 11. Previous researchers determined the most appropriate kernel size by experimentation. It means that the kernel size used in PSSP models is not standardized, and different architectures may use different kernel sizes. SecNet [13] was built with stacked CNN layers. Experimentation on SecNet found that a kernel size of 7 achieved the highest prediction accuracy, and lower accuracy was achieved using larger and smaller kernel sizes. The kernel size below 7 has worse performance than that above 7. It was probably because the hydrogen bonds in the helix are formed between 3, 4, and 5 residues apart. Moreover, the average length of sheets is about six residues [111] . Although Shapovalov et al. [13] did not recommend kernel size below 7, several previous works, including [38] , [35] , used kernel sizes of 3 and 5 and achieved their best performances. Li and Yu [112] employed multiscale CNN layers with a kernel size of 3, 7, and 11. The three feature maps obtained are concatenated together as the local contextual feature vector. Although the kernel sizes mentioned above are widely used in previous PSSP models, larger kernel sizes are used in NetSurfP-2.0 [37] (129 and 257) and OPUS-TASS [56] (11, 21, 31, 41 and 51). Besides the kernel size, the number of filters used in convolutional layers in previous PSSP models also varies.

Capturing long-range interactions using RNN. In addition to identifying the local context/interactions in amino acid sequences, a PSSP model must also identify long-range interactions between amino acid residues. In sheet secondary structure, the interactions of amino acid residues (forming the hydrogen bonds) occur between distant amino acids. RNN is widely used in PSSP models to identify these long-range interactions. Furthermore, bidirectional RNNs have been used to capture forward and backward interactions. Two kinds of RNN implementation are used for this case: LSTM and GRU.

The backbone architecture of SPIDER3 [34] and SPIDER-Single [74] employed two stacked LSTM layers and two fully connected layers. Hu et al. [72] utilized ensemble learning of five base learners, and each of the base learners consisted of stacked bidirectional LSTM layers and fully connected layers. Hattori et al. [113] and Wang et al. [114] also utilized stacked LSTM layers in their PSSP model architectures. Yang et al. [115] combined bidirectional GRU and batch normalization in their PSSP model. Lyu et al. [116] built their PSSP model using two stacked bidirectional GRU layers flanked by two multi-layer-perceptron (MLP) layers. De Oliveira et al. [117] used five bidirectional GRU layers in their global classifier and five random forests in their local classifier. Ensemble learning is utilized to combine local and global classifiers.

Many PSSP models integrated both CNN and RNN in their architectures. The combination of CNN and RNN in the architecture of a PSSP model enables the PSSP model to capture both local contexts and long-range interactions.

Feature extraction using autoencoder. Besides using CNN and RNN for feature extraction, an autoencoder has been utilized to extract features on protein sequences. Several PSSP models have integrated autoencoder in their architectures [118] , [119] , [120] , [121] , [81] .

Prediction algorithms. A typical PSSP model consists of feature extraction modules and prediction modules. For the latter part, many researchers tend to use a fully connected layer and a softmax activation function. However, several PSSP studies have attempted to use different algorithms to make secondary structure predictions. Dionysiou et al. [122] , Sutanto et al. [123] , and Görmez and Aydın [124] used SVM instead of a fully connected layer. Random forest was utilized in several PSSP studies [125] , [126] , [127] , while a Bayesian classifier was used in other studies [119] , [103] , [121] .

Multitask learning. Several previous PSSP researchers designed their models to be able to perform more than one prediction task. For example, a particular model predicts not only the protein secondary structure but also other structural features, such as solvent accessibility, dihedral angles, protein disorder, and protein structural classes. Solvent accessibility measures the exposure of amino acid residues to solvent, and it is essential for understanding and predicting protein structure, function, and interactions [128] . Torsion angles ( ϕ and ψ ) provide a continuous representation of the local conformations [129] rather than the discrete secondary structures. Moreover, these continuous representations are potential for predicted local structure in fragment-free tertiary-structure prediction. Hence, it is advantageous to use protein structural properties in addition to secondary structures for protein tertiary structure prediction. Motivated by that, previous PSSP researchers performed multitask learning to predict not only the secondary structure but also other protein structural features.

Li and Yu [112] developed a PSSP model that predicts both secondary structure and solvent accessibility. SPIDER3 [34] produced several prediction outputs: secondary structure, solvent accessibility, contact number, half-sphere exposure, and dihedral angles. NetSurfP-2.0 [37] was developed using a single model to predict three-state PSSP, eight-state PSSP, relative solvent accessibility, dihedral angles, and protein disorder. MASSP [82] was designed to be able to predict both residue-level structural attributes (secondary structure, location, orientation, and topology) and protein-level structural classes (bitopic, α -helical, β -barrel, and soluble).

Ensemble learning. Ensemble learning is an approach in machine learning that combines several base classifiers/predictors to achieve better prediction performance. Previous PSSP researchers have used ensemble learning to boost the accuracy gained by their models. Hasic et al. [130] developed an ensemble of multiple artificial neural networks, where each network can have distinct parameters and architecture. eCRRNN [38] employed ten independently trained CRRNN models. PORTER5 [131] employed an ensemble of seven BRNN networks. SPOT-1D [36] leveraged the ensemble of several deep learning networks, including LSTM-BRNN and ResNet. Guo et al. [132] leveraged the ensemble learning of conditionally parameterized convolutional networks (CondGCNN) and bidirectional LSTM. Cheng et al. [126] used the ensemble learning of CNN-softmax and LSTM-random forest. AlGhamdi et al. [62] used bagging and AdaBoost with several feed-forward neural networks as the base classifiers.

Iterative learning. SPIDER3 [34] initiated iterative learning to predict protein secondary structure. The model training took four iterations, for which the earlier iteration’s output and the original input features were used as the input for the next iteration. The original input features of SPIDER3 include physicochemical properties of amino acids, PSSM, and HMM profiles.

Attention mechanism. PSSP is similar to machine translation in NLP whereby both cases use sequences as input and output. Previous PSSP models had adopted approaches and methods used in the machine translation field. One of the methods adopted by PSSP models is the attention mechanism. The first PSSP model utilizing the attention mechanism was proposed by Drori et al. [133] , where the attention mechanism proposed in [134] was used. SAINT [110] proposed a new PSSP model architecture by augmenting self-attention (transformer) [135] in Deep3I (deep inception inside inception) architecture. OPUS-TASS [56] utilized modified self-attention (transformer) by dismissing the decoder part. TMPSS [69] utilized a shared attention mechanism initially proposed in [136] for multi-way multi-language machine translation. The attention mechanism was also used in the PSSP model proposed by Guo et al. [132] .

Miscellaneous. Wang et al. [137] integrated a deep convolutional neural network with conditional random field (CRF) to model complex sequence-structure relationships and interdependency between neighboring amino acids. Instead of using real-valued neural networks, complex-valued neural networks were utilized in [140] and applied to a small training set (compact model) of the CB513 dataset. Zhao et al. [79] applied a generative adversarial networks (GAN) to perform feature extraction on the original PSSM data and fed the result into CNN. Yavuz et al. [141] proposed using a clonal selection algorithm in the PSSP model architecture to improve the data before the classification process.

Table 4 summarizes the various deep learning methods involved in the architecture of previous notable PSSP models.

Deep learning methods involved in previous notable PSSP models.

GC: graph convolutional networks; CN: convolutional neural networks; RN: residual neural networks; IN: inception networks; US: U-shape networks; FN: fractal networks; AE: auto-encoder; LS: long short-term memory; GR: gated recurrent unit; FF: feed-forward neural networks; FC: fully connected layers; CR: conditional random field; Slf: self attention (transformer); Glb: global attention.

4.3. Performance

The performance of previous notable PSSP models using hybrid features is shown in Table 5 . The performance of single-sequence-based PSSP models is shown in Table 6 .

Performance of the previous PSSP models using hybrid features including one hot encoding of amino acids, sequence profiles (PSSM, HMM profiles), and other features.

*SOV’99

Performance of the previous PSSP models using only one hot encoding of amino acids (single-sequence prediction).

Both tables show that previous PSSP models are trained on non-uniform datasets. As the training data determine the capability of PSSP models to extract patterns from the input sequences and influence the performance of the classification task, a fair performance comparison cannot be made in this setting. Moreover, using sequence profiles as input of the PSSP models may lead to an evaluation bias primarily when redundancy of profiles between the training dataset and test dataset occurs [55] . Hence, a proper comparison among PSSP models could be made if two conditions are met: (1) PSSP models are trained with identical training datasets, (2) profile redundancy between training and test dataset is diminished.

5. New approaches for feature generation

The favorable outcome of language models (LMs) in NLP has driven researchers to implement LMs in protein structure prediction. Researchers have developed protein LMs to learn the biological properties of large protein sequences in the databases [142] , [49] , [50] . The embedding features derived from these LMs carry contextual features of the amino acid residues [143] . These embedding features may replace sequence profiles for the prediction input.

Heinzinger et al. [142] adopted the bidirectional language model ELMo (Embeddings from Language Models) [144] to build a protein representation in the form of continuous vectors (embeddings) called as SecVec (sequence-to-vector). They trained ELMo on UniRef50 and assessed the predictive capability of the embeddings by applications to per-residue (word-level) and per-protein (sentence-level) tasks. For the per-residue task, they evaluated PSSP performance given one-hot amino acid encoding, SecVec embeddings, evolutionary profiles, or their combinations as inputs. SeqVec embeddings produced the best performance for prediction without evolutionary information, although it did not improve over the best existing method using evolutionary information. Regarding the computation time, generating SecVec embedding is about 60-fold faster than generating HMM profiles using HHblits.

ProtTrans [49] trained six LMs in NLP, including T5 [145] , Electra [146] , BERT [147] , ALBERT [148] , Transformer-XL [149] , and XLNet [150] on protein sequences. Five models, including ProtTXL, ProtBert, ProtXLNet, ProtAlbert, and ProtElectra, are trained using UniRef100 [151] . Besides UniRef100, ProtBert and ProtXL are also trained using BFD [152] . ProtT5 is trained on UniRef50 [151] and also trained on BFD. ProtTrans initially tokenizes the protein sequences. For the per-residue task, the token will be each amino acid. It then adds positional encoding for each respective residue. Afterward, the resulting vectors are passed through one of the ProtTrans models to create embeddings for each input token. The last hidden state of the Transformer’s attention stack of the models is used as input for the task-specific predictors (i.e., three-state PSSP model). ProtTrans experiments showed that the Q3 accuracy on CASP12 achieved by using ProtTrans embeddings is higher than using word2vec embeddings. Using the same test dataset (CASP12), the ProtTXL version is less accurate compared to an existing ELMo/LSTM-based solution (SeqVec) [142] , while all other Transformer-models outperformed the SeqVec model. Moreover, ProtTrans resulted in lower Q3 accuracy than NetSurfP-2.0 [37] , which used evolutionary information.

ESM-1b [50] used up to 250 million sequences of the UniParc database [153] , which has 86 billion amino acids. ESM-1b was trained using Transformer [135] . Transformer receives sequences of amino acids (tokens) and produces sequences of log probabilities. The output sequences of Transformer are then optimized using the masked language modeling objective. In this case, each sequence is modified by replacing a fraction of the amino acid sequence with a special mask token. The network is then trained to predict the missing tokens from the modified sequence. The purpose of using masked language modeling is to reveal the relationships/dependencies between the masked and non-masked regions. ESM-1b’s effectiveness in increasing PSSP performance was tested on the baseline PSSP model, NetSurfP-2.0. The result showed that including the ESM-1b embedding feature as NetSurfP-2.0 input substantially increases the performance of NetSurfP-2.0.

The ProtTrans and ESM-1b embedding features have been utilized to train the following PSSP models, NetSurfP-3.0 [154] , DML_SS [143] , and SPOT-1D-LM [155] . Moreover, these embedding features have been employed to train the contact map prediction model, SPOT-Contact-LM [156] , and the inter-residue distance predictor [157] .

6. AlphaFold and its impact

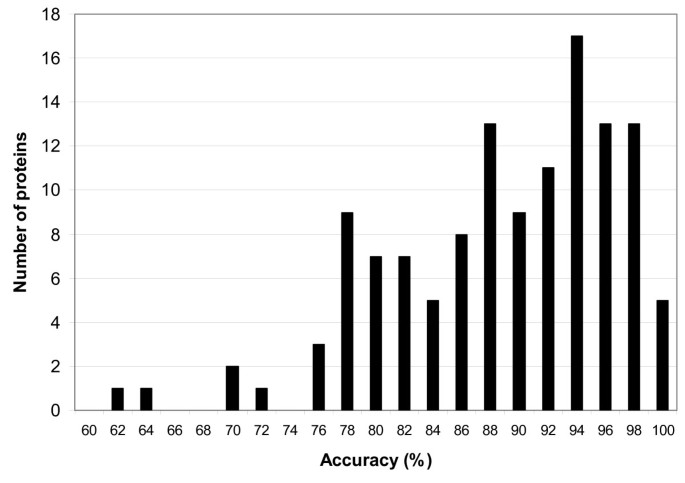

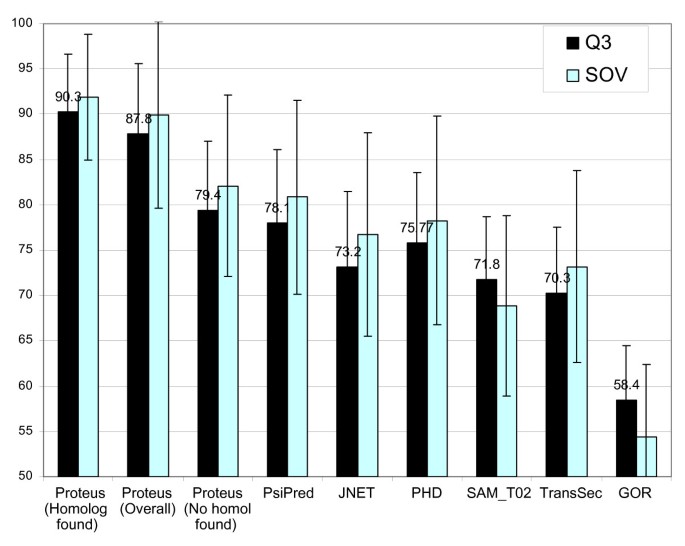

AlphaFold [3] became a revolution in the field of structural bioinformatics after it was able to predict 3D coordinates of protein structures with high accuracy. AlphaFold succeeded in predicting protein structures in the critical assessment of protein structure prediction round 14 (CASP14) [87] , a biennial protein structure competition held by the international community. AlphaFold can predict not only the 3D structure of single-chain proteins but also protein complexes [158] .