A Comparative Analysis of XGBoost

- November 2019

- Universidad Autónoma de Madrid


Tree Boosting Data Competitions with XGBoost


Title: XGBoost: A Scalable Tree Boosting System

Abstract: Tree boosting is a highly effective and widely used machine learning method. In this paper, we describe a scalable end-to-end tree boosting system called XGBoost, which is used widely by data scientists to achieve state-of-the-art results on many machine learning challenges. We propose a novel sparsity-aware algorithm for sparse data and weighted quantile sketch for approximate tree learning. More importantly, we provide insights on cache access patterns, data compression and sharding to build a scalable tree boosting system. By combining these insights, XGBoost scales beyond billions of examples using far fewer resources than existing systems.

| Comments: | KDD'16 changed all figures to type1 |

| Subjects: | Machine Learning (cs.LG) |

| Cite as: | [cs.LG] |


- Corpus ID: 114191144

Tree Boosting With XGBoost - Why Does XGBoost Win "Every" Machine Learning Competition?

- Didrik Nielsen

- Published 2016


TreeBoosting-03: Why Does XGBoost Win Every Machine Learning Competition?

Introduction

In earlier parts of the Tree Boosting series, we covered the basics of decision trees, which are used as the base learners of tree boosting algorithms. We also discussed several approaches to building ensemble models from decision trees, such as bagging, random forests and boosting.

In this post, we will dive deep into tree boosting methods and then answer the question “Why does XGBoost win ‘every’ machine learning competition?”. The content of this post is drawn from a one-hundred-page paper named Tree Boosting With XGBoost (Nielsen, 2016).

Supervised Learning

Supervised learning is a part of Machine Learning, which is concerned with modelling the relationship between a response variable $Y$ and a set of predictor variables $X$.

Predictive Modelling

There are two kinds of modelling: explanatory and predictive. In explanatory modelling, we are interested in understanding the causal relationship between $X$ and $Y$, while predictive modelling is concerned with predicting $Y$ by using $X$ as predictors. From now on, we will concern ourselves with predictive modelling.

The Loss Function

In the statistical view, predictive modelling can be viewed as a problem of function estimation. The prediction accuracy of the function is measured using a loss function, which measures the discrepancy between a prediction and the true outcome.

In theory, we can use any loss function that reflects the discrepancy between the observations and the predictions. In practice, however, a few popular loss functions tend to be used for a wide variety of problems. Many of the loss functions used in practice are likelihood-based, i.e. negative log-likelihoods.

Likelihood-based Loss Functions

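For regression, we assume a conditional Gaussian distribution

$$ Y \mid x \sim \mathcal{N}(f(x), \sigma^2). $$

The loss function based on this likelihood is

$$ L(y, f(x)) = \frac{1}{2} \log(2 \pi \sigma^2) + \frac{1}{2 \sigma^2} (y - f(x))^2. $$

Maximum likelihood estimation under a Gaussian error assumption is, however, equivalent to least-squares regression, since minimizing this loss is equivalent to minimizing the squared error loss

$$ L(y, f(x)) = (y - f(x))^2. $$

For binary classification, the Bernoulli distribution is useful. Letting $ \mathcal{Y} = \{ 0, 1 \} $, we can assume

$$ Y \mid x \sim \text{Bernoulli}(p(x)). $$

The loss function based on the Bernoulli likelihood,

$$ L(y, p(x)) = -y \log p(x) - (1 - y) \log(1 - p(x)), $$

is also referred to as the log-loss, the cross-entropy or the Kullback-Leibler information.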
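As a minimal sketch of the losses named in this section, the snippet below writes the squared error, the Gaussian negative log-likelihood and the log-loss as plain Python functions. The function names and the example values are illustrative assumptions, not part of the original paper.

```python
import math


def squared_error(y, f):
    """Squared error loss for a real-valued prediction f."""
    return (y - f) ** 2


def gaussian_nll(y, f, sigma=1.0):
    """Negative log-likelihood of y under a Gaussian with mean f and std sigma."""
    return 0.5 * math.log(2 * math.pi * sigma ** 2) + (y - f) ** 2 / (2 * sigma ** 2)


def log_loss(y, p):
    """Bernoulli negative log-likelihood for y in {0, 1} and probability p in (0, 1)."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


# Two candidate predictions for the same observed outcome (hypothetical values).
y_obs, pred_a, pred_b = 2.0, 1.5, 3.0

# The Gaussian NLL is a constant plus a positive multiple of the squared error,
# so both losses agree on which prediction is better.
same_ranking = (
    (gaussian_nll(y_obs, pred_a) < gaussian_nll(y_obs, pred_b))
    == (squared_error(y_obs, pred_a) < squared_error(y_obs, pred_b))
)

# Predicting probability 0.5 for an outcome that turns out positive costs log(2).
coin_flip_loss = log_loss(1, 0.5)
```

The ranking check illustrates why the Gaussian likelihood and least squares lead to the same fitted function: the two losses differ only by terms that do not depend on the prediction.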

The Risk Function

The loss function measures the accuracy of a prediction after the outcome is observed. At the time we make the prediction, however, the true outcome is still unknown, and the loss incurred is consequently a random variable $L(Y, f(X))$. The true risk of a function $f$ is defined as the expected loss

$$ R(f) = E[L(Y, f(X))]. $$

The target function is now defined as the risk minimizer

$$ f^* = \arg\min_f R(f), $$

and the goal is to estimate this target function.

Empirical Risk Minimization

Unfortunately, since we may never know the true risk $R(f)$, we need to rely on the empirical risk

$$ \hat{R}(f) = \frac{1}{n} \sum_{i=1}^n L(y_i, f(x_i)) $$

when inferring our model.

By the strong law of large numbers we have that

$$ \lim_{n \to \infty} \hat{R}(f) = R(f) $$

almost surely.

The problem of function estimation is now to estimate the empirical target function

$$ \hat{f} = \arg\min_{f \in \mathcal{F}} \hat{R}(f). $$

Empirical risk minimization (ERM) is an induction principle which relies on minimization of the empirical risk. ERM is a criterion for selecting the optimal function $\hat{f}$ from a set of functions $\mathcal{F}$, the so-called model class. The choice of $\mathcal{F}$ is of major importance.

Perhaps the most popular model class is the class of linear models

$$ \mathcal{F} = \left\{ f : f(x) = \theta_0 + \sum_{j=1}^p \theta_j x_j \right\}. $$
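To make empirical risk minimization concrete, here is a small sketch that fits a one-predictor linear model by minimizing the empirical squared error risk in closed form. The data and the helper names (`empirical_risk`, `fit_linear_1d`) are made up for illustration.

```python
def squared_error(y, f):
    return (y - f) ** 2


def empirical_risk(f, xs, ys, loss):
    """Average loss of the function f over the data set."""
    return sum(loss(y, f(x)) for x, y in zip(xs, ys)) / len(xs)


def fit_linear_1d(xs, ys):
    """Least-squares estimates (theta_0, theta_1) for f(x) = theta_0 + theta_1 * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = s_xy / s_xx
    return mean_y - slope * mean_x, slope


# Hypothetical data set with a roughly linear relationship.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.1, 2.9, 5.2, 6.8]

theta0, theta1 = fit_linear_1d(xs, ys)
risk_fitted = empirical_risk(lambda x: theta0 + theta1 * x, xs, ys, squared_error)
# Any other member of the model class has empirical risk at least as large.
risk_other = empirical_risk(lambda x: 1.0 + 2.0 * x, xs, ys, squared_error)
```

Here the model class is the set of straight lines, and the learning algorithm is the closed-form least-squares solution, one of the rare cases where ERM has an analytic answer.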

The Learning Algorithm

The model class together with the ERM principle reduces the learning problem to an optimization problem. The computational aspect of the problem is to actually solve the optimization problem defined by ERM. This is the job of the learning algorithm, which is essentially just an optimization algorithm.

The learning algorithm takes a data set $\mathcal{D}$ as input and outputs a fitted model $\hat{f}$. Most model classes have some parameters $\theta$ that the learning algorithm adjusts to fit the data. In this case, model estimation amounts to estimating the parameters $\theta$.

Common learning methods include

- The Constant

- Linear Methods

- Local Regression Methods

- Basis Function Expansions

- Adaptive Basis Function Models

The Optimization Algorithm

Different choices of model classes and loss functions lead to different optimization problems, varying in difficulty and thus requiring different approaches. The simplest problems yield analytic solutions. Most problems, however, require numerical methods.

When the objective function is continuous with respect to $\theta$, we get a continuous optimization problem. When this is not the case, we have a discrete optimization problem. Continuous optimization problems are typically easier to solve than discrete ones, and are thus more desirable when it comes to choosing the model class and loss function.

One notable example of a model class which leads to a discrete optimization problem is tree models. Most model classes, however, lead to continuous optimization problems. There are numerous methods for continuous optimization. Two prominent methods that will be important for tree boosting are gradient descent and Newton’s method. Gradient boosting and Newton boosting are approximate nonparametric versions of these optimization algorithms.

Numerical Optimization in Parameter Space

Assume that we are trying to fit a model $f(x) = f(x;\theta)$ parameterized by $\theta$. The risk of the model can then be written as

$$ R(\theta) = E[L(Y, f(X; \theta))]. $$

Given that $R(\theta)$ is differentiable with respect to $\theta$, we can estimate $\theta$ using a numerical optimization algorithm such as gradient descent or Newton’s method.

For both algorithms, at iteration $m$, the estimate of $\theta$ is updated according to

$$ \theta^{(m)} = \theta^{(m-1)} + \theta_m, $$

where $\theta_m$ is the step taken at iteration $m$.

The resulting estimate of $\theta$ after $M$ iterations can be written as a sum

$$ \theta^{(M)} = \sum_{m=0}^M \theta_m, $$

where $\theta_0$ is an initial guess and $\theta_1$, …, $\theta_M$ are the successive steps taken by the optimization algorithm.

The difference between the two algorithms lies in the step $\theta_m$ they take.

Gradient Descent

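At each iteration, gradient descent takes a step along the direction of steepest descent of the risk, given by the negative gradient at the current estimate $\theta^{(m-1)}$

$$ -g_m = -\nabla_\theta R(\theta) \big|_{\theta = \theta^{(m-1)}}. $$

To be sure of reducing the risk, however, the step taken should not be too long. A popular way to determine the step length $\rho_m$ to take in the steepest descent direction is to use line search

$$ \rho_m = \arg\min_{\rho} R(\theta^{(m-1)} - \rho g_m). $$

The step taken at iteration $m$ can thus be written

$$ \theta_m = -\rho_m g_m. $$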
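The following sketch runs gradient descent with exact line search on a toy risk, $R(\theta) = \frac{1}{2}(\theta_1^2 + 10\,\theta_2^2)$. The quadratic, the starting point and the function names are assumptions chosen so that the line search has a closed form; they are not taken from the paper.

```python
def risk(theta):
    """Toy quadratic risk with Hessian diag(1, 10)."""
    return 0.5 * (theta[0] ** 2 + 10.0 * theta[1] ** 2)


def gradient(theta):
    return [theta[0], 10.0 * theta[1]]


def exact_line_search(g):
    """Step length minimizing the quadratic risk along -g: (g.g) / (g.H.g)."""
    numerator = g[0] ** 2 + g[1] ** 2
    denominator = g[0] ** 2 + 10.0 * g[1] ** 2
    return numerator / denominator if denominator > 0 else 0.0


def gradient_descent(theta0, n_iter):
    theta = list(theta0)
    history = [risk(theta)]
    for _ in range(n_iter):
        g = gradient(theta)
        rho = exact_line_search(g)
        # The step taken is -rho * g, added to the current estimate.
        theta = [t - rho * gi for t, gi in zip(theta, g)]
        history.append(risk(theta))
    return theta, history


theta_hat, history = gradient_descent([10.0, 1.0], 100)
```

Because the step length is chosen by line search, the risk never increases from one iteration to the next, although on this elongated quadratic the iterates zigzag towards the minimum rather than jumping to it.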

Newton’s Method

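Unlike gradient descent, Newton’s method determines both the step direction and step length at the same time. Newton’s method can be motivated as a way to approximately solve

$$ \nabla_{\theta_m} R(\theta^{(m-1)} + \theta_m) = 0. $$

By doing a second-order Taylor expansion, we get

$$ R(\theta^{(m-1)} + \theta_m) \approx R(\theta^{(m-1)}) + g_m^T \theta_m + \frac{1}{2} \theta_m^T H_m \theta_m, $$

where $g_m$ is the gradient and $H_m$ is the Hessian matrix at the current estimate $\theta^{(m-1)}$,

$$ H_m = \nabla_\theta^2 R(\theta) \big|_{\theta = \theta^{(m-1)}}. $$

The solution to this is given by

$$ \theta_m = -H_m^{-1} g_m. $$

From the discussion above, we can see that Newton’s method is a second-order method, since it uses curvature information from the Hessian, while gradient descent is a first-order method that uses only the gradient.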
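As a small illustration of the Newton step, the sketch below minimizes the one-dimensional convex function $R(\theta) = e^{\theta} - 2\theta$, whose minimizer is $\theta = \log 2$. The objective is a made-up example; in one dimension the Hessian is just the second derivative, so the step is the gradient divided by it.

```python
import math


def gradient(theta):
    """First derivative of R(theta) = exp(theta) - 2 * theta."""
    return math.exp(theta) - 2.0


def hessian(theta):
    """Second derivative of R(theta); always positive, so R is convex."""
    return math.exp(theta)


def newton(theta0, n_iter):
    theta = theta0
    for _ in range(n_iter):
        # Newton step: minus the gradient scaled by the inverse Hessian.
        theta = theta - gradient(theta) / hessian(theta)
    return theta


theta_hat = newton(0.0, 8)
```

No separate step length is needed: the curvature sets both the direction and the size of the step, and a handful of iterations is enough to reach the minimizer to high precision.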

Boosting refers to a class of learning algorithms that fit the data by combining multiple simple models. Each simple model is learnt using a base learner, or weak learner, which tends to have limited predictive ability on its own; combined carefully by a boosting algorithm, however, the simple models form a considerably more accurate model. This is the meaning of “boosting”.

AdaBoost is regarded as the first practical boosting algorithm for binary classification. The algorithm iteratively fits a weak learner to weighted versions of the data. At each iteration, the weights are updated such that the misclassified data points receive higher weights.

The resulting model can be written

$$ \hat{f}(x) = \sum_{m=1}^M \hat{\theta}_m \hat{c}_m(x), $$

where $\hat{c}_m(x) \in \{ -1, 1 \} $ are the weak classifiers, $\hat{\theta}_m$ are their weights, and hard classifications are given by $\hat{c}(x) = \text{sign}(\hat{f}(x))$.

In the statistical view of the algorithm, it has been shown that AdaBoost actually minimizes the exponential loss function

$$ L(y, f(x)) = \exp(-y f(x)). $$
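The reweighting idea can be sketched in a few lines. The code below is a minimal discrete AdaBoost with threshold stumps on a ten-point toy data set; the data, the stump parameterization and all function names are illustrative assumptions rather than the algorithm as stated in the paper.

```python
import math


def stump_predict(x, threshold, sign):
    """Weak classifier: predicts `sign` left of the threshold and `-sign` right of it."""
    return sign if x < threshold else -sign


def fit_stump(xs, ys, weights):
    """Return (weighted error, threshold, sign) of the best stump."""
    best = None
    thresholds = [x - 0.5 for x in sorted(set(xs))] + [max(xs) + 0.5]
    for threshold in thresholds:
        for sign in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if stump_predict(x, threshold, sign) != y)
            if best is None or err < best[0] - 1e-12:
                best = (err, threshold, sign)
    return best


def adaboost(xs, ys, n_rounds):
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(n_rounds):
        err, threshold, sign = fit_stump(xs, ys, weights)
        err = min(max(err, 1e-12), 1 - 1e-12)  # guard against division by zero
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, threshold, sign))
        # Misclassified points (y * c(x) = -1) get their weight multiplied by exp(alpha).
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, sign))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def classify(ensemble, x):
    score = sum(alpha * stump_predict(x, t, s) for alpha, t, s in ensemble)
    return 1 if score >= 0 else -1


# Toy data: no single stump classifies these labels correctly.
xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
ensemble = adaboost(xs, ys, 3)
train_errors = sum(classify(ensemble, x) != y for x, y in zip(xs, ys))
```

On this data each individual stump misclassifies at least three points, yet the weighted vote of three stumps classifies every training point correctly, which is the sense in which weak learners are "boosted".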

The early work on boosting focused on binary classification. Later, the view of boosting algorithms as numerical optimization techniques was developed. This led to general boosting algorithms, e.g. gradient boosting, that allow optimization of any differentiable loss function. Boosting thereby became applicable to general regression problems and not only to classification.

Numerical Optimization in Function Space

Above, we have discussed numerical optimization in parameter space. We will now discuss numerical optimization in function space.

Note that minimizing

$$ R(f) = E[L(Y, f(X))] $$

is equivalent to minimizing

$$ R(f(x)) = E[L(Y, f(x)) \mid X = x] $$

for each $x$.

At a current estimate $f^{(m-1)}$, the “step” $f_m$ is taken in function space to obtain $f^{(m)}$. Analogously to the parameter optimization update, we can write the update at iteration $m$ as

$$ f^{(m)}(x) = f^{(m-1)}(x) + f_m(x). $$

The resulting estimate of $f$ after $M$ iterations can be written as a sum

$$ f^{(M)}(x) = \sum_{m=0}^M f_m(x), $$

where $f_0$ is an initial guess and $f_1$, …, $f_M$ are the successive “steps” taken in function space.

Gradient Descent in Function Space

As with gradient descent in parameter space, the direction of steepest descent of the risk is given by the negative gradient

$$ -g_m(x) = -\left[ \frac{\partial R(f(x))}{\partial f(x)} \right]_{f(x) = f^{(m-1)}(x)}. $$

The step length $\rho_m$ to take in the steepest descent direction can be determined using line search

$$ \rho_m = \arg\min_{\rho} E[L(Y, f^{(m-1)}(x) - \rho g_m(x)) \mid X = x]. $$

The “step” taken at each iteration $m$ is then given by

$$ f_m(x) = -\rho_m g_m(x). $$

Performing updates iteratively according to this yields the gradient descent algorithm in function space.

Newton’s Method in Function Space

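The Newton “step” in function space is given by

$$ f_m(x) = -\frac{g_m(x)}{h_m(x)}, $$

where $g_m(x)$ is the gradient and $h_m(x)$ is the Hessian at the current estimate,

$$ h_m(x) = \left[ \frac{\partial^2 R(f(x))}{\partial f(x)^2} \right]_{f(x) = f^{(m-1)}(x)}. $$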

Boosting Algorithms

As seen from the previous sections, boosting fits ensemble models of the kind

$$ f(x) = \sum_{m=0}^M f_m(x). $$

These can be rewritten as adaptive basis function models

$$ f(x) = \theta_0 + \sum_{m=1}^M \theta_m \phi_m(x), $$

where $f_0(x) = \theta_0$ and $f_m(x) = \theta_m \phi_m(x)$ for $m = 1, …, M$.

Most boosting algorithms can be seen to solve

$$ \{ \hat{\theta}_m, \hat{\phi}_m \} = \arg\min_{\{ \theta_m, \phi_m \}} \sum_{i=1}^n L(y_i, \hat{f}^{(m-1)}(x_i) + \theta_m \phi_m(x_i)) $$

either exactly or approximately at each iteration. Gradient boosting and Newton boosting can be viewed as general algorithms that solve this problem approximately for any suitable loss function. They can also be viewed as empirical versions of the numerical optimization algorithms in function space developed above.

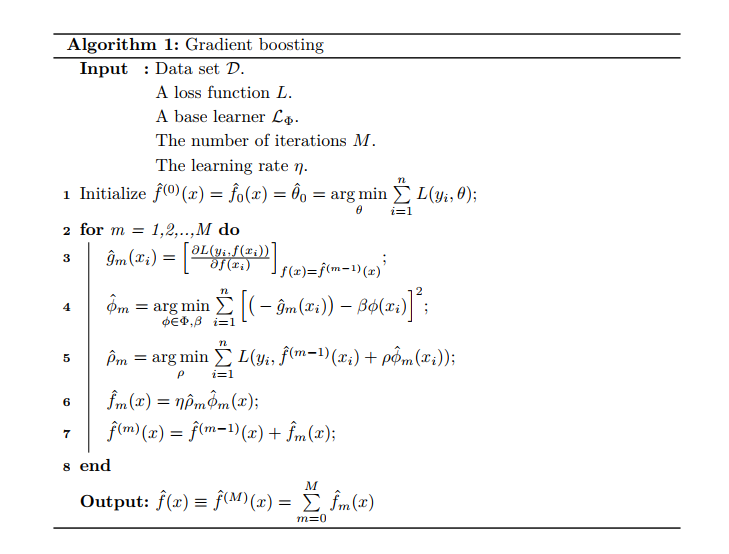

Gradient Boosting

The development of gradient boosting is based on gradient descent in function space that we derived before. Here, the empirical risk will take the place of the true risk.

The empirical version of the negative gradient is given by

$$ -\hat{g}_m(x_i) = -\left[ \frac{\partial L(y_i, f(x_i))}{\partial f(x_i)} \right]_{f(x) = \hat{f}^{(m-1)}(x)}. $$

Note that this empirical gradient is only defined at the data points $ \{ x_i \}_{i=1}^n $. Thus, to generalize to other points and prevent overfitting, we need to learn an approximate negative gradient using a set of basis functions $\Phi$.

At iteration $m$, the basis function $\phi_m \in \Phi$ is learnt from the data such that it produces output $ \{ \phi_m(x_i) \}_{i=1}^n $ which is most highly correlated with the negative gradient. This is obtained by

$$ \hat{\phi}_m = \arg\min_{\phi \in \Phi, \beta} \sum_{i=1}^n \left[ \left( -\hat{g}_m(x_i) \right) - \beta \phi(x_i) \right]^2. $$

The basis function $\phi_m$ is learnt using a base learner, with the squared error loss used as a surrogate loss. The step length $\rho_m$ to take in this step direction can subsequently be determined using line search

$$ \hat{\rho}_m = \arg\min_{\rho} \sum_{i=1}^n L(y_i, \hat{f}^{(m-1)}(x_i) + \rho \hat{\phi}_m(x_i)). $$

A regularization technique is also used, where the step length at each iteration is multiplied by some factor $0 < \eta \leq 1$. The factor $\eta$ is sometimes referred to as the learning rate, as lowering it slows down learning.

Combining all this, the “step” taken at each iteration $m$ is given by

$$ \hat{f}_m(x) = \eta \hat{\rho}_m \hat{\phi}_m(x). $$

Doing this iteratively yields the gradient boosting procedure.
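The procedure can be sketched with regression stumps as the base learner. The code below assumes the loss $L(y, f) = \frac{1}{2}(y - f)^2$, for which the negative gradient is simply the residual and the line search has a closed form; the data set and function names are made up for illustration and this is not a reproduction of the paper's Algorithm 1.

```python
def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


def fit_stump(xs, targets):
    """Least-squares regression stump: returns (threshold, left_value, right_value)."""
    best = None
    values = sorted(set(xs))
    for a, b in zip(values, values[1:]):
        threshold = (a + b) / 2
        left = [t for x, t in zip(xs, targets) if x < threshold]
        right = [t for x, t in zip(xs, targets) if x >= threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((t - left_mean) ** 2 for t in left)
               + sum((t - right_mean) ** 2 for t in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1], best[2], best[3]


def gradient_boost(xs, ys, n_rounds, eta=0.1):
    """Gradient boosting for L(y, f) = (y - f)^2 / 2; returns the training MSE per round."""
    preds = [sum(ys) / len(ys)] * len(xs)  # initial guess f_0: the best constant
    history = [mse(ys, preds)]
    for _ in range(n_rounds):
        # Negative gradient of the loss at the current predictions (the residuals).
        neg_grad = [y - p for y, p in zip(ys, preds)]
        threshold, left_value, right_value = fit_stump(xs, neg_grad)
        phi = [left_value if x < threshold else right_value for x in xs]
        # Line search for the step length (closed form for squared error).
        denom = sum(v * v for v in phi)
        rho = sum(g * v for g, v in zip(neg_grad, phi)) / denom if denom > 0 else 0.0
        # Shrink the step by the learning rate eta.
        preds = [p + eta * rho * v for p, v in zip(preds, phi)]
        history.append(mse(ys, preds))
    return history


# Hypothetical one-dimensional data with three rough levels.
xs = [float(i) for i in range(10)]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.8, 5.2, 5.0, 4.9, 5.1]
history = gradient_boost(xs, ys, n_rounds=200, eta=0.1)
```

With a small learning rate each round removes only a fraction of the remaining residual, so many rounds are needed, but the training error decreases at every step.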

Gradient Boosting Algorithm

Newton Boosting

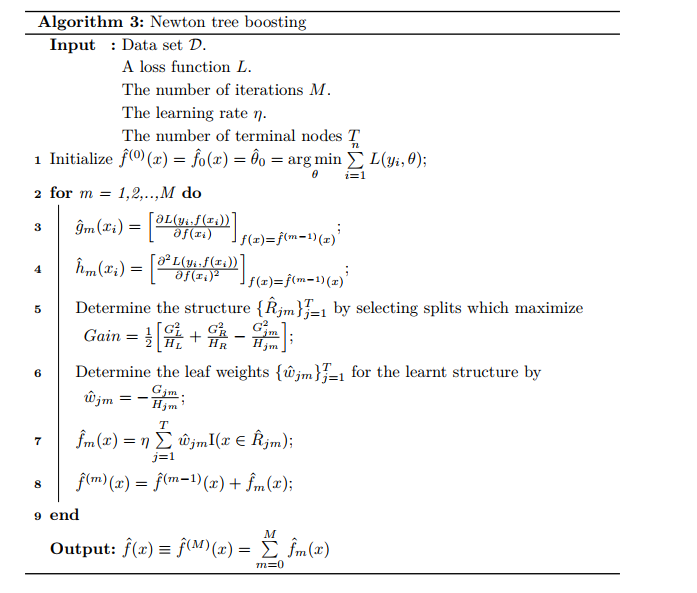

As with gradient boosting, the empirical gradient is defined solely at the data points. We thus also need a base learner here to select a basis function from a restricted set of functions. The Newton “step” is found by solving

$$ \hat{\phi}_m = \arg\min_{\phi \in \Phi} \sum_{i=1}^n \left[ \hat{g}_m(x_i) \phi(x_i) + \frac{1}{2} \hat{h}_m(x_i) \phi(x_i)^2 \right], $$

where $\hat{g}_m(x_i)$ is the gradient and $\hat{h}_m(x_i)$ is the Hessian, i.e. the first and second derivatives of the loss at data point $x_i$ with respect to the current prediction.

The “step” taken at each iteration $m$ is given by

$$ \hat{f}_m(x) = \eta \hat{\phi}_m(x). $$

Repeating this iteratively yields the Newton boosting procedure. Note that the boosting algorithm developed here is the one used by XGBoost.
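A minimal sketch of Newton boosting for binary classification with the log-loss follows, where the model output is the log-odds. For this loss the gradient is $p - y$ and the Hessian is $p(1 - p)$, with $p$ the predicted probability. The data, the stump base learner and the zero initial guess are simplifying assumptions made here, not details taken from the paper or from XGBoost's implementation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mean_log_loss(ys, scores):
    return -sum(y * math.log(sigmoid(s)) + (1 - y) * math.log(1 - sigmoid(s))
                for y, s in zip(ys, scores)) / len(ys)


def fit_newton_stump(xs, g, h):
    """Stump minimizing sum_i [g_i * phi(x_i) + 0.5 * h_i * phi(x_i)^2]."""
    best = None
    values = sorted(set(xs))
    for a, b in zip(values, values[1:]):
        threshold = (a + b) / 2
        g_left = sum(gi for x, gi in zip(xs, g) if x < threshold)
        h_left = sum(hi for x, hi in zip(xs, h) if x < threshold)
        g_right = sum(g) - g_left
        h_right = sum(h) - h_left
        # Value of the quadratic objective at the optimal leaf weights -G / H.
        objective = -0.5 * (g_left ** 2 / h_left + g_right ** 2 / h_right)
        if best is None or objective < best[0]:
            best = (objective, threshold, -g_left / h_left, -g_right / h_right)
    return best[1], best[2], best[3]


def newton_boost(xs, ys, n_rounds, eta=0.1):
    scores = [0.0] * len(xs)  # simplifying assumption: start from log-odds 0
    history = [mean_log_loss(ys, scores)]
    for _ in range(n_rounds):
        probs = [sigmoid(s) for s in scores]
        g = [p - y for p, y in zip(probs, ys)]  # first derivative of the log-loss
        h = [p * (1 - p) for p in probs]        # second derivative of the log-loss
        threshold, w_left, w_right = fit_newton_stump(xs, g, h)
        scores = [s + eta * (w_left if x < threshold else w_right)
                  for x, s in zip(xs, scores)]
        history.append(mean_log_loss(ys, scores))
    return history


# Hypothetical data with repeated x values, so the classes are not separable.
xs = [0, 0, 0, 1, 1, 1, 2, 2, 2, 3, 3, 3]
ys = [0, 0, 1, 0, 0, 1, 0, 1, 1, 1, 1, 0]
history = newton_boost(xs, ys, n_rounds=50, eta=0.1)
```

Unlike the gradient boosting sketch, no line search appears here: the Hessian supplies the step length, and only the learning rate scales the step.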

Newton Boosting Algorithm

Tree Boosting Methods

Using trees as base models for boosting is a very popular choice. In practice, gradient boosting has been particularly successful when applied to tree models, in which case it fits additive tree models. We will refer to this method as MART (Multiple Additive Regression Trees); it is also known as GBRT (Gradient Boosted Regression Trees) and GBM (Gradient Boosting Machine), and was devised by Friedman (2001, 2002). More recently, a new tree boosting method has come on stage and quickly gained popularity: XGBoost, introduced by Chen and Guestrin in 2016. While the two tree boosting methods are conceptually similar, they differ in several ways, notably in the regularization techniques they offer and the boosting algorithm they employ.

Boosted tree models can be viewed as adaptive basis function models.

Boosting tree models results in a sum of multiple trees $f_1$, …, $f_M$. They are therefore also referred to as tree ensembles or additive tree models. While predictive ability is greatly increased through boosting, the main drawback of boosted tree models compared to single tree models is that most of the interpretability is lost.

Regularization

Complexity constraints.

There are three ways of controlling the complexity of an additive tree model:

- Constraining the number of trees.

- Restricting the maximum number of terminal nodes of each individual tree.

- Limiting the minimum number of observations falling in any terminal node.

For additive tree models, shallow regression trees are commonly used, i.e. regression trees with few terminal nodes.

Complexity Penalization

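One of the core improvements of XGBoost over MART is that it offers the possibility of penalizing the complexity of the trees. The penalty is the sum of the complexity penalties of the individual trees in the additive tree model,

$$ \Omega(f) = \sum_{m=1}^M \left[ \gamma T_m + \frac{1}{2} \lambda \lVert w_m \rVert_2^2 + \alpha \lVert w_m \rVert_1 \right], $$

where $T_m$ is the number of terminal nodes of tree $m$ and $w_m$ is its vector of leaf weights.

We see that the regularization term includes penalization of the number of terminal nodes of each individual tree through $\gamma$, $l_2$ regularization of the leaf weights through $\lambda$ and $l_1$ regularization of the leaf weights through $\alpha$.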
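As a small worked example, the sketch below evaluates such a penalty for a toy ensemble, assuming it takes the form $\gamma T + \frac{1}{2}\lambda \lVert w \rVert_2^2 + \alpha \lVert w \rVert_1$ per tree, with $T$ the number of terminal nodes and $w$ the leaf weights. The function names and the numbers are hypothetical.

```python
def tree_penalty(leaf_weights, gamma, lam, alpha):
    """Complexity penalty of one tree, given the weights of its terminal nodes."""
    n_leaves = len(leaf_weights)
    l2_term = 0.5 * lam * sum(w * w for w in leaf_weights)
    l1_term = alpha * sum(abs(w) for w in leaf_weights)
    return gamma * n_leaves + l2_term + l1_term


def ensemble_penalty(trees, gamma, lam, alpha):
    """Total penalty: the sum of the penalties of the individual trees."""
    return sum(tree_penalty(w, gamma, lam, alpha) for w in trees)


# Two hypothetical trees, each described only by its leaf weights.
trees = [[0.5, -1.0], [0.2, 0.1, -0.3]]
penalty_first_tree = tree_penalty(trees[0], gamma=1.0, lam=2.0, alpha=0.5)
penalty_total = ensemble_penalty(trees, gamma=1.0, lam=2.0, alpha=0.5)
```

For the first tree the three terms are $2$, $1.25$ and $0.75$: adding a leaf costs a fixed $\gamma$, while large leaf weights are charged through the $l_2$ and $l_1$ terms.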

Randomization

Introducing randomness into the learning procedure can improve generalization performance. Two common methods are row subsampling and column subsampling. At each boosting iteration, row subsampling draws a random sample of the data without replacement, which differs slightly from the bootstrap, where sampling is done with replacement. Column subsampling means randomly sampling the predictors, similar to the idea used in random forests.

MART includes row subsampling, while XGBoost includes both row and column subsampling. The row and column subsampling fractions $0 < w_r, w_c \leq 1$ are the two randomization hyperparameters for the boosting procedure in XGBoost.
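A minimal sketch of the two kinds of subsampling, drawing indices without replacement at each boosting iteration. The helper name, the rounding rule and the seed are assumptions for illustration; real implementations differ in such details.

```python
import random


def subsample_indices(n_items, fraction, rng):
    """Draw round(fraction * n_items) distinct indices, i.e. without replacement."""
    k = max(1, int(round(fraction * n_items)))
    return sorted(rng.sample(range(n_items), k))


rng = random.Random(0)  # fixed seed so the sketch is reproducible
n_rows, n_cols = 10, 4

# One draw per boosting iteration: half of the rows and half of the columns.
rows = subsample_indices(n_rows, 0.5, rng)
cols = subsample_indices(n_cols, 0.5, rng)
```

Because `random.sample` never returns the same index twice, each selected row appears exactly once, in contrast to a bootstrap sample where rows can repeat.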

Learning Algorithms

When fitting additive tree models, MART and XGBoost employ two different tree boosting algorithms: gradient tree boosting (GTB) and Newton tree boosting (NTB), respectively. For simplicity, we will assume the objective is the empirical risk without any penalization. Both are thus learning algorithms for solving the same empirical risk minimization problem.

The optimization problem is simplified by doing forward stagewise additive modeling (FSAM). This simplifies the problem by performing a greedy search, adding one tree at a time. At iteration $m$, both algorithms seek to minimize the FSAM criterion

$$ J_m(\phi_m) = \sum_{i=1}^n L(y_i, \hat{f}^{(m-1)}(x_i) + \phi_m(x_i)), $$

where the basis functions are trees

$$ \phi_m(x) = \sum_{j=1}^{T} w_{jm} I(x \in R_{jm}), $$

with $R_{jm}$ the region of terminal node $j$ and $w_{jm}$ its leaf weight.

While Newton tree boosting is simply Newton boosting with trees as basis functions, gradient tree boosting is a modification of regular gradient boosting to the case where the basis functions are trees. In this section we will show how Newton tree boosting and gradient tree boosting learn the tree structure and leaf weights at each iteration.

Newton Tree Boosting Algorithm

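The Newton tree boosting algorithm is simply the Newton boosting shown in Algorithm 2 where the basis functions are tree models. As discussed above, Newton boosting approximates the FSAM criterion by

$$ J_m(\phi_m) = \sum_{i=1}^n \left[ \hat{g}_m(x_i) \phi_m(x_i) + \frac{1}{2} \hat{h}_m(x_i) \phi_m(x_i)^2 \right], $$

which is the second-order approximation.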

Gradient Tree Boosting Algorithm

The gradient tree boosting algorithm is closely related to the gradient boosting algorithm shown in Algorithm 1. In line 4, a tree is learnt using the criterion

$$ J_m(\phi_m) = \sum_{i=1}^n \left[ \left( -\hat{g}_m(x_i) \right) - \phi_m(x_i) \right]^2, $$

which is an approximation to the FSAM criterion.

Comparison of Tree Boosting Algorithms

In this section, we will compare the two tree boosting algorithms discussed above. Recall that, at iteration $m$, both algorithms seek to minimize the FSAM criterion, which can be rewritten

$$ J_m(\phi_m) = \sum_{j=1}^{T} \sum_{i \in I_{jm}} L(y_i, \hat{f}^{(m-1)}(x_i) + w_{jm}), $$

where $I_{jm}$ is the set of indices of the observations falling in terminal node $j$ and $w_{jm}$ is the weight of that node.

This is repeated for $m = 1, …, M$ to yield an approximation to the solution of the empirical risk minimization problem.

Gradient tree boosting and Newton tree boosting differ, however, in the tree structures they learn and in how they learn the leaf weights to assign to the terminal nodes of the learnt tree structure.

Learning the Structure

Gradient tree boosting and Newton tree boosting optimize different criteria when learning the structure of the tree at each iteration.

Gradient tree boosting learns the tree which is most highly correlated with the negative gradient of the current empirical risk.

Newton tree boosting, on the other hand, learns the tree which best fits the second-order Taylor expansion of the loss function.

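Learning the structure amounts to searching for splits which maximize the gain. Let $G_{jm} = \sum_{i \in I_{jm}} \hat{g}_m(x_i)$ and $H_{jm} = \sum_{i \in I_{jm}} \hat{h}_m(x_i)$ denote the sums of the gradients and Hessians over the $n_{jm}$ observations in node $j$, and let the subscripts $L$ and $R$ denote the corresponding quantities in the left and right child nodes of a candidate split. For gradient tree boosting, this gain is given by

$$ \text{Gain} = \frac{1}{2} \left[ \frac{G_L^2}{n_L} + \frac{G_R^2}{n_R} - \frac{G_{jm}^2}{n_{jm}} \right], $$

while for Newton tree boosting, the gain is given by

$$ \text{Gain} = \frac{1}{2} \left[ \frac{G_L^2}{H_L} + \frac{G_R^2}{H_R} - \frac{G_{jm}^2}{H_{jm}} \right]. $$

Newton tree boosting learns the tree structure using a higher-order approximation of the FSAM criterion. We would thus expect it to learn better tree structures than gradient tree boosting. The leaf weights learnt during the search for structure are given by

$$ \tilde{w}_{jm} = -\frac{G_{jm}}{n_{jm}} $$

for gradient tree boosting and by

$$ \tilde{w}_{jm} = -\frac{G_{jm}}{H_{jm}} $$

for Newton tree boosting. For gradient tree boosting, however, these leaf weights are subsequently readjusted.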
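To compare the two split criteria numerically, the sketch below assumes the usual forms of the gains: half of $G_L^2/n_L + G_R^2/n_R - G^2/n$ for gradient tree boosting, and the same expression with Hessian sums $H$ in place of the counts $n$ for Newton tree boosting. Here $G$ and $H$ are sums of per-observation gradients and Hessians in a node. The function names and the gradient and Hessian values are made up.

```python
def gradient_gain(g_left, g_right):
    """Split gain when the tree is fit to the negative gradient by least squares."""
    G_L, G_R = sum(g_left), sum(g_right)
    n_L, n_R = len(g_left), len(g_right)
    return 0.5 * (G_L ** 2 / n_L + G_R ** 2 / n_R - (G_L + G_R) ** 2 / (n_L + n_R))


def newton_gain(g_left, h_left, g_right, h_right):
    """Split gain under the second-order approximation of the loss."""
    G_L, G_R = sum(g_left), sum(g_right)
    H_L, H_R = sum(h_left), sum(h_right)
    return 0.5 * (G_L ** 2 / H_L + G_R ** 2 / H_R - (G_L + G_R) ** 2 / (H_L + H_R))


# Hypothetical gradients and Hessians for the observations on each side of a split.
g_left, h_left = [-0.5, -0.5, -0.2], [0.25, 0.25, 0.16]
g_right, h_right = [0.5, 0.8], [0.25, 0.16]

gain_gtb = gradient_gain(g_left, g_right)
gain_ntb = newton_gain(g_left, h_left, g_right, h_right)

# With unit Hessians (as for the loss (y - f)^2 / 2) the two criteria coincide.
gain_unit = newton_gain(g_left, [1.0] * 3, g_right, [1.0] * 2)
```

The two criteria agree exactly when every Hessian equals one; for other losses the Newton gain weights each node by its curvature rather than by its number of observations, which is why the two methods can choose different splits.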

Learning the Leaf Weights

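After a tree structure is learnt, the leaf weights need to be determined. For Newton tree boosting, the final leaf weights are the same as the leaf weights learnt when searching for the tree structure, i.e.

$$ \hat{w}_{jm} = -\frac{G_{jm}}{H_{jm}}, $$

where $G_{jm}$ and $H_{jm}$ are the sums of the gradients $\hat{g}_m(x_i)$ and Hessians $\hat{h}_m(x_i)$ over the observations in terminal node $j$.

Gradient tree boosting, on the other hand, uses a different criterion to learn the leaf weights. The final leaf weights are determined by separate line searches in each terminal node $j = 1, …, T$

$$ \hat{w}_{jm} = \arg\min_{w_j} \sum_{i \in \hat{I}_{jm}} L(y_i, \hat{f}^{(m-1)}(x_i) + w_j), $$

where $\hat{I}_{jm}$ is the set of indices of the observations in terminal node $j$.

Considering this, gradient tree boosting can be seen to generally use a more accurate criterion to learn the leaf weights than Newton tree boosting. These more accurate leaf weights are, however, determined for a less accurate tree structure.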
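The difference between the two ways of setting a leaf weight can be seen on a single terminal node. The sketch below uses the log-loss with log-odds scores; the Newton weight is minus the gradient sum divided by the Hessian sum, and the line search is done by bisection on the derivative of the leaf's loss. The node contents and helper names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def newton_leaf_weight(ys, scores):
    """Leaf weight -G / H from the second-order approximation of the log-loss."""
    probs = [sigmoid(s) for s in scores]
    G = sum(p - y for p, y in zip(probs, ys))
    H = sum(p * (1 - p) for p in probs)
    return -G / H


def line_search_leaf_weight(ys, scores, lo=-20.0, hi=20.0, n_iter=100):
    """Leaf weight minimizing the exact log-loss in the node, found by bisection."""
    def derivative(w):
        # Derivative of the node's total log-loss with respect to w; increasing in w.
        return sum(sigmoid(s + w) - y for y, s in zip(ys, scores))

    for _ in range(n_iter):
        mid = (lo + hi) / 2
        if derivative(mid) > 0:
            hi = mid
        else:
            lo = mid
    return (lo + hi) / 2


# One terminal node holding four observations, all currently scored at log-odds 0.
ys = [1, 1, 1, 0]
scores = [0.0, 0.0, 0.0, 0.0]

w_newton = newton_leaf_weight(ys, scores)
w_line = line_search_leaf_weight(ys, scores)
```

In this node the exact minimizer is the log-odds of the observed frequency, $\log 3 \approx 1.0986$, while the single Newton step gives $1.0$: close, but based on a quadratic approximation of the loss rather than the loss itself.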

Empirical Comparison

To get an idea of how they compare in practice, we will test their performance on two standard datasets, the Sonar and the Ionosphere datasets. The log-loss was used to measure prediction accuracy. We will use only tree stumps (trees with two terminal nodes) and no other form of regularization of the individual trees.

The learning rate was set to $\eta = 0.1$ and the number of trees was set to $M = 10,000$. Predictions were made at each iteration. To get a fairly stable estimate of out-of-sample performance, three repetitions of 10-fold cross-validation were used. The folds were the same for both algorithms.

To study the effect of line search for gradient tree boosting, one fit was also done using gradient boosting with line search.

From the figures, we observe that NTB seems to outperform GTB slightly for the Sonar dataset. For the Ionosphere dataset, NTB converges slightly faster, but GTB outperforms NTB when run for enough iterations. The two algorithms do, however, seem to have very similar performance on both problems. Of course, due to randomness, either algorithm might perform best in practice for any particular data set.

We can also see that line search clearly improves the rate of convergence. In both cases, line search also seems to improve the lowest log-loss achieved. Line search thus seems to be very beneficial for gradient tree boosting.

Why Does XGBoost Win “Every” Competition?

We all know that, for any specific data set, any method may dominate the others. In this section, we will thus provide arguments as to why tree boosting seems to be such a versatile and adaptive approach, yielding good results for a wide range of problems, and why XGBoost may outperform MART for many problems.

Generally, tree boosting is so effective because it fits additive tree models, which have rich representational ability, using adaptively determined neighbourhoods. The property of adaptive neighbourhoods makes it able to use variable degrees of flexibility in different regions of the input space. Consequently, it is able to perform automatic feature selection and capture high-order interactions without breaking down. It can thus be seen to be robust to the curse of dimensionality.

For MART, the number of terminal nodes is kept fixed for all trees, which might be suboptimal. For example, in high-dimensional data sets there might be some group of features which have a high order of interaction with each other, while other features only have lower-order interactions, perhaps only additive structure. We would thus like to use deeper trees for some features than for others. If the number of terminal nodes is fixed, the tree might be forced to do further splitting when it is not necessary. The variance of the additive tree model might thus increase unnecessarily. In contrast, XGBoost uses clever penalization of the individual trees. The trees are consequently allowed to have a varying number of terminal nodes.

Moreover, while MART uses only shrinkage to reduce the leaf weights, XGBoost can also shrink them using penalization. The benefit of this is that the leaf weights are not all shrunk by the same factor, but leaf weights estimated using less evidence in the data will be shrunk more heavily. Again, we see the bias-variance tradeoff being taken into account during model fitting. XGBoost can thus be seen to be even more adaptive to the data than MART.
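A short numerical sketch of this effect, assuming an $l_2$ penalty $\frac{1}{2}\lambda w^2$ on each leaf weight, in which case the Newton leaf weight becomes $-G/(H + \lambda)$ with $G$ and $H$ the gradient and Hessian sums in the leaf. The squared-error setting (each observation contributing a Hessian of one) and the numbers are illustrative assumptions.

```python
def leaf_weight(G, H, lam=0.0):
    """Minimizer of G * w + 0.5 * H * w^2 + 0.5 * lam * w^2."""
    return -G / (H + lam)


def shrinkage_factor(n_obs, lam, mean_residual=1.0):
    """Ratio of penalized to unpenalized leaf weight for a leaf with n_obs observations.

    Assumes the loss (y - f)^2 / 2, so G = -n_obs * mean_residual and H = n_obs.
    """
    G = -n_obs * mean_residual
    H = float(n_obs)
    return leaf_weight(G, H, lam) / leaf_weight(G, H, 0.0)


# Same penalty strength, different amounts of evidence in the leaf.
factor_small_leaf = shrinkage_factor(n_obs=2, lam=1.0)
factor_large_leaf = shrinkage_factor(n_obs=100, lam=1.0)
```

With $\lambda = 1$ the weight of a two-observation leaf is scaled by $2/3$, while that of a hundred-observation leaf is scaled by $100/101$. A learning rate, by contrast, multiplies every leaf weight by the same factor regardless of how many observations support it.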

In addition, XGBoost employs Newton boosting rather than gradient boosting. By doing this, XGBoost is likely to learn better tree structures.

Finally, XGBoost includes an extra randomization parameter. This can be used to decorrelate the individual trees even further, possibly resulting in reduced overall variance of the model.

Nielsen, D. (2016). Tree Boosting With XGBoost - Why Does XGBoost Win “Every” Machine Learning Competition?


christinethier/XGBoost_algorithm


Xgboost_algorithm_master_thesis.

This repository illustrates the code for my Master Thesis, which centers around the implementation of the XGBoost Algorithm for calculating housing prices.

The project incorporates the following algorithms:

- Webscraping

- Random Forest

- Gradient Boosting

The project aims to address the following question: How does the XGBoost algorithm perform as a hedonic housing price model compared to Random Forest and Gradient Boosting, and what impact does it have on the efficiency and interpretation of the estimated housing price?

Abstract: This thesis aims to investigate the performance of the XGBoost algorithm as a hedonic housing price model in comparison to Random Forest and Gradient Boosting. Housing prices in Greater Copenhagen from 2018 to 2022 are obtained by web scraping and used to train the prediction models. Feature importance and partial dependence plots are used to analyze the variables with the most significant impact on the models. The thesis finds size, location, and time to be the most important factors in housing price estimation. The study finds that XGBoost captures about 77% of the variance in housing prices, indicating that more information may be needed to fully understand the factors driving housing prices. Compared to Random Forest and Gradient Boosting, XGBoost outperforms both in terms of prediction accuracy and computational efficiency, with a mean square error of 0.90072 and a total time per tree of 0.04 seconds. A more accurate hedonic housing price model could benefit real estate agents, banks, and credit institutions by providing insights into the housing market and assisting in the decision-making process.


Intermittent Demand Forecasting Using XGBoost Method under Linearly Decreasing Process

| Additional Metadata | |
|---|---|
| Keywords | intermittent demand forecasting; XGBoost; inventory control |
| Thesis Advisor | Dekker, R. |
| Citation | . . Retrieved from http://hdl.handle.net/2105/59099 |

classification performance. The interpretability method to be used is SHAP on both XGBoost and the logistic regression and will be put against the built-in interpretation approach for logistic regression. 1.3 Structure of the thesis The remainder of the thesis is structured as follows. Chapter 2 gives an overview of the literature

In this thesis, the focus is primarily on the machine learning aspect of behavioral biometrics, more specifically applying Extreme Gradient Boosting (XGBoost), a gradient boosting approach, to classify users as either genuine or imposters. The impact of XGBoost has been widely recognized in many machine learning and

More recently, a tree boosting method known as XGBoost has gained popularity by winning numerous machine learning competitions. In this thesis, we will investigate how XGBoost differs from the more traditional MART. We will show that XGBoost employs a boosting algorithm which we will term Newton boosting. This boosting algorithm will further be ...

In this thesis, we will investigate how XGBoost differs from the more traditional MART. We will show that XGBoost employs a boosting algorithm which we will ... This thesis concludes my master's degree in Industrial Mathematics at the Norwegian University of Science and Technology (NTNU). It was written at the Depart-

Finally, an extensive analysis of the XGBoost parameter tuning process is carried out. Figure caption: Average ranks (a higher rank is better) for the tested methods across 28 datasets (critical difference CD = 1.42).

A thesis for the degree of MSc in Applied Data Science, Utrecht, Netherlands. Supervisors: Prof. dr. Y. (Yannis) Velegrakis; V. (Vahid) Shahrivari Joghan, MSc ... XGBoost utilizes a boosting approach, while Random Forest adopts a bagging strategy. The selection of these two models, Random Forest and XGBoost, for this investigation was based on two ...

Master Thesis: Comparing the Performance of XGBoost and Shapley Values to Last Touch Attribution. Harnessing Machine Learning for Marketing Channel Attribution Modeling. Julia Tout (528162). Date: 22 August 2023. Supervisor: Professor Dr. Kathrin Gruber. Second Assessor: Dr. (Erjen) JEM van Nierop

XGBoost was used by every winning team in the top-10. Moreover, the winning teams reported that ensemble methods outperform a well-configured XGBoost by only a small amount [1]. These results demonstrate that our system gives state-of-the-art results on a wide range of problems. Examples of the problems in these winning solutions include: store ...

This Master's Degree Thesis objective is to provide understanding on how to approach a supervised learning predictive problem and illustrate it using a statistical/machine learning algorithm, Tree Boosting. ... The methodology is explained following the XGBoost implementation, which achieved state-of-the-art results in several data competitions ...

Tree Boosting With XGBoost: Why Does XGBoost Win "Every" Machine Learning Competition? Master's thesis, Trondheim, December 2016. NTNU Norwegian University of Science and Technology, Faculty of Information Technology, Mathematics and Electrical Engineering, Department of Mathematical Sciences.


Similarly, Prasanth Senthan et al. [10] considered a comparative study of standalone models using Decision Tree, Neural Network, Logistic Regression, Random Forest, XGBoost, AdaBoost and SVM on the native dataset of the Telecommunication Industry of Sri Lanka containing 10000 samples and concluded that XGBoost performed well, with an accuracy of 82.90%.

Donk, D.M. van den. 2020-04-16. ... XGBoost is better at predicting prepayments than the multinomial logit model. Using SHAP values, this thesis finds that XGBoost is better able to capture the non-linear dependencies of prepayment events on explanatory variables. Although prepayment dynamics are better ...

Master's thesis: Automated machine learning with gradient boosting and meta-learning. van Hoof, J.M.A.P. Award date: 2019. This document contains a student thesis (bachelor's or master's), as authored by a student at Eindhoven University of Technology.

The results of the case study show that, overall, XGBoost performs better than AdaBoost. It performs best with shallow trees, moderate shrinkage, more iterations than the default, and subsampling of both features and training data points.
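The combination described above can be written down as a parameter set. The sketch below is only illustrative: the parameter names follow XGBoost's scikit-learn style interface, but every numeric value is an assumption chosen for the example, not a setting reported by the case study.

```python
# Hypothetical settings illustrating the combination described in the text.
# All numeric values are assumptions for illustration, not reported results.
params = {
    "max_depth": 3,           # shallow trees
    "learning_rate": 0.1,     # moderate shrinkage
    "n_estimators": 500,      # more boosting iterations than a typical default
    "subsample": 0.8,         # subsample training data points (rows) per tree
    "colsample_bytree": 0.8,  # subsample features (columns) per tree
}


def uses_subsampling(p):
    """Return True if both row and feature subsampling are active (< 1.0)."""
    return p["subsample"] < 1.0 and p["colsample_bytree"] < 1.0


print(uses_subsampling(params))
```

With the `xgboost` package installed, a dictionary like this would typically be unpacked into a model constructor, for example `xgboost.XGBClassifier(**params)`.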

XGBoost can thus be seen to be even more adaptive to the data than MART. In addition, XGBoost employs Newton boosting rather than gradient boosting. By doing this, XGBoost is likely to learn better tree structures. Finally, XGBoost includes an extra randomization parameter. This can be used to decorrelate the individual trees even further ...
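To make the difference between the two boosting schemes concrete, the following minimal sketch compares the value assigned to a single leaf under a first-order (gradient) step and a second-order (Newton) step for logistic loss. The function names are hypothetical, the gradient variant is shown before any line search, and the Newton formula is the regularized leaf weight from the XGBoost paper with `reg_lambda` as the L2 penalty.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logistic_grad_hess(y, score):
    """First and second derivative of logistic loss w.r.t. the raw score."""
    p = sigmoid(score)
    return p - y, p * (1.0 - p)


def newton_leaf_weight(labels, scores, reg_lambda=1.0):
    """Newton step for one leaf: w = -sum(g) / (sum(h) + lambda)."""
    stats = [logistic_grad_hess(y, s) for y, s in zip(labels, scores)]
    g_sum = sum(g for g, _ in stats)
    h_sum = sum(h for _, h in stats)
    return -g_sum / (h_sum + reg_lambda)


def gradient_leaf_value(labels, scores):
    """First-order step for one leaf: mean of the negative gradients
    (a simplification that ignores any subsequent line search)."""
    grads = [logistic_grad_hess(y, s)[0] for y, s in zip(labels, scores)]
    return -sum(grads) / len(grads)


# Three examples falling in the same leaf, all with raw score 0.
labels = [1, 1, 0]
scores = [0.0, 0.0, 0.0]
print(newton_leaf_weight(labels, scores))
print(gradient_leaf_value(labels, scores))
```

The Newton step scales the update by the local curvature of the loss, whereas the first-order step uses only the slope.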


In the thesis, the author describes CART because MART uses CART and XGBoost also implements a tree model related to CART. CART grows the tree in a top-down fashion.
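Top-down growth means the root is split first and each child is then split recursively. The sketch below illustrates this for a one-feature regression tree using greedy squared-error splits. It is a simplified illustration under those assumptions, not the thesis author's or XGBoost's implementation, and all function names are hypothetical.

```python
def sse(ys):
    """Sum of squared errors around the mean (0.0 for an empty list)."""
    if not ys:
        return 0.0
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)


def best_split(xs, ys):
    """Return (threshold, total_sse) of the best binary split, or None."""
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold separates equal feature values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        cost = sse(left) + sse(right)
        if best is None or cost < best[1]:
            best = (threshold, cost)
    return best


def grow_tree(xs, ys, max_depth):
    """Grow a tree top-down: split this node first, then recurse on children."""
    split = best_split(xs, ys) if max_depth > 0 else None
    if split is None or split[1] >= sse(ys):
        return {"leaf": sum(ys) / len(ys)}  # no improving split: make a leaf
    threshold = split[0]
    left = [(x, y) for x, y in zip(xs, ys) if x <= threshold]
    right = [(x, y) for x, y in zip(xs, ys) if x > threshold]
    return {
        "threshold": threshold,
        "left": grow_tree([x for x, _ in left], [y for _, y in left], max_depth - 1),
        "right": grow_tree([x for x, _ in right], [y for _, y in right], max_depth - 1),
    }


def predict(tree, x):
    """Follow the splits from the root down to a leaf."""
    while "leaf" not in tree:
        tree = tree["left"] if x <= tree["threshold"] else tree["right"]
    return tree["leaf"]


tree = grow_tree([1, 2, 3, 10, 11, 12], [1, 1, 1, 5, 5, 5], max_depth=2)
print(tree["threshold"], predict(tree, 2), predict(tree, 11))
```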

Official XGBoost Resources. The best source of information on XGBoost is the official GitHub repository for the project. From there you can get access to the Issue Tracker and the User Group that can be used for asking questions and reporting bugs. A great source of links with example code and help is the Awesome XGBoost page. There is also an official documentation page that includes a ...

XGBoost has become a bit legendary in machine learning. Among its accomplishments are: (1) 17 of 29 challenges on machine-learning competition site Kaggle in 2015 were won with XGBoost, eight exclusively used XGBoost, and nine used XGBoost in ensembles with neural networks; and, (2) at KDD Cup 2016, a leading conference-based machine-learning competition ...

Chang, C. 2021-08-30. Intermittent Demand Forecasting Using XGBoost Method under Linearly Decreasing Process. Keywords: intermittent demand forecasting; XGBoost; inventory control. Thesis Advisor: Dekker ...

XGBoost (eXtreme Gradient Boosting) is an advanced implementation of the gradient boosting algorithm. It is a powerful machine learning algorithm that is especially popular for structured or tabular data.

XGBoost is an efficient implementation of gradient boosting for classification and regression problems. It is both fast and efficient, performing well, if not the best, on a wide range of predictive modeling tasks and is a favorite among data science competition winners, such as those on Kaggle. XGBoost can also be used for time series forecasting, although it requires that…