10 Unique Data Science Capstone Project Ideas

A capstone project is a culminating assignment that allows students to demonstrate the skills and knowledge they’ve acquired throughout their degree program. For data science students, it’s a chance to tackle a substantial real-world data problem.

If you’re short on time, here’s the quick answer: some great data science capstone ideas include analyzing health trends, building a movie recommendation system, optimizing traffic patterns, forecasting cryptocurrency prices, and more.

In this comprehensive guide, we will explore 10 unique capstone project ideas for data science students. For each idea, we’ll outline potential data sources, analysis methods, and practical applications.

Whether you want to work with social media datasets, geospatial data, or anything in between, you’re sure to find an interesting capstone topic.

Project Idea #1: Analyzing Health Trends

When it comes to data science capstone projects, analyzing health trends is an intriguing idea that can have a significant impact on public health. By leveraging data from various sources, data scientists can uncover valuable insights that can help improve healthcare outcomes and inform policy decisions.

Data Sources

There are several data sources that can be used to analyze health trends. One of the most common sources is electronic health records (EHRs), which contain a wealth of information about patient demographics, medical history, and treatment outcomes.

Other sources include health surveys, wearable devices, social media, and even environmental data.

Analysis Approaches

When analyzing health trends, data scientists can employ a variety of analysis approaches. Descriptive analysis can provide a snapshot of current health trends, such as the prevalence of certain diseases or the distribution of risk factors.

Predictive analysis can be used to forecast future health outcomes, such as predicting disease outbreaks or identifying individuals at high risk for certain conditions. Machine learning algorithms trained on large datasets can identify these patterns and make predictions. Their accuracy depends on data quality, so it should be checked on data the model has not seen.
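The following minimal Python sketch illustrates the descriptive side by computing the prevalence of a condition within age bands. The records, the field names (`age`, `has_diabetes`) and the band edges are all hypothetical. A real project would use a de-identified EHR extract or survey data, each with its own schema.

```python
from collections import defaultdict

# Hypothetical, de-identified records; real EHR extracts have much richer schemas.
records = [
    {"age": 34, "has_diabetes": False},
    {"age": 52, "has_diabetes": True},
    {"age": 47, "has_diabetes": False},
    {"age": 68, "has_diabetes": True},
    {"age": 71, "has_diabetes": False},
    {"age": 29, "has_diabetes": False},
]


def age_band(age: int) -> str:
    """Map an age to a coarse band (the band edges are an arbitrary choice)."""
    if age < 40:
        return "<40"
    if age < 65:
        return "40-64"
    return "65+"


def prevalence_by_band(rows) -> dict:
    """Return {band: share of records in that band that have the condition}."""
    totals = defaultdict(int)
    cases = defaultdict(int)
    for row in rows:
        band = age_band(row["age"])
        totals[band] += 1
        cases[band] += int(row["has_diabetes"])
    return {band: cases[band] / totals[band] for band in totals}


print(prevalence_by_band(records))
```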

Applications

The applications of analyzing health trends are vast and far-reaching. By understanding patterns and trends in health data, policymakers can make informed decisions about resource allocation and public health initiatives.

Healthcare providers can use these insights to develop personalized treatment plans and interventions. Researchers can uncover new insights into disease progression and identify potential targets for intervention.

Ultimately, analyzing health trends has the potential to improve overall population health and reduce healthcare costs.

Project Idea #2: Movie Recommendation System

When developing a movie recommendation system, there are several data sources that can be used to gather information about movies and user preferences. One popular data source is the MovieLens dataset, which contains a large collection of movie ratings provided by users.

Another source is IMDb, which provides comprehensive information about movies, including user ratings and reviews. Streaming platforms like Netflix and Amazon Prime also collect user ratings and viewing history. That data would be valuable for building an accurate recommendation system, but these platforms generally do not make it public. The Netflix Prize dataset, released for a 2006 competition, is a notable historical exception.

There are several analysis approaches that can be employed to build a movie recommendation system. One common approach is collaborative filtering, which uses user ratings and preferences to identify patterns and make recommendations based on similar users’ preferences.
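Below is a minimal sketch of user-based collaborative filtering. It assumes ratings shaped like MovieLens data (user, movie, rating); the three users and their ratings are made up. The predicted rating is a similarity-weighted average of other users' ratings for the target movie. Cosine similarity is computed only over the movies both users have rated.

```python
import math
from typing import Optional

# Toy ratings in the spirit of MovieLens: {user: {movie: rating}}. Values are made up.
ratings = {
    "alice": {"Heat": 5, "Alien": 4, "Up": 1},
    "bob": {"Heat": 4, "Alien": 5, "Up": 2, "Cars": 1},
    "carol": {"Heat": 1, "Alien": 2, "Up": 5, "Cars": 5},
}


def cosine(u: dict, v: dict) -> float:
    """Cosine similarity computed over the movies both users have rated."""
    common = u.keys() & v.keys()
    if not common:
        return 0.0
    dot = sum(u[m] * v[m] for m in common)
    norm_u = math.sqrt(sum(u[m] ** 2 for m in common))
    norm_v = math.sqrt(sum(v[m] ** 2 for m in common))
    return dot / (norm_u * norm_v)


def predict(user: str, movie: str) -> Optional[float]:
    """Similarity-weighted average of other users' ratings for `movie`."""
    num = den = 0.0
    for other, their in ratings.items():
        if other == user or movie not in their:
            continue
        sim = cosine(ratings[user], their)
        num += sim * their[movie]
        den += abs(sim)
    return num / den if den else None


# Alice resembles Bob (who disliked "Cars") more than Carol (who loved it).
print(predict("alice", "Cars"))
```

Raw cosine similarity on 1-5 star ratings tends to make most pairs of users look similar. Mean-centering each user's ratings (equivalent to Pearson correlation) is a common refinement.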

Another approach is content-based filtering, which analyzes the characteristics of movies (such as genre, director, and actors) to recommend similar movies to users. Hybrid approaches that combine collaborative and content-based filtering are also popular, because they often produce more accurate and diverse recommendations than either technique alone.
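Content-based filtering can be sketched just as simply. In this hypothetical catalog, each movie is described by a set of tags (genre, director). Movies are ranked by their Jaccard similarity to one the user liked. The tag scheme is an assumption for illustration; real projects often use richer representations, such as TF-IDF vectors built from plot summaries or metadata.

```python
# Hypothetical catalog: each movie is described by a set of tags.
catalog = {
    "Alien": {"sci-fi", "horror", "dir:Scott"},
    "Aliens": {"sci-fi", "action", "dir:Cameron"},
    "Blade Runner": {"sci-fi", "noir", "dir:Scott"},
    "Up": {"animation", "family"},
}


def jaccard(a: set, b: set) -> float:
    """Share of tags two movies have in common (0 = none, 1 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similar_to(title: str, k: int = 2) -> list:
    """Return the k movies whose tag sets overlap most with `title`."""
    target = catalog[title]
    scored = [(jaccard(target, tags), other)
              for other, tags in catalog.items() if other != title]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best first, ties by name
    return [other for _, other in scored[:k]]


print(similar_to("Alien"))
```

A hybrid system could combine this score with a collaborative-filtering score, for example through a weighted sum.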

A movie recommendation system has numerous applications in the entertainment industry. One application is to enhance the user experience on streaming platforms by providing personalized movie recommendations based on individual preferences.

This can help users discover new movies they might enjoy and improve overall satisfaction with the platform. Additionally, movie recommendation systems can be used by movie production companies to analyze user preferences and trends, aiding in the decision-making process for creating new movies.

Finally, movie critics and reviewers can use recommendation systems to identify movies that are likely to be well received by audiences.

For more information on movie recommendation systems, you can visit https://www.kaggle.com/rounakbanik/movie-recommender-systems or https://www.researchgate.net/publication/221364567_A_new_movie_recommendation_system_for_large-scale_data .

Project Idea #3: Optimizing Traffic Patterns

When it comes to optimizing traffic patterns, there are several data sources that can be utilized. One of the most prominent is real-time traffic data collected from GPS devices, traffic cameras, and mobile applications.

This data provides valuable insights into current traffic conditions, including congestion, accidents, and road closures. Historical traffic data can also be used to identify recurring patterns and trends in traffic flow.

Other data sources that can be used include weather data, which can help in understanding how weather conditions impact traffic patterns, and social media data, which can provide information about events or incidents that may affect traffic.

Optimizing traffic patterns requires the use of advanced data analysis techniques. One approach is to use machine learning algorithms to predict traffic patterns based on historical and real-time data.

These algorithms can analyze various factors such as time of day, day of the week, weather conditions, and events to predict traffic congestion and suggest alternative routes.
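Before training a full machine learning model, it helps to have a baseline to beat. The sketch below is a simple historical-average model, not machine learning. It predicts a road segment's speed from the average observed in the same weekday-and-hour slot. The observations and the 50 km/h fallback value are hypothetical.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

# Hypothetical observations for one road segment: (timestamp, average speed in km/h).
observations = [
    ("2024-03-04 08:10", 22.0),  # Monday morning peak
    ("2024-03-11 08:25", 18.0),  # the following Monday, same hour
    ("2024-03-04 13:05", 55.0),  # Monday midday
    ("2024-03-09 08:15", 60.0),  # Saturday morning
]


def fit_profile(rows) -> dict:
    """Average speed for each (weekday, hour) slot seen in the history."""
    buckets = defaultdict(list)
    for ts, speed in rows:
        t = datetime.strptime(ts, "%Y-%m-%d %H:%M")
        buckets[(t.weekday(), t.hour)].append(speed)
    return {slot: mean(speeds) for slot, speeds in buckets.items()}


def predict_speed(profile: dict, when: str, default: float = 50.0) -> float:
    """Look up the historical average for the slot; fall back to `default`."""
    t = datetime.strptime(when, "%Y-%m-%d %H:%M")
    return profile.get((t.weekday(), t.hour), default)


profile = fit_profile(observations)
print(predict_speed(profile, "2024-03-18 08:40"))  # a Monday, 8 a.m. slot
```

A machine learning model that also uses weather and event features should clearly outperform this baseline before anyone relies on it.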

Another approach is to use network analysis to identify bottlenecks and areas of congestion in the road network. By analyzing the flow of traffic and identifying areas where traffic slows down or comes to a halt, transportation authorities can make informed decisions on how to optimize traffic flow.
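Here is a minimal sketch of flagging slow segments in a road network. It assumes each segment has a free-flow travel time and an observed travel time. The segment data and the 2.0 threshold are hypothetical. A fuller network analysis would also weigh how many routes depend on each segment, for example by using edge betweenness centrality.

```python
# Hypothetical road segments: (from, to) -> (free-flow minutes, observed minutes).
segments = {
    ("A", "B"): (4.0, 4.5),
    ("B", "C"): (3.0, 9.0),
    ("A", "D"): (6.0, 6.6),
    ("D", "C"): (5.0, 12.5),
}


def congestion_index(free_flow: float, observed: float) -> float:
    """Observed / free-flow travel time: 1.0 means no delay, 3.0 means 3x slower."""
    return observed / free_flow


def bottlenecks(segs: dict, threshold: float = 2.0) -> list:
    """Segments whose congestion index meets `threshold`, worst first."""
    flagged = []
    for edge, (free_flow, observed) in segs.items():
        ratio = congestion_index(free_flow, observed)
        if ratio >= threshold:
            flagged.append((ratio, edge))
    flagged.sort(reverse=True)
    return [edge for _, edge in flagged]


print(bottlenecks(segments))
```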

The optimization of traffic patterns has numerous applications and benefits. One of the main benefits is the reduction of traffic congestion, which can lead to significant time and fuel savings for commuters.

By optimizing traffic patterns, transportation authorities can also improve road safety by reducing the likelihood of accidents caused by congestion.

Additionally, optimizing traffic patterns can have positive environmental impacts by reducing greenhouse gas emissions. By minimizing the time spent idling in traffic, vehicles can operate more efficiently and emit fewer pollutants.

Furthermore, optimizing traffic patterns can have economic benefits by improving the flow of goods and services. Efficient traffic patterns can reduce delivery times and increase productivity for businesses.

Project Idea #4: Forecasting Cryptocurrency Prices

With the growing popularity of cryptocurrencies like Bitcoin and Ethereum, forecasting their prices has become an exciting and challenging task for data scientists. This project idea involves using historical data to predict future price movements and trends in the cryptocurrency market.

When working on this project, data scientists can gather cryptocurrency price data from various sources such as cryptocurrency exchanges, financial websites, or APIs. Websites like CoinMarketCap (https://coinmarketcap.com/) provide comprehensive data on various cryptocurrencies, including historical price data.

Additionally, platforms like CryptoCompare (https://www.cryptocompare.com/) offer real-time and historical data for different cryptocurrencies.

To forecast cryptocurrency prices, data scientists can employ various analysis approaches. Some common techniques include:

- Time Series Analysis: This approach involves analyzing historical price data to identify patterns, trends, and seasonality in cryptocurrency prices. Techniques like moving averages, autoregressive integrated moving average (ARIMA), or exponential smoothing can be used to make predictions.

- Machine Learning: Machine learning algorithms, such as random forests, support vector machines, or neural networks, can be trained on historical cryptocurrency data to predict future price movements. These algorithms can consider multiple variables, such as trading volume, market sentiment, or external factors. Even so, the market's volatility makes consistently accurate predictions very difficult.

- Sentiment Analysis: This approach involves analyzing social media posts and news articles related to cryptocurrencies to gauge market sentiment. Data scientists can then test whether shifts in collective sentiment help predict price movements.
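Two of the classic time-series techniques listed above, moving averages and simple exponential smoothing, each take only a few lines. This sketch assumes a plain list of daily closing prices (made up here) and returns one-step-ahead forecasts from each method. The window of 3 and the smoothing factor of 0.5 are arbitrary choices.

```python
def moving_average_forecast(prices, window: int = 3) -> float:
    """Forecast the next value as the mean of the last `window` observations."""
    if len(prices) < window:
        raise ValueError("need at least `window` observations")
    return sum(prices[-window:]) / window


def exponential_smoothing_forecast(prices, alpha: float = 0.5) -> float:
    """Simple exponential smoothing: level = alpha * x + (1 - alpha) * level."""
    if not prices:
        raise ValueError("need at least one observation")
    level = prices[0]
    for x in prices[1:]:
        level = alpha * x + (1 - alpha) * level
    return level  # the one-step-ahead forecast is the final smoothed level


# Made-up daily closing prices, for illustration only.
closes = [100.0, 104.0, 102.0, 108.0, 110.0]
print(moving_average_forecast(closes))
print(exponential_smoothing_forecast(closes))
```

In practice, compare any model against a naive forecast (tomorrow equals today) using walk-forward validation. On noisy price series, that naive baseline is often hard to beat.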

Forecasting cryptocurrency prices can have several practical applications:

- Investment Decision Making: Reliable price forecasts can help investors make informed decisions when buying or selling cryptocurrencies. By considering predicted price movements, investors can refine their investment strategies and potentially improve their returns.

- Trading Strategies: Traders can use price forecasts to develop trading strategies, such as trend following or mean reversion. Predicted price movements may point to profitable trades in the volatile cryptocurrency market, but forecast errors can just as easily lead to losses.

- Risk Management: Cryptocurrency price forecasts can help individuals and organizations manage their risk exposure. By understanding potential price fluctuations, risk management strategies can be implemented to mitigate losses.

Project Idea #5: Predicting Flight Delays

One interesting and practical data science capstone project idea is to create a model that can predict flight delays. Flight delays can cause a lot of inconvenience for passengers and can have a significant impact on travel plans.

By developing a predictive model, airlines and travelers can be better prepared for potential delays and take appropriate actions.

To create a flight delay prediction model, you would need to gather relevant data from various sources. Some potential data sources include:

- Flight data from airlines or aviation organizations

- Weather data from meteorological agencies

- Historical flight delay data from airports

By combining these different data sources, you can build a comprehensive dataset that captures the factors contributing to flight delays.
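Combining sources usually means joining them on shared keys. This sketch makes a hypothetical assumption: that flight records and hourly weather observations can both be keyed by airport code and departure hour. Real weather feeds often use their own station identifiers, so a lookup table would be needed to map stations to airports.

```python
# Hypothetical records; real airline and weather sources use different schemas.
flights = [
    {"flight": "XY101", "origin": "ORD", "dep_hour": "2024-01-15T07", "delay_min": 42},
    {"flight": "XY202", "origin": "ATL", "dep_hour": "2024-01-15T07", "delay_min": 0},
    {"flight": "XY303", "origin": "DEN", "dep_hour": "2024-01-15T09", "delay_min": 5},
]
weather = [
    {"station": "ORD", "hour": "2024-01-15T07", "snow_mm": 6.0, "wind_kph": 35},
    {"station": "ATL", "hour": "2024-01-15T07", "snow_mm": 0.0, "wind_kph": 10},
]


def join_weather(flight_rows, weather_rows) -> list:
    """Attach the origin airport's weather for the departure hour to each flight."""
    by_key = {(w["station"], w["hour"]): w for w in weather_rows}
    joined = []
    for f in flight_rows:
        w = by_key.get((f["origin"], f["dep_hour"]), {})  # empty if no observation
        joined.append({**f, "snow_mm": w.get("snow_mm"), "wind_kph": w.get("wind_kph")})
    return joined


for row in join_weather(flights, weather):
    print(row["flight"], row["snow_mm"], row["wind_kph"], row["delay_min"])
```

Flights with no matching observation get `None` values. These should be handled explicitly (imputed or dropped) before modeling.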

Once you have collected the necessary data, you can employ different analysis approaches to predict flight delays. Some common approaches include:

- Machine learning algorithms such as decision trees, random forests, or neural networks

- Time series analysis to identify patterns and trends in flight delay data

- Feature engineering to extract relevant features from the dataset
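This small feature-engineering sketch turns a raw flight record (with hypothetical field names) into calendar features plus a binary label. The 15-minute cutoff follows the convention the US Bureau of Transportation Statistics uses for on-time performance. The holiday-month flag is only a crude placeholder.

```python
from datetime import datetime

DELAY_THRESHOLD_MIN = 15  # arriving 15+ minutes late counts as delayed (US BTS convention)


def make_features(row: dict) -> dict:
    """Turn one raw flight record into model-ready features plus a 0/1 label."""
    sched = datetime.fromisoformat(row["scheduled_departure"])
    return {
        "dep_hour": sched.hour,
        "weekday": sched.weekday(),  # Monday = 0
        "is_weekend": int(sched.weekday() >= 5),
        "is_holiday_month": int(sched.month in (11, 12)),  # crude seasonal flag
        "label_delayed": int(row["arrival_delay_min"] >= DELAY_THRESHOLD_MIN),
    }


example = {"scheduled_departure": "2024-12-21T18:45", "arrival_delay_min": 27}
print(make_features(example))
```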

By applying these analysis techniques, you can develop a model that estimates how likely a flight is to be delayed based on the available data.

The applications of a flight delay prediction model are numerous. Airlines can use the model to optimize their operations, improve scheduling, and minimize disruptions caused by delays. Travelers can benefit from the model by being alerted in advance about potential delays and making necessary adjustments to their travel plans.

Additionally, airports can use the model to improve resource allocation and manage passenger flow during periods of high delay probability. Overall, a flight delay prediction model can improve efficiency and customer satisfaction across the aviation industry.

Project Idea #6: Fighting Fake News

With the rise of social media and the easy access to information, the spread of fake news has become a significant concern. Data science can play a crucial role in combating this issue by developing innovative solutions.

Here are some aspects to consider when working on a project that aims to fight fake news.

When it comes to fighting fake news, having reliable data sources is essential. There are several trustworthy platforms that provide access to credible news articles and fact-checking databases. Websites like Snopes and FactCheck.org are good starting points for obtaining accurate information.

Additionally, social media platforms such as Twitter and Facebook can be valuable sources for analyzing the spread of misinformation.

One approach to analyzing fake news is by utilizing natural language processing (NLP) techniques. NLP can help identify patterns and linguistic cues that indicate the presence of misleading information.

Sentiment analysis can also be employed to determine the emotional tone of news articles or social media posts, which can be an indicator of potential bias or misinformation.
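To illustrate surface-level linguistic cues, the sketch below counts three things: exclamation marks, the share of all-caps words, and matches from a hypothetical list of sensational phrases. These are features to feed into a classifier, not a fake-news detector on their own.

```python
import re

# Hypothetical cue list; a real project would learn cues from labeled data.
SENSATIONAL = {"shocking", "unbelievable", "you won't believe", "miracle"}


def style_features(text: str) -> dict:
    """Surface-level cues sometimes associated with misleading headlines."""
    words = re.findall(r"[A-Za-z']+", text)
    caps = [w for w in words if len(w) > 1 and w.isupper()]
    lowered = text.lower()
    return {
        "exclamations": text.count("!"),
        "caps_ratio": len(caps) / len(words) if words else 0.0,
        "sensational_hits": sum(phrase in lowered for phrase in SENSATIONAL),
    }


print(style_features("SHOCKING: Miracle cure doctors HATE!!"))
```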

Another approach is network analysis, which focuses on understanding how information spreads through social networks. By analyzing the connections between users and the content they share, it becomes possible to identify patterns of misinformation dissemination.

Network analysis can also help in identifying influential sources and detecting coordinated efforts to spread fake news.
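Here is a minimal network-analysis sketch based on a hypothetical reshare log of (original poster, resharer) pairs. Breadth-first search counts how many accounts each account's posts ultimately reached. That count gives a crude ranking of influential sources.

```python
from collections import defaultdict, deque

# Hypothetical reshare log: (account whose post was reshared, account that reshared it).
reshares = [
    ("src1", "u1"), ("src1", "u2"), ("u1", "u3"),
    ("u1", "u4"), ("u3", "u5"), ("src2", "u6"),
]


def build_graph(edges):
    """Adjacency list pointing from each account to the accounts that reshared it."""
    graph = defaultdict(list)
    for origin, resharer in edges:
        graph[origin].append(resharer)
    return graph


def reach(graph, start: str) -> int:
    """Number of distinct accounts downstream of `start`, found by breadth-first search."""
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return len(seen) - 1  # exclude the starting account itself


graph = build_graph(reshares)
ranking = sorted(graph, key=lambda acct: reach(graph, acct), reverse=True)
print([(acct, reach(graph, acct)) for acct in ranking])
```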

The applications of a project aiming to fight fake news are numerous. One possible application is the development of a browser extension or a mobile application that provides users with real-time fact-checking information.

This tool could flag potentially misleading articles or social media posts and provide users with accurate information to help them make informed decisions.

Another application could be the creation of an algorithm that automatically identifies fake news articles and separates them from reliable sources. This algorithm could be integrated into news aggregation platforms to help users distinguish between credible and non-credible information.
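A Naive Bayes text classifier is a common starting point for such an algorithm. The sketch below implements multinomial Naive Bayes with add-one smoothing from scratch. The four training headlines and their labels are invented; a real project would need a large labeled corpus and careful evaluation.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercased word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.doc_counts.values())
        best, best_score = None, -math.inf
        for c in self.classes:
            counts = self.word_counts[c]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(self.doc_counts[c] / total_docs)  # log prior
            for tok in tokenize(text):
                score += math.log((counts[tok] + 1) / denom)  # smoothed log likelihood
            if score > best_score:
                best, best_score = c, score
        return best


# Tiny made-up training set.
train_texts = [
    "shocking miracle cure they don't want you to know",
    "you won't believe this secret trick",
    "city council approves new budget for schools",
    "central bank holds interest rates steady",
]
train_labels = ["fake", "fake", "real", "real"]

model = NaiveBayes().fit(train_texts, train_labels)
print(model.predict("secret miracle trick revealed"))
```

A classifier like this learns word patterns associated with each label. As a result, it can pick up the writing style of particular sources rather than whether a claim is actually true.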

Project Idea #7: Analyzing Social Media Sentiment

Social media platforms have become a treasure trove of valuable data for businesses and researchers alike. When analyzing social media sentiment, there are several data sources that can be tapped into. The most popular ones include:

- Twitter: With its vast user base and real-time nature, Twitter is often the go-to platform for sentiment analysis. Researchers can gather tweets containing specific keywords or hashtags to analyze the sentiment of a particular topic.

- Facebook: Facebook offers rich data for sentiment analysis, including posts, comments, and reactions. Analyzing the sentiment of Facebook posts can provide valuable insights into user opinions and preferences.

- Instagram: Instagram’s visual nature makes it an interesting platform for sentiment analysis. By analyzing the comments and captions on Instagram posts, researchers can gain insights into the sentiment associated with different images or topics.

- Reddit: Reddit is a popular platform for discussions on various topics. By analyzing the sentiment of comments and posts on specific subreddits, researchers can gain insights into the sentiment of different communities.

These are just a few examples of the data sources that can be used for analyzing social media sentiment. Depending on the research goals, other platforms such as LinkedIn, YouTube, and TikTok can also be explored.

When it comes to analyzing social media sentiment, there are various approaches that can be employed. Some commonly used analysis techniques include:

- Lexicon-based analysis: This approach involves using predefined sentiment lexicons to assign sentiment scores to words or phrases in social media posts. By aggregating these scores, researchers can determine the overall sentiment of a post or a collection of posts.

- Machine learning: Machine learning algorithms can be trained to classify social media posts into positive, negative, or neutral sentiment categories. These algorithms learn from labeled data and can make predictions on new, unlabeled data.

- Deep learning: Deep learning techniques, such as recurrent neural networks (RNNs) or convolutional neural networks (CNNs), can be used to capture the complex patterns and dependencies in social media data. These models can learn to extract sentiment information from textual or visual content.
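The lexicon-based approach is the easiest of these to sketch. The tiny lexicon and negation list below are hypothetical stand-ins for published lexicons such as VADER or AFINN. The negation rule is a deliberate simplification: it flips the sign of a word that directly follows "not", "never", or "no".

```python
import re

# Tiny hypothetical lexicon; published lexicons are far larger.
LEXICON = {"love": 2, "great": 2, "good": 1, "bad": -1, "awful": -2, "hate": -2}
NEGATORS = {"not", "never", "no"}


def lexicon_score(post: str) -> int:
    """Sum word scores, flipping the sign of a word preceded by a negator."""
    tokens = re.findall(r"[a-z']+", post.lower())
    score = 0
    for i, tok in enumerate(tokens):
        value = LEXICON.get(tok, 0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    return score


def label(score: int) -> str:
    """Map a numeric score to a sentiment category."""
    return "positive" if score > 0 else "negative" if score < 0 else "neutral"


for post in ["I love this phone, great battery", "not good, awful support", "arrived today"]:
    print(label(lexicon_score(post)), "|", post)
```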

It is important to note that the choice of analysis approach depends on the specific research objectives, available resources, and the nature of the social media data being analyzed.

Analyzing social media sentiment has a wide range of applications across different industries. Here are a few examples:

- Brand reputation management: By analyzing social media sentiment, businesses can monitor and manage their brand reputation. They can identify potential issues, respond to customer feedback, and take proactive measures to maintain a positive image.

- Market research: Social media sentiment analysis can provide valuable insights into consumer opinions and preferences. Businesses can use this information to understand market trends, identify customer needs, and develop targeted marketing strategies.

- Customer feedback analysis: Social media sentiment analysis can help businesses understand customer satisfaction levels and identify areas for improvement. By analyzing sentiment in customer feedback, companies can make data-driven decisions to enhance their products or services.

- Public opinion analysis: Researchers can analyze social media sentiment to study public opinion on various topics, such as political events, social issues, or product launches. This information can be used to understand public sentiment, predict trends, and inform decision-making.

These are just a few examples of how analyzing social media sentiment can be applied in real-world scenarios. The insights gained from sentiment analysis can help businesses and researchers make informed decisions, improve customer experience, and drive innovation.

Project Idea #8: Improving Online Ad Targeting

Improving online ad targeting involves analyzing various data sources to gain insights into users’ preferences and behaviors. These data sources may include:

- Website analytics: Gathering data from websites to understand user engagement, page views, and click-through rates.

- Demographic data: Utilizing information such as age, gender, location, and income to create targeted ad campaigns.

- Social media data: Extracting data from platforms like Facebook, Twitter, and Instagram to understand users’ interests and online behavior.

- Search engine data: Analyzing search queries and user behavior on search engines to identify intent and preferences.

By combining and analyzing these diverse data sources, data scientists can gain a comprehensive understanding of users and their ad preferences.

To improve online ad targeting, data scientists can employ various analysis approaches:

- Segmentation analysis: Dividing users into distinct groups based on shared characteristics and preferences.

- Collaborative filtering: Recommending ads based on users with similar preferences and behaviors.

- Predictive modeling: Developing algorithms to predict users’ likelihood of engaging with specific ads.

- Machine learning: Utilizing algorithms that can continuously learn from user interactions to optimize ad targeting.
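For predictive modeling, logistic regression that outputs a click probability is a standard starting point. This sketch trains one with plain stochastic gradient descent. The three binary features and six labeled impressions are hypothetical, and the learning rate and epoch count are arbitrary.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic(rows, labels, lr: float = 0.5, epochs: int = 200):
    """Fit weights and bias with plain stochastic gradient descent on log loss."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            p = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
            error = p - y  # gradient of the log loss with respect to the logit
            bias -= lr * error
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    return weights, bias


def predict_click(weights, bias, x) -> float:
    """Estimated probability that an impression with features `x` is clicked."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


# Hypothetical features: [visited_product_page, is_mobile, matches_interest_segment]
X = [[1, 0, 1], [1, 1, 1], [0, 1, 0], [0, 0, 0], [1, 0, 0], [0, 1, 1]]
y = [1, 1, 0, 0, 0, 1]

w, b = train_logistic(X, y)
print(predict_click(w, b, [1, 0, 1]), predict_click(w, b, [0, 1, 0]))
```

Real click data is heavily imbalanced, because most impressions get no click. Models are therefore usually judged on held-out log loss or AUC rather than on training accuracy.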

These analysis approaches help data scientists uncover patterns and insights that can enhance the effectiveness of online ad campaigns.

Improved online ad targeting has numerous applications:

- Increased ad revenue: More relevant ads tend to earn higher click-through and conversion rates. That benefits advertisers and raises revenue for the platforms that serve the ads.

- Better user experience: Users are more likely to engage with ads that align with their interests, leading to a more positive browsing experience.

- Reduced ad fatigue: When ads are targeted more effectively, users are less likely to feel overwhelmed by irrelevant or repetitive advertisements.

- Maximized ad budget: Advertisers can optimize their budget by focusing on the most promising target audiences.

Project Idea #9: Enhancing Customer Segmentation

Enhancing customer segmentation involves gathering relevant data from various sources to gain insights into customer behavior, preferences, and demographics. Some common data sources include:

- Customer transaction data

- Customer surveys and feedback

- Social media data

- Website analytics

- Customer support interactions

By combining data from these sources, businesses can create a comprehensive profile of their customers and identify patterns and trends that help improve their segmentation strategies.

There are several analysis approaches that can be used to enhance customer segmentation:

- Clustering: Using clustering algorithms to group customers based on similar characteristics or behaviors.

- Classification: Building predictive models to assign customers to different segments based on their attributes.

- Association Rule Mining: Identifying relationships and patterns in customer data to uncover hidden insights.

- Sentiment Analysis: Analyzing customer feedback and social media data to understand customer sentiment and preferences.
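This minimal clustering sketch runs k-means (Lloyd's algorithm) on two hypothetical per-customer features: orders per year and average order value in hundreds of dollars. For simplicity, the initial centroids are the first k customers. In practice, k-means++ initialization is the usual choice.

```python
import math


def nearest(point, centroids) -> int:
    """Index of the centroid closest to `point` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda c: math.dist(point, centroids[c]))


def kmeans(points, k: int, iterations: int = 20):
    """Lloyd's algorithm; the initial centroids are simply the first k points."""
    centroids = [list(p) for p in points[:k]]
    assignment = [0] * len(points)
    for _ in range(iterations):
        # Assign each customer to its nearest centroid.
        assignment = [nearest(p, centroids) for p in points]
        # Move each centroid to the mean of the customers assigned to it.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return assignment, centroids


# Hypothetical customers: [orders per year, average order value in $100s].
customers = [
    [2.0, 1.0], [20.0, 1.1], [4.0, 9.0], [3.0, 1.2],
    [22.0, 0.9], [3.0, 10.0], [2.5, 0.8],
]

labels, centers = kmeans(customers, k=3)
print(labels)
```

k-means relies on Euclidean distance, so features on very different scales should be standardized first. The number of clusters is typically chosen using the elbow method or silhouette scores.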

These analysis approaches can be used individually or in combination to enhance customer segmentation and create more targeted marketing strategies.

Enhancing customer segmentation can have numerous applications across industries:

- Personalized marketing campaigns: By understanding customer preferences and behaviors, businesses can tailor their marketing messages to individual customers, increasing the likelihood of engagement and conversion.

- Product recommendations: By segmenting customers based on their purchase history and preferences, businesses can provide personalized product recommendations, leading to higher customer satisfaction and sales.

- Customer retention: By identifying at-risk customers and understanding their needs, businesses can implement targeted retention strategies to reduce churn and improve customer loyalty.

- Market segmentation: By identifying distinct customer segments, businesses can develop tailored product offerings and marketing strategies for each segment, maximizing the effectiveness of their marketing efforts.

Project Idea #10: Building a Chatbot

A chatbot is a computer program that uses artificial intelligence to simulate human conversation. It can interact with users in natural language through text or voice. Building a chatbot can be an exciting and challenging data science capstone project.

It requires a combination of natural language processing, machine learning, and programming skills.

When building a chatbot, data sources play a crucial role in training and improving its performance. There are various data sources that can be used:

- Chat logs: Analyzing existing chat logs can help in understanding common user queries, responses, and patterns. This data can be used to train the chatbot on how to respond to different types of questions and scenarios.

- Knowledge bases: Integrating a knowledge base can provide the chatbot with a wide range of information and facts. This can be useful in answering specific questions or providing detailed explanations on certain topics.

- APIs: Utilizing APIs from different platforms can enhance the chatbot’s capabilities. For example, integrating a weather API can allow the chatbot to provide real-time weather information based on user queries.

There are several analysis approaches that can be used to build an efficient and effective chatbot:

- Natural Language Processing (NLP): NLP techniques enable the chatbot to understand and interpret user queries. This involves tasks such as tokenization, part-of-speech tagging, named entity recognition, and sentiment analysis.

- Intent recognition: Identifying the intent behind user queries is crucial for providing accurate responses. Machine learning algorithms can be trained to classify user intents based on the input text.

- Contextual understanding: Chatbots need to understand the context of the conversation to provide relevant and meaningful responses. Techniques such as sequence-to-sequence models or attention mechanisms can be used to capture contextual information.
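Intent recognition can be prototyped before any model training. The sketch below uses hypothetical intents and example utterances. It picks the intent whose closest example has the highest word overlap (Jaccard similarity) with the user's message. Below a threshold it returns "fallback", which is where a production bot might hand off to a human agent. A capstone would likely replace the overlap score with a trained text classifier.

```python
import re

# Hypothetical intents, each with a few example utterances.
INTENT_EXAMPLES = {
    "check_order": ["where is my order", "track my package", "order status"],
    "refund": ["i want a refund", "return this item", "money back please"],
    "greeting": ["hello", "hi there", "good morning"],
}


def tokens(text: str) -> set:
    """Lowercased word set, ignoring punctuation."""
    return set(re.findall(r"[a-z']+", text.lower()))


def classify_intent(utterance: str, min_score: float = 0.2) -> str:
    """Return the intent whose closest example has the highest Jaccard overlap."""
    words = tokens(utterance)
    best_intent, best_score = "fallback", 0.0
    for intent, examples in INTENT_EXAMPLES.items():
        for example in examples:
            ex = tokens(example)
            score = len(words & ex) / len(words | ex)
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= min_score else "fallback"


for text in ["Can you track my package?", "I'd like my money back", "What's the weather?"]:
    print(text, "->", classify_intent(text))
```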

Chatbots have a wide range of applications in various industries:

- Customer support: Chatbots can be used to handle customer queries and provide instant support. They can assist with common troubleshooting issues, answer frequently asked questions, and escalate complex queries to human agents when necessary.

- E-commerce: Chatbots can enhance the shopping experience by assisting users in finding products, providing recommendations, and answering product-related queries.

- Healthcare: Chatbots can be deployed in healthcare settings to provide preliminary medical advice, answer general health-related questions, and assist with appointment scheduling.

Building a chatbot as a data science capstone project not only showcases your technical skills but also allows you to explore the exciting field of artificial intelligence and natural language processing.

It can be a great opportunity to create a practical and useful tool that can benefit users in various domains.

Completing an in-depth capstone project is the perfect way for data science students to demonstrate their technical skills and business acumen. This guide outlined 10 unique project ideas spanning industries like healthcare, transportation, finance, and more.

By identifying the ideal data sources, analysis techniques, and practical applications for their chosen project, students can produce an impressive capstone that solves real-world problems and showcases their abilities.


Big Data – Capstone Project

Welcome to the Capstone Project for Big Data! In this culminating project, you will build a big data ecosystem using tools and methods from the earlier courses in this specialization. You will analyze a data set simulating big data generated from a large number of users who are playing our imaginary game “Catch the Pink Flamingo”. During the five-week Capstone Project, you will walk through the typical big data science steps for acquiring, exploring, preparing, analyzing, and reporting.

In the first two weeks, we will introduce you to the data set and guide you through some exploratory analysis using tools such as Splunk and OpenOffice. Then we will move into more challenging big data problems that require the more advanced tools you have learned, including KNIME, Spark's MLlib, and Gephi. In the fifth and final week, we will show you how to bring it all together to create engaging and compelling reports and slide presentations.


As a result of our collaboration with Splunk, a software company focused on analyzing machine-generated big data, learners with the top projects will be eligible to present to Splunk and meet Splunk recruiters and engineering leadership.

About Coursera


21 Interesting Data Science Capstone Project Ideas [2024]

Data science, encompassing the analysis and interpretation of data, stands as a cornerstone of modern innovation.

Capstone projects in data science education play a pivotal role, offering students hands-on experience to apply theoretical concepts in practical settings.

These projects serve as a culmination of their learning journey, providing invaluable opportunities for skill development and problem-solving.

Our blog is dedicated to guiding prospective students through the process of selecting a data science capstone project. It offers curated ideas and insights to help them embark on a fulfilling educational experience.

Join us as we navigate the dynamic world of data science, empowering students to thrive in this exciting field.

Data Science Capstone Project: A Comprehensive Overview


Data science capstone projects are an essential component of data science education, providing students with the opportunity to apply their knowledge and skills to real-world problems.

Capstone projects challenge students to acquire and analyze data to solve real-world problems. These projects are designed to test students’ skills in data visualization, probability, inference and modeling, data wrangling, data organization, regression, and machine learning.

In addition, many capstone projects are conducted with industry, government, or academic partners, and are often sponsored by an organization.

The projects are drawn from real-world problems, and students typically work in teams of two to four students with a faculty advisor.

Ultimately, the goal of the capstone project is to create a usable, public data product that demonstrates students’ skills to potential employers.

Best Data Science Capstone Project Ideas – According to Skill Level

Data science capstone projects are a great way to showcase your skills and apply what you’ve learned in a real-world context. Here are some project ideas categorized by skill level:

Beginner-Level Data Science Capstone Project Ideas

1. Exploratory Data Analysis (EDA) on a Dataset

Start by analyzing a dataset of your choice and exploring its characteristics, trends, and relationships. Practice using basic statistical techniques and visualization tools to gain insights and present your findings clearly and understandably.

2. Predictive Modeling with Linear Regression

Build a simple linear regression model to predict a target variable based on one or more input features. Learn about model evaluation techniques such as mean squared error and R-squared, and interpret the results to make meaningful predictions.
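A minimal sketch of this workflow, assuming NumPy and a hypothetical synthetic dataset: it fits ordinary least squares with `np.linalg.lstsq` and computes mean squared error and R-squared by hand so the formulas are visible.

```python
import numpy as np

# Hypothetical synthetic data: y is roughly 3*x + 2 plus Gaussian noise
rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=100)
y = 3.0 * x + 2.0 + rng.normal(0.0, 1.0, size=100)

# Design matrix: a column of ones for the intercept, then the feature
X = np.column_stack([np.ones_like(x), x])

# Ordinary least squares; lstsq returns (solution, residuals, rank, singular values)
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
intercept, slope = coef
y_pred = X @ coef


def mean_squared_error(y_true, y_hat):
    """Average squared difference between observed and predicted values."""
    return float(np.mean((y_true - y_hat) ** 2))


def r_squared(y_true, y_hat):
    """Share of the variance in y_true explained by the predictions."""
    ss_res = np.sum((y_true - y_hat) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return float(1.0 - ss_res / ss_tot)


mse = mean_squared_error(y, y_pred)
r2 = r_squared(y, y_pred)
print(f"slope={slope:.2f}, intercept={intercept:.2f}, MSE={mse:.2f}, R^2={r2:.3f}")
```

For simplicity the metrics here are computed on the training data; in a real project you would evaluate on a held-out test set, and scikit-learn (`LinearRegression`, `mean_squared_error`, `r2_score`) handles this more conveniently.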

3. Classification with Decision Trees

Use decision tree algorithms to classify data into distinct categories. Learn how to preprocess data, train a decision tree model, and evaluate its performance using metrics like accuracy, precision, and recall. Apply your model to practical scenarios like predicting customer churn or classifying spam emails.
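To show what a decision tree actually optimizes, here is a from-scratch sketch of a depth-1 tree (a decision stump) that picks the split with the lowest weighted Gini impurity, followed by accuracy, precision, and recall on a held-out set. The churn data and feature names are hypothetical; a full tree applies the same split search recursively, and in practice you would likely use a library implementation such as scikit-learn's `DecisionTreeClassifier`.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def majority(labels):
    """Most common label in a non-empty list."""
    return Counter(labels).most_common(1)[0][0]


def fit_stump(rows, labels):
    """Fit a depth-1 decision tree by minimising the weighted Gini impurity of the split."""
    best = None
    n = len(rows)
    for f in range(len(rows[0])):
        # Skip the largest value so both sides of the split are non-empty
        for t in sorted({r[f] for r in rows})[:-1]:
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[0]:
                best = (score, f, t, majority(left), majority(right))
    if best is None:
        raise ValueError("no feature has more than one distinct value")
    _, f, t, left_label, right_label = best
    return f, t, left_label, right_label


def predict(stump, row):
    f, t, left_label, right_label = stump
    return left_label if row[f] <= t else right_label


def evaluate(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall) for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


# Hypothetical churn data: [support_calls_per_month, tenure_months]; 1 = churned
train_X = [[5, 2], [4, 3], [6, 1], [1, 24], [0, 36], [2, 18], [3, 6], [1, 30]]
train_y = [1, 1, 1, 0, 0, 0, 1, 0]
test_X = [[4, 5], [1, 40], [3, 12], [0, 8]]
test_y = [1, 0, 0, 1]

stump = fit_stump(train_X, train_y)
preds = [predict(stump, row) for row in test_X]
print(stump, evaluate(test_y, preds))
```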

4. Clustering with K-Means

Explore unsupervised learning by applying the K-Means algorithm to group similar data points together. Practice feature scaling and model evaluation to identify meaningful clusters within your dataset. Apply your clustering model to segment customers or analyze patterns in market data.
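A NumPy sketch of K-Means (Lloyd's algorithm) with feature standardization, on hypothetical customer data. The farthest-point initialization is a simplification chosen to keep the example deterministic; it is not k-means++, which scikit-learn's `KMeans` uses by default.

```python
import numpy as np


def standardize(X):
    """Scale each feature to zero mean and unit variance so no feature dominates distances."""
    return (X - X.mean(axis=0)) / X.std(axis=0)


def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's algorithm with a simple farthest-point initialisation."""
    rng = np.random.default_rng(seed)
    # Initialisation: one random point, then repeatedly the point farthest from chosen centroids
    centroids = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        d = np.min([((X - c) ** 2).sum(axis=1) for c in centroids], axis=0)
        centroids.append(X[d.argmax()])
    centroids = np.array(centroids)

    for _ in range(n_iter):
        # Assignment step: nearest centroid by squared Euclidean distance
        dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Update step: mean of each cluster (keep the old centroid if a cluster is empty)
        new_centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        converged = np.allclose(new_centroids, centroids)
        centroids = new_centroids
        if converged:
            break

    # Final assignment against the final centroids, plus inertia (within-cluster sum of squares)
    dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    labels = dists.argmin(axis=1)
    inertia = float(dists[np.arange(len(X)), labels].sum())
    return labels, centroids, inertia


# Hypothetical customers: [annual_spend_thousands, visits_per_month]
rng = np.random.default_rng(42)
occasional = rng.normal(loc=[1.5, 1.5], scale=0.3, size=(50, 2))
frequent = rng.normal(loc=[20.0, 10.0], scale=1.0, size=(50, 2))
X = standardize(np.vstack([occasional, frequent]))

labels, centroids, inertia = kmeans(X, k=2)
print(labels, inertia)
```

Without standardization, annual spend (in the tens) would dominate visits per month in the distance calculation. In practice, `k` is usually chosen by comparing inertia or silhouette scores across several values.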

5. Sentiment Analysis on Text Data

Dive into natural language processing (NLP) by analyzing text data to determine sentiment polarity (positive, negative, or neutral).

Learn about tokenization, text preprocessing, and sentiment analysis techniques using libraries like NLTK or spaCy. Apply your skills to analyze product reviews or social media comments.
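Before reaching for NLTK or spaCy, it can help to see the basic pipeline in plain Python: tokenize, look words up in a sentiment lexicon, and sum the scores. The tiny lexicon and the one-word negation rule below are hypothetical simplifications; NLTK ships a ready-made lexicon-based analyzer (VADER), and trained models usually perform better on real reviews.

```python
import re

# Hypothetical toy lexicon: word -> polarity score
LEXICON = {
    "good": 1, "great": 2, "love": 2, "excellent": 2,
    "bad": -1, "poor": -1, "terrible": -2, "hate": -2,
}
NEGATIONS = {"not", "never", "no"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Classify text as positive, negative, or neutral by summing lexicon scores."""
    tokens = tokenize(text)
    score = 0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0)
        # Flip the polarity when the previous token is a negation word
        if i > 0 and tokens[i - 1] in NEGATIONS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this phone, the battery is great!"))
print(sentiment("Not good. The screen is terrible."))
```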

6. Time Series Forecasting

Predict future trends or values based on historical time series data. Learn about time series decomposition, trend analysis, and seasonal patterns using methods like ARIMA or exponential smoothing. Apply your forecasting skills to predict stock prices, weather patterns, or sales trends.
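Simple exponential smoothing is the easiest of these methods to write by hand. Each smoothed level is a weighted average of the newest observation and the previous level, controlled by a smoothing factor `alpha`. This sketch assumes a hypothetical monthly sales series and models the level only (no trend or seasonality); statsmodels provides fuller implementations such as `ExponentialSmoothing` and `ARIMA`.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the smoothed levels; the last level is the one-step-ahead forecast."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]  # initialise the level with the first observation
    levels = [level]
    for y in series[1:]:
        # New level = alpha * latest observation + (1 - alpha) * previous level
        level = alpha * y + (1 - alpha) * level
        levels.append(level)
    return levels


sales = [10, 12, 11, 13, 12, 14]  # hypothetical monthly sales
levels = simple_exponential_smoothing(sales, alpha=0.5)
forecast = levels[-1]
print(levels, "next month:", forecast)
```

A larger `alpha` reacts faster to recent changes; a smaller one produces a smoother, slower-moving forecast.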

7. Image Classification with Convolutional Neural Networks (CNNs)

Explore deep learning concepts by building a basic CNN model to classify images into different categories.

Learn about convolutional layers, pooling, and fully connected layers, and experiment with different architectures to improve model performance. Apply your CNN model to tasks like recognizing handwritten digits or classifying images of animals.

Intermediate-Level Data Science Capstone Project Ideas

8. Customer Segmentation and Market Basket Analysis

Utilize advanced clustering techniques to segment customers based on their purchasing behavior. Conduct market basket analysis to identify frequent item associations and recommend personalized product suggestions.

Implement techniques like the Apriori algorithm or association rule mining to uncover valuable insights for targeted marketing strategies.
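A plain-Python sketch of the first two Apriori passes on hypothetical shopping baskets: count frequent single items, build candidate pairs only from those items (the Apriori pruning idea), then derive rules whose confidence is support(A and B) divided by support(A). A full Apriori implementation keeps extending itemsets level by level; this sketch stops at pairs.

```python
from collections import Counter
from itertools import combinations


def frequent_items_and_pairs(transactions, min_support):
    """First two Apriori passes: frequent single items, then pairs built only from frequent items."""
    n = len(transactions)
    item_counts = Counter(item for t in transactions for item in set(t))
    item_support = {i: c / n for i, c in item_counts.items() if c / n >= min_support}

    # Apriori pruning: a pair can only be frequent if both of its items are frequent
    pair_counts = Counter()
    for t in transactions:
        items = sorted(set(t) & set(item_support))
        pair_counts.update(combinations(items, 2))
    pair_support = {p: c / n for p, c in pair_counts.items() if c / n >= min_support}
    return item_support, pair_support


def rules(item_support, pair_support, min_confidence):
    """Rules lhs -> rhs with confidence = support(lhs, rhs) / support(lhs)."""
    found = []
    for (a, b), support in pair_support.items():
        for lhs, rhs in ((a, b), (b, a)):
            confidence = support / item_support[lhs]
            if confidence >= min_confidence:
                found.append((lhs, rhs, support, confidence))
    return found


# Hypothetical baskets
baskets = [
    ["bread", "milk"],
    ["bread", "butter", "milk"],
    ["bread", "butter"],
    ["milk", "eggs"],
    ["bread", "butter", "eggs"],
]

item_support, pair_support = frequent_items_and_pairs(baskets, min_support=0.4)
for lhs, rhs, support, confidence in rules(item_support, pair_support, min_confidence=0.7):
    print(f"{lhs} -> {rhs}: support={support:.2f}, confidence={confidence:.2f}")
```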

9. Time Series Anomaly Detection

Apply anomaly detection algorithms to identify unusual patterns or outliers in time series data. Utilize techniques such as moving average, Z-score, or autoencoders to detect anomalies in various domains, including finance, IoT sensors, or network traffic.

Develop robust anomaly detection models to enhance data security and predictive maintenance.
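A minimal sketch of the moving-average/Z-score approach, assuming a hypothetical sensor series: each point is compared with the mean and standard deviation of the preceding `window` points and flagged when its absolute z-score exceeds a threshold.

```python
from statistics import mean, stdev


def rolling_zscore_anomalies(values, window, threshold=3.0):
    """Return indices of points whose z-score vs the preceding `window` points exceeds threshold."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)  # sample standard deviation; needs window >= 2
        if sigma == 0:
            continue  # a flat history gives no scale to compare against
        z = (values[i] - mu) / sigma
        if abs(z) > threshold:
            anomalies.append(i)
    return anomalies


readings = [10, 11, 10, 12, 11, 10, 11, 30, 11, 10, 12]  # hypothetical sensor readings
print(rolling_zscore_anomalies(readings, window=5))
```

Note that a large spike stays in the window for the next few points and inflates the standard deviation, which can mask nearby anomalies; robust statistics such as the median and median absolute deviation are a common alternative.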

10. Recommendation System Development

Build a recommendation engine to suggest personalized items or content to users based on their preferences and behavior. Implement collaborative filtering, content-based filtering, or hybrid recommendation approaches to improve user engagement and satisfaction.

Evaluate the performance of your recommendation system using metrics like precision, recall, and mean average precision.
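These ranking metrics can be computed directly from each user's list of recommended items and the set of items that user actually found relevant. This sketch uses one common convention for AP@k, normalizing by min(number of relevant items, k); other definitions exist, so check which one your course or library expects. The user data is hypothetical.

```python
def precision_at_k(recommended, relevant, k):
    """Fraction of the top-k recommendations that are relevant."""
    return sum(1 for item in recommended[:k] if item in relevant) / k


def average_precision(recommended, relevant, k):
    """AP@k: sum of precision@i at each rank i holding a relevant item, over min(|relevant|, k)."""
    hits = 0
    total = 0.0
    for i, item in enumerate(recommended[:k], start=1):
        if item in relevant:
            hits += 1
            total += hits / i
    denom = min(len(relevant), k)
    return total / denom if denom else 0.0


def mean_average_precision(all_recommended, all_relevant, k):
    """Mean of AP@k across users."""
    scores = [average_precision(r, rel, k) for r, rel in zip(all_recommended, all_relevant)]
    return sum(scores) / len(scores)


# Hypothetical recommendations for two users
recommended = ["A", "B", "C", "D", "E"]
relevant = {"A", "C", "F"}
map_score = mean_average_precision([recommended, ["X", "Y"]], [relevant, {"Y"}], k=5)
print(precision_at_k(recommended, relevant, 5), average_precision(recommended, relevant, 5), map_score)
```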

11. Natural Language Processing for Topic Modeling

Dive deeper into NLP by exploring topic modeling techniques to extract meaningful topics from text data.

Implement algorithms like Latent Dirichlet Allocation (LDA) or Non-Negative Matrix Factorization (NMF) to identify hidden themes or subjects within large text corpora. Apply topic modeling to analyze customer feedback, news articles, or academic papers.
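A NumPy sketch of NMF for topic modeling using the classic multiplicative update rules, which minimize the Frobenius reconstruction error. V is a document-term count matrix, W holds document-topic weights, and H holds topic-term weights. The vocabulary and counts are hypothetical; scikit-learn offers production implementations (`sklearn.decomposition.NMF` and `LatentDirichletAllocation`).

```python
import numpy as np


def nmf(V, n_topics, n_iter=500, seed=0, eps=1e-10):
    """Factor a non-negative matrix V (docs x terms) as W @ H using multiplicative updates.

    W (docs x topics) gives topic weights per document; H (topics x terms) gives
    term weights per topic. The updates minimise the Frobenius norm ||V - W H||.
    """
    rng = np.random.default_rng(seed)
    W = rng.random((V.shape[0], n_topics))
    H = rng.random((n_topics, V.shape[1]))
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    # Rescale so each topic's term weights sum to 1; W then roughly counts words per topic,
    # which makes W comparable across topics (the product W @ H is unchanged)
    scale = H.sum(axis=1) + eps
    H = H / scale[:, None]
    W = W * scale[None, :]
    return W, H


vocab = ["goal", "match", "team", "vote", "election", "party"]
# Hypothetical term counts for four short documents (two about sport, two about politics)
V = np.array([
    [3, 2, 4, 0, 0, 0],
    [2, 3, 3, 0, 0, 1],
    [0, 0, 0, 4, 3, 2],
    [0, 1, 0, 3, 4, 3],
], dtype=float)

W, H = nmf(V, n_topics=2)
for k, row in enumerate(H):
    top_terms = [vocab[i] for i in np.argsort(row)[::-1][:3]]
    print(f"topic {k}: {top_terms}")
print("dominant topic per document:", W.argmax(axis=1))
```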

12. Fraud Detection in Financial Transactions

Develop a fraud detection system using machine learning algorithms to identify suspicious activities in financial transactions. Utilize supervised learning techniques such as logistic regression, random forests, or gradient boosting to classify transactions as fraudulent or legitimate.

Employ feature engineering and model evaluation to improve fraud detection accuracy and minimize false positives.

13. Predictive Maintenance for Industrial Equipment

Implement predictive maintenance techniques to anticipate equipment failures and prevent costly downtime.

Analyze sensor data from machinery using machine learning algorithms like support vector machines or recurrent neural networks to predict when maintenance is required. Optimize maintenance schedules to minimize downtime and maximize operational efficiency.

14. Healthcare Data Analysis and Disease Prediction

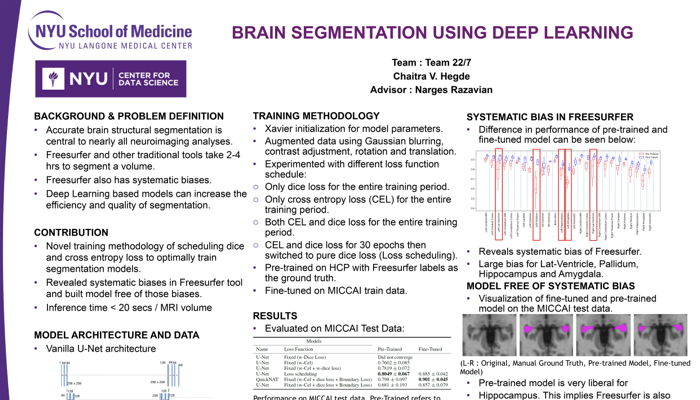

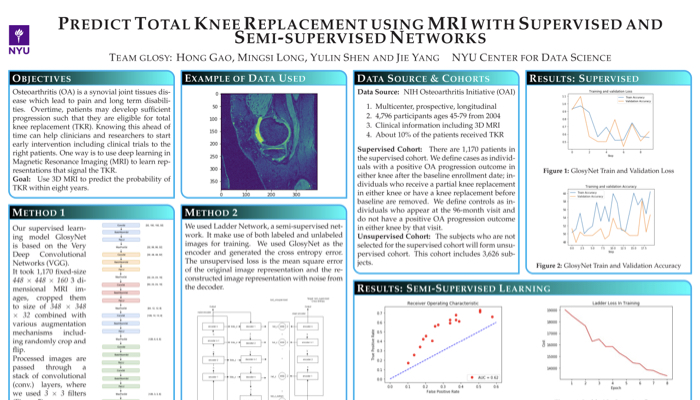

Utilize healthcare datasets to analyze patient demographics, medical history, and diagnostic tests to predict the likelihood of disease occurrence or progression.

Apply machine learning algorithms such as logistic regression, decision trees, or support vector machines to develop predictive models for diseases like diabetes, cancer, or heart disease. Evaluate model performance using metrics like sensitivity, specificity, and area under the ROC curve.
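These metrics are straightforward to compute by hand. The sketch below, on hypothetical labels and model scores, derives sensitivity and specificity at a 0.5 threshold and computes ROC AUC as the probability that a randomly chosen positive case scores higher than a randomly chosen negative one (ties count as half), which equals the area under the ROC curve.

```python
def sensitivity_specificity(y_true, y_pred):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(y_true, scores):
    """Probability that a random positive is scored above a random negative (ties count 0.5)."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical labels (1 = disease present) and predicted probabilities
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
scores = [0.9, 0.3, 0.8, 0.4, 0.5, 0.2, 0.7, 0.1]
preds = [1 if s >= 0.5 else 0 for s in scores]

print(sensitivity_specificity(y_true, preds), roc_auc(y_true, scores))
```

The pairwise loop is O(n²) and only suits small examples; `sklearn.metrics.roc_auc_score` is the usual choice for real data.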

Advanced-Level Data Science Capstone Project Ideas

15. Deep Learning for Image Generation

Explore generative adversarial networks (GANs) or variational autoencoders (VAEs) to generate realistic images from scratch. Experiment with architectures like DCGAN or StyleGAN to create high-resolution images of faces, landscapes, or artwork.

Evaluate image quality and diversity using perceptual metrics and human judgment.

16. Reinforcement Learning for Game Playing

Implement reinforcement learning algorithms like deep Q-learning or policy gradients to train agents to play complex games, such as Atari video games or board games.

Experiment with exploration-exploitation strategies and reward-shaping techniques to improve agent performance and achieve superhuman levels of gameplay.

17. Anomaly Detection in Streaming Data

Develop real-time anomaly detection systems to identify abnormal behavior in data streams such as network traffic, sensor readings, or financial transactions.

Utilize online learning algorithms like streaming k-means or streaming variants of Isolation Forest to detect anomalies and trigger timely alerts for intervention.
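In a streaming setting you cannot keep the whole history in memory. One simple approach, sketched below as an illustration rather than one of the algorithms named above, keeps a running mean and variance with Welford's online algorithm and raises an alert when a new value lies more than `threshold` standard deviations from the mean. The stream, `threshold`, and `warmup` values are assumptions.

```python
import math


class StreamingZScoreDetector:
    """Online mean/variance (Welford's algorithm) with a z-score alert rule."""

    def __init__(self, threshold=3.0, warmup=10):
        self.threshold = threshold
        self.warmup = warmup  # number of points to observe before raising alerts
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        """Return True if x is anomalous relative to the values seen so far, then absorb it."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                is_anomaly = True
        # Welford update: O(1) memory per stream
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return is_anomaly


detector = StreamingZScoreDetector(threshold=3.0, warmup=10)
stream = [10, 11, 9, 10, 12, 10, 11, 9, 10, 11, 10, 45, 10, 11]  # hypothetical readings
flags = [detector.update(x) for x in stream]
print([i for i, flagged in enumerate(flags) if flagged])
```

This design absorbs every value, including anomalies, into the running statistics; skipping flagged values or using exponentially weighted statistics are common variations.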

18. Multi-Modal Sentiment Analysis

Extend sentiment analysis to incorporate multiple modalities such as text, images, and audio to capture rich emotional expressions.

Utilize deep learning architectures like multimodal transformers or fusion models to analyze sentiment across modalities and better capture complex human emotions.

19. Graph Neural Networks for Social Network Analysis

Apply graph neural networks (GNNs) to model and analyze complex relational data in social networks. Use techniques like graph convolutional networks (GCNs) or graph attention networks (GATs) to learn node embeddings and predict node-level properties such as community membership or user influence.
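The core of a GCN is the layer-wise propagation rule from Kipf and Welling: add self-loops to the adjacency matrix A, normalize it symmetrically as D^-1/2 (A + I) D^-1/2, multiply by the node features and a weight matrix, then apply a nonlinearity. This NumPy sketch runs a forward pass on a hypothetical six-user graph with untrained random weights; learning the weights requires labels and an optimizer, typically via a library such as PyTorch Geometric.

```python
import numpy as np


def normalized_adjacency(A):
    """Symmetric normalisation with self-loops: D^-1/2 (A + I) D^-1/2."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(A_norm, H, W):
    """One graph-convolution layer: aggregate neighbour features, project, apply ReLU."""
    return np.maximum(0.0, A_norm @ H @ W)


# Hypothetical friendship graph: a chain 0-1-2-3 plus a triangle 3-4-5
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (3, 5)]
n = 6
A = np.zeros((n, n))
for u, v in edges:
    A[u, v] = A[v, u] = 1.0

rng = np.random.default_rng(0)
X = np.eye(n)                  # one-hot node features (no user attributes assumed)
W1 = rng.normal(size=(n, 8))   # untrained weights; training would learn these
W2 = rng.normal(size=(8, 2))

A_norm = normalized_adjacency(A)
H1 = gcn_layer(A_norm, X, W1)
embeddings = A_norm @ H1 @ W2  # second layer kept linear, e.g. as class logits
print(embeddings.shape)
```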

20. Time Series Forecasting with Deep Learning

Explore advanced deep learning architectures like long short-term memory (LSTM) networks or transformer-based models for time series forecasting.

Utilize attention mechanisms and multi-horizon forecasting to capture long-term dependencies and improve prediction accuracy in dynamic and volatile environments.

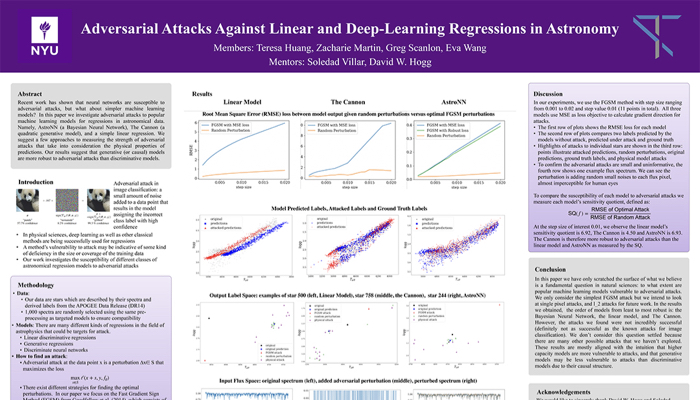

21. Adversarial Robustness in Machine Learning

Investigate techniques to improve the robustness of machine learning models against adversarial attacks.

Explore methods like adversarial training, defensive distillation, or certified robustness to mitigate vulnerabilities and ensure model reliability under adversarial perturbations, particularly in critical applications like autonomous vehicles or healthcare.
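As an example of the attacks that adversarial training defends against, here is a NumPy sketch of the Fast Gradient Sign Method (FGSM) against a logistic-regression classifier, where the input gradient of the cross-entropy loss has the closed form (sigmoid(w·x + b) - y)·w. The weights, input, and `epsilon` are hypothetical. Adversarial training would add such perturbed examples, with their true labels, back into the training set.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm_logistic(x, y, w, b, epsilon):
    """Fast Gradient Sign Method against a logistic-regression model.

    For binary cross-entropy, d(loss)/dx = (sigmoid(w.x + b) - y) * w, so each
    feature is nudged by epsilon in the direction that increases the loss.
    """
    grad_x = (sigmoid(w @ x + b) - y) * w
    return x + epsilon * np.sign(grad_x)


# Hypothetical trained model and a correctly classified positive example
w = np.array([2.0, -1.0])
b = 0.0
x = np.array([0.5, 0.2])
y = 1

x_adv = fgsm_logistic(x, y, w, b, epsilon=0.3)
print("clean p(y=1):", sigmoid(w @ x + b))
print("adversarial p(y=1):", sigmoid(w @ x_adv + b))
```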

These project ideas cater to various skill levels in data science, ranging from beginners to experts. Choose a project that aligns with your interests and skill level, and don’t hesitate to experiment and learn along the way!

Factors to Consider When Choosing a Data Science Capstone Project

Choosing the right data science capstone project is crucial for your learning experience and effectively showcasing your skills. Here are some factors to consider when selecting a data science capstone project:

Personal Interest

Select a project that aligns with your passions and career goals to stay motivated and engaged throughout the process.

Data Availability

Ensure access to relevant and sufficient data to complete the project and draw meaningful insights effectively.

Complexity Level

Consider your current skill level and choose a project that challenges you without overwhelming you, allowing for growth and learning.

Real-World Impact

Aim for projects with practical applications or societal relevance to showcase your ability to solve tangible problems.

Resource Requirements

Evaluate the availability of resources such as time, computing power, and software tools needed to execute the project successfully.

Mentorship and Support

Seek projects with opportunities for guidance and feedback from mentors or peers to enhance your learning experience.

Novelty and Innovation

Look for projects that push boundaries and try new techniques or approaches, demonstrating creativity and originality in your work.

Tips for Successfully Completing a Data Science Capstone Project

Successfully completing a data science capstone project requires careful planning, effective execution, and strong communication skills. Here are some tips to help you navigate through the process:

- Plan and Prioritize: Break down the project into manageable tasks and create a timeline to stay organized and focused.

- Understand the Problem: Clearly define the project objectives, requirements, and expected outcomes before starting the analysis.

- Explore and Experiment: Experiment with different methodologies, algorithms, and techniques to find the most suitable approach.

- Document and Iterate: Document your process, results, and insights thoroughly, and iterate on your analyses based on feedback and new findings.

- Collaborate and Seek Feedback: Collaborate with peers, mentors, and stakeholders, actively seeking feedback to improve your work and decision-making.

- Practice Communication: Communicate your findings effectively through clear visualizations, reports, and presentations tailored to your audience’s understanding.

- Reflect and Learn: Reflect on your challenges, successes, and lessons learned throughout the project to inform your future endeavors and continuous improvement.

By following these tips, you can successfully navigate the data science capstone project and demonstrate your skills and expertise in the field.

Wrapping Up

In wrapping up, data science capstone projects are invaluable for bridging the gap between theory and practice, offering students a chance to apply their knowledge in real-world scenarios.

They are a cornerstone of data science education, fostering critical thinking, problem-solving, and practical skills development.

As you embark on your journey, don’t hesitate to explore diverse and challenging project ideas. Embrace the opportunity to push boundaries, innovate, and make meaningful contributions to the field.

Share your insights, challenges, and successes with others, and invite fellow enthusiasts to exchange ideas and experiences.

1. What is the purpose of a data science capstone project?

A data science capstone project serves as a culmination of a student’s learning experience, allowing them to apply their knowledge and skills to solve real-world problems in the field of data science. It provides hands-on experience and showcases their ability to analyze data, derive insights, and communicate findings effectively.

2. What are some examples of data science capstone projects?

Data science capstone projects can cover a wide range of topics and domains, including predictive modeling, natural language processing, image classification, recommendation systems, and more. Examples may include analyzing customer behavior, predicting stock prices, sentiment analysis on social media data, or detecting anomalies in financial transactions.

3. How long does it typically take to complete a data science capstone project?

The duration of a data science capstone project can vary depending on factors such as project complexity, available resources, and individual pace. Generally, it may take several weeks to several months to complete a project, including tasks such as data collection, preprocessing, analysis, modeling, and presentation of findings.


25+ Solved End-to-End Big Data Projects with Source Code

Solved End-to-End Real World Mini Big Data Projects Ideas with Source Code For Beginners and Students to master big data tools like Hadoop and Spark.

Ace your big data analytics interview by adding some unique and exciting Big Data projects to your portfolio. This blog lists over 20 big data analytics projects you can work on to showcase your big data skills and gain hands-on experience in big data tools and technologies. You will find several big data projects depending on your level of expertise- big data projects for students, big data projects for beginners, etc.


Have you ever looked for sneakers on Amazon and then seen advertisements for similar sneakers while searching the internet for the perfect cake recipe? Maybe you started using Instagram to search for some fitness videos, and now Instagram keeps recommending videos from fitness influencers to you. And even if you’re not very active on social media, I’m sure you occasionally check your phone before leaving the house to see what the traffic is like on your route and how long it could take you to reach your destination. None of this would be possible without the big data analysis performed by modern data-driven companies. We bring you the top big data projects for 2023, specially curated for students, beginners, and anybody looking to get started with mastering data skills.


A big data project is a data analysis project that uses machine learning algorithms and different data analytics techniques on structured and unstructured data for several purposes, including predictive modeling and other advanced analytics applications. Before working on any big data project, data engineers should acquire solid knowledge in the relevant areas, such as deep learning, machine learning, data visualization, data analytics, and data science.

Many platforms, like GitHub and ProjectPro, offer various big data projects for professionals at all skill levels: beginner, intermediate, and advanced. However, before moving on to a list of big data project ideas worth exploring and adding to your portfolio, let us first get a clear picture of what big data is and why everyone is interested in it.

Kicking off a big data analytics project is always the most challenging part. You will face questions such as: What are the project goals? How can you become familiar with the dataset? What challenges are you trying to address? What skills does this project require? What metrics will you use to evaluate your model?

Well! The first crucial step to launching your project initiative is to have a solid project plan. To build a big data project, you should always adhere to a clearly defined workflow. Before starting any big data project, it is essential to become familiar with the fundamental processes and steps involved, from gathering raw data to creating a machine learning model to its effective implementation.

Understand the Business Goals of the Big Data Project

The first step of any good big data analytics project is understanding the business or industry that you are working on. Go out and speak with the individuals whose processes you aim to transform with data before you even consider analyzing the data. Establish a timeline and specific key performance indicators afterward. Although planning and procedures can appear tedious, they are a crucial step to launching your data initiative! A definite purpose of what you want to do with data must be identified, such as a specific question to be answered, a data product to be built, etc., to provide motivation, direction, and purpose.


Collect Data for the Big Data Project

The next step in a big data project is looking for data once you've established your goal. To create a successful data project, collect and integrate data from as many different sources as possible.

Here are some options for collecting data that you can utilize:

Connect to an existing database that is already public or access your private database.

Consider the APIs for all the tools your organization has been utilizing and the data they have gathered. You must put in some effort to set up those APIs so that you can use the email open and click statistics, the support request someone sent, etc.

There are plenty of datasets on the Internet that can provide more information than what you already have. There are open data platforms in several regions (like data.gov in the U.S.). These open data sets are a fantastic resource if you're working on a personal project for fun.

Data Preparation and Cleaning

The data preparation step, which may consume up to 80% of the time allocated to any big data or data engineering project, comes next. Once you have the data, it's time to start using it. Start exploring what you have and how you can combine everything to meet the primary goal. To understand the relevance of all your data, start making notes on your initial analyses and ask significant questions to businesspeople, the IT team, or other groups. Data cleaning is the next step. To ensure that data is consistent and accurate, you must review each column and check for errors, missing values, etc.
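A stdlib-only sketch of a first cleaning pass: count missing values per column and flag values that should be numeric but are not. The column names, the sample rows, and the set of missing-value markers are assumptions; with pandas, `read_csv(..., na_values=...)` followed by `isna().sum()` does the same job.

```python
import csv
import io

MISSING_MARKERS = {"", "N/A", "null"}  # assumed placeholders for missing values


def is_missing(value):
    return value is None or value.strip() in MISSING_MARKERS


def missing_counts(rows, columns):
    """Count missing values per column."""
    return {col: sum(1 for row in rows if is_missing(row.get(col))) for col in columns}


def non_numeric(rows, column):
    """Return rows whose value in `column` is present but cannot be parsed as a number."""
    bad = []
    for row in rows:
        value = row.get(column)
        if is_missing(value):
            continue
        try:
            float(value)
        except ValueError:
            bad.append(row)
    return bad


# Hypothetical raw extract
raw = """customer_id,signup_date,country,monthly_spend
1,2023-01-05,US,120.5
2,,DE,80
3,2023-02-11,N/A,
4,2023-03-02,FR,abc
"""

reader = csv.DictReader(io.StringIO(raw))
rows = list(reader)
print(missing_counts(rows, reader.fieldnames))
print([row["customer_id"] for row in non_numeric(rows, "monthly_spend")])
```

Choose missing-value markers carefully: a string like "NA" is a common placeholder, but it is also Namibia's ISO country code, which is why it is deliberately left out of the marker set here.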

Making sure that your project and your data are compatible with data privacy standards is a key aspect of data preparation that should not be overlooked. Personal data privacy and protection are becoming increasingly crucial, and you should prioritize them immediately as you embark on your big data journey. You must consolidate all your data initiatives, sources, and datasets into one location or platform to facilitate governance and carry out privacy-compliant projects.


Data Transformation and Manipulation

Now that the data is clean, it's time to transform it so you can extract useful information. Start by combining your various sources and grouping logs, which helps you focus your data on its most significant aspects. You can do this, for instance, by adding time-based attributes to your data, such as:

- Date-related elements (month, hour, day of the week, week of the year, etc.)

- The differences between date-column values, etc.
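A small sketch of both kinds of time-based attributes using Python's `datetime` module, on a hypothetical order record: calendar features from one timestamp, and the difference between two date columns in hours. In pandas, the `.dt` accessor provides the same fields for whole columns.

```python
from datetime import datetime


def date_features(ts):
    """Derive calendar features from a datetime."""
    return {
        "month": ts.month,
        "hour": ts.hour,
        "day_of_week": ts.weekday(),          # Monday = 0 ... Sunday = 6
        "week_of_year": ts.isocalendar()[1],  # ISO 8601 week number
    }


def hours_between(start, end):
    """Difference between two datetime values, in hours."""
    return (end - start).total_seconds() / 3600


# Hypothetical order record
ordered_at = datetime(2024, 3, 15, 14, 30)
shipped_at = datetime(2024, 3, 17, 9, 0)

features = date_features(ordered_at)
features["hours_to_ship"] = hours_between(ordered_at, shipped_at)
print(features)
```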

Joining datasets is another way to improve data, which entails extracting columns from one dataset or tab and adding them to a reference dataset. This is a crucial component of any analysis, but it can become a challenge when you have many data sources.
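A left join can be sketched in plain Python by building a lookup table from the reference dataset. The table and column names below are hypothetical, and the function assumes the key is unique in `right`; in pandas this corresponds to `merge(..., how="left")`.

```python
def left_join(left, right, key, columns):
    """Return rows of `left`, each extended with `columns` taken from the matching `right` row.

    Assumes `key` is unique in `right`; unmatched rows get None for the added columns.
    """
    lookup = {row[key]: row for row in right}
    joined = []
    for row in left:
        match = lookup.get(row[key], {})
        joined.append({**row, **{col: match.get(col) for col in columns}})
    return joined


# Hypothetical tables
orders = [
    {"order_id": 1, "customer_id": "c1", "amount": 40.0},
    {"order_id": 2, "customer_id": "c2", "amount": 15.5},
    {"order_id": 3, "customer_id": "c9", "amount": 99.0},
]
customers = [
    {"customer_id": "c1", "region": "EU", "segment": "retail"},
    {"customer_id": "c2", "region": "US", "segment": "wholesale"},
]

enriched = left_join(orders, customers, key="customer_id", columns=["region"])
print(enriched)
```

Keeping unmatched rows (order 3 here) with empty values, rather than dropping them, makes gaps between sources visible during analysis.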

Visualize Your Data

Now that you have a decent dataset (or perhaps several), it would be wise to begin analyzing it by creating beautiful dashboards, charts, or graphs. The next stage of any data analytics project should focus on visualization, because it is one of the most effective ways to analyze and showcase insights when working with massive amounts of data.

Graphs are another way to enrich your dataset and create more interesting features. For instance, by plotting your data points on a map, you may discover that some geographic regions, such as particular countries or cities, are more informative than others.

Build Predictive Models Using Machine Learning Algorithms

Machine learning algorithms can help you take your big data project to the next level by providing you with more details and making predictions about future trends. You can create models to find trends in the data that were not visible in graphs by working with clustering techniques (also known as unsupervised learning). These organize relevant outcomes into clusters and more or less explicitly state the characteristic that determines these outcomes.

Advanced data scientists can use supervised algorithms to predict future trends. They discover features that have influenced previous data patterns by reviewing historical data and can then generate predictions using these features.

Lastly, your predictive model needs to be operationalized for the project to be truly valuable. Deploying a machine learning model for adoption by all individuals within an organization is referred to as operationalization.

Repeat The Process

This is the last step in completing your big data project, and it's crucial to the whole data life cycle. One of the biggest mistakes individuals make when it comes to machine learning is assuming that once a model is created and implemented, it will always function normally. On the contrary, if models aren't updated with the latest data and regularly modified, their quality will deteriorate with time.

To complete your first data project effectively, you need to accept that your model will never truly be "complete." You need to continually reevaluate and retrain it, and create new features, for it to stay accurate and valuable.

If you are new to Big Data, keep in mind that it is not an easy field; but remember that nothing good in life comes easy, and you have to work for it. The most helpful way to learn a skill is through hands-on experience. Below is a list of Big Data analytics project ideas, along with an approach you could take to develop each one; we hope it helps you learn more about Big Data and even kick-start a career in the field.

Yelp Data Processing Using Spark And Hive Part 1

Yelp Data Processing using Spark and Hive Part 2

Hadoop Project for Beginners-SQL Analytics with Hive

Tough engineering choices with large datasets in Hive Part - 1

Finding Unique URLs using Hadoop Hive

AWS Project - Build an ETL Data Pipeline on AWS EMR Cluster

Orchestrate Redshift ETL using AWS Glue and Step Functions

Analyze Yelp Dataset with Spark & Parquet Format on Azure Databricks

Data Warehouse Design for E-commerce Environments

Analyzing Big Data with Twitter Sentiments using Spark Streaming

PySpark Tutorial - Learn to use Apache Spark with Python

Tough engineering choices with large datasets in Hive Part - 2

Event Data Analysis using AWS ELK Stack

Web Server Log Processing using Hadoop

Data processing with Spark SQL

Build a Time Series Analysis Dashboard with Spark and Grafana

GCP Data Ingestion with SQL using Google Cloud Dataflow

Deploying auto-reply Twitter handle with Kafka, Spark, and LSTM

Dealing with Slowly Changing Dimensions using Snowflake

Spark Project -Real-Time data collection and Spark Streaming Aggregation

Snowflake Real-Time Data Warehouse Project for Beginners-1

Real-Time Log Processing using Spark Streaming Architecture

Real-Time Auto Tracking with Spark-Redis

Building Real-Time AWS Log Analytics Solution


In this section, you will find a list of good big data project ideas for master's students.

Hadoop Project-Analysis of Yelp Dataset using Hadoop Hive

Online Hadoop Projects -Solving small file problem in Hadoop

Airline Dataset Analysis using Hadoop, Hive, Pig, and Impala

AWS Project-Website Monitoring using AWS Lambda and Aurora

Explore features of Spark SQL in practice on Spark 2.0

MovieLens Dataset Exploratory Analysis

Bitcoin Data Mining on AWS

Create A Data Pipeline Based On Messaging Using PySpark And Hive - Covid-19 Analysis

Spark Project-Analysis and Visualization on Yelp Dataset

Project Ideas on Big Data Analytics

Let us now begin with a more detailed list of good big data project ideas that you can easily implement.

This section will introduce you to a list of project ideas on big data that use Hadoop along with descriptions of how to implement them.

1. Visualizing Wikipedia Trends

Human brains tend to process visual data better than data in any other format. It is often claimed that 90% of the information transmitted to the brain is visual, and the human brain can reportedly process an image in as little as 13 milliseconds. Wikipedia is a website accessed by people all around the world for research, general information, and simply to satisfy their occasional curiosity.

Raw page-view counts from Wikipedia can be collected and processed via Hadoop. The processed data can then be visualized using Zeppelin notebooks to analyze trends, which can be broken down by demographics or other parameters. This is a good pick for someone looking to understand how large-scale data analysis and visualization can be achieved with big data tools, and it is also an excellent Apache big data project idea.

Visualizing Wikipedia Trends Big Data Project with Source Code.
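The aggregation at the heart of this project follows the MapReduce pattern that Hadoop implements: a map step emits (page, views) pairs and a reduce step sums them per page. This local Python sketch assumes a space-separated line format of project code, page title, view count, and bytes (check the format of the dump you actually download), and the sample lines are made up.

```python
from collections import defaultdict


def map_line(line, project="en"):
    """Map step: emit (page_title, views) for one line of an hourly page-count file.

    Assumed line format: "<project> <page_title> <views> <bytes>".
    Malformed lines and other projects are skipped.
    """
    parts = line.split()
    if len(parts) != 4 or parts[0] != project:
        return []
    _, title, views, _bytes = parts
    return [(title, int(views))]


def reduce_counts(pairs):
    """Reduce step: sum views per page title."""
    totals = defaultdict(int)
    for title, views in pairs:
        totals[title] += views
    return dict(totals)


# Hypothetical lines from two hourly files (numbers are made up)
lines = [
    "en Main_Page 1200 5000000",
    "en Apache_Hadoop 35 400000",
    "de Hauptseite 800 3000000",
    "en Main_Page 1100 4800000",
    "en Apache_Hadoop 40 410000",
    "malformed line",
]

pairs = [pair for line in lines for pair in map_line(line)]
totals = reduce_counts(pairs)
top_pages = sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
print(top_pages)
```

On a cluster, the same two functions would run as separate map and reduce tasks over many hourly files, for example via Hadoop Streaming, and the totals would feed the Zeppelin visualizations.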

2. Visualizing Website Clickstream Data

Clickstream data analysis refers to collecting, processing, and understanding all the web pages a particular user visits. This analysis benefits web page marketing, product management, and targeted advertisement. Since users tend to visit sites based on their requirements and interests, clickstream analysis can help to get an idea of what a user is looking for.

Visualizing this data helps identify these trends, so advertisements can be tailored to individual users. Ads on webpages provide a source of income for the site owner and help the advertising business reach its customers as well as other internet users. Building this project with Hadoop makes it a good Apache big data project.

Big Data Analytics Projects Solution for Visualization of Clickstream Data on a Website
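A common first step in clickstream analysis is sessionization: grouping each user's page views into sessions separated by periods of inactivity. This sketch assumes a 30-minute inactivity timeout (a widespread convention, but still an assumption) and hypothetical events of the form (user_id, timestamp, url).

```python
from datetime import datetime, timedelta

SESSION_TIMEOUT = timedelta(minutes=30)  # assumed inactivity cutoff


def sessionize(events, timeout=SESSION_TIMEOUT):
    """Group (user_id, timestamp, url) events into per-user sessions.

    A new session starts when a user has been inactive for longer than `timeout`.
    Returns {user_id: [[url, ...], ...]} with sessions in chronological order.
    """
    sessions = {}
    last_seen = {}
    for user, ts, url in sorted(events, key=lambda e: (e[0], e[1])):
        if user not in last_seen or ts - last_seen[user] > timeout:
            sessions.setdefault(user, []).append([])
        sessions[user][-1].append(url)
        last_seen[user] = ts
    return sessions


# Hypothetical click events
t0 = datetime(2024, 5, 1, 9, 0)
events = [
    ("u1", t0, "/home"),
    ("u1", t0 + timedelta(minutes=2), "/shoes"),
    ("u2", t0 + timedelta(minutes=1), "/home"),
    ("u1", t0 + timedelta(minutes=50), "/home"),
    ("u1", t0 + timedelta(minutes=55), "/cart"),
]

print(sessionize(events))
```

Once events are grouped into sessions, per-session paths (for example, which pages precede a visit to the cart) are what the visualizations and ad targeting described above build on.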

3. Web Server Log Processing

A web server log maintains a list of the page requests and activities the server has handled. Storing, processing, and mining this log data enables further analysis: for example, it can help determine which ads to show on a webpage and support SEO (search engine optimization). Web server log analysis can also help improve the overall user experience. This kind of processing benefits any business that relies heavily on its website for revenue or for reaching its customers. The Apache Hadoop open-source big data ecosystem, with tools such as Pig, Impala, Hive, Spark, Kafka, Oozie, and HDFS, can be used for storage and processing.

Big Data Project using Hadoop with Source Code for Web Server Log Processing
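Before any aggregation, each raw log line has to be parsed. This sketch assumes logs in the Apache Common Log Format and uses a regular expression to extract the host, timestamp, request, status code, and response size, then counts status codes. Lines in the Combined Log Format also match, because the pattern ignores the trailing referrer and user-agent fields; anything else is skipped.

```python
import re
from collections import Counter

# Common Log Format: host ident authuser [time] "method path protocol" status bytes
CLF_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) \S+" (?P<status>\d{3}) (?P<size>\d+|-)'
)


def parse_line(line):
    """Parse one log line into a dict, or return None if it does not match."""
    match = CLF_PATTERN.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["status"] = int(record["status"])
    # A "-" size means no body was returned
    record["size"] = 0 if record["size"] == "-" else int(record["size"])
    return record


log_lines = [
    '127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326',
    '10.0.0.5 - - [10/Oct/2000:13:56:01 -0700] "GET /index.html HTTP/1.1" 200 5120',
    '10.0.0.7 - - [10/Oct/2000:13:56:09 -0700] "GET /missing HTTP/1.1" 404 -',
    'not a log line',
]

records = [r for r in map(parse_line, log_lines) if r is not None]
status_counts = Counter(r["status"] for r in records)
bytes_served = sum(r["size"] for r in records)
print(status_counts, bytes_served)
```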

This section will provide you with a list of projects that utilize Apache Spark for their implementation.

4. Analysis of Twitter Sentiments Using Spark Streaming

Sentiment analysis is another interesting big data project topic; it is the process of determining whether a given opinion is positive, negative, or neutral. For a business, knowing the sentiment or reaction of a group of people to a new product launch or event can help determine the product's profitability and help the business extend its reach by understanding how customers feel. From a political standpoint, the crowd's sentiment toward a candidate or a party's decision can help determine what keeps a specific group of people happy and satisfied. Twitter sentiment can also be used to try to predict election results.

Sentiment analysis has to be done on a large dataset, since Twitter has over 180 million monetizable daily active users (https://www.businessofapps.com/data/twitter-statistics/). The analysis also has to be done in real time. Spark Streaming can be used to gather data from Twitter in real time. NLP (natural language processing) models have to be used for the sentiment analysis, and they must first be trained on existing labeled datasets. Because of its reliance on NLP, sentiment analysis is one of the more advanced projects that showcase the use of big data.

Access Big Data Project Solution to Twitter Sentiment Analysis

5. Real-time Analysis of Log-entries from Applications Using Streaming Architectures

If you are looking to practice and get your hands dirty with a real-time big data project, then this big data project title must be on your list. Whereas web server log processing can handle data in batches, applications that stream data produce log files that have to be processed in real time for better analysis. Real-time analysis of streaming behavior gives more insight into customer behavior and can help find more content to keep users engaged. It can also help detect a security breach so that the necessary action is taken immediately. Many social media networks rely on real-time analysis of the content users stream on their applications. Spark provides a streaming component, Spark Streaming, that can process real-time streaming data.


6. Analysis of Crime Datasets

Analyzing crimes such as shootings, robberies, and murders can reveal trends that help police anticipate where crimes are likely to occur in a given area. These trends can support a more strategic, optimized approach to siting police stations and deploying personnel.

With access to real-time CCTV surveillance, behavior detection can help identify suspicious activities. Similarly, facial recognition software can play a bigger role in identifying criminals. A basic analysis of a crime dataset is one of the ideal Big Data projects for students. However, it can be made more complex by adding crime prediction and, where required, facial recognition.
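A basic crime analysis often starts with simple aggregations: which areas record the most incidents, and what mix of crime types each area sees. The sketch below does both with `collections.Counter`. The `(district, crime_type)` records are hypothetical; a real dataset would have many more fields (timestamps, coordinates, case IDs) and would usually be processed with a DataFrame library or Spark.

```python
from collections import Counter

# Hypothetical incident records: (district, crime_type).
records = [
    ("North", "theft"), ("North", "robbery"), ("South", "theft"),
    ("North", "theft"), ("East", "assault"), ("South", "robbery"),
]


def top_hotspots(records, n=2):
    """Return the n districts with the most recorded incidents."""
    by_district = Counter(district for district, _ in records)
    return by_district.most_common(n)


def crime_mix(records, district):
    """Return the share of each crime type within one district."""
    types = Counter(crime for d, crime in records if d == district)
    total = sum(types.values())
    return {crime: count / total for crime, count in types.items()}


print(top_hotspots(records))
print(crime_mix(records, "North"))
```

Counts like these are the descriptive baseline; adding time of day or location coordinates is what turns them into inputs for crime prediction.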

Big Data Analytics Projects for Students on Chicago Crime Data Analysis with Source Code


In this section, you will find big data projects that rely on cloud service providers such as AWS and GCP.

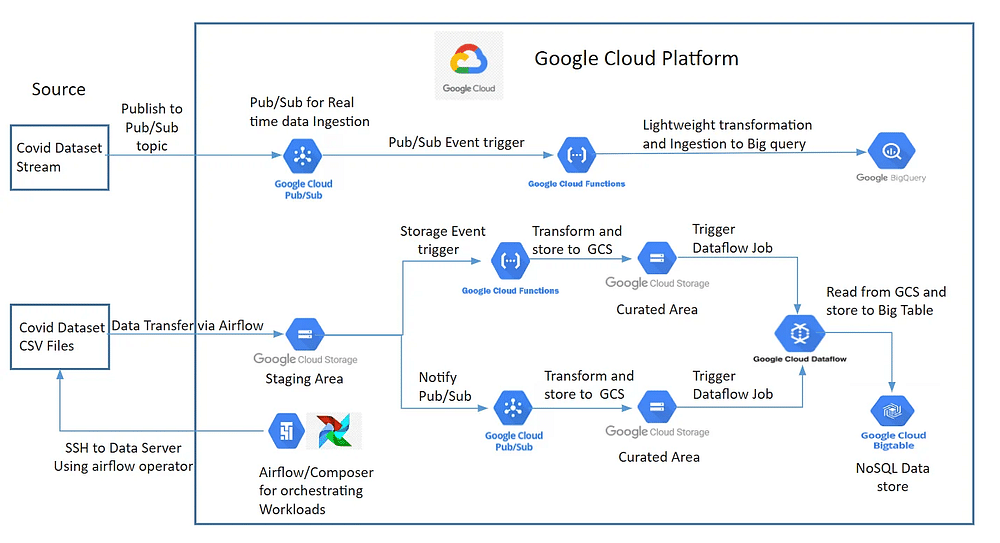

7. Build a Scalable Event-Based GCP Data Pipeline using Dataflow

Suppose you run an eCommerce website and a customer places an order. You must then notify the warehouse team to check stock availability and commit to fulfilling the order. After that, the parcel has to be assigned to a delivery firm so it can be shipped to the customer. In scenarios like this, where each step is triggered by the one before it, data-driven integration becomes awkward, so event-based data integration is the better choice.

This project will teach you how to design and implement an event-based data integration pipeline on the Google Cloud Platform, processing data with Dataflow.
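The order scenario above maps naturally onto the publish/subscribe pattern: each step subscribes to an event and publishes a new one when it finishes. The sketch below is a tiny in-memory event bus that illustrates that flow. The `EventBus` class, topic names, and handlers are hypothetical; on GCP, a managed service such as Pub/Sub would play the role of the bus, and this code does not use any GCP API.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Tiny in-memory publish/subscribe bus, for illustration only."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)  # topic -> list of handlers

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # Deliver the event synchronously to every handler on the topic.
        for handler in self._subscribers[topic]:
            handler(event)


bus = EventBus()
log = []


def check_stock(event: dict) -> None:
    # Hypothetical warehouse step: reserve stock, then emit a follow-up event.
    log.append(f"warehouse reserved {event['item']}")
    bus.publish("order_ready", event)


def assign_courier(event: dict) -> None:
    # Hypothetical delivery step, triggered only once the order is ready.
    log.append(f"courier assigned to order {event['order_id']}")


bus.subscribe("order_placed", check_stock)
bus.subscribe("order_ready", assign_courier)
bus.publish("order_placed", {"order_id": 42, "item": "headphones"})
print(log)
```

The point of the design is decoupling: the code that places the order never calls the warehouse or courier logic directly, so new steps can be added by subscribing more handlers.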

Data Description: For this project, you will use the COVID-19 dataset (COVID-19 Cases.csv) from data.world, which contains attributes such as the following:

people_positive_cases_count

county_name

data_source

Language Used: Python 3.7

Services: Cloud Composer, Google Cloud Storage (GCS), Pub/Sub, Cloud Functions, BigQuery, Bigtable

Big Data Project with Source Code: Build a Scalable Event-Based GCP Data Pipeline using DataFlow
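A typical transformation in a pipeline like this is aggregating the raw case records, for example summing `people_positive_cases_count` per `county_name`. The sketch below does that in plain Python with the standard `csv` module; it is not Dataflow or Apache Beam code. The sample rows are hypothetical, and the sketch assumes each row holds a separate, non-overlapping case count; if the real file stores cumulative counts, summing would double-count and a different aggregation would be needed.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample rows using column names from the dataset description.
SAMPLE_CSV = """county_name,people_positive_cases_count,data_source
Kings,120,sourceA
Queens,80,sourceA
Kings,30,sourceB
"""


def positives_by_county(csv_text: str) -> dict:
    """Sum positive case counts per county (assumes non-cumulative rows)."""
    totals = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["county_name"]] += int(row["people_positive_cases_count"])
    return dict(totals)


print(positives_by_county(SAMPLE_CSV))
```

In an event-based setup, a function like this would run whenever a new file lands in storage, with the result written to a warehouse table such as BigQuery.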

8. Topic Modeling

The future is AI! You have probably come across similar quotes about artificial intelligence (AI). Initially, most people found this hard to believe. Yet we are now witnessing top multinational companies shift toward automating tasks with machine learning tools.

Understand the reason behind this shift by working on one of the most practical data engineering project examples in our repository.

Project Objective: Understand the end-to-end implementation of Machine Learning Operations (MLOps) using cloud computing.

Learnings from the Project: This project will introduce you to various applications of AWS services. You will learn how to convert an ML application into a Flask application and deploy it with the Gunicorn web server. You will implement the project solution in AWS CodeBuild. This project will also help you understand ECS cluster task definitions.

Tech Stack:

Language: Python

Libraries: Flask, gunicorn, scipy, nltk, tqdm, numpy, joblib, pandas, scikit-learn, boto3

Services: Flask, Docker, AWS, Gunicorn

Source Code: MLOps AWS Project on Topic Modeling using Gunicorn Flask

9. MLOps on GCP Project for Autoregression using uWSGI Flask

Here is a project that combines Machine Learning Operations (MLOps) and the Google Cloud Platform (GCP). As companies switch to automation using machine learning algorithms, they have realized that hardware plays a crucial role. Many cloud service providers have therefore stepped in to help such companies overcome their hardware limitations. We have added this project to our repository to help you with the end-to-end deployment of a machine learning project.

Project Objective: Deploy a moving-average time-series machine learning model on the cloud using GCP and Flask.

Learnings from the Project: In this project, you will work with Flask and uWSGI model files. You will learn how to create Docker images and how Kubernetes architecture works. You will also explore different components of GCP and their significance, and learn how to clone the Git repository with the source repository. Flask and Kubernetes deployment are covered as well.

Tech Stack:

Language: Python

Services: GCP, uWSGI, Flask, Kubernetes, Docker
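Before a model is wrapped in Flask and served with uWSGI, it helps to see what the model itself computes. The sketch below is a naive moving-average forecaster: each prediction is the mean of the last `window` observations, and each forecast is fed back in as history for the next step. Note that this is the simple rolling-mean forecast, not the MA(q) component of ARIMA-style models, and the function name and sample series are hypothetical rather than the project's actual code.

```python
def moving_average_forecast(series: list, window: int, steps: int = 1) -> list:
    """Forecast `steps` future values; each forecast is the mean of the
    last `window` values, with earlier forecasts appended to the history."""
    if window <= 0 or window > len(series):
        raise ValueError("window must be between 1 and len(series)")
    history = list(series)
    forecasts = []
    for _ in range(steps):
        next_value = sum(history[-window:]) / window
        forecasts.append(next_value)
        history.append(next_value)
    return forecasts


print(moving_average_forecast([10, 12, 14, 16], window=2, steps=2))  # [15.0, 15.5]
```

A Flask endpoint would typically accept the recent series as JSON, call a function like this, and return the forecasts, which keeps the model logic testable independently of the web server.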

This section has good big data project ideas for graduate students enrolled in a master's program.

10. Real-time Traffic Analysis

Traffic is an issue in many major cities, especially during peak hours. If traffic is monitored in real time on popular and alternate routes, steps can be taken to reduce congestion on some roads. Real-time traffic analysis can also be used to program traffic lights at junctions, keeping lights green longer on roads with heavier movement and shorter on roads with less vehicular movement at a given time. It can also help businesses manage their logistics and help working professionals plan their commutes. Deep learning techniques can be used to analyze such data effectively.
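The traffic-light idea can be sketched as a proportional allocation: split a fixed signal cycle among the approaches to a junction according to observed vehicle counts, while guaranteeing each approach a minimum green time. The function name, cycle length, and minimum green are hypothetical parameters, and the sketch ignores amber and all-red clearance intervals that real signal timing must include.

```python
def allocate_green_time(vehicle_counts: dict, cycle_seconds: int = 120,
                        min_green: int = 10) -> dict:
    """Split a signal cycle across approaches in proportion to observed
    traffic, guaranteeing each approach at least `min_green` seconds."""
    approaches = list(vehicle_counts)
    spare = cycle_seconds - min_green * len(approaches)
    if spare < 0:
        raise ValueError("cycle too short for the minimum green times")
    total = sum(vehicle_counts.values())
    plan = {}
    for name in approaches:
        # With no observed traffic, fall back to an equal split.
        share = vehicle_counts[name] / total if total else 1 / len(approaches)
        plan[name] = min_green + spare * share
    return plan


# Hypothetical counts from the last monitoring interval.
print(allocate_green_time({"north_south": 30, "east_west": 10}))
# {'north_south': 85.0, 'east_west': 35.0}
```

In a real-time system, the counts would be refreshed each cycle from sensors or camera feeds, so the split adapts as traffic shifts during the day.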

11. Health Status Prediction

“Health is wealth” is a common saying, and rightly so: there cannot be wealth unless one is healthy enough to enjoy worldly pleasures. Many diseases have risk factors that can be genetic, environmental, or dietary, and many are more common in a specific age group or sex, or in certain races or areas. By gathering datasets of this information for particular diseases, e.g., breast cancer, Parkinson’s disease, and diabetes, the number of risk factors present can be used to estimate the probability of the onset of one of these conditions.

In cases where the risk factors are not already known, analysis of the datasets can be used to identify patterns of risk factors and hence predict the likelihood of onset accordingly. The level of complexity could vary depending on the type of analysis that has to be done for different diseases. Nevertheless, since prediction tools have to be applied, this is not a beginner-level big data project idea.
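One common way to turn "more risk factors means higher probability" into a number is a logistic model: sum the weights of the risk factors present, add a bias, and pass the result through the sigmoid function. The weights and bias below are hypothetical values chosen only for illustration; in a real project they would be learned from data (for example, by fitting a logistic regression) and would carry no medical meaning otherwise.

```python
import math

# Hypothetical weights for illustration only; real weights must be
# estimated from data, not chosen by hand.
WEIGHTS = {"smoker": 0.9, "family_history": 0.7, "age_over_60": 0.6, "high_bmi": 0.5}
BIAS = -3.0


def onset_probability(risk_factors: set) -> float:
    """Logistic model: the probability rises with the weighted sum of the
    risk factors present. Unknown factors contribute nothing."""
    z = BIAS + sum(WEIGHTS.get(factor, 0.0) for factor in risk_factors)
    return 1.0 / (1.0 + math.exp(-z))


print(onset_probability(set()))
print(onset_probability({"smoker", "family_history"}))
```

Because the sigmoid is monotonic, adding any positively weighted factor always raises the estimated probability, while the output stays between 0 and 1.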

12. Analysis of Tourist Behavior

Tourism is a large sector that provides a livelihood for many people and can significantly impact a country's economy. Not all tourists behave alike, because individuals have different preferences. Analyzing tourist behavior in terms of decision-making, perception, choice of destination, and level of satisfaction can help travelers and locals have a more wholesome experience. Like sentiment analysis, behavior analysis is one of the more advanced project ideas in the Big Data field.

13. Detection of Fake News on Social Media

With the popularity of social media, a major concern is the spread of fake news across various sites. Worse, misinformation tends to spread even faster than factual information. According to Wikipedia, fake news can be visual-based, referring to images, videos, and even graphical representations of data, or linguistics-based, referring to fake news in the form of text or strings of characters. Different cues are used, depending on the type of news, to distinguish fake news from real news. Twitter has 330 million users, while Facebook has 2.8 billion. The huge volume of data circulating on these sites must be processed to determine each post's validity. Building an algorithm that detects fake news on social media will require data models based on machine learning techniques as well as computational methods based on NLP.
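For linguistics-based fake news, a classifier needs numeric features derived from the text. The sketch below extracts a few simple surface cues (exclamation marks, question marks, the share of all-caps words, and average word length) that could be fed into a downstream machine learning model. These particular features are illustrative assumptions, not an established fake-news feature set, and on their own they cannot decide whether a post is fake.

```python
import re


def linguistic_cues(text: str) -> dict:
    """Extract simple text-based cues to use as classifier features.
    The chosen cues are illustrative assumptions only."""
    words = re.findall(r"[A-Za-z']+", text)
    n_words = len(words) or 1  # avoid division by zero on empty text
    return {
        "exclamations": text.count("!"),
        "question_marks": text.count("?"),
        # Count words of two or more letters written entirely in capitals.
        "all_caps_ratio": sum(1 for w in words if len(w) > 1 and w.isupper()) / n_words,
        "avg_word_length": sum(len(w) for w in words) / n_words,
    }


cues = linguistic_cues("SHOCKING news!!! You WON'T believe this")
print(cues)
```

In practice, cues like these are combined with learned text representations and metadata such as account history before training a classifier.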


14. Prediction of Calamities in a Given Area

Certain calamities, such as landslides and wildfires, occur more frequently during particular seasons and in particular areas. Geospatial technologies such as remote sensing and GIS (Geographic Information System) models make it possible to monitor areas prone to these calamities and identify the triggers that lead to them.

If calamities can be predicted more accurately, steps can be taken to protect residents, contain the disasters, and perhaps even prevent them in the first place. Historical landslide data has to be analyzed while, at the same time, current conditions are monitored through on-site ground monitoring and remote sensing. The sooner a calamity is identified, the easier it is to contain the harm. The need for knowledge and application of GIS adds to the complexity of this Big Data project.
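A first step in analyzing historical landslide data is checking its seasonality: what fraction of past events fell in each calendar month. The sketch below computes that distribution and flags high-risk months above a threshold. The event dates, function names, and threshold are hypothetical, and a real model would also use spatial data (slope, soil, rainfall) from GIS and remote sensing rather than dates alone.

```python
from collections import Counter
from datetime import date


def monthly_frequency(event_dates: list) -> dict:
    """Fraction of historical events that fell in each calendar month."""
    total = len(event_dates)
    counts = Counter(d.month for d in event_dates)
    return {month: (counts[month] / total if total else 0.0) for month in range(1, 13)}


def high_risk_months(event_dates: list, threshold: float = 0.25) -> list:
    """Months whose share of past events is at least `threshold`."""
    freq = monthly_frequency(event_dates)
    return [month for month, share in freq.items() if share >= threshold]


# Hypothetical historical landslide dates for one area.
past_landslides = [
    date(2019, 7, 14), date(2019, 8, 2), date(2020, 7, 21),
    date(2020, 7, 30), date(2021, 1, 5),
]
print(high_risk_months(past_landslides))  # [7]
```

Monthly frequencies like these can prioritize when to intensify ground monitoring, while the spatial layers determine where.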

15. Generating Image Captions

With the rise of social media and the importance of digital marketing, it has become essential for businesses to upload engaging content. Catchy images are a requirement, but they also need captions that describe them. Hashtags and attention-grabbing captions can further help reach the right target audience. This requires handling large datasets that pair images with captions.

The project involves image processing and deep learning to understand the image, and artificial intelligence to generate captions that are both relevant and appealing. Python can be used to implement the project. Image caption generation is not really a beginner-level Big Data project idea; it is probably better to gain some experience with one of the simpler projects before attempting it.

16. Credit Card Fraud Detection

The goal is to identify fraudulent credit card transactions so that a customer is not billed for an item they did not purchase. This can be challenging because the datasets are huge and detection has to happen as quickly as possible, before fraudsters can make more purchases. Data availability is another challenge, since such data is largely private. Because this project involves machine learning, results will be more accurate with a larger dataset, so limited data availability can reduce accuracy. Credit card fraud detection benefits businesses, since customers are more likely to trust companies with better fraud detection, knowing they will not be billed for purchases made by someone else. Fraud detection is one of the most common Big Data project ideas for beginners and students.
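Before training a machine learning model, a simple statistical baseline is useful for comparison. The sketch below flags a new transaction whose amount lies more than a chosen number of standard deviations above the customer's historical mean (a z-score rule). This is a swapped-in statistical technique, not the machine learning approach the project calls for, and the threshold and sample history are hypothetical.

```python
import statistics


def flag_unusual(history: list, amount: float, z_threshold: float = 3.0) -> bool:
    """Flag a transaction whose amount is more than `z_threshold` standard
    deviations above the customer's historical mean amount."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return amount > mean  # every past amount was identical
    return (amount - mean) / stdev > z_threshold


# Hypothetical past transaction amounts for one customer.
history = [20, 25, 22, 30, 18, 24]
print(flag_unusual(history, 500))
print(flag_unusual(history, 28))
```

A rule like this is cheap enough to run on every transaction in real time, but it only looks at amounts; a trained model can also weigh location, merchant, and timing.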

If you are looking for big data project examples that are fun to implement, do not miss this section.

17. GIS Analytics for Better Waste Management

Due to urbanization and population growth, large amounts of waste are being generated globally. Improper waste management is a hazard not only to the environment but also to us. Waste management covers collecting, handling, transporting, storing, recycling, and disposing of the waste generated. GIS modeling can be used to optimize the routes of solid waste collection trucks so that waste is picked up, taken to a transfer site, and delivered to landfills or recycling plants as efficiently as possible. GIS modeling can also help select the best sites for landfills, and the location and placement of garbage bins within city localities must be analyzed as well.
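Route optimization on a road network usually builds on shortest-path search. The sketch below implements Dijkstra's algorithm with `heapq` over a small weighted graph of hypothetical locations (depot, bins, transfer site, landfill) with hypothetical distances. Note that finding the best path between two points is simpler than planning a truck's full collection round through many bins, which is a vehicle routing problem; shortest paths like these are typically an input to that larger optimization.

```python
import heapq


def shortest_route(graph: dict, start: str, goal: str):
    """Dijkstra's algorithm over non-negative edge weights.
    Returns (total_distance, path), or (inf, []) if goal is unreachable."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        dist, node, path = heapq.heappop(queue)
        if node == goal:
            return dist, path
        if node in visited:
            continue  # a shorter route to this node was already expanded
        visited.add(node)
        for neighbor, weight in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (dist + weight, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical road network; weights are distances in km.
roads = {
    "depot": {"bin_a": 2, "bin_b": 5},
    "bin_a": {"bin_b": 1, "transfer": 6},
    "bin_b": {"transfer": 2},
    "transfer": {"landfill": 4},
}
print(shortest_route(roads, "depot", "landfill"))
# (9.0, ['depot', 'bin_a', 'bin_b', 'transfer', 'landfill'])
```

In a GIS workflow, the graph would be built from real road network data, and edge weights could represent travel time rather than distance.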

18. Customized Programs for Students

We all have different strengths and paces of learning. There are different kinds of intelligence, yet the standard curriculum focuses on only a few of them. Data analytics can help adapt academic programs to nurture students better. Programs can be designed around a student’s attention span and adjusted to an individual’s pace, which may differ from subject to subject. For example, one student may grasp language subjects easily but struggle with mathematical concepts.

In contrast, another might find math easy but struggle with language subjects. Customized programs can boost students’ morale and could also reduce the number of dropouts. Analyzing a student’s strong subjects, attention span, and responses to specific topics within a subject can help build the dataset needed to create these customized programs.

19. Real-time Tracking of Vehicles

Transportation plays a significant role in many activities. Every day, goods have to be shipped across cities and countries, kids commute to school, and employees travel to work. Some of these journeys may need to be closely monitored for safety and tracking purposes. I’m sure parents would love to know if their children’s school bus was delayed on the way back from school for some reason.