
Educational Design Principles of Using AI Chatbot That Supports Self-Regulated Learning in Education: Goal Setting, Feedback, and Personalization


References

1. Pérez, J.Q.; Daradoumis, T.; Puig, J.M.M. Rediscovering the use of chatbots in education: A systematic literature review. Comput. Appl. Eng. Educ. 2020, 28, 1549–1565.

2. Smutny, P.; Schreiberova, P. Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Comput. Educ. 2020, 151, 103862.

3. Kuhail, M.A.; Alturki, N.; Alramlawi, S.; Alhejori, K. Interacting with educational chatbots: A systematic review. Educ. Inf. Technol. 2023, 28, 973–1018.

4. Okonkwo, C.W.; Ade-Ibijola, A. Chatbots applications in education: A systematic review. Comput. Educ. Artif. Intell. 2021, 2, 100033.

5. Koriat, A. Monitoring one’s own knowledge during study: A cue-utilization approach to judgments of learning. J. Exp. Psychol. Gen. 1997, 126, 349–370.

6. Son, L.K.; Metcalfe, J. Judgments of learning: Evidence for a two-stage process. Mem. Cogn. 2005, 33, 1116–1129.

7. Panadero, E. A Review of Self-regulated Learning: Six Models and Four Directions for Research. Front. Psychol. 2017, 8, 422.

8. Zimmerman, B.J. Attaining Self-Regulation. In Handbook of Self-Regulation; Elsevier: Amsterdam, The Netherlands, 2000; pp. 13–39.

9. Baars, M.; Wijnia, L.; de Bruin, A.; Paas, F. The Relation Between Students’ Effort and Monitoring Judgments During Learning: A Meta-analysis. Educ. Psychol. Rev. 2020, 32, 979–1002.

10. Leonesio, R.J.; Nelson, T.O. Do different metamemory judgments tap the same underlying aspects of memory? J. Exp. Psychol. Learn. Mem. Cogn. 1990, 16, 464–470.

11. Double, K.S.; Birney, D.P.; Walker, S.A. A meta-analysis and systematic review of reactivity to judgements of learning. Memory 2018, 26, 741–750.

12. Janes, J.L.; Rivers, M.L.; Dunlosky, J. The influence of making judgments of learning on memory performance: Positive, negative, or both? Psychon. Bull. Rev. 2018, 25, 2356–2364.

13. Hamzah, M.I.; Hamzah, H.; Zulkifli, H. Systematic Literature Review on the Elements of Metacognition-Based Higher Order Thinking Skills (HOTS) Teaching and Learning Modules. Sustainability 2022, 14, 813.

14. Veenman, M.V.J.; Van Hout-Wolters, B.H.A.M.; Afflerbach, P. Metacognition and learning: Conceptual and methodological considerations. Metacognition Learn. 2006, 1, 3–14.

15. Nelson, T.; Narens, L. Why investigate metacognition. In Metacognition: Knowing about Knowing; MIT Press: Cambridge, MA, USA, 1994.

16. Tuysuzoglu, B.B.; Greene, J.A. An investigation of the role of contingent metacognitive behavior in self-regulated learning. Metacognition Learn. 2015, 10, 77–98.

17. Bandura, A. Social Cognitive Theory: An Agentic Perspective. Asian J. Soc. Psychol. 1999, 2, 21–41.

18. Bem, D.J. Self-Perception Theory. In Advances in Experimental Social Psychology; Berkowitz, L., Ed.; Academic Press: Cambridge, MA, USA, 1972; Volume 6, pp. 1–62.

19. Abu Shawar, B.; Atwell, E. Different measurements metrics to evaluate a chatbot system. In Proceedings of the Workshop on Bridging the Gap: Academic and Industrial Research in Dialog Technologies, Rochester, NY, USA, 26 April 2007; pp. 89–96.

20. Turing, A.M. Computing machinery and intelligence. Mind 1950, 59, 433–460.

21. Weizenbaum, J. ELIZA—A computer program for the study of natural language communication between man and machine. Commun. ACM 1966, 9, 36–45.

22. Wallace, R.S. The anatomy of A.L.I.C.E. In Parsing the Turing Test: Philosophical and Methodological Issues in the Quest for the Thinking Computer; Epstein, R., Roberts, G., Beber, G., Eds.; Springer: Dordrecht, The Netherlands, 2009; pp. 181–210.

23. Hwang, G.-J.; Chang, C.-Y. A review of opportunities and challenges of chatbots in education. Interact. Learn. Environ. 2021, 1–14.

24. Yamada, M.; Goda, Y.; Matsukawa, H.; Hata, K.; Yasunami, S. A Computer-Supported Collaborative Learning Design for Quality Interaction. IEEE MultiMedia 2016, 23, 48–59.

25. Muniasamy, A.; Alasiry, A. Deep Learning: The Impact on Future eLearning. Int. J. Emerg. Technol. Learn. (iJET) 2020, 15, 188–199.

26. Bendig, E.; Erb, B.; Schulze-Thuesing, L.; Baumeister, H. The Next Generation: Chatbots in Clinical Psychology and Psychotherapy to Foster Mental Health—A Scoping Review. Verhaltenstherapie 2022, 32, 64–76.

27. Kennedy, C.M.; Powell, J.; Payne, T.H.; Ainsworth, J.; Boyd, A.; Buchan, I. Active Assistance Technology for Health-Related Behavior Change: An Interdisciplinary Review. J. Med. Internet Res. 2012, 14, e80.

28. Poncette, A.-S.; Rojas, P.-D.; Hofferbert, J.; Sosa, A.V.; Balzer, F.; Braune, K. Hackathons as Stepping Stones in Health Care Innovation: Case Study with Systematic Recommendations. J. Med. Internet Res. 2020, 22, e17004.

29. Ferrell, O.C.; Ferrell, L. Technology Challenges and Opportunities Facing Marketing Education. Mark. Educ. Rev. 2020, 30, 3–14.

30. Behera, R.K.; Bala, P.K.; Ray, A. Cognitive Chatbot for Personalised Contextual Customer Service: Behind the Scene and beyond the Hype. Inf. Syst. Front. 2021, 1–21.

31. Crolic, C.; Thomaz, F.; Hadi, R.; Stephen, A.T. Blame the Bot: Anthropomorphism and Anger in Customer–Chatbot Interactions. J. Mark. 2022, 86, 132–148.

32. Clarizia, F.; Colace, F.; Lombardi, M.; Pascale, F.; Santaniello, D. Chatbot: An education support system for student. In CSS 2018: Cyberspace Safety and Security; Castiglione, A., Pop, F., Ficco, M., Palmieri, F., Eds.; Lecture Notes in Computer Science Book Series; Springer International Publishing: Cham, Switzerland, 2018; Volume 11161, pp. 291–302.

33. Firat, M. What ChatGPT means for universities: Perceptions of scholars and students. J. Appl. Learn. Teach. 2023, 6, 57–63.

34. Kim, H.-S.; Kim, N.Y. Effects of AI chatbots on EFL students’ communication skills. Commun. Ski. 2021, 21, 712–734.

35. Hill, J.; Ford, W.R.; Farreras, I.G. Real conversations with artificial intelligence: A comparison between human–human online conversations and human–chatbot conversations. Comput. Hum. Behav. 2015, 49, 245–250.

36. Wu, E.H.-K.; Lin, C.-H.; Ou, Y.-Y.; Liu, C.-Z.; Wang, W.-K.; Chao, C.-Y. Advantages and Constraints of a Hybrid Model K-12 E-Learning Assistant Chatbot. IEEE Access 2020, 8, 77788–77801.

37. Brandtzaeg, P.B.; Følstad, A. Why people use chatbots. In INSCI 2017: Internet Science; Kompatsiaris, I., Cave, J., Satsiou, A., Carle, G., Passani, A., Kontopoulos, E., Diplaris, S., McMillan, D., Eds.; Lecture Notes in Computer Science Book Series; Springer International Publishing: Cham, Switzerland, 2017; Volume 10673, pp. 377–392.

38. Deng, X.; Yu, Z. A Meta-Analysis and Systematic Review of the Effect of Chatbot Technology Use in Sustainable Education. Sustainability 2023, 15, 2940.

39. de Quincey, E.; Briggs, C.; Kyriacou, T.; Waller, R. Student Centred Design of a Learning Analytics System. In Proceedings of the 9th International Conference on Learning Analytics & Knowledge, Tempe, AZ, USA, 4 March 2019; pp. 353–362.

40. Hattie, J. The black box of tertiary assessment: An impending revolution. In Tertiary Assessment & Higher Education Student Outcomes: Policy, Practice & Research; Ako Aotearoa: Wellington, New Zealand, 2009; pp. 259–275.

41. Wisniewski, B.; Zierer, K.; Hattie, J. The Power of Feedback Revisited: A Meta-Analysis of Educational Feedback Research. Front. Psychol. 2020, 10, 3087.

42. Winne, P.H. Cognition and metacognition within self-regulated learning. In Handbook of Self-Regulation of Learning and Performance, 2nd ed.; Routledge: London, UK, 2017.

43. Serban, I.V.; Sankar, C.; Germain, M.; Zhang, S.; Lin, Z.; Subramanian, S.; Kim, T.; Pieper, M.; Chandar, S.; Ke, N.R.; et al. A deep reinforcement learning chatbot. arXiv 2017, arXiv:1709.02349.

44. Shneiderman, B.; Plaisant, C. Designing the User Interface: Strategies for Effective Human-Computer Interaction, 4th ed.; Pearson: Boston, MA, USA; Addison Wesley: Hoboken, NJ, USA, 2004.

45. Abbasi, S.; Kazi, H. Measuring effectiveness of learning chatbot systems on student’s learning outcome and memory retention. Asian J. Appl. Sci. Eng. 2014, 3, 57–66.

46. Winkler, R.; Soellner, M. Unleashing the Potential of Chatbots in Education: A State-Of-The-Art Analysis. Acad. Manag. Proc. 2018, 2018, 15903.

47. Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. Opinion Paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642.

48. Dai, W.; Lin, J.; Jin, F.; Li, T.; Tsai, Y.S.; Gasevic, D.; Chen, G. Can large language models provide feedback to students? A case study on ChatGPT. 2023; preprint.

49. Lin, M.P.-C.; Chang, D. Enhancing post-secondary writers’ writing skills with a Chatbot: A mixed-method classroom study. J. Educ. Technol. Soc. 2020, 23, 78–92.

50. Zhu, C.; Sun, M.; Luo, J.; Li, T.; Wang, M. How to harness the potential of ChatGPT in education? Knowl. Manag. E-Learn. 2023, 15, 133–152.

51. Prøitz, T.S. Learning outcomes: What are they? Who defines them? When and where are they defined? Educ. Assess. Eval. Account. 2010, 22, 119–137.

52. Burke, J. (Ed.) Outcomes, Learning and the Curriculum: Implications for Nvqs, Gnvqs and Other Qualifications; Routledge: London, UK, 1995.

53. Locke, E.A. New Developments in Goal Setting and Task Performance, 1st ed.; Routledge: London, UK, 2013.

54. Leake, D.B.; Ram, A. Learning, goals, and learning goals: A perspective on goal-driven learning. Artif. Intell. Rev. 1995, 9, 387–422.

55. Greene, J.A.; Azevedo, R. A Theoretical Review of Winne and Hadwin’s Model of Self-Regulated Learning: New Perspectives and Directions. Rev. Educ. Res. 2007, 77, 334–372.

56. Pintrich, P.R. A Conceptual Framework for Assessing Motivation and Self-Regulated Learning in College Students. Educ. Psychol. Rev. 2004, 16, 385–407.

57. Schunk, D.H.; Greene, J.A. (Eds.) Handbook of Self-Regulation of Learning and Performance, 2nd ed.; In Educational Psychology Handbook Series; Routledge: New York, NY, USA; Taylor & Francis Group: Milton Park, UK, 2018.

58. Chen, C.-H.; Su, C.-Y. Using the BookRoll e-book system to promote self-regulated learning, self-efficacy and academic achievement for university students. J. Educ. Technol. Soc. 2019, 22, 33–46.

59. Michailidis, N.; Kapravelos, E.; Tsiatsos, T. Interaction analysis for supporting students’ self-regulation during blog-based CSCL activities. J. Educ. Technol. Soc. 2018, 21, 37–47.

60. Paans, C.; Molenaar, I.; Segers, E.; Verhoeven, L. Temporal variation in children’s self-regulated hypermedia learning. Comput. Hum. Behav. 2019, 96, 246–258.

61. Morisano, D.; Hirsh, J.B.; Peterson, J.B.; Pihl, R.O.; Shore, B.M. Setting, elaborating, and reflecting on personal goals improves academic performance. J. Appl. Psychol. 2010, 95, 255–264.

62. Krathwohl, D.R. A Revision of Bloom’s Taxonomy: An Overview. Theory Pract. 2002, 41, 212–218.

63. Bouffard, T.; Boisvert, J.; Vezeau, C.; Larouche, C. The impact of goal orientation on self-regulation and performance among college students. Br. J. Educ. Psychol. 1995, 65, 317–329.

64. Javaherbakhsh, M.R. The Impact of Self-Assessment on Iranian EFL Learners’ Writing Skill. Engl. Lang. Teach. 2010, 3, 213–218.

65. Zepeda, C.D.; Richey, J.E.; Ronevich, P.; Nokes-Malach, T.J. Direct instruction of metacognition benefits adolescent science learning, transfer, and motivation: An in vivo study. J. Educ. Psychol. 2015, 107, 954–970.

66. Ndoye, A. Peer/self assessment and student learning. Int. J. Teach. Learn. High. Educ. 2017, 29, 255–269.

67. Schunk, D.H. Goal and Self-Evaluative Influences During Children’s Cognitive Skill Learning. Am. Educ. Res. J. 1996, 33, 359–382.

68. King, A. Enhancing Peer Interaction and Learning in the Classroom Through Reciprocal Questioning. Am. Educ. Res. J. 1990, 27, 664–687.

69. Mason, L.H. Explicit Self-Regulated Strategy Development Versus Reciprocal Questioning: Effects on Expository Reading Comprehension Among Struggling Readers. J. Educ. Psychol. 2004, 96, 283–296.

70. Newman, R.S. Adaptive help seeking: A strategy of self-regulated learning. In Self-Regulation of Learning and Performance: Issues and Educational Applications; Lawrence Erlbaum Associates, Inc.: Hillsdale, NJ, USA, 1994; pp. 283–301.

71. Rosenshine, B.; Meister, C. Reciprocal Teaching: A Review of the Research. Rev. Educ. Res. 1994, 64, 479–530.

72. Baleghizadeh, S.; Masoun, A. The Effect of Self-Assessment on EFL Learners’ Self-Efficacy. TESL Can. J. 2014, 31, 42.

73. Moghadam, S.H. What Types of Feedback Enhance the Effectiveness of Self-Explanation in a Simulation-Based Learning Environment? Available online: https://summit.sfu.ca/item/34750 (accessed on 14 July 2023).

74. Vanichvasin, P. Effects of Visual Communication on Memory Enhancement of Thai Undergraduate Students, Kasetsart University. High. Educ. Stud. 2020, 11, 34–41.

75. Schumacher, C.; Ifenthaler, D. Features students really expect from learning analytics. Comput. Hum. Behav. 2018, 78, 397–407.

76. Marzouk, Z.; Rakovic, M.; Liaqat, A.; Vytasek, J.; Samadi, D.; Stewart-Alonso, J.; Ram, I.; Woloshen, S.; Winne, P.H.; Nesbit, J.C. What if learning analytics were based on learning science? Australas. J. Educ. Technol. 2016, 32, 1–18.

77. Akhtar, S.; Warburton, S.; Xu, W. The use of an online learning and teaching system for monitoring computer aided design student participation and predicting student success. Int. J. Technol. Des. Educ. 2015, 27, 251–270.

78. Lo, C.K. What Is the Impact of ChatGPT on Education? A Rapid Review of the Literature. Educ. Sci. 2023, 13, 410.

79. Baidoo-Anu, D.; Ansah, L.O. Education in the era of generative Artificial Intelligence (AI): Understanding the potential benefits of ChatGPT in promoting teaching and learning. SSRN Electron. J. 2023, 1–22.

80. Mogali, S.R. Initial impressions of ChatGPT for anatomy education. Anat. Sci. Educ. 2023, 1–4.


| Prompt Types | Process-Based | Outcome-Based |
|---|---|---|
| Cognitive | Understand, Remember | Create, Apply |
| Metacognitive | Evaluate | |


Chang, D.H.; Lin, M.P.-C.; Hajian, S.; Wang, Q.Q. Educational Design Principles of Using AI Chatbot That Supports Self-Regulated Learning in Education: Goal Setting, Feedback, and Personalization. Sustainability 2023, 15, 12921. https://doi.org/10.3390/su151712921


1. Introduction

Educational chatbots, also called conversational agents, hold considerable potential for delivering personalized and interactive learning experiences to students [1,2]. However, the advent of ChatGPT and other generative AI tools poses a substantial challenge to the role of educators, raising concerns that students may exploit these tools to obtain academic recognition without actively engaging in the learning process. AI has nonetheless become a contemporary trend in education, and learners will inevitably use it. Rather than attempting to suppress the use of AI in education, educators should proactively explore ways to adapt to its presence. This adaptation can be achieved through collaboration among educators, instructional designers, and researchers in the AI field. Such partnerships should explore how pedagogical principles can be integrated within AI platforms, so that students not only benefit from AI but also acquire the essential skills mandated by the educational curriculum. Consequently, it is crucial for chatbot designers and educators to collaborate closely and to consider key pedagogical principles such as goal setting, self-assessment, and personalization at various stages of learning [3,4]. These principles should guide the design process so that the chatbot effectively supports the student learning experience.

In this paper, drawing on Barry Zimmerman’s Self-Regulated Learning (SRL) framework, we propose several key pedagogical principles that teachers can consider when integrating AI chatbots in classrooms to foster SRL. At the time of writing, the majority of research on generative AI tools (like ChatGPT) focuses on their wide-ranging applications and potential drawbacks in education. There has been a notable shortage of studies that actively engage teachers and instructional designers in determining the most effective ways to incorporate these AI tools in classroom settings. In one of our pedagogical principles, we draw specifically on Judgement of Learning (JOL), which refers to assessing and evaluating student understanding and progress [5,6], and explore how JOL can be integrated into AI-based pedagogy and instructional design that fosters students’ SRL. By integrating Zimmerman’s SRL framework with JOL, we hope to address the major cognitive, metacognitive, and socio-educational concerns and thereby contribute to the enhancement and personalization of AI in teaching and learning.

2. Theoretical Framework

Let us consider a learning scenario involving a writing assignment. A student accesses their institution’s learning management system (LMS) and selects the course titled “ENGL 100—Introduction to Literature”, a foundational writing course under the Department of English. The student navigates to an assignment, reads its instructions, and, after a brief review, copies them. In a separate browser tab, the student opens ChatGPT, pastes the assignment instructions, and prompts it with, “Plan my essay based on the provided assignment instructions, [copied assignment instructions]”.

In response, ChatGPT outlines a structured plan, beginning with the crafting of an introduction. However, the student is puzzled about the nature and structure of an introduction, so the student re-prompts, “Could you provide an example introduction for the assignment?” ChatGPT then offers a sample. After studying the example, the student opens a word processor on their computer and begins writing. Upon completing the introduction, the student seeks feedback from ChatGPT, asking, “Could you assess and evaluate the quality of my introduction?” ChatGPT provides its evaluation. Throughout the writing process, the student frequently consults ChatGPT for assistance on various elements, such as topic sentences, examples, and argumentation, refining the work until they are satisfied with what they have produced for the ENGL 100 assignment.

This scenario depicts an ideal SRL cycle executed by the student, encompassing goal-setting, standard reproduction, iterative engagement with ChatGPT, and solicitation of evaluative feedback. However, in real-world educational contexts, students might not recognize this cycle. They might perceive ChatGPT merely as a problem-solving AI chatbot that can help them with the assignment. Instructors, for their part, are not fully aware of how AI tools can be integrated into their pedagogy, yet they fear that students will use such chatbots unethically.

We argue that generative AI tools, like ChatGPT, have the potential to facilitate SRL when instructors are aware of the SRL process from which students can benefit. To harness the potential of generative AI tools, educators must be cognizant of their capabilities, functions, and pedagogical values. To this end, we employ Zimmerman’s multi-level SRL framework, which is elaborated upon in the subsequent section.

2.1. Review of Zimmerman’s Multi-Level Self-Regulated Learning Framework

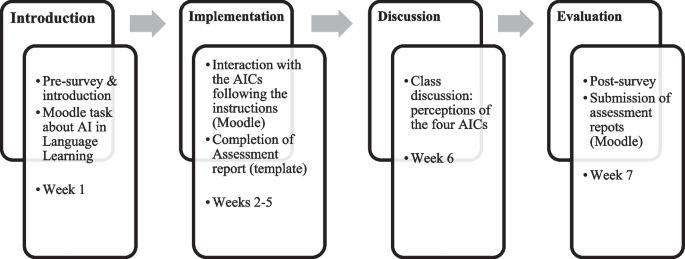

Zimmerman’s multi-level SRL framework [7,8] encompasses four distinct levels: observation, emulation, self-control, and self-regulation (see Figure 1). Each level represents a progressive stage in the development of SRL skills. We use this framework to explore how a chatbot can facilitate SRL at each stage. For example, when students use AI chatbots for their learning, they treat the chatbots as a resource. They enter questions or commands, seeking clarification or information for the task at hand. We assume that this type of use elicits students’ self-regulation, and we propose that Zimmerman’s multi-level SRL framework helps to interpret the SRL processes that students undertake.

Specifically, the observation level denotes a stage where students possess prior knowledge of how conversations occur in a real-life context and have a general goal for the learning task. During this phase, students may set their goals, or primarily observe and learn from others who prompt the chatbot, gaining insights into the expected outcomes and interactions. At the emulation level, students demonstrate their comprehension of the task requirements by independently prompting the chatbot, using their own words or similar phrases that they have observed or that others have recommended. Students strive to replicate successful interactions they have witnessed, applying their understanding of the task to engage with the chatbot. They may also feed their goals into the chatbot as prompts, or reuse prompts they have observed from others. The self-control level, on the other hand, represents a critical juncture where students face decisions about their learning. These can be decisions about ethical conduct and academic integrity, or about further re-engagement (re-prompting the chatbot). Specifically, once the chatbot generates a response, students must choose between taking the chatbot’s responses verbatim for their assignments (a question of academic integrity and ethical conduct) and modifying their approach, for example by re-prompting or seeking out other strategies. This phase provides an opportunity for the chatbot to contribute by offering evaluations and feedback on students’ work, guiding them to determine whether their output meets the required standards or whether further revisions are necessary. In sum, the self-control stage can be considered a two-way interaction between the chatbot and students.

As students move into the self-regulation level, they begin to recognize the potential benefits of the chatbot as a useful, efficient, and valuable tool to assist their learning. At this level, students may ask the chatbot to evaluate their revised paragraph. They might also request a learning analytics report from the chatbot: fine-grained student data can be visualized as learning analytics in the chatbot, and students can receive recommendations for further improvement. This stage exemplifies students’ growing understanding of how the chatbot can facilitate their learning process, guiding them toward achieving specific objectives and refining their SRL skills. Zimmerman’s multi-level SRL framework provides a comprehensive perspective on the gradual development of SRL abilities. It illustrates how students proceed from observing and emulating others, through exercising self-control, to ultimately achieving self-regulation by harnessing the chatbot’s capabilities as a supportive learning resource.
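To make the progression concrete, for instance when tagging chatbot interaction logs, the four levels can be treated as an ordered scale. The Python sketch below is illustrative only: the event labels and their mapping to levels are hypothetical assumptions drawn loosely from the description above, not part of Zimmerman’s framework or of any chatbot API.

```python
from enum import IntEnum


class SRLLevel(IntEnum):
    """Zimmerman's four levels, ordered from least to most self-regulated."""
    OBSERVATION = 1
    EMULATION = 2
    SELF_CONTROL = 3
    SELF_REGULATION = 4


# Hypothetical event labels for a chatbot interaction log (an assumption
# made for illustration), mapped to the level each one would suggest.
EVENT_TO_LEVEL = {
    "views_shared_prompt": SRLLevel.OBSERVATION,
    "sets_goal": SRLLevel.OBSERVATION,
    "submits_own_prompt": SRLLevel.EMULATION,
    "re_prompts_after_response": SRLLevel.SELF_CONTROL,
    "requests_feedback_on_draft": SRLLevel.SELF_CONTROL,
    "requests_learning_analytics": SRLLevel.SELF_REGULATION,
}


def highest_level_reached(events):
    """Return the highest SRL level suggested by a sequence of events.

    Unknown events are ignored; None is returned if no event is recognized.
    """
    levels = [EVENT_TO_LEVEL[e] for e in events if e in EVENT_TO_LEVEL]
    return max(levels) if levels else None


session = ["sets_goal", "submits_own_prompt", "re_prompts_after_response"]
print(highest_level_reached(session).name)  # SELF_CONTROL
```

Using an ordered enumeration reflects the idea that each level builds on the previous one; a real system would need validated indicators for each level rather than the placeholder labels used here.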

2.2. Definition and Background of JOL

In Zimmerman’s self-control and self-regulation phases of SRL, students have to exercise some level of judgement about the chatbot’s output in order to decide on their next actions. Such judgement is known as self-assessment, and self-assessment is grounded in Judgement of Learning (JOL), a prominent concept in educational psychology.

JOL is a psychological and educational concept that refers to an individual’s evaluation of their learning [6]. It reflects the extent to which an individual believes they have learned or retained new information, which can impact their motivation and behavior during the learning process [5]. Several studies have indicated that various factors could impact an individual’s JOL, including the difficulty of the material, the individual’s pre-existing knowledge and skills, and the effectiveness of the learning strategy used [5,6]. There is empirical evidence showing that people with a higher JOL tend to be more motivated to learn and more likely to engage in SRL activities, while those with a lower JOL may be less motivated and avoid difficult learning tasks [9,10]. JOL can also serve as a feedback mechanism for learners by allowing them to identify areas where they need to focus more effort and adjust their learning strategies accordingly [11,12]. Additionally, JOL can influence an individual’s confidence, which in turn can affect their overall approach to learning [11].

One of the most influential theories of JOL is the cue-utilization approach, which proposes that individuals use various cues, or indicators, to assess their learning [5]. These cues can include things like how difficult the material was to learn, how much time was spent studying, and how well the material was understood. According to Koriat [5], individuals are more likely to have higher JOL if they encounter more favorable cues while learning (e.g., domain-specific knowledge), and more likely to have a low JOL if they encounter less favorable cues (e.g., feelings of unfamiliarity or difficulty). Another important outcome of JOL is metacognitive awareness, which emphasizes the role of metacognitive processes, or higher-order thinking skills, in the learning process. Research [13,14] shows that individuals use metacognitive strategies, such as planning, monitoring, and evaluating, to guide their learning and assess their progress. As a result, individuals with higher JOL are more likely to use effective metacognitive strategies and be more successful learners. In certain conditions, students recognize their lack of understanding of specific concepts, a phenomenon referred to as “negative JOL” [15], which may result in the improvement of previously adopted learning skills and strategies. Suppose the student does not change their strategy use following such judgement. In that case, the student’s metacognitive behavior is called “static”, implying that they are aware of their knowledge deficit but are resistant to change [16]. Various models of JOL have been proposed. For example, the social cognitive model [17] emphasizes the influence of social and environmental factors on learning, and the self-perception model suggests that individuals’ JOL is influenced by their perceptions of their abilities and self-worth [18].

Taken together, incorporating Zimmerman’s SRL theoretical framework and JOL into the existing capacity of AI in Education has significant potential for improving students’ SRL. Currently, AI technology operates in a unidirectional manner, where users (or students) prompt the generative AI tool to fulfill its intended function and purposes (in the following section, we also call it “goal setting”), as we have shown above with respect to the emulation and the self-control stages. However, in education, it is crucial to emphasize the importance of bidirectional interaction (from user to AI and from AI to user). Enabling AI to initiate personalized learning feedback to users (i.e., learning analytics, which we elaborate on in Section 3.4) can create meaningful and educational interactions. In the sections below, we propose several educational principles that can guide the integration of chatbots into various aspects of educational practices.

3. Educational Principles That Guide Integration of Chatbots

3.1. Define Chatbots and Describe Their Potential Use in Educational Settings

The term “chatbot” refers to computer programs that communicate with users using natural language [19]. The history of chatbots can be traced back to the early 1950s [20]. In particular, ELIZA [21] and A.L.I.C.E. [22] were well-known early chatbot systems simulating real human communication. Chatbots are technological innovations that may efficiently supplement services delivered to humans. In addition to educational chatbots [23,24] and the application of deep learning algorithms in learning management systems [25], chatbots have been used for many purposes and have a wide range of industrial applications, such as medical education [26,27], counseling consultations [28], marketing education [29], telecommunications support, and the financial industry [30,31].

In particular, research has been conducted to investigate the methods and impacts of chatbot implementation in education in recent years [25,32,33]. Chatbots’ interactive learning feature and their flexibility in terms of time and location have made their usage more appealing and gained popularity in the field of education [23]. Several studies have shown that utilizing chatbots in educational settings may provide students with a positive learning experience, as human-to-chatbot interaction allows real-time engagement [34], improves students’ communication skills [35], and improves students’ efficiency of learning [36].

The growing need for AI technology has opened a new avenue for constructing chatbots when combined with natural language processing capabilities and machine learning techniques [37]. Smutny and Schreiberova’s study [2] showed that chatbots have the potential to become smart teaching assistants in the future, as they might be capable of supplementing in-class instruction alongside instructors. In the case of ChatGPT, some students might have used it as a personal assistant, regardless of the ethical questions this raises in academia. However, we would like to argue that generative AI chatbots, like ChatGPT, can be a platform for students to become self-regulated, on the condition that they are taught about the context of appropriate use, such as when, where, and how they should use the AI chatbot system for learning. In addition, according to a meta-analysis conducted by Deng and Yu [38], chatbots can potentially have a medium-to-high effect on achievement or learning outcomes. Therefore, integrating AI chatbots into classrooms is now a question of how educators should do it appropriately to foster learning, rather than how educators should suppress it so that students observe the boundary of ethical conduct.

Conventional teaching approaches, such as giving students feedback, encouraging students, or customizing course material to student groups, are still dominant pedagogical practices. If we can take these conventional approaches into account while integrating AI into pedagogy, we believe that computers and other digital devices can open up far-reaching possibilities that have yet to be completely realized. For example, incorporating process data in student learning may offer students some opportunities to monitor their understanding of materials as well as additional opportunities for formative feedback, self-reflection, and competence development [39]. Hattie [40] has argued that feedback has a median effect size of d = 0.75 in terms of achievement. In addition, Wisniewski et al. [41] have shown that highly informative feedback can produce an effect size of d = 0.99 on student achievement. Such feedback may foster an SRL process and strong metacognitive monitoring and control [8,15,42]. With these pieces of evidence, we propose that AI that models teachers’ scaffolding and feedback mechanisms after students prompt the AI will support SRL activities.

As stated earlier, under the unidirectional condition (student-to-AI), it has been unclear what instructional and pedagogical functions chatbots can serve to produce learning effects. In particular, it is unclear what the teaching and learning implications are when students use a chatbot to learn. We, therefore, propose an educational framework for integrating an AI educational chatbot based on learning science, namely Zimmerman’s SRL framework along with JOL.

To our best knowledge, the design of chatbots has focused greatly on the backend design [43], user interface [44], and improving learning [36,45,46]. For example, Winkler and Söllner [46] reviewed the application of chatbots in improving student learning outcomes and suggested that chatbots could support individuals’ development of procedural knowledge and competency skills such as information searching, data collection, decision making, and analytical thinking.

Specifically for learning improvement, since the rise of OpenAI’s ChatGPT, there have been several emerging calls for examining how ChatGPT can be integrated pedagogically to support the SRL process. As Dwivedi et al. [47] write, “Applications like ChatGPT can be used either as a companion or tutor, [or] to support … self-regulated learning” [47] (p. 9). A recent case study also found that the feedback ChatGPT gave on student assignments is comparable to that of a human instructor [48]. Lin and Chang’s study [49] and Lin’s doctoral dissertation have also provided a clear bulletin for designing and implementing chatbots for educational purposes and documented several interaction pathways leading to effective peer reviewing activities and writing achievement [49]. Similarly, Zhu et al. [50] argued that “self-regulated learning has been widely promoted in educational settings, the provision of personalized support to sustain self-regulated learning is crucial but inadequately accomplished” (p. 146). Therefore, we are addressing the emerging need to integrate chatbots in education and the question of how chatbots can be developed or used to support learners’ SRL activities. This is why the fundamental educational principles of pedagogical AI chatbots need to be established. To do so, we have identified several instructional dimensions that we argue should be featured in the design of educational chatbots to facilitate effective learning for students or at least to supplement classroom instruction. These instructional dimensions include (1) goal setting, (2) feedback and self-assessment, and (3) personalization and adaptation.

3.2. Goal Setting and Prompting

Goals and motivation are two highly correlated constructs in education. These two instructional dimensions can guide the design of educational chatbots. In the field of education, the three terms, learning goals, learning objectives, and learning outcomes, have been used interchangeably, though with some conceptual differences [51]. Prøitz [51] mentioned: “the two terms [learning outcomes and learning objectives] are often intertwined and interconnected in the literature makes it difficult to distinguish between them” (p. 122). In the context of SRL and AI chatbots, we argue that the three are inherently similar to some extent. It is because, according to Burke [52] and Prøitz [51], these teacher-written statements contain learning orientation and purpose orientation that manifest their expectations from students. Therefore, these orientations can serve as process-oriented or result-oriented goals that guide learners’ strategies and SRL activities.

In goal-setting theory, learning goals (objectives or outcomes) that are process-oriented, specific, challenging, and achievable can motivate students and serve SRL functions. For instance, Locke and Latham [53] explained that goals may help shape students’ strategies to tackle a learning task, monitor their progress in a studying session, and increase engagement and motivation. Let us take a scenario. Imagine that a student needs to write a report. This result-oriented goal can give rise to two process-based sub-goals: first, they want to synthesize information A, B, and C during a writing session. Secondly, they want to generate an argument. In order to synthesize information, the student may need to apply some strategies. The student’s synthesis goal can drive the student to use some process-oriented writing strategies, such as classifying, listing, or comparing and contrasting. To generate an argument, the student may need to find out what is missing in the synthesized information or what is common among the syntheses. Thus, this example demonstrates that goals articulate two dimensions of learning: the focus of attention and resources needed to achieve the result. As Leake and Ram [54] argued, “a goal-driven learner determines what to learn by reasoning about the information it needs, and determines how to learn by reasoning about the relative merits of alternative learning strategies in the current circumstances” (p. 389).

SRL also consists of learners exercising their metacognitive control and metacognitive monitoring. These two processes are guided by pre-determined result-oriented outcomes: objectives or goals [8,42,55,56,57]. SRL researchers generally agree that goals can trigger several SRL events and metacognitive activities that should be investigated as they occur during learning and problem-solving activities [55,58,59]. Moreover, Paans et al.’s study [60] argues that learner-initiated SRL activities occurring at the micro-level and macro-level can be developed and occur simultaneously, including goal setting or knowledge acquisition. It implies that, in certain pedagogical tasks or problem-solving environments, such as working with chatbots, students need to identify goals by prompting the AI chatbot in a learning session corresponding to the tasks.

Additionally, goals can function as benchmarks by which learners assess the efficacy of their learning endeavors. When students possess the capacity to monitor their progress toward these goals, they are more likely to sustain their motivation and active involvement in the learning process [61]. Within the context of AI chatbot interaction, consider a scenario where a student instructs a chatbot to execute certain actions, such as synthesizing a given set of information. Subsequently, the chatbot provides the requested synthesis, allowing students to evaluate its conformity with their expectations and the learning context. Within Zimmerman’s framework of Self-Regulated Learning, this process aligns with the stages of emulation and self-control. Once a student prompts the chatbot for a response, they continuously monitor and self-assess its quality, subsequently re-prompting the chatbot for further actions. This bidirectional interaction transpires within the stages of emulation and self-control, as students actively participate in a cycle of prompts, monitoring and adjustments, and subsequent re-prompts, persisting until they attain a satisfactory outcome. Yet we have to acknowledge that the interaction assumes student autonomy, in which students keep prompting the chatbot and relying on the chatbot’s output. A more sophisticated way of student–chatbot interaction is bidirectional, where a chatbot is capable of reverse prompting, a concept which we will explore further in the next section.

We believe it is crucial to teach students how to effectively prompt a generative AI chatbot. As we mentioned earlier, prompts are the goals that students set for the AI chatbot, but often students just want the tool’s output without engaging in the actual process. To better understand this, we can break prompts down into two types, cognitive prompts and metacognitive prompts, by drawing on Bloom’s Taxonomy [62]. Cognitive prompts are goal-oriented, strategic inquiries that learners feed into a generative AI chatbot. Metacognitive prompts, on the other hand, are intended to foster learners’ learning judgement and metacognitive growth. For example, in the case of a writing class, a cognitive prompt could be, “Help me grasp the concept of a thesis statement”. An outcome-based prompt might be, “Revise the following sentence for clarity”. In the case of metacognitive prompts, a teacher could encourage the students to reflect on their essays by asking the AI chatbot, “Evaluate my essay and suggest improvements”. The AI chatbot may function as a writing consultant that provides feedback. Undeniably, students might take a quicker route by framing the request in a more “outcome-oriented” way, such as asking the AI, “Refine and improve this essay”. This is where the educator’s role comes in to explain the ethics of conduct and its associated consequences. Self-regulated learners stand as ethical AI users who care about the learning journey and value more than just the end product. In summary, goals, outcomes, or objectives can be utilized as defined learning pathways (also known as prompts) when students interact with chatbots. Students defining goals while working with a chatbot can be seen as setting a parameter for their learning. This goal defining (or prompting) helps students clearly understand what they are expected to achieve during a learning session and facilitates self-assessment of their work while working with a chatbot.
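The prompt types discussed above can be written down as a small data structure. The following is only an illustrative sketch: the class and field names are hypothetical, and the assignment of each example prompt to a Bloom level is our reading of the examples in the text, not a validated coding scheme.

```python
from dataclasses import dataclass
from enum import Enum


class PromptType(Enum):
    COGNITIVE = "cognitive"
    METACOGNITIVE = "metacognitive"


class Orientation(Enum):
    PROCESS = "process-based"
    OUTCOME = "outcome-based"


@dataclass(frozen=True)
class PromptExample:
    """One student prompt, labeled along the two dimensions used in the text."""
    text: str
    prompt_type: PromptType
    orientation: Orientation
    bloom_level: str  # assumed mapping to a Bloom level, for illustration only


# Example prompts taken from the paragraph above; the labels are an assumption.
EXAMPLES = [
    PromptExample("Help me grasp the concept of a thesis statement",
                  PromptType.COGNITIVE, Orientation.PROCESS, "Understand"),
    PromptExample("Revise the following sentence for clarity",
                  PromptType.COGNITIVE, Orientation.OUTCOME, "Create"),
    PromptExample("Evaluate my essay and suggest improvements",
                  PromptType.METACOGNITIVE, Orientation.PROCESS, "Evaluate"),
]


def process_based(examples):
    """Return only the prompts that keep the student engaged in the process."""
    return [e for e in examples if e.orientation is Orientation.PROCESS]
```

A teacher-facing tool could, for instance, use such labels to show students which of their prompts were process-based and which were outcome-based.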

3.3. Feedback and Self-Assessment Mechanism

Self-assessment is a process in which individuals evaluate their learning, performance, and understanding of a particular subject or skill. Research has shown that self-assessment can positively impact learning outcomes, motivation, and metacognitive skills [63,64,65]. Specifically, self-assessment can help learners identify their strengths and weaknesses, re-set goals, and monitor their progress toward achieving those goals. Self-assessment, grounded in JOL, involves learners reflecting on their learning and making judgements about their level of understanding and progress [66]. Self-assessment is also a component of SRL, as it allows learners to monitor their progress and adjust their learning strategies or learning goals as needed [67]. Self-assessment can therefore be a feature of a chatbot regardless of whether learners employ it to self-assess their learning, or it can be automatically promoted by the chatbot system to guide students to self-assess.

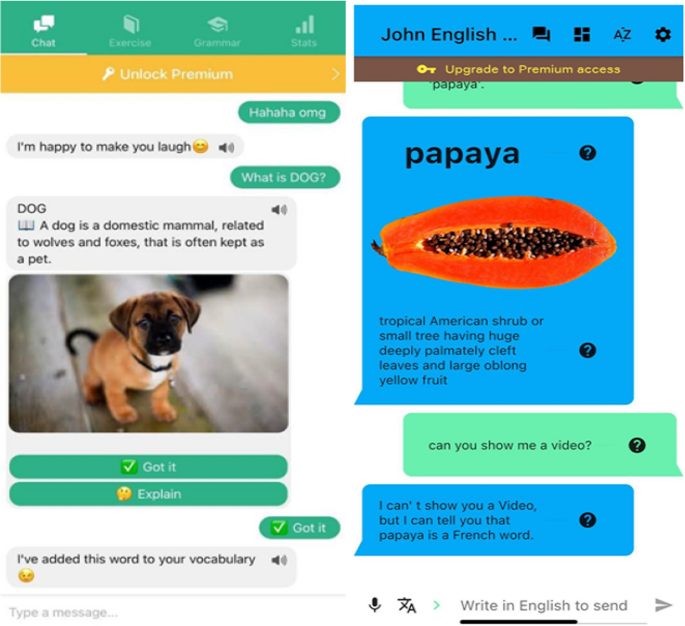

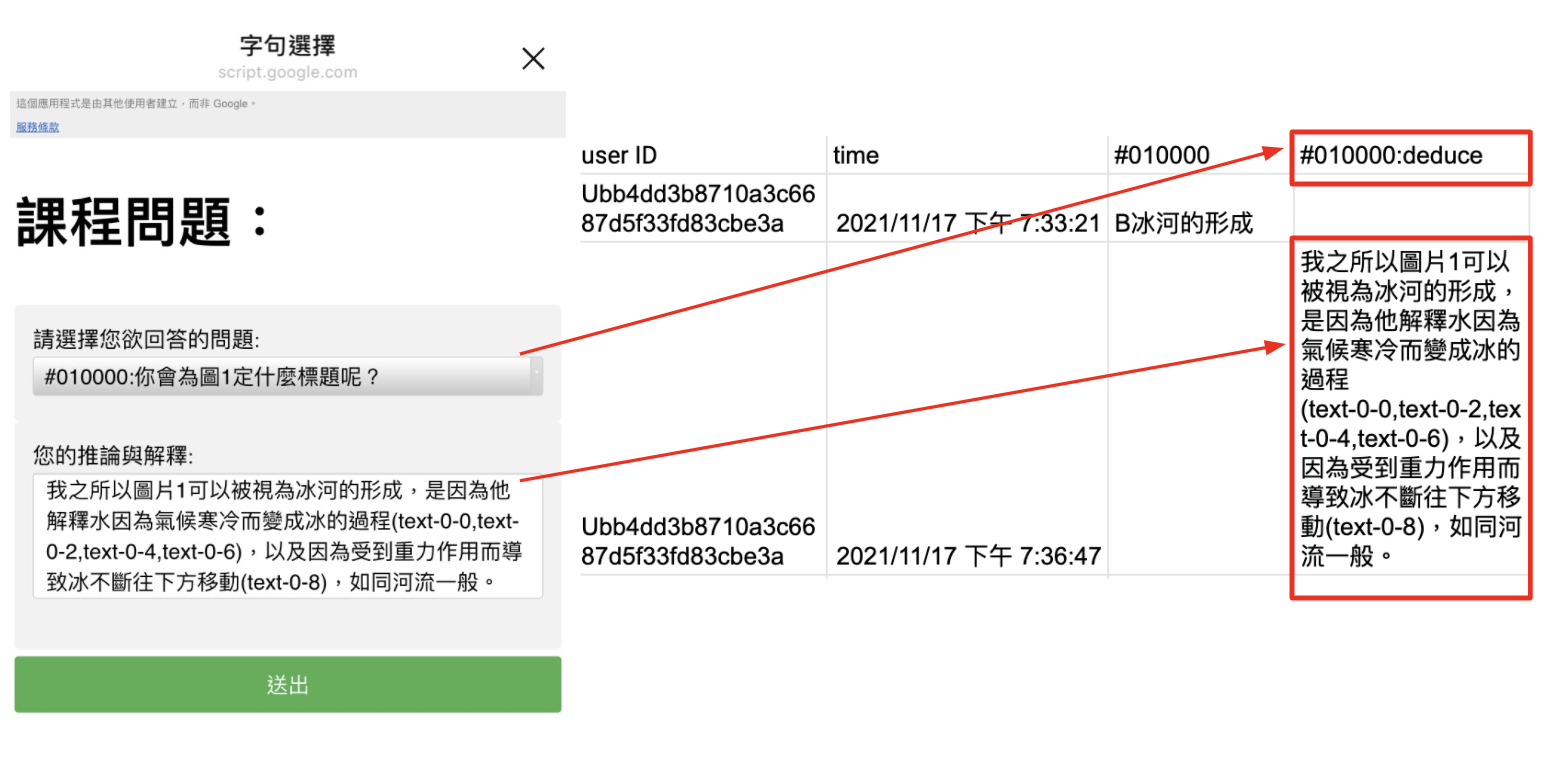

However, so far, we have found that the current AI-powered chatbots, like ChatGPT, have limited capabilities in reverse prompting when used for educational purposes. Reverse prompting functions as guiding questions after students prompt the chatbot. As suggested in the last section, after learners identify their prompts and goals, chatbots can ask learners to reflect on their learning and provide “reverse prompts” for self-assessment. The concept of reverse prompts is similar to reciprocal questioning. Reciprocal questioning is a group-based process in which two students pose their own questions for each other to answer [68]. This method has been used mainly to facilitate the reading process for emergent readers [69,70,71]. For instance, a chatbot could ask a learner an explanatory question like “Now, I give you two thesis statements you requested. Can you provide more examples of the relationship between the two statements of X and Y?” or “Can you provide more details on the requested speech or action?” as well as reflective questions like “How do you generalize this principle to similar cases?” It could also ask learners to rate their understanding of a particular concept on a scale from 1 to 5 or to identify areas where they need more practice. We mock up an example of such a conversation in Figure 2.

The chatbot could then provide feedback and resources to help the learner improve in areas with potential knowledge gaps and low confidence levels. In this way, chatbots can be an effective tool for encouraging student self-assessment and SRL. A great body of evidence shows that the integrative effect of self-assessment and just-in-time feedback goes beyond understanding and learning new concepts and skills [72]. Goal-oriented and criteria-based self-assessment (e.g., self-explanation and reflection prompts) allows the learner to identify the knowledge gaps and misconceptions that often lead to incorrect conceptions or cognitive conflicts. Just-in-time feedback (i.e., the information provided by an agent/tutor in response to the diagnosed gap) can then act as a knowledge repair mechanism if the provided information is perceived as clear, logical, coherent, and applicable by the learner [73].
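The reverse-prompting cycle described above (answer, reflective question, self-rating, feedback) can be sketched in a few lines. This is a minimal illustration under stated assumptions: the function names, the wording of the follow-up messages, and the cut-off `LOW_CONFIDENCE_THRESHOLD` are hypothetical and are not part of any existing chatbot.

```python
from dataclasses import dataclass

# Hypothetical cut-off: ratings at or below this value trigger extra support.
LOW_CONFIDENCE_THRESHOLD = 2


@dataclass
class Turn:
    answer: str          # what the chatbot returns for the student's prompt
    reverse_prompt: str  # the guiding question the chatbot asks back


def respond_with_reverse_prompt(answer: str, concept: str) -> Turn:
    """Attach a reflective question and a 1-5 self-rating request to an answer."""
    question = (
        "How do you generalize this principle to similar cases? "
        f"On a scale from 1 to 5, how well do you understand {concept}?"
    )
    return Turn(answer=answer, reverse_prompt=question)


def follow_up(rating: int, concept: str) -> str:
    """Choose the next chatbot move from the student's self-rating."""
    if not 1 <= rating <= 5:
        raise ValueError("rating must be between 1 and 5")
    if rating <= LOW_CONFIDENCE_THRESHOLD:
        # Low self-rated understanding: offer feedback and resources.
        return f"Here are some resources and practice items on {concept}."
    return "Good. Which goal would you like to work on next?"
```

In this sketch the student's self-rating, rather than the chatbot's own judgement, decides whether additional resources are offered, which keeps the judgement of learning with the learner.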

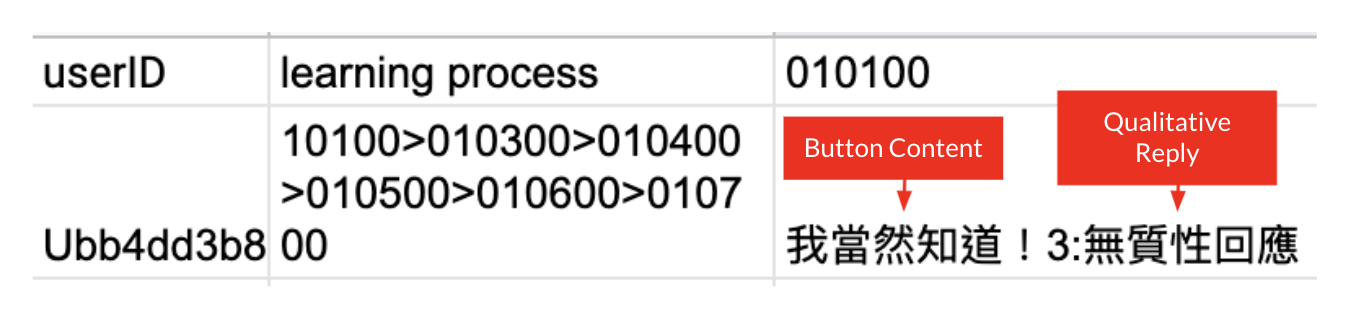

Based on Table 1 and the previous section on prompting and reverse prompting, teachers can also focus on facilitating learning judgement while teaching students to work with an AI chatbot. However, we propose that reverse prompting from an AI chatbot is also important so that educational values and SRL can be achieved.

In terms of Zimmerman’s framework [8], a chatbot is a form of social assistance that students can obtain. If the chatbot can provide reverse prompts that guide thinking, reflection, and self-assessment, students can then execute strategies that fit their goals and knowledge level. When learners engage in self-assessment activities, they are engaging in the process of making judgments about their learning. Throughout self-assessment, learners develop an awareness of their strengths and weaknesses, which can help them modify or set new goals. If they are satisfied with their goals, they can use their goals to monitor their progress and adjust their strategies as needed. This process also aligns with the self-control level of Zimmerman’s SRL model. At this phase, students can decide whether to accept what the chatbot produces as is or to revise their own work by implementing the suggestions that the chatbot provides. For example, a chatbot could reverse-prompt learners to describe their strategies for solving a particular problem or to reflect on what they have learned from a particular activity. This type of reflection can help learners become more aware of their learning processes and develop more effective strategies for learning [74,75]. Thus, the reverse interaction from chatbot to students provides an opportunity for developing self-awareness because learners become more self-directed or self-regulated and independent in their learning while working with the chatbot, which can lead to improved academic performance and overall success. Furthermore, by incorporating self-assessment prompts into educational chatbots, learners can receive immediate feedback and support as they engage in the self-assessment process, which can help to develop their metacognitive skills further and promote deeper learning.

3.4. Facilitating Self-Regulation: Personalization and Adaptation

Personalization and adaptation are unique characteristics of learning technology. When students engage with an LMS, the LMS platform inherently captures and records their behaviors and interactions. This can encompass actions such as page views, time allocation per page, link traversal, and page-specific operations. Even the act of composing content within a discussion forum can offer comprehensive trace data, such as temporal markers indicating the writing and conclusion of a discussion forum post, syntactic structures employed, discernible genre attributes, and lexical choices. This collection of traceable data forms the foundation for the subsequent generation of comprehensive learning analytics for learners, being manifested as either textual reports or information visualizations, both encapsulating a synthesis of pertinent insights regarding the students’ learning trajectories [76]. These fine-grained analytical outputs can fulfill a key role in furnishing students with a holistic overview of how they learn and what they learn, fostering opportunities for reflection, evaluation, and informed refinement of their learning tactics. Therefore, by using data-driven insights and algorithms described above, chatbots can be tailored to the individual needs of learners, providing personalized feedback and guidance that supports their unique learning goals and preferences. However, we believe that the current AI-powered chatbot is inadequate in education; in particular, chatbots thus far lack capabilities for learning personalization and adaptation. A chatbot, like ChatGPT, often acts as a knowledge giver unless a learner knows how to feed the prompts. Our framework repositions the role of educational AI chatbots from knowledge providers to facilitators in the learning process. By encouraging students to initiate interactions through prompts, the chatbot assumes the role of a learning partner that progressively understands the students’ requirements. 
As outlined in the preceding section, the chatbot possesses the capability to tactfully prompt learners when necessary, offering guidance and directions instead of outright solutions based on the given prompts.
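To make the idea of turning trace data into a textual learning analytics report more concrete, the following sketch aggregates page-view events per page. It is an assumption-laden illustration: the `TraceEvent` record and its fields are hypothetical and do not correspond to the export format of any particular LMS.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TraceEvent:
    """One hypothetical LMS trace record: a page view and its duration."""
    page: str
    seconds: float


def summarize(events):
    """Aggregate the number of views and total time spent for each page."""
    views = defaultdict(int)
    totals = defaultdict(float)
    for event in events:
        views[event.page] += 1
        totals[event.page] += event.seconds
    return {page: {"views": views[page], "seconds": totals[page]} for page in views}


def text_report(summary):
    """Render the summary as a short textual report, one line per page."""
    lines = [
        f"{page}: {stats['views']} view(s), {stats['seconds']:.0f} s in total"
        for page, stats in sorted(summary.items())
    ]
    return "\n".join(lines)
```

The same summary could equally feed a visualization; a plain-text report is used here only to keep the sketch self-contained.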

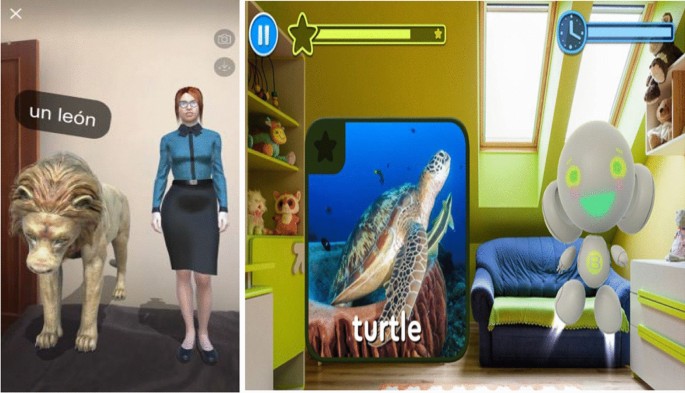

Learner adaptation can be effectively facilitated through the utilization of learning analytics, which serves as a valuable method for collecting learner data and enhancing overall learning outcomes [75]. Chatbots have become more practical and intelligent by improving natural language, data mining, and machine-learning techniques. The chatbot could use the trace data collected on LMS to provide students with the best course of action. Data that the chatbot can collect from the LMS can include analysis of students’ time spent on a page, students’ clicking behaviors, deadlines set by the instructors, or prompts (goals) initiated by the students. For example, a student has not viewed their module assignment pages on a learning management system for a long time, but they request the chatbot to generate a sample essay for their assignments. Instead of giving the direct output of a sample essay, the chatbot can direct the student to view the assignment pages more closely (i.e., “It looks like you haven’t spent enough time on this page, I suggest you review this page before attempting to ask me to give you an essay”), as shown in Figure 3. In this way, learning analytics can also help learners take ownership of their learning by providing real-time feedback on their progress and performance. By giving learners access to their learning analytics, educators can empower students to actively learn and make informed decisions about improving their performance [75,77]. An example is shown in Figure 4. Therefore, through personalized and adaptive chatbot interactions, learners can receive feedback and resources that are tailored to their specific needs and performance, helping to improve their metacognitive skills and ultimately enhancing their overall learning outcomes.
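The assignment-page scenario above can be expressed as a simple rule. The sketch below is hypothetical: the request label `"sample_essay"`, the threshold `MIN_SECONDS_ON_ASSIGNMENT`, and the function name are assumptions made for illustration, and a real system would need an empirically justified criterion.

```python
# Hypothetical threshold for "enough" time on the assignment page.
MIN_SECONDS_ON_ASSIGNMENT = 120

REDIRECT_MESSAGE = (
    "It looks like you haven't spent enough time on this page, "
    "I suggest you review this page before attempting to ask me "
    "to give you an essay"
)


def respond(request_kind: str, seconds_on_assignment_page: float) -> str:
    """Redirect outcome-based requests when the trace data suggest low engagement."""
    wants_essay = request_kind == "sample_essay"
    if wants_essay and seconds_on_assignment_page < MIN_SECONDS_ON_ASSIGNMENT:
        # Guide the student back to the material instead of producing the output.
        return REDIRECT_MESSAGE
    return "Let's work on your request together."
```

The design choice here is that the chatbot withholds the outcome-based output only for a specific request type and only when the trace data indicate little engagement; all other requests pass through unchanged.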

4. Limitations

Lo’s [78] comprehensive rapid review indicates three primary limitations inherent in generative AI tools: 1. biased information, 2. constrained access to current knowledge, and 3. propensity for disseminating false information [78]. Baidoo-Anu and Ansa [79] underscore that the efficacy of generative AI tools is intricately linked to the training data that were fed into the tool, wherein the composition of training data can inadvertently contain biases that subsequently manifest in the AI-generated content, potentially compromising the neutrality, objectivity, and reliability of information imparted to student users. Moreover, the precision and accuracy of the information generated by generative AI tools further emerge as a key concern. Scholarly investigations have discovered several instances where content produced by ChatGPT has demonstrated inaccuracy and spuriousness, particularly when tasked with generating citations for academic papers [79,80].

Amidst these acknowledged limitations, our position leans toward an emphasis on students’ educational use of these tools, transcending the preoccupation with the tools’ inherent characteristics of bias, inaccuracy, or falsity. Based on our proposal, we want to develop students’ capacity for self-regulation and discernment when evaluating received information. Furthermore, educators bear an important role in guiding students on harnessing the potential of generative AI tools to enhance the learning process, rather than treating these tools as sources of information akin to a textbook. This is why we integrate Zimmerman’s SRL model, illustrating how the judicious incorporation of generative AI tools can foster students’ self-regulation, synergizing with the guidance of educators and the efficacy of instructional technology design.

5. Concluding Remarks

This paper explores how educational chatbots, or so-called conversational agents, can support student self-regulatory processes and self-evaluation in the learning process. As shown in Figure 5 below, drawing on Zimmerman’s SRL framework, we postulate that chatbot designers should consider pedagogical principles, such as goal setting and planning, self-assessment, and personalization, to ensure that the chatbot effectively supports student learning and improves academic performance. We suggest that such a chatbot could provide personalized feedback to students on their understanding of course material and promote self-assessment by prompting them to reflect on their learning process. We also emphasize the importance of establishing the pedagogical functions of chatbots to fit the actual purposes of education and supplement teacher instruction. The paper provides examples of successful implementations of educational chatbots that can inform SRL process as well as self-assessment and reflection based on JOL principles. Overall, this paper highlights the potential benefits of educational chatbots for personalized and interactive learning experiences while emphasizing the importance of considering pedagogical principles in their design. Educational chatbots may supplement classroom instruction by providing personalized feedback and prompting reflection on student learning progress. However, chatbot designers must carefully consider how these tools fit into existing pedagogical practices to ensure their effectiveness in supporting student learning.

Through the application of our framework, future researchers are encouraged to delve into three important topics of inquiry that can empirically validate our conceptual model. The first dimension entails scrutiny of educational principles. For instance, how can AI chatbots be designed to support learners in setting and pursuing personalized learning goals, fostering a sense of ownership over the learning process? Addressing this question involves exploring how learners can form a sense of ownership over their interactions with the AI chatbots, while working towards the learning objectives.

The second dimension involves a closer examination of the actual Self-Regulated Learning (SRL) process. This necessitates an empirical exploration of the ways AI chatbots can effectively facilitate learners’ self-regulated reflections and the honing of self-regulation skills. For example, how effective is AI’s feedback to a student’s essay and how do students develop subsequent SRL strategies to address the AI’s feedback and evaluation? Additionally, inquiries might also revolve around educators’ instructional methods in leveraging AI chatbots to not only nurture learners’ skills in interacting with the technology but also foster their self-regulatory processes. Investigating the extent to which AI chatbots can provide learning analytics as feedback that harmonizes with individual learners’ self-regulation strategies is also of significance. Moreover, ethical considerations must be taken into account when integrating AI chatbots into educational settings, ensuring the preservation of learners’ autonomy and self-regulation.

The third dimension is related to user interface research. A research endeavor could revolve around identifying which conversational interface proves the most intuitive for learners as they engage with an AI chatbot. Additionally, an inquiry might probe the extent to which the AI chatbot should engage in dialogue within educational contexts. Furthermore, delineating the circumstances under which AI chatbots should abstain from delivering outcome-based outputs to learners constitutes a worthwhile avenue of investigation. Numerous additional inquiries can be derived from our conceptual model, yet the central message that we want to deliver remains clear: Our objective is to engage educators, instructional designers, and students in the learning process while navigating in this AI world. It is important to educate students on the potential of AI chatbots to enhance their self-regulation skills while also emphasizing the importance of avoiding actions that contravene the principles of academic integrity.

Conceptualization, D.H.C. and M.P.-C.L.; writing—original draft preparation, D.H.C.; writing—review and editing, D.H.C., M.P.-C.L., S.H. and Q.Q.W.; visualization, Q.Q.W.; funding acquisition, D.H.C. All authors have read and agreed to the published version of the manuscript.

Not applicable.

Conflicts of Interest: The authors declare no conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

Figure 1. Zimmerman’s multi-level SRL Framework (adopted from Panadero [7]).

Figure 2. A mocked example of reverse prompting from a chatbot.

Figure 3. An example of a chatbot in a learning management system that supports SRL by delivering personalized feedback.

Figure 4. An example of a chatbot that supports SRL by delivering learning analytics.

Figure 5. Putting it all together: educational principles, SRL, and directionality.

Types of prompts based on Bloom’s Taxonomy [ ].

| Prompt Types | Process-Based | Outcome-Based |
|---|---|---|
| Cognitive | Understand | Create |
| Metacognitive | Evaluate | |

1. Pérez, J.Q.; Daradoumis, T.; Puig, J.M.M. Rediscovering the use of chatbots in education: A systematic literature review. Comput. Appl. Eng. Educ.; 2020; 28, pp. 1549-1565. [DOI: https://dx.doi.org/10.1002/cae.22326]

2. Smutny, P.; Schreiberova, P. Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Comput. Educ.; 2020; 151, 103862. [DOI: https://dx.doi.org/10.1016/j.compedu.2020.103862]

3. Kuhail, M.A.; Alturki, N.; Alramlawi, S.; Alhejori, K. Interacting with educational chatbots: A systematic review. Educ. Inf. Technol.; 2023; 28, pp. 973-1018. [DOI: https://dx.doi.org/10.1007/s10639-022-11177-3]

4. Okonkwo, C.W.; Ade-Ibijola, A. Chatbots applications in education: A systematic review. Comput. Educ. Artif. Intell.; 2021; 2, 100033. [DOI: https://dx.doi.org/10.1016/j.caeai.2021.100033]

5. Koriat, A. Monitoring one’s own knowledge during study: A cue-utilization approach to judgments of learning. J. Exp. Psychol. Gen.; 1997; 126, pp. 349-370. [DOI: https://dx.doi.org/10.1037/0096-3445.126.4.349]

6. Son, L.K.; Metcalfe, J. Judgments of learning: Evidence for a two-stage process. Mem. Cogn.; 2005; 33, pp. 1116-1129. [DOI: https://dx.doi.org/10.3758/BF03193217] [PubMed: https://www.ncbi.nlm.nih.gov/pubmed/16496730]

7. Panadero, E. A Review of Self-regulated Learning: Six Models and Four Directions for Research. Front. Psychol.; 2017; 8, 422. [DOI: https://dx.doi.org/10.3389/fpsyg.2017.00422] [PubMed: https://www.ncbi.nlm.nih.gov/pubmed/28503157]

8. Zimmerman, B.J. Attaining Self-Regulation. Handbook of Self-Regulation; Elsevier: Amsterdam, The Netherlands, 2000; pp. 13-39. [DOI: https://dx.doi.org/10.1016/B978-012109890-2/50031-7]

9. Baars, M.; Wijnia, L.; de Bruin, A.; Paas, F. The Relation Between Students’ Effort and Monitoring Judgments During Learning: A Meta-analysis. Educ. Psychol. Rev.; 2020; 32, pp. 979-1002. [DOI: https://dx.doi.org/10.1007/s10648-020-09569-3]

10. Leonesio, R.J.; Nelson, T.O. Do different metamemory judgments tap the same underlying aspects of memory? J. Exp. Psychol. Learn. Mem. Cogn.; 1990; 16, pp. 464-470. [DOI: https://dx.doi.org/10.1037/0278-7393.16.3.464]

11. Double, K.S.; Birney, D.P.; Walker, S.A. A meta-analysis and systematic review of reactivity to judgements of learning. Memory; 2018; 26, pp. 741-750. [DOI: https://dx.doi.org/10.1080/09658211.2017.1404111]

12. Janes, J.L.; Rivers, M.L.; Dunlosky, J. The influence of making judgments of learning on memory performance: Positive, negative, or both? Psychon. Bull. Rev.; 2018; 25, pp. 2356-2364. [DOI: https://dx.doi.org/10.3758/s13423-018-1463-4]

13. Hamzah, M.I.; Hamzah, H.; Zulkifli, H. Systematic Literature Review on the Elements of Metacognition-Based Higher Order Thinking Skills (HOTS) Teaching and Learning Modules. Sustainability; 2022; 14, 813. [DOI: https://dx.doi.org/10.3390/su14020813]

14. Veenman, M.V.J.; Van Hout-Wolters, B.H.A.M.; Afflerbach, P. Metacognition and learning: Conceptual and methodological considerations. Metacognition Learn.; 2006; 1, pp. 3-14. [DOI: https://dx.doi.org/10.1007/s11409-006-6893-0]

15. Nelson, T.; Narens, L. Why investigate metacognition. Metacognition: Knowing about Knowing; MIT Press: Cambridge, MA, USA, 1994. [DOI: https://dx.doi.org/10.7551/mitpress/4561.003.0003]

16. Tuysuzoglu, B.B.; Greene, J.A. An investigation of the role of contingent metacognitive behavior in self-regulated learning. Metacognition Learn.; 2015; 10, pp. 77-98. [DOI: https://dx.doi.org/10.1007/s11409-014-9126-y]

17. Bandura, A. Social Cognitive Theory: An Agentic Perspective. Asian J. Soc. Psychol.; 1999; 2, pp. 21-41. [DOI: https://dx.doi.org/10.1111/1467-839X.00024]

18. Bem, D.J. Self-Perception Theory. Advances in Experimental Social Psychology; Berkowitz, L., Ed.; Academic Press: Cambridge, MA, USA, 1972; Volume 6, pp. 1-62. [DOI: https://dx.doi.org/10.1016/s0065-2601(08)60024-6]

19. Abu Shawar, B.; Atwell, E. Different measurements metrics to evaluate a chatbot system. Proceedings of the Workshop on Bridging the Gap: Academic and Industrial Research in Dialog Technologies; Rochester, NY, USA, 26 April 2007; pp. 89-96. [DOI: https://dx.doi.org/10.3115/1556328.1556341]

20. Turing, A.M. Computing machinery and intelligence. Mind; 1950; 59, pp. 433-460. [DOI: https://dx.doi.org/10.1093/mind/LIX.236.433]

21. Weizenbaum, J. ELIZA—A computer program for the study of natural language communication between man and machine. Commun. ACM; 1966; 9, pp. 36-45. [DOI: https://dx.doi.org/10.1145/365153.365168]

22. Wallace, R.S. The anatomy of A.L.I.C.E. Parsing the Turing Test: Philosophical and Methodological Issues in the Quest for the Thinking Computer; Epstein, R.; Roberts, G.; Beber, G., Eds.; Springer: Dordrecht, The Netherlands, 2009; pp. 181-210. [DOI: https://dx.doi.org/10.1007/978-1-4020-6710-5_13]

23. Hwang, G.-J.; Chang, C.-Y. A review of opportunities and challenges of chatbots in education. Interact. Learn. Environ.; 2021; pp. 1-14. [DOI: https://dx.doi.org/10.1080/10494820.2021.1952615]

24. Yamada, M.; Goda, Y.; Matsukawa, H.; Hata, K.; Yasunami, S. A Computer-Supported Collaborative Learning Design for Quality Interaction. IEEE MultiMedia; 2016; 23, pp. 48-59. [DOI: https://dx.doi.org/10.1109/MMUL.2015.95]

25. Muniasamy, A.; Alasiry, A. Deep Learning: The Impact on Future eLearning. Int. J. Emerg. Technol. Learn. (iJET); 2020; 15, pp. 188-199. [DOI: https://dx.doi.org/10.3991/ijet.v15i01.11435]

26. Bendig, E.; Erb, B.; Schulze-Thuesing, L.; Baumeister, H. The Next Generation: Chatbots in Clinical Psychology and Psychotherapy to Foster Mental Health—A Scoping Review. Verhaltenstherapie; 2022; 32, pp. 64-76. [DOI: https://dx.doi.org/10.1159/000501812]

27. Kennedy, C.M.; Powell, J.; Payne, T.H.; Ainsworth, J.; Boyd, A.; Buchan, I. Active Assistance Technology for Health-Related Behavior Change: An Interdisciplinary Review. J. Med. Internet Res.; 2012; 14, e80. [DOI: https://dx.doi.org/10.2196/jmir.1893]

28. Poncette, A.-S.; Rojas, P.-D.; Hofferbert, J.; Sosa, A.V.; Balzer, F.; Braune, K. Hackathons as Stepping Stones in Health Care Innovation: Case Study with Systematic Recommendations. J. Med. Internet Res.; 2020; 22, e17004. [DOI: https://dx.doi.org/10.2196/17004] [PubMed: https://www.ncbi.nlm.nih.gov/pubmed/32207691]

29. Ferrell, O.C.; Ferrell, L. Technology Challenges and Opportunities Facing Marketing Education. Mark. Educ. Rev.; 2020; 30, pp. 3-14. [DOI: https://dx.doi.org/10.1080/10528008.2020.1718510]

30. Behera, R.K.; Bala, P.K.; Ray, A. Cognitive Chatbot for Personalised Contextual Customer Service: Behind the Scene and beyond the Hype. Inf. Syst. Front.; 2021; pp. 1-21. [DOI: https://dx.doi.org/10.1007/s10796-021-10168-y]

31. Crolic, C.; Thomaz, F.; Hadi, R.; Stephen, A.T. Blame the Bot: Anthropomorphism and Anger in Customer–Chatbot Interactions. J. Mark.; 2022; 86, pp. 132-148. [DOI: https://dx.doi.org/10.1177/00222429211045687]

32. Clarizia, F.; Colace, F.; Lombardi, M.; Pascale, F.; Santaniello, D. Chatbot: An education support system for student. CSS 2018: Cyberspace Safety and Security; Castiglione, A.; Pop, F.; Ficco, M.; Palmieri, F., Eds.; Lecture Notes in Computer Science Book Series; Springer International Publishing: Cham, Switzerland, 2018; Volume 11161, pp. 291-302. [DOI: https://dx.doi.org/10.1007/978-3-030-01689-0_23]

33. Firat, M. What ChatGPT means for universities: Perceptions of scholars and students. J. Appl. Learn. Teach.; 2023; 6, pp. 57-63. [DOI: https://dx.doi.org/10.37074/jalt.2023.6.1.22]

34. Kim, H.-S.; Kim, N.Y. Effects of AI chatbots on EFL students’ communication skills. Commun. Ski.; 2021; 21, pp. 712-734.

35. Hill, J.; Ford, W.R.; Farreras, I.G. Real conversations with artificial intelligence: A comparison between human–human online conversations and human–chatbot conversations. Comput. Hum. Behav.; 2015; 49, pp. 245-250. [DOI: https://dx.doi.org/10.1016/j.chb.2015.02.026]

36. Wu, E.H.-K.; Lin, C.-H.; Ou, Y.-Y.; Liu, C.-Z.; Wang, W.-K.; Chao, C.-Y. Advantages and Constraints of a Hybrid Model K-12 E-Learning Assistant Chatbot. IEEE Access; 2020; 8, pp. 77788-77801. [DOI: https://dx.doi.org/10.1109/ACCESS.2020.2988252]

37. Brandtzaeg, P.B.; Følstad, A. Why people use chatbots. INSCI 2017: Internet Science; Kompatsiaris, I.; Cave, J.; Satsiou, A.; Carle, G.; Passani, A.; Kontopoulos, E.; Diplaris, S.; McMillan, D., Eds.; Lecture Notes in Computer Science Book Series; Springer International Publishing: Cham, Switzerland, 2017; Volume 10673, pp. 377-392. [DOI: https://dx.doi.org/10.1007/978-3-319-70284-1_30]

38. Deng, X.; Yu, Z. A Meta-Analysis and Systematic Review of the Effect of Chatbot Technology Use in Sustainable Education. Sustainability; 2023; 15, 2940. [DOI: https://dx.doi.org/10.3390/su15042940]

39. de Quincey, E.; Briggs, C.; Kyriacou, T.; Waller, R. Student Centred Design of a Learning Analytics System. Proceedings of the 9th International Conference on Learning Analytics & Knowledge; Tempe, AZ, USA, 4 March 2019; pp. 353-362. [DOI: https://dx.doi.org/10.1145/3303772.3303793]

40. Hattie, J. The black box of tertiary assessment: An impending revolution. Tertiary Assessment & Higher Education Student Outcomes: Policy, Practice & Research; Ako Aotearoa: Wellington, New Zealand, 2009; pp. 259-275.

41. Wisniewski, B.; Zierer, K.; Hattie, J. The Power of Feedback Revisited: A Meta-Analysis of Educational Feedback Research. Front. Psychol.; 2020; 10, 3087. [DOI: https://dx.doi.org/10.3389/fpsyg.2019.03087]

42. Winne, P.H. Cognition and metacognition within self-regulated learning. Handbook of Self-Regulation of Learning and Performance; 2nd ed.; Routledge: London, UK, 2017.

43. Serban, I.V.; Sankar, C.; Germain, M.; Zhang, S.; Lin, Z.; Subramanian, S.; Kim, T.; Pieper, M.; Chandar, S.; Ke, N.R. et al. A deep reinforcement learning chatbot. arXiv; 2017; arXiv:1709.02349.

44. Shneiderman, B.; Plaisant, C. Designing the User Interface: Strategies for Effective Human-Computer Interaction; 4th ed.; Pearson: Boston, MA, USA; Addison Wesley: Hoboken, NJ, USA, 2004.

45. Abbasi, S.; Kazi, H. Measuring effectiveness of learning chatbot systems on student’s learning outcome and memory retention. Asian J. Appl. Sci. Eng.; 2014; 3, pp. 57-66. [DOI: https://dx.doi.org/10.15590/ajase/2014/v3i7/53576]

46. Winkler, R.; Soellner, M. Unleashing the Potential of Chatbots in Education: A State-Of-The-Art Analysis. Acad. Manag. Proc.; 2018; 2018, 15903. [DOI: https://dx.doi.org/10.5465/AMBPP.2018.15903abstract]

47. Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M. et al. Opinion Paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag.; 2023; 71, 102642. [DOI: https://dx.doi.org/10.1016/j.ijinfomgt.2023.102642]

48. Dai, W.; Lin, J.; Jin, F.; Li, T.; Tsai, Y.S.; Gasevic, D.; Chen, G. Can large language models provide feedback to students? A case study on ChatGPT. 2023; preprint. [DOI: https://dx.doi.org/10.35542/osf.io/hcgzj]

49. Lin, M.P.-C.; Chang, D. Enhancing post-secondary writers’ writing skills with a Chatbot: A mixed-method classroom study. J. Educ. Technol. Soc.; 2020; 23, pp. 78-92.

50. Zhu, C.; Sun, M.; Luo, J.; Li, T.; Wang, M. How to harness the potential of ChatGPT in education? Knowl. Manag. E-Learn.; 2023; 15, pp. 133-152. [DOI: https://dx.doi.org/10.34105/j.kmel.2023.15.008]

51. Prøitz, T.S. Learning outcomes: What are they? Who defines them? When and where are they defined? Educ. Assess. Eval. Account.; 2010; 22, pp. 119-137. [DOI: https://dx.doi.org/10.1007/s11092-010-9097-8]