
How to Write a Great Hypothesis

Hypothesis Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.


- The Scientific Method

Hypothesis Format

Falsifiability of a hypothesis, operational definitions, types of hypotheses, hypotheses examples.

- Collecting Data

Frequently Asked Questions

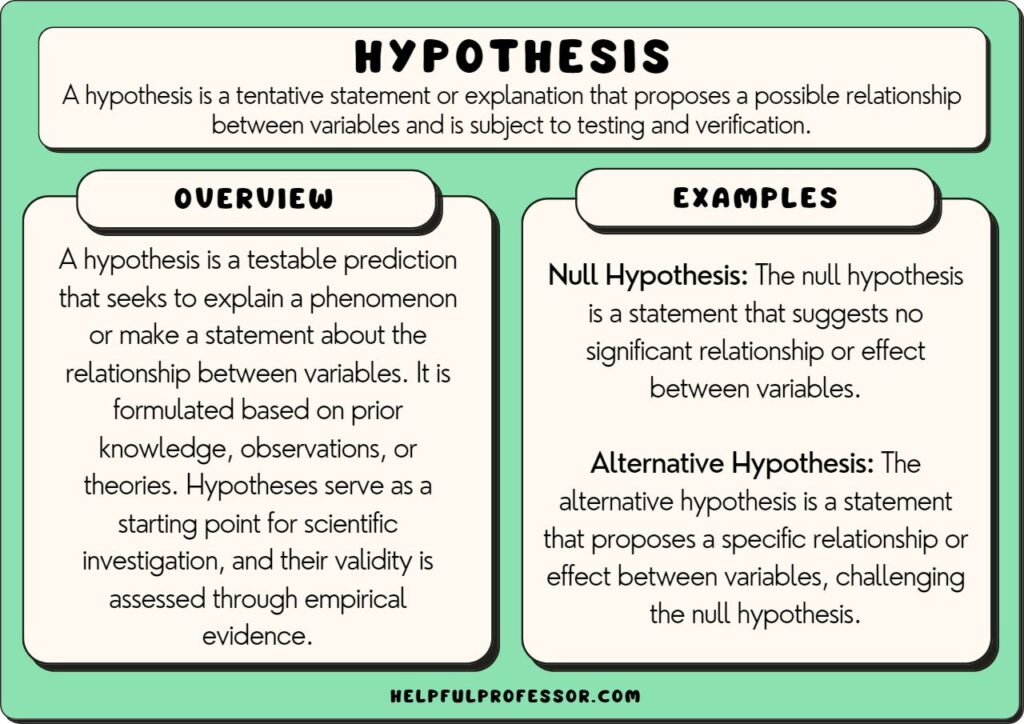

A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study.

For example, a study designed to look at the relationship between sleep deprivation and test performance might have a hypothesis that states: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question which is then explored through background research. It is only at this point that researchers begin to develop a testable hypothesis. Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore a number of factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

Researchers often draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of folk wisdom that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read. Many authors will suggest questions that still need to be explored.

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

In the scientific method, falsifiability is an important part of any valid hypothesis. In order to test a claim scientifically, it must be possible that the claim could be proven false.

Students sometimes confuse falsifiability with the idea that a claim is false, which is not the case. Falsifiability means that if a claim were false, it would be possible to demonstrate that it is false.

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. The researcher must also define exactly how the variable will be manipulated and measured in the study; this is known as an operational definition.

For example, a researcher might operationally define the variable "test anxiety" as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs as measured by time.

These precise descriptions are important because many things can be measured in a number of different ways. One of the basic principles of any type of scientific research is that the results must be replicable. By clearly detailing the specifics of how the variables were measured and manipulated, other researchers can better understand the results and repeat the study if needed.

Some variables are more difficult than others to define. How would you operationally define a variable such as aggression? For obvious ethical reasons, researchers cannot create a situation in which a person behaves aggressively toward others.

In order to measure this variable, the researcher must devise a measurement that assesses aggressive behavior without harming other people. In this situation, the researcher might utilize a simulated task to measure aggressiveness.

Hypothesis Checklist

- Does your hypothesis focus on something that you can actually test?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate the variables?

- Can your hypothesis be tested without violating ethical standards?

The hypothesis you use will depend on what you are investigating and hoping to find. Some of the main types of hypotheses that you might use include:

- Simple hypothesis: This type of hypothesis suggests that there is a relationship between one independent variable and one dependent variable.

- Complex hypothesis: This type of hypothesis suggests a relationship between three or more variables, such as two independent variables and a dependent variable.

- Null hypothesis: This hypothesis suggests no relationship exists between two or more variables.

- Alternative hypothesis: This hypothesis states the opposite of the null hypothesis.

- Statistical hypothesis: This hypothesis uses statistical analysis to evaluate a representative sample of the population and then generalizes the findings to the larger group.

- Logical hypothesis: This hypothesis assumes a relationship between variables without collecting data or evidence.

A hypothesis often follows a basic format of "If {this happens} then {this will happen}." One way to structure your hypothesis is to describe what will happen to the dependent variable if you change the independent variable.

The basic format might be: "If {these changes are made to a certain independent variable}, then we will observe {a change in a specific dependent variable}."
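Because this format is a fill-in-the-blank template, it can be sketched in a few lines of Python. This is a minimal sketch, not a formal method. The function name `format_hypothesis` and its two parameters are hypothetical. It assumes you already have a short phrase for the change to the independent variable and another for the expected change in the dependent variable.

```python
def format_hypothesis(iv_change: str, dv_change: str) -> str:
    """Fill the basic 'If ..., then ...' hypothesis template (hypothetical helper).

    iv_change: the change made to the independent variable (IV).
    dv_change: the change expected in the dependent variable (DV).
    """
    iv, dv = iv_change.strip(), dv_change.strip()
    if not iv or not dv:
        # A testable hypothesis needs both an independent and a dependent variable.
        raise ValueError("Both an independent and a dependent variable are required.")
    return f"If {iv}, then we will observe {dv}."


# Example built from the breakfast hypothesis in this article.
print(format_hypothesis(
    "students eat breakfast before a math exam",
    "higher scores on the exam",
))
```

The template only checks that both variables are present. It cannot tell you whether the variables are operationally defined or whether the prediction is falsifiable, so the checklist questions in this article still apply.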

A few examples of simple hypotheses:

- "Students who eat breakfast will perform better on a math exam than students who do not eat breakfast."

- "Students who experience test anxiety before an English exam will get lower scores than students who do not experience test anxiety."

- "Motorists who talk on the phone while driving will be more likely to make errors on a driving course than those who do not talk on the phone."

Examples of a complex hypothesis include:

- "People with high-sugar diets and sedentary activity levels are more likely to develop depression."

- "Younger people who are regularly exposed to green, outdoor areas have better subjective well-being than older adults who have limited exposure to green spaces."

Examples of a null hypothesis include:

- "Children who receive a new reading intervention will have scores no different than students who do not receive the intervention."

- "There will be no difference in scores on a memory recall task between children and adults."

Examples of an alternative hypothesis:

- "Children who receive a new reading intervention will perform better than students who did not receive the intervention."

- "Adults will perform better on a memory task than children."

Collecting Data on Your Hypothesis

Once a researcher has formed a testable hypothesis, the next step is to select a research design and start collecting data. The research method depends largely on exactly what they are studying. There are two basic types of research methods: descriptive research and experimental research.

Descriptive Research Methods

Descriptive research methods such as case studies, naturalistic observations, and surveys are often used when it would be impossible or difficult to conduct an experiment. These methods are best used to describe different aspects of a behavior or psychological phenomenon.

Once a researcher has collected data using descriptive methods, a correlational study can then be used to look at how the variables are related. This type of research method might be used to investigate a hypothesis that is difficult to test experimentally.

Experimental Research Methods

Experimental methods are used to demonstrate causal relationships between variables. In an experiment, the researcher systematically manipulates a variable of interest (known as the independent variable) and measures the effect on another variable (known as the dependent variable).

Unlike correlational studies, which can only be used to determine if there is a relationship between two variables, experimental methods can be used to determine the actual nature of the relationship—whether changes in one variable actually cause another to change.

A Word From Verywell

The hypothesis is a critical part of any scientific exploration. It represents what researchers expect to find in a study or experiment. In situations where the hypothesis is unsupported by the research, the research still has value. Such research helps us better understand how different aspects of the natural world relate to one another. It also helps us develop new hypotheses that can then be tested in the future.

Some examples of how to write a hypothesis include:

- "Staying up late will lead to worse test performance the next day."

- "People who consume one apple each day will visit the doctor fewer times each year."

- "Breaking study sessions up into three 20-minute sessions will lead to better test results than a single 60-minute study session."

The four parts of a hypothesis are:

- The research question

- The independent variable (IV)

- The dependent variable (DV)

- The proposed relationship between the IV and DV


Special Educator Academy

Ep. 13: How to Write Useful FBA Hypothesis Statements


Welcome back, and I am so glad that you have joined us again. We are talking about behavior, which I know is an issue for many of us in special education classrooms. I am Chris Reeve, I’m your host, and up to now we’ve taken our data and gathered all of our information. Today we’re going to start getting to the good stuff, because we’re getting to the point where we look at why in the world this behavior is happening in the first place and what we are going to do about it.

You also will see a number of visual examples that I obviously cannot give you on a podcast, so that may make it a little bit easier. So you can go to the blog post and you can see all the different examples of hypothesis statements, download the template and you’ll also be able to download a transcript or read this if you would rather make sense of it that way. It gets a little interesting when I start to talk about these things without any visuals, because you know how I love visuals. So let me give you just a quick disclaimer as well.

SYNTHESIZE FBA INFORMATION

I’m going to give you hypothesis statements in this podcast, along with a summary of the information about each student’s behavior. It’s going to sound like that information came from a single instance, but it didn’t.

We have to triangulate all of our information, our information from staff, our information from families, our data collection, our record review, all the things we’ve talked about up until this point are going to go into that hypothesis statement. So they are all very important and I’m going to pick up from where we’ve triangulated all that information. We’ve got some idea about some setting events, we’ve seen what happens before, we’ve seen what happens afterwards and put it in kind of a compilation. So it isn’t as easy as I make it sound because as I often say, human behavior is just not simple. But when you just hear me talk about it, the cases kind of sound like I’m just picking out one instance. I’m not picking out a single episode of behavior, I’m using a composite of all the different information. So let’s get started.

BUILDING EFFECTIVE HYPOTHESIS STATEMENTS

We’re now moving into step 3 in our 5-step process of meaningful behavioral support, and that is developing our hypothesis statements. Now keep in mind that a hypothesis is a best guess. We don’t know that this is what’s actually driving the behavior until we confirm our hypothesis, and I’ll be talking later in the series about how we can do that. You can test hypotheses when you develop them, but within a school setting, it’s more likely that you will develop interventions that address them and see whether they work.

When we are developing our hypothesis statements, we want to make sure that we are clearly tying them to the data and that we’re not getting lost in our interviews and things like that. We want to account for the interviews and that less objective information, but we want to make sure that our data solidly supports our hypothesis. That’s why we took it.

FREE DOWNLOAD OF GRAPHIC ORGANIZER

Writing our hypothesis statements is critical to the success of the intervention plan because they should lead you to what your behavioral solutions are going to be and in the blog post that goes with today’s podcast, you will find a download that you can get that actually structures your hypothesis statements.

One of the things that I like about using this hypothesis statement structure is that I can take my antecedent information and my setting event information and put it in one block. Then my behavior goes in the next block, and how the environment is responding, or what’s happening in the environment, comes afterwards. So it’s very easy to take my ABCs and translate them into this. I can then take this setup and say, “When this happens, he is likely to engage in this behavior, and in the environment this commonly happens,” if that’s what my data trends are telling me.

That then allows me to take those antecedents and make adjustments to the environment so that we can prevent the behavior from happening. If he starts off with smaller behaviors, it lets me know that those are an indicator that something bigger is coming and that I should intervene earlier. It also lets me know what we need to change about how we react or respond to the behavior, or about what’s happening in the environment after the behavior, so that we can reduce the reinforcement for it. All of that maps directly from the hypothesis statement. So go to autismclassroomresources/episode13 and download the hypothesis statement graphic, and it will walk you through how to put that together. You can also download a transcript or read this post if you’d rather do that than listen.

WHAT GOES INTO A HYPOTHESIS STATEMENT

So let’s talk for a few minutes about what goes into your hypothesis statement.

SETTING EVENTS

One is the setting events. Those lead us to how we eliminate or reduce the impact of distant factors that might influence the behavior. The setting events in our hypothesis (“He is more likely to engage in this behavior when X, Y, and Z”) tell us we need to address X, Y, and Z in some way. Now, as we said in episode 11, we cannot always make X, Y, and Z go away. If I could make him sleep through the night, I’d do it.

But I do know that maybe I can modify what I ask you to do on a day when you didn’t sleep well at night, or a day that you don’t feel well, or a day that you didn’t take your medicine. Maybe I modify my demands. Maybe I have you participate in group activities less. That’s where the brainstorming process that we’ll talk about when we get to intervention plans becomes really key. But your setting events are going to tell you what you need to accommodate if you cannot change them.

ANTECEDENTS

Your antecedents are going to tell us exactly how to restructure the environment to prevent the behavior.

BEHAVIOR

The behavior tells us whether or not the form of the behavior is relevant to the function. For example, does he scream only to get attention, while people ignore him when he hits? (It probably happens the other way around, but the question is whether the form is related to the function.) In my experience, most of the time it’s not. But it is possible that you will have a student who engages in one kind of behavior because it sometimes gets people to come to him, and another type of behavior because it gets people to go away.

CONSEQUENCES

The consequence tells us what might be maintaining the behavior, so we know how we need to change our response to try to prevent the behavior from increasing over time. When we use the graphic organizer for the hypothesis statements, we have three boxes. We fill in “When the student…” and then “he will…”; that’s the behavior. Then comes “and as a result…” this happens. The setting events kind of go over the top of that. So when this situation is in place, when this student does this or encounters this, he engages in this behavior, and this is what happens in the environment.
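To show how the boxes fit together, here is a minimal Python sketch that stores each box as a field and assembles one sentence from them. It is a hypothetical illustration, not the downloadable organizer. The class name `HypothesisStatement`, its field names, and the sentence wording are all assumptions, and the example text is adapted from James’s medication hypothesis later in this episode.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HypothesisStatement:
    """One FBA hypothesis statement laid out like the three-box organizer (hypothetical)."""
    antecedent: str                       # "When the student..." box
    behavior: str                         # "he will..." box
    consequence: str                      # "and as a result..." box
    setting_event: Optional[str] = None   # sits over the three boxes, if known
    subject: str = "the student"

    def to_sentence(self) -> str:
        # Fold the optional setting event into the "When..." clause.
        when = f"When {self.setting_event} and " if self.setting_event else "When "
        return (
            f"{when}{self.antecedent}, {self.subject} is likely to "
            f"{self.behavior}, and as a result {self.consequence}."
        )


james = HypothesisStatement(
    antecedent="he is presented with a language task",
    behavior="hit, scream, and/or bite others",
    consequence="he is removed from the task",
    setting_event="James has not had his medication",
    subject="he",
)
print(james.to_sentence())
```

Keeping the boxes as separate fields mirrors the point of the organizer. Each field points to a different part of the plan: the setting event to accommodations, the antecedent to prevention, the behavior to replacement behaviors, and the consequence to how staff respond.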

FBA HYPOTHESES STATEMENT EXAMPLES

So to give you an example of a hypothesis, the “When the student…” part might be: when faced with situations with social or academic demands, particularly those involving language. That’s very specific. I’ve been able to take my data and say this almost always happens in situations with social or academic demands, not other kinds of demands. Those that involve making him practice language-related tasks are much more likely to lead to problem behaviors. The behavior is that, when faced with those situations, he sometimes (because it’s not every single time) hits, screams, and/or bites others. What happens as a result is that he is sometimes removed from the situation, the task is delayed by the behavior, or staff provide assistance in completing the task. Those consequences often differ based on what situation and setting he’s in. But they were common consequences of this behavior, and they basically kept him from having to do the activity or delayed it in some way.

INTEGRATE WHAT YOU’VE LEARNED

Now that’s a whole lot more descriptive than a function that just says he engages in this behavior to escape. Because now I know that when he’s faced with situations with social or academic demands, in particular those involving language, we may need to mix more easy tasks in with our harder language demands. We may need to give him more breaks during that time.

We know what his behavior is, and it’s a constellation of behaviors; there’s not one specific form of behavior related to this situation. Then we need to give him a way to replace this, because it is an escape. Because the result of his behavior is being removed or having the task delayed, it’s essentially escape-related, so we need to give him a way to ask for a break. We want to make sure that we’ve got a replacement behavior that focuses on that. We will talk in a whole episode about replacement behaviors, because they often aren’t what many people think they are. But back to the task: you can see how that gives me much more specific information about where to focus my behavior intervention plan.

MORE SPECIFIC

Now I may get even more specific. I may say something like…

James appears to engage in challenging behavior to escape from tasks that are difficult for him. Some of these tasks are work-related. Some may be overwhelming or difficult socially, and some may be things that are frustrating for him like waiting. Engaging in significant challenging behaviors serves to gain assistance or removal from these situations effectively.

You may also have,

James sometimes engages in challenging behavior to protest or express frustration about not being allowed to have something that he wants.

BEHAVIOR OFTEN HAS MULTIPLE FUNCTIONS

So we know what situations he’s likely to have the problem in. And we also know that his behavior is complex. You’ve heard me say this throughout this series: human behavior isn’t simple. Except occasionally in very young children, we rarely see behavior serving only one function. We often see it having a main function, but also another function.

So often we will see a student who engages in behavior to escape. But when you give him just a break where nobody interacts with him, you continue to see problems, because that behavior was also to get attention. It got him out of the task, and it got people engaging with him. So never think when you’re writing your hypothesis statements that you have to be limited to one function. When we get to the behavior plan, we will have to decide what we’re going to do based on that. Our setting events factor into the “When the student…” section of the hypothesis, and they help us explain why behaviors happen in relation to an antecedent on one day and not on another.

COMPLEX PROBLEMS HAVE COMPLEX HYPOTHESIS STATEMENTS

So James’ data indicated that the behaviors occurred on some days and not on others. Further investigation into the data showed us that days on which he hadn’t had his medicine were more likely to result in challenging behavior. One solution: make sure he always takes his medicine. We may be able to do that. I’ve certainly had students for whom we’ve said, “You know what? Send his medicine to school. We’re happy to give it to him first thing in the morning if you’re having a hard time getting him to take it.” But even at school, James wouldn’t necessarily take his medicine. He’d put it in his mouth and spit it out, and twenty minutes later we’d find out he hadn’t taken it. So another solution factored into his program and the hypothesis statements:

On days when James has not had his medication and he is presented with a language task, he is likely to engage in these behaviors, which then result in his being removed from the task.

So maybe on the days when we know he hasn’t taken his medicine, we adjust our demands so that we lower the antecedent that sets off that behavior.

MORE EXAMPLES OF HYPOTHESIS STATEMENTS

So let’s look at a few other examples for different kinds of functions.

ESCAPE FUNCTION HYPOTHESIS STATEMENT

So let’s look at Sammy. In Sammy’s data, one pattern is that these behaviors are more likely to occur on days when he has been to more group activities. When he checks his schedule and sees the teacher icon, he falls on the ground and screams. Sometimes he does this when he transitions out of the room for assemblies and group activities. So this is my summary of what we see in his data: Sammy screams and cries, and when the staff try to redirect him, he screams louder. If given the opportunity to go to a quiet area and calm down, he stops screaming and is calm. But when staff then move him to the work table or the upcoming activity, his behavior continues.

So that tells us that when we look at Sammy’s behavior,

Sammy appears to engage in challenging behaviors to escape from tasks that are difficult for him. Some of these tasks are work-related, some may be overwhelming or difficult socially and some may be things that are frustrating for him like waiting. Engaging in significant challenging behavior serves to gain assistance or removal from these situations effectively. Sammy is more likely to engage in these behaviors when he’s had a lot of group work during the day.

So I put my setting event kind of at the end of that one. But you can see it’s obviously an escape from work and social situations that is the real underlying function. But I now know that there are certain tasks that I need to adjust to prevent the behaviors. I can teach him a way to escape appropriately as a replacement behavior.

And my outcome needs to be that the behavior doesn’t get him out of the task as quickly as the replacement behavior. And we’ll talk about all of that more when we talk about behavior support plans. But I want you to understand how it all lines up.

ESCAPING FROM WHAT?

Let’s think about Simon. Simon has had several incidents while talking to his friends in the atrium of the high school. In the middle of a conversation, he suddenly started telling the other kids that he was going to kill them. The other kids left him alone and went to tell the teacher. So let’s think about the function for Simon, or the hypothesis…

When presented with unstructured social interactions (for example, when Simon is in the atrium of the school and nobody is there setting up interactions), Simon’s violent threats have been successful in extricating him from the social situation and allowing him to escape the social demands.

So what we’re saying is that he is trying to escape social situations. Unstructured social situations set him up to have these behaviors, and this is a very efficient manner of getting people to leave him alone. So I now know that maybe I need to structure his social interactions a little bit more. I need to teach him a better way to get people to leave him alone more appropriately. And then we need to work on probably some underlying social skills as part of that as well.

ESCAPE FUNCTION WITH SETTING EVENT

Let’s look at Jimmy. Jimmy was playing with the other kids on the playground. They were playing HORSE with the basketball, and when it was Jimmy’s turn, he missed the basket. The other kids told him he got the letter S, and the teacher heard Bobby tell him “better luck next time” and slap him on the back. Okay, that’s very common: a “Hey, I’m trying to make you feel better” kind of behavior. Jimmy then hit Bobby, and they got into a fight. When the playground supervisor asked what happened, Jimmy told her Bobby was bullying him. When we looked at Jimmy’s data, we found a large pattern of difficulty in social situations as the antecedent. When we talked to the other kids he was accusing of bullying him or fighting with him, we found that he was misinterpreting their perspectives: he would say that they had done something to him.

And all of the things that he described were things that, from the perspective of the person who did them, were meant to be supportive, not problematic. So in knowing Jimmy and everything we know about Jimmy, we know that Jimmy has significant difficulty interpreting the perspectives of others and therefore understanding their intentions in his environment. He frequently interprets their behavior as a negative action toward himself. So….

When presented with an action, he interprets it negatively and he responds in a way to escape from that situation.

So he gets removed from the situation because he’s fighting. It gets him removed from the difficult situation. And so we’ve got an escape from social situations, but there’s an underlying setting event of not understanding the perspectives of other people.

And this is something we see a lot with our students with autism; that social piece is a big piece. But it’s also something I see a lot with students who have disabilities other than autism. With those students, people aren’t necessarily picking up on the social thinking and social perspective piece, because the students don’t have that diagnosis. So keep that in mind as we’re working with some of our students with emotional disturbances and things like that.

TANGIBLE SEEKING FUNCTION

Let’s look at two more. It’s time for Jimmy to be doing some math seat work, and instead he gets up, runs to the computer, and sits down. When the teacher tries to move him back to his desk, he throws himself on the floor and kicks her. So in this case we’ve got a kid who clearly wants something that he can’t have, because it’s time to do work. He goes to the thing that he wants and behaves this way until it ends up being his turn. So we’ve got a function of obtaining a tangible item.

Jimmy is highly interested in the computer. When presented with a situation in which he has to wait his turn on the computer, he falls on the floor and kicks and screams until it is his turn.

AUTOMATIC FUNCTION HYPOTHESIS STATEMENT

Now let’s look at one that has an automatic function, because I think that’s a really hard one to focus on. Abe engages in a variety of repetitive movements throughout the day, including hitting his forehead and head with his hand. He will engage in these behaviors when there are no demands and there is no one to attend to him. These behaviors appear more frequently during downtime and appear to provide some type of internal reinforcement. So they are more likely to occur when people are not around, and the staff report that he seems calmer after he hits himself. That’s kind of a summary of Abe. The antecedent in our automatic reinforcement hypothesis might be: when asked to wait, or left to work independently or without someone specifically engaging him.

Because remember, we can only have an automatic function if the behavior would happen when nothing else is there and no one is around, because that means there are no other factors. That’s the way we rule the other functions out. It can’t simply be that we don’t know what the function is, so we think it’s automatic. Automatic reinforcement is what some people call a sensory function, and I think that’s a little misleading. I talk about all of that in our episode on functions, which I’ll link in the show notes, but we really want to make sure that our antecedent is that he’s kind of left alone with nothing to do.

The behavior is that he frequently hits his head with his fist and following this behavior, his demeanor appears calmer. If stopped, he’ll begin to hit himself harder and scream. So that’s kind of our consequence for that behavior. So our hypothesis might be…

Abe engages in a variety of repetitive movements throughout the day, including hitting his forehead and head with his hand. He will engage in these behaviors when there are no demands and there is no one around to attend to him. These behaviors appear more frequently during downtime and appear to provide some type of internal reinforcement. His demeanor appears calmer after completing them.

So that tells us that if we leave Abe alone, we need to give him something to do that he will engage with, because not having that is going to be a trigger for the automatic self-injurious behavior. We know that when he does this, we need to engage him in something so that the behaviors decrease, rather than simply trying to stop him. So this then leads us to what our behavior support plan is.

HYPOTHESIS STATEMENT DOS AND DON’TS

So I want to finish with a few dos and don’ts about hypothesis statements. You want to make sure that you include as much information as possible. I realize that some people think hypothesis statements written this way are kind of wordy, but I find that the extra detail makes a good summary of the function of the behavior, one that can lead us directly into our behavior support plan. And I’ll talk in our next episode about how we do that.

How you write the hypothesis statements for your functional behavior assessment is critical to how strong your behavior support plan will be.

HYPOTHESIS STATEMENT DOS

DO: ONLY DESCRIBE WHAT YOU CAN SEE AND OBSERVE

We talked about that when we talked about data collection, and I’ll link to that episode. Earlier in the series we talked about the fact that if I can’t see it, I don’t know that it’s happened, so I really have to focus on the behaviors that I see.

DO: INCLUDE SETTING EVENTS

You want to make sure that we include our setting events in our hypothesis statements, because they are things we’re going to have to address in our behavior support plan.

DO: VERIFY HYPOTHESIS STATEMENTS

One thing that we can do is set up a situation similar to the one we think is setting off and reinforcing the behavior, and see if the behavior happens. So if the behavior is not self-injurious or really dangerous, we could actually set up those situations, take data, and see whether the behavior occurs in the situations where we think it does.
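As a rough illustration of that check, the sketch below compares how often a target behavior was recorded in sessions that recreate the suspected trigger with sessions that do not. The `Session` structure, the condition names ("seat work demand", "free choice"), and the counts are all hypothetical. A clearly higher count in the trigger condition would support the hypothesis, while similar counts would suggest revisiting it. As the episode stresses, this kind of setup is only appropriate for behavior that is not self-injurious or dangerous.

```python
from dataclasses import dataclass


@dataclass
class Session:
    condition: str       # "seat work demand" (suspected trigger) or "free choice" (comparison)
    behavior_count: int  # number of times the target behavior was recorded


def mean_count(sessions: list[Session], condition: str) -> float:
    """Average number of recorded behaviors per session in one condition."""
    counts = [s.behavior_count for s in sessions if s.condition == condition]
    if not counts:
        raise ValueError(f"no sessions recorded for condition {condition!r}")
    return sum(counts) / len(counts)


# Hypothetical data: three sessions per condition.
sessions = [
    Session("seat work demand", 6),
    Session("seat work demand", 8),
    Session("seat work demand", 7),
    Session("free choice", 1),
    Session("free choice", 0),
    Session("free choice", 2),
]

test_rate = mean_count(sessions, "seat work demand")
control_rate = mean_count(sessions, "free choice")
print(f"seat work demand: {test_rate:.1f} per session; free choice: {control_rate:.1f} per session")
```

A handful of sessions per condition is only a starting point; the comparison becomes more convincing as the same pattern repeats across sessions.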

DO: DEVELOP HYPOTHESIS STATEMENTS TIED TO OUR DATA

Another thing that we can do is develop a behavior support plan that is tightly tied to our hypotheses and take data to see whether or not the behavior continues. If the behavior decreases, that supports our hypothesis. If it does continue, then it tells us we need to go back and re-examine our hypothesis. So we can use our intervention as a way to verify our hypotheses. But when we do that, it’s critical that our hypothesis statements and our behavior support plans are very tightly linked. And the format that you can download on the blog page will give you that linkage.

HYPOTHESIS STATEMENT DON’TS

So let’s talk about some things you shouldn’t do with your hypothesis statements.

DON’T GET DISTRACTED BY THE FORM OF BEHAVIOR

Don’t get misled by the form of the behavior. In other words, don’t assume that because somebody is biting or eating things that they’re not supposed to have, it is automatically reinforced. Those behaviors can also be responses to an antecedent and have outward effects on other people. So just because a behavior involves a sense does not mean it serves a sensory function.

DON’T ASSUME FUNCTIONS

I think a lot of times we assume the automatic function (or the sensory function) because we can’t see what the pattern is. But that’s not a valid way to make that decision, as I talked about earlier.

DON’T ASSUME THAT A BEHAVIOR HAS ONLY ONE FUNCTION

Very frequently, behavior has more than one function, so you might have more than one hypothesis statement to describe the range of behaviors that the student is showing or the range of situations in which the behaviors occur.

DON’T STOP TAKING DATA

Now you don’t necessarily need to continue to take ABC data unless you really don’t know what your functions are. So if you haven’t been able to come up with a hypothesis statement, you need more data.

If you have a hypothesis statement, make sure you’ve got solid baseline data on how often the behaviors are occurring now. If you’ve been taking ABC data throughout the day, you can get that by adding up the incidents. Then look at taking something like frequency data or duration data to monitor your plan, and we’ll talk about that in a future episode. But it’s important that we don’t stop taking data just because we’ve developed our hypothesis.
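Adding up incidents from ABC data can be sketched as below, assuming each ABC entry records the date it was observed. One detail worth noticing: an ABC log only contains days when the behavior happened, so days that were observed without any incidents have to be counted as zeros, or the baseline will be inflated. The `ABCRecord` fields, dates, and entries are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class ABCRecord:
    date: str         # observation date, e.g. "2024-03-04" (hypothetical format)
    antecedent: str
    behavior: str
    consequence: str


def baseline_per_day(records: list[ABCRecord], observed_days: list[str]) -> float:
    """Mean incidents per observed day.

    ABC logs only contain days on which the behavior happened, so the days that
    were actually observed are passed in separately; days without any records
    count as zero incidents.
    """
    if not observed_days:
        raise ValueError("need at least one observed day")
    incidents = Counter(r.date for r in records)
    return sum(incidents[day] for day in observed_days) / len(observed_days)


# Hypothetical ABC log; the third observed day had no incidents.
log = [
    ABCRecord("2024-03-04", "math seat work", "runs to computer", "teacher redirects"),
    ABCRecord("2024-03-04", "math seat work", "falls to floor", "gets computer turn"),
    ABCRecord("2024-03-04", "waiting for turn", "kicks", "gets computer turn"),
    ABCRecord("2024-03-05", "waiting for turn", "screams", "gets computer turn"),
]
days = ["2024-03-04", "2024-03-05", "2024-03-06"]
print(f"baseline: {baseline_per_day(log, days):.2f} incidents per day")
```

Here four incidents over three observed days give a baseline of about 1.33 per day; dropping the quiet third day would wrongly raise it to 2.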

So I will be back next week to talk more about designing behavior support plans: how we take this information and turn it into something that may actually change the behavior of the student in your classroom. I know that is the piece all of you have been waiting for, but you have to have these pieces in place to get there. So that will be our next topic, and I will give you some examples and walk through how to take this information and turn it into a plan.

If you would like to do a bigger deep dive into behavioral problem solving, I highly encourage you to check out the Special Educator Academy. That is where you’ll find me. I’m available in our forums to answer questions and provide support, and our behavioral course has a wide variety of data sheets, strategies, videos, and information about this entire process that hopefully pulls it all together. And when there are questions about it, people can come to the community and ask them, and we’re all working off of the same page.

You can find more information about the Special Educator Academy at specialeducatoracademy.com. Come try our free 7-day trial and see if it’s for you.

Thank you so much for spending this time with me. I really appreciate it. I hope that this has been helpful in giving you some ideas about formulating hypotheses for your students, and I hope to see you again in our next episode.

I hope that you’re enjoying the podcast, and I’d love it if you’d hop over to iTunes and leave a review and/or subscribe so that you will continue to get episodes.

How to Write a Research Hypothesis: Good & Bad Examples

What is a research hypothesis?

A research hypothesis is an attempt at explaining a phenomenon or the relationships between phenomena/variables in the real world. Hypotheses are sometimes called “educated guesses”, but they are in fact (or, let’s say, they should be) based on previous observations, existing theories, scientific evidence, and logic. A research hypothesis is also not a prediction; rather, predictions are (or should be) based on clearly formulated hypotheses. For example, “We tested the hypothesis that KLF2 knockout mice would show deficiencies in heart development” is an assumption or prediction, not a hypothesis.

The research hypothesis at the basis of this prediction is “the product of the KLF2 gene is involved in the development of the cardiovascular system in mice”—and this hypothesis is probably (hopefully) based on a clear observation, such as that mice with low levels of Kruppel-like factor 2 (which KLF2 codes for) seem to have heart problems. From this hypothesis, you can derive the idea that a mouse in which this particular gene does not function cannot develop a normal cardiovascular system, and then make the prediction that we started with.

What is the difference between a hypothesis and a prediction?

You might think that these are very subtle differences, and you will certainly come across many publications that do not contain an actual hypothesis or do not make these distinctions correctly. But considering that the formulation and testing of hypotheses is an integral part of the scientific method, it is good to be aware of the concepts underlying this approach. The two hallmarks of a scientific hypothesis are falsifiability (an evaluation standard that was introduced by the philosopher of science Karl Popper in 1934) and testability. If you cannot use experiments or data to decide whether an idea is true or false, then it is not a hypothesis (or at least a very bad one).

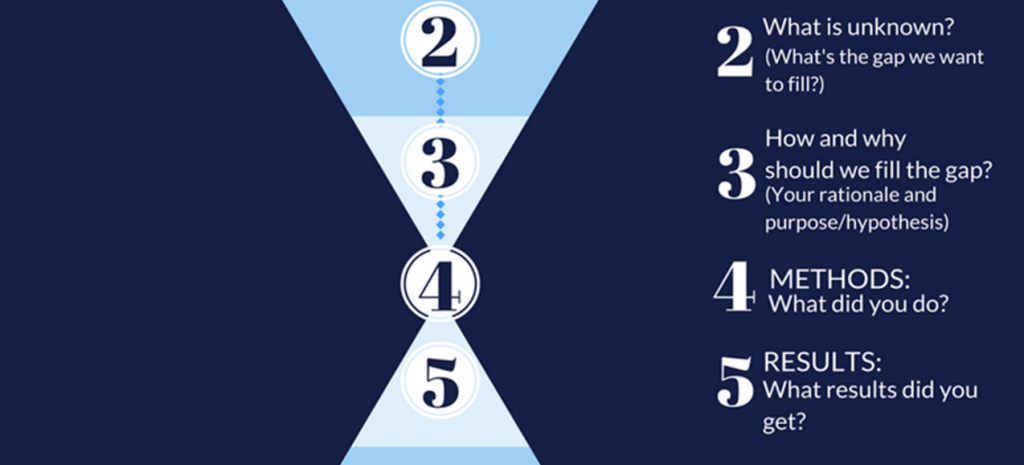

So, in a nutshell, you (1) look at existing evidence/theories, (2) come up with a hypothesis, (3) make a prediction that allows you to (4) design an experiment or data analysis to test it, and (5) come to a conclusion. Of course, not all studies have hypotheses (there is also exploratory or hypothesis-generating research), and you do not necessarily have to state your hypothesis as such in your paper.

But for the sake of understanding the principles of the scientific method, let’s first take a closer look at the different types of hypotheses that research articles refer to and then give you a step-by-step guide for how to formulate a strong hypothesis for your own paper.

Types of Research Hypotheses

Hypotheses can be simple, which means they describe the relationship between one single independent variable (the one you observe variations in or plan to manipulate) and one single dependent variable (the one you expect to be affected by the variations/manipulation). If there are more variables on either side, you are dealing with a complex hypothesis. You can also distinguish hypotheses according to the kind of relationship between the variables you are interested in (e.g., causal or associative). But apart from these variations, we are usually interested in what is called the “alternative hypothesis” and, in contrast to that, the “null hypothesis”. If you think these two should be listed the other way round, then you are right, logically speaking: the alternative should surely come second. However, since this is the hypothesis we (as researchers) are usually interested in, let’s start from there.

Alternative Hypothesis

If you predict a relationship between two variables in your study, then the research hypothesis that you formulate to describe that relationship is your alternative hypothesis (usually H1 in statistical terms). The goal of your hypothesis testing is thus to demonstrate that there is sufficient evidence that supports the alternative hypothesis, rather than evidence for the possibility that there is no such relationship. The alternative hypothesis is usually the research hypothesis of a study and is based on the literature, previous observations, and widely known theories.

Null Hypothesis

The hypothesis that describes the other possible outcome, that is, that your variables are not related, is the null hypothesis (H0). Based on your findings, you choose between the two hypotheses: usually, if your prediction was correct, you reject the null hypothesis and accept the alternative. Make sure, however, that you are not getting lost at this step of the thinking process: if your prediction is that there will be no difference or change, then you are trying to find support for the null hypothesis and reject H1.

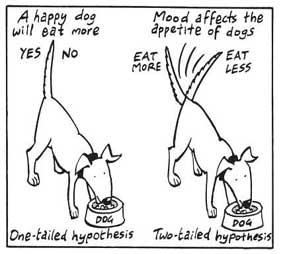

Directional Hypothesis

While the null hypothesis is obviously “static”, the alternative hypothesis can specify a direction for the observed relationship between variables—for example, that mice with higher expression levels of a certain protein are more active than those with lower levels. This is then called a one-tailed hypothesis.

Another example of a directional one-tailed alternative hypothesis would be that

H1: Attending private classes before important exams has a positive effect on performance.

Your null hypothesis would then be that

H0: Attending private classes before important exams has no/a negative effect on performance.

Nondirectional Hypothesis

A nondirectional hypothesis does not specify the direction of the potentially observed effect, only that there is a relationship between the studied variables—this is called a two-tailed hypothesis. For instance, if you are studying a new drug that has shown some effects on pathways involved in a certain condition (e.g., anxiety) in vitro in the lab, but you can’t say for sure whether it will have the same effects in an animal model or maybe induce other/side effects that you can’t predict and potentially increase anxiety levels instead, you could state the two hypotheses like this:

H1: The drug, so far tested only in the lab, (somehow) affects anxiety levels in an anxiety mouse model.

You then test this nondirectional alternative hypothesis against the null hypothesis:

H0: The drug, so far tested only in the lab, has no effect on anxiety levels in an anxiety mouse model.
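The practical difference between a directional (one-tailed) and a nondirectional (two-tailed) alternative shows up in the p-value. The sketch below assumes a test statistic z that follows a standard normal distribution when H0 is true (as in a simple z-test); the value z = 1.8 and the function name `p_value` are hypothetical. It uses only Python's standard `statistics.NormalDist`.

```python
from statistics import NormalDist


def p_value(z: float, alternative: str = "two-sided") -> float:
    """p-value for a test statistic z that is standard normal under H0.

    alternative="two-sided": nondirectional H1 (there is some effect)
    alternative="greater":   directional H1 (the effect is positive)
    alternative="less":      directional H1 (the effect is negative)
    """
    std_normal = NormalDist(mu=0.0, sigma=1.0)
    if alternative == "two-sided":
        return 2 * (1 - std_normal.cdf(abs(z)))
    if alternative == "greater":
        return 1 - std_normal.cdf(z)
    if alternative == "less":
        return std_normal.cdf(z)
    raise ValueError(f"unknown alternative: {alternative!r}")


z = 1.8  # hypothetical test statistic
print(f"one-tailed (greater): p = {p_value(z, 'greater'):.3f}")
print(f"two-tailed:           p = {p_value(z):.3f}")
```

With z = 1.8 the one-tailed p-value is about 0.036 and the two-tailed one about 0.072, so at a conventional α = 0.05 only the directional test would reject H0. This is why the direction has to be fixed from prior evidence before looking at the data, not chosen afterward.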

How to Write a Hypothesis for a Research Paper

Now that we understand the important distinctions between different kinds of research hypotheses, let’s look at a simple process of how to write a hypothesis.

Writing a Hypothesis Step 1:

Ask a question, based on earlier research. Research always starts with a question, but one that takes into account what is already known about a topic or phenomenon. For example, if you are interested in whether people who have pets are happier than those who don’t, do a literature search and find out what has already been demonstrated. You will probably realize that yes, there is quite a bit of research that shows a relationship between happiness and owning a pet, and even studies that show that owning a dog is more beneficial than owning a cat! Let’s say you are so intrigued by this finding that you wonder:

What is it that makes dog owners even happier than cat owners?

Let’s move on to Step 2 and find an answer to that question.

Writing a Hypothesis Step 2:

Formulate a strong hypothesis by answering your own question. Again, you don’t want to make things up, take unicorns into account, or repeat/ignore what has already been done. Looking at the dog-vs-cat papers your literature search returned, you see that most studies are based on self-report questionnaires on personality traits, mental health, and life satisfaction. What you don’t find is any data on actual (mental or physical) health measures, and no experiments. You therefore come up with the carefully thought-through hypothesis that it is perhaps the lifestyle of dog owners, which includes walking their dog several times per day, engaging in fun and healthy activities such as agility competitions, and taking them on trips, that gives them that extra boost in happiness. You could therefore answer your question in the following way:

Dog owners are happier than cat owners because of the dog-related activities they engage in.

Now you have to verify that your hypothesis fulfills the two requirements we introduced at the beginning of this resource article: falsifiability and testability. If it can’t be wrong and can’t be tested, it’s not a hypothesis. We are lucky, however, because yes, we can test whether owning a dog but not engaging in any of those activities leads to lower levels of happiness or well-being than owning a dog and playing and running around with them or taking them on trips.

Writing a Hypothesis Step 3:

Make your predictions and define your variables. We have verified that we can test our hypothesis, but now we have to define all the relevant variables, design our experiment or data analysis, and make precise predictions. You could, for example, decide to study dog owners (not surprising at this point), let them fill in questionnaires about their lifestyle as well as their life satisfaction (as other studies did), and then compare two groups of active and inactive dog owners. Alternatively, if you want to go beyond the data that earlier studies produced and analyzed and directly manipulate the activity level of your dog owners to study the effect of that manipulation, you could invite them to your lab, select groups of participants with similar lifestyles, make them change their lifestyle (e.g., couch potato dog owners start agility classes, very active ones have to refrain from any fun activities for a certain period of time) and assess their happiness levels before and after the intervention. In both cases, your independent variable would be “level of engagement in fun activities with the dog” and your dependent variable would be happiness or well-being.
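For the second, experimental version of the design, the link between the independent variable (change in fun activities with the dog) and the dependent variable (happiness) can be sketched as a within-participant change score per group. The ratings, the group sizes, and the 1-10 scale are hypothetical, and a real analysis would add a statistical test; the sketch only shows how the design maps onto data and onto the predicted direction (up for owners who start activities, down for owners who stop).

```python
from statistics import mean


def mean_change(before: list[float], after: list[float]) -> float:
    """Average within-participant change in the dependent variable (happiness)."""
    if len(before) != len(after):
        raise ValueError("each participant needs both a before and an after score")
    return mean(a - b for b, a in zip(before, after))


# Hypothetical happiness ratings on an assumed 1-10 scale, for illustration only.
# Independent variable: change in engagement in fun activities with the dog.
groups = {
    "inactive owners who start agility classes": ([5, 4, 6, 5], [6, 6, 7, 5]),
    "active owners who pause all fun activities": ([8, 7, 8, 9], [7, 7, 6, 8]),
}

for label, (before, after) in groups.items():
    print(f"{label}: mean change {mean_change(before, after):+.2f}")
```

With these made-up scores the first group rises by one point on average and the second drops by one, which is the pattern the hypothesis predicts; real data could just as well contradict it.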

Examples of a Good and Bad Hypothesis

Let’s look at a few examples of good and bad hypotheses to get you started.

Good Hypothesis Examples

Bad Hypothesis Examples

Tips for Writing a Research Hypothesis

If you understood the distinction between a hypothesis and a prediction we made at the beginning of this article, then you will have no problem formulating your hypotheses and predictions correctly. To refresh your memory: We have to (1) look at existing evidence, (2) come up with a hypothesis, (3) make a prediction, and (4) design an experiment. For example, you could summarize your dog/happiness study like this:

(1) While research suggests that dog owners are happier than cat owners, there are no reports on what factors drive this difference. (2) We hypothesized that it is the fun activities that many dog owners (but very few cat owners) engage in with their pets that increase their happiness levels. (3) We thus predicted that preventing very active dog owners from engaging in such activities for some time and making very inactive dog owners take up such activities would lead to a decrease and an increase in their overall self-ratings of happiness, respectively. (4) To test this, we invited dog owners into our lab, assessed their mental and emotional well-being through questionnaires, and then assigned them to an “active” and an “inactive” group, depending on…

Note that you use “we hypothesize” only for your hypothesis, not for your experimental prediction, and “would” or “if – then” only for your prediction, not your hypothesis. A hypothesis that states that something “would” affect something else sounds as if you don’t have enough confidence to make a clear statement, in which case you can’t expect your readers to believe in your research either. Write in the present tense, don’t use modal verbs that express varying degrees of certainty (such as may, might, or could), and remember that you are not drawing a conclusion (where you would take care not to exaggerate) but making a clear statement that you then, in a way, try to disprove. And if that happens, that is not something to fear but an important part of the scientific process.

Similarly, don’t use “we hypothesize” when you explain the implications of your research or make predictions in the conclusion section of your manuscript, since these are clearly not hypotheses in the true sense of the word. As we said earlier, you will find that many authors of academic articles do not seem to care too much about these rather subtle distinctions, but thinking very clearly about your own research will not only help you write better but also ensure that even that infamous Reviewer 2 will find fewer reasons to nitpick about your manuscript.

Create a hypothesis (behavior) statement

A hypothesis statement should be based on the assessment results and should describe, in sufficient detail, the best guess about the purpose of the behavior. That is, what is the behavior trying to tell us? Analyzing assessment data helps team members identify patterns of behavior across time and settings. Often, patterns of behavior and the possible reasons for them will be obvious; at other times, the patterns may be subtle and difficult to identify. When this occurs, additional data might need to be gathered to guide the development of a behavior statement.

Team members develop a behavior statement for the interfering behavior that includes:

- the setting events, immediate antecedents, and immediate consequences that surround the interfering behavior

- a restatement and refinement of the description of the interfering behavior that is occurring

- the purpose the behavior serves (i.e., get/obtain, escape/avoid)

Example hypothesis (behavior) statement:

“Tino falls onto the floor, screaming and crying, when asked to clean up his toys, and he is then taken to his room where his mom rocks him on the rocking chair to calm him down.”
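One way to make sure all of the listed components are present is to treat the statement as a fill-in template. The sketch below is a hypothetical structure, not part of the module; the student and every field value are invented for illustration (the Tino example above does not name a setting event or a purpose, so it is not reused here).

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Purpose(Enum):
    GET_OBTAIN = "get/obtain"
    ESCAPE_AVOID = "escape/avoid"


@dataclass
class HypothesisStatement:
    student: str
    antecedent: str           # immediate antecedent
    behavior: str             # observable description of the interfering behavior
    consequence: str          # immediate consequence
    purpose: Purpose          # best guess at the function of the behavior
    target: str               # what is obtained or escaped/avoided
    setting_event: Optional[str] = None

    def render(self) -> str:
        """Assemble the components into a single plain-language statement."""
        if self.setting_event:
            when = f"When {self.setting_event} and {self.antecedent}"
        else:
            when = f"When {self.antecedent}"
        return (
            f"{when}, {self.student} {self.behavior}, and as a result {self.consequence}. "
            f"The behavior likely serves to {self.purpose.value} {self.target}."
        )


# Hypothetical example for illustration only.
statement = HypothesisStatement(
    student="Sam",
    setting_event="he has slept poorly the night before",
    antecedent="he is asked to start independent writing",
    behavior="tears up his worksheet",
    consequence="he is sent to the hallway",
    purpose=Purpose.ESCAPE_AVOID,
    target="the writing task",
)
print(statement.render())
```

Keeping the setting event optional mirrors practice: it is included whenever one has been identified, but the antecedent, behavior, consequence, and purpose are always required.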

This project is a program of the Frank Porter Graham Child Development Institute at the University of North Carolina at Chapel Hill.

Research Hypothesis In Psychology: Types & Examples

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

A research hypothesis (plural: hypotheses) is a specific, testable prediction about the anticipated results of a study, established at its outset. It is a key component of the scientific method.

Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

Some key points about hypotheses:

- A hypothesis expresses an expected pattern or relationship. It connects the variables under investigation.

- It is stated in clear, precise terms before any data collection or analysis occurs. This makes the hypothesis testable.

- A hypothesis must be falsifiable. It should be possible, even if unlikely in practice, to collect data that disconfirms rather than supports the hypothesis.

- Hypotheses guide research. Scientists design studies to explicitly evaluate hypotheses about how nature works.

- For a hypothesis to be valid, it must be testable against empirical evidence. The evidence can then confirm or disprove the testable predictions.

- Hypotheses are informed by background knowledge and observation, but go beyond what is already known to propose an explanation of how or why something occurs.

Predictions typically arise from a thorough knowledge of the research literature, curiosity about real-world problems or implications, and integrating this to advance theory. They build on existing literature while providing new insight.

Types of Research Hypotheses

Alternative Hypothesis

The research hypothesis is often called the alternative or experimental hypothesis in experimental research.

It typically suggests a potential relationship between two key variables: the independent variable, which the researcher manipulates, and the dependent variable, which is measured based on those changes.

The alternative hypothesis states a relationship exists between the two variables being studied (one variable affects the other).

An experimental hypothesis predicts what change(s) will occur in the dependent variable when the independent variable is manipulated.

It predicts that the results will not be due to chance and that they will provide significant support for the theory being investigated.

The alternative hypothesis can be directional, indicating a specific direction of the effect, or non-directional, suggesting a difference without specifying its nature. It’s what researchers aim to support or demonstrate through their study.

Null Hypothesis

The null hypothesis states no relationship exists between the two variables being studied (one variable does not affect the other). There will be no changes in the dependent variable due to manipulating the independent variable.

It states that any differences in the results are due to chance and do not provide significant support for the idea being investigated.

The null hypothesis, positing no effect or relationship, is a foundational contrast to the research hypothesis in scientific inquiry. It establishes a baseline for statistical testing, promoting objectivity by initiating research from a neutral stance.

Many statistical methods are tailored to test the null hypothesis, determining the likelihood of observed results if no true effect exists.

This dual-hypothesis approach provides clarity, ensuring that research intentions are explicit, and fosters consistency across scientific studies, enhancing the standardization and interpretability of research outcomes.

Nondirectional Hypothesis

A non-directional hypothesis, also known as a two-tailed hypothesis, predicts that there is a difference or relationship between two variables but does not specify the direction of this relationship.

It merely indicates that a change or effect will occur without predicting which group will have higher or lower values.

For example, “There is a difference in performance between Group A and Group B” is a non-directional hypothesis.

Directional Hypothesis

A directional (one-tailed) hypothesis predicts the nature of the effect of the independent variable on the dependent variable: it predicts in which direction the change will take place (e.g., greater, smaller, more, less).

It specifies whether one variable is greater or less than another, rather than just indicating that there’s a difference without specifying its nature.

For example, “Exercise increases weight loss” is a directional hypothesis.

Falsifiability

The Falsification Principle, proposed by Karl Popper, is a way of demarcating science from non-science. It suggests that for a theory or hypothesis to be considered scientific, it must be testable and falsifiable (that is, refutable in principle).

Falsifiability emphasizes that scientific claims shouldn’t just be confirmable but should also have the potential to be proven wrong.

It means that there should exist some potential evidence or experiment that could prove the proposition false.

However many confirming instances exist for a theory, it only takes one counter observation to falsify it. For example, the hypothesis that “all swans are white,” can be falsified by observing a black swan.
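The asymmetry between confirming and falsifying evidence can be shown with the swan example. In this sketch (the function name and the data are illustrative), a thousand white swans leave the universal claim standing but unproven, while a single black swan is enough to refute it.

```python
from typing import Optional


def first_counterexample(observed_colours: list[str]) -> Optional[int]:
    """Index of the first observation contradicting "all swans are white", or None."""
    return next((i for i, colour in enumerate(observed_colours) if colour != "white"), None)


confirming = ["white"] * 1000
print(first_counterexample(confirming))              # None: consistent so far, not proven
print(first_counterexample(confirming + ["black"]))  # 1000: one black swan falsifies the claim
```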

For Popper, science should attempt to disprove a theory rather than attempt to continually provide evidence to support a research hypothesis.

Can a Hypothesis be Proven?

Hypotheses make probabilistic predictions. They state the expected outcome if a particular relationship exists. However, a study result supporting a hypothesis does not definitively prove it is true.

All studies have limitations. There may be unknown confounding factors or issues that limit the certainty of conclusions. Additional studies may yield different results.

In science, hypotheses can realistically only be supported with some degree of confidence, not proven. The process of science is to incrementally accumulate evidence for and against hypothesized relationships in an ongoing pursuit of better models and explanations that best fit the empirical data. But hypotheses remain open to revision and rejection if that is where the evidence leads.

- Disproving a hypothesis is definitive. Solid disconfirmatory evidence will falsify a hypothesis and require altering or discarding it based on the evidence.

- However, confirming evidence is always open to revision. Other explanations may account for the same results, and additional or contradictory evidence may emerge over time.

We can never 100% prove the alternative hypothesis. Instead, we see if we can disprove, or reject the null hypothesis.

If we reject the null hypothesis, this doesn’t mean that our alternative hypothesis is correct but does support the alternative/experimental hypothesis.

Upon analysis of the results, an alternative hypothesis can be rejected or supported, but it can never be proven to be correct. We must avoid any reference to results proving a theory as this implies 100% certainty, and there is always a chance that evidence may exist which could refute a theory.
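That wording rule can be built into how results are reported. The helper below is a hypothetical sketch that assumes a conventional significance level of α = 0.05; it only ever reports rejecting or failing to reject H0, never proving either hypothesis.

```python
def report(p: float, alpha: float = 0.05) -> str:
    """Describe a significance-test outcome without claiming proof (hypothetical helper)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if p < alpha:
        return f"p = {p:.3f} < {alpha}: reject H0; the results support H1 but do not prove it."
    return f"p = {p:.3f} >= {alpha}: fail to reject H0; this does not prove H0 is true."


print(report(0.016))
print(report(0.20))
```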

How to Write a Hypothesis

- Identify variables. The researcher manipulates the independent variable, and the dependent variable is the measured outcome.

- Operationalize the variables being investigated. Operationalization of a hypothesis refers to the process of making the variables physically measurable or testable. For example, if you are about to study aggression, you might count the number of punches given by participants.

- Decide on a direction for your prediction. If there is evidence in the literature to support a specific effect of the independent variable on the dependent variable, write a directional (one-tailed) hypothesis. If findings in the literature regarding the effect of the independent variable on the dependent variable are limited or ambiguous, write a non-directional (two-tailed) hypothesis.

- Make it testable. Ensure your hypothesis can be tested through experimentation or observation. It must be possible to prove it false (the principle of falsifiability).

- Use clear and concise language. A strong hypothesis is concise (typically one to two sentences long) and formulated in clear, straightforward language, ensuring it is easily understood and testable.

Consider a hypothesis many teachers might subscribe to: students work better on Monday morning than on Friday afternoon (IV = day of the week, DV = standard of work).

Now, if we decide to study this by giving the same group of students a lesson on a Monday morning and a Friday afternoon and then measuring their immediate recall of the material covered in each session, we would end up with the following:

- The alternative hypothesis states that students will recall significantly more information on a Monday morning than on a Friday afternoon.

- The null hypothesis states that there will be no significant difference in the amount recalled on a Monday morning compared to a Friday afternoon. Any difference will be due to chance or confounding factors.
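The Monday/Friday design above measures the same students twice, so the null hypothesis can be checked with a paired test. The sketch below is a minimal illustration, not a prescribed method: it uses an exact sign-flip permutation test (swapped in for the paired t-test usually reported, because it needs only the Python standard library). The function name `paired_permutation_test` and the recall scores are hypothetical. If the null hypothesis is true, each student's Monday-minus-Friday difference is equally likely to be positive or negative, so the code enumerates every sign assignment and counts how often chance alone produces a difference as large as the observed one. It reports both a one-tailed p-value (the directional prediction that Monday recall is higher) and a two-tailed p-value (a non-directional prediction).

```python
from itertools import product


def paired_permutation_test(condition_a, condition_b):
    """Exact sign-flip permutation test for paired (within-subjects) scores.

    Under the null hypothesis there is no systematic difference between the
    conditions, so each participant's difference (a - b) is equally likely to
    carry a positive or a negative sign. Every sign assignment is enumerated,
    and we count how often the resulting total difference is at least as
    extreme as the observed one.

    Returns (observed mean difference, one-tailed p, two-tailed p). The
    one-tailed p-value tests the directional prediction that a > b.
    """
    if len(condition_a) != len(condition_b):
        raise ValueError("paired data must have the same number of scores")
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    observed = sum(diffs)  # with a fixed sample size, comparing sums = comparing means
    as_high = 0     # sign flips with total >= observed (directional test)
    as_extreme = 0  # sign flips with |total| >= |observed| (non-directional test)
    flips = 0
    for signs in product((1, -1), repeat=len(diffs)):
        total = sum(s * d for s, d in zip(signs, diffs))
        flips += 1
        if total >= observed:
            as_high += 1
        if abs(total) >= abs(observed):
            as_extreme += 1
    return observed / len(diffs), as_high / flips, as_extreme / flips


# Hypothetical recall scores (items remembered) for the same eight students.
monday = [14, 12, 15, 11, 13, 16, 12, 14]
friday = [11, 12, 13, 9, 12, 13, 10, 12]

diff, p_one, p_two = paired_permutation_test(monday, friday)
print(f"mean difference = {diff}, one-tailed p = {p_one:.4f}, two-tailed p = {p_two:.4f}")
# prints: mean difference = 1.875, one-tailed p = 0.0078, two-tailed p = 0.0156
```

Full enumeration doubles in cost with every extra participant, so with larger samples a random sample of sign flips (or a paired t-test) would typically be used instead. Note that the one-tailed p-value is half the two-tailed one here, which is why a directional hypothesis should be chosen before the data are seen, on the basis of prior literature.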

More Examples

- Memory: Participants exposed to classical music during study sessions will recall more items from a list than those who studied in silence.

- Social Psychology: Individuals who frequently use social media will report higher levels of perceived social isolation than those who use it infrequently.

- Developmental Psychology: Children who engage in regular imaginative play will have better problem-solving skills than those who don’t.

- Clinical Psychology: Cognitive-behavioral therapy will be more effective than traditional talk therapy in reducing symptoms of anxiety over a 6-month period.

- Cognitive Psychology: Individuals who multitask between various electronic devices will have shorter attention spans on focused tasks than those who single-task.

- Health Psychology: Patients who practice mindfulness meditation will experience lower levels of chronic pain than those who don’t meditate.

- Organizational Psychology: Employees in open-plan offices will report higher levels of stress than those in private offices.

- Behavioral Psychology: Rats rewarded with food after pressing a lever will press it more frequently than rats that receive no reward.


How to Write a Strong Hypothesis | Guide & Examples

Published on 6 May 2022 by Shona McCombes.

A hypothesis is a statement that can be tested by scientific research. If you want to test a relationship between two or more variables, you need to write hypotheses before you start your experiment or data collection.


A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess – it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations, and statistical analysis of data).

Variables in hypotheses

Hypotheses propose a relationship between two or more variables. An independent variable is something the researcher changes or controls. A dependent variable is something the researcher observes and measures.

For example, in a hypothesis predicting that exposure to the sun increases happiness, the independent variable is exposure to the sun (the assumed cause) and the dependent variable is the level of happiness (the assumed effect).


Step 1: Ask a question

Writing a hypothesis begins with a research question that you want to answer. The question should be focused, specific, and researchable within the constraints of your project.

Step 2: Do some preliminary research

Your initial answer to the question should be based on what is already known about the topic. Look for theories and previous studies to help you form educated assumptions about what your research will find.

At this stage, you might construct a conceptual framework to identify which variables you will study and what you think the relationships are between them. Sometimes, you’ll have to operationalise more complex constructs.

Step 3: Formulate your hypothesis

Now you should have some idea of what you expect to find. Write your initial answer to the question in a clear, concise sentence.

Step 4: Refine your hypothesis

You need to make sure your hypothesis is specific and testable. There are various ways of phrasing a hypothesis, but all the terms you use should have clear definitions, and the hypothesis should contain:

- The relevant variables

- The specific group being studied

- The predicted outcome of the experiment or analysis

Step 5: Phrase your hypothesis in three ways

To identify the variables, you can write a simple prediction in if … then form. The first part of the sentence states the independent variable and the second part states the dependent variable.

In academic research, hypotheses are more commonly phrased in terms of correlations or effects, where you directly state the predicted relationship between variables.

If you are comparing two groups, the hypothesis can state what difference you expect to find between them.

Step 6: Write a null hypothesis

If your research involves statistical hypothesis testing, you will also have to write a null hypothesis. The null hypothesis is the default position that there is no association between the variables. The null hypothesis is written as H0, while the alternative hypothesis is written as H1 or Ha.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. Scientists use it to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.
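The idea of calculating how likely a pattern could arise by chance can be made concrete for two independent groups, such as the classical-music versus silence memory hypothesis. The sketch below assumes an exact permutation test (one of several valid procedures; an independent-samples t-test is the more common textbook choice). The function name `two_group_permutation_test` and all scores are hypothetical, and scores are assumed to be integers such as counts of items recalled. If the null hypothesis is true, the group labels carry no information, so every way of re-splitting the pooled scores into groups of the original sizes is equally likely; the p-value is the fraction of those splits whose mean difference is at least as extreme as the one actually observed.

```python
from fractions import Fraction
from itertools import combinations


def two_group_permutation_test(group_a, group_b, directional=False):
    """Exact permutation test for a difference in means between two independent groups.

    Under the null hypothesis the group labels are arbitrary, so every way of
    splitting the pooled scores into groups of the original sizes is equally
    likely. The p-value is the fraction of splits whose mean difference
    (a minus b) is at least as extreme as the observed one.

    directional=True tests the one-tailed prediction mean(a) > mean(b);
    otherwise the test is two-tailed. Scores are assumed to be integers
    (e.g., counts of items recalled) so that Fraction keeps the arithmetic exact.
    """
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    grand_total = sum(pooled)

    def mean_diff(indices_a):
        # Mean of the scores assigned to "group a" minus mean of the rest.
        sum_a = sum(pooled[i] for i in indices_a)
        return Fraction(sum_a, n_a) - Fraction(grand_total - sum_a, n_b)

    observed = mean_diff(range(n_a))  # the split that was actually observed
    hits = 0
    splits = 0
    for indices_a in combinations(range(len(pooled)), n_a):
        d = mean_diff(indices_a)
        splits += 1
        if directional:
            if d >= observed:
                hits += 1
        elif abs(d) >= abs(observed):
            hits += 1
    return float(observed), hits / splits


# Hypothetical recall scores: studying with classical music vs. in silence.
music = [9, 11, 10, 12, 13]
silence = [8, 9, 7, 10, 9]
diff, p = two_group_permutation_test(music, silence, directional=True)
print(f"mean difference = {diff:.2f}, one-tailed p = {p:.4f}")
```

A small p-value says the observed difference would rarely arise from random relabelling alone, which counts as evidence against the null hypothesis; it does not prove the alternative hypothesis. The number of splits grows combinatorially with group size, so for realistic samples a random subset of splits would usually be drawn instead of enumerating them all.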


A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (‘x affects y because …’).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study, the statistical hypotheses correspond logically to the research hypothesis.
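As an illustration, the research hypothesis that students recall more on Monday morning than on Friday afternoon could be expressed as a pair of statistical hypotheses about population means. Here μ_Mon and μ_Fri are notation chosen for this sketch, standing for the mean recall of the whole student population in each session; only one of the two alternative hypotheses would be stated, depending on whether the prediction is directional:

$$
\begin{aligned}
H_0 &: \mu_{\text{Mon}} = \mu_{\text{Fri}} \\
H_1 &: \mu_{\text{Mon}} > \mu_{\text{Fri}} && \text{(directional, one-tailed)} \\
H_1 &: \mu_{\text{Mon}} \neq \mu_{\text{Fri}} && \text{(non-directional, two-tailed)}
\end{aligned}
$$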

Cite this Scribbr article


McCombes, S. (2022, May 06). How to Write a Strong Hypothesis | Guide & Examples. Scribbr. Retrieved 9 April 2024, from https://www.scribbr.co.uk/research-methods/hypothesis-writing/



Overview of the Scientific Method

Learning Objectives

- Distinguish between a theory and a hypothesis.

- Discover how theories are used to generate hypotheses and how the results of studies can be used to further inform theories.

- Understand the characteristics of a good hypothesis.

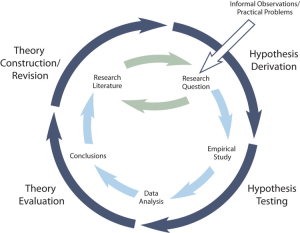

Theories and Hypotheses

Before describing how to develop a hypothesis, it is important to distinguish between a theory and a hypothesis. A theory is a coherent explanation or interpretation of one or more phenomena. Although theories can take a variety of forms, one thing they have in common is that they go beyond the phenomena they explain by including variables, structures, processes, functions, or organizing principles that have not been observed directly. Consider, for example, Zajonc’s theory of social facilitation and social inhibition (1965) [1]. He proposed that being watched by others while performing a task creates a general state of physiological arousal, which increases the likelihood of the dominant (most likely) response. So for highly practiced tasks, being watched increases the tendency to make correct responses, but for relatively unpracticed tasks, being watched increases the tendency to make incorrect responses. Notice that this theory, which has come to be called drive theory, provides an explanation of both social facilitation and social inhibition that goes beyond the phenomena themselves by including concepts such as “arousal” and “dominant response,” along with processes such as the effect of arousal on the dominant response.

Outside of science, referring to an idea as a theory often implies that it is untested—perhaps no more than a wild guess. In science, however, the term theory has no such implication. A theory is simply an explanation or interpretation of a set of phenomena. It can be untested, but it can also be extensively tested, well supported, and accepted as an accurate description of the world by the scientific community. The theory of evolution by natural selection, for example, is a theory because it is an explanation of the diversity of life on earth—not because it is untested or unsupported by scientific research. On the contrary, the evidence for this theory is overwhelmingly positive and nearly all scientists accept its basic assumptions as accurate. Similarly, the “germ theory” of disease is a theory because it is an explanation of the origin of various diseases, not because there is any doubt that many diseases are caused by microorganisms that infect the body.

A hypothesis, on the other hand, is a specific prediction about a new phenomenon that should be observed if a particular theory is accurate. It is an explanation that relies on just a few key concepts. Hypotheses are often specific predictions about what will happen in a particular study. They are developed by considering existing evidence and using reasoning to infer what will happen in the specific context of interest. Hypotheses are often, but not always, derived from theories. Some hypotheses are atheoretical, and a theory is developed only after a set of observations has been made. This is because theories are broad in nature and explain larger bodies of data, so if a research question is truly original, we may need to collect some data and make some observations before we can develop a broader theory.

Theories and hypotheses always have this if-then relationship: “If drive theory is correct, then cockroaches should run through a straight runway faster, and a branching runway more slowly, when other cockroaches are present.” Although hypotheses are usually expressed as statements, they can always be rephrased as questions: “Do cockroaches run through a straight runway faster when other cockroaches are present?” Thus, deriving hypotheses from theories is an excellent way of generating interesting research questions.