Technology is Opportunity

A Practical Guide to CRISP-DM: What Is CRISP-DM?

At some point working in data science, it is common to come across CRISP-DM. I like to irreverently call it the Crispy Process. It is an old concept for data science that’s been around since the mid-1990s. This post is meant as a practical guide to CRISP-DM.

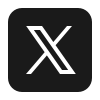

CRISP-DM stands for CRoss Industry Standard Process for Data Mining. The process model spans six phases meant to fully describe the data science life cycle.

- Business understanding

- Data understanding

- Data preparation

- Modeling

- Evaluation

- Deployment

This cycle comes off as an abstract process with little meaning if it cannot be grounded into some sort of practical example. That’s what this post is meant to be. The following is going to take a casual scenario appropriate for many Midwestern gardeners about this time of year.

What am I planting in my backyard this year?

It is a vague, urgent question reminiscent of many data science client problems. To answer it, we’re going to use the Crispy Process in this practical guide to CRISP-DM.

First, we need a “Business understanding”. What does the business (or in this case, gardener) need to know?

Next, we have to form “Data understanding”. So, what data is going to cover our first needs? What format do we need that data in?

With our data found, we need to do “Data preparation”. The data has to be organized and formatted so it can actually be used for whatever analysis we’re going to use for it.

The fourth phase is the sexy bit of data science, “Modeling”. There’s information in those hills! Er…data. But we need to apply algorithms to extract that information. I personally find the conventional title of this fourth phase to be somewhat confusing in contemporary data science. In colloquial conversations with fellow data professionals, I wouldn’t use “Modeling” but rather “Algorithm Design” for this part.

“Evaluation” time. We have information. As one of my former team leads would ask at this stage, “You have the what. So what?”

Now that you have something, it needs to be shared in the “Deployment” stage. Don’t ignore the importance of this stage!

I pick up on who is a new professional and who is a veteran by how they feel about this part of a project. Newbies have put so much energy into Modeling and Evaluation that Deployment is like an afterthought. Stop! It’s a trap!

For the rest of us, “Deployment” might as well be “What we’re actually being paid for”. I cannot stress enough that all the hours, sweat, and frustration of the previous phases will be for nothing if you do not get this part right.

Business understanding: What does the business need to know?

We have a basic question from our gardener.

To get a full understanding of what they need though in order to take action as a gardener to plant their backyard this year, we need to break this question down into more specific concrete questions.

Whenever I can, I want to learn as much as possible about the context of the client. This does not necessarily mean I want them to answer “What data do you want?” It is also important to keep a client steered away from preconceived notions of the end result of the project. Hypotheses can dangerously turn into premature predictions, and then disappointment when reality does not match those expectations.

Rather, it is important to appreciate what kind of piece you’re creating for the greater puzzle your client is putting together.

About the client

I am a Midwestern gardener myself so I’m going to be my own customer.

Gardening hobbyist who wants to understand the plants best suited for a given environment. Ideal environment to include would be the American Midwest, the client’s location. Their favorite color is red and they like the idea of bits of showy red in their backyard. Anything that is low maintenance is a plus.

This client would prefer to keep the data simple and shareable with other hobbyists. Whatever data we get should be verified for what it does and does not have, as the client is skeptical of a dataset’s true objectivity.

Data understanding: What data is going to cover our needs?

One trick I use to try to objectively break down complex scenarios in real life is to sift the business problem for distinct entities and use those to push my data requirements.

We can infer from the scenario that the minimum set of entities is the gardener and the plant. As the gardener is presumably a hobbyist and probably doesn’t have something like a greenhouse at their disposal, we can also presume that their backyard is another major entity, which is made of dirt and is a location. It is outside, so other entities at play include the weather. That is also dependent on location. Additionally, the client cares about a plant’s hardiness and color.

So we know we have at least the following issues to address:

- Gardener

- Plant

- Location

- Dirt

- Weather

- Plant Hardiness

- Plant Color

The Gardener is our client and is seeking to gain knowledge about what is outside their person. So we can discard them as an essential data point.

The plant can be anything. It is also the core of our client question. We should find data that is plant-centric for sure.

Location is essential because that can dictate the other entities I’m considering like Dirt and Weather. Our data should help us figure out these kinds of environmental factors.

Additionally, we need to find data that could help us find the color and hardiness of a plant.

There are many datasets for plants, especially for the US and UK. Our client is American so American-focused datasets will narrow our search.

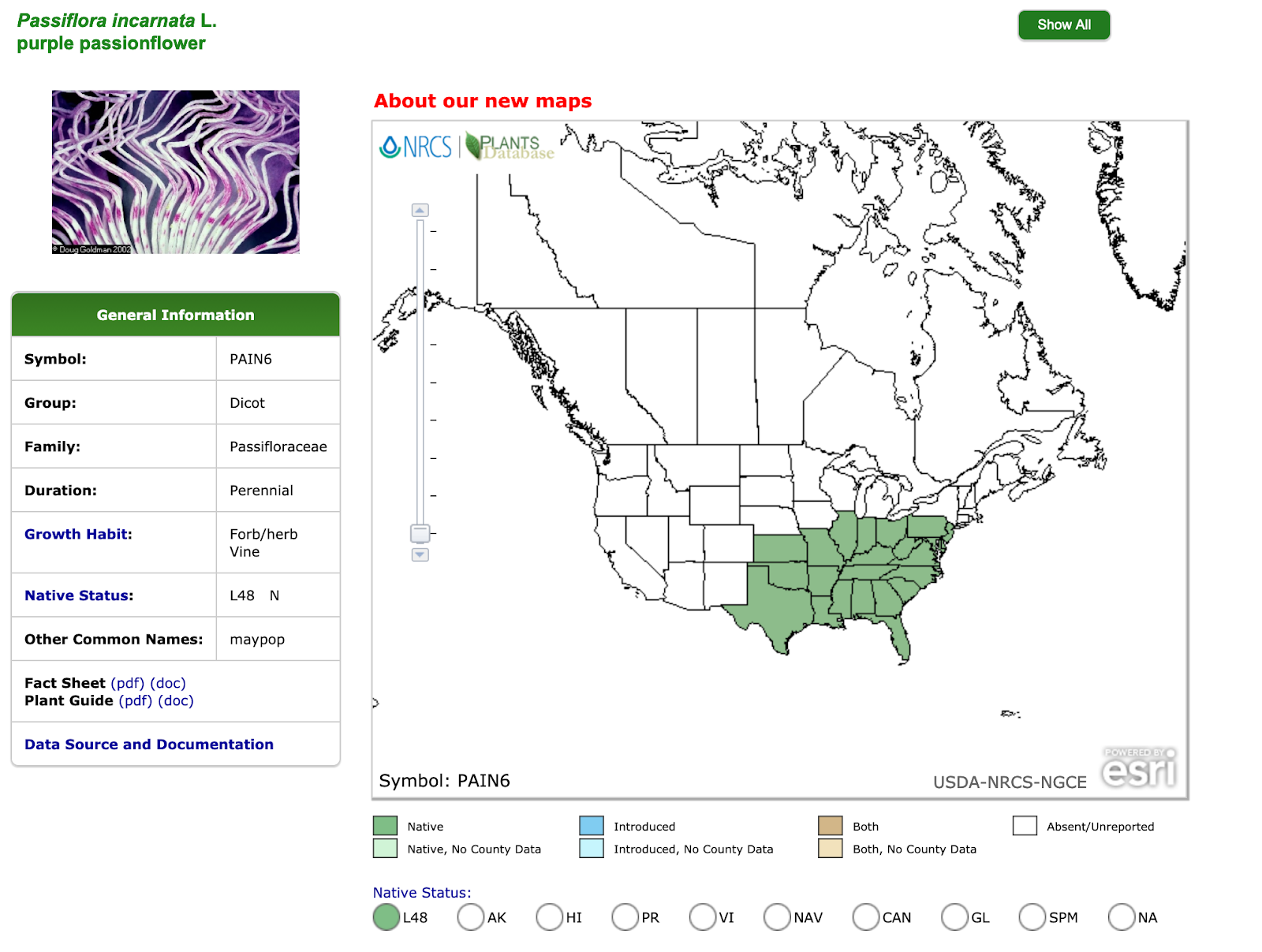

The dataset shown above has several issues with respect to our needs, though. One of the most glaring is location. While it does have state information, a single state in the United States can be larger than an entire country in many parts of the world. States can cover several types of geography, so our concern about issues like weather is not accounted for in this dataset.

Perhaps ironically, the USDA does have a measuring system for handling geographically-based plant growing environments, the USDA Plant Hardiness Zones.

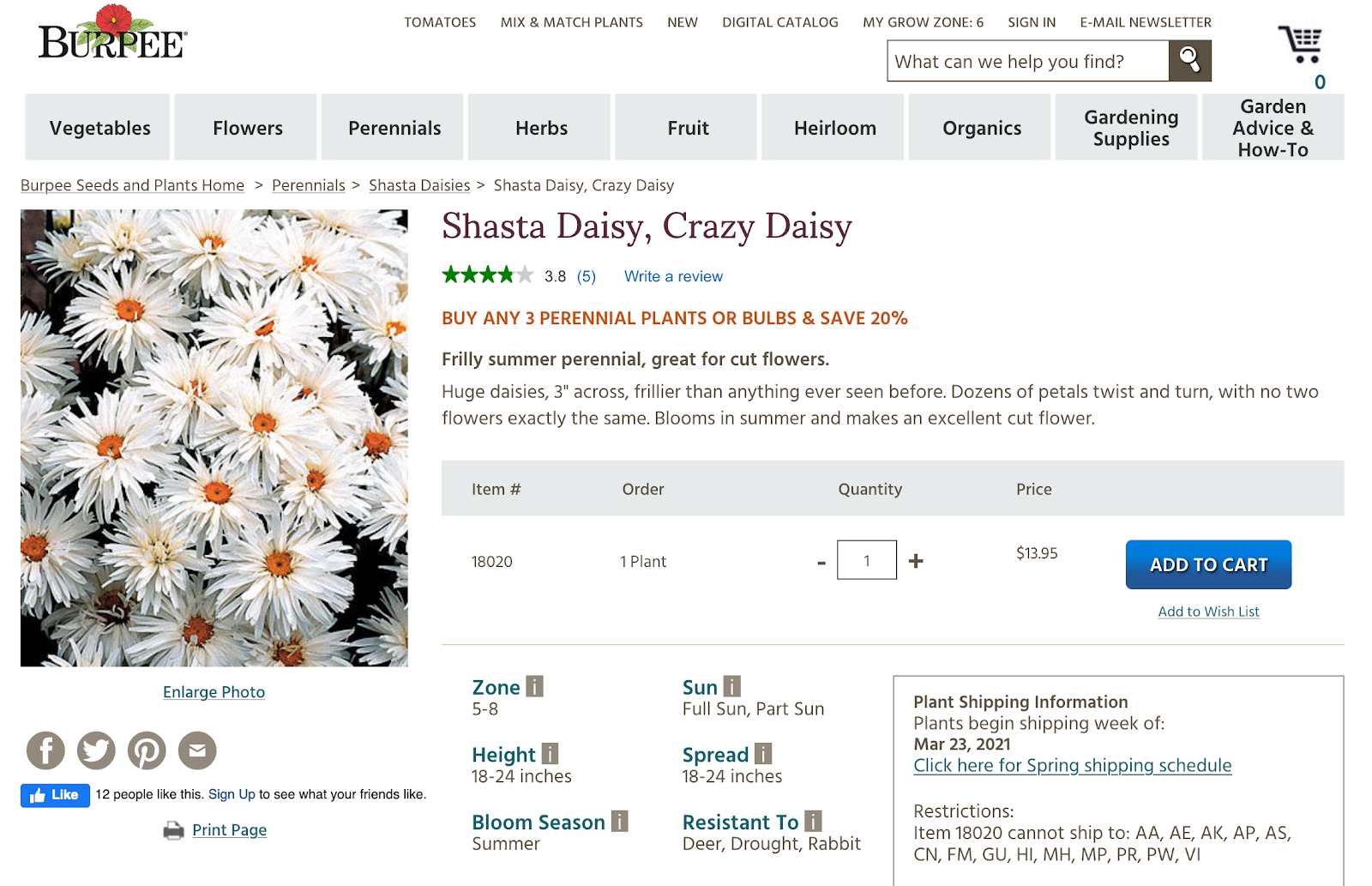

USDA Plant Hardiness Zones are so prevalent that they are what American gardeners typically use to shop for plants. Given that our client is an American gardener, it is going to be important to grab that information. Below is an example of an American plant store describing the hardiness zone for the plant listed.

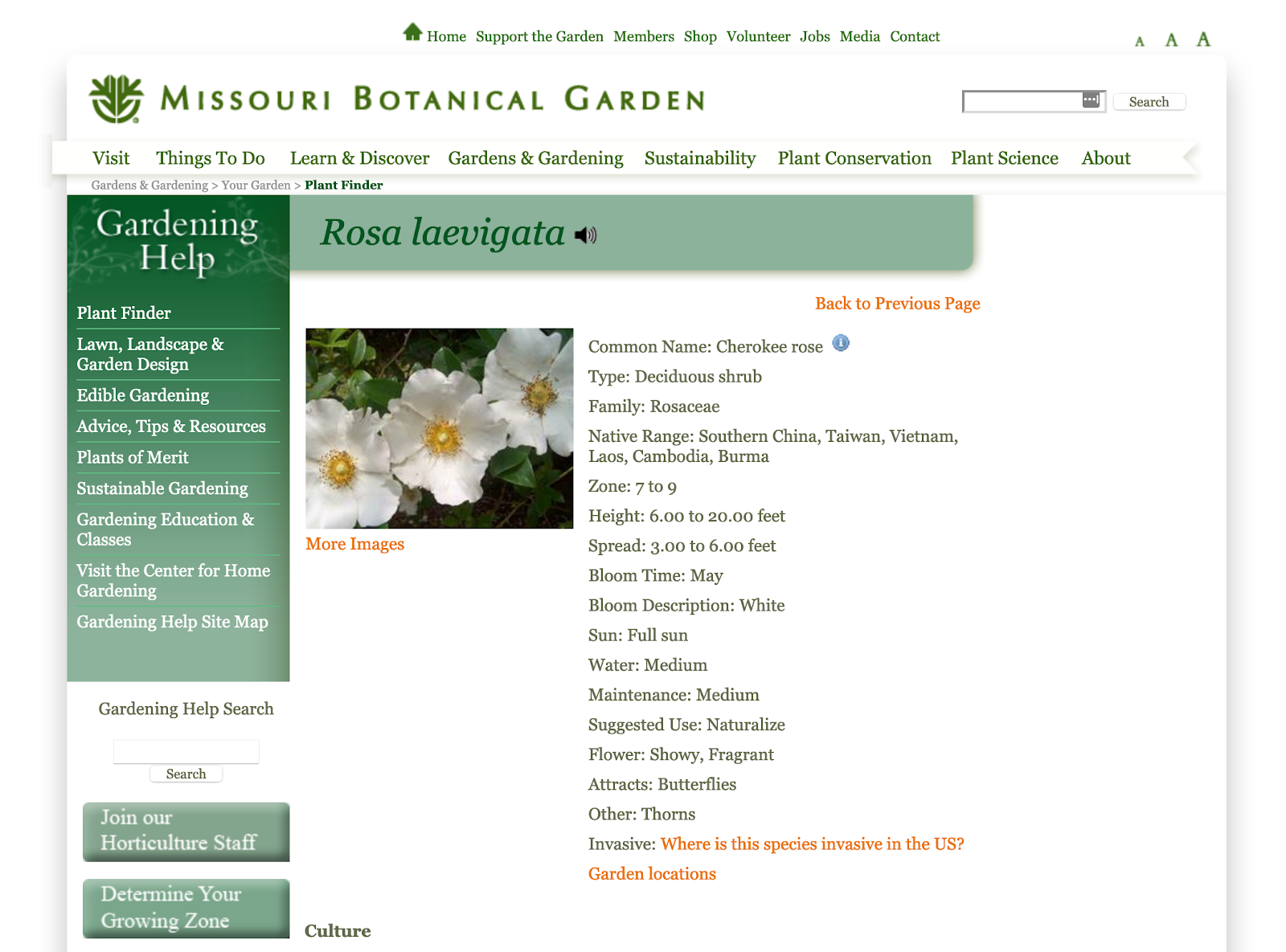

American institutions dedicated to plants and agriculture are not limited to just the federal government. In the Midwest, the Missouri Botanical Garden has its own plant database, which shows great promise.

The way the current data is set up, we could send this on to our client, but we have no way of helping them verify exactly what this dataset does and does not have. We only know what it could have (drought-resistant, flowering, etc), but not how many entries.
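One way to give the client that kind of verification, once the entries are in hand, is to count how many records actually fill in each field. The following is only a minimal sketch and not the code used for this post; the `records` structure (a list of dicts), the helper name `field_coverage`, and the sample values are all assumptions for illustration.

```python
from collections import Counter


def field_coverage(records):
    """Count how many records have a non-empty value for each field."""
    counts = Counter()
    for record in records:
        for field, value in record.items():
            if value is not None and str(value).strip():
                counts[field] += 1
    return counts


# Hypothetical sample entries; real entries would come from the extracted data.
sample = [
    {"Common Name": "Coneflower", "Zone": "3 to 8", "Tolerate": "Drought"},
    {"Common Name": "Peony", "Zone": "", "Tolerate": None},
]

coverage = field_coverage(sample)
for field in sorted(coverage):
    print(f"{field}: {coverage[field]} of {len(sample)} entries")
```

A report like this tells the client not just which fields could exist, but how often they are actually filled in.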

We’re going to have to extract this data out of MOBOT’s website and into a format we can explore in something like a Jupyter notebook.

Data preparation: How does the data need to be formatted?

Getting the data.

The clearest first step is that we need to get that data out of just MOBOT’s website.

Using Python, this is a straightforward process using the popular library, Beautiful Soup. The following presumes you are using some version of Python 3.x.

The first function we want to approach this is a systematic way of crawling all the individual webpages with plant entries. Luckily, for every letter in the Latin alphabet, MOBOT has web pages that use the following URL pattern:

https://www.missouribotanicalgarden.org/PlantFinder/PlantFinderListResults.aspx?letter=<LETTER>

So for every letter in the Latin alphabet, we can loop through all the links in all the webpages we need.

The following is how I tackled this need. To just go straight to the code, follow this link.

    import string

    import requests
    from bs4 import BeautifulSoup

    BASE_URL = "https://www.missouribotanicalgarden.org/PlantFinder/PlantFinderListResults.aspx?letter="
    LINK_ID_PREFIX = "MainContentPlaceHolder_SearchResultsList_TaxonName_"


    def find_mobot_links():
        # Save the plant-entry links found on each letter's results page.
        for letter in string.ascii_uppercase:
            page = requests.get(BASE_URL + letter)
            soup = BeautifulSoup(page.content, "html.parser")
            # Plant entries are anchors whose id starts with the prefix above.
            links = soup.find_all("a", id=lambda x: x and x.startswith(LINK_ID_PREFIX))
            # The mobot_entries/ directory must already exist.
            with open("mobot_entries/link_list_" + letter + ".csv", "w") as g:
                for link in links:
                    g.write(link.get("href") + "\n")

Now that we have the links we know we need, let’s visit them and extract data from them. Web page scraping is a process of trial and error. Web pages are diverse and often change. The following grabbed the data I needed and wanted from MOBOT but things can always change.

    import re
    import string
    import time

    import requests
    from bs4 import BeautifulSoup
    from bs4.dammit import EncodingDetector


    def scrape_and_save_mobot_links():
        for letter in string.ascii_uppercase:
            with open("./mobot_entries/link_list_" + letter + ".csv", "r") as f:
                for link_path in f:
                    # Strip the trailing newline before building the URL.
                    url = "https://www.missouribotanicalgarden.org" + link_path.strip()
                    html_page = requests.get(url)
                    # Prefer the encoding declared in the HTML, then the HTTP header.
                    http_encoding = html_page.encoding if "charset" in html_page.headers.get("content-type", "").lower() else None
                    html_encoding = EncodingDetector.find_declared_encoding(html_page.content, is_html=True)
                    encoding = html_encoding or http_encoding
                    soup = BeautifulSoup(html_page.content, "html.parser", from_encoding=encoding)
                    title = str(soup.title.string).replace(" – Plant Finder", "")
                    # Keep only word characters so the title is a safe file name.
                    file_name = re.sub(r"\W+", "", title)
                    with open("mobot_entries/scraped_results/" + file_name + ".txt", "w") as g:
                        g.write(title + "\n")
                        g.write(str(soup.find("div", {"class": "row"})))
                    print("finished " + file_name)
            # Pause before moving on to the next letter's list of links.
            time.sleep(5)

Side note: A small, basic courtesy is to avoid overloading websites serving the common good like MOBOT with a barrage of activity. That is why the timer is used in between every loop.
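If you want the pause to apply to every single request rather than to a larger batch, one option is to wrap the loop in a small generator. This is a sketch of that idea, not part of the original scraper; the helper name `throttled` and the example paths are made up, and the `sleep` parameter exists only so the pause can be swapped out when trying the code.

```python
import time


def throttled(items, delay_seconds=5, sleep=time.sleep):
    """Yield each item, pausing between items (but not before the first)."""
    for index, item in enumerate(items):
        if index > 0:
            sleep(delay_seconds)
        yield item


# Usage sketch: record the pauses instead of really sleeping.
pauses = []
for path in throttled(["/a", "/b", "/c"], delay_seconds=5, sleep=pauses.append):
    pass  # a real loop would request the page for this path here
```

With the default `sleep=time.sleep`, the same loop would wait five seconds between consecutive pages.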

Transforming the Data

With the data out and in our hands, we still need to bring it together in one convenient file that we can examine all at once using another Python library like pandas. The method is relatively straightforward and is also already on GitHub if you would like to just jump in.

Because our previous step got us almost everything we could possibly get from MOBOT’s Plant Finder, we can pick and choose just what columns we really want to deal with in a simple, flat csv file. You may notice the code allows for the near constant instances where a data column we want to fill in doesn’t have a value from a given plant. We just have to work with what we have.

Ultimately, the code pulls Attracts, Bloom Description, Bloom Time, Common Name, Culture, Family, Flower, Formal Name, Fruit, Garden Uses, Height, Invasive, Leaf, Maintenance, Native Range, Noteworthy Characteristics, Other, Problems, Spread, Suggested Use, Sun, Tolerate, Type, Water, and Zone.

That should get us somewhere!
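To make the "work with what we have" idea concrete, here is a minimal sketch of writing entries to a flat csv while leaving missing columns blank. It is not the code from the repository; the helper name `write_flat_csv`, the shortened column list, and the sample entries are assumptions for illustration.

```python
import csv
import io

# A few of the column names listed above; the full list works the same way.
COLUMNS = ["Common Name", "Formal Name", "Zone", "Maintenance", "Bloom Description"]


def write_flat_csv(records, out_file, columns=COLUMNS):
    """Write records as csv rows, leaving missing columns blank."""
    writer = csv.DictWriter(out_file, fieldnames=columns, restval="", extrasaction="ignore")
    writer.writeheader()
    for record in records:
        writer.writerow(record)


# Hypothetical parsed entries; the second one is missing most fields.
records = [
    {"Common Name": "Coneflower", "Zone": "3 to 8", "Maintenance": "Low"},
    {"Common Name": "Lily", "Height": "3.00 to 4.00 feet"},
]

buffer = io.StringIO()
write_flat_csv(records, buffer)
print(buffer.getvalue())
```

`restval=""` fills in blanks for columns an entry lacks, and `extrasaction="ignore"` drops fields we chose not to keep.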

Modeling: How are we extracting information out of the data?

I am afraid there isn’t going to be anything fancy happening here. I do not like doing anything complicated when it can be straightforward. In this case, we can be very straightforward. For the entirety of my data analysis process, I encourage you to go over to my Jupyter Notebook here for more: https://github.com/prairie-cybrarian/mobot_plant_finder/blob/master/learn_da_mobot.ipynb
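To give a flavor of how straightforward this can be, here is a sketch of the kind of filter the client’s wishes suggest: red blooms, low maintenance, and a hardiness zone that fits. The notebook is the real analysis; this is only an illustration, and the value formats (“3 to 8”, “Low”), the client’s zone (5), and the helper names are all assumptions.

```python
import re


def zone_range(zone_text):
    """Parse a zone string such as '3 to 8' into (low, high); None if unparseable."""
    numbers = [int(n) for n in re.findall(r"\d+", zone_text or "")]
    if not numbers:
        return None
    return min(numbers), max(numbers)


def matches_client(plant, client_zone=5):
    """True for low-maintenance plants with red blooms that suit the client's zone."""
    zones = zone_range(plant.get("Zone"))
    if zones is None or not zones[0] <= client_zone <= zones[1]:
        return False
    bloom = (plant.get("Bloom Description") or "").lower()
    # Match "red" as a whole word so that words like "colored" do not count.
    is_red = re.search(r"\bred\b", bloom) is not None
    is_easy = (plant.get("Maintenance") or "").strip().lower() == "low"
    return is_red and is_easy


# Hypothetical rows in the shape of the flat csv.
plants = [
    {"Common Name": "Plant A", "Zone": "3 to 8", "Bloom Description": "Deep red", "Maintenance": "Low"},
    {"Common Name": "Plant B", "Zone": "7 to 10", "Bloom Description": "Red", "Maintenance": "Low"},
    {"Common Name": "Plant C", "Zone": "4 to 9", "Bloom Description": "Multi-colored", "Maintenance": "Low"},
    {"Common Name": "Plant D", "Zone": "4 to 9", "Bloom Description": "Red", "Maintenance": "High"},
]

shortlist = [p["Common Name"] for p in plants if matches_client(p)]
print(shortlist)
```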

The most important part is the results of our extracted information:

- Chinese Lilac (Syringa chinensis Red Rothomagensis)

- Common Lilac (Syringa vulgaris Charles Joly)

- Peony (Paeonia Zhu Sha Pan CINNABAR RED)

- Butterfly Bush (Buddleja davidii Monum PETITE PLUM)

- Butterfly Bush (Buddleja davidii PIIBDII FIRST EDITIONS FUNKY …)

- Blanket Flower (Gaillardia Tizzy)

- Coneflower (Echinacea Emily Saul BIG SKY AFTER MIDNIGHT)

- Miscellaneous Tulip (Tulipa Little Beauty)

- Coneflower (Echinacea Meteor Red)

- Blanket Flower (Gaillardia Frenzy)

- Lily (Lilium Barbaresco)

Additionally, we have a simple csv we can hand over to the client. I will admit that, as far as clients go, I am easy. It is almost like they can read my mind.

Evaluation: You have the what. So what?

In some cases, this step is simply done. We have answered the client’s question. We have addressed the client’s needs.

Yet, we can still probably do a little more. In the hands of a solid sales team, this is the time for the upsell. Otherwise, we are in scope-creep territory.

Since I have a good relationship with my client (me), I’m going to at least suggest the following next steps.

Things you can now do with these new answers:

- Cross-reference soil preferences of our listed flowers with the actual location of the garden using the USDA Soil Survey data (https://websoilsurvey.sc.egov.usda.gov/App/HomePage.htm).

- Identify potential consumer needs of the client in order to find and suggest seed or plant sources for them to purchase the listed flowers.

Deployment: Make your findings known

Personal experience has shown me that deployment is largely an exercise in client empathy. Final delivery can look like so many things. Maybe it is a giant blog post. Maybe it is a PDF or a PowerPoint. So long as you deliver in a format that works for your user, it does not matter. All that matters is that it works.

Adapting the CRISP-DM Data Mining Process: A Case Study in the Financial Services Domain

- Conference paper

- First Online: 08 May 2021


- Veronika Plotnikova,

- Marlon Dumas &

- Fredrik Milani

Part of the book series: Lecture Notes in Business Information Processing ((LNBIP,volume 415))

Included in the following conference series:

- International Conference on Research Challenges in Information Science


Data mining techniques have gained widespread adoption over the past decades, particularly in the financial services domain. To achieve sustained benefits from these techniques, organizations have adopted standardized processes for managing data mining projects, most notably CRISP-DM. Research has shown that these standardized processes are often not used as prescribed, but instead, they are extended and adapted to address a variety of requirements. To improve the understanding of how standardized data mining processes are extended and adapted in practice, this paper reports on a case study in a financial services organization, aimed at identifying perceived gaps in the CRISP-DM process and characterizing how CRISP-DM is adapted to address these gaps. The case study was conducted based on documentation from a portfolio of data mining projects, complemented by semi-structured interviews with project participants. The results reveal 18 perceived gaps in CRISP-DM alongside their perceived impact and mechanisms employed to address these gaps. The identified gaps are grouped into six categories. The study provides practitioners with a structured set of gaps to be considered when applying CRISP-DM or similar processes in financial services. Also, a number of the identified gaps are generic and applicable to other sectors with similar concerns (e.g. privacy), such as telecom and e-commerce.


KDD - Knowledge Discovery in Databases; SEMMA - Sample, Explore, Modify, Model, and Assess; CRISP-DM - Cross-Industry Process for Data Mining.

The protocol is available at: https://figshare.com/s/33c42eda3b19784e8b21 .

A recently introduced EU legislation to safeguard customer data.


Author information

Authors and affiliations.

Institute of Computer Science, University of Tartu, Narva mnt 18, 51009, Tartu, Estonia

Veronika Plotnikova, Marlon Dumas & Fredrik Milani


Corresponding author

Correspondence to Veronika Plotnikova.

Editor information

Editors and affiliations.

Conservatoire National des Arts et Métiers, Paris, France

Samira Cherfi

Fondazione Bruno Kessler, Trento, Italy

Anna Perini

University Paris 1 Panthéon-Sorbonne, Paris, France

Selmin Nurcan


Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper.

Plotnikova, V., Dumas, M., Milani, F. (2021). Adapting the CRISP-DM Data Mining Process: A Case Study in the Financial Services Domain. In: Cherfi, S., Perini, A., Nurcan, S. (eds) Research Challenges in Information Science. RCIS 2021. Lecture Notes in Business Information Processing, vol 415. Springer, Cham. https://doi.org/10.1007/978-3-030-75018-3_4


DOI : https://doi.org/10.1007/978-3-030-75018-3_4

Published : 08 May 2021

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-75017-6

Online ISBN : 978-3-030-75018-3

eBook Packages : Computer Science Computer Science (R0)


Using the CRISP-DM framework for data driven projects


Many data and analytics projects include numerous processing phases, such as data cleaning, data preparation, modelling, and evaluation. Completing these projects can take weeks, if not months. This means that it is essential to have a structure or regular method.

In this post, we will discuss how the CRISP-DM framework can be used to establish new data-driven projects or be used as the life cycle to follow during the monitoring of any simple or complex analytical projects.

The CRISP-DM framework

The CRISP-DM ( Cross Industry Standard Process for Data Mining ) framework is a standard process or framework for solving analytical problems. The framework is comprised of a six-phase workflow and was designed to be flexible, so it suits a wide variety of projects. An example of this is that the framework actively encourages you to move back to previous stages when needed.

The CRISP-DM framework or methodology is commonly used as an open standard strategy for developing methodological business proposals for big data projects regardless of domain or destination. The model also provides opportunities for software platforms that help perform or augment some of these tasks.

To help understand the approach before diving into any analytical or technical descriptions, here is a graphic of the six-phase process. This helps illustrate how each phase assists us in planning, organising, and implementing our projects.

If we assume you're tackling a typical Big Data project, the procedure will go something like this:

- Business understanding - Define the project's objectives.

- Data understanding - Set the parameters for the data and data sources and determine whether the available data can meet the project's objectives and how you'll achieve them.

- Data preparation - Apply data transformations where they are required for the Big Data process.

- Modelling - Choose, build and execute the algorithms that meet the project's goals.

- Evaluation - The findings are examined, reviewed and aligned against the objectives.

- Deployment - The model is launched, people are informed and strategic decisions are made in response to the findings.

Let's take a closer look at each of the six stages:

Business understanding:

Understanding the objectives and the scope of the project is the framework's first step and clearly the most important. This involves learning and exploring the pain points and using them to construct a problem statement. For us, this is usually a client's issues, problems, strategic/tactical/operational plans, goals etc. We can then use the problem statement to dive into the data and gain a better understanding of the business.

As an example, consider the case of a vintner (wine maker) who needs to estimate the quality of his wine. He might be interested in forecasting a wine's quality based on its characteristics. To be more specific, we could be attempting to respond to the following business questions:

- What are the different types of wines offered at the store?

- Can a wine's price influence its quality?

- Can the quality of a wine be predicted with fair accuracy based on its attributes?

Data understanding:

The framework's second step focuses on gathering and understanding data, which lets us get a feel for the data set. The data comes from multiple places and is typically both structured and unstructured. This data must be helpful and easy to comprehend, and oftentimes in this stage, some exploratory analysis is done to aid in deciding whether or not the data found can be used to help solve the business challenge. Returning to the business understanding phase can be fruitful here when trying to understand the data, the problem, what we are looking for and what we are trying to model.

To continue our theme, consider the wine quality dataset from the UCI Machine Learning Repository. Take a moment to read it and come to your own conclusions: does the data set give us the information we need? Are the questions we are asking relevant to the overall goal?

To proceed past this phase, we must be able to explain the data set's contents and research insights to acquire a better understanding. To do this we can even create visualisation graphs to help us better comprehend data trends and acquire more valuable insights.

Data preparation:

The most intensive and time-consuming process is data preparation. The data needs to have everything that's needed to answer the business questions, and getting input from stakeholders can help to identify the variables to explore, all in an attempt to indicate which models in the next phase should be used to derive actionable insights. In general, the data isn't always as clean or as useful as one would hope. As a result, data cleaning and other activities are included in this phase. Some of the steps involved are:

- Cleaning the data - Removing incorrect, corrupted or incorrectly formatted data, duplicates.

- Data integration - Dealing with issues like joining data sets, editing metadata, correcting small data inconsistencies like nomenclature.

- Filling the data - Imputing the values according to the dataset.

- Transforming the data - Applying transformations if any are required. Examples being: Generalisation of data, normalisation/scaling of attribute values, aggregation, feature construction.

- Data reduction - Making the data easy to handle whilst producing accurate results.

- Feature engineering - Creating the right features to improve the accuracy of the analytical model.
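A few of the steps above (cleaning, filling and transforming) can be sketched in plain Python. This is a minimal illustration under stated assumptions rather than a recommended pipeline: the `prepare` helper, the field names and the wine values are made up, mean imputation and min-max scaling are just one choice among many, and the field is assumed to have at least one observed value.

```python
def prepare(rows, numeric_field):
    """Drop duplicate rows, impute missing values with the mean, then min-max scale."""
    # Cleaning: remove exact duplicates while keeping the original order.
    seen = set()
    unique_rows = []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique_rows.append(dict(row))

    # Filling: replace missing values with the mean of the observed ones.
    observed = [r[numeric_field] for r in unique_rows if r[numeric_field] is not None]
    mean = sum(observed) / len(observed)
    for r in unique_rows:
        if r[numeric_field] is None:
            r[numeric_field] = mean

    # Transforming: scale the field to the range [0, 1].
    low = min(r[numeric_field] for r in unique_rows)
    high = max(r[numeric_field] for r in unique_rows)
    span = (high - low) or 1.0
    for r in unique_rows:
        r[numeric_field] = (r[numeric_field] - low) / span
    return unique_rows


# Hypothetical wine records; the field name and values are made up.
raw = [
    {"name": "A", "alcohol": 10.0},
    {"name": "A", "alcohol": 10.0},   # duplicate
    {"name": "B", "alcohol": None},   # missing value
    {"name": "C", "alcohol": 14.0},
]

clean = prepare(raw, "alcohol")
print(clean)
```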

Modelling:

This is one of the most important phases at the heart of a data analytics project. This is where the various modelling techniques are chosen and implemented. Data modelling primarily involves two tasks:

- Understanding the scope of the problem and selecting appropriate models to solve it.

- Choosing the right techniques that will predict the target most accurately.

The techniques can involve clustering, predictive models, classification, estimations and others. Even combinations of options can be used to find the most accurate prediction. To get this right, we can break down the two tasks into the following steps:

- The first step is to choose the modelling technique or the series of modelling techniques that are going to be used.

- Following that is a training and testing scenario to validate the quality of the techniques used.

- Afterwards, the tuning and optimisation of multiple models will be completed based on the predictions.

- Finally, the models will be evaluated to ensure that they align with business objectives.
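The steps above can be sketched as a tiny experiment: split the data, fit more than one candidate, and compare them on held-out rows. This is an illustration only; the helper names, the made-up data, the choice of a mean baseline versus a one-feature least-squares line, and RMSE as the comparison metric are all assumptions.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and split them into train and test lists."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def fit_mean(train):
    """Baseline model: always predict the mean target seen in training."""
    mean_y = sum(y for _, y in train) / len(train)
    return lambda x: mean_y


def fit_line(train):
    """Simple linear regression (least squares) on one feature."""
    n = len(train)
    mean_x = sum(x for x, _ in train) / n
    mean_y = sum(y for _, y in train) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in train)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in train)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x


def rmse(model, rows):
    """Root mean square error of a model on (x, y) rows."""
    return (sum((model(x) - y) ** 2 for x, y in rows) / len(rows)) ** 0.5


# Made-up (feature, quality) pairs that follow y = 2x + 1 exactly.
data = [(float(x), 2.0 * x + 1.0) for x in range(20)]
train, test = train_test_split(data)

candidates = {"mean baseline": fit_mean(train), "linear": fit_line(train)}
scores = {name: rmse(model, test) for name, model in candidates.items()}
best = min(scores, key=scores.get)
print(best, scores)
```

Keeping a trivial baseline among the candidates makes it obvious when a more elaborate technique is not actually earning its keep.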

Evaluation:

This is the framework's fifth step. This phase uses multiple statistical measures, including the ROC curve, root mean square error, accuracy, the confusion matrix, and other model evaluation metrics. These help discover whether the model answers only some facets of the questions asked, whether the questions need editing, whether there are things that aren't accounted for, and so on. If these kinds of issues appear, there might be a need to move back to a previous stage to ensure the project is ready for the deployment phase.

This sounds similar to some of the steps in the modelling phase, doesn't it? However, even though we mentioned evaluation in the modelling phase, the modelling and evaluation phases are separate, and both test models until they produce relevant results. Rather than reviewing just for quality and alignment with current business goals, the evaluation phase can also be used to look at the whole progress so far and evaluate whether everything is on track or whether the analysis and business goals are actually something else.
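Two of the metrics named in this phase, the confusion matrix and accuracy, are simple enough to sketch by hand. The labels below are made up, and the helper names are assumptions for illustration; in practice a library implementation would normally be used.

```python
from collections import Counter


def confusion_matrix(actual, predicted):
    """Count (actual, predicted) label pairs."""
    return Counter(zip(actual, predicted))


def accuracy(actual, predicted):
    """Fraction of predictions that match the actual labels."""
    correct = sum(a == p for a, p in zip(actual, predicted))
    return correct / len(actual)


# Made-up labels for six wines.
actual = ["good", "good", "bad", "bad", "good", "bad"]
predicted = ["good", "bad", "bad", "bad", "good", "good"]

matrix = confusion_matrix(actual, predicted)
print(matrix[("good", "good")], matrix[("good", "bad")])
print(matrix[("bad", "good")], matrix[("bad", "bad")])
print(accuracy(actual, predicted))
```

The matrix shows where the model is wrong (good wines called bad, or the reverse), which a single accuracy figure hides.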

Deployment:

This is the framework's last step. Now that the model is accurate, reliable and insightful, data can be fed into it. Then we can decide how the results will be used. Commonly they are used to inform business strategies, tactics, actions and operations. The work doesn't end here though: deployment requires careful thought, launch planning, implementation, informing the right stakeholders and ensuring that they understand. There are a variety of methods to help the roll-out when it's ready to be deployed:

- Traditional methods using schedulers.

- Tools such as Flask, Heroku, GCP, AWS, Azure, Kubernetes etc.

What do you get out of this approach?

Now we have given a brief intro to the approach. Here are the primary benefits of using the CRISP-DM approach for Big Data projects:

Flexibility

No team can escape every hazard and misstep at the start of a project. Teams frequently suffer from a lack of topic knowledge or inefficient data evaluation models when beginning a project. As a result, a project's success is contingent on a team's ability to rethink its approach and improve the technological methods it employs. This is where the CRISP-DM approach's flexibility is valuable: it helps teams refine their hypotheses and data analysis approaches both in the short term and over time.

Long-term planning

The CRISP-DM approach allows for creating a long-term plan based on short iterations at the beginning of a project. A team can construct a basic and simple model cycle during the initial iterations, which can be easily improved in subsequent iterations. This idea allows a previously designed strategy to be improved after new facts and insights are obtained.

Functional templates

The ability to construct functional templates for project management procedures is a huge benefit of employing a CRISP-DM methodology. The easiest method to get the most out of CRISP-DM is to create precise checklists for every phase of the process. Applying this to the CRISP-DM approach makes it simple to implement and requires less extensive training, fewer organisational role changes, and less controversy.

To summarise

The CRISP-DM approach brings flexibility, long-term planning, and the ability to template and repeat. With little need for extensive training or controversy, this framework is ideal for those looking to implement any new, simple or complex data-driven projects. That's why we see results when implementing the framework both internally and with our clients, and why we are confident that we can develop solutions for your data-driven strategies, solve issues and deliver successful projects, be that improving customer experiences, driving revenue, optimising your data practices or helping you make better use of existing legacy infrastructure.


James Parks


Scaling Data Science: How We Use CRISP-DM and Agile


October 26, 2018


Most mature software teams acknowledge that guiding methodologies are powerful and, if followed, can help steer a team towards excellent results. In the software development world, agile is widely used and appreciated. But what most people don’t recognize is that data science, which often looks more like R&D than software development, also benefits from a guiding methodology. At AgileThought, we use the CRISP-DM methodology to build great data science products for our clients.

To explain how CRISP-DM applies, we’re going to use a fictional company called “Mega Subscription Co.,” which recently lost $100,000 in revenue due to customer attrition. Using CRISP-DM, they want to come up with a strategy to recover their lost revenue.

So, let’s talk about how—and why—the CRISP-DM methodology works:

But First, What is CRISP-DM?

The CRISP-DM methodology—which stands for Cross-Industry Standard Process for Data Mining—is a product of a European Union funded project to codify the data mining process. Just as the agile mindset informs an iterative software development process, CRISP-DM conceptualizes data science as a cyclical process. And, while the cycle focuses on iterative progress, the process doesn’t always flow in a single direction. In fact, each step may cause the process to revert to any previous step, and often, the steps can run in parallel.

The cycle consists of the following phases:

Business Understanding: During this phase, the business objective(s) should be framed as one or more data science objectives, and there should be a clear understanding of how potential data science tasks fit within a broader system or process.

So, as we mentioned earlier, Mega Subscription Co. has a business objective to recover $100,000 of lost revenue caused by customer attrition. They might frame their data science objectives as 1.) predict the probability that a given customer will leave and 2.) predict the value of a given customer. Knowing this, we would score customers by their likelihood of leaving and by the potential revenue loss that will result.

Now that we’ve framed our data science objectives, we need to consider how they fit within the overall system. Once we know which customers are likely to leave and of significant value to us, what do we do about it? Mega Subscription Co. could plan to contact the top 100 scoring customers on a monthly basis with an incentive to stay. In addition to classifying customers, the data science solution in this scenario should also rank them and identify the target variable as the likelihood of a customer leaving in the next month.
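The scoring and ranking idea above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Customer` fields, the `expected_loss` helper and the choice to combine the two predictions by multiplying them are hypothetical, not something the article prescribes.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    customer_id: str
    churn_probability: float  # assumed output of a churn model, in [0, 1]
    monthly_value: float      # assumed output of a customer-value model, in dollars


def expected_loss(customer: Customer) -> float:
    """Revenue at risk: one simple way (an assumption) to combine both objectives."""
    return customer.churn_probability * customer.monthly_value


def top_customers_to_contact(customers: list[Customer], n: int = 100) -> list[Customer]:
    """Rank customers by revenue at risk and keep the n highest-scoring ones."""
    return sorted(customers, key=expected_loss, reverse=True)[:n]


if __name__ == "__main__":
    sample = [
        Customer("a", 0.9, 10.0),
        Customer("b", 0.2, 100.0),
        Customer("c", 0.5, 30.0),
    ]
    for c in top_customers_to_contact(sample, n=2):
        print(c.customer_id, expected_loss(c))
```

Note that a customer who is very likely to leave but worth little can rank below a valuable customer with a modest churn risk, which is the point of scoring on both dimensions.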

Data Understanding: Now that we’ve defined the data science objectives, do we know what data we need? And do we know what it takes to acquire that data (required credentials, maintenance of custom ETL scripts, etc.)? During this phase, we should consider the type, quantity and quality of the data that we have on hand today, as well as the data that’s worth investing in for the future. For Mega Subscription Co., we may discover historical billing data that tells us which customers stayed versus left, as well as the dollar value of each customer. However, we may realize we don’t have data that suggests what incentive we could offer customers to convince them to stay. In the event this happens, we could apply A/B testing to various incentives to see which one produces a better customer response.

Data Preparation: During this phase, we transform our raw data (which can be images, text or tabular) into small floating-point numbers that can be used by our predictive modeling algorithms. For Mega Subscription Co., this means centering (making the mean of a continuous variable 0), scaling (making the standard deviation of a continuous variable 1), one-hot encoding, discretizing, windowing or otherwise transforming the historical billing data.
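As a minimal sketch of three of the transformations named above (centering, scaling and one-hot encoding), the following uses only the Python standard library. The function names and the sample billing values are made up for illustration; a real project would more likely rely on a data-preparation library.

```python
from statistics import mean, pstdev


def standardize(values: list[float]) -> list[float]:
    """Center to mean 0 and scale to (population) standard deviation 1."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def one_hot(categories: list[str]) -> tuple[list[list[float]], list[str]]:
    """Encode each category as a 0/1 vector; also return the column order."""
    levels = sorted(set(categories))
    rows = [[1.0 if c == level else 0.0 for level in levels] for c in categories]
    return rows, levels


if __name__ == "__main__":
    monthly_bill = [10.0, 20.0, 30.0]       # hypothetical billing amounts
    plan = ["basic", "premium", "basic"]    # hypothetical plan names
    print(standardize(monthly_bill))
    print(one_hot(plan))
```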

Modeling: Once our data is prepped, we can run experiments to see which modeling techniques work and which ones don’t. Using an experimental approach, we should pare down the number of candidate models applied over several iterations so that we arrive at the best alternative.

Evaluation: In this phase, we’ll measure the performance of our models and compare it with our performance goals to see how we did. For Mega Subscription Co., we’ll use the historical billing data to train our algorithm and then evaluate it using some test data and record a metric, like accuracy. To see how much better or worse our model is, we should compare our recorded metric with the same metric from a baseline model, such as one that always predicts that customers will stay. This would be a good time to inspect some of the errors the model is making to give us clues as to how we could improve it. In addition to individual performance measurement, we should measure our system’s performance by running it through testing scenarios to see if it could have saved some lost revenue.
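The comparison against a baseline can be sketched as follows. The labels below are invented for illustration (1 means the customer left, 0 means they stayed), and the `accuracy` helper is a hypothetical stand-in for whatever metric function a project actually uses.

```python
def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    # Made-up test labels: 1 = customer left, 0 = customer stayed.
    y_true = [0, 0, 0, 1, 1, 0, 0, 1, 0, 0]
    model_pred = [0, 0, 0, 1, 0, 0, 0, 1, 0, 1]
    baseline_pred = [0] * len(y_true)  # baseline: predict that everyone stays

    model_acc = accuracy(y_true, model_pred)
    baseline_acc = accuracy(y_true, baseline_pred)
    print(f"model={model_acc:.2f} baseline={baseline_acc:.2f} "
          f"lift={model_acc - baseline_acc:+.2f}")
```

When most customers stay, the "everyone stays" baseline already scores well on accuracy, so the lift over the baseline says more than the raw number.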

Deployment: In this phase, the models produced in the preceding phases should be ready for production use. Keep in mind, there is more to deployment than simply making predictions with the model. Aside from the obvious DevOps and engineering work to scale the prediction engine, we also need to consider collecting production metrics. For Mega Subscription Co., we need to evaluate performance on ‘live’ data, since our performance on test data is just our best proxy for live results. It’s also useful to slice and dice performance across various segments. This may reveal that our model is great for long-standing customers but terrible for relatively new customers.
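Slicing performance by segment might look like the sketch below. The segment names and the logged records are hypothetical; the only idea taken from the text is to compute the same metric separately for each group of customers.

```python
from collections import defaultdict


def accuracy_by_segment(records: list[tuple[str, int, int]]) -> dict[str, float]:
    """records holds (segment, true_label, predicted_label) triples."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for segment, y_true, y_pred in records:
        totals[segment] += 1
        hits[segment] += int(y_true == y_pred)
    return {segment: hits[segment] / totals[segment] for segment in totals}


if __name__ == "__main__":
    # Made-up production log: 1 = customer left, 0 = customer stayed.
    live = [
        ("long_standing", 1, 1), ("long_standing", 0, 0),
        ("long_standing", 0, 0), ("long_standing", 1, 1),
        ("new", 1, 0), ("new", 0, 1), ("new", 0, 0), ("new", 1, 0),
    ]
    for segment, acc in accuracy_by_segment(live).items():
        print(f"{segment}: {acc:.2f}")
```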

Why it Works

Generally, data science projects go hand-in-hand with traditional software development; however, while it’s tempting to completely integrate the two, I urge you to take a few things into consideration. For starters, data science is much more experimental in nature than software development; therefore, you’ll spend significant time and effort experimenting with new ideas. This may seem wasteful in software development, but it is essential for a successful data science project. It’s often advantageous to delay committing to a single data science technique, or to avoid it completely if possible. Instead, data science related components should be built around experimentation and designed for flexibility in case a better performing alternative becomes available. This also implies that the data science components should be de-coupled from traditional software components and linked via web service APIs that fulfill a ‘contract’ of what is to be sent and received. With these considerations in mind, the data science components can be interchanged (in real time, if necessary) so long as the ‘contract’ between them and the software components is met.
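To make the ‘contract’ idea concrete, here is a minimal in-process sketch. The article describes the link as a web service API; this example only models the request and response shapes and shows two interchangeable scorers behind them. All class names, fields and the scoring rules are hypothetical.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class ChurnRequest:
    """What the software component sends (assumed fields)."""
    customer_id: str
    months_subscribed: int


@dataclass(frozen=True)
class ChurnResponse:
    """What the data science component returns (assumed fields)."""
    customer_id: str
    churn_probability: float


class ChurnScorer(Protocol):
    """The contract: any scorer must accept a request and return a response."""
    def score(self, request: ChurnRequest) -> ChurnResponse: ...


class ConstantScorer:
    """Placeholder implementation used before any model exists."""
    def score(self, request: ChurnRequest) -> ChurnResponse:
        return ChurnResponse(request.customer_id, 0.5)


class TenureRuleScorer:
    """A later experiment: a toy rule that newer customers are riskier."""
    def score(self, request: ChurnRequest) -> ChurnResponse:
        probability = 0.8 if request.months_subscribed < 6 else 0.2
        return ChurnResponse(request.customer_id, probability)


def handle(scorer: ChurnScorer, request: ChurnRequest) -> dict:
    """Caller code depends only on the contract, not on a specific scorer."""
    response = scorer.score(request)
    return {"customer_id": response.customer_id,
            "churn_probability": response.churn_probability}


if __name__ == "__main__":
    request = ChurnRequest("c-42", months_subscribed=3)
    for scorer in (ConstantScorer(), TenureRuleScorer()):
        print(type(scorer).__name__, handle(scorer, request))
```

Because `handle` never names a concrete scorer, swapping one experiment for another does not require changes on the software side, which is the flexibility the paragraph above argues for.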

How We Use it

At AgileThought, we blend the ideas of CRISP-DM with those of agile. Our data science team members participate in the usual Scrum ceremonies (grooming, refinement, sprint planning, etc.). While the original user story may look familiar, the backlog items for data science end up looking significantly different from those of traditional software. For example, a user story for customer attrition might be “As a customer service representative, I want a monthly list of customers to contact with an incentive.” As we explained earlier with the Mega Subscription Co. example, which narrowed down its data science objectives to predicting likelihood of leaving and customer value, each of these objectives would need to be further broken down into testable experiments. For example, we may refine ‘predicting likelihood’ to ‘predict likelihood using logistic regression, random forest, or gradient-boosted tree algorithms, with a target F1 score of 0.75.’ To mark this story as done, the data scientist must evaluate these algorithms and record which algorithm produces the best performance score, or F1. Then, the result is binarized to one of two outcomes: success or failure. Assuming the best F1 from the experiment was 0.56 using logistic regression, we may decide to prioritize a story during the next Sprint to work on feature engineering so that we can improve our performance.
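The experiment story above can be sketched as a small script. The confusion-matrix counts below are invented so that logistic regression lands on the 0.56 mentioned in the text; the other two scores and the helper names are purely illustrative assumptions.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 is the harmonic mean of precision and recall: 2TP / (2TP + FP + FN)."""
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


def evaluate_story(results: dict[str, float], target: float) -> tuple[str, float, str]:
    """Pick the best candidate and binarize the story outcome against the target."""
    best_name = max(results, key=results.get)
    best_f1 = results[best_name]
    outcome = "success" if best_f1 >= target else "failure"
    return best_name, best_f1, outcome


if __name__ == "__main__":
    # Hypothetical (true positive, false positive, false negative) counts.
    counts = {
        "logistic_regression": (14, 10, 12),
        "random_forest": (12, 12, 14),
        "gradient_boosted_trees": (13, 12, 12),
    }
    results = {name: f1_score(*c) for name, c in counts.items()}
    name, best, outcome = evaluate_story(results, target=0.75)
    print(f"best={name} f1={best:.2f} outcome={outcome}")
```

A "failure" here is still a useful result: it records which candidate came closest and motivates the follow-up feature engineering story.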



- CRISPR-DM Case Study

- by Arga Adyatama


A Case Study of Evaluating Job Readiness with Data Mining Tools and CRISP-DM Methodology (data mining)

This paper analyses the use of data mining techniques in evaluating the job readiness of the unemployed population in Ireland. To effectively help a jobseeker enter or return to employment, it is necessary to develop a personal plan and provide them with suitable services. This report investigates how employment and further education needs are recognized among the customers of the Irish public employment services. Following the steps of the CRISP-DM methodology, it explores the characteristics of the group and attempts to identify the underlying pattern. Finally, after applying suitable mining techniques, it discusses whether the classification system with regard to job readiness can be automated.



