- Springer Nature - PMC COVID-19 Collection

Artificial intelligence in education: Addressing ethical challenges in K-12 settings

Selin Akgun

Michigan State University, East Lansing, MI USA

Christine Greenhow

Associated data.

Not applicable.

Artificial intelligence (AI) is a field of study that combines the applications of machine learning, algorithm productions, and natural language processing. Applications of AI transform the tools of education. AI has a variety of educational applications, such as personalized learning platforms to promote students’ learning, automated assessment systems to aid teachers, and facial recognition systems to generate insights about learners’ behaviors. Despite the potential benefits of AI to support students’ learning experiences and teachers’ practices, the ethical and societal drawbacks of these systems are rarely fully considered in K-12 educational contexts. The ethical challenges of AI in education must be identified and introduced to teachers and students. To address these issues, this paper (1) briefly defines AI through the concepts of machine learning and algorithms; (2) introduces applications of AI in educational settings and benefits of AI systems to support students’ learning processes; (3) describes ethical challenges and dilemmas of using AI in education; and (4) addresses the teaching and understanding of AI by providing recommended instructional resources from two providers—i.e., the Massachusetts Institute of Technology’s (MIT) Media Lab and Code.org. The article aims to help practitioners reap the benefits and navigate ethical challenges of integrating AI in K-12 classrooms, while also introducing instructional resources that teachers can use to advance K-12 students’ understanding of AI and ethics.

Introduction

“Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last, unless we learn how to avoid the risks.” — Stephen Hawking.

We may not think about artificial intelligence (AI) on a daily basis, but it is all around us, and we have been using it for years. When we are doing a Google search, reading our emails, getting a doctor’s appointment, asking for driving directions, or getting movie and music recommendations, we are constantly using the applications of AI and its assistance in our lives. This need for assistance and our dependence on AI systems has become even more apparent during the COVID-19 pandemic. The growing impact and dominance of AI systems reveals itself in healthcare, education, communications, transportation, agriculture, and more. It is almost impossible to live in a modern society without encountering applications powered by AI [ 10 , 32 ].

Artificial intelligence (AI) can be defined briefly as the branch of computer science that deals with the simulation of intelligent behavior in computers and their capacity to mimic, and ideally improve, human behavior [ 43 ]. AI dominates the fields of science, engineering, and technology, but also is present in education through machine-learning systems and algorithm productions [ 43 ]. For instance, AI has a variety of algorithmic applications in education, such as personalized learning systems to promote students’ learning, automated assessment systems to support teachers in evaluating what students know, and facial recognition systems to provide insights about learners’ behaviors [ 49 ]. Besides these platforms, algorithm systems are prominent in education through different social media outlets, such as social network sites, microblogging systems, and mobile applications. Social media are increasingly integrated into K-12 education [ 7 ] and subordinate learners’ activities to intelligent algorithm systems [ 17 ]. Here, we use the American term “K–12 education” to refer to students’ education in kindergarten (K) (ages 5–6) through 12th grade (ages 17–18) in the United States, which is similar to primary and secondary education or pre-college level schooling in other countries. These AI systems can increase the capacity of K-12 educational systems and support the social and cognitive development of students and teachers [ 55 , 8 ]. More specifically, applications of AI can support instruction in mixed-ability classrooms; while personalized learning systems provide students with detailed and timely feedback about their writing products, automated assessment systems support teachers by freeing them from excessive workloads [ 26 , 42 ].

Despite the benefits of AI applications for education, they pose societal and ethical drawbacks. As the famous scientist Stephen Hawking pointed out, weighing these risks is vital for the future of humanity. Therefore, it is critical to take action toward addressing them. The biggest risks of integrating these algorithms in K-12 contexts are: (a) perpetuating existing systemic bias and discrimination, (b) perpetuating unfairness for students from mostly disadvantaged and marginalized groups, and (c) amplifying racism, sexism, xenophobia, and other forms of injustice and inequity [ 40 ]. These algorithms do not occur in a vacuum; rather, they shape and are shaped by ever-evolving cultural, social, institutional and political forces and structures [ 33 , 34 ]. As academics, scientists, and citizens, we have a responsibility to educate teachers and students to recognize the ethical challenges and implications of algorithm use. To create a future generation where an inclusive and diverse citizenry can participate in the development of the future of AI, we need to develop opportunities for K-12 students and teachers to learn about AI via AI- and ethics-based curricula and professional development [ 2 , 58 ].

Toward this end, the existing literature provides little guidance and contains a limited number of studies that focus on supporting K-12 students’ and teachers’ understanding of social, cultural, and ethical implications of AI [ 2 ]. Most studies reflect university students’ engagement with ethical ideas about algorithmic bias, but few address how to promote students’ understanding of AI and ethics in K-12 settings. Therefore, this article: (a) synthesizes ethical issues surrounding AI in education as identified in the educational literature, (b) reflects on different approaches and curriculum materials available for teaching students about AI and ethics (i.e., featuring materials from the MIT Media Lab and Code.org), and (c) articulates future directions for research and recommendations for practitioners seeking to navigate AI and ethics in K-12 settings.

Next, we briefly define the notion of artificial intelligence (AI) and its applications through machine-learning and algorithm systems. As educational and educational technology scholars working in the United States, and at the risk of oversimplifying, we provide only a brief definition of AI below, and recognize that definitions of AI are complex, multidimensional, and contested in the literature [ 9 , 16 , 38 ]; an in-depth discussion of these complexities, however, is beyond the scope of this paper. Second, we describe in more detail five applications of AI in education, outlining their potential benefits for educators and students. Third, we describe the ethical challenges they raise by posing the question: “how and in what ways do algorithms manipulate us?” Fourth, we explain how to support students’ learning about AI and ethics through different curriculum materials and teaching practices in K-12 settings. Our goal here is to provide strategies for practitioners to reap the benefits while navigating the ethical challenges. We acknowledge that in centering this work within U.S. education, we highlight certain ethical issues that educators in other parts of the world may see as less prominent. For example, the European Union (EU) has highlighted ethical concerns and implications of AI, emphasized privacy protection, surveillance, and non-discrimination as primary areas of interest, and provided guidelines on how trustworthy AI should be [ 3 , 15 , 23 ]. Finally, we reflect on future directions for educational and other research that could support K-12 teachers and students in reaping the benefits while mitigating the drawbacks of AI in education.

Definition and applications of artificial intelligence

The pursuit of creating intelligent machines that replicate human behavior has accelerated with the realization of artificial intelligence. With the latest advancements in computer science, a proliferation of definitions and explanations of what counts as AI systems has emerged. For instance, AI has been defined as “the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings” [ 49 ]. This particular definition highlights the mimicry of human behavior and consciousness. Furthermore, AI has been defined as “the combination of cognitive automation, machine learning, reasoning, hypothesis generation and analysis, natural language processing, and intentional algorithm mutation producing insights and analytics at or above human capability” [ 31 ]. This definition incorporates the different sub-fields of AI together and underlines their function while reaching at or above human capability.

Combining these definitions, artificial intelligence can be described as the technology that builds systems to think and act like humans with the ability of achieving goals. AI is mainly known through different applications and advanced computer programs, such as recommender systems (e.g., YouTube, Netflix), personal assistants (e.g., Apple’s Siri), facial recognition systems (e.g., Facebook’s face detection in photographs), and learning apps (e.g., Duolingo) [ 32 ]. To build on these programs, different sub-fields of AI have been used in a diverse range of applications. Evolutionary algorithms and machine learning are most relevant to AI in K-12 education.

Algorithms are the core elements of AI. The history of AI is closely connected to the development of sophisticated and evolutionary algorithms. An algorithm is a set of rules or instructions that is to be followed by computers in problem-solving operations to achieve an intended end goal. In essence, all computer programs are algorithms. They involve thousands of lines of code which represent mathematical instructions that the computer follows to solve the intended problems (e.g., performing numerical calculations, processing an image, and grammar-checking an essay). AI algorithms are applied to fields that we might think of as essentially human behavior, such as speech and face recognition, visual perception, learning, and decision-making. In that way, algorithms can provide instructions for almost any AI system and application we can conceive [ 27 ].

Machine learning

Machine learning is derived from statistical learning methods and uses data and algorithms to perform tasks which are typically performed by humans [ 43 ]. Machine learning is about making computers act or perform without being given any line-by-line step [ 29 ]. The working mechanism of machine learning is the learning model’s exposure to ample amounts of quality data [ 41 ]. Machine-learning algorithms first analyze the data to determine patterns and to build a model and then predict future values through these models. In other words, machine learning can be considered a three-step process. First, it analyzes and gathers the data, and then, it builds a model to excel for different tasks, and finally, it undertakes the action and produces the desired results successfully without human intervention [ 29 , 56 ]. The widely known AI applications such as recommender or facial recognition systems have all been made possible through the working principles of machine learning.
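The three-step process described above can be illustrated with a deliberately small sketch. It assumes a hypothetical dataset of hours studied and quiz scores, and it uses ordinary least squares as a stand-in for the much larger models behind real recommender or facial recognition systems; the function names `fit_line` and `predict` are illustrative only.

```python
from statistics import mean

def fit_line(xs, ys):
    """Step 2: build a model (slope, intercept) by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def predict(model, x):
    """Step 3: use the learned model to predict a value for unseen input."""
    slope, intercept = model
    return slope * x + intercept

# Step 1: gather the data (hypothetical: hours studied -> quiz score).
hours = [1, 2, 3, 4, 5]
scores = [52, 61, 68, 79, 85]

model = fit_line(hours, scores)
print(round(predict(model, 6), 1))  # predicted score after 6 hours of study
```

No rule such as "each extra hour adds so many points" is written by hand; the relationship is estimated from the data, which is also why the quality of the data matters so much.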

Benefits of AI applications in education

Personalized learning systems, automated assessments, facial recognition systems, chatbots (social media sites), and predictive analytics tools are being deployed increasingly in K-12 educational settings; they are powered by machine-learning systems and algorithms [ 29 ]. These applications of AI have shown promise to support teachers and students in various ways: (a) providing instruction in mixed-ability classrooms, (b) providing students with detailed and timely feedback on their writing products, and (c) freeing teachers from the burden of possessing all knowledge and giving them more room to support their students while they are observing, discussing, and gathering information in their collaborative knowledge-building processes [ 26 , 50 ]. Below, we outline benefits of each of these educational applications in the K-12 setting before turning to a synthesis of their ethical challenges and drawbacks.

Personalized learning systems

Personalized learning systems, also known as adaptive learning platforms or intelligent tutoring systems, are one of the most common and valuable applications of AI to support students and teachers. They provide students access to different learning materials based on their individual learning needs and subjects [ 55 ]. For example, rather than practicing chemistry on a worksheet or reading a textbook, students may use an adaptive and interactive multimedia version of the course content [ 39 ]. Comparing students’ scores on researcher-developed or standardized tests, research shows that the instruction based on personalized learning systems resulted in higher test scores than traditional teacher-led instruction [ 36 ]. Microsoft’s recent report (2018) of over 2000 students and teachers from Singapore, the U.S., the UK, and Canada shows that AI supports students’ learning progressions. These platforms promise to identify gaps in students’ prior knowledge by accommodating learning tools and materials to support students’ growth. These systems generate models of learners using their knowledge and cognition; however, the existing platforms do not yet provide models for learners’ social, emotional, and motivational states [ 28 ]. Considering the shift to remote K-12 education during the COVID-19 pandemic, personalized learning systems offer a promising form of distance learning that could reshape K-12 instruction for the future [ 35 ].
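As a rough illustration of how an adaptive platform might identify gaps and choose what to present next, the sketch below keeps a per-skill mastery estimate and always selects the weakest skill. The skill names, the update rule, and the `rate` parameter are assumptions made for this example; commercial systems use far richer learner models.

```python
def update_mastery(mastery, skill, correct, rate=0.3):
    """Nudge the mastery estimate for one skill toward 1 (correct) or 0 (incorrect)."""
    target = 1.0 if correct else 0.0
    mastery[skill] += rate * (target - mastery[skill])

def next_skill(mastery):
    """Pick the skill with the lowest current mastery estimate."""
    return min(mastery, key=mastery.get)

# Hypothetical learner who starts with no information on any skill.
mastery = {"fractions": 0.5, "decimals": 0.5, "ratios": 0.5}
update_mastery(mastery, "fractions", correct=True)
update_mastery(mastery, "decimals", correct=False)
print(next_skill(mastery))  # the skill the learner would practice next
```

Note that this model only tracks knowledge; it says nothing about a learner's social, emotional, or motivational state, which matches the limitation noted above.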

Automated assessment systems

Automated assessment systems are becoming one of the most prominent and promising applications of machine learning in K-12 education [ 42 ]. These scoring algorithm systems are being developed to meet the need for scoring students’ writing, exams and assignments, and tasks usually performed by the teacher. Assessment algorithms can provide course support and management tools to lessen teachers’ workload, as well as extend their capacity and productivity. Ideally, these systems can provide levels of support to students, as their essays can be graded quickly [ 55 ]. Providers of the biggest open online courses such as Coursera and EdX have integrated automated scoring engines into their learning platforms to assess the writings of hundreds of students [ 42 ]. On the other hand, a tool called “Gradescope” has been used by over 500 universities to develop and streamline scoring and assessment [ 12 ]. By flagging the wrong answers and marking the correct ones, the tool supports instructors by eliminating their manual grading time and effort. Thus, automated assessment systems deal very differently with marking and giving feedback to essays compared to numeric assessments which analyze right or wrong answers on the test. Overall, these scoring systems have the potential to deal with the complexities of the teaching context and support students’ learning process by providing them with feedback and guidance to improve and revise their writing.

Facial recognition systems and predictive analytics

Facial recognition software is used to capture and monitor students’ facial expressions. These systems provide insights about students’ behaviors during learning processes and allow teachers to take action or intervene, which, in turn, helps teachers develop learner-centered practices and increase students’ engagement [ 55 ]. Predictive analytics algorithm systems are mainly used to identify and detect patterns about learners based on statistical analysis. For example, these analytics can be used to detect university students who are at risk of failing or not completing a course. Through these identifications, instructors can intervene and get students the help they need [ 55 ].
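A minimal sketch of the statistical idea behind such at-risk detection follows. It assumes hypothetical average course scores and flags any student whose score falls at least one standard deviation below the class mean; the cutoff and the function name `flag_at_risk` are illustrative choices, not a description of any deployed system.

```python
from statistics import mean, pstdev

def flag_at_risk(avg_scores, z_cutoff=-1.0):
    """Return names whose z-score is at or below z_cutoff, sorted alphabetically."""
    values = list(avg_scores.values())
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:  # everyone has the same score, so nobody stands out
        return []
    return sorted(
        name for name, score in avg_scores.items()
        if (score - mu) / sigma <= z_cutoff
    )

# Hypothetical average scores for five students.
course = {"Ada": 85, "Ben": 78, "Cem": 90, "Dee": 45, "Eli": 82}
print(flag_at_risk(course))
```

Even in this toy form, the flag depends entirely on which data are collected and where the cutoff is set, which is one reason such predictions raise the fairness questions discussed later in the article.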

Social networking sites and chatbots

Social networking sites (SNSs) connect students and teachers through social media outlets. Researchers have emphasized the importance of using SNSs (such as Facebook) to expand learning opportunities beyond the classroom, monitor students’ well-being, and deepen student–teacher relations [ 5 ]. Different scholars have examined the role of social media in education, describing its impact on student and teacher learning and scholarly communication [ 6 ]. They point out that the integration of social media can foster students’ active learning, collaboration skills, and connections with communities beyond the classroom [ 6 ]. Chatbots also appear in social media outlets through different AI systems [ 21 ]. They are also known as dialogue systems or conversational agents [ 26 , 52 ]. Chatbots are helpful in terms of their ability to respond naturally with a conversational tone. For instance, a text-based chatbot system called “Pounce” was used at Georgia State University to help students through the registration and admission process, as well as financial aid and other administrative tasks [ 7 ].

In summary, applications of AI can positively impact students’ and teachers’ educational experiences and help them address instructional challenges and concerns. On the other hand, AI cannot be a substitute for human interaction [ 22 , 47 ]. Students have a wide range of learning styles and needs. Although AI can be a time-saving and cognitive aide for teachers, it is but one tool in the teachers’ toolkit. Therefore, it is critical for teachers and students to understand the limits, potential risks, and ethical drawbacks of AI applications in education if they are to reap the benefits of AI and minimize the costs [ 11 ].

Ethical concerns and potential risks of AI applications in education

The ethical challenges and risks posed by AI systems seemingly run counter to marketing efforts that present algorithms to the public as if they are objective and value-neutral tools. In essence, algorithms reflect the values of their builders who hold positions of power [ 26 ]. Whenever people create algorithms, they also create a set of data that represent society’s historical and systemic biases, which ultimately transform into algorithmic bias. Even though the bias is embedded into the algorithmic model with no explicit intention, we can see various gender and racial biases in different AI-based platforms [ 54 ].

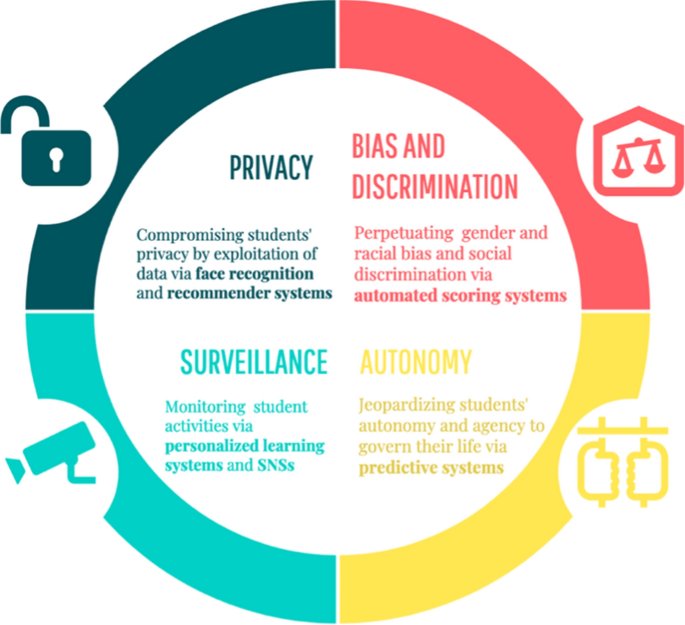

Considering the different forms of bias and ethical challenges of AI applications in K-12 settings, we will focus on problems of privacy, surveillance, autonomy, bias, and discrimination (see Fig. 1). However, it is important to acknowledge that educators will have different ethical concerns and challenges depending on their students’ grade and age of development. Where strategies and resources are recommended, we indicate the age and/or grade level of student(s) they are targeting (Fig. 2).

Fig. 1. Potential ethical and societal risks of AI applications in education

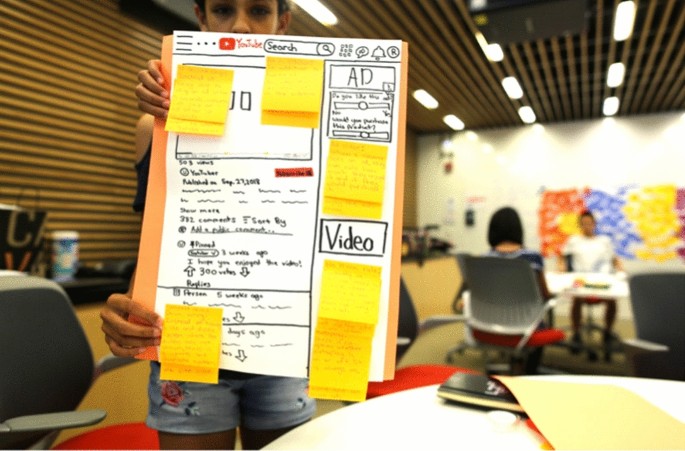

Fig. 2. Student work from the “YouTube Redesign” activity (MIT Media Lab, AI and Ethics Curriculum, p. 1, [ 45 ])

One of the biggest ethical issues surrounding the use of AI in K-12 education relates to the privacy concerns of students and teachers [ 47 , 49 , 54 ]. Privacy violations mainly occur as people expose an excessive amount of personal information in online platforms. Although legislation and standards exist to protect sensitive personal data, AI-based tech companies’ violations with respect to data access and security increase people’s privacy concerns [ 42 , 54 ]. To address these concerns, AI systems ask for users’ consent to access their personal data. Although consent requests are designed to be protective measures and to help alleviate privacy concerns, many individuals give their consent without knowing or considering the extent of the information (metadata) they are sharing, such as the language spoken, racial identity, biographical data, and location [ 49 ]. Such uninformed sharing in effect undermines human agency and privacy. In other words, people’s agency diminishes as AI systems reduce introspective and independent thought [ 55 ]. Relatedly, scholars have raised the ethical issue of forcing students and parents to use these algorithms as part of their education even if they explicitly agree to give up privacy [ 14 , 48 ]. They really have no choice if these systems are required by public schools.

Another ethical concern surrounding the use of AI in K-12 education is surveillance or tracking systems which gather detailed information about the actions and preferences of students and teachers. Through algorithms and machine-learning models, AI tracking systems not only necessitate monitoring of activities but also determine the future preferences and actions of their users [ 47 ]. Surveillance mechanisms can be embedded into AI’s predictive systems to foresee students’ learning performances, strengths, weaknesses, and learning patterns. For instance, research suggests that teachers who use social networking sites (SNSs) for pedagogical purposes encounter a number of problems, such as concerns in relation to boundaries of privacy, friendship authority, as well as responsibility and availability [ 5 ]. While monitoring and patrolling students’ actions might be considered part of a teacher’s responsibility and a pedagogical tool to intervene in dangerous online cases (such as cyber-bullying or exposure to sexual content), such actions can also be seen as surveillance systems which are problematic in terms of threatening students’ privacy. Monitoring and tracking students’ online conversations and actions also may limit their participation in the learning event and make them feel unsafe to take ownership for their ideas. How can students feel secure and safe, if they know that AI systems are used for surveilling and policing their thoughts and actions? [ 49 ].

Problems also emerge when surveillance systems trigger issues related to autonomy, more specifically, the person’s ability to act on her or his own interest and values. Predictive systems which are powered by algorithms jeopardize students’ and teachers’ autonomy and their ability to govern their own life [ 46 , 47 ]. Use of algorithms to make predictions about individuals’ actions based on their information raises questions about fairness and self-freedom [ 19 ]. Therefore, the risks of predictive analysis also include the perpetuation of existing bias and prejudices of social discrimination and stratification [ 42 ].

Finally, bias and discrimination are critical concerns in debates of AI ethics in K-12 education [ 6 ]. In AI platforms, the existing power structures and biases are embedded into machine-learning models [ 6 ]. Gender bias is one of the most apparent forms of this problem, as the bias is revealed when students in language learning courses use AI to translate between a gender-specific language and one that is less so. For example, while Google Translate translated the Turkish equivalent of “She/he is a nurse” into the feminine form, it also translated the Turkish equivalent of “She/he is a doctor” into the masculine form [ 33 ]. This shows how AI models in language translation carry the societal biases and gender-specific stereotypes in the data [ 40 ]. Similarly, a number of problematic cases of racial bias are also associated with AI’s facial recognition systems. Research shows that facial recognition software has misidentified a number of African American and Latino American people as convicted felons [ 42 ].

Additionally, biased decision-making algorithms reveal themselves throughout AI applications in K-12 education: personalized learning, automated assessment, SNSs, and predictive systems in education. Although the main promise of machine-learning models is increased accuracy and objectivity, current incidents have revealed the contrary. For instance, England’s A-level and GCSE secondary level examinations were cancelled due to the pandemic in the summer of 2020 [ 1 , 57 ]. An alternative assessment method was implemented to determine the qualification grades of students. The grade standardization algorithm was produced by the regulator Ofqual. After Ofqual’s algorithm assigned grades based on schools’ previous examination results, thousands of students were shocked to receive unexpectedly low grades. Although a full discussion of the incident is beyond the scope of this article [ 51 ], it revealed how the score distribution favored students who attended private or independent schools, while students from underrepresented groups were hit hardest. Unfortunately, automated assessment algorithms have the potential to reconstruct unfair and inconsistent results by disrupting students’ final scores and future careers [ 53 ].
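To make the mechanism concrete, the sketch below shows a heavily simplified, hypothetical form of grade standardization. It is not Ofqual’s actual algorithm: it only assumes that students are ranked within their school and that grades are handed out so the cohort matches the school’s historical grade shares. The function name, the student names, and the distributions are invented for illustration.

```python
def standardize(ranked_students, historical_distribution):
    """Assign grades by rank so the cohort mirrors the school's past results.

    ranked_students: names ordered from strongest to weakest (e.g., teacher ranking).
    historical_distribution: (grade, share) pairs, best grade first, shares summing to 1.
    """
    n = len(ranked_students)
    grades = {}
    cumulative = 0.0
    idx = 0
    for grade, share in historical_distribution:
        cumulative += share
        cutoff = round(cumulative * n)  # how many students may hold this grade or better
        while idx < cutoff and idx < n:
            grades[ranked_students[idx]] = grade
            idx += 1
    # Anyone left over because of rounding receives the lowest grade.
    for name in ranked_students[idx:]:
        grades[name] = historical_distribution[-1][0]
    return grades

cohort = ["Ana", "Ben", "Cem", "Dee"]  # the same four students, ranked identically
low_history = [("A", 0.0), ("B", 0.25), ("C", 0.75)]   # school that rarely earned top grades
high_history = [("A", 0.5), ("B", 0.5), ("C", 0.0)]    # school with a strong record

print(standardize(cohort, low_history))
print(standardize(cohort, high_history))
```

Under these assumptions the top-ranked student cannot receive an A at the first school, whatever her individual performance, because no earlier cohort there earned one. The student’s grade is determined by the school’s past rather than by her own work.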

Teaching and understanding AI and ethics in educational settings

These ethical concerns suggest an urgent need to introduce students and teachers to the ethical challenges surrounding AI applications in K-12 education and how to navigate them. To meet this need, different research groups and nonprofit organizations offer a number of open-access resources based on AI and ethics. They provide instructional materials for students and teachers, such as lesson plans and hands-on activities, and professional learning materials for educators, such as open virtual learning sessions. Below, we describe and evaluate three resources: “AI and Ethics” curriculum and “AI and Data Privacy” workshop from the Massachusetts Institute of Technology (MIT) Media Lab as well as Code.org’s “AI and Oceans” activity. For readers who seek to investigate additional approaches and resources for K-12 level AI and ethics interaction, see: (a) The Chinese University of Hong Kong (CUHK)’s AI for the Future Project (AI4Future) [ 18 ]; (b) IBM’s Educator’s AI Classroom Kit [ 30 ]; (c) Google’s Teachable Machine [ 25 ]; (d) UK-based nonprofit organization Apps for Good [ 4 ]; and (e) Machine Learning for Kids [ 37 ].

“AI and Ethics Curriculum” for middle school students by MIT Media Lab

The MIT Media Lab team offers an open-access curriculum on AI and ethics for middle school students and teachers. Through a series of lesson plans and hands-on activities, teachers are guided to support students’ learning of the technical terminology of AI systems as well as the ethical and societal implications of AI [ 2 ]. The curriculum includes various lessons tied to learning objectives. One of the main learning goals is to introduce students to basic components of AI through algorithms, datasets, and supervised machine-learning systems, all while underlining the problem of algorithmic bias [ 45 ]. For instance, in the activity “AI Bingo”, students are given bingo cards with various AI systems, such as online search engine, customer service bot, and weather app. Students work with their partners collaboratively on these AI systems. In their AI Bingo chart, students try to identify what prediction the selected AI system makes and what dataset it uses. In that way, they become more familiar with the notions of dataset and prediction in the context of AI systems [ 45 ].

In the second investigation, “Algorithms as Opinions”, students think about algorithms as recipes, which are created by a set of instructions that modify an input to produce an output [ 45 ]. Initially, students are asked to write an algorithm to make the “best” peanut butter and jelly sandwich. They explore what it means to be “best” and see how their opinions of best in their recipes are reflected in their algorithms. In this way, students are able to figure out that algorithms can have various motives and goals. Following this activity, students work on the “Ethical Matrix”, building on the idea of the algorithms as opinions [ 45 ]. During this investigation, students first refer back to the algorithms they developed for their “best” peanut butter and jelly sandwich. They discuss what counts as the “best” sandwich for themselves (most healthy, practical, delicious, etc.). Then, through their ethical matrix (chart), students identify different stakeholders (such as their parents, teacher, or doctor) who care about their peanut butter and jelly sandwich algorithm. In this way, the values and opinions of those stakeholders also are embedded in the algorithm. Students fill out an ethical matrix and look for where those values conflict or overlap with each other. This matrix is a great tool for students to recognize different stakeholders in a system or society and how they are able to build and utilize the values of the algorithms in an ethical matrix.
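The idea that an algorithm encodes its author’s opinion of “best” can be shown in a few lines of code. The sketch below is not part of the MIT curriculum; the sandwich options, the criteria, and the stakeholder weights are all hypothetical values chosen for illustration.

```python
# Hypothetical sandwich options rated from 1 (poor) to 10 (excellent) on three criteria.
SANDWICHES = {
    "classic": {"taste": 9, "health": 4, "speed": 8},
    "whole-grain, low-sugar": {"taste": 6, "health": 9, "speed": 7},
    "triple-decker": {"taste": 10, "health": 2, "speed": 3},
}

def best_sandwich(weights):
    """Return the option with the highest weighted score.

    The weights are the 'opinion': they state how much each criterion matters.
    """
    def score(name):
        return sum(weights[c] * SANDWICHES[name][c] for c in weights)
    return max(SANDWICHES, key=score)

# Two stakeholders from an ethical matrix, each valuing different things.
student = {"taste": 0.7, "health": 0.1, "speed": 0.2}
doctor = {"taste": 0.1, "health": 0.8, "speed": 0.1}

print(best_sandwich(student))
print(best_sandwich(doctor))
```

The ranking procedure is identical for both stakeholders; only the weights differ, and that alone changes which sandwich is declared “best”. An ethical matrix makes those hidden weights explicit.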

The final investigation which teaches about the biased nature of algorithms is “Learning and Algorithmic Bias” [ 45 ]. During the investigation, students think further about the concept of classification. Using Google’s Teachable Machine tool [ 2 ], students explore the supervised machine-learning systems. Students train a cat–dog classifier using two different datasets. While the first dataset reflects the cats as the over-represented group, the second dataset indicates the equal and diverse representation between dogs and cats [ 2 ]. Using these datasets, students compare the accuracy between the classifiers and then discuss which dataset and outcome are fairer. This activity leads students into a discussion about the occurrence of bias in facial recognition algorithms and systems [ 2 ].
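The effect students observe can be reproduced with a much simpler model than the image classifier in Teachable Machine. The sketch below swaps in a k-nearest-neighbors classifier over a single made-up numeric feature (imagine a snout-length measurement); all data values are hypothetical and chosen only to show how over-representing one class skews the results.

```python
from collections import Counter

def knn_predict(train, x, k=5):
    """Label x by majority vote among the k training points closest to it."""
    nearest = sorted(train, key=lambda item: abs(item[0] - x))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

def per_class_accuracy(train, test, k=5):
    """Fraction of test examples classified correctly, reported per label."""
    correct, total = Counter(), Counter()
    for x, label in test:
        total[label] += 1
        if knn_predict(train, x, k) == label:
            correct[label] += 1
    return {label: correct[label] / total[label] for label in total}

# Dataset 1: cats are heavily over-represented (10 cats, 2 dogs).
cat_heavy = [(2.0 + 0.2 * i, "cat") for i in range(10)] + [(7.0, "dog"), (8.0, "dog")]
# Dataset 2: equal representation (6 cats, 6 dogs).
balanced = [(2.0 + 0.4 * i, "cat") for i in range(6)] + [(6.5 + 0.5 * i, "dog") for i in range(6)]

test = [(2.5, "cat"), (3.0, "cat"), (3.5, "cat"), (7.0, "dog"), (7.5, "dog"), (8.5, "dog")]

print(per_class_accuracy(cat_heavy, test))
print(per_class_accuracy(balanced, test))
```

With only two dogs in the first training set, a five-neighbor vote can never produce a dog majority, so every dog in the test set is labeled a cat even though the cats are classified perfectly. Reporting accuracy per class, rather than one overall number, is what exposes the problem.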

In the rest of the curriculum, similar to the AI Bingo investigation, students work with their partners to determine the various forms of AI systems in the YouTube platform (such as its recommender algorithm and advertisement matching algorithm). Through the investigation of “YouTube Redesign”, students redesign YouTube’s recommender system. They first identify stakeholders and their values in the system, and then use an ethical matrix to reflect on the goals of their YouTube recommendation algorithm [ 45 ]. Finally, through the activity of “YouTube Socratic Seminar”, students read an abridged version of a Wall Street Journal article while participating in a Socratic seminar. The article was edited to shorten the text and to provide more accessible language for middle school students. They discuss which stakeholders were most influential or significant in proposing changes in the YouTube Kids app and whether or not technologies like autoplay should ever exist. During their discussion, students engage with questions such as: “Which stakeholder is making the most change or has the most power?” and “Have you ever seen an inappropriate piece of content on YouTube? What did you do?” [ 45 ].

Overall, the MIT Media Lab’s AI and Ethics curriculum is a high-quality, open-access resource with which teachers can introduce middle school students to the risks and ethical implications of AI systems. The investigations described above involve students in collaborative, critical thinking activities that force them to wrestle with issues of bias and discrimination in AI, as well as surveillance and autonomy through the predictive systems and algorithmic bias.

“AI and Data Privacy” workshop series for K-9 students by MIT Media Lab

Another quality resource from the MIT Media Lab’s Personal Robots Group is a workshop series designed to teach students (between the ages of 7 and 14) about data privacy and introduce them to designing and prototyping data privacy features. The group has compiled the content, materials, worksheets, and activities of the workshop series into an open-access online document, freely available to teachers [ 44 ].

The first workshop in the series is “Mystery YouTube Viewer: A lesson on Data Privacy”. During the workshop, students engage with the question of what privacy and data mean [ 44 ]. They observe YouTube’s home page from the perspective of a mystery user. Using clues from the videos, students make predictions about what the characters in the videos might look like or where they might live. In effect, students imitate the way YouTube’s algorithms make predictions about the characters. Engaging with these questions and observations, students think further about why privacy and boundaries are important and how each algorithm will interpret us differently depending on who creates it.

The second workshop in the series is “Designing ads with transparency: A creative workshop”. Through this workshop, students think further about the meaning, aim, and impact of advertising and the role of advertisements in our lives [ 44 ]. Students collaboratively create an advertisement using an everyday object. The objective is to make the advertisement as “transparent” as possible. To do that, students learn about the notions of malware and adware, as well as the components of YouTube advertisements (such as sponsored labels, logos, news sections, etc.). By the end of the workshop, students design their ads as posters and share them with their peers.

The final workshop in MIT’s AI and data privacy series is “Designing Privacy in Social Media Platforms”. This workshop is designed to teach students about YouTube, design, civics, and data privacy [ 44 ]. During the workshop, students create their own designs to solve one of the biggest challenges of the digital era: problems associated with online consent. The workshop allows students to learn more about privacy laws and how they affect youth in terms of media consumption. Students consider YouTube through the lens of the Children’s Online Privacy Protection Rule (COPPA). In this way, students reflect on one of the components of the legislation: how might students get parental permission (or verifiable consent)?

Such workshop resources seem promising in helping educate students and teachers about the ethical challenges of AI in education. Specifically, social media such as YouTube are widely used as a teaching and learning tool within K-12 classrooms and beyond them, in students’ everyday lives. These workshop resources may facilitate teachers’ and students’ knowledge of data privacy issues and support them in thinking further about how to protect privacy online. Moreover, educators seeking to implement such resources should consider engaging students in the larger question: who should own one’s data? Teaching students the underlying reasons for laws and facilitating debate on the extent to which they are just or not could help get at this question.

Investigation of “AI for Oceans” by Code.org

A third recommended resource for K-12 educators trying to navigate the ethical challenges of AI with their students comes from Code.org, a nonprofit organization focused on expanding students’ participation in computer science. Sponsored by Microsoft, Facebook, Amazon, Google, and other tech companies, Code.org aims to provide opportunities for K-12 students to learn about AI and machine-learning systems [ 20 ]. To support students (grades 3–12) in learning about AI, algorithms, machine learning, and bias, the organization offers an activity called “AI for Oceans”, in which students train their own machine-learning models.

The activity is provided as an open-access tutorial for teachers to help their students explore how to train, model, and classify data, as well as to understand how human bias plays a role in machine-learning systems. During the activity, students first classify objects as either “fish” or “not fish” in an attempt to remove trash from the ocean. Then, they expand their training data set by including other sea creatures that belong underwater. Throughout the activity, students are also able to watch and interact with a number of visuals and video tutorials. With the support of their teachers, they discuss machine learning, the steps and influences of training data, and the formation and risks of biased data [ 20 ].
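The two stages of the activity can be approximated with a toy model. The sketch below is an assumption-laden illustration, not Code.org's implementation: objects are described by hand-written feature sets, the labels "keep" and "remove" stand in for the choices students make, and the model simply counts how often each feature appeared under each label. All names and data are hypothetical.

```python
from collections import Counter


def train(examples):
    """Build a tiny model: for each label, count how often each feature was seen."""
    model = {}
    for features, label in examples:
        model.setdefault(label, Counter()).update(features)
    return model


def predict(model, features):
    """Pick the label whose training examples shared the most features with this object."""
    scores = {label: sum(counts[f] for f in features) for label, counts in model.items()}
    return max(scores, key=scores.get)


# Stage 1: students only label fish ("keep") and trash ("remove").
stage_one = [
    ({"fins", "scales"}, "keep"),     # fish
    ({"fins", "stripes"}, "keep"),    # fish
    ({"plastic", "round"}, "remove"), # bottle
    ({"rubber", "round"}, "remove"),  # tire
]

# Stage 2: students add other sea creatures that belong underwater.
stage_two = stage_one + [
    ({"tentacles", "round", "eyes"}, "keep"),  # octopus
    ({"tentacles", "round"}, "keep"),          # jellyfish
    ({"claws", "shell"}, "keep"),              # crab
]

octopus = {"tentacles", "round"}
bottle = {"plastic", "round"}

first_model = train(stage_one)
second_model = train(stage_two)
print("stage 1, octopus:", predict(first_model, octopus))
print("stage 2, octopus:", predict(second_model, octopus))
print("stage 2, bottle: ", predict(second_model, bottle))
```

With this invented data, the first model removes the octopus because the only round things it has seen were trash; after the training set is widened, the octopus is kept and the bottle is still removed. The model's behavior follows directly from what the people labeling the data chose to include.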

Future directions for research and teaching on AI and ethics

In this paper, we provided an overview of the possibilities and potential ethical and societal risks of AI integration in education. To help address these risks, we highlighted several instructional strategies and resources for practitioners seeking to integrate AI applications in K-12 education and/or instruct students about the ethical issues they pose. These instructional materials have the potential to help students and teachers reap the powerful benefits of AI while navigating ethical challenges especially related to privacy concerns and bias. Existing research on AI in education provides insight on supporting students’ understanding and use of AI [ 2 , 13 ]; however, research on how to develop K-12 teachers’ instructional practices regarding AI and ethics is still in its infancy.

Moreover, current resources, as demonstrated above, mainly address privacy and bias-related ethical and societal concerns of AI. Conducting more exploratory and critical research on teachers’ and students’ surveillance and autonomy concerns will be important to designing future resources. In addition, curriculum developers and workshop designers might consider centering culturally relevant and responsive pedagogies (by focusing on students’ funds of knowledge, family background, and cultural experiences) while creating instructional materials that address surveillance, privacy, autonomy, and bias. In such student-centered learning environments, students voice their own cultural and contextual experiences while trying to critique and disrupt existing power structures and cultivate their social awareness [ 24 , 36 ].

Finally, as scholars in teacher education and educational technology, we believe that educating future generations of diverse citizens to participate in the ethical use and development of AI will require more professional development for K-12 teachers (both pre-service and in-service). For instance, through sustained professional learning sessions, teachers could engage with suggested curriculum resources and teaching strategies as well as build a community of practice where they can share and critically reflect on their experiences with other teachers. Further research on such reflective teaching practices and students’ sense-making processes in relation to AI and ethics lessons will be essential to developing curriculum materials and pedagogies relevant to a broad base of educators and students.

This work was supported by the Graduate School at Michigan State University, College of Education Summer Research Fellowship.

Data availability

Code availability

Declarations

The authors declare that they have no conflict of interest.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Selin Akgun, Email: akgunsel@msu.edu.

Christine Greenhow, Email: greenhow@msu.edu.

- DOI: 10.1109/ICCSE.2018.8468773

- Corpus ID: 52303965

Artificial Intelligence Education Ethical Problems and Solutions

- Sijing Li , Wang Lan

- Published in International Conference on… 1 August 2018

- Education, Computer Science, Philosophy

21 Citations

- The future of artificial intelligence in special education technology
- The role of artificial intelligence in the future of education
- Digital transformation of education and artificial intelligence
- Cognitive technologies and artificial intelligence in social perception
- The role of artificial intelligence in education: current trends and future prospects
- Bridging the gap between ethical AI implementations
- AI-based assessment for teaching and learning enhancement
- Exploring EFL teachers’ insights regarding artificial intelligence driven tools in student-centered writing instructions
- Educational practices resulting from digital intelligence
- Artificial intelligence: how are Gen Z’s choosing their careers

The challenges and opportunities of Artificial Intelligence in education

Artificial Intelligence (AI) is producing new teaching and learning solutions that are currently being tested globally. These solutions require advanced infrastructures and an ecosystem of thriving innovators. How does that affect countries around the world, and especially developing nations? Should AI be a priority to tackle in order to reduce the digital and social divide?

These are some of the questions explored in a Working Paper entitled ‘Artificial Intelligence in Education: Challenges and Opportunities for Sustainable Development’ presented by UNESCO and ProFuturo at Mobile Learning Week 2019. It features case studies on how AI technology is helping education systems use data to improve educational equity and quality.

Concrete examples from countries such as China, Brazil and South Africa are examined on AI’s contribution to learning outcomes, access to education and teacher support. Case studies from countries including the United Arab Emirates, Bhutan and Chile are presented on how AI is helping with data analytics in education management.

The Paper also explores the curriculum and standards dimension of AI, with examples from the European Union, Singapore and the Republic of Korea on how learners and teachers are preparing for an AI-saturated world.

Beyond the opportunities, the Paper also addresses the challenges and policy implications of introducing AI in education and preparing students for an AI-powered future. The challenges presented revolve around:

- Developing a comprehensive view of public policy on AI for sustainable development: The complexity of the technological conditions needed to advance in this field requires the alignment of multiple factors and institutions. Public policies have to work in partnership at international and national levels to create an ecosystem of AI that serves sustainable development.

- Ensuring inclusion and equity for AI in education: The least developed countries are at risk of suffering new technological, economic and social divides with the development of AI. Major obstacles, such as the lack of basic technological infrastructure, must be addressed to establish the basic conditions for implementing new strategies that take advantage of AI to improve learning.

- Preparing teachers for an AI-powered education: Teachers must learn new digital skills to use AI in a pedagogical and meaningful way, and AI developers must learn how teachers work and create solutions that are sustainable in real-life environments.

- Developing quality and inclusive data systems: If the world is headed towards the datafication of education, the quality of data should be the chief concern. It’s essential to develop state capabilities to improve data collection and systematization. AI developments should be an opportunity to increase the importance of data in educational system management.

- Enhancing research on AI in education: While it can be reasonably expected that research on AI in education will increase in the coming years, it is nevertheless worth recalling the difficulties that the education sector has had in taking stock of educational research in a significant way both for practice and policy-making.

- Dealing with ethics and transparency in data collection, use and dissemination: AI raises many ethical concerns regarding access to education systems, recommendations to individual students, personal data concentration, liability, impact on work, data privacy and ownership of the data feeding algorithms. AI regulation will require public discussion on ethics, accountability, transparency and security.

The key discussions taking place at Mobile Learning Week 2019 address these challenges, offering the international educational community, governments and other stakeholders a unique opportunity to explore together the opportunities and threats of AI in all areas of education.

The Ethics of Artificial Intelligence in Education

The Ethics of Artificial Intelligence in Education identifies and confronts key ethical issues generated over years of AI research, development, and deployment in learning contexts. Adaptive, automated, and data-driven education systems are increasingly being implemented in universities, schools, and corporate training worldwide, but the ethical consequences of engaging with these technologies remain unexplored. Featuring expert perspectives from inside and outside the AIED scholarly community, this book provides AI researchers, learning scientists, educational technologists, and others with questions, frameworks, guidelines, policies, and regulations to ensure the positive impact of artificial intelligence in learning.

TABLE OF CONTENTS

- Introduction (19 pages)
- Part I: Introduction to Part I (125 pages)
- Chapter 1: Learning to Learn Differently (22 pages)
- Chapter 2: Educational Research and AIED (27 pages)
- Chapter 3: AI in Education (17 pages)
- Chapter 4: Student-Centred Requirements for the Ethics of AI in Education (22 pages)
- Chapter 5: Pitfalls and Pathways for Trustworthy Artificial Intelligence in Education (33 pages)
- Part II: Introduction to Part II (135 pages)
- Chapter 6: Equity and Artificial Intelligence in Education (23 pages)
- Chapter 7: Algorithmic Fairness in Education (29 pages)
- Chapter 8: Beyond “Fairness” (37 pages)
- Chapter 9: The Overlapping Ethical Imperatives of Human Teachers and Their Artificially Intelligent Assistants (15 pages)
- Chapter 10: Integrating AI Ethics Across the Computing Curriculum (16 pages)
- Conclusions (11 pages)

Artificial Intelligence in Education: Ethical Issues and its Regulations

School of Education, Shaanxi Normal University, China

School of Education, Shaanxi Normal University, China and College of Humanities and Foreign Languages, Xi'an University of Science and Technology, China

ICBDE '22: Proceedings of the 5th International Conference on Big Data and Education

After the birth of human beings, biological evolution essentially ceased. Since then, the evolution of human civilization has begun, with curiosity driving science and the desire for control driving technology. The development of science and technology has unfolded in two directions, outward and inward: outward from the solar system to the galaxy and the universe; inward toward humans themselves, from the movement of life to the movement of consciousness, giving rise to artificial intelligence (AI). For the first time, the "human-like" nature of AI has shaken the social activity in which the world is the stage and humans are the main actors, leading to the ethical issues of AI, including the ethical issues of AI in education (AIEd). The ethical issue of AIEd is the problem caused by the transformation of the actors when humanoids are implanted into the human educational community. The main issues include the ethical problems of AI itself, the adaptation of new humans and new norms of human behavior, and the stage for the actors' activities, namely the field of AIEd. The regulation of ethical issues in AIEd should aim at the pursuit of human well-being and focus on practice, mainly through customs transfer, norms construction and legislative constraints.

Social and professional topics

Professional topics

Computing profession

Copyright © 2022 ACM

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from [email protected]

In-Cooperation

Association for Computing Machinery

New York, NY, United States

Publication History

- Published: 26 July 2022

Author tags.

- AI in Education

- Artificial Intelligence

- Educational Ethics

- Ethical Issues

- Regulations

- research-article

- Refereed limited

https://dl.acm.org/doi/10.1145/3524383.3524406

Exploring the ethics of artificial intelligence in K-12 education

Artificial intelligence is everywhere: from reading emails to referencing a search engine. It is in the classroom too, such as with personalized learning or assessment systems. In recent months, AI in the K-12 classroom became even more prevalent as learning shifted to and, in some cases, remained online due to COVID-19. But what about its societal and ethical implications?

Two Michigan State University scholars explored the use of AI in K-12 classrooms, including possible benefits and consequences. Here’s what their research found.

“Artificial intelligence can help students get quicker and helpful feedback and can decrease workload for teachers, among other affordances,” said Selin Akgun, a doctoral student in the College of Education’s Curriculum, Instruction and Teacher Education (CITE) program and lead author of the paper, published in AI and Ethics. For example, teachers may use social media to encourage conversations among students or use platforms to support instruction in hybrid or mixed-ability classrooms. “There are a lot of affordances, but we also wanted to discuss concerns.”

Akgun and co-author Associate Professor Christine Greenhow identified four key areas teachers should consider when using AI in their classroom.

- Privacy. Many AI systems ask users to consent to the program using and accessing personal data in ways they may or may not understand. Consider the “Terms & Conditions” often shared when downloading new software. Users may just click “Accept” without fully reading and digesting how their data may be used. Or, if they do read and understand it, there are other layered ways the program could be using their data, like the system knowing their location. Moreover, if platforms are required as part of curricula, some argue parents and children are being “forced” to share their data.

- Surveillance. AI systems may also track how a user interacts with them in order to provide a personalized experience. In education, this may include systems identifying strengths, weaknesses, and patterns in a student’s performance. While teachers do this to some degree in their teaching, Akgun and Greenhow say, “monitoring and tracking students’ online conversations and actions also may limit [student] participation … and make them feel unsafe to take ownership for their ideas.”

- Autonomy. Because AI systems rely on algorithms (for example, to predict how a student may perform on a test), students and teachers may find it difficult to feel independent in their work. It also, the scholars say, “raise[s] questions about fairness and self-freedom.”

- Bias and discrimination. These factors can appear in a variety of ways in AI systems, such as gendered language translation (“She is a nurse,” but “he is a doctor”). Whenever algorithms are created, the scholars say, the makers also build “a set of data that represent society’s historical and systemic biases, which ultimately transform into algorithmic biases. Even though the bias is embedded into the algorithmic model with no explicit intention, we can see various gender and racial biases in different AI-based platforms.”

“Artificial intelligence can manipulate us in ways we don’t always think about,” reiterated Greenhow, co-author and a faculty member in Educational Psychology and Educational Technology at MSU.

The publication came as a result of the College of Education’s Mind, Media and Learning graduate course, which encourages students to develop a paper based on an area of research interest.

“We want to ultimately cultivate different pedagogies, materials and better support for teachers and students,” said Akgun, who is also a research assistant in MSU’s CREATE for STEM Institute on the ML-PBL project.

As one way to assist in that process, the paper outlines three free resources for teachers to use in a classroom. Akgun and Greenhow chose these resources, a few of the many available, because they offer several options, including collaborative and hands-on activities for students. The paper also offers considerations and suggestions for where education can go from here.

“The questions this article raised became increasingly important during the COVID-19 pandemic,” Greenhow said. “More and more online tools were being integrated into the classroom—sometimes on the fly and with little time to think. This paper raised important ethical considerations to think about as we move forward with those applications.”

More from our researchers

Akgun was featured on The Sci-Files podcast in February 2021, talking about educational research during a pandemic.

Greenhow recently answered questions about online and classroom learning during our second school year dealing with the pandemic. Read her expertise, and check out her website for even more.

Practical Ethical Issues for Artificial Intelligence in Education

2022, Communications in Computer and Information Science

Due to the increasing use of Artificial Intelligence (AI) in Education, as well as in other areas, different ethical questions have been raised in recent years. Despite this, only a few practical proposals related to ethics in AI for Education can be found in scientific databases. For this reason, aiming to help fill this gap, this work proposes a solution in ethics by design for teaching and learning processes using a top-down approach for Artificial Moral Agents (AMA), following the assumptions defended by the Values Alignment (VA) in the AI area. Therefore, using the classic Beliefs, Desires, and Intentions (BDI) model, we propose an architecture that implements a hybrid solution applying both the utilitarian and the deontological ethical frameworks. Thus, while the deontological dimension of the agent will guide its behavior by means of ethical principles, its utilitarian dimension will help the AMA to solve ethical dilemmas. With this, it is expected to contribute to the development of a safer and more reliable AI for the Education area.
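The division of labor between the two ethical frameworks can be illustrated with a small sketch. The abstract does not give the architecture's details, so everything here is an assumption made for illustration: the `Action` type, the principle names, the utility scale, and the rule that the utilitarian step also decides when every option breaks some principle. The BDI deliberation cycle is reduced to a single selection step.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    violates: frozenset  # principles this action would break (hypothetical labels)
    utility: float       # estimated benefit to the learner (hypothetical scale)


def choose_action(options, principles):
    """Select one action using a two-step, hybrid rule.

    Deontological step: discard actions that break any listed principle.
    Utilitarian step: among what remains, pick the highest utility. If every
    option breaks a principle (a dilemma), fall back to utility over all options.
    """
    permitted = [a for a in options if not (a.violates & principles)]
    pool = permitted if permitted else options
    return max(pool, key=lambda a: a.utility)


principles = frozenset({"privacy", "beneficence"})

# Ordinary case: a high-utility action is ruled out because it breaks a principle.
routine = [
    Action("share_grades_with_class", frozenset({"privacy"}), 0.9),
    Action("give_private_feedback", frozenset(), 0.6),
    Action("do_nothing", frozenset(), 0.1),
]

# Dilemma: every available action breaks some principle.
dilemma = [
    Action("report_to_teacher", frozenset({"privacy"}), 0.7),
    Action("withhold_help", frozenset({"beneficence"}), 0.2),
]

routine_choice = choose_action(routine, principles)
dilemma_choice = choose_action(dilemma, principles)
print(routine_choice.name, dilemma_choice.name)
```

In the routine case the principles act as a filter before utility is considered, so the agent gives private feedback rather than taking the action with the highest raw utility. Only in the dilemma does utility alone decide.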

Related Papers

International Journal of Development Research

PAULO ROBERTO CORDOVA

As the area of artificial intelligence (AI) evolves and reaches new spaces, showing itself capable of promoting real changes in the way people interact, solve problems and make decisions, it becomes more urgent to make it predictable, responsible, and reliable. Thus, solutions for the values alignment (VA) in AI have been proposed in recent years. The present study proposes a model of artificial moral pedagogical agents (AMPA), adopting a top-down approach and the classic BDI model. In this article, we describe why the top-down approach is the best approach to educational grounds. Next, we explain in more detail the internal structure of the proposed model. Finally, we present some discussions on the topic and a possible situation in which such an agent could be applicable.

A Proposal for Artificial Moral Pedagogical Agents

While artificial intelligence technologies continue to proliferate in all areas of contemporary life, researchers are looking for ways to make them safe for users. In the teaching-learning context, this is a trickier problem because it must be clear which principles or ethical frameworks are guiding processes supported by artificial intelligence. After all, people's education is at stake. This inquiry presents an approach to value alignment in educational contexts using artificial pedagogical moral agents (AMPA) adopting the classic BDI model. In addition, we propose a top-down approach, explaining why the bottom-up or the hybrid one may not be advisable in educational settings.

International Journal of Artificial Intelligence in Education

Robert Aiken

Boris Grozdanoff

In 2015 Savulescu and Maslen argued, influentially, that a moral artificial intelligence (MAI) could be developed with the main function of morally advising human agents. Their proposal is one of the first practically oriented solutions suggested in the broader context of so-called ethical AI (EAI). EAI, however, is most often framed in terms of an AI being ethical and much less often, if at all, grasped as an engine for ethical comprehension, advice and, let alone, action execution based on such advice. The status quo both in the academic world and in the social layers of government, including state and industry management, is exclusively focused on defining a set of norms, widely accepted by policy makers and professional academics, as ethical. This set, as we may well see in the near future, given the non-trivial differences that regulate the interests and goals of different states, is to be employed later in programming the actions of AI-based and AI-run electronic systems at large. The foreseen and unforeseen consequences for the life and, according to some, even the survival of humanity could hardly be overestimated; they are going to be anything but trivial for the future of human society. Thus, it is of timely importance to speed up the formulation of both theoretical systems of ethical AI and their practical implementation. Savulescu and Maslen's suggestion of MAI follows this trend in a novel and not particularly traditional way. While most professional ethicists would perhaps agree that an AI system could in theory have something to contribute to the millennia-old philosophical discipline of ethics, few of them, if any, would accept that it would do better than humans in this task. I, having tremendous intellectual respect for the achievements of the history of ethics, do disagree, however, and perhaps along the same lines as the proposed MAI. Where I differ, however, is in the modesty of the MAI proposal, quite apt for the normal tempo of evolution of academic ideas. Such modesty, however, follows the natural speed of evolution of academic ideas and not at all the pace of modern technological development; thus, it is inadequate as a timely response to the pressing need of yesterday's AI, which is fully disinterested in the fact of the presence of an ethical system for AI, or its lack thereof. In what follows I will offer first, a critical overview of the ethical AI theme and second, a detailed proposal for an ethical AI agent.

Proceedings of the AAAI Conference on Artificial Intelligence

Judy Goldsmith

We argue that it is crucial to the future of AI that our students be trained in multiple complementary modes of ethical reasoning, so that they may make ethical design and implementation choices, ethical career decisions, and that their software will be programmed to take into account the complexities of acting ethically in the world.

Mrinalini Luthra

The increasing pervasiveness, autonomy and complexity of artificially intelligent technologies in human society have challenged the traditional conception of moral responsibility. To this end, it has been proposed that the existing notion of moral responsibility be expanded to account for the morality of technologies. Machine ethics is the field dedicated to studying the computational entity as a moral entity; its goal is to develop technologies capable of autonomous moral reasoning, namely artificial moral agents. This thesis begins by surveying the basic assumptions and definitions underlying this conception of artificial moral agency. It then investigates why (and how) society would benefit from the development of such agents. Finally, it explores the main approaches to the development of artificial moral agents. In effect, this research serves as a critique of the emerging field of machine ethics.

Hanyang Law Review

A Suggestion on the Ethics in Artificial Intelligence

Yong Eui Kim

Now and in the future, AI is inevitable and necessary for our lives in almost every respect. Our use of AI, and AI itself (as an independent actor in the future), generates many consequences. Some are good and valuable, as intended and desired by us human beings, but some are bad, harmful, or dangerous, whether intended or not. Ever since AI came into use, there have been a variety of discussions on the ethics that may work as guidelines for regulating its development and use. Some of these ethics have not yet become enforceable norms, while others already exist as part of regulation enforceable under the power of governments. The designers or developers of such ethics are diverse, ranging from individuals to international organizations. Almost none of the AI Ethics sufficiently satisfy the requirements, needs, and hopes of society's members, not only at the local level but also at the national or international level. They fall short in ensuring that all stakeholders participate in developing the ethics and in achieving such key objectives as accountability, explainability, traceability, absence of bias, and privacy protection in the development, use, and improvement of AI. Based upon a review and analysis of the currently available AI Ethics, this article tries to find and suggest a method to design, develop, and continuously improve the AI Ethics through a National AI Ethics Platform. On this platform, all relevant stakeholders participate and exchange ideas and opinions, with AI itself serving as a helping device. With its great capacity to deal with big data, AI supports the processing and operation of the ethics through simulations that use the input data provided by the participants and the situations surrounding them, not in a static mode but in a dynamic, continuing mode.

International Journal for Educational Integrity

Irene Glendinning

2021 IEEE Conference on Norbert Wiener in the 21st Century (21CW)

Humans have invented intelligent machinery to enhance their rational decision-making procedure, which is why it has been named 'augmented intelligence'. The usage of artificial intelligence (AI) technology is increasing enormously with every passing year, and it is becoming a part of our daily life. We are using this technology not only as a tool to enhance our rationality but are also elevating it to the role of an autonomous ethical agent for our future society. Norbert Wiener envisaged 'Cybernetics' with a view of a brain-machine interface to augment human beings' biological rationality. Being an autonomous ethical agent presupposes an 'agency' in the moral decision-making procedure. According to contemporary theories of agency, AI robots might be entitled to some minimal rational agency. However, that minimal agency might not be adequate for a fully autonomous ethical agent's performance in the future. If we plan to implement them as ethical agents for the future society, it will be difficult for us to judge their actual standing as moral agents. It is well known that any kind of moral agency presupposes consciousness and mental representations, which have not yet been reproduced synthetically. We can only anticipate that this milestone will be achieved by AI scientists shortly, which will further help them triumph over 'the problem of ethical agency in AI'. Philosophers are currently probing the pre-existing ethical theories to build a guidance framework for AI robots and construct a tangible overview of artificial moral agency. However, no unanimous solution is available yet. This will lead to another conflict, between biological moral agency and autonomous ethical agency, which will leave us in a baffled state. Creating rational and ethical AI machines will be a fundamental future research problem for the AI field.
This paper aims to investigate 'the problem of moral agency in AI' from a philosophical standpoint and to survey the relevant philosophical discussions in order to find a resolution.

Alice Pavaloiu , Utku Köse

Artificial Intelligence (AI) is an effective science that employs sufficiently strong approaches, methods, and techniques to solve seemingly unsolvable real-world problems. Because of its unstoppable rise, there are also discussions about its ethics and safety. Shaping an AI-friendly environment for people and a people-friendly environment for AI can be a possible way of finding a shared context of values for both humans and robots. In this context, the objective of this paper is to address the ethical issues of AI and explore the moral dilemmas that arise from ethical algorithms, whether from pre-set or acquired values. In addition, the paper focuses on the subject of AI safety. In general, the paper briefly analyzes the concerns about, and potential solutions to, the ethical issues presented, and aims to increase readers' awareness of AI safety as a related research interest.


RELATED PAPERS

2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC)

Fabrice Muhlenbach

Redshine , Swedon

Saket Bihari

Frontiers in Psychology

Ana Luize Correa Bertoncini

Advances in Robotics & Mechanical Engineering

Thibault de Swarte

Artificial Intelligence, A Protocol for Setting Moral and Ethical Operational Standars

Daniel Raphael, PhD

Challenges of Aligning AI with Human Values

Margit Sutrop

AI Ethics in Higher Education: Insights from Africa and Beyond

ABRAHAM SAM

AI and Ethics

Brian D. Earp , Hossein Dabbagh

Christine Greenhow

Mark R Waser

Massimiliano L Cappuccio

Giovanni Landi

Proceedings of the 8th WSEAS international …

Liliana Rogozea

Alfredo Peña Castaño

Catherine Adams

Artificial intelligence in education: Addressing ethical challenges in K-12 settings

Affiliation.

- 1 Michigan State University, East Lansing, MI USA.

- PMID: 34790956

- PMCID: PMC8455229

- DOI: 10.1007/s43681-021-00096-7

Artificial intelligence (AI) is a field of study that combines the applications of machine learning, algorithm productions, and natural language processing. Applications of AI transform the tools of education. AI has a variety of educational applications, such as personalized learning platforms to promote students' learning, automated assessment systems to aid teachers, and facial recognition systems to generate insights about learners' behaviors. Despite the potential benefits of AI to support students' learning experiences and teachers' practices, the ethical and societal drawbacks of these systems are rarely fully considered in K-12 educational contexts. The ethical challenges of AI in education must be identified and introduced to teachers and students. To address these issues, this paper (1) briefly defines AI through the concepts of machine learning and algorithms; (2) introduces applications of AI in educational settings and benefits of AI systems to support students' learning processes; (3) describes ethical challenges and dilemmas of using AI in education; and (4) addresses the teaching and understanding of AI by providing recommended instructional resources from two providers-i.e., the Massachusetts Institute of Technology's (MIT) Media Lab and Code.org. The article aims to help practitioners reap the benefits and navigate ethical challenges of integrating AI in K-12 classrooms, while also introducing instructional resources that teachers can use to advance K-12 students' understanding of AI and ethics.

Keywords: Artificial intelligence; Ethics; K-12 education; Teacher education.

© The Author(s), under exclusive licence to Springer Nature Switzerland AG 2021.

Conflict of interest statement

The authors declare that they have no conflict of interest.

Potential ethical and societal risks of AI applications in education

Student work from the activity of “Youtube Redesign” (MIT Media Lab, AI and…

Similar articles

- Teachers' AI digital competencies and twenty-first century skills in the post-pandemic world. Ng DTK, Leung JKL, Su J, Ng RCW, Chu SKW. Ng DTK, et al. Educ Technol Res Dev. 2023;71(1):137-161. doi: 10.1007/s11423-023-10203-6. Epub 2023 Feb 21. Educ Technol Res Dev. 2023. PMID: 36844361 Free PMC article.

- [Subverting the Future of Teaching: Artificial Intelligence Innovation in Nursing Education]. Wu HS. Wu HS. Hu Li Za Zhi. 2024 Apr;71(2):20-25. doi: 10.6224/JN.202404_71(2).04. Hu Li Za Zhi. 2024. PMID: 38532671 Chinese.

- The emergent role of artificial intelligence, natural learning processing, and large language models in higher education and research. Alqahtani T, Badreldin HA, Alrashed M, Alshaya AI, Alghamdi SS, Bin Saleh K, Alowais SA, Alshaya OA, Rahman I, Al Yami MS, Albekairy AM. Alqahtani T, et al. Res Social Adm Pharm. 2023 Aug;19(8):1236-1242. doi: 10.1016/j.sapharm.2023.05.016. Epub 2023 Jun 4. Res Social Adm Pharm. 2023. PMID: 37321925 Review.

- Integrating Ethics and Career Futures with Technical Learning to Promote AI Literacy for Middle School Students: An Exploratory Study. Zhang H, Lee I, Ali S, DiPaola D, Cheng Y, Breazeal C. Zhang H, et al. Int J Artif Intell Educ. 2022 May 9:1-35. doi: 10.1007/s40593-022-00293-3. Online ahead of print. Int J Artif Intell Educ. 2022. PMID: 35573722 Free PMC article.

- Applications and Challenges of Implementing Artificial Intelligence in Medical Education: Integrative Review. Chan KS, Zary N. Chan KS, et al. JMIR Med Educ. 2019 Jun 15;5(1):e13930. doi: 10.2196/13930. JMIR Med Educ. 2019. PMID: 31199295 Free PMC article. Review.

- Evaluating generative AI integration in Saudi Arabian education: a mixed-methods study. Alammari A. Alammari A. PeerJ Comput Sci. 2024 Feb 16;10:e1879. doi: 10.7717/peerj-cs.1879. eCollection 2024. PeerJ Comput Sci. 2024. PMID: 38435558 Free PMC article.

- Proposing a Principle-Based Approach for Teaching AI Ethics in Medical Education. Weidener L, Fischer M. Weidener L, et al. JMIR Med Educ. 2024 Feb 9;10:e55368. doi: 10.2196/55368. JMIR Med Educ. 2024. PMID: 38285931 Free PMC article.

- Educating the next generation of radiologists: a comparative report of ChatGPT and e-learning resources. Meşe İ, Altıntaş Taşlıçay C, Kuzan BN, Kuzan TY, Sivrioğlu AK. Meşe İ, et al. Diagn Interv Radiol. 2024 May 13;30(3):163-174. doi: 10.4274/dir.2023.232496. Epub 2023 Dec 25. Diagn Interv Radiol. 2024. PMID: 38145370 Free PMC article. Review.