How to Test for Normality in R (4 Methods)

Many statistical tests make the assumption that datasets are normally distributed.

There are four common ways to check this assumption in R:

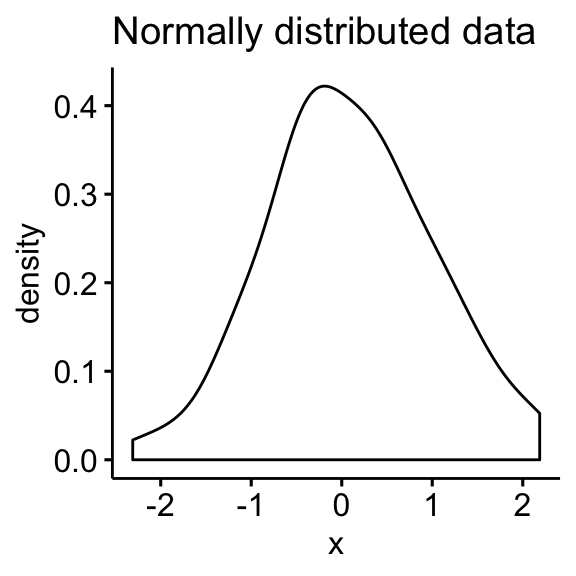

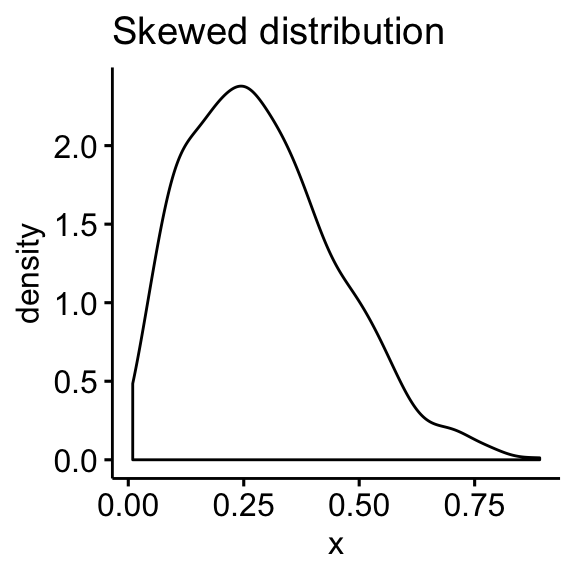

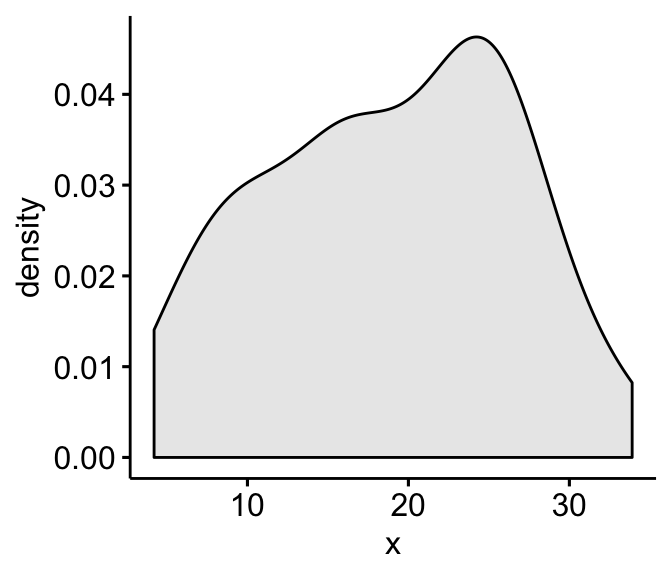

1. (Visual Method) Create a histogram.

- If the histogram is roughly “bell-shaped”, then the data is assumed to be normally distributed.

2. (Visual Method) Create a Q-Q plot.

- If the points in the plot roughly fall along a straight diagonal line, then the data is assumed to be normally distributed.

3. (Formal Statistical Test) Perform a Shapiro-Wilk Test.

- If the p-value of the test is greater than α = .05, then the data is assumed to be normally distributed.

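4. (Formal Statistical Test) Perform a Kolmogorov-Smirnov Test.

- If the p-value of the test is greater than α = .05, then the data is assumed to be normally distributed.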

The following examples show how to use each of these methods in practice.

Method 1: Create a Histogram

The following code shows how to create a histogram for a normally distributed and non-normally distributed dataset in R:
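A minimal sketch of this comparison, assuming simulated data (the variable names, the seed, and the use of an exponential distribution for the non-normal case are illustrative assumptions, not taken from the text):

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# Simulated data: 200 draws from a normal and from an exponential distribution
normal_data <- rnorm(200)
non_normal_data <- rexp(200, rate = 3)

# Put the two histograms side by side
par(mfrow = c(1, 2))
hist(normal_data, col = "steelblue", main = "Normal")
hist(non_normal_data, col = "steelblue", main = "Non-normal")
```

With real data, replace the simulated vectors with the numeric column you want to inspect.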

The histogram on the left exhibits a dataset that is normally distributed (roughly a “bell-shape”) and the one on the right exhibits a dataset that is not normally distributed.

Method 2: Create a Q-Q plot

The following code shows how to create a Q-Q plot for a normally distributed and non-normally distributed dataset in R:
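A minimal sketch using base R's qqnorm() and qqline(), again assuming simulated data (the variable names and distributions are illustrative assumptions):

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# Simulated data: one normal sample and one exponential (skewed) sample
normal_data <- rnorm(200)
non_normal_data <- rexp(200, rate = 3)

# Put the two Q-Q plots side by side
par(mfrow = c(1, 2))

# Sample quantiles against theoretical normal quantiles, plus a reference line
qqnorm(normal_data, main = "Normal")
qqline(normal_data)

qqnorm(non_normal_data, main = "Non-normal")
qqline(non_normal_data)
```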

The Q-Q plot on the left exhibits a dataset that is normally distributed (the points fall along a straight diagonal line) and the Q-Q plot on the right exhibits a dataset that is not normally distributed.

Method 3: Perform a Shapiro-Wilk Test

The following code shows how to perform a Shapiro-Wilk test on a normally distributed and non-normally distributed dataset in R:
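A minimal sketch with shapiro.test(), assuming the same kind of simulated data (names, seed, and distributions are illustrative assumptions, so the exact p-values are illustrative too):

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# Simulated data: one normal sample and one exponential (skewed) sample
normal_data <- rnorm(200)
non_normal_data <- rexp(200, rate = 3)

# Shapiro-Wilk test on each sample; the null hypothesis is normality
normal_result <- shapiro.test(normal_data)
non_normal_result <- shapiro.test(non_normal_data)

# Compare each p-value with the chosen significance level
normal_result$p.value
non_normal_result$p.value
```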

The p-value of the first test is not less than .05, which indicates that the data is normally distributed.

The p-value of the second test is less than .05, which indicates that the data is not normally distributed.

Method 4: Perform a Kolmogorov-Smirnov Test

The following code shows how to perform a Kolmogorov-Smirnov test on a normally distributed and non-normally distributed dataset in R:
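A minimal sketch with ks.test(), assuming simulated data (names, seed, and distributions are illustrative assumptions). Passing "pnorm" with no further arguments compares the sample against a standard normal distribution (mean 0, standard deviation 1); a different mean or sd can be supplied as extra arguments.

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# Simulated data: one standard normal sample and one exponential sample
normal_data <- rnorm(200)
non_normal_data <- rexp(200, rate = 3)

# Kolmogorov-Smirnov test of each sample against the standard normal CDF
normal_result <- ks.test(normal_data, "pnorm")
non_normal_result <- ks.test(non_normal_data, "pnorm")

# A p-value below the significance level leads us to reject normality
normal_result$p.value
non_normal_result$p.value
```

As with the Shapiro-Wilk test, a p-value that is not below .05 means we fail to reject the hypothesis of normality, and a p-value below .05 means we reject it.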

How to Handle Non-Normal Data

If a given dataset is not normally distributed, we can often perform one of the following transformations to make it more normally distributed:

1. Log Transformation: Transform the values from x to log(x).

2. Square Root Transformation: Transform the values from x to √x.

3. Cube Root Transformation: Transform the values from x to x^(1/3).

By performing these transformations, the dataset typically becomes more normally distributed.
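The three transformations can be sketched as follows, assuming a strictly positive, right-skewed sample (the simulated skewed_data vector is an illustrative assumption; log() and sqrt() are not defined for negative values):

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# Simulated right-skewed, strictly positive data
skewed_data <- rexp(200, rate = 3)

# Apply each transformation
log_data <- log(skewed_data)
sqrt_data <- sqrt(skewed_data)
cube_root_data <- skewed_data^(1/3)

# Compare the shapes before and after transforming
par(mfrow = c(2, 2))
hist(skewed_data, main = "Original")
hist(log_data, main = "Log")
hist(sqrt_data, main = "Square root")
hist(cube_root_data, main = "Cube root")
```

Which transformation works best depends on the data, so it is worth re-checking normality after transforming.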

Read this tutorial to see how to perform these transformations in R.

Additional Resources

- How to Create Histograms in R

- How to Create & Interpret a Q-Q Plot in R

- How to Perform a Shapiro-Wilk Test in R

- How to Perform a Kolmogorov-Smirnov Test in R

Hey there. My name is Zach Bobbitt. I have a Masters of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.

Hypothesis Tests in R

This tutorial covers basic hypothesis testing in R.

- Normality tests

- Shapiro-Wilk normality test

- Kolmogorov-Smirnov test

- Comparing central tendencies: Tests with continuous / discrete data

- One-sample t-test : Normally-distributed sample vs. expected mean

- Two-sample t-test : Two normally-distributed samples

- Wilcoxon rank sum : Two non-normally-distributed samples

- Weighted two-sample t-test : Two continuous samples with weights

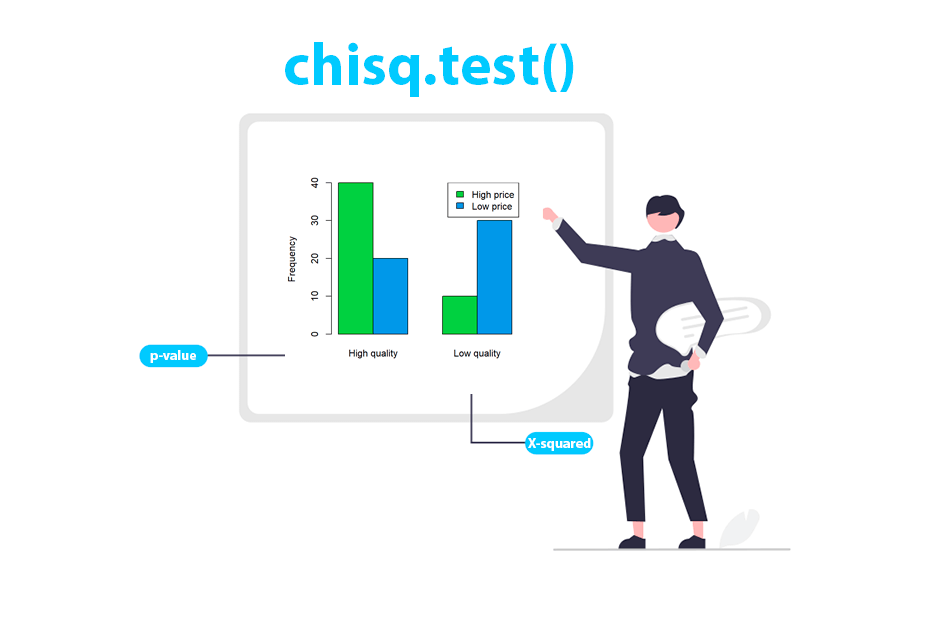

- Comparing proportions: Tests with categorical data

- Chi-squared goodness of fit test : Sampled frequencies of categorical values vs. expected frequencies

- Chi-squared independence test : Two sampled frequencies of categorical values

- Weighted chi-squared independence test : Two weighted sampled frequencies of categorical values

- Comparing multiple groups: Tests with categorical and continuous / discrete data

- Analysis of Variance (ANOVA) : Normally-distributed samples in groups defined by categorical variable(s)

- Kruskal-Wallis One-Way Analysis of Variance : Nonparametric test of the significance of differences between two or more groups

Hypothesis Testing

Science is "knowledge or a system of knowledge covering general truths or the operation of general laws especially as obtained and tested through scientific method" (Merriam-Webster 2022) .

The idealized world of the scientific method is question-driven , with the collection and analysis of data determined by the formulation of research questions and the testing of hypotheses. Hypotheses are tentative assumptions about what the answers to your research questions may be.

- Formulate questions: How can I understand some phenomenon?

- Literature review: What does existing research say about my questions?

- Formulate hypotheses: What do I think the answers to my questions will be?

- Collect data: What data can I gather to test my hypothesis?

- Test hypotheses: Does the data support my hypothesis?

- Communicate results: Who else needs to know about this?

- Formulate questions: Frame missing knowledge about a phenomenon as research question(s).

- Literature review: A literature review is an investigation of what existing research says about the phenomenon you are studying. A thorough literature review is essential to identify gaps in existing knowledge you can fill, and to avoid unnecessarily duplicating existing research.

- Formulate hypotheses: Develop possible answers to your research questions.

- Collect data: Acquire data that supports or refutes the hypothesis.

- Test hypotheses: Run tools to determine if the data corroborates the hypothesis.

- Communicate results: Share your findings with the broader community that might find them useful.

While the process of knowledge production is, in practice, often more iterative than this waterfall model, the testing of hypotheses is usually a fundamental element of scientific endeavors involving quantitative data.

The Problem of Induction

The scientific method looks to the past or present to build a model that can be used to infer what will happen in the future. General knowledge asserts that given a particular set of conditions, a particular outcome will or is likely to occur.

The problem of induction is that we cannot be 100% certain that what we are assuming is a general principle is not, in fact, specific to the particular set of conditions when we made our empirical observations. We cannot prove that such principles will hold true under future conditions or in different locations that we have not yet experienced (Vickers 2014).

The problem of induction is often associated with the 18th-century British philosopher David Hume . This problem is especially vexing in the study of human beings, where behaviors are a function of complex social interactions that vary over both space and time.

Falsification

One way of addressing the problem of induction was proposed by the 20th-century Viennese philosopher Karl Popper .

Rather than try to prove a hypothesis is true, which we cannot do because we cannot know all possible situations that will arise in the future, we should instead concentrate on falsification , where we try to find situations where a hypothesis is false. While you cannot prove your hypothesis will always be true, you only need to find one situation where the hypothesis is false to demonstrate that the hypothesis can be false (Popper 1962) .

If a hypothesis is not demonstrated to be false by a particular test, we have corroborated that hypothesis. While corroboration does not "prove" anything with 100% certainty, by subjecting a hypothesis to multiple tests that fail to demonstrate that it is false, we can have increasing confidence that our hypothesis reflects reality.

Null and Alternative Hypotheses

In scientific inquiry, we are often concerned with whether a factor we are considering (such as taking a specific drug) results in a specific effect (such as reduced recovery time).

To evaluate whether a factor results in an effect, we will perform an experiment and / or gather data. For example, in a clinical drug trial, half of the test subjects will be given the drug, and half will be given a placebo (something that appears to be the drug but is actually a neutral substance).

Because the data we gather will usually only be a portion (sample) of total possible people or places that could be affected (population), there is a possibility that the sample is unrepresentative of the population. We use a statistical test that considers that uncertainty when assessing whether an effect is associated with a factor.

- Statistical testing begins with an alternative hypothesis (H 1 ) that states that the factor we are considering results in a particular effect. The alternative hypothesis is based on the research question and the type of statistical test being used.

- Because of the problem of induction , we cannot prove our alternative hypothesis. However, under the concept of falsification , we can evaluate the data to see if there is a significant probability that our data falsifies our alternative hypothesis (Wilkinson 2012) .

- The null hypothesis (H 0 ) states that the factor has no effect. The null hypothesis is the opposite of the alternative hypothesis. The null hypothesis is what we are testing when we perform a hypothesis test.

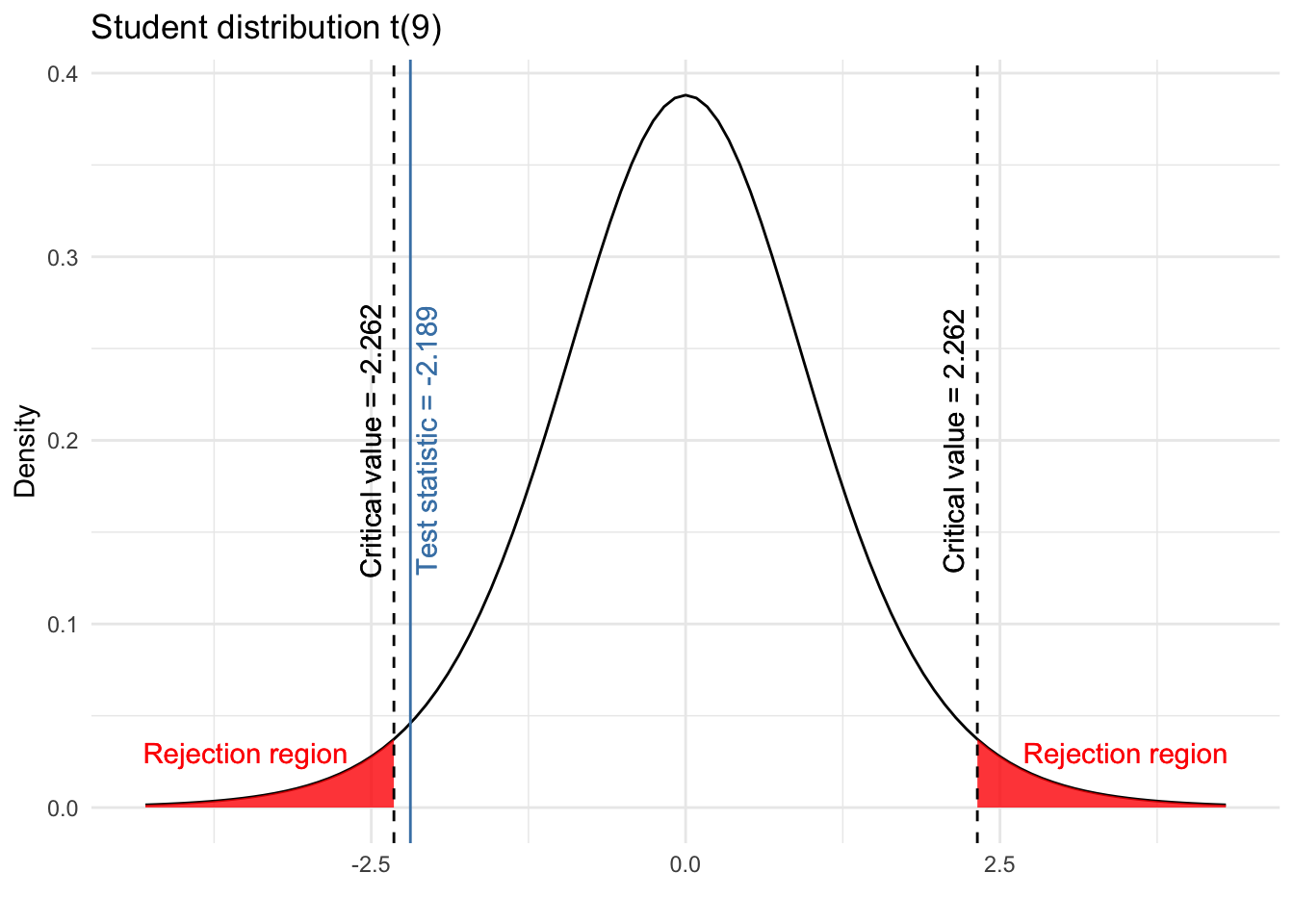

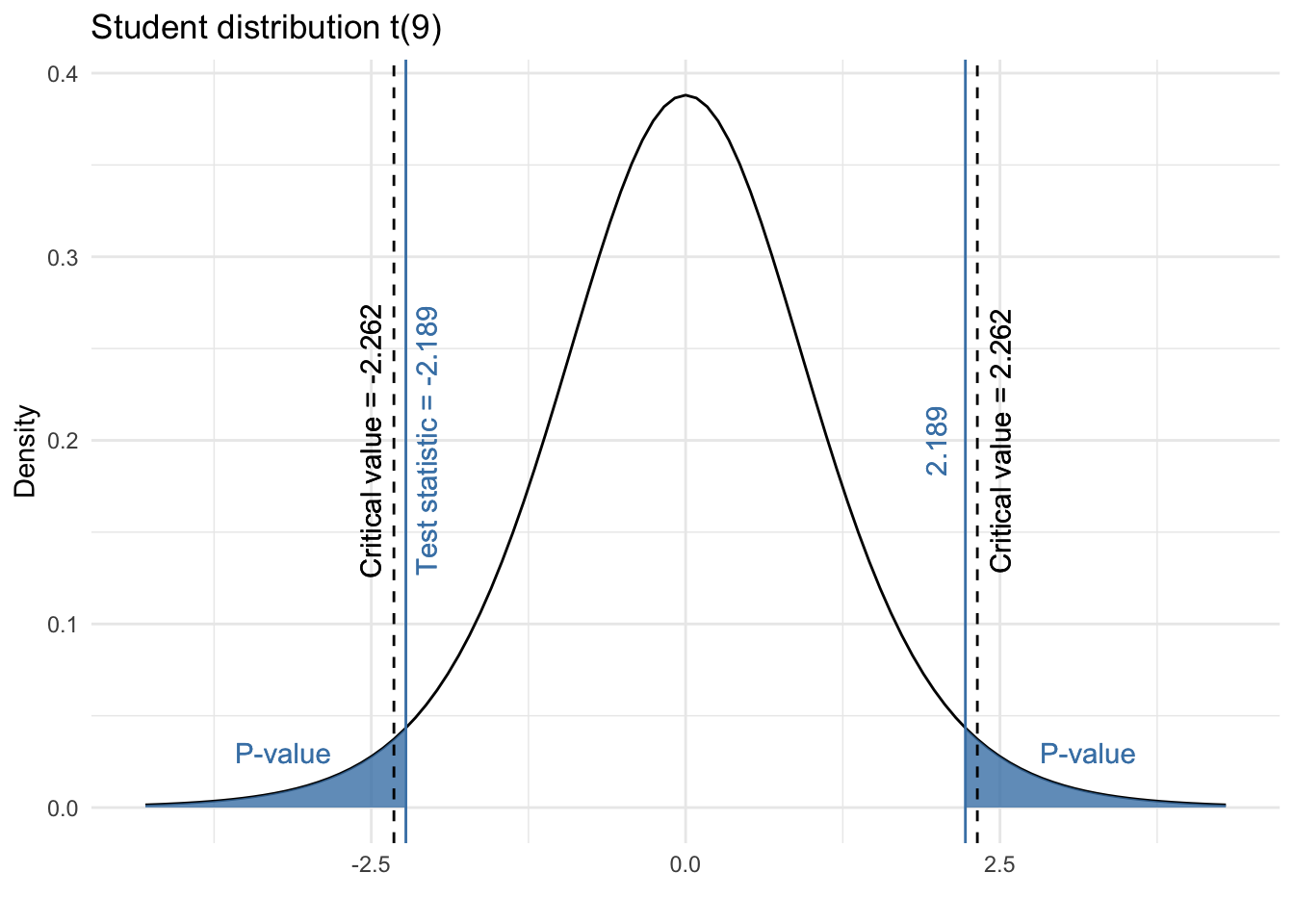

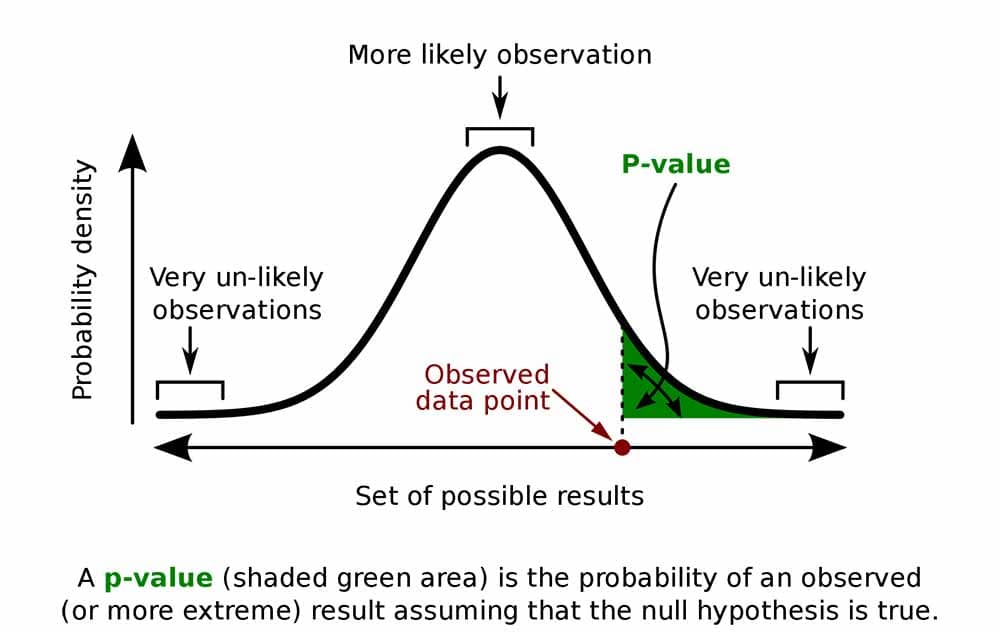

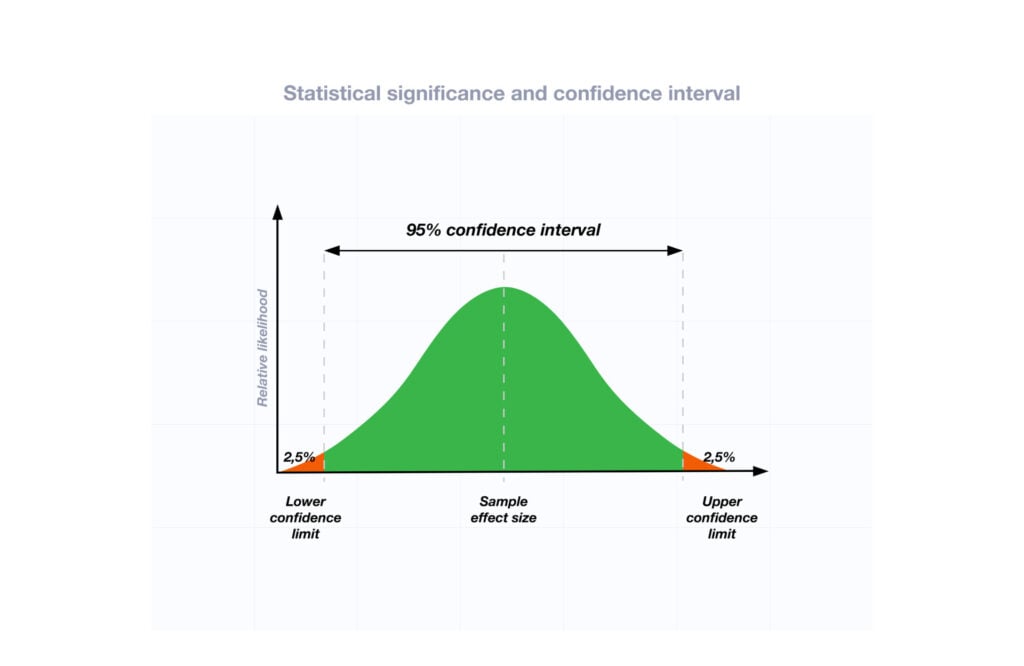

The output of a statistical test like the t-test is a p-value. A p-value is the probability of seeing an effect at least as large as the one in the sampled data if the null hypothesis were true, that is, if the effect were only the result of random sampling error (chance).

- If a p -value is greater than the significance level (0.05 for 5% significance) we fail to reject the null hypothesis since there is a significant possibility that our results falsify our alternative hypothesis.

- If a p -value is lower than the significance level (0.05 for 5% significance) we reject the null hypothesis and have corroborated (provided evidence for) our alternative hypothesis.

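The calculation and interpretation of the p-value goes back to the central limit theorem, which states that the distribution of sample means approaches a normal distribution as the sample size grows, so random sampling error in a mean is approximately normally distributed.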

Using our example of a clinical drug trial, if the mean recovery times for the two groups are close enough together that there is a significant possibility ( p > 0.05) that the recovery times are the same (falsification), we fail to reject the null hypothesis.

However, if the mean recovery times for the two groups are far enough apart that the probability they are the same is under the level of significance ( p < 0.05), we reject the null hypothesis and have corroborated our alternative hypothesis.
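The decision rule can be sketched with a simulated drug trial. Everything here is a hypothetical stand-in: the group sizes, the recovery times in days, and the names drug and placebo are assumptions chosen only to illustrate comparing a p-value with the significance level.

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(42)

# Hypothetical recovery times in days for 50 subjects per group
placebo <- rnorm(50, mean = 10, sd = 2)
drug <- rnorm(50, mean = 8, sd = 2)

# Two-sample t-test; the null hypothesis is that the group means are equal
result <- t.test(drug, placebo)

# Compare the p-value with the significance level
alpha <- 0.05
decision <- if (result$p.value < alpha) "reject H0" else "fail to reject H0"

result$p.value
decision
```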

Significance means that an effect is "probably caused by something other than mere chance" (Merriam-Webster 2022) .

- The significance level (α) is the threshold for significance and, by convention, is usually 5%, 10%, or 1%, which corresponds to 95% confidence, 90% confidence, or 99% confidence, respectively.

- A factor is considered statistically significant if the p-value (the probability of seeing an effect at least this large from random sampling error alone) is below the chosen significance level.

- A statistical test is used to evaluate whether a factor being considered is statistically significant (Gallo 2016) .

Type I vs. Type II Errors

Although we are making a binary choice between rejecting and failing to reject the null hypothesis, because we are using sampled data, there is always the possibility that the choice we have made is an error.

There are two types of errors that can occur in hypothesis testing.

- Type I error (false positive) occurs when a low p -value causes us to reject the null hypothesis, but the factor does not actually result in the effect.

- Type II error (false negative) occurs when a high p -value causes us to fail to reject the null hypothesis, but the factor does actually result in the effect.
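Both error rates can be estimated by simulation. The sketch below is an illustration under assumed settings (30 values per group, 2,000 repetitions, and a true difference of 0.5 standard deviations for the Type II case); none of these numbers come from the tutorial.

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(1)

alpha <- 0.05
n_trials <- 2000

# Type I: both groups come from the same distribution, so every rejection is a false positive
p_null <- replicate(n_trials, t.test(rnorm(30), rnorm(30))$p.value)
type1_rate <- mean(p_null < alpha)

# Type II: the groups truly differ, so every failure to reject is a false negative
p_alt <- replicate(n_trials, t.test(rnorm(30), rnorm(30, mean = 0.5))$p.value)
type2_rate <- mean(p_alt >= alpha)

type1_rate  # should land near alpha
type2_rate  # depends on effect size and sample size
```

The Type I rate is controlled by the significance level, while the Type II rate falls as the sample size or the true effect grows.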

The numbering of the errors reflects the predisposition of the scientific method to be fundamentally skeptical . Accepting a fact about the world as true when it is not true is considered worse than rejecting a fact about the world that actually is true.

Statistical Significance vs. Importance

When we reject the null hypothesis, we have found information that is commonly called statistically significant. But there are multiple challenges with this terminology.

First, statistical significance is distinct from importance (NIST 2012) . For example, if sampled data reveals a statistically significant difference in cancer rates, that does not mean that the increased risk is important enough to justify expensive mitigation measures. All statistical results require critical interpretation within the context of the phenomenon being observed. People with different values and incentives can have different interpretations of whether statistically significant results are important.

Second, the use of 95% probability for defining confidence intervals is an arbitrary convention. This creates a good vs. bad binary that suggests a "finality and certitude that are rarely justified." Alternative approaches like Bayesian statistics that express results as probabilities can offer more nuanced ways of dealing with complexity and uncertainty (Clayton 2022).

Science vs. Non-science

Not all ideas can be falsified, and Popper uses the distinction between falsifiable and non-falsifiable ideas to make a distinction between science and non-science. In order for an idea to be science it must be an idea that can be demonstrated to be false.

While Popper asserts there is still value in ideas that are not falsifiable, such ideas are not science in his conception of what science is. Such non-science ideas often involve questions of subjective values or unseen forces that are complex, amorphous, or difficult to objectively observe.

| Falsifiable (Science) | Non-Falsifiable (Non-Science) |
|---|---|
| Murder death rates by firearms tend to be higher in countries with higher gun ownership rates | Murder is wrong |
| Marijuana users may be more likely than nonusers to | The benefits of marijuana outweigh the risks |
| Job candidates who meaningfully research the companies they are interviewing with have higher success rates | Prayer improves success in job interviews |

Example Data

As example data, this tutorial will use a table of anonymized individual responses from the CDC's Behavioral Risk Factor Surveillance System . The BRFSS is a "system of health-related telephone surveys that collect state data about U.S. residents regarding their health-related risk behaviors, chronic health conditions, and use of preventive services" (CDC 2019) .

A CSV file with the selected variables used in this tutorial is available here and can be imported into R with read.csv() .

Guidance on how to download and process this data directly from the CDC website is available here...

Variable Types

The publicly-available BRFSS data contains a wide variety of discrete, ordinal, and categorical variables. Variables often contain special codes for non-responsiveness or missing (NA) values. Examples of how to clean these variables are given here...

The BRFSS has a codebook that gives the survey questions associated with each variable, and the way that responses are encoded in the variable values.

Normality Tests

Tests are commonly divided into two groups depending on whether they are built on the assumption that the continuous variable has a normal distribution.

- Parametric tests presume a normal distribution.

- Non-parametric tests can work with normal and non-normal distributions.

The distinction between parametric and non-parametric techniques is especially important when working with small numbers of samples (less than 40 or so) from a larger population.

The normality tests given below do not work well with large numbers of values (shapiro.test(), for example, accepts at most 5,000 values), but with many statistical techniques, violations of normality assumptions do not cause major problems when large sample sizes are used (Ghasemi and Zahediasl 2012).

The Shapiro-Wilk Normality Test

- Data: A continuous or discrete sampled variable

- R Function: shapiro.test()

- Null hypothesis (H0): The population distribution from which the sample is drawn is normal

- History: Samuel Sanford Shapiro and Martin Wilk (1965)

This is an example with random values from a normal distribution.
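A minimal sketch, assuming 100 simulated values (the name x_normal and the seed are illustrative assumptions):

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# 100 random values from a standard normal distribution
x_normal <- rnorm(100)

# Shapiro-Wilk test; a high p-value means we fail to reject normality
normal_test <- shapiro.test(x_normal)
print(normal_test)
```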

This is an example with random values from a uniform (non-normal) distribution.
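A minimal sketch, assuming 500 simulated values (the name x_uniform, the sample size, and the seed are illustrative assumptions; a larger sample makes the departure from normality easier to detect):

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# 500 random values from a uniform distribution on [0, 1]
x_uniform <- runif(500)

# Shapiro-Wilk test; a low p-value leads us to reject normality
uniform_test <- shapiro.test(x_uniform)
print(uniform_test)
```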

The Kolmogorov-Smirnov Test

The Kolmogorov-Smirnov test is a more generalized test than the Shapiro-Wilk test and can be used to test whether a sample is drawn from any type of distribution.

- Data: A continuous or discrete sampled variable and a reference probability distribution

- R Function: ks.test()

- Null hypothesis (H0): The population distribution from which the sample is drawn matches the reference distribution

- History: Andrey Kolmogorov (1933) and Nikolai Smirnov (1948)

- pearson.test(): The Pearson chi-square normality test from the nortest library. Lower p-values (closer to 0) lead us to reject the null hypothesis that the distribution is normal.
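Because the Kolmogorov-Smirnov test accepts any reference distribution, the same sample can be checked against more than one. A minimal sketch, assuming simulated uniform values (x_uniform and the seed are illustrative assumptions), tests the sample against a uniform and then a standard normal reference:

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# 200 random values from a uniform distribution on [0, 1]
x_uniform <- runif(200)

# Reference distribution given by the name of its cumulative distribution function
ks_vs_uniform <- ks.test(x_uniform, "punif")  # matches how the data were generated
ks_vs_normal <- ks.test(x_uniform, "pnorm")   # standard normal: a poor match

ks_vs_uniform$p.value
ks_vs_normal$p.value
```

A low p-value leads us to reject the null hypothesis that the sample was drawn from the reference distribution.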

Modality Tests of Samples

Comparing Two Central Tendencies: Tests with Continuous / Discrete Data

One Sample T-Test (Two-Sided)

The one-sample t-test tests the significance of the difference between the mean of a sample and an expected mean.

- Data: A continuous or discrete sampled variable and a single expected mean (μ)

- Parametric (normal distributions)

- R Function: t.test()

- Null hypothesis (H0): The mean of the sampled distribution matches the expected mean.

- History: William Sealy Gosset (1908)

$$t = \frac{\bar{x} - \mu}{\hat{\sigma} / \sqrt{n}}$$

- $t$: The value of t used to find the p-value

- $\bar{x}$: The sample mean

- $\mu$: The expected (population) mean

- $\hat{\sigma}$: The estimate of the standard deviation of the population (usually the standard deviation of the sample)

- $n$: The sample size
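The formula can be checked by hand against t.test(). This is a sketch with a small hypothetical sample (the values in x and the expected mean of 178 are assumptions used only to show the arithmetic):

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# Hypothetical sample of 25 weights in pounds and an expected mean
x <- rnorm(25, mean = 180, sd = 40)
mu <- 178
n <- length(x)

# t statistic: (sample mean - expected mean) / (estimated sd / sqrt(n))
t_manual <- (mean(x) - mu) / (sd(x) / sqrt(n))

# Two-sided p-value from the t distribution with n - 1 degrees of freedom
p_manual <- 2 * pt(-abs(t_manual), df = n - 1)

# The built-in test should give the same statistic and p-value
t_builtin <- t.test(x, mu = mu)
c(manual = t_manual, builtin = unname(t_builtin$statistic))
```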

T-tests should only be used when the population is at least 20 times larger than its respective sample. If the sample size is very large, even a trivially small difference produces a low p-value, which can make an unimportant difference look significant.

For example, we test a hypothesis that the mean weight in IL in 2020 is different than the 2005 continental mean weight.

Walpole et al. (2012) estimated that the average adult weight in North America in 2005 was 178 pounds. We could presume that Illinois is a comparatively normal North American state that would follow the trend of both increased age and increased weight (CDC 2021) .
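A hedged sketch of this test follows. The il_weight vector is a simulated stand-in for the Illinois responses, not BRFSS data: its mean of 182 pounds echoes the Illinois mean quoted later in this tutorial, and the sample size and standard deviation of 45 are purely assumptions, so the printed p-value is illustrative only.

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# Simulated stand-in for 2020 Illinois weights in pounds (NOT actual survey values)
il_weight <- rnorm(1000, mean = 182, sd = 45)

# Two-sided one-sample t-test against the 2005 continental mean of 178 pounds
result <- t.test(il_weight, mu = 178)
print(result)
```

With the real data, il_weight would be the cleaned weight column for Illinois respondents.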

The low p-value leads us to reject the null hypothesis and corroborate our alternative hypothesis that mean weight changed between 2005 and 2020 in Illinois.

One Sample T-Test (One-Sided)

Because we were expecting an increase, we can modify our hypothesis to state that the mean weight in 2020 is higher than the continental weight in 2005. We can perform a one-sided t-test using the alternative="greater" parameter.
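A minimal sketch of the one-sided version, again using a simulated stand-in for the Illinois weights (the vector il_weight, its size, mean, and standard deviation are assumptions, not survey values):

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# Simulated stand-in for 2020 Illinois weights in pounds (NOT actual survey values)
il_weight <- rnorm(1000, mean = 182, sd = 45)

# One-sided test: the alternative hypothesis is that the true mean exceeds 178
result <- t.test(il_weight, mu = 178, alternative = "greater")
print(result)
```

When the sample mean lies on the hypothesized side, the one-sided p-value is half the two-sided p-value.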

The low p-value leads us to again reject the null hypothesis and corroborate our alternative hypothesis that mean weight in 2020 is higher than the continental weight in 2005.

Note that this does not clearly evaluate whether weight increased specifically in Illinois, or, if it did, whether that was caused by an aging population or decreasingly healthy diets. Hypotheses based on such questions would require more detailed analysis of individual data.

Although we can see that the mean cancer incidence rate is higher for counties near nuclear plants, there is the possibility that the difference in means happened by accident and the nuclear plants have nothing to do with those higher rates.

The t-test allows us to test a hypothesis. Note that a t-test does not "prove" or "disprove" anything. It only gives the probability that the differences we see between two areas happened by chance. It also does not evaluate whether there are other problems with the data, such as a third variable, or inaccurate cancer incidence rate estimates.

Note that this does not prove that nuclear power plants present a higher cancer risk to their neighbors. It simply says that the slightly higher risk is probably not due to chance alone. But there are a wide variety of other related or unrelated social, environmental, or economic factors that could contribute to this difference.

Box-and-Whisker Chart

One visualization commonly used when comparing distributions (collections of numbers) is a box-and-whisker chart. The boxes show the range of values in the middle 25% to 50% to 75% of the distribution and the whiskers show the extreme high and low values.

Although Google Sheets does not provide the capability to create box-and-whisker charts, Google Sheets does have candlestick charts , which are similar to box-and-whisker charts, and which are normally used to display the range of stock price changes over a period of time.

This video shows how to create a candlestick chart comparing the distributions of cancer incidence rates. The QUARTILE() function gets the values that divide the distribution into four equally-sized parts. This shows that while the range of incidence rates in the non-nuclear counties is wider, the bulk of the rates are below the rates in nuclear counties, giving a visual demonstration of the numeric output of our t-test.

While categorical data can often be reduced to dichotomous data and used with proportions tests or t-tests, there are situations where you are sampling data that falls into more than two categories and you would like to make hypothesis tests about those categories. This tutorial describes a group of tests that can be used with that type of data.

Two-Sample T-Test

When comparing means of values from two different groups in your sample, a two-sample t-test is in order.

The two-sample t-test tests the significance of the difference between the means of two different samples.

- Two normally-distributed, continuous or discrete sampled variables, OR

- A normally-distributed continuous or discrete sampled variable and a parallel dichotomous variable indicating what group each of the values in the first variable belongs to

- Null hypothesis (H 0 ): The means of the two sampled distributions are equal.

For example, given the low incomes and delicious foods prevalent in Mississippi, we might presume that average weight in Mississippi would be higher than in Illinois.

We test a hypothesis that the mean weight in IL in 2020 is less than the 2020 mean weight in Mississippi.
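A hedged sketch of this comparison, using simulated stand-ins for the two states (the group means of 182 and 187 pounds are the ones quoted in this tutorial; the sample sizes and the standard deviation of 45 are assumptions, and none of the values are actual survey responses):

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# Simulated stand-ins for 2020 weights in pounds (NOT actual survey values)
il_weight <- rnorm(1000, mean = 182, sd = 45)
ms_weight <- rnorm(1000, mean = 187, sd = 45)

# One-sided two-sample test: is the Illinois mean less than the Mississippi mean?
result <- t.test(il_weight, ms_weight, alternative = "less")
print(result)
```

By default t.test() runs the Welch version of the test, which does not assume equal variances in the two groups.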

The low p-value leads us to reject the null hypothesis and corroborate our alternative hypothesis that mean weight in Illinois is less than in Mississippi.

While the difference in means is statistically significant, it is small (182 vs. 187), so interpret it with caution and avoid using the analysis simply to reinforce unhelpful stigmatization.

Wilcoxon Rank Sum Test (Mann-Whitney U-Test)

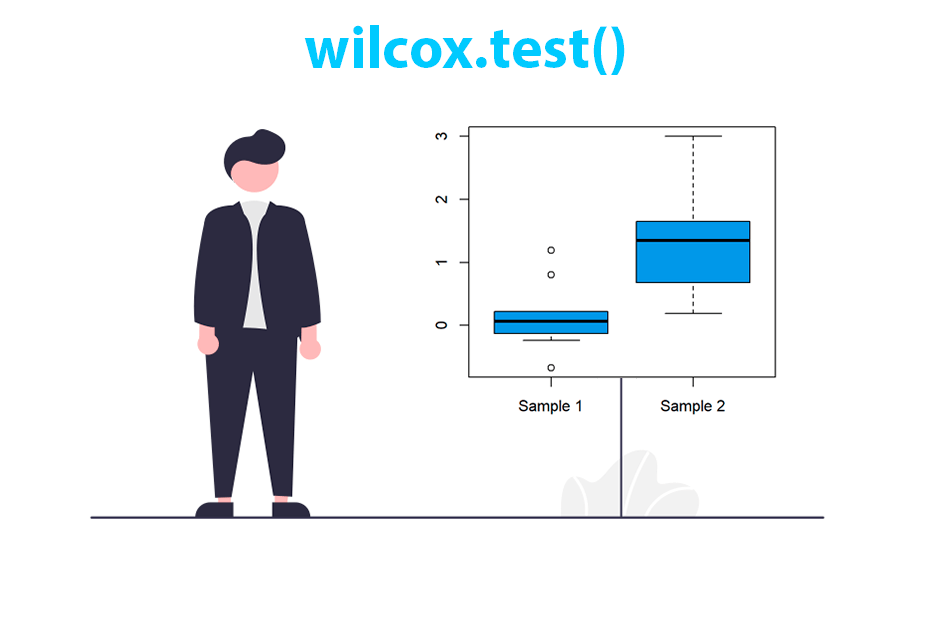

The Wilcoxon rank sum test tests the significance of the difference between the distributions of two different samples by comparing the ranks of their values rather than their means. This is a non-parametric alternative to the t-test.

- Data: Two continuous sampled variables

- Non-parametric (normal or non-normal distributions)

- R Function: wilcox.test()

- Null hypothesis (H 0 ): For randomly selected values X and Y from two populations, the probability of X being greater than Y is equal to the probability of Y being greater than X.

- History: Frank Wilcoxon (1945) and Henry Mann and Donald Whitney (1947)

The test is implemented with the wilcox.test() function.

- When the test is performed on one sample in comparison to an expected value around which the distribution is symmetrical (μ), the test is known as a Wilcoxon signed rank test.

- When the test is performed to compare two samples, the test is known as a Wilcoxon rank sum test, which is equivalent to the Mann-Whitney U test.

For this example, we will use AVEDRNK3: During the past 30 days, on the days when you drank, about how many drinks did you drink on the average?

- 1 - 76: Number of drinks

- 77: Don’t know/Not sure

- 99: Refused

- NA: Not asked or Missing

The histogram clearly shows this to be a non-normal distribution.

Continuing the comparison of Illinois and Mississippi from above, we might presume that with all that warm weather and excellent food in Mississippi, they might be inclined to drink more. The means of the average number of drinks per drinking day seem to suggest that Mississippians do drink more than Illinoisans.

We can use wilcox.test() to test a hypothesis that the average amount of drinking in Illinois is different than in Mississippi. Like the t-test, the alternative can be specified as two-sided or one-sided, and for this example we will test whether the sampled Illinois value is indeed less than the Mississippi value.
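A hedged sketch of the one-sided test, using simulated skewed counts in place of the AVEDRNK3 responses (the vectors il_drinks and ms_drinks, their sizes, and the Poisson rates are assumptions, not survey values):

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# Simulated stand-ins for drinks per drinking day (skewed counts, minimum of 1)
il_drinks <- rpois(500, lambda = 2) + 1
ms_drinks <- rpois(500, lambda = 2.5) + 1

# One-sided rank sum test: do Illinois values tend to be lower than Mississippi values?
result <- wilcox.test(il_drinks, ms_drinks, alternative = "less")
print(result)
```

With the real data, the special codes 77 and 99 would need to be converted to NA before running the test.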

The low p-value leads us to reject the null hypothesis and corroborates our hypothesis that average drinking is lower in Illinois than in Mississippi. As before, this tells us nothing about why this is the case.

Weighted Two-Sample T-Test

The downloadable BRFSS data is raw, anonymized survey data that is biased by uneven geographic coverage of survey administration (noncoverage) and lack of responsiveness from some segments of the population (nonresponse). The X_LLCPWT field (landline, cellphone weighting) is a weighting factor added by the CDC that can be assigned to each response to compensate for these biases.

The wtd.t.test() function from the weights library has weight and weighty parameters that can be used to include weighting factors for the two samples as part of the t-test.

Comparing Proportions: Tests with Categorical Data

Chi-Squared Goodness of Fit

- Tests the significance of the difference between sampled frequencies of different values and expected frequencies of those values

- Data: A categorical sampled variable and a table of expected frequencies for each of the categories

- R Function: chisq.test()

- Null hypothesis (H0): The relative proportions of categories in one variable match the expected proportions

- History: Karl Pearson (1900)

- Example Question: Are the voting preferences of voters in my district significantly different from the current national polls?

For example, we test a hypothesis that smoking rates changed between 2000 and 2020.

In 2000, the estimated rate of adult smoking in Illinois was 22.3% (Illinois Department of Public Health 2004) .

The variable we will use is SMOKDAY2: Do you now smoke cigarettes every day, some days, or not at all?

- 1: Current smoker - now smokes every day

- 2: Current smoker - now smokes some days

- 3: Not at all

- 7: Don't know

- NA: Not asked or missing - NA is used for people who have never smoked

We subset only yes/no responses in Illinois and convert into a dummy variable (yes = 1, no = 0).

The listing of the table as percentages indicates that smoking rates were halved between 2000 and 2020, but since this is sampled data, we need to run a chi-squared test to make sure the difference can't be explained by the randomness of sampling.
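A hedged sketch of the goodness of fit test, using a simulated dummy variable in place of the Illinois responses (the vector smoker, the sample size of 2,000, and the simulated smoking rate of 12% are assumptions; only the 22.3% expected rate comes from the text):

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# Simulated stand-in: 1 = current smoker, 0 = non-smoker (NOT actual survey values)
smoker <- rbinom(2000, size = 1, prob = 0.12)

# Observed counts, with the category order fixed as non-smoker, smoker
observed <- table(factor(smoker, levels = c(0, 1)))

# Expected proportions from the 2000 estimate, in the same order
expected <- c(1 - 0.223, 0.223)

# Observed proportions, then the goodness of fit test
prop.table(observed)
result <- chisq.test(observed, p = expected)
print(result)
```

The expected proportions must be listed in the same order as the categories in the table and must sum to 1.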

In this case, the very low p-value leads us to reject the null hypothesis and corroborates the alternative hypothesis that smoking rates changed between 2000 and 2020.

Chi-Squared Contingency Analysis / Test of Independence

- Tests the significance of the difference between frequencies between two different groups

- Data: Two categorical sampled variables

- Null hypothesis (H 0 ): The relative proportions of one variable are independent of the second variable.

We can also compare categorical proportions between two sets of sampled categorical variables.

The chi-squared test can be used to determine if two categorical variables are independent. What is passed as the parameter is a contingency table created with the table() function that cross-classifies the number of rows that are in the categories specified by the two categorical variables.

The null hypothesis with this test is that the two categories are independent. The alternative hypothesis is that there is some dependency between the two categories.

For this example, we can compare the three categories of smokers (daily = 1, occasionally = 2, never = 3) across the two categories of states (Illinois and Mississippi).
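A hedged sketch of the independence test, using simulated categories in place of the survey responses (the vectors state and smoking, the group sizes, and the category probabilities are assumptions, not survey values):

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# Simulated stand-ins: 500 respondents per state (NOT actual survey values)
state <- rep(c("Illinois", "Mississippi"), each = 500)
smoking <- factor(c(
  sample(1:3, 500, replace = TRUE, prob = c(0.25, 0.10, 0.65)),
  sample(1:3, 500, replace = TRUE, prob = c(0.35, 0.10, 0.55))
), levels = 1:3)

# Cross-classify the two categorical variables into a contingency table
contingency <- table(state, smoking)
print(contingency)

# Chi-squared test of independence on the contingency table
result <- chisq.test(contingency)
print(result)
```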

The low p-value leads us to reject the null hypothesis that the categories are independent and corroborates our hypothesis that smoking behaviors in the two states are indeed different.

p-value = 1.516e-09

Weighted Chi-Squared Contingency Analysis

As with the weighted t-test above, the weights library contains the wtd.chi.sq() function for incorporating weighting into chi-squared contingency analysis.

As above, the even lower p-value leads us to again reject the null hypothesis that smoking behaviors are independent in the two states.

Suppose that the Macrander campaign would like to know how partisan this election is. If people are largely choosing to vote along party lines, the campaign will seek to get their base voters out to the polls. If people are splitting their ticket, the campaign may focus their efforts more broadly.

In the example below, the Macrander campaign took a small poll of 30 people asking who they wished to vote for AND what party they most strongly affiliate with.

The output of table() shows a fairly strong relationship between party affiliation and candidates. Democrats tend to vote for Macrander and Republicans tend to vote for Stewart, while independents all vote for Miller.

This is reflected in the very low p-value from the chi-squared test. A relationship this strong would be very unlikely to appear in the sample if the two categories were independent. Therefore we reject the null hypothesis.

In contrast, suppose that the poll results had shown there were a number of people crossing party lines to vote for candidates outside their party. The simulated data below uses the runif() function to randomly choose 50 party names.

The contingency table() shows no clear relationship between party affiliation and candidate. This is validated quantitatively by the chi-squared test. The fairly high p-value of 0.4018 indicates that differences at least this large would appear about 40% of the time if the two categories were independent. Therefore, we fail to reject the null hypothesis and the campaign should focus their efforts on the broader electorate.

The warning message given by the chisq.test() function indicates that the sample size is too small to make an accurate analysis. The simulate.p.value = T parameter adds Monte Carlo simulation to the test to improve the estimation and get rid of the warning message. However, the best way to get rid of this message is to get a larger sample.
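A hedged sketch of the Monte Carlo option on a small simulated poll. The data are an assumption: party and candidate are drawn independently with sample() for 30 hypothetical respondents, so this does not reproduce the poll results or the p-value discussed above.

```r
# Make the example reproducible (the seed value is arbitrary)
set.seed(0)

# Hypothetical poll of 30 people with party and candidate chosen independently
party <- sample(c("Democrat", "Republican", "Independent"), 30, replace = TRUE)
candidate <- sample(c("Macrander", "Stewart", "Miller"), 30, replace = TRUE)

# Small cell counts are what trigger the approximation warning in the default test
poll <- table(party, candidate)
print(poll)

# Monte Carlo p-value based on B simulated tables instead of the chi-squared approximation
result <- chisq.test(poll, simulate.p.value = TRUE, B = 2000)
print(result)
```

The simulated p-value varies slightly from run to run unless a seed is set.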

Comparing Categorical and Continuous Variables

Analysis of Variance (ANOVA)

Analysis of Variance (ANOVA) is a test that you can use when you have a categorical variable and a continuous variable. It is a test that considers variability between means for different categories as well as the variability of observations within groups.

There are a wide variety of different extensions of ANOVA that deal with covariance (ANCOVA), multiple variables (MANOVA), and both of those together (MANCOVA). These techniques can become quite complicated and also assume that the values in the continuous variables have a normal distribution.

- Data: One or more categorical (independent) variables and one continuous (dependent) sampled variable

- R Function: aov()

- Null hypothesis (H 0 ): There is no difference in means of the groups defined by each level of the categorical (independent) variable

- History: Ronald Fisher (1921)

- Example Question: Do low-, middle- and high-income people vary in the amount of time they spend watching TV?

As an example, we look at the continuous weight variable (WEIGHT2) split into groups by the eight income categories in INCOME2: Is your annual household income from all sources?

- 1: Less than $10,000

- 2: $10,000 to less than $15,000

- 3: $15,000 to less than $20,000

- 4: $20,000 to less than $25,000

- 5: $25,000 to less than $35,000

- 6: $35,000 to less than $50,000

- 7: $50,000 to less than $75,000

- 8: $75,000 or more

The barplot() of means does show variation among groups, although there is no clear linear relationship between income and weight.

To test whether this variation could be explained by randomness in the sample, we run the ANOVA test.

The low p-value leads us to reject the null hypothesis that there is no difference in the means of the different groups, and corroborates the alternative hypothesis that mean weights differ based on income group.

However, it gives us no clear model for describing that relationship and offers no insights into why income would affect weight, especially in such a nonlinear manner.

Suppose you are performing research into obesity in your city. You take a sample of 30 people in three different neighborhoods (90 people total), collecting information on health and lifestyle. Two variables you collect are height and weight so you can calculate body mass index (BMI). Although this index can be misleading for some populations (notably very athletic people), ordinary sedentary people can be classified according to BMI:

Average BMI in the US from 2007-2010 was around 28.6 and rising, with a standard deviation of around 5.

You would like to know if there is a difference in BMI between different neighborhoods so you can know whether to target specific neighborhoods or make broader city-wide efforts. Since you have more than two groups, you cannot use t.test().
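A minimal sketch of this setup with aov() follows. The survey data is simulated, the neighborhood names are hypothetical, and every group is drawn from the same normal distribution (mean 28.6, sd 5), so no real difference is built in.

```r
set.seed(1)  # arbitrary seed

# Hypothetical sample: 30 people in each of three neighborhoods.
# All BMI values come from one normal distribution (mean 28.6, sd 5).
survey <- data.frame(
  neighborhood = factor(rep(c("North", "Central", "South"), each = 30)),
  bmi          = rnorm(90, mean = 28.6, sd = 5)
)

# One-way ANOVA: continuous response as a function of the grouping factor
fit <- aov(bmi ~ neighborhood, data = survey)
anova_table <- summary(fit)[[1]]
print(anova_table)
```

The Df column shows 2 degrees of freedom between groups (three neighborhoods minus one) and 87 within groups (90 people minus three group means).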

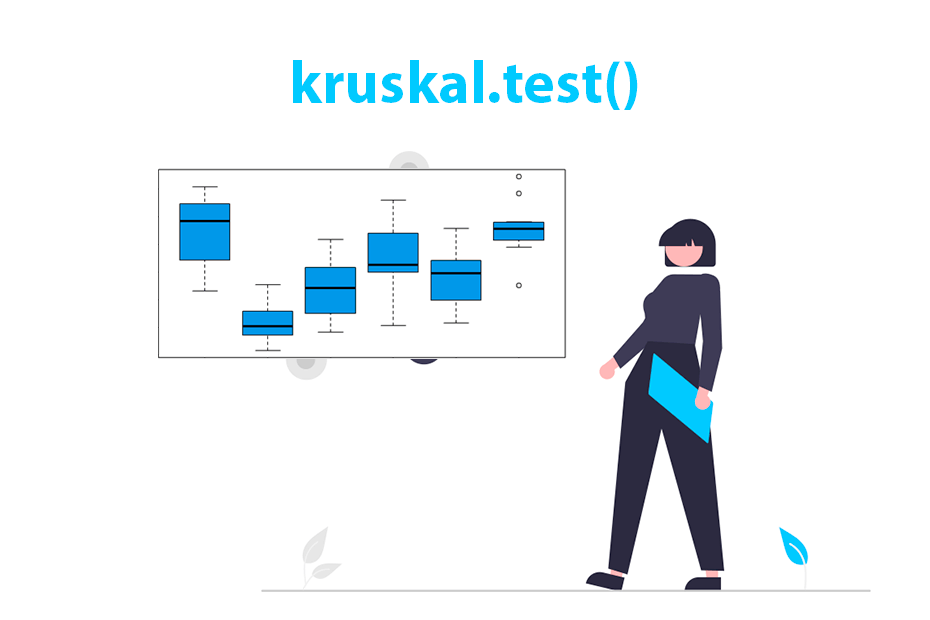

Kruskal-Wallis One-Way Analysis of Variance

A somewhat simpler test is the Kruskal-Wallis test, which is a nonparametric analogue to ANOVA for testing the significance of differences between two or more groups.

- R Function: kruskal.test()

- Null hypothesis (H 0 ): The samples come from the same distribution.

- History: William Kruskal and W. Allen Wallis (1952)

For this example, we will investigate whether weight varies between the three major US urban states: New York, Illinois, and California.

To test whether this variation could be explained by randomness in the sample, we run the Kruskal-Wallis test.

The low p-value leads us to reject the null hypothesis that the samples come from the same distribution. This supports the alternative hypothesis that weights differ based on state.
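The state-level weight data is not included here, so this sketch shows the same call on R's built-in airquality data as a stand-in: ozone readings grouped by month instead of weights grouped by state.

```r
# Stand-in data: daily ozone readings grouped by month (built-in airquality)
kw <- kruskal.test(Ozone ~ Month, data = airquality)
print(kw)

# Degrees of freedom are the number of groups minus one (five months here)
print(kw$parameter)
```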

A convenient way of visualizing a comparison between continuous and categorical data is with a box plot, which shows the distribution of a continuous variable across different groups:

A percentile is the level at which a given percentage of the values in the distribution are below: the 5th percentile means that five percent of the numbers are below that value.

The quartiles divide the distribution into four parts. 25% of the numbers are below the first quartile. 75% are below the third quartile. 50% are below the second quartile, making it the median.

Box plots can be used with both sampled data and population data.

The first parameter to the box plot is a formula: the continuous variable as a function of (the tilde) the second variable. A data= parameter can be added if you are using variables in a data frame.
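As an illustration of the formula interface, the sketch below uses R's built-in ToothGrowth data as a stand-in: tooth length (continuous) split by dose (three groups). The axis labels are choices made for this example.

```r
# len (continuous) as a function of dose (grouping variable)
bp <- boxplot(len ~ dose, data = ToothGrowth,
              xlab = "Dose", ylab = "Tooth length")

# boxplot() invisibly returns the summaries it drew, one column per group
print(bp$stats)
```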

The chi-squared test can be used to determine if two categorical variables are independent of each other.

Statistical Tests and Assumptions

Normality Test in R

Many of the statistical methods including correlation, regression, t tests, and analysis of variance assume that the data follows a normal distribution or a Gaussian distribution. These tests are called parametric tests, because their validity depends on the distribution of the data.

Normality and the other assumptions made by these tests should be taken seriously to draw reliable interpretation and conclusions of the research.

With large enough sample sizes (> 30 or 40), the sampling distribution of the mean tends to be close to normal regardless of the shape of the data (central limit theorem), so you can often get away with using parametric tests, such as the t-test, even when the data themselves are not exactly normal.

In this chapter, you will learn how to check the normality of the data in R by visual inspection (QQ plots and density distributions) and by significance tests (Shapiro-Wilk test).

Prerequisites


Make sure you have installed the following R packages:

- tidyverse for data manipulation and visualization

- ggpubr for easily creating publication-ready plots

- rstatix provides pipe-friendly R functions for easy statistical analyses

Start by loading the packages:

We’ll use the ToothGrowth dataset. Inspect the data by displaying some random rows by groups:

- Normal distribution

- Skewed distributions

Check normality in R

Question: We want to test if the variable len (tooth length) is normally distributed.

Density plots and Q-Q plots can be used to check normality visually.

- Density plot: the density plot provides a visual judgment about whether the distribution is bell shaped.

- QQ plot: a QQ plot (or quantile-quantile plot) draws the correlation between a given sample and the normal distribution. A 45-degree reference line is also plotted. In a QQ plot, each observation is plotted as a single dot. If the data are normal, the dots should form a straight line.

As all the points fall approximately along this reference line, we can assume normality.
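For readers without ggpubr installed, the two plots can be approximated with base R graphics. This is a sketch using base functions rather than the ggpubr helpers, so the styling and the reference line differ.

```r
len <- ToothGrowth$len

# Density plot: look for a single, roughly symmetric bell shape
plot(density(len), main = "Density of tooth length")

# Q-Q plot: sample quantiles against theoretical normal quantiles
qq <- qqnorm(len, main = "Normal Q-Q plot of tooth length")
qqline(len)  # reference line through the first and third quartiles
```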

Visual inspection, described in the previous section, is usually unreliable. It’s possible to use a significance test comparing the sample distribution to a normal one in order to ascertain whether or not the data show a serious deviation from normality.

There are several methods for evaluating normality, including the Kolmogorov-Smirnov (K-S) normality test and the Shapiro-Wilk test.

The null hypothesis of these tests is that “sample distribution is normal”. If the test is significant, the distribution is non-normal.

Shapiro-Wilk’s method is widely recommended for normality testing and it provides better power than K-S. It is based on the correlation between the data and the corresponding normal scores (Ghasemi and Zahediasl 2012).

Note that normality tests are sensitive to sample size. Small samples most often pass normality tests. Therefore, it’s important to combine visual inspection and significance testing in order to make the right decision.

The R function shapiro_test() [rstatix package] provides a pipe-friendly framework to compute the Shapiro-Wilk test for one or multiple variables. It also supports grouped data. It’s a wrapper around the base R function shapiro.test().

- Shapiro test for one variable:

From the output above, the p-value > 0.05, implying that the distribution of the data is not significantly different from a normal distribution. In other words, we can assume normality.
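The same check can be sketched with the base function shapiro.test(), which shapiro_test() wraps. The decision variable is a name introduced for this example.

```r
# Shapiro-Wilk test on tooth length from the built-in ToothGrowth data
res <- shapiro.test(ToothGrowth$len)
print(res)

# Null hypothesis: the sample comes from a normal distribution
decision <- if (res$p.value > 0.05) {
  "no significant departure from normality"
} else {
  "significant departure from normality"
}
print(decision)
```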

- Shapiro test for grouped data:

- Shapiro test for multiple variables:
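A base R sketch of the grouped and multi-variable cases follows, using tapply() and sapply() in place of the rstatix pipeline. Grouping by dose and testing the len and dose columns are choices made for this example.

```r
# Grouped: one Shapiro-Wilk p-value per dose level
by_dose <- tapply(ToothGrowth$len, ToothGrowth$dose,
                  function(v) shapiro.test(v)$p.value)
print(by_dose)

# Multiple variables: one p-value per numeric column
by_variable <- sapply(ToothGrowth[c("len", "dose")],
                      function(v) shapiro.test(v)$p.value)
print(by_variable)
```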

This chapter describes how to check the normality of data using the QQ plot and the Shapiro-Wilk test.

Note that, if your sample size is greater than 50, the normal QQ plot is preferred because at larger sample sizes the Shapiro-Wilk test becomes very sensitive even to a minor deviation from normality.

Consequently, we should not rely on only one approach for assessing the normality. A better strategy is to combine visual inspection and statistical test.

Ghasemi, Asghar, and Saleh Zahediasl. 2012. “Normality Tests for Statistical Analysis: A Guide for Non-Statisticians.” Int J Endocrinol Metab 10 (2): 486–89. doi:10.5812/ijem.3505.


Alboukadel Kassambara

Role : founder of datanovia.

- Website : https://www.datanovia.com/en

- Experience : >10 years

- Specialist in : Bioinformatics and Cancer Biology

Introduction to Statistics with R

6.2 Hypothesis Tests

6.2.1 Illustrating a Hypothesis Test

Let’s say we have a batch of chocolate bars, and we’re not sure if they are from Theo’s. What can the weight of these bars tell us about the probability that these are Theo’s chocolate?

Now, let’s perform a hypothesis test on this chocolate of an unknown origin.

What is the sampling distribution of the bar weight under the null hypothesis that the bars from Theo’s weigh 40 grams on average? We’ll need to specify the standard deviation to obtain the sampling distribution, and here we’ll use \(\sigma_X = 2\) (since that’s the value we used for the distribution we sampled from).

The null hypothesis is \[H_0: \mu = 40\] since we know the mean weight of Theo’s chocolate bars is 40 grams.

The sampling distribution of the sample mean is: \[ \overline{X} \sim {\cal N}\left(\mu, \frac{\sigma}{\sqrt{n}}\right) = {\cal N}\left(40, \frac{2}{\sqrt{20}}\right). \] We can visualize the situation by plotting the p.d.f. of the sampling distribution under \(H_0\) along with the location of our observed sample mean.

6.2.2 Hypothesis Tests for Means

6.2.2.1 Known Standard Deviation

It is simple to calculate a hypothesis test in R (in fact, we already implicitly did this in the previous section). When we know the population standard deviation, we use a hypothesis test based on the standard normal, known as a \(z\)-test. Here, let’s assume \(\sigma_X = 2\) (because that is the standard deviation of the distribution we simulated from above) and specify the alternative hypothesis to be \[ H_A: \mu \neq 40. \] We will use the z.test() function from the BSDA package, specifying the confidence level via conf.level, which is \(1 - \alpha = 1 - 0.05 = 0.95\), for our test:

6.2.2.2 Unknown Standard Deviation

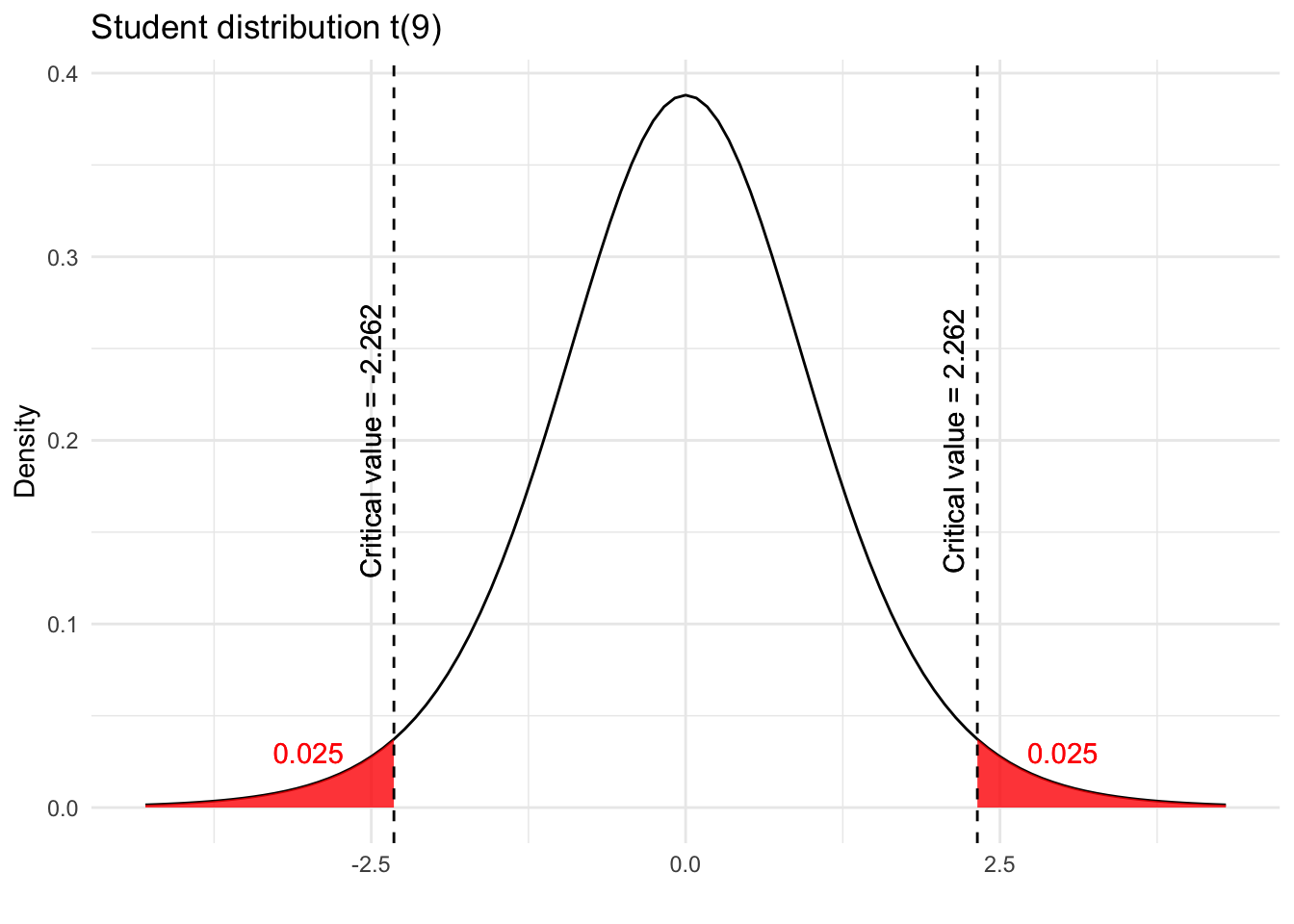

If we do not know the population standard deviation, we typically use the t.test() function included in base R. We know that: \[\frac{\overline{X} - \mu}{\frac{s_x}{\sqrt{n}}} \sim t_{n-1},\] where \(t_{n-1}\) denotes Student’s \(t\) distribution with \(n - 1\) degrees of freedom. We only need to supply the confidence level here:
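A minimal sketch of the call, assuming a hypothetical batch of 20 bars. The simulated mean of 42, the seed, and the name unknown_bars are assumptions for this example, not the original data.

```r
set.seed(123)  # arbitrary seed

# Hypothetical batch of 20 bars of unknown origin (true mean 42 is an assumption)
unknown_bars <- rnorm(20, mean = 42, sd = 2)

# One-sample t-test of H0: mu = 40 against a two-sided alternative
res <- t.test(unknown_bars, mu = 40, conf.level = 0.95)
print(res)
```

The degrees of freedom reported are n - 1 = 19, matching the \(t_{n-1}\) distribution above.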

We note that the \(p\) -value here (rounded to 4 decimal places) is 0.0031, so again, we can detect it’s not likely that these bars are from Theo’s. Even with a very small sample, the difference is large enough (and the standard deviation small enough) that the \(t\) -test can detect it.

6.2.3 Two-sample Tests

6.2.3.1 Unpooled Two-sample t-test

Now suppose we have two batches of chocolate bars, one of size 40 and one of size 45. We want to test whether they come from the same factory. However we have no information about the distributions of the chocolate bars. Therefore, we cannot conduct a one sample t-test like above as that would require some knowledge about \(\mu_0\) , the population mean of chocolate bars.

We will generate the samples from normal distribution with mean 45 and 47 respectively. However, let’s assume we do not know this information. The population standard deviation of the distributions we are sampling from are both 2, but we will assume we do not know that either. Let us denote the unknown true population means by \(\mu_1\) and \(\mu_2\) .

Consider the test \(H_0:\mu_1=\mu_2\) versus \(H_1:\mu_1\neq\mu_2\) . We can use R function t.test again, since this function can perform one- and two-sided tests. In fact, t.test assumes a two-sided test by default, so we do not have to specify that here.
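The simulation and test described above might look like the following sketch. The seed and the names batch1 and batch2 are assumptions.

```r
set.seed(2024)  # arbitrary seed

# Two batches with the sizes, means and sd described above
batch1 <- rnorm(40, mean = 45, sd = 2)
batch2 <- rnorm(45, mean = 47, sd = 2)

# Default t.test(): two-sided, variances not assumed equal (Welch)
welch <- t.test(batch1, batch2)
print(welch)
```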

The p-value is much less than .05, so we can quite confidently reject the null hypothesis. Indeed, we know from simulating the data that \(\mu_1\neq\mu_2\) , so our test led us to the correct conclusion!

Consider instead testing \(H_0:\mu_1=\mu_2\) versus \(H_1:\mu_1<\mu_2\).

As we would expect, this test also rejects the null hypothesis. One-sided tests are more common in practice as they provide a more principled description of the relationship between the datasets. For example, if you are comparing your new drug’s performance to a “gold standard”, you really only care if your drug’s performance is “better” (a one-sided alternative), and not that your drug’s performance is merely “different” (a two-sided alternative).

6.2.3.2 Pooled Two-sample t-test

Suppose you knew that the samples are coming from distributions with same standard deviations. Then it makes sense to carry out a pooled 2 sample t-test. You specify this in the t.test function as follows.
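A sketch of the pooled version, again on simulated batches (the seed and object names are assumptions). The only change from the unpooled call is var.equal = TRUE.

```r
set.seed(2024)  # arbitrary seed

batch1 <- rnorm(40, mean = 45, sd = 2)
batch2 <- rnorm(45, mean = 47, sd = 2)

# var.equal = TRUE pools the two sample variances
pooled <- t.test(batch1, batch2, var.equal = TRUE)
print(pooled)
```

With pooling, the degrees of freedom are n1 + n2 - 2 = 83 rather than the fractional value used by the unpooled test.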

6.2.3.3 Paired t-test

Suppose we take a batch of chocolate bars and stamp the Theo’s logo on them. We want to know if the stamping process significantly changes the weight of the chocolate bars. Let’s suppose that the true change in weight is distributed as a \({\cal N}(-0.3, 0.2^2)\) random variable:

Let \(\mu_1\) and \(\mu_2\) be the true means of the distributions of chocolate weights before and after the stamping process. Suppose we want to test \(H_0:\mu_1=\mu_2\) versus \(H_1:\mu_1\neq\mu_2\). We can use the R function t.test() for this by choosing paired = TRUE, which indicates that we are looking at pairs of observations corresponding to the same experimental subject and testing whether or not the difference in distribution means is zero.

We can also perform the same test as a one sample t-test using choc.after - choc.batch.

Notice that we get the exact same \(p\) -value for these two tests.
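The equivalence can be checked with a short sketch. The batch size of 20, the starting weights, and the seed are assumptions; the change in weight follows the \({\cal N}(-0.3, 0.2^2)\) distribution stated above.

```r
set.seed(7)  # arbitrary seed

# Hypothetical batch of 20 bars before stamping
choc.batch <- rnorm(20, mean = 40, sd = 2)
# Weight after stamping: each bar changes by a N(-0.3, 0.2^2) amount
choc.after <- choc.batch + rnorm(20, mean = -0.3, sd = 0.2)

paired_test <- t.test(choc.after, choc.batch, paired = TRUE)
diff_test   <- t.test(choc.after - choc.batch)  # one-sample test on differences

print(paired_test$p.value)
print(diff_test$p.value)
```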

Since the p-value is less than .05, we reject the null hypothesis at level .05. Hence, we have enough evidence in the data to claim that stamping a chocolate bar significantly reduces its weight.

6.2.4 Tests for Proportions

Let’s look at the proportion of Theo’s chocolate bars with a weight exceeding 38g:

Going back to that first batch of 20 chocolate bars of unknown origin, let’s see if we can test whether they’re from Theo’s based on the proportion weighing > 38g.

Recall from our test on the means that we rejected the null hypothesis that the means from the two batches were equal. In this case, a one-sided test is appropriate, and our hypothesis is:

Null hypothesis: \(H_0: p = 0.85\) . Alternative: \(H_A: p > 0.85\) .

We want to test this hypothesis at a level \(\alpha = 0.05\) .

In R, there is a function called prop.test() that you can use to perform tests for proportions. Note that prop.test() only gives you an approximate result.

Similarly, you can use the binom.test() function for an exact result.
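The count of bars over 38g is not shown here, so the sketch below assumes that 19 of the 20 bars exceed 38g. That count is an assumption chosen for illustration only.

```r
heavy <- 19  # assumed number of bars weighing more than 38g
n     <- 20

# Approximate test; the small sample triggers a warning, silenced here
approx_test <- suppressWarnings(
  prop.test(heavy, n, p = 0.85, alternative = "greater")
)

# Exact binomial test of the same hypothesis
exact_test <- binom.test(heavy, n, p = 0.85, alternative = "greater")

print(approx_test$p.value)
print(exact_test$p.value)
```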

The \(p\) -value for both tests is around 0.18, which is much greater than 0.05. So, we cannot reject the hypothesis that the unknown bars come from Theo’s. This is not because the tests are less accurate than the ones we ran before, but because we are testing a less sensitive measure: the proportion weighing > 38 grams, rather than the mean weights. Also, note that this doesn’t mean that we can conclude that these bars do come from Theo’s – why not?

The prop.test() function is the more versatile function in that it can deal with contingency tables, larger number of groups, etc. The binom.test() function gives you exact results, but you can only apply it to one-sample questions.

6.2.5 Power

Let’s think about when we reject the null hypothesis. We would reject the null hypothesis if we observe data with too small of a \(p\) -value. We can calculate the critical value where we would reject the null if we were to observe data that would lead to a more extreme value.

Suppose we take a sample of chocolate bars of size n = 20, and our null hypothesis is that the bars come from Theo’s (\(H_0\): mean = 40, sd = 2). Then for a one-sided test (versus larger alternatives), we can calculate the critical value by using the quantile function in R, specifying the mean and sd of the sampling distribution of \(\overline X\) under \(H_0\):

Now suppose we want to calculate the power of our hypothesis test: the probability of rejecting the null hypothesis when the null hypothesis is false. In order to do so, we need to compare the null to a specific alternative, so we choose \(H_A\) : mean = 42, sd = 2 . Then the probability that we reject the null under this specific alternative is
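Both steps can be sketched in base R with qnorm() and pnorm(), assuming a significance level of 0.05. The variable names are choices made for this example.

```r
n     <- 20
alpha <- 0.05          # assumed significance level
se    <- 2 / sqrt(n)   # sd of the sample mean under both hypotheses

# Critical value: reject H0 when the sample mean exceeds this
crit <- qnorm(1 - alpha, mean = 40, sd = se)

# Power: probability of exceeding the critical value when the true mean is 42
power <- pnorm(crit, mean = 42, sd = se, lower.tail = FALSE)

print(crit)
print(power)
```

Under these assumptions the critical value is about 40.74 and the power is above 0.99, because the alternative mean sits more than four standard errors above 40.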

We can use R to perform the same calculations using the power.z.test from the asbio package:

Intro to hypothesis testing

Hypothesis testing is all about answering the question: for a parameter \(\theta\) , is a parameter value of \(\theta_0\) consistent with the data in our observed sample?

We call this the null hypothesis and write

\[ H_0 : \theta = \theta_0 \]

where this means that the true (population) value of a parameter \(\theta\) is equal to some value \(\theta_0\).

What do we do next? We assume that \(\theta = \theta_0\) in the population, and then check if this assumption is compatible with our observed data. The population with \(\theta = \theta_0\) corresponds to a probability distribution, which we call the null distribution .

Let’s make this concrete. Suppose that we observe data \(2, 3, 7\) and we know that our data comes from a normal distribution with known variance \(\sigma^2 = 2\) . Realistically, we won’t know \(\sigma^2\) , or that our data is normal, but we’ll work with these assumptions for now and relax them later.

Let’s suppose we’re interested in the population mean. Let’s guess that the population mean is 8. In this case we would write the null hypothesis as \(H_0 : \mu = 8\) . This is a ridiculous guess for the population mean given our data, but it’ll illustrate our point. Our null distribution is then \(\mathrm{Normal}(8, 2)\) .

Now that we have a null distribution, we need to dream up a test statistic. In this class, you’ll always be given a test statistic. For now we’ll use the Z statistic.

\[ Z = {\bar x - \mu_0 \over \mathrm{se}\left(\bar x \right)} = {\bar x - \mu_0 \over {\sigma \over \sqrt n}} = {4 - 8 \over \sqrt \frac 23} \approx -4.9 \]

Recall: a statistic \(T(X)\) is a function from a random sample into the real line. Since statistics are functions of random samples, they are themselves random variables.

Test statistics are chosen to have two important properties:

- They need to relate to the population parameter we’re interested in measuring

- We need to know their sampling distributions

Sampling distributions you say! Why do test statistics have sampling distributions? Because we’re just taking a function of a random sample.

For this example, we know that

\[ Z \sim \mathrm{Normal}(0, 1) \]

and now we ask how probable this statistic is, given that we have assumed that the null distribution is true.

The idea is that if this number is very small, then our null distribution can’t be correct: we shouldn’t observe highly unlikely statistics. This means that hypothesis testing is a form of falsification testing .

For the example above, we are interested in the probability of observing a more extreme test statistic given the null distribution, which in this case is:

\[ P(|Z| > 4.9) = P(Z < -4.9) + P(Z > 4.9) \approx 9.6 \cdot 10^{-7} \]

This probability is called a p-value . Since it’s very small, we conclude that the null hypothesis is not realistic. In other words, the population mean is statistically distinguishable from 8 (whether or not it is practically distinguishable from 8 is entirely another matter).
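The arithmetic for this example can be reproduced in a few lines of R. This is a sketch; the variable names are choices made here.

```r
x     <- c(2, 3, 7)   # observed data
mu0   <- 8            # null value of the mean
sigma <- sqrt(2)      # known standard deviation (variance is 2)

# Z statistic: distance of the sample mean from mu0 in standard errors
z <- (mean(x) - mu0) / (sigma / sqrt(length(x)))

# Two-sided p-value: probability of a statistic at least this extreme
p_value <- 2 * pnorm(-abs(z))

print(z)
print(p_value)
```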

This is the gist of hypothesis testing. Of course there’s a bunch of other associated nonsense that obscures the basic idea, which we’ll dive into next.

Things that can go wrong

False positives

We need to be concerned about rejecting the null hypothesis when the null hypothesis is true. This is called a false positive or a Type I error.

If the null hypothesis is true, and we calculate a statistic like we did above, we still expect to see a p-value of \(9.6 \cdot 10^{-7}\) or smaller about \(9.6 \cdot 10^{-5}\) percent of the time. For small p-values this isn’t an issue, but let’s consider a different null hypothesis of \(\mu_0 = 3.9\). Now

\[ Z = {\bar x - \mu_0 \over {\sigma \over \sqrt n}} = {4 - 3.9 \over \sqrt \frac 23} \approx 0.12 \]

and our corresponding p-value is

\[ P(|Z| > 0.12) = P(Z < -0.12) + P(Z > 0.12) \approx 0.9 \]

and we see that this is quite probable! We should definitely not reject the null hypothesis!

This leads us to a new question: when should we reject the null hypothesis? A standard choice is to set an acceptable probability for a false positive \(\alpha\) . One arbitrary but common choice is to set \(\alpha = 0.05\) , which means we are okay with a \({1 \over 20}\) chance of a false positive. We should then reject the null hypothesis when the p-value is less than \(\alpha\) . This is often called “rejecting the null hypothesis at significance level \(\alpha\) ”. More formally, we might write

\[ P(\text{reject} \; H_0 | H_0 \; \text{true}) = \alpha \]

False negatives

On the other hand, we may also fail to reject the null hypothesis when the null hypothesis is in fact false. We might just not have enough data to reject the null, for example. We call this a false negative or a Type II error, and write its probability as \(\beta\). The power of a test is the probability of correctly rejecting a false null hypothesis:

\[ \text{Power} = P(\text{reject} \; H_0 | H_0 \; \text{false}) = 1 - \beta \]

To achieve a power of \(1 - \beta\) for a one sample Z-test, you need

\[ n \approx \left( { \sigma \cdot (z_{\alpha / 2} + z_\beta) \over \mu_0 - \mu_A } \right)^2 \]

where \(\mu_A\) is the true mean and \(\mu_0\) is the proposed mean. We’ll do an exercise later that will help you see where this comes from.
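As a sketch of how the formula is used, the code below plugs in illustrative values: \(\sigma = 1.8\), a difference of 0.2 between \(\mu_0\) and \(\mu_A\), \(\alpha = 0.05\) and a target power of 0.8. These numbers are assumptions, and \(z_{\alpha/2}\) and \(z_\beta\) are taken as upper-tail quantiles.

```r
sigma <- 1.8
mu0   <- 15
mu_a  <- 15.2
alpha <- 0.05
beta  <- 0.20   # target power is 1 - beta = 0.8

z_alpha <- qnorm(1 - alpha / 2)  # upper alpha/2 quantile
z_beta  <- qnorm(1 - beta)       # upper beta quantile

n_required <- (sigma * (z_alpha + z_beta) / (mu0 - mu_a))^2
print(ceiling(n_required))  # round up to a whole number of observations
```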

A company claims battery lifetimes are normally distributed with \(\mu = 40\) and \(\sigma = 5\) hours. We are curious if the claim about the mean is reasonable, and collect a random sample of 100 batteries. The sample mean is 39.8. What is the p-value of a Z-test for \(H_0 : \mu = 40\) ?

We begin by calculating a Z-score

\[ Z = {\bar x - \mu_0 \over {\sigma \over \sqrt n}} = {39.8 - 40 \over {5 \over \sqrt{100}}} = -0.4 \]

and then we calculate, using the fact that \(Z \sim \mathrm{Normal}(0, 1)\) ,

\[ P(Z < -0.4) + P(Z > 0.4) \approx 0.69 \]

We might also be interested in a one-sided test, where \(H_A : \mu < 40\). In this case only the lower tail \(Z < -0.4\) counts as extreme, and the p-value is

\[ P(Z < -0.4) \approx 0.34 \]
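The battery calculation can be checked with pnorm(). This is a sketch with variable names chosen for the example.

```r
xbar  <- 39.8
mu0   <- 40
sigma <- 5
n     <- 100

z <- (xbar - mu0) / (sigma / sqrt(n))

p_two_sided <- 2 * pnorm(-abs(z))  # H_A: mu != 40
p_lower     <- pnorm(z)            # H_A: mu < 40

print(c(z = z, two_sided = p_two_sided, lower = p_lower))
```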

Power for Z-test

Suppose a powdered medicine is supposed to have a mean particle diameter of \(\mu = 15\) micrometers, and the standard deviation of diameters stays steady around 1.8 micrometers. The company would like to have high power to detect mean diameters 0.2 micrometers away from 15. When \(n = 100\), what is the power of the test if the true \(\mu\) is 15.2 micrometers? Assume the company is interested in controlling type I error at an \(\alpha = 0.05\) level.

We will reject the null when our Z score is less than \(z_{\alpha / 2}\) or greater than \(z_{1 - \alpha / 2}\), that is, when the Z score is less than -1.96 or greater than 1.96. Recall that the Z score is \({\bar x - \mu_0 \over {\sigma \over \sqrt n}}\), which we can rearrange in terms of \(\bar x\) to see that we will reject the null when \(\bar x < 14.65\) or \(\bar x > 15.35\).

Now we are interested in the probability of being in this rejection region when the alternative hypothesis \(\mu_A = 15.2\) is true .

\[ P(\bar x > 15.35 | \mu = 15.2) + P(\bar x < 14.65 | \mu = 15.2) \]

and we know that \(\bar x \sim \mathrm{Normal} \left(15.2, 1.8 / \sqrt{100}\right)\) so this equals

\[ 0.198 + 0.001 \approx 0.199 \]

So we have only a power of about 20 percent. This is quite low.
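The rejection region and the power can be reproduced with the following sketch. The variable names are choices made here.

```r
mu0   <- 15
mu_a  <- 15.2
sigma <- 1.8
n     <- 100
alpha <- 0.05

se <- sigma / sqrt(n)

# Rejection region for the sample mean under H0
lower <- mu0 + qnorm(alpha / 2) * se
upper <- mu0 + qnorm(1 - alpha / 2) * se

# Probability of landing in the rejection region when the true mean is mu_a
power <- pnorm(lower, mean = mu_a, sd = se) +
  pnorm(upper, mean = mu_a, sd = se, lower.tail = FALSE)

print(c(lower = lower, upper = upper, power = power))
```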


HYPOTHESIS TESTING IN R

Hypothesis testing is a statistical procedure used to make decisions or draw conclusions about the characteristics of a population based on information provided by a sample

NORMALITY TESTS

Normality tests are used to evaluate whether a data sample follows a normal distribution. They check whether the data behave like draws from a Gaussian distribution, which is useful for determining whether the normality assumption of certain parametric statistical analyses is met.

Shapiro Wilk normality test

shapiro.test()

Lilliefors normality test

lillie.test()

GOODNESS OF FIT TESTS

These tests are used to verify whether a proposed theoretical distribution adequately matches the observed data. They are useful for assessing whether a specific distribution fits the data well, and so whether a theoretical model accurately represents the observed data distribution.

Pearson's Chi-squared test with chisq.test()

chisq.test()

Kolmogorov-Smirnov test with ks.test()

MEDIAN TESTS

Median tests are used to test whether the medians of two or more groups are statistically different, thus identifying whether there are significant differences in medians between populations or treatments

Wilcoxon signed rank test

wilcox.test()

Wilcoxon rank sum test (Mann-Whitney U test)

Kruskal Wallis rank sum test (H test)

kruskal.test()

OTHER TYPES OF TESTS

There are other types of tests, such as tests for comparing means, for equality of variances or for equality of proportions

T-test to compare means

F test with var.test() to compare two variances

Test for proportions with prop.test()

prop.test()


Quantitative Methods Using R

10 Hypothesis Testing

Hypothesis testing is a method used to make decisions about population parameters based on sample data.

10.1 Hypothesis

A hypothesis is an educated guess or statement about the relationship between variables or the characteristics of a population. In hypothesis testing, there are two main hypotheses:

10.1.1 Null hypothesis (H0):

This hypothesis states that there is no effect or no relationship between variables. It is typically the hypothesis that the researcher wants to disprove.

10.1.2 Alternative hypothesis (H1):

This hypothesis states that there is an effect or a relationship between variables. It is the hypothesis that the researcher wants to prove or provide evidence for.

10.2 Decision Type Error

When performing hypothesis testing, there are two types of decision errors:

- Type I Error (α): This error occurs when the null hypothesis is rejected when it is actually true. In other words, it’s a false positive. The probability of committing a Type I error is denoted by the significance level (α), which is typically set at 0.05 or 0.01.

- Type II Error (β): This error occurs when the null hypothesis is not rejected when it is actually false. In other words, it’s a false negative. The probability of committing a Type II error is denoted by β. The power of a test (1 - β) measures the ability of the test to detect an effect when it truly exists.

Here is a graphical representation of the types of decision errors:

Hypothesis Testing Errors

This table represents the different outcomes when making decisions based on hypothesis testing. The columns represent the reality (i.e., whether the null hypothesis is true or false), and the rows represent the decision made based on the hypothesis test (i.e., whether to reject or not reject the null hypothesis). The cells show the types of decision errors (Type I and Type II errors) and the correct decisions.

10.3 Level of Significance

The level of significance is a critical component in hypothesis testing because it sets a threshold for determining whether an observed effect is statistically significant or not.

The level of significance is denoted by the Greek letter α (alpha) and represents the probability of making a Type I error. A Type I error occurs when we reject the null hypothesis (H0) when it is actually true. By choosing a level of significance, researchers define the risk they are willing to take when rejecting a true null hypothesis. Common levels of significance are 0.05 (5%) and 0.01 (1%).

To better understand the role of the level of significance in hypothesis testing, let’s consider the following steps:

Formulate the null hypothesis (H0) and the alternative hypothesis (H1): The null hypothesis typically states that there is no effect or relationship between variables, while the alternative hypothesis states that there is an effect or relationship.

Choose a level of significance (α): Determine the threshold for the probability of making a Type I error. For example, if α is set to 0.05, there is a 5% chance of rejecting a true null hypothesis.

Perform the statistical test and calculate the test statistic: The test statistic is calculated using the sample data, and it helps determine how far the observed sample mean is from the hypothesized population mean. In the case of a single mean, a one-sample t-test is commonly used, and the test statistic is the t-value.

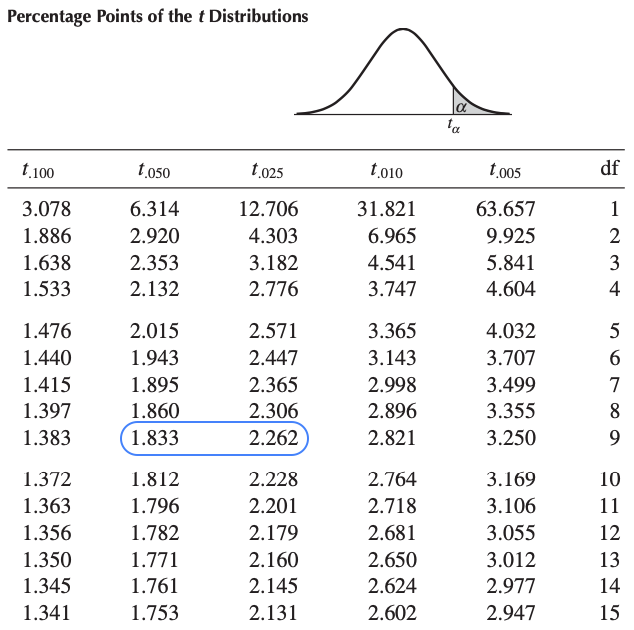

Determine the critical value or p-value: Compare the calculated test statistic with the critical value or the p-value (probability value) to make a decision about the null hypothesis. The critical value is a threshold value that depends on the chosen level of significance and the distribution of the test statistic. The p-value represents the probability of obtaining a test statistic as extreme or more extreme than the observed test statistic under the assumption that the null hypothesis is true.

Make a decision: If the test statistic is more extreme than the critical value, or if the p-value is less than the level of significance (α), reject the null hypothesis. Otherwise, fail to reject the null hypothesis.
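The claim that α is the probability of rejecting a true null hypothesis can be checked with a short simulation. The sketch below is illustrative only: the sample size, number of simulations, and seed are assumptions, not values from the text. It repeatedly draws samples for which H0 is true by construction and records how often a one-sample t-test rejects at α = 0.05.

```r
set.seed(123)      # fixed seed so the simulation is reproducible
alpha  <- 0.05     # chosen level of significance
n_sims <- 10000    # number of simulated studies (assumed)
n      <- 20       # sample size per study (assumed)

# Simulate studies in which H0 (mu = 0) is true and collect the p-values
p_values <- replicate(n_sims, {
  x <- rnorm(n, mean = 0, sd = 1)
  t.test(x, mu = 0)$p.value
})

# Proportion of simulated studies that (wrongly) reject H0
type1_rate <- mean(p_values < alpha)
type1_rate
```

The resulting proportion should be close to α, because every rejection in this setup is a Type I error.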

10.4 T-statistic

The t-statistic is a standardized measure used in hypothesis testing to compare the observed sample mean with the hypothesized population mean. It takes into account the sample mean, the hypothesized population mean, and the standard error of the mean. Mathematically, the t-statistic can be calculated using the following formula:

t = (X̄ - μ) / (s / √n)

where:

- t is the t-statistic

- X̄ is the sample mean

- μ is the hypothesized population mean

- s is the sample standard deviation

- n is the sample size

10.4.1 T-distribution

The t-distribution, also known as the Student’s t-distribution, is a probability distribution that is used when the population standard deviation is unknown and the sample size is small. It is similar to the normal distribution but has thicker tails, which accounts for the increased variability due to using the sample standard deviation as an estimate of the population standard deviation. The shape of the t-distribution depends on the degrees of freedom (df), which is related to the sample size (df = n - 1). As the sample size increases, the t-distribution approaches the normal distribution.
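As a rough illustration of the last point, the 97.5th percentile of the t-distribution can be compared with that of the standard normal distribution for increasing degrees of freedom. The particular df values below are chosen arbitrarily for the sketch.

```r
dfs <- c(2, 5, 10, 30, 100, 1000)    # degrees of freedom to compare (assumed)

t_quantiles <- qt(0.975, df = dfs)   # two-sided 5% critical values of t
z_quantile  <- qnorm(0.975)          # corresponding normal critical value

# The difference shrinks toward zero as df grows
data.frame(df = dfs,
           t_quantile = t_quantiles,
           difference = t_quantiles - z_quantile)
```

The heavier tails of the t-distribution show up as larger critical values for small df.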

To calculate the t-statistic in R, you can use the following code:
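A minimal sketch of this calculation, assuming a made-up sample vector `x` and a hypothesized mean `mu0` (both hypothetical, chosen only for illustration):

```r
# Hypothetical sample data (made up for illustration)
x   <- c(5.1, 4.9, 5.6, 5.8, 5.2, 5.5, 4.8, 5.9, 5.3, 5.7)
mu0 <- 5               # hypothesized population mean

x_bar <- mean(x)       # sample mean
s     <- sd(x)         # sample standard deviation
n     <- length(x)     # sample size

# t = (x_bar - mu0) / (s / sqrt(n))
t_stat <- (x_bar - mu0) / (s / sqrt(n))
t_stat
```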

To perform a one-sample t-test in R, which calculates the t-statistic and p-value automatically, you can use the t.test() function:
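A minimal sketch, again assuming a made-up sample and a hypothesized mean of 5 (neither comes from the original text):

```r
# Hypothetical sample data (made up for illustration)
x <- c(5.1, 4.9, 5.6, 5.8, 5.2, 5.5, 4.8, 5.9, 5.3, 5.7)

# Two-sided one-sample t-test of H0: mu = 5
result <- t.test(x, mu = 5, alternative = "two.sided", conf.level = 0.95)
print(result)

# Individual pieces of the result
result$statistic   # t-statistic
result$parameter   # degrees of freedom (n - 1)
result$p.value     # p-value

# Decision at the chosen level of significance
alpha     <- 0.05
reject_h0 <- result$p.value < alpha
reject_h0
```

The `statistic` element should match the value obtained from the formula in Section 10.4 when it is applied to the same data.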

10.4.2 Interpreting Normality Evidence

When using a t-test, the assumption of normality is important: the test assumes that the data follow a normal distribution, and verifying this assumption helps ensure the validity of the test results. To assess the normality of the data, we can use visual methods (histograms, Q-Q plots) and statistical tests (e.g., Shapiro-Wilk test).

To generate normality evidence after performing a t-test, you can use the following methods:

Visual methods: Histograms and Q-Q plots can provide a visual assessment of the normality of the data.

Statistical tests: Shapiro-Wilk test and Kolmogorov-Smirnov test are commonly used to test for normality. These tests generate p-values, which can be compared with a chosen significance level (e.g., 0.05) to determine if the data deviate significantly from normality.

In R, you can create a histogram and Q-Q plot using the following code:

- Create a histogram and Q-Q plot:

- Perform the Shapiro-Wilk test:
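The two steps above can be sketched as follows. The data here are simulated from a normal distribution purely for illustration; in practice `x` would be the sample used in the t-test.

```r
set.seed(1)                              # reproducible simulated data
x <- rnorm(100, mean = 50, sd = 10)      # assumed example data

# Histogram: look for a roughly bell-shaped distribution
hist(x, main = "Histogram of x", xlab = "x")

# Q-Q plot: points should fall close to the reference line
qqnorm(x)
qqline(x)

# Shapiro-Wilk test: H0 is that the data come from a normal distribution
sw <- shapiro.test(x)
sw
sw$p.value
```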

To interpret the normality evidence, follow these guidelines:

Visual methods: Inspect the histogram and Q-Q plot. If the histogram is roughly bell-shaped and the points on the Q-Q plot fall approximately on the reference line, the data can be considered approximately normally distributed.

Statistical tests: Check the p-values of the normality tests. If the p-value is greater than the chosen significance level (e.g., 0.05), the null hypothesis (i.e., the data follow a normal distribution) cannot be rejected. This suggests that the data do not deviate significantly from normality.

Keep in mind that no single method is foolproof, and it’s often a good idea to use a combination of visual and statistical methods to assess normality. If the data appear to be non-normal, you might consider using non-parametric alternatives to the t-test or transforming the data to achieve normality.

10.5 Statistical Power

Statistical power is the probability of correctly rejecting the null hypothesis when it is false, which means not committing a Type II error. Power is influenced by factors such as sample size, effect size, and the chosen significance level (α). Power analysis helps researchers determine the appropriate sample size needed to achieve a desired level of power, typically 0.8 or higher.

To perform power analysis in R, you can use the pwr package, which provides a set of functions for power calculations in various statistical tests, including the t-test.

Here’s a step-by-step procedure for calculating power in R:

- Install and load the pwr package:

- Define the parameters for power analysis. You will need to specify the effect size (Cohen’s d), sample size, and significance level (α):

- Use the pwr.t.test() function to calculate the power for a one-sample t-test:
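The three steps above can be sketched as follows. The effect size, sample size, and significance level are illustrative assumptions, not values taken from the text.

```r
# install.packages("pwr")   # run once if the package is not yet installed
library(pwr)

# Parameters for the power analysis (assumed values)
effect_size <- 0.5    # Cohen's d
sample_size <- 30
alpha       <- 0.05

# Power of a two-sided one-sample t-test
result <- pwr.t.test(n = sample_size,
                     d = effect_size,
                     sig.level = alpha,
                     type = "one.sample",
                     alternative = "two.sided")
print(result)
result$power

# Leaving n out and supplying power instead solves for the required sample size
needed <- pwr.t.test(d = effect_size, sig.level = alpha, power = 0.8,
                     type = "one.sample", alternative = "two.sided")
ceiling(needed$n)
```

`pwr.t.test()` solves for whichever of `n`, `d`, `sig.level`, or `power` is left unspecified, so the same function covers both calculating power and planning a sample size.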

The output will show the calculated power, sample size, effect size, and significance level. If the power is below the desired level (e.g., 0.8), you can adjust the sample size or effect size and recalculate the power to determine the necessary changes for achieving the desired power level.

It’s essential to consider the practical implications of the effect size and sample size when planning a study. A large effect size may be easier to detect but might not occur frequently in real-world situations. Conversely, a small effect size might be more difficult to detect and may require a larger sample size to achieve adequate power.

An Introduction to Bayesian Thinking

Chapter 5 Hypothesis Testing with Normal Populations

In Section 3.5, we described how Bayes factors can be used for hypothesis testing. Now we will use Bayes factors to compare normal means, i.e., test whether the mean of a population is zero or compare the means of two normally distributed populations. We divide this task into three cases: known variance for a single population, unknown variance for a single population using paired data, and unknown variance using two independent groups.

Also note that some of the examples in this section use an updated version of the bayes_inference function. If your local output is different from what is seen in this chapter, or the provided code fails to run for you, please make sure that you have the most recent version of the package.

5.1 Bayes Factors for Testing a Normal Mean: variance known

Now we show how to obtain Bayes factors for testing hypotheses about a normal mean, where the variance is known. To start, let’s consider a random sample of observations from a normal population with mean \(\mu\) and pre-specified variance \(\sigma^2\). We consider testing whether the population mean \(\mu\) is equal to \(m_0\) or not.

Therefore, we can formulate the data and hypotheses as below:

Data \[Y_1, \cdots, Y_n \mathrel{\mathop{\sim}\limits^{\rm iid}}\textsf{Normal}(\mu, \sigma^2)\]

- \(H_1: \mu = m_0\)

- \(H_2: \mu \neq m_0\)

We also need to specify priors for \(\mu\) under both hypotheses. Under \(H_1\), we assume that \(\mu\) is exactly \(m_0\), so this occurs with probability 1 under \(H_1\). Under \(H_2\), \(\mu\) is unspecified, so we describe our prior uncertainty with the conjugate normal distribution centered at the hypothesized value \(m_0\) with variance \(\sigma^2/\mathbf{n_0}\). Centering the prior at \(m_0\) means that, a priori, the mean is equally likely to be larger or smaller than \(m_0\). The hyperparameter \(n_0\), which divides the variance, controls the precision of the prior as before.

In mathematical terms, the priors are:

- \(H_1: \mu = m_0 \text{ with probability 1}\)

- \(H_2: \mu \sim \textsf{Normal}(m_0, \sigma^2/\mathbf{n_0})\)

Bayes Factor

Now the Bayes factor for comparing \(H_1\) to \(H_2\) is the ratio of the distribution of the data under the assumption that \(\mu = m_0\) to the distribution of the data under \(H_2\).

\[\begin{aligned} \textit{BF}[H_1 : H_2] &= \frac{p(\text{data}\mid \mu = m_0, \sigma^2 )} {\int p(\text{data}\mid \mu, \sigma^2) p(\mu \mid m_0, \mathbf{n_0}, \sigma^2)\, d \mu} \\ \textit{BF}[H_1 : H_2] &=\left(\frac{n + \mathbf{n_0}}{\mathbf{n_0}} \right)^{1/2} \exp\left\{-\frac 1 2 \frac{n }{n + \mathbf{n_0}} Z^2 \right\} \\ Z &= \frac{(\bar{Y} - m_0)}{\sigma/\sqrt{n}} \end{aligned}\]

The term in the denominator requires integration to account for the uncertainty in \(\mu\) under \(H_2\). It can be shown that the Bayes factor is a function of the observed sample size \(n\), the prior sample size \(n_0\), and a \(Z\) score.

Let’s explore how the hyperparameter \(n_0\) influences the Bayes factor in Equation (5.1). For illustration we will use a sample size of 100. Recall that for estimation, we interpreted \(n_0\) as a prior sample size and considered the limiting case where \(n_0\) goes to zero as a non-informative or reference prior.

\[\begin{equation} \textsf{BF}[H_1 : H_2] = \left(\frac{n + \mathbf{n_0}}{\mathbf{n_0}}\right)^{1/2} \exp\left\{-\frac{1}{2} \frac{n }{n + \mathbf{n_0}} Z^2 \right\} \tag{5.1} \end{equation}\]
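Equation (5.1) is straightforward to evaluate directly. The sketch below defines a helper function, `bf_h1_h2()` (a hypothetical name introduced here, not part of any package), and tabulates the Bayes factor for \(n = 100\) over a few values of \(n_0\) and \(Z\); the specific grids are assumptions chosen for illustration.

```r
# Bayes factor BF[H1 : H2] from Equation (5.1)
# z: Z score, n: observed sample size, n0: prior sample size
bf_h1_h2 <- function(z, n, n0) {
  sqrt((n + n0) / n0) * exp(-0.5 * (n / (n + n0)) * z^2)
}

n         <- 100
n0_values <- c(0.01, 0.1, 1, 10)        # assumed grid of prior sample sizes
z_values  <- c(1.65, 1.96, 2.81, 3.62)  # assumed grid of Z scores

# Rows index Z, columns index n0
bf_table <- outer(z_values, n0_values,
                  function(z, n0) bf_h1_h2(z, n, n0))
dimnames(bf_table) <- list(paste0("Z = ", z_values),
                           paste0("n0 = ", n0_values))
round(bf_table, 3)
```

Values above 1 favor \(H_1\) and values below 1 favor \(H_2\); within each column the Bayes factor decreases as \(Z\) grows.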

Figure 5.1 shows the Bayes factor for comparing \(H_1\) to \(H_2\) on the y-axis as \(n_0\) changes on the x-axis. The different lines correspond to different values of the \(Z\) score, or how many standard errors \(\bar{y}\) is from the hypothesized mean. As expected, larger values of the \(Z\) score favor \(H_2\).

Figure 5.1: Vague prior for mu: n=100

But as \(n_0\) becomes smaller and approaches 0, the first term in the Bayes factor goes to infinity, while the exponential term involving the data converges to a constant and so cannot offset it. In the limit as \(n_0 \rightarrow 0\) under this noninformative prior, the Bayes factor paradoxically ends up favoring \(H_1\) regardless of the value of \(\bar{y}\).

The takeaway from this is that we cannot use improper priors with \(n_0 = 0\) if we are going to test our hypothesis that \(\mu = m_0\). Similarly, vague priors that use a small value of \(n_0\) are not recommended due to the sensitivity of the results to the choice of an arbitrarily small value of \(n_0\).

This problem, in which the Bayes factor under a vague prior favors the null model \(H_1\) even when the data are far away from the value under the null, is known as Bartlett’s paradox or the Jeffreys-Lindley paradox.
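This limiting behavior can be seen numerically. The sketch below evaluates Equation (5.1) for a fixed \(Z\) score of 3 and \(n = 100\) (both assumed values) while \(n_0\) shrinks toward zero; `bf_h1_h2()` is a hypothetical helper name used only for this illustration.

```r
# Bayes factor BF[H1 : H2] from Equation (5.1)
bf_h1_h2 <- function(z, n, n0) {
  sqrt((n + n0) / n0) * exp(-0.5 * (n / (n + n0)) * z^2)
}

n0_values <- 10^(0:-8)    # 1, 0.1, ..., 1e-8
bf_values <- bf_h1_h2(z = 3, n = 100, n0 = n0_values)

# Even with data 3 standard errors from m0, BF[H1 : H2] grows without
# bound as n0 -> 0 and eventually exceeds 1, i.e. it favors H1
data.frame(n0 = n0_values, BF = bf_values)
```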

Now, one way to understand the effect of the prior is through the standardized effect size

\[\delta = \frac{\mu - m_0}{\sigma}.\]

The prior of the standardized effect size is

\[\delta \mid H_2 \sim \textsf{Normal}(0, \frac{1}{\mathbf{n_0}})\]

This allows us to think about a standardized effect independent of the units of the problem. One default choice is the unit information prior, where the prior sample size \(n_0\) is 1, leading to a standard normal prior for the standardized effect size. This is depicted with the blue normal density in Figure 5.2. It implies that we expect the mean to be within \(\pm 1.96\) standard deviations of the hypothesized mean with probability 0.95. (Note that we can say this only under a Bayesian setting.)
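The 0.95 probability statement follows directly from the normal prior on \(\delta\) and can be checked in a couple of lines. This is a small sketch using base R only; the variable names are introduced here for illustration.

```r
n0       <- 1                 # unit information prior
prior_sd <- sqrt(1 / n0)      # delta | H2 ~ Normal(0, 1 / n0)

# Central 95% prior interval for the standardized effect size
interval <- qnorm(c(0.025, 0.975), mean = 0, sd = prior_sd)
interval

# Prior probability that delta lies within +/- 1.96
coverage <- pnorm(1.96, mean = 0, sd = prior_sd) -
            pnorm(-1.96, mean = 0, sd = prior_sd)
coverage
```

Changing `n0` shows how the prior interval widens for smaller prior sample sizes and narrows for larger ones.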