10 Real-Life Experimental Research Examples

Chris Drew (PhD)

Dr. Chris Drew is the founder of the Helpful Professor. He holds a PhD in education and has published over 20 articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education.


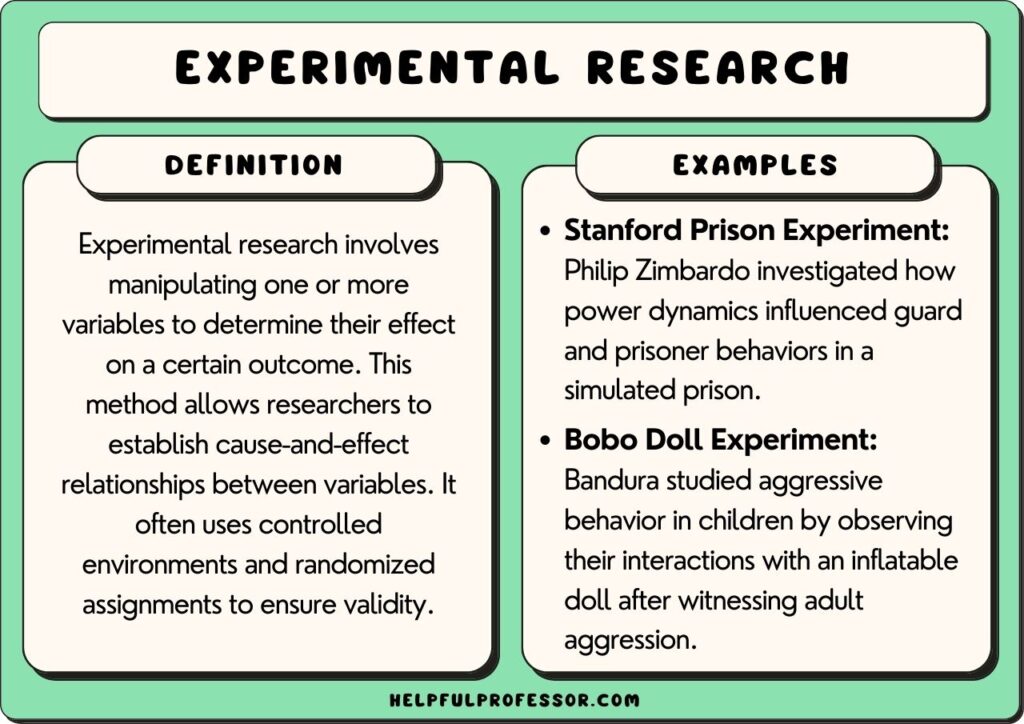

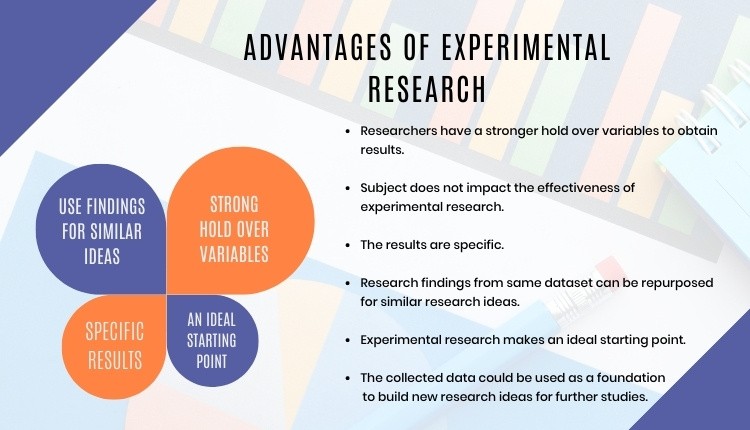

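Experimental research is research in which the investigator manipulates one or more variables under controlled conditions and measures the effect on an outcome, using a scientific approach to test cause and effect.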

Below are some famous experimental research examples. Some of these studies were conducted quite a long time ago. Some were so controversial that they would never be attempted today. And some were so unethical that they would never be permitted again.

A few of these studies have also had very practical implications for modern society involving criminal investigations, the impact of television and the media, and the power of authority figures.

Examples of Experimental Research

1. Pavlov’s Dog: Classical Conditioning

Dr. Ivan Pavlov was a physiologist studying animal digestive systems in the 1890s. In one study, he presented food to a dog and then collected its saliva via a tube attached to the inside of the animal’s mouth.

As he was conducting his experiments, an annoying thing kept happening: every time his assistant entered the lab with a bowl of food for the experiment, the dog would start to salivate at the sound of the assistant’s footsteps.

Although this disrupted his experimental procedures, it eventually dawned on Pavlov that there was something to be learned from this problem.

Pavlov learned that animals could be conditioned into responding on a physiological level to various stimuli, such as food, or even the sound of the assistant bringing the food down the hall.

Hence the theory of classical conditioning, which remains one of the most influential theories in psychology to this day.

2. Bobo Doll Experiment: Observational Learning

Dr. Albert Bandura conducted one of the most influential studies in psychology in the 1960s at Stanford University.

His intention was to demonstrate that cognitive processes play a fundamental role in learning. At the time, behaviorism was the predominant theoretical perspective, and it rejected any appeal to constructs that could not be directly observed.

So, Bandura made two versions of a video. In version #1, an adult behaved aggressively with a Bobo doll by throwing it around the room and striking it with a wooden mallet. In version #2, the adult played gently with the doll by carrying it around to different parts of the room and pushing it gently.

After watching one of the two versions, each child was taken individually to a room that had a Bobo doll. The children’s behavior was observed, and the results indicated that children who watched version #1 of the video were far more aggressive than those who watched version #2.

Not only did Bandura’s Bobo doll study form the basis of his social learning theory, it also helped start the long-lasting debate about the harmful effects of television on children.


3. The Asch Study: Conformity

Dr. Solomon Asch was interested in conformity and the power of group pressure. His study was quite simple. Groups of students were shown a reference line alongside three comparison lines of varying lengths and asked which comparison line matched the reference line.

However, in each group only one person was an actual participant. All of the others in the group were working with Asch and had been instructed to give the same, clearly wrong answer on certain trials.

On these trials, the real participant frequently gave the same wrong answer as the rest of the group. About a third of responses on these critical trials conformed to the group, and roughly three quarters of participants conformed at least once.

The study is one of the most famous in psychology because it demonstrated the power of social pressure so clearly.

4. Car Crash Experiment: Leading Questions

In 1974, Dr. Elizabeth Loftus and her undergraduate student John Palmer designed a study to examine how fallible human memory is under certain conditions.

They showed groups of research participants videos that depicted accidents between two cars. Later, the participants were asked to estimate how fast the cars had been going.

Here’s the interesting part. All participants were asked the same question with the exception of a single word: “How fast were the two cars going when they ______ into each other?” The word in the blank varied in its implied severity.

Participants’ estimates were strongly influenced by the word in the blank. When the word “smashed” was used, participants estimated the cars were going much faster than when the word “contacted” was used.

This line of research has had a huge impact on law enforcement interrogation practices, line-up procedures, and the credibility of eyewitness testimony.

5. The 6 Universal Emotions

The research by Dr. Paul Ekman has been influential in the study of emotions. His early research suggested that all human beings, regardless of culture, express and recognize the same 6 basic emotions: happiness, sadness, disgust, fear, surprise, and anger.

In the late 1960s, Ekman traveled to Papua New Guinea. He approached a tribe of people who were extremely isolated from modern culture. With the help of a guide, he described different situations to individual members and photographed their facial expressions.

The situations included a good friend arriving, their child having just died, being about to get into a fight, and having just stepped on a dead pig.

The facial expressions of this highly isolated tribe were nearly identical to those displayed by people in his studies in California.

6. The Little Albert Study: Development of Phobias

Dr. John Watson and Dr. Rosalie Rayner sought to demonstrate how irrational fears can develop.

Their study involved showing a white rat to an infant. Initially, the child had no fear of the rat. However, the researchers then began making a loud noise, by striking a steel bar with a hammer, each time they showed the child the rat.

Eventually, the child started to cry and feared the white rat. The child also developed a fear of other white, furry objects such as white rabbits and a Santa Claus beard.

This study is famous because it demonstrated one way in which phobias are developed in humans, and also because it is now considered highly unethical for its mistreatment of a child, lack of study debriefing, and intent to instil fear.

7. A Class Divided: Discrimination

Perhaps one of the most famous psychological experiments of all time was not conducted by a psychologist. In 1968, shortly after the assassination of Dr. Martin Luther King, Jr., third grade teacher Jane Elliott conducted a now-famous classroom experiment on discrimination.

She divided her class into two groups: brown-eyed and blue-eyed students. On the first day of the experiment, she announced the blue-eyed group as superior. They received extra privileges and were told not to intermingle with the brown-eyed students.

They instantly became happier, more self-confident, and started performing better academically.

The next day, the roles were reversed. The brown-eyed students were announced as superior and given extra privileges. Their behavior changed almost immediately and exhibited the same patterns as the other group had the day before.

This study was a remarkable demonstration of the harmful effects of discrimination.

8. The Milgram Study: Obedience to Authority

Dr. Stanley Milgram conducted one of the most influential experiments on authority and obedience in 1961 at Yale University.

Participants were told they were helping study the effects of punishment on learning. Their job was to administer an electric shock to another participant each time that person made an error on a test. The other participant was actually an actor in another room who only pretended to be shocked.

Each time a mistake was made, the level of shock was supposed to increase, eventually reaching switches labeled as high as 450 volts. When the real participants expressed reluctance to administer the next level of shock, the experimenter, who served as the authority figure in the room, pressured them to deliver it.

The results of this study were truly astounding. A surprisingly high percentage of participants (65% in Milgram’s original version) continued to deliver the shocks to the highest level possible despite the very strong objections of the “other participant.”

This study demonstrated the power of authority figures.

9. The Marshmallow Test: Delay of Gratification

The Marshmallow Test was designed by Dr. Walter Mischel to examine delay of gratification and, in later follow-ups, its relationship with academic success.

Children aged 4-6 years were seated at a table with one marshmallow placed in front of them. The experimenter explained that if they did not eat the marshmallow before the experimenter returned, they would receive a second one. They could then eat both.

The children who were able to delay gratification the longest were rated as significantly more competent later in life and earned higher SAT scores than children who could not withstand the temptation.

The study has since been conceptually replicated by other researchers, whose work has revealed additional factors involved in delay of gratification and academic achievement.

10. Stanford Prison Study: Deindividuation

Dr. Philip Zimbardo conducted one of the most famous psychological studies of all time in 1971. The purpose of the study was to investigate how the power structure in some situations can lead people to behave in ways highly uncharacteristic of their usual behavior.

College students were recruited to participate in the study and randomly assigned to play the role of either prison guard or prisoner. The “prisoners” were actually “arrested” by real police officers, blindfolded, and taken to the basement of the university’s psychology building, which had been converted to look like a prison.

Although the study was supposed to last two weeks, it had to be halted after only six days because of the abusive actions of the guards.

The study demonstrated that people will behave in ways they never thought possible when placed in certain roles and power structures. Although the Stanford Prison Study is so well-known for what it revealed about human nature, it is also famous because of the numerous violations of ethical principles.

The studies above are varied and focus on many different aspects of human behavior. However, each example of experimental research listed above has had a lasting impact on society. Some have had tremendous sway in how very practical matters are conducted, such as criminal investigations and legal proceedings.

Psychology is a field of study that is often not fully understood by the general public. When most people hear the term “psychology,” they think of a therapist who listens carefully to the revealing statements of a patient. The therapist then tries to help their patient learn to cope with many of life’s challenges. Nothing wrong with that.

In reality, however, many psychologists are researchers. They spend much of their time designing and conducting experiments to enhance our understanding of the human condition.

Asch, S. E. (1956). Studies of independence and conformity: I. A minority of one against a unanimous majority. Psychological Monographs: General and Applied, 70(9), 1-70. https://doi.org/10.1037/h0093718

Bandura, A. (1965). Influence of models’ reinforcement contingencies on the acquisition of imitative responses. Journal of Personality and Social Psychology, 1(6), 589-595. https://doi.org/10.1037/h0022070

Beck, H. P., Levinson, S., & Irons, G. (2009). Finding little Albert: A journey to John B. Watson’s infant laboratory. American Psychologist, 64(7), 605-614.

Ekman, P., & Friesen, W. V. (1971). Constants across cultures in the face and emotion. Journal of Personality and Social Psychology, 17(2), 124-129.

Loftus, E. F., & Palmer, J. C. (1974). Reconstruction of automobile destruction: An example of the interaction between language and memory. Journal of Verbal Learning and Verbal Behavior, 13(5), 585-589.

Milgram, S. (1965). Some conditions of obedience and disobedience to authority. Human Relations, 18(1), 57-76.

Mischel, W., & Ebbesen, E. B. (1970). Attention in delay of gratification. Journal of Personality and Social Psychology, 16(2), 329-337.

Pavlov, I. P. (1927). Conditioned reflexes. London: Oxford University Press.

Watson, J. B., & Rayner, R. (1920). Conditioned emotional reactions. Journal of Experimental Psychology, 3(1), 1-14.

Zimbardo, P., Haney, C., Banks, W. C., & Jaffe, D. (1971). The Stanford Prison Experiment: A simulation study of the psychology of imprisonment. Stanford University, Stanford Digital Repository, Stanford.


Beauty sleep: experimental study on the perceived health and attractiveness of sleep deprived people


- John Axelsson, researcher (1, 2)

- Tina Sundelin, research assistant and MSc student (2)

- Michael Ingre, statistician and PhD student (3)

- Eus J W Van Someren, researcher (4)

- Andreas Olsson, researcher (2)

- Mats Lekander, researcher (1, 3)

- 1 Osher Center for Integrative Medicine, Department of Clinical Neuroscience, Karolinska Institutet, 17177 Stockholm, Sweden

- 2 Division for Psychology, Department of Clinical Neuroscience, Karolinska Institutet

- 3 Stress Research Institute, Stockholm University, Stockholm

- 4 Netherlands Institute for Neuroscience, an Institute of the Royal Netherlands Academy of Arts and Sciences, and VU Medical Center, Amsterdam, Netherlands

- Correspondence to: J Axelsson john.axelsson{at}ki.se

- Accepted 22 October 2010

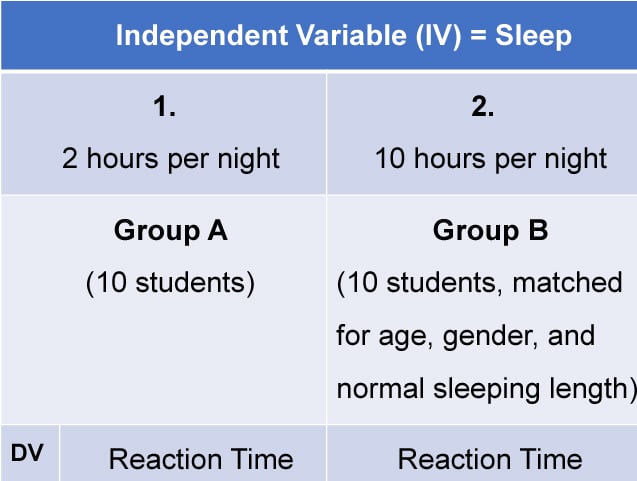

Objective To investigate whether sleep deprived people are perceived as less healthy, less attractive, and more tired than after a normal night’s sleep.

Design Experimental study.

Setting Sleep laboratory in Stockholm, Sweden.

Participants 23 healthy, sleep deprived adults (age 18-31) who were photographed and 65 untrained observers (age 18-61) who rated the photographs.

Intervention Participants were photographed after a normal night’s sleep (eight hours) and after sleep deprivation (31 hours of wakefulness after a night of reduced sleep). The photographs were presented in a randomised order and rated by untrained observers.

Main outcome measure Difference in observer ratings of perceived health, attractiveness, and tiredness between sleep deprived and well rested participants using a visual analogue scale (100 mm).

Results Sleep deprived people were rated as less healthy (visual analogue scale scores, mean 63 (SE 2) v 68 (SE 2), P<0.001), more tired (53 (SE 3) v 44 (SE 3), P<0.001), and less attractive (38 (SE 2) v 40 (SE 2), P<0.001) than after a normal night’s sleep. The decrease in rated health was associated with ratings of increased tiredness and decreased attractiveness.

Conclusion Our findings show that sleep deprived people appear less healthy, less attractive, and more tired compared with when they are well rested. This suggests that humans are sensitive to sleep related facial cues, with potential implications for social and clinical judgments and behaviour. Further studies are warranted to understand how these effects may influence clinical decision making; such knowledge would have direct implications in a medical context.

Introduction

“The recognition [of the case] depends in great measure on the accurate and rapid appreciation of small points in which the diseased differs from the healthy state.” (Joseph Bell, 1837-1911)

Good clinical judgment is an important skill in medical practice. This is well illustrated in the quote by Joseph Bell, 1 who demonstrated impressive observational and deductive skills. Bell was one of Sir Arthur Conan Doyle’s teachers and served as a model for the fictitious detective Sherlock Holmes. 2 Generally, human judgment involves complex processes, whereby ingrained, often less consciously deliberated responses from perceptual cues are mixed with semantic calculations to affect decision making. 3 Thus all social interactions, including diagnosis in clinical practice, are influenced by reflexive as well as reflective processes in human cognition and communication.

Sleep is an essential homeostatic process with well established effects on an individual’s physiological, cognitive, and behavioural functionality 4 5 6 7 and long term health, 8 but with only anecdotal support of a role in social perception, such as that underlying judgments of attractiveness and health. As illustrated by the common expression “beauty sleep,” an individual’s sleep history may play an integral part in the perception and judgments of his or her attractiveness and health. To date, the concept of beauty sleep has lacked scientific support, but the biological importance of sleep may have favoured a sensitivity to perceive sleep related cues in others. It seems warranted to explore such sensitivity, as sleep disorders and disturbed sleep are increasingly common in today’s 24 hour society and often coexist with some of the most common health problems, such as hypertension 9 10 and inflammatory conditions. 11

To describe the relation between sleep deprivation and perceived health and attractiveness we asked untrained observers to rate the faces of people who had been photographed after a normal night’s sleep and after a night of sleep deprivation. We chose facial photographs as the human face is the primary source of information in social communication. 12 A perceiver’s response to facial cues, signalling the bearer’s emotional state, intentions, and potential mate value, serves to guide actions in social contexts and may ultimately promote survival. 13 14 15 We hypothesised that untrained observers would perceive sleep deprived people as more tired, less healthy, and less attractive compared with after a normal night’s sleep.

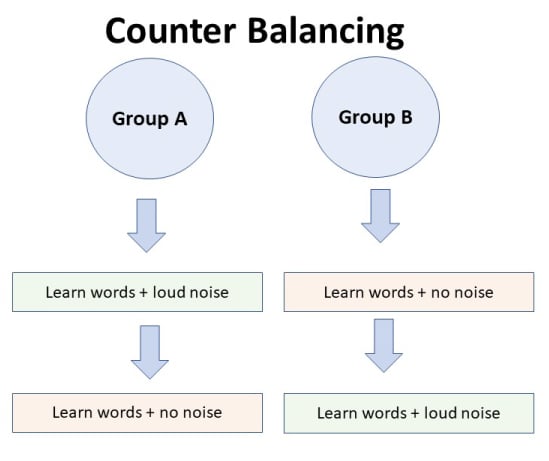

Using an experimental design we photographed the faces of 23 adults (mean age 23, range 18-31 years, 11 women) between 14.00 and 15.00 under two conditions in a balanced design: after a normal night’s sleep (at least eight hours of sleep between 23.00 and 07.00, followed by seven hours of wakefulness) and after sleep deprivation (sleep 02.00-07.00, followed by 31 hours of wakefulness). We advertised for participants at four universities in the Stockholm area. Twenty of 44 potentially eligible people were excluded. Reasons for exclusion were reported sleep disturbances, abnormal sleep requirements (for example, sleep need outside the 7-9 hour range), health problems, or unavailability on study days (the main reason). We also excluded smokers and those who had consumed alcohol within two days of the protocol. Overall, we enrolled 12 women and 12 men; one woman failed to participate in both conditions, leaving 23 participants.
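The wakefulness and enrolment figures in this paragraph can be checked with simple arithmetic. The sketch below is a minimal Python example. The calendar dates are hypothetical placeholders, because the paragraph gives only clock times. The 07.00 wake time and the 14.00 photograph time come from the schedule described above.

```python
from datetime import datetime

# Hypothetical calendar dates; only the clock times come from the protocol.
WAKE_DEPRIVED = datetime(2007, 6, 4, 7, 0)    # woke at 07.00 after sleeping 02.00-07.00
PHOTO_DEPRIVED = datetime(2007, 6, 5, 14, 0)  # photographed at 14.00 the next day
WAKE_NORMAL = datetime(2007, 6, 11, 7, 0)     # woke at 07.00 after sleeping 23.00-07.00
PHOTO_NORMAL = datetime(2007, 6, 11, 14, 0)   # photographed at 14.00 the same day


def hours_awake(woke: datetime, photographed: datetime) -> float:
    """Hours of continuous wakefulness between waking and the photograph."""
    return (photographed - woke).total_seconds() / 3600


def enrolled(eligible: int, excluded: int, dropouts: int) -> int:
    """Participants remaining after exclusions and dropouts."""
    return eligible - excluded - dropouts


print(hours_awake(WAKE_NORMAL, PHOTO_NORMAL))      # 7.0
print(hours_awake(WAKE_DEPRIVED, PHOTO_DEPRIVED))  # 31.0
print(enrolled(44, 20, 1))                         # 23
```

Both figures agree with the protocol: 7 hours awake in the normal condition, 31 hours in the deprived condition, and 44 − 20 − 1 = 23 participants analysed.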

The participants slept in their own homes. Sleep times were confirmed with sleep diaries and text messages. The sleep diaries (Karolinska sleep diary) included information on sleep latency, quality, duration, and sleepiness. Participants had been instructed not to nap. During the normal sleep condition the participants’ mean duration of sleep, estimated from sleep diaries, was 8.45 (SE 0.20) hours. The sleep deprivation condition started with a restriction of sleep to five hours in bed; on this night the participants sent a text message to the research assistant by mobile phone (SMS) at bedtime and when they got up. The mean duration of sleep during this night, estimated from sleep diaries and text messages, was 5.06 (SE 0.04) hours. For the following night of total sleep deprivation, the participants were monitored in the sleep laboratory at all times: they came to the laboratory at 22.00 (after 15 hours of wakefulness) and stayed awake for a further 16 hours. We therefore did not observe the participants during the first 15 hours of wakefulness, when they had had a slightly restricted sleep, but had good control over the last 16 hours of wakefulness, when sleepiness increased in magnitude. For the normal sleep condition, participants came to the laboratory at 12.00 (after five hours of wakefulness).

Participants were kept indoors for two hours before being photographed to avoid the effects of exposure to sunlight and the weather. A series of five or six photographs (resolution 3872×2592 pixels) was taken in a well lit room, with a constant white balance (×900l; colour temperature 4200 K, Nikon D80; Nikon, Tokyo). The white balance was set differently on the two days of the study, which affected seven photographs (four taken during sleep deprivation and three after a normal night’s sleep); removing these participants from the analyses did not affect the results. The distance from camera to head was fixed. The focal length was kept within a 14 mm range (between 44 and 58 mm) and, within that range, was adapted to each participant’s head size so that each face covered a fixed area of the photograph.

For the photo shoot, participants wore no makeup, had their hair loose (combed backwards if long), underwent similar cleaning or shaving procedures for both conditions, and were instructed to “sit with a straight back and look straight into the camera with a neutral, relaxed facial expression.” Although the photographer was not blinded to the sleep conditions, she followed a highly standardised procedure during each photo shoot, including minimal interaction with the participants. A blinded rater chose the most typical photograph from each series. This process resulted in 46 photographs: two (one from each sleep condition) for each of the 23 participants. This part of the study took place between June and September 2007.

In October 2007 the photographs were presented at a fixed interval of six seconds in a randomised order to 65 observers (mainly students at the Karolinska Institute, mean age 30 (range 18-61) years, 40 women), who were unaware of the conditions of the study. They rated the faces for attractiveness (very unattractive to very attractive), health (very sick to very healthy), and tiredness (not at all tired to very tired) on a 100 mm visual analogue scale. After every 23 photographs a brief intermission was allowed, including a working memory task lasting 23 seconds to prevent the faces being memorised. To ensure that the observers were not primed to tiredness when rating health and attractiveness they rated the photographs for attractiveness and health in the first two sessions and tiredness in the last. To avoid the influence of possible order effects we presented the photographs in a balanced order between conditions for each session.
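The paragraph says the photographs were shown in a randomised order that was balanced between conditions in each session, with an intermission after every 23 photographs. It does not spell out the balancing rule. The sketch below is one hypothetical way to build such an order, and the rule is an assumption, not the authors' procedure. Under this rule, each block of 23 contains one photograph of every participant, so the two photographs of the same face are always separated by the intermission. The conditions are also split as evenly as 23 allows.

```python
import random

N_PARTICIPANTS = 23  # each contributes one "normal" and one "deprived" photograph


def session_order(seed=None):
    """Return two blocks of (participant, condition) pairs for one rating session.

    Hypothetical balancing rule (an assumption for illustration): every
    participant appears exactly once per block, and the sleep deprived
    photographs are split 11/12 between the two blocks.
    """
    rng = random.Random(seed)
    ids = list(range(N_PARTICIPANTS))
    rng.shuffle(ids)
    # Participants whose sleep deprived photograph is shown in the first block.
    deprived_first = set(ids[: N_PARTICIPANTS // 2])
    block1 = [(p, "deprived" if p in deprived_first else "normal")
              for p in range(N_PARTICIPANTS)]
    block2 = [(p, "normal" if p in deprived_first else "deprived")
              for p in range(N_PARTICIPANTS)]
    # Randomise presentation order within each block.
    rng.shuffle(block1)
    rng.shuffle(block2)
    return block1, block2


first, second = session_order(seed=2007)
print(sum(c == "deprived" for _, c in first),
      sum(c == "deprived" for _, c in second))  # 11 12
```

With an odd number of participants, perfect balance within each block is impossible. A fuller scheme might therefore alternate which block gets the extra deprived photograph across the three sessions.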

Statistical analyses

Data were analysed using multilevel mixed effects linear regression, with two crossed independent random effects accounting for random variation between observers and between participants, using the xtmixed procedure in Stata 9.2. We present the effect of condition both as the absolute difference in millimetres on the visual analogue scale and as a percentage change relative to the normal sleep condition, which served as the reference. No data were missing in the analyses.
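The model treats observers and photographed participants as two crossed random effects. The sketch below does not refit the authors' Stata model. Instead, it simulates ratings under an assumed model of that form. The variance values are illustrative, the baseline and effect are borrowed from the reported health figures, and scores are not clipped to the 0-100 mm scale.

The point it illustrates is that every observer rated both photographs of every participant. As a result, observer and participant effects cancel out of the condition contrast, and in this complete, balanced design the fixed-effect point estimate equals the plain difference in condition means. The random effects still matter for the standard errors.

```python
import random
import statistics


def simulate_ratings(n_observers=65, n_participants=23, effect=-4.2,
                     baseline=68.0, sd_observer=8.0, sd_participant=6.0,
                     sd_noise=15.0, seed=1):
    """Simulate 100 mm VAS ratings for a fully crossed observer x photo design.

    Assumed generating model (illustrative values, not the study's estimates):
        rating = baseline + observer_effect + participant_effect
                 + effect * sleep_deprived + residual
    """
    rng = random.Random(seed)
    observer_fx = [rng.gauss(0, sd_observer) for _ in range(n_observers)]
    participant_fx = [rng.gauss(0, sd_participant) for _ in range(n_participants)]
    rows = []
    for o in range(n_observers):
        for p in range(n_participants):
            for deprived in (0, 1):
                rating = (baseline + observer_fx[o] + participant_fx[p]
                          + effect * deprived + rng.gauss(0, sd_noise))
                rows.append((o, p, deprived, rating))
    return rows


def condition_effect(rows):
    """Mean rating after sleep deprivation minus mean after normal sleep."""
    deprived = [r for _, _, d, r in rows if d == 1]
    normal = [r for _, _, d, r in rows if d == 0]
    return statistics.fmean(deprived) - statistics.fmean(normal)


rows = simulate_ratings()
print(len(rows))  # 2990: 65 observers x 46 photographs
print(round(condition_effect(rows), 2))  # near -4.2; differs only by residual noise
# With the residual switched off, observer and participant effects cancel exactly.
print(round(condition_effect(simulate_ratings(sd_noise=0.0)), 6))  # -4.2
```

The cancellation depends on the design being complete, which matches the authors' report that no data were missing. With missing or unbalanced ratings, a crossed mixed model would no longer reduce to a simple difference in means.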

Sixty five observers rated each of the 46 photographs for attractiveness, health, and tiredness: 138 ratings by each observer and 2990 ratings for each of the three factors rated. When sleep deprived, people were rated as less healthy (visual analogue scale scores, mean 63 (SE 2) v 68 (SE 2)), more tired (53 (SE 3) v 44 (SE 3)), and less attractive (38 (SE 2) v 40 (SE 2); P<0.001 for all) than after a normal night’s sleep (table 1). Compared with the normal sleep condition, perceptions of health and attractiveness in the sleep deprived condition decreased on average by 6% and 4%, respectively, and tiredness increased by 19%.
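The health and attractiveness percentages can be roughly reproduced. One reading consistent with the reported values, though the authors do not spell it out, is that each percentage is the model's condition coefficient divided by the well rested mean. The coefficients of −4.2 mm (health) and −1.6 mm (attractiveness), reported in the mediation analysis, give about −6% and −4%. The rounded means (63 v 68, 38 v 40) would instead give about −7% and −5%. The tiredness coefficient is not given in the text, so it is not reproduced here.

```python
def percent_change(effect_mm: float, reference_mean_mm: float) -> float:
    """Change relative to the well rested (reference) condition, in percent."""
    return 100.0 * effect_mm / reference_mean_mm


# Model coefficients (mediation paragraph) versus rounded condition means.
print(round(percent_change(-4.2, 68)))     # health, coefficient: -6
print(round(percent_change(-1.6, 40)))     # attractiveness, coefficient: -4
print(round(percent_change(63 - 68, 68)))  # health, rounded means: -7
print(round(percent_change(38 - 40, 40)))  # attractiveness, rounded means: -5
```

The gap between the two versions comes from rounding the means to whole millimetres before subtracting.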

Table 1 Multilevel mixed effects regression on effect of how sleep deprived people are perceived with respect to attractiveness, health, and tiredness

A 10 mm increase in tiredness was associated with a −3.0 mm change in health, a 10 mm increase in health increased attractiveness by 2.4 mm, and a 10 mm increase in tiredness reduced attractiveness by 1.2 mm (table 2). These findings are also presented as correlations (fig 1): perceived attractiveness was positively associated with perceived health (r=0.42) and negatively associated with perceived tiredness (r=−0.28). In addition, the average decrease (for each face) in attractiveness as a result of sleep deprivation was associated with changes in tiredness (−0.53, n=23, P=0.03) and in health (0.50, n=23, P=0.01). Moreover, a strong negative association was found between perceived tiredness and perceived health (r=−0.54, fig 1). Figure 2 shows an example of observer rated faces.
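The regression results above are expressed per 10 mm of the predictor, while fig 1 reports Pearson correlations. The minimal sketch below computes both statistics in plain Python on made-up per-photograph means. The numbers are hypothetical, not study data. The sketch shows that the slope carries the units of the scale, r is unit-free, and for ordinary least squares the two are linked by b = r × SD(y) / SD(x). The authors' coefficients come from a multilevel model, so this identity is offered only as intuition, not as a way to recover their estimates.

```python
import statistics


def ols_slope(x, y):
    """Least-squares slope of y on x (mm of y per mm of x)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def pearson_r(x, y):
    """Pearson correlation coefficient."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical per-photograph mean ratings (mm), not study data.
tiredness = [35, 42, 48, 55, 61]
health = [71, 70, 65, 66, 62]

b = ols_slope(tiredness, health)
r = pearson_r(tiredness, health)
print(round(10 * b, 2), round(r, 2))  # slope per 10 mm of tiredness, and r
# The slope equals r rescaled by the ratio of standard deviations.
print(abs(b - r * statistics.stdev(health) / statistics.stdev(tiredness)) < 1e-9)  # True
```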

Table 2 Associations between health, tiredness, and attractiveness

Fig 1 Relations between health, tiredness, and attractiveness of 46 photographs (two each of 23 participants) rated by 65 observers on 100 mm visual analogue scales, with variation between observers removed using empirical Bayes’ estimates


Fig 2 Participant after a normal night’s sleep (left) and after sleep deprivation (right). Faces were presented in a counterbalanced order

To evaluate whether tiredness mediated the effects of sleep loss on attractiveness and health, tiredness was added to the models presented in table 1, following recommendations. 16 The effect of sleep loss was significantly mediated by tiredness for both health (P<0.001) and attractiveness (P<0.001). When tiredness was added to the model (table 1), with an estimated coefficient of −2.9 (SE 0.1; P<0.001), the independent effect of sleep loss on health decreased from −4.2 to −1.8 (SE 0.5; P<0.001). The effect of sleep loss on attractiveness decreased from −1.6 (table 1) to −0.62 (SE 0.4; P=0.133), with tiredness estimated at −1.1 (SE 0.1; P<0.001). Applying the same approach to the model of attractiveness and health (table 2), the association decreased from 2.4 to 2.1 (SE 0.1; P<0.001), with tiredness estimated at −0.56 (SE 0.1; P<0.001).
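One way to summarise the size of these mediation effects is the share of the total effect that disappears once tiredness is added: (total − direct) / total, sometimes called the difference method. The authors do not report this proportion. The sketch below simply applies the formula to the coefficients in this paragraph, so treat the result as descriptive arithmetic rather than a formal mediation test. The ratio becomes unstable when the total effect is close to zero.

```python
def proportion_mediated(total: float, direct: float) -> float:
    """Share of the total effect accounted for by the mediator (difference method)."""
    return (total - direct) / total


# Coefficients (mm) before and after tiredness is added to the model.
effects = {
    "sleep loss -> health": (-4.2, -1.8),
    "sleep loss -> attractiveness": (-1.6, -0.62),
    "health -> attractiveness": (2.4, 2.1),
}
for label, (total, direct) in effects.items():
    print(f"{label}: {proportion_mediated(total, direct):.0%} via tiredness")
```

By this measure, more than half of the sleep loss effect on health (about 57%) and on attractiveness (about 61%) runs through looking tired. Only a small share of the association between health and attractiveness does.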

Sleep deprived people are perceived as less attractive, less healthy, and more tired compared with when they are well rested. Apparent tiredness was strongly related to looking less healthy and less attractive, which was also supported by the mediation analyses, indicating that much of the effect of sleep loss on appearing healthy and attractive was mediated by looking tired. The fact that untrained observers detected the effects of sleep loss in others not only provides evidence for a perceptual ability not previously subjected to experimental control, but also supports the notion that sleep history gives rise to socially relevant signals that provide information about the bearer. The adaptiveness of an ability to detect sleep related facial cues resonates well with other research, showing that small deviations from the average sleep duration in the long term are associated with an increased risk of health problems and with a decreased longevity. 8 17 Indeed, even a few hours of sleep deprivation inflict an array of physiological changes, including neural, endocrinological, immunological, and cellular functioning, that if sustained are relevant for long term health. 7 18 19 20 Here, we show that such physiological changes are paralleled by detectable facial changes.

These results relate to photographs taken in an artificial setting and presented to the observers for only six seconds. It is likely that the effects reported here would be larger in real life person to person situations, when overt behaviour and interactions add further information. Blink interval and blink duration are known to be indicators of sleepiness, 21 and trained observers are able to evaluate reliably the drowsiness of drivers by watching their videotaped faces. 22 In addition, a few of the people were perceived as healthier, less tired, and more attractive during the sleep deprived condition. It remains to be evaluated in follow-up research whether this is due to random noise in judgments, or associated with specific characteristics of observers or the sleep deprived people they judge. Nevertheless, we believe that the present findings can be generalised to a wide variety of settings, but further studies will have to investigate the impact on clinical studies and other social situations.

Importantly, our findings suggest a prominent role of sleep history in several domains of interpersonal perception and judgment, in which sleep history has previously not been considered of importance, such as in clinical judgment. In addition, because attractiveness motivates sexual behaviour, collaboration, and superior treatment, 13 sleep loss may have consequences in other social contexts. For example, it has been proposed that facial cues perceived as attractive are signals of good health and that this recognition has been selected evolutionarily to guide choice of mate and successful transmission of genes. 13 The fact that good sleep supports a healthy look and poor sleep the reverse may be of particular relevance in the medical setting, of which health estimates are an essential part. It is possible that people with sleep disturbances, clinical or otherwise, would be judged as more unhealthy, whereas those who have had an unusually good night’s sleep may be perceived as rather healthy. Further studies are needed to investigate the effects of acute sleep reductions less drastic than the sleep deprivation used in the present investigation, as well as long term clinical effects.

Conclusions

People are capable of detecting sleep loss related facial cues, and these cues modify judgments of another’s health and attractiveness. These conclusions agree well with existing models describing a link between sleep and good health, 18 23 as well as a link between attractiveness and health. 13 Future studies should focus on the relevance of these facial cues in clinical settings. These could investigate whether clinicians are better than the average population at detecting sleep or health related facial cues, and whether patients with a clinical diagnosis exhibit more tiredness and are less healthy looking than healthy people. Perhaps the more successful doctors are those who pick up on these details and act accordingly.

Taken together, our results provide important insights into judgments about health and attractiveness that are reminiscent of the anecdotal wisdom harboured in Bell’s words, and in the colloquial notion of “beauty sleep.”

What is already known on this topic

Short or disturbed sleep and fatigue constitute major risk factors for health and safety

Complaints of short or disturbed sleep are common among patients seeking healthcare

The human face is the main source of information for social signalling

What this study adds

The facial cues of sleep deprived people are sufficient for others to judge them as more tired, less healthy, and less attractive, lending the first scientific support to the concept of “beauty sleep”

By affecting doctors’ general perception of health, the sleep history of a patient may affect clinical decisions and diagnostic precision

Cite this as: BMJ 2010;341:c6614

We thank B Karshikoff for support with data acquisition and M Ingvar for comments on an earlier draft of the manuscript, both without compensation and working at the Department for Clinical Neuroscience, Karolinska Institutet, Sweden.

Contributors: JA designed the data collection, supervised and monitored data collection, wrote the statistical analysis plan, carried out the statistical analyses, obtained funding, drafted and revised the manuscript, and is guarantor. TS designed and carried out the data collection, cleaned the data, drafted and revised the manuscript, and had final approval of the manuscript. JA and TS contributed equally to the work. MI wrote the statistical analysis plan, carried out the statistical analyses, drafted the manuscript, and critically revised the manuscript. EJWVS provided statistical advice, advised on data handling, and critically revised the manuscript. AO provided advice on the methods and critically revised the manuscript. ML provided administrative support, drafted the manuscript, and critically revised the manuscript. All authors approved the final version of the manuscript.

Funding: This study was funded by the Swedish Society for Medical Research, Rut and Arvid Wolff’s Memory Fund, and the Osher Center for Integrative Medicine.

Competing interests: All authors have completed the Unified Competing Interest form at www.icmje.org/coi_disclosure.pdf (available on request from the corresponding author) and declare: no support from any company for the submitted work; no financial relationships with any companies that might have an interest in the submitted work in the previous 3 years; no other relationships or activities that could appear to have influenced the submitted work.

Ethical approval: This study was approved by the Karolinska Institutet’s ethical committee. Participants were compensated for their participation.

Participant consent: Participant’s consent obtained.

Data sharing: Statistical code and dataset of ratings are available from the corresponding author at john.axelsson{at}ki.se.

This is an open-access article distributed under the terms of the Creative Commons Attribution Non-commercial License, which permits use, distribution, and reproduction in any medium, provided the original work is properly cited, the use is non commercial and is otherwise in compliance with the license. See: http://creativecommons.org/licenses/by-nc/2.0/ and http://creativecommons.org/licenses/by-nc/2.0/legalcode.

1. Deten A, Volz HC, Clamors S, Leiblein S, Briest W, Marx G, et al. Hematopoietic stem cells do not repair the infarcted mouse heart. Cardiovasc Res 2005;65:52-63.

2. Doyle AC. The case-book of Sherlock Holmes: selected stories. Wordsworth, 1993.

3. Lieberman MD, Gaunt R, Gilbert DT, Trope Y. Reflection and reflexion: a social cognitive neuroscience approach to attributional inference. Adv Exp Soc Psychol 2002;34:199-249.

4. Drummond SPA, Brown GG, Gillin JC, Stricker JL, Wong EC, Buxton RB. Altered brain response to verbal learning following sleep deprivation. Nature 2000;403:655-7.

5. Harrison Y, Horne JA. The impact of sleep deprivation on decision making: a review. J Exp Psychol Appl 2000;6:236-49.

6. Huber R, Ghilardi MF, Massimini M, Tononi G. Local sleep and learning. Nature 2004;430:78-81.

7. Spiegel K, Leproult R, Van Cauter E. Impact of sleep debt on metabolic and endocrine function. Lancet 1999;354:1435-9.

8. Kripke DF, Garfinkel L, Wingard DL, Klauber MR, Marler MR. Mortality associated with sleep duration and insomnia. Arch Gen Psychiatry 2002;59:131-6.

9. Olson LG, Ambrogetti A. Waking up to sleep disorders. Br J Hosp Med (Lond) 2006;67:118, 20.

10. Rajaratnam SM, Arendt J. Health in a 24-h society. Lancet 2001;358:999-1005.

11. Ranjbaran Z, Keefer L, Stepanski E, Farhadi A, Keshavarzian A. The relevance of sleep abnormalities to chronic inflammatory conditions. Inflamm Res 2007;56:51-7.

12. Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends Cogn Sci 2000;4:223-33.

13. Rhodes G. The evolutionary psychology of facial beauty. Annu Rev Psychol 2006;57:199-226.

14. Todorov A, Mandisodza AN, Goren A, Hall CC. Inferences of competence from faces predict election outcomes. Science 2005;308:1623-6.

15. Willis J, Todorov A. First impressions: making up your mind after a 100-ms exposure to a face. Psychol Sci 2006;17:592-8.

16. Krull JL, MacKinnon DP. Multilevel modeling of individual and group level mediated effects. Multivariate Behav Res 2001;36:249-77.

17. Ayas NT, White DP, Manson JE, Stampfer MJ, Speizer FE, Malhotra A, et al. A prospective study of sleep duration and coronary heart disease in women. Arch Intern Med 2003;163:205-9.

18. Bryant PA, Trinder J, Curtis N. Sick and tired: does sleep have a vital role in the immune system. Nat Rev Immunol 2004;4:457-67.

19. Cirelli C. Cellular consequences of sleep deprivation in the brain. Sleep Med Rev 2006;10:307-21.

20. Irwin MR, Wang M, Campomayor CO, Collado-Hidalgo A, Cole S. Sleep deprivation and activation of morning levels of cellular and genomic markers of inflammation. Arch Intern Med 2006;166:1756-62.

21. Schleicher R, Galley N, Briest S, Galley L. Blinks and saccades as indicators of fatigue in sleepiness warnings: looking tired? Ergonomics 2008;51:982-1010.

22. Wierwille WW, Ellsworth LA. Evaluation of driver drowsiness by trained raters. Accid Anal Prev 1994;26:571-81.

23. Horne J. Why we sleep: the functions of sleep in humans and other mammals. Oxford University Press, 1988.

Purdue Online Writing Lab Purdue OWL® College of Liberal Arts

Writing the Experimental Report: Overview, Introductions, and Literature Reviews


This page is brought to you by the OWL at Purdue University. When printing this page, you must include the entire legal notice.

Copyright ©1995-2018 by The Writing Lab & The OWL at Purdue and Purdue University. All rights reserved. This material may not be published, reproduced, broadcast, rewritten, or redistributed without permission. Use of this site constitutes acceptance of our terms and conditions of fair use.

Experimental reports (also known as "lab reports") are reports of empirical research conducted by their authors. You should think of an experimental report as a "story" of your research in which you lead your readers through your experiment. As you are telling this story, you are crafting an argument about both the validity and reliability of your research, what your results mean, and how they fit into previous work.

These next two sections provide an overview of the experimental report in APA format. Always check with your instructor, advisor, or journal editor for specific formatting guidelines.

General-specific-general format

Experimental reports follow a general to specific to general pattern. Your report will start off broadly in your introduction and discussion of the literature; the report narrows as it leads up to your specific hypotheses, methods, and results. Your discussion transitions from talking about your specific results to more general ramifications, future work, and trends relating to your research.

Experimental reports in APA format have a title page. Title page formatting is as follows:

- A running head and page number in the upper right corner (right aligned)

- A definition of the running head, in ALL CAPS, below the running head (left aligned)

- Vertically and horizontally centered paper title, followed by author and affiliation

Please see our sample APA title page.

Crafting your story

Before you begin to write, carefully consider your purpose in writing: what is it that you discovered, would like to share, or would like to argue? You can see report writing as crafting a story about your research and your findings. Consider the following.

- What is the story you would like to tell?

- What literature best speaks to that story?

- How do your results tell the story?

- How can you discuss the story in broad terms?

During each section of your paper, you should be focusing on your story. Consider how each sentence, each paragraph, and each section contributes to your overall purpose in writing. Here is a description of one student's process.

Briel is writing an experimental report on her results from her experimental psychology lab class. She is interested in looking at the role gender plays in persuading individuals to take financial risks. After her data analysis, she finds that men are more easily persuaded by women to take financial risks and that men are generally willing to take more financial risks than women.

When Briel begins to write, she focuses her introduction on financial risk taking and gender, paying particular attention to male behaviors. She then presents relevant literature on financial risk taking and gender that helps illuminate her own study and also demonstrates the need for her own work. Her introduction ends with a study overview that directly leads from the literature review. Because she has already broadly introduced her study through her introduction and literature review, her readers can anticipate where she is going when she gets to her study overview. Her methods and results continue that story. Finally, her discussion concludes that story, discussing her findings, implications of her work, and the need for more research in the area of gender and financial risk taking.

The abstract gives a concise summary of the contents of the report.

- Abstracts should be brief (about 100 words)

- Abstracts should be self-contained and provide a complete picture of what the study is about

- Abstracts should be organized just like your experimental report—introduction, literature review, methods, results and discussion

- Abstracts should be written last during your drafting stage

Introduction

The introduction in an experimental article should follow a general to specific pattern, where you first introduce the problem generally and then provide a short overview of your own study. The introduction includes three parts: opening statements, literature review, and study overview.

Opening statements: Define the problem broadly in plain English and then lead into the literature review (this is the "general" part of the introduction). Your opening statements should already be setting the stage for the story you are going to tell.

Literature review: Discusses literature (previous studies) relevant to your current study in a concise manner. Keep your story in mind as you organize your lit review and as you choose what literature to include. The following are tips for writing your literature review.

- You should discuss studies that are directly related to your problem at hand and that logically lead to your own hypotheses.

- You do not need to provide a complete historical overview nor provide literature that is peripheral to your own study.

- Studies should be presented based on themes or concepts relevant to your research, not in a chronological format.

- You should also consider what gap in the literature your own research fills. What hasn't been examined? What does your work do that others have not?

Study overview: The literature review should lead directly into the last section of the introduction—your study overview. Your short overview should provide your hypotheses and briefly describe your method. The study overview functions as a transition to your methods section.

You should always give good, descriptive names to your hypotheses that you use consistently throughout your study. When you number hypotheses, readers must go back to your introduction to find them, which makes your piece more difficult to read. Using descriptive names reminds readers what your hypotheses were and allows for better overall flow.

In our example above, Briel had three different hypotheses based on previous literature. Her first hypothesis, the "masculine risk-taking hypothesis," was that men would be more willing to take financial risks overall. She clearly named her hypothesis in the study overview, and then referred back to it in her results and discussion sections.

Thais and Sanford (2000) recommend the following organization for introductions.

- Provide an introduction to your topic

- Provide a very concise overview of the literature

- State your hypotheses and how they connect to the literature

- Provide an overview of the methods for investigation used in your research

Bem (2006) provides the following rules of thumb for writing introductions.

- Write in plain English

- Take the time and space to introduce readers to your problem step-by-step; do not plunge them into the middle of the problem without an introduction

- Use examples to illustrate difficult or unfamiliar theories or concepts. The more complicated the concept or theory, the more important it is to have clear examples

- Open with a discussion about people and their behavior, not about psychologists and their research

19+ Experimental Design Examples (Methods + Types)

Ever wondered how scientists discover new medicines, psychologists learn about behavior, or even how marketers figure out what kind of ads you like? Well, they all have something in common: they use a special plan or recipe called an "experimental design."

Imagine you're baking cookies. You can't just throw random amounts of flour, sugar, and chocolate chips into a bowl and hope for the best. You follow a recipe, right? Scientists and researchers do something similar. They follow a "recipe" called an experimental design to make sure their experiments are set up in a way that the answers they find are meaningful and reliable.

Experimental design is the roadmap researchers use to answer questions. It's a set of rules and steps that researchers follow to collect information, or "data," in a way that is fair, accurate, and makes sense.

Long ago, people didn't have detailed game plans for experiments. They often just tried things out and saw what happened. But over time, people got smarter about this. They started creating structured plans—what we now call experimental designs—to get clearer, more trustworthy answers to their questions.

In this article, we'll take you on a journey through the world of experimental designs. We'll talk about the different types, or "flavors," of experimental designs, where they're used, and even give you a peek into how they came to be.

What Is Experimental Design?

Alright, before we dive into the different types of experimental designs, let's get crystal clear on what experimental design actually is.

Imagine you're a detective trying to solve a mystery. You need clues, right? Well, in the world of research, experimental design is like the roadmap that helps you find those clues. It's like the game plan in sports or the blueprint when you're building a house. Just like you wouldn't start building without a good blueprint, researchers won't start their studies without a strong experimental design.

So, why do we need experimental design? Think about baking a cake. If you toss ingredients into a bowl without measuring, you'll end up with a mess instead of a tasty dessert.

Similarly, in research, if you don't have a solid plan, you might get confusing or incorrect results. A good experimental design helps you ask the right questions (think critically), decide what to measure (come up with an idea), and figure out how to measure it (test it). It also helps you consider things that might mess up your results, like outside influences you hadn't thought of.

For example, let's say you want to find out if listening to music helps people focus better. Your experimental design would help you decide things like: Who are you going to test? What kind of music will you use? How will you measure focus? And, importantly, how will you make sure that it's really the music affecting focus and not something else, like the time of day or whether someone had a good breakfast?

In short, experimental design is the master plan that guides researchers through the process of collecting data, so they can answer questions in the most reliable way possible. It's like the GPS for the journey of discovery!

History of Experimental Design

Around 350 BCE, people like Aristotle were trying to figure out how the world works, but they mostly just thought really hard about things. They didn't test their ideas much. So while they were super smart, their methods weren't always the best for finding out the truth.

Fast forward to the Renaissance (14th to 17th centuries), a time of big changes and lots of curiosity. People like Galileo started to experiment by actually doing tests, like rolling balls down inclined planes to study motion. Galileo's work was cool because he combined thinking with doing. He'd have an idea, test it, look at the results, and then think some more. This approach was a lot more reliable than just sitting around and thinking.

Now, let's zoom ahead to the 19th century. This is when people like Francis Galton, an English polymath, started to get really systematic about experimentation. Galton was obsessed with measuring things. Seriously, he even tried to measure how good-looking people were! His work helped lay the foundations for a more organized approach to experiments.

Next stop: the early 20th century. Enter Ronald A. Fisher, a brilliant British statistician. Fisher was a game-changer. He came up with ideas that are like the bread and butter of modern experimental design.

Fisher helped make the "control group" a standard part of experimental design. That's a group of people or things that don't get the treatment you're testing, so you can compare them to those who do. He also stressed the importance of "randomization," which means assigning people or things to different groups by chance, like drawing names out of a hat. This helps keep the experiment fair and the results trustworthy.

Around the same time, American psychologists like John B. Watson and B.F. Skinner were developing "behaviorism." They focused on studying things that they could directly observe and measure, like actions and reactions.

Skinner even built boxes, called Skinner Boxes, to test how animals like pigeons and rats learn. Their work helped shape how psychologists design experiments today. Watson performed a very controversial study, the Little Albert experiment, which suggested that emotional responses such as fear can be learned through conditioning; in other words, it helped explain how people learn to behave the way they do.

In the later part of the 20th century and into our time, computers have totally shaken things up. Researchers now use super powerful software to help design their experiments and crunch the numbers.

With computers, they can simulate complex experiments before they even start, which helps them predict what might happen. This is especially helpful in fields like medicine, where getting things right can be a matter of life and death.

Also, did you know that experimental designs aren't just for scientists in labs? They're used by people in all sorts of jobs, like marketing, education, and even video game design! Yes, someone probably ran an experiment to figure out what makes a game super fun to play.

So there you have it—a quick tour through the history of experimental design, from Aristotle's deep thoughts to Fisher's groundbreaking ideas, and all the way to today's computer-powered research. These designs are the recipes that help people from all walks of life find answers to their big questions.

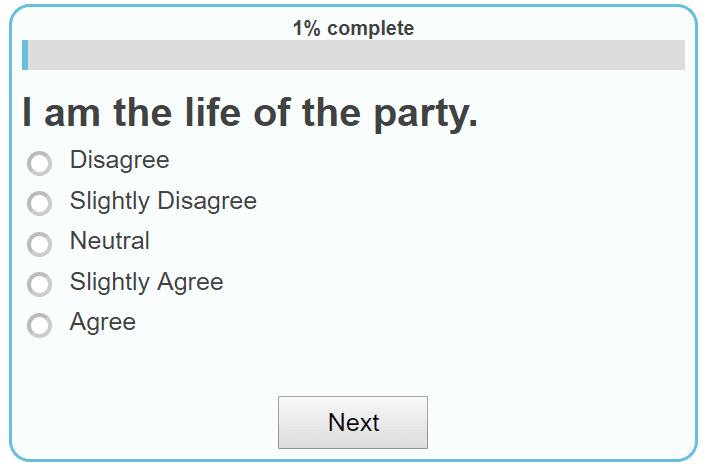

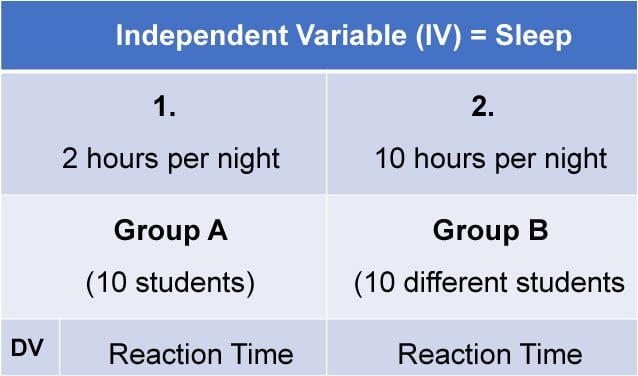

Key Terms in Experimental Design

Before we dig into the different types of experimental designs, let's get comfy with some key terms. Understanding these terms will make it easier for us to explore the various types of experimental designs that researchers use to answer their big questions.

Independent Variable: This is what you change or control in your experiment to see what effect it has. Think of it as the "cause" in a cause-and-effect relationship. For example, if you're studying whether different types of music help people focus, the kind of music is the independent variable.

Dependent Variable: This is what you're measuring to see the effect of your independent variable. In our music and focus experiment, how well people focus is the dependent variable; it's what "depends" on the kind of music played.

Control Group: This is a group of people who don't get the special treatment or change you're testing. They help you see what happens when the independent variable is not applied. If you're testing whether a new medicine works, the control group would take a fake pill, called a placebo, instead of the real medicine.

Experimental Group: This is the group that gets the special treatment or change you're interested in. Going back to our medicine example, this group would get the actual medicine to see if it has any effect.

Randomization: This is like shaking things up in a fair way. You randomly put people into the control or experimental group so that each group is a good mix of different kinds of people. This helps make the results more reliable.

Sample: This is the group of people you're studying. They're a "sample" of a larger group that you're interested in. For instance, if you want to know how teenagers feel about a new video game, you might study a sample of 100 teenagers.

Bias: This is anything that might tilt your experiment one way or another without you realizing it. Like if you're testing a new kind of dog food and you only test it on poodles, that could create a bias, because maybe poodles just really like that food and other breeds don't.

Data: This is the information you collect during the experiment. It's like the treasure you find on your journey of discovery!

Replication: This means doing the experiment more than once to make sure your findings hold up. It's like double-checking your answers on a test.

Hypothesis: This is your educated guess about what will happen in the experiment. It's like predicting the end of a movie based on the first half.
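To make Sample and Randomization concrete, here is a minimal Python sketch. It assumes a hypothetical population of 1,000 teenagers identified only by ID; the function names `draw_sample` and `randomly_assign` are illustrative, not part of any particular research toolkit.

```python
import random

def draw_sample(population, n, seed=None):
    """Draw a simple random sample of n members, without replacement."""
    rng = random.Random(seed)
    return rng.sample(population, n)

def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into control and experimental groups.

    With an odd number of participants, the experimental group gets one extra person.
    """
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the caller's list keeps its original order
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "experimental": shuffled[half:]}

# Hypothetical population of 1,000 teenagers, identified only by ID.
population = [f"teen_{i:04d}" for i in range(1000)]
sample = draw_sample(population, 100, seed=42)
groups = randomly_assign(sample, seed=7)
print(len(groups["control"]), len(groups["experimental"]))
```

Fixing the `seed` makes the assignment reproducible, which is handy when someone wants to replicate or audit the experiment later.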

Steps of Experimental Design

Alright, let's say you're all fired up and ready to run your own experiment. Cool! But where do you start? Well, designing an experiment is a bit like planning a road trip. There are some key steps you've got to take to make sure you reach your destination. Let's break it down:

- Ask a Question: Before you hit the road, you've got to know where you're going. Same with experiments. You start with a question you want to answer, like "Does eating breakfast really make you do better in school?"

- Do Some Homework: Before you pack your bags, you look up the best places to visit, right? In science, this means reading up on what other people have already discovered about your topic.

- Form a Hypothesis: This is your educated guess about what you think will happen. It's like saying, "I bet this route will get us there faster."

- Plan the Details: Now you decide what kind of car you're driving (your experimental design), who's coming with you (your sample), and what snacks to bring (your variables).

- Randomization: Remember, this is like shuffling a deck of cards. You want to mix up who goes into your control and experimental groups to make sure it's a fair test.

- Run the Experiment: Finally, the rubber hits the road! You carry out your plan, making sure to collect your data carefully.

- Analyze the Data: Once the trip's over, you look at your photos and decide which ones are keepers. In science, this means looking at your data to see what it tells you.

- Draw Conclusions: Based on your data, did you find an answer to your question? This is like saying, "Yep, that route was faster," or "Nope, we hit a ton of traffic."

- Share Your Findings: After a great trip, you want to tell everyone about it, right? Scientists do the same by publishing their results so others can learn from them.

- Do It Again?: Sometimes one road trip just isn't enough. In the same way, scientists often repeat their experiments to make sure their findings are solid.
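The "Analyze the Data" and "Draw Conclusions" steps can be sketched with a permutation test, one common way (among several) to ask whether a gap between two groups could easily have been produced by chance alone. The breakfast and no-breakfast scores below are invented for illustration, and `permutation_test` is a hypothetical helper written just for this sketch.

```python
import random
from statistics import fmean

def permutation_test(group_a, group_b, n_permutations=10_000, seed=None):
    """Two-sided permutation test for a difference in group means.

    Returns the observed difference (mean of group_a minus mean of group_b)
    and an approximate p-value: the share of random relabelings that give a
    difference at least as extreme as the observed one.
    """
    rng = random.Random(seed)
    observed = fmean(group_a) - fmean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # relabel people as if group membership were pure chance
        diff = fmean(pooled[:n_a]) - fmean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Counting the observed labeling itself keeps the p-value above zero.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value

# Hypothetical end-of-term test scores, invented for illustration.
breakfast = [78, 85, 82, 90, 74, 88, 81, 79]
no_breakfast = [72, 70, 80, 68, 75, 73, 77, 69]

diff, p = permutation_test(breakfast, no_breakfast, seed=1)
print(f"difference in means: {diff:.2f}, approximate p-value: {p:.4f}")
```

A small p-value means few random relabelings produced a gap as large as the real one, which is evidence (not proof) that breakfast and scores are related. If breakfast wasn't randomly assigned, though, the test alone can't tell you that breakfast caused the difference.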

So there you have it! Those are the basic steps you need to follow when you're designing an experiment. Each step helps make sure that you're setting up a fair and reliable way to find answers to your big questions.

Let's get into examples of experimental designs.

1) True Experimental Design

In the world of experiments, the True Experimental Design is like the superstar quarterback everyone talks about. Born out of the early 20th-century work of statisticians like Ronald A. Fisher, this design is all about control, precision, and reliability.

Researchers carefully pick an independent variable to manipulate (remember, that's the thing they're changing on purpose) and measure the dependent variable (the effect they're studying). Then comes the magic trick—randomization. By randomly putting participants into either the control or experimental group, scientists make sure their experiment is as fair as possible.

No sneaky biases here!
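Here is a small simulation of why randomization keeps sneaky biases out. It is a sketch under made-up assumptions: each hypothetical participant has a hidden "motivation" score that also boosts the outcome, and the true treatment effect is set to 5 points. Because a coin flip decides who is treated, motivation should come out roughly balanced between groups, and the simple difference in means should land near the true effect.

```python
import random
from statistics import fmean

def simulate_true_experiment(n=2000, true_effect=5.0, seed=0):
    """Simulate a randomized two-group experiment with a hidden trait.

    All numbers (motivation scale, noise, effect size) are hypothetical.
    """
    rng = random.Random(seed)
    treated_outcomes, control_outcomes = [], []
    treated_motivation, control_motivation = [], []
    for _ in range(n):
        motivation = rng.gauss(50, 10)   # hidden trait that also raises the outcome
        is_treated = rng.random() < 0.5  # random assignment: a fair coin flip
        outcome = 0.5 * motivation + rng.gauss(0, 5)
        if is_treated:
            outcome += true_effect
            treated_outcomes.append(outcome)
            treated_motivation.append(motivation)
        else:
            control_outcomes.append(outcome)
            control_motivation.append(motivation)
    return {
        "estimated_effect": fmean(treated_outcomes) - fmean(control_outcomes),
        "motivation_gap": fmean(treated_motivation) - fmean(control_motivation),
    }

result = simulate_true_experiment()
print(f"estimated effect: {result['estimated_effect']:.2f} (true effect: 5.00)")
print(f"motivation gap between groups: {result['motivation_gap']:.2f}")
```

Rerunning with different `seed` values makes the estimate wobble a little around 5, but it doesn't drift in one direction, because nothing about a participant influences which group they land in.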

True Experimental Design Pros

The pros of True Experimental Design are like the perks of a VIP ticket at a concert: you get the best and most trustworthy results. Because everything is controlled and randomized, you can feel pretty confident that the results aren't just a fluke.

True Experimental Design Cons

However, there's a catch. Sometimes, it's really tough to set up these experiments in a real-world situation. Imagine trying to control every single detail of your day, from the food you eat to the air you breathe. Not so easy, right?

True Experimental Design Uses

The fields that get the most out of True Experimental Designs are those that need super reliable results, like medical research.

When scientists were developing COVID-19 vaccines, they used this design to run clinical trials. They had control groups that received a placebo (a harmless substance with no effect) and experimental groups that got the actual vaccine. Then they measured how many people in each group got sick. By comparing the two, they could say, "Yep, this vaccine works!"
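The "comparing the two groups" step often boils down to simple arithmetic. One common summary is vaccine efficacy: one minus the ratio of the attack rate (the share of people who got sick) in the vaccine group to the attack rate in the placebo group. The counts below are hypothetical round numbers, not figures from any real trial.

```python
def vaccine_efficacy(cases_vaccine, n_vaccine, cases_placebo, n_placebo):
    """Return 1 - (attack rate in vaccine group / attack rate in placebo group)."""
    if n_vaccine <= 0 or n_placebo <= 0:
        raise ValueError("each group needs at least one participant")
    if cases_placebo == 0:
        raise ValueError("efficacy is undefined when nobody in the placebo group got sick")
    attack_rate_vaccine = cases_vaccine / n_vaccine
    attack_rate_placebo = cases_placebo / n_placebo
    return 1 - attack_rate_vaccine / attack_rate_placebo

# Hypothetical trial: 20,000 people per group, 10 cases vs. 100 cases.
efficacy = vaccine_efficacy(10, 20_000, 100, 20_000)
print(f"vaccine efficacy: {efficacy:.1%}")
```

With these made-up numbers the vaccine group's attack rate is one tenth of the placebo group's, so efficacy works out to 90%. Real trials also report a confidence interval around this figure.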

So next time you read about a groundbreaking discovery in medicine or technology, chances are a True Experimental Design was the VIP behind the scenes, making sure everything was on point. It's been the go-to for rigorous scientific inquiry for nearly a century, and it's not stepping off the stage anytime soon.

2) Quasi-Experimental Design

So, let's talk about the Quasi-Experimental Design. Think of this one as the cool cousin of True Experimental Design. It wants to be just like its famous relative, but it's a bit more laid-back and flexible. You'll find quasi-experimental designs when it's tricky to set up a full-blown True Experimental Design with all the bells and whistles.

Quasi-experiments still play with an independent variable, just like their stricter cousins. The big difference? They don't use randomization. It's like wanting to divide a bag of jelly beans equally between your friends, but you can't quite do it perfectly.

In real life, it's often not possible or ethical to randomly assign people to different groups, especially when dealing with sensitive topics like education or social issues. And that's where quasi-experiments come in.

Quasi-Experimental Design Pros

Even though they lack full randomization, quasi-experimental designs are like the Swiss Army knives of research: versatile and practical. They're especially popular in fields like education, sociology, and public policy.

For instance, when researchers wanted to figure out if the Head Start program, aimed at giving young kids a "head start" in school, was effective, they used a quasi-experimental design. They couldn't randomly assign kids to go or not go to preschool, but they could compare kids who did with kids who didn't.

Quasi-Experimental Design Cons

Of course, quasi-experiments come with their own bag of pros and cons. On the plus side, they're easier to set up and often cheaper than true experiments. But the flip side is that they're not as rock-solid in their conclusions. Because the groups aren't randomly assigned, there's always that little voice saying, "Hey, are we missing something here?"
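To see why that little voice matters, here is a sketch of self-selection, loosely inspired by the preschool example above. Everything in it is assumed: a hidden "family resources" score makes families both more likely to enroll and likely to have higher-scoring kids, and the program's true effect is set to 3 points. It is not a model of any actual Head Start evaluation.

```python
import math
import random
from statistics import fmean

def simulate_self_selection(n=5000, true_effect=3.0, seed=0):
    """Naive enrolled-minus-not-enrolled difference when families choose for themselves."""
    rng = random.Random(seed)
    enrolled_scores, other_scores = [], []
    for _ in range(n):
        resources = rng.gauss(0, 1)  # hypothetical hidden family advantage
        # No coin flip: families with more resources are more likely to enroll.
        p_enroll = 1 / (1 + math.exp(-2 * resources))
        enrolled = rng.random() < p_enroll
        # Resources raise scores whether or not the child enrolls.
        score = 50 + 4 * resources + (true_effect if enrolled else 0.0) + rng.gauss(0, 5)
        (enrolled_scores if enrolled else other_scores).append(score)
    return fmean(enrolled_scores) - fmean(other_scores)

naive = simulate_self_selection()
print(f"naive difference: {naive:.2f} (true effect built into the simulation: 3.00)")
```

Because enrollment depends on resources rather than chance, the naive comparison comes out well above 3: part of the gap comes from family resources, not the program. Quasi-experimental studies try to shrink this problem with techniques such as matching similar children or comparing changes over time, but they can't rule it out as cleanly as randomization does.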

Quasi-Experimental Design Uses

Quasi-Experimental Design gained traction in the mid-20th century. Researchers were grappling with real-world problems that didn't fit neatly into a laboratory setting. Plus, as society became more aware of ethical considerations, the need for flexible designs increased. So, the quasi-experimental approach was like a breath of fresh air for scientists wanting to study complex issues without a laundry list of restrictions.

In short, if True Experimental Design is the superstar quarterback, Quasi-Experimental Design is the versatile player who can adapt and still make significant contributions to the game.

3) Pre-Experimental Design

Now, let's talk about the Pre-Experimental Design. Imagine it as the beginner's skateboard you get before you try out for all the cool tricks. It has wheels, it rolls, but it's not built for the professional skatepark.

Similarly, pre-experimental designs give researchers a starting point. They let you dip your toes in the water of scientific research without diving in head-first.

So, what's the deal with pre-experimental designs?

Pre-Experimental Designs are the basic, no-frills versions of experiments. Researchers still mess around with an independent variable and measure a dependent variable, but they skip over the whole randomization thing and often don't even have a control group.

It's like baking a cake but forgetting the frosting and sprinkles; you'll get some results, but they might not be as complete or reliable as you'd like.
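A common pre-experimental setup is the one-group pretest-posttest design: measure a single group, apply the treatment, and measure again. Here is a minimal sketch with hypothetical reading scores for five students before and after a hypothetical tutoring program; `mean_change` is an illustrative name.

```python
from statistics import fmean

def mean_change(pre, post):
    """Average within-person change for a one-group pretest-posttest design."""
    if len(pre) != len(post):
        raise ValueError("pre and post must list the same people in the same order")
    return fmean(after - before for before, after in zip(pre, post))

# Hypothetical reading scores for five students, before and after tutoring.
pre_scores = [60, 55, 70, 65, 58]
post_scores = [66, 59, 74, 70, 61]
change = mean_change(pre_scores, post_scores)
print(f"average change: {change:.1f} points")
```

The average gain here is 4.4 points, but with no control group there's no way to tell how much of it came from the tutoring versus practice with the test, ordinary learning over time, or something else entirely.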

Pre-Experimental Design Pros

Why use such a simple setup? Because sometimes, you just need to get the ball rolling. Pre-experimental designs are great for quick-and-dirty research when you're short on time or resources. They give you a rough idea of what's happening, which you can use to plan more detailed studies later.

A good example of this is early studies on the effects of screen time on kids. Researchers couldn't control every aspect of a child's life, but they could easily ask parents to track how much time their kids spent in front of screens and then look for trends in behavior or school performance.

Pre-Experimental Design Cons

But here's the catch: pre-experimental designs are like that first draft of an essay. It helps you get your ideas down, but you wouldn't want to turn it in for a grade. Because these designs lack the rigorous structure of true or quasi-experimental setups, they can't give you rock-solid conclusions. They're more like clues or signposts pointing you in a certain direction.

Pre-Experimental Design Uses

This type of design became popular in the early stages of various scientific fields. Researchers used these designs to scratch the surface of a topic, generate some initial data, and then decide whether it was worth exploring further. In other words, pre-experimental designs were the stepping stones that led to more complex, thorough investigations.

So, while Pre-Experimental Design may not be the star player on the team, it's like the practice squad that helps everyone get better. It's the starting point that can lead to bigger and better things.

4) Factorial Design

Now, buckle up, because we're moving into the world of Factorial Design, the multi-tasker of the experimental universe.

Imagine juggling not just one, but multiple balls in the air—that's what researchers do in a factorial design.

In Factorial Design, researchers are not satisfied with just studying one independent variable. Nope, they want to study two or more at the same time to see how they interact.

It's like cooking with several spices to see how they blend together to create unique flavors.

Factorial Design became even more popular with the rise of computers. Why? Because this design produces a lot of data, and computers are the number crunchers that help make sense of it all. So, thanks to our silicon friends, researchers can study complicated questions like, "How do diet AND exercise together affect weight loss?" instead of looking at just one of those factors.

Factorial Design Pros

This design's main selling point is its ability to explore interactions between variables. For instance, maybe a new drug works really well for young people but not as well for older adults. A factorial design could reveal that age is a crucial factor, something you might miss if you only studied the drug's effectiveness in general. It's like being a detective who looks for clues not just in one room but throughout the entire house.
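To make the drug-and-age idea concrete, here's a minimal Python sketch of a 2×2 factorial layout (drug vs. placebo, crossed with young vs. older participants). The cell means are made-up numbers, and the helper names (`drug_effect`, `main_effect_drug`, `interaction_contrast`) are hypothetical, not part of any statistics library. It shows how an "on average" drug effect can hide the fact that the effect depends on age.

```python
# Hypothetical 2x2 factorial design: treatment (placebo vs. drug) x age group (young vs. older).
# The numbers are invented for illustration only; they are not from any real study.
cell_means = {
    ("placebo", "young"): 10.0,
    ("drug", "young"): 20.0,
    ("placebo", "older"): 10.0,
    ("drug", "older"): 12.0,
}


def drug_effect(age_group: str) -> float:
    """Simple effect: how much the drug beats placebo within one age group."""
    return cell_means[("drug", age_group)] - cell_means[("placebo", age_group)]


def main_effect_drug() -> float:
    """Main effect: the drug's effect averaged over both age groups."""
    return (drug_effect("young") + drug_effect("older")) / 2


def interaction_contrast() -> float:
    """Interaction: how much the drug's effect changes from young to older participants.
    Zero would mean age makes no difference to how well the drug works."""
    return drug_effect("young") - drug_effect("older")


print("Drug effect (young):", drug_effect("young"))
print("Drug effect (older):", drug_effect("older"))
print("Average drug effect:", main_effect_drug())
print("Interaction contrast:", interaction_contrast())
```

With these invented numbers, the drug helps young participants by 10 points but older ones by only 2. The average of 6 points would hide that gap, which is exactly the kind of interaction a factorial design is built to catch. A real study would usually test this with a two-way ANOVA or a regression model rather than by eyeballing raw means.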

Factorial Design Cons

However, factorial designs have their own bag of challenges. First off, they can be pretty complicated to set up and run. Imagine coordinating a four-way intersection with lots of cars coming from all directions—you've got to make sure everything runs smoothly, or you'll end up with a traffic jam. Similarly, researchers need to carefully plan how they'll measure and analyze all the different variables.

Factorial Design Uses

Factorial designs are widely used in psychology to untangle the web of factors that influence human behavior. They're also popular in fields like marketing, where companies want to understand how different aspects like price, packaging, and advertising influence a product's success.

And speaking of success, the factorial design has been a hit since statisticians like Ronald A. Fisher (yep, him again!) expanded on it in the early-to-mid 20th century. It offered a more nuanced way of understanding the world, proving that sometimes, to get the full picture, you've got to juggle more than one ball at a time.

So, if True Experimental Design is the quarterback and Quasi-Experimental Design is the versatile player, Factorial Design is the strategist who sees the entire game board and makes moves accordingly.

5) Longitudinal Design

Alright, let's take a step into the world of Longitudinal Design. Picture it as the grand storyteller, the kind who doesn't just tell you about a single event but spins an epic tale that stretches over years or even decades. This design isn't about quick snapshots; it's about capturing the whole movie of someone's life or a long-running process.

You know how you might take a photo every year on your birthday to see how you've changed? Longitudinal Design is kind of like that, but for scientific research.

With Longitudinal Design, instead of measuring something just once, researchers come back again and again, sometimes over many years, to see how things are going. This helps them understand not just what's happening, but how it changes over time, and it can offer clues about why it's happening.

This design really started to shine in the latter half of the 20th century, when researchers began to realize that some questions can't be answered in a hurry. Think about studies that look at how kids grow up, or research on how a certain medicine affects you over a long period. These aren't things you can rush.

The famous Framingham Heart Study, started in 1948, is a prime example. It's been studying heart health in a small town in Massachusetts for decades, and the findings have shaped what we know about heart disease.

Longitudinal Design Pros

So, what's to love about Longitudinal Design? First off, it's the go-to for studying change over time, whether that's how people age or how a forest recovers from a fire.

Longitudinal Design Cons

But it's not all sunshine and rainbows. Longitudinal studies take a lot of patience and resources. Plus, keeping track of participants over many years can be like herding cats—difficult and full of surprises.

Longitudinal Design Uses

Despite these challenges, longitudinal studies have been key in fields like psychology, sociology, and medicine. They provide the kind of deep, long-term insights that other designs just can't match.

So, if the True Experimental Design is the superstar quarterback, and the Quasi-Experimental Design is the flexible athlete, then the Factorial Design is the strategist, and the Longitudinal Design is the wise elder who has seen it all and has stories to tell.

6) Cross-Sectional Design

Now, let's flip the script and talk about Cross-Sectional Design, the polar opposite of the Longitudinal Design. If Longitudinal is the grand storyteller, think of Cross-Sectional as the snapshot photographer. It captures a single moment in time, like a selfie that you take to remember a fun day. Researchers using this design collect all their data at one point, providing a kind of "snapshot" of whatever they're studying.

In a Cross-Sectional Design, researchers look at multiple groups all at the same time to see how they're different or similar.

This design rose to popularity in the mid-20th century, mainly because it's so quick and efficient. Imagine wanting to know how people of different ages feel about a new video game. Instead of waiting for years to see how opinions change, you could just ask people of all ages what they think right now. That's Cross-Sectional Design for you—fast and straightforward.

You'll find this type of research everywhere from marketing studies to healthcare. For instance, you might have heard about surveys asking people what they think about a new product or political issue. Those are usually cross-sectional studies, aimed at getting a quick read on public opinion.

Cross-Sectional Design Pros

So, what's the big deal with Cross-Sectional Design? Well, it's the go-to when you need answers fast and don't have the time or resources for a more complicated setup.

Cross-Sectional Design Cons

Remember, speed comes with trade-offs. While you get your results quickly, those results are stuck in time. They can't tell you how things change or why they're changing, just what's happening right now.

Cross-Sectional Design Uses

Because they're so quick and simple, cross-sectional studies often serve as the first step in research. They give scientists an idea of what's going on so they can decide if it's worth digging deeper. In that way, they're a bit like a movie trailer, giving you a taste of the action to see if you're interested in seeing the whole film.

So, in our lineup of experimental designs, if True Experimental Design is the superstar quarterback and Longitudinal Design is the wise elder, then Cross-Sectional Design is like the speedy running back—fast, agile, but not designed for long, drawn-out plays.

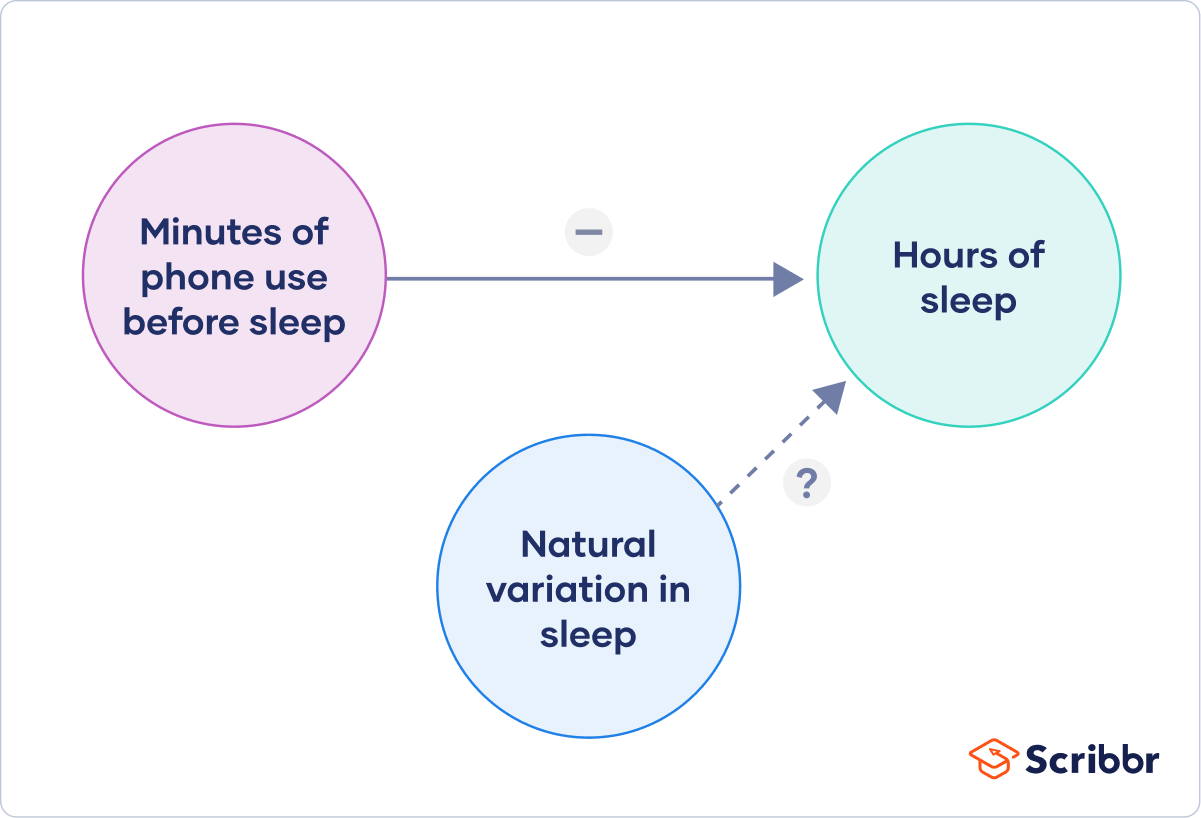

7) Correlational Design

Next on our roster is the Correlational Design, the keen observer of the experimental world. Imagine this design as the person at a party who loves people-watching. They don't interfere or get involved; they just observe and take mental notes about what's going on.

In a correlational study, researchers don't change or control anything; they simply observe and measure how two variables relate to each other.

The correlational design has roots in the early days of psychology and sociology. Pioneers like Sir Francis Galton used it to study how qualities like intelligence or height could be related within families.

This design is all about asking, "Hey, when this thing happens, does that other thing usually happen too?" For example, researchers might study whether students who have more study time get better grades or whether people who exercise more have lower stress levels.
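One common way to put a number on "does that other thing usually happen too?" is Pearson's correlation coefficient, r, which runs from -1 (a perfect negative relationship) through 0 (no linear relationship) to +1 (a perfect positive relationship). Here's a minimal Python sketch that computes r by hand; the `pearson_r` helper is our own, and the study-hours and grade figures are invented for illustration.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists of numbers."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of the same length, with at least two values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Do x and y tend to sit above (or below) their averages at the same time?
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("r is undefined when one variable never changes")
    return cov / math.sqrt(spread_x * spread_y)


# Invented data: weekly study hours and exam grades for five students.
study_hours = [2, 4, 5, 7, 9]
grades = [60, 65, 70, 78, 85]

r = pearson_r(study_hours, grades)
print(f"r = {r:.3f}")
```

With these made-up numbers, r comes out very close to +1, a strong positive relationship. Keep in mind that r only measures how closely the points follow a straight line; it says nothing about which variable, if either, is driving the other.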

One of the most famous examples of correlational research you might have heard of is the link between smoking and lung cancer. Back in the mid-20th century, researchers started noticing that people who smoked a lot also seemed to get lung cancer more often. They couldn't say for sure that smoking caused cancer (that would require a true experiment), but the strong correlation was a red flag that led to more research and, eventually, health warnings.

Correlational Design Pros

This design is great at showing that two (or more) things are related. Correlational designs can also show that a topic deserves more detailed research. They can help us spot patterns or possible causes that we otherwise might not have noticed.

Correlational Design Cons

But here's where you need to be careful: correlational designs can be tricky. Just because two things are related doesn't mean one causes the other. That's like saying, "Every time I wear my lucky socks, my team wins." Well, it's a fun thought, but those socks aren't really controlling the game.

Correlational Design Uses

Despite this limitation, correlational designs are popular in psychology, economics, and epidemiology, to name a few fields. They're often the first step in exploring a possible relationship between variables. Once a strong correlation is found, researchers may decide to conduct more rigorous experimental studies to examine cause and effect.

So, if the True Experimental Design is the superstar quarterback, the Longitudinal Design is the wise elder, the Factorial Design is the strategist, and the Cross-Sectional Design is the speedster, then the Correlational Design is the clever scout, identifying interesting patterns but leaving the heavy lifting of proving cause and effect to the other types of designs.

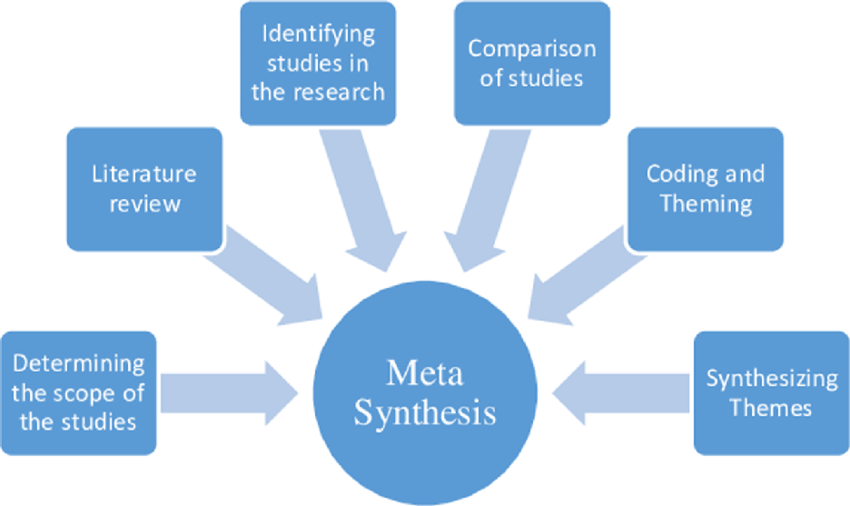

8) Meta-Analysis

Last but not least, let's talk about Meta-Analysis, the librarian of experimental designs.

If other designs are all about creating new research, Meta-Analysis is about gathering up everyone else's research, sorting it, and figuring out what it all means when you put it together.

Imagine a jigsaw puzzle where each piece is a different study. Meta-Analysis is the process of fitting all those pieces together to see the big picture.

The concept of Meta-Analysis started to take shape in the late 20th century, when computers made it much easier to crunch the numbers from many studies at once. It was like someone handed researchers a super-powered magnifying glass, letting them examine multiple studies at the same time to find common trends or results.

You might have heard of the Cochrane Reviews in healthcare. These are systematic reviews, many of which include meta-analyses, that help doctors and policymakers figure out which treatments work best based on all the research that's been done.

For example, if ten different studies show that a certain medicine helps lower blood pressure, a meta-analysis would pull all that information together to give a more accurate answer.
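Here's a minimal Python sketch of one common way to do that pooling: a fixed-effect, inverse-variance weighted average, where more precise studies (smaller standard errors) get more weight. This is only one approach (many meta-analyses use random-effects models instead), the `pooled_fixed_effect` helper is our own, and the blood-pressure figures are invented for illustration, not taken from real trials.

```python
import math


def pooled_fixed_effect(effects, std_errors):
    """Fixed-effect inverse-variance meta-analysis.

    effects: each study's estimated effect (e.g. mmHg drop in blood pressure)
    std_errors: each study's standard error for that estimate
    Returns (pooled_effect, pooled_standard_error).
    """
    if len(effects) != len(std_errors) or not effects:
        raise ValueError("need one standard error per effect, and at least one study")
    # Each study's weight is 1 / variance, so more precise studies count for more.
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    # Combining studies shrinks the uncertainty of the overall estimate.
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical results from ten small studies: mmHg reduction and its standard error.
effects = [5.1, 3.8, 6.2, 4.0, 2.9, 5.5, 4.7, 3.3, 6.0, 4.4]
std_errors = [2.0, 1.5, 2.5, 1.2, 1.8, 2.2, 1.0, 1.6, 2.8, 1.4]

estimate, se = pooled_fixed_effect(effects, std_errors)
low, high = estimate - 1.96 * se, estimate + 1.96 * se
print(f"Pooled reduction: {estimate:.2f} mmHg (95% CI {low:.2f} to {high:.2f})")
```

The pooled standard error ends up smaller than any single study's, which is the statistical version of "looking at the whole landscape." The same arithmetic can't fix biased inputs, though: if the individual studies are flawed, the weighted average inherits those flaws.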

Meta-Analysis Pros

The beauty of Meta-Analysis is that it can provide really strong evidence. Instead of relying on one study, you're looking at the whole landscape of research on a topic.

Meta-Analysis Cons

However, it does have some downsides. For one, Meta-Analysis is only as good as the studies it includes. If those studies are flawed, the meta-analysis will be too. It's like baking a cake: if you use bad ingredients, it doesn't matter how good your recipe is—the cake won't turn out well.

Meta-Analysis Uses

Despite these challenges, meta-analyses are highly respected and widely used in many fields like medicine, psychology, and education. They help us make sense of a world that's bursting with information by showing us the big picture drawn from many smaller snapshots.

So, in our all-star lineup, if True Experimental Design is the quarterback, Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, and Correlational Design is the scout, then Meta-Analysis is like the coach, using insights from everyone else's plays to come up with the best game plan.

9) Non-Experimental Design

Now, let's talk about a player who's a bit of an outsider on this team of experimental designs—the Non-Experimental Design. Think of this design as the commentator or the journalist who covers the game but doesn't actually play.

In a Non-Experimental Design, researchers are like reporters gathering facts, but they don't interfere or change anything. They're simply there to describe and analyze.

Non-Experimental Design Pros

So, what's the deal with Non-Experimental Design? Its strength is in description and exploration. It's really good for studying things as they are in the real world, without changing any conditions.

Non-Experimental Design Cons

Because a non-experimental design doesn't manipulate variables, it can't prove cause and effect. It's like a weather reporter: they can tell you it's raining, but they can't tell you why it's raining.

The downside? Since researchers aren't controlling variables, it's hard to rule out other explanations for what they observe. It's like hearing one side of a story—you get an idea of what happened, but it might not be the complete picture.

Non-Experimental Design Uses

Non-Experimental Design has always been a part of research, especially in fields like anthropology, sociology, and some areas of psychology.