Assignment Problem: Meaning, Methods and Variations | Operations Research

After reading this article you will learn about:- 1. Meaning of Assignment Problem 2. Definition of Assignment Problem 3. Mathematical Formulation 4. Hungarian Method 5. Variations.

Meaning of Assignment Problem:

An assignment problem is a particular case of transportation problem where the objective is to assign a number of resources to an equal number of activities so as to minimise total cost or maximize total profit of allocation.

The problem of assignment arises because available resources such as men, machines etc. have varying degrees of efficiency for performing different activities, therefore, cost, profit or loss of performing the different activities is different.

Thus, the problem is “How should the assignments be made so as to optimize the given objective”. Some of the problem where the assignment technique may be useful are assignment of workers to machines, salesman to different sales areas.

Definition of Assignment Problem:

ADVERTISEMENTS:

Suppose there are n jobs to be performed and n persons are available for doing these jobs. Assume that each person can do each job at a term, though with varying degree of efficiency, let c ij be the cost if the i-th person is assigned to the j-th job. The problem is to find an assignment (which job should be assigned to which person one on-one basis) So that the total cost of performing all jobs is minimum, problem of this kind are known as assignment problem.

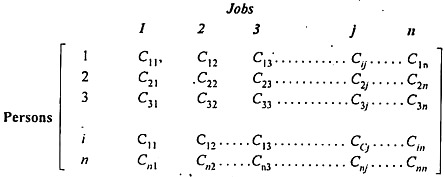

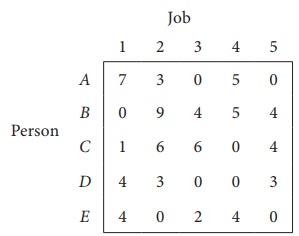

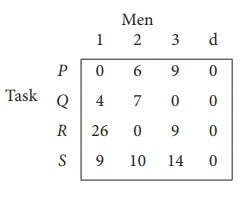

The assignment problem can be stated in the form of n x n cost matrix C real members as given in the following table:

www.springer.com The European Mathematical Society

- StatProb Collection

- Recent changes

- Current events

- Random page

- Project talk

- Request account

- What links here

- Related changes

- Special pages

- Printable version

- Permanent link

- Page information

- View source

Assignment problem

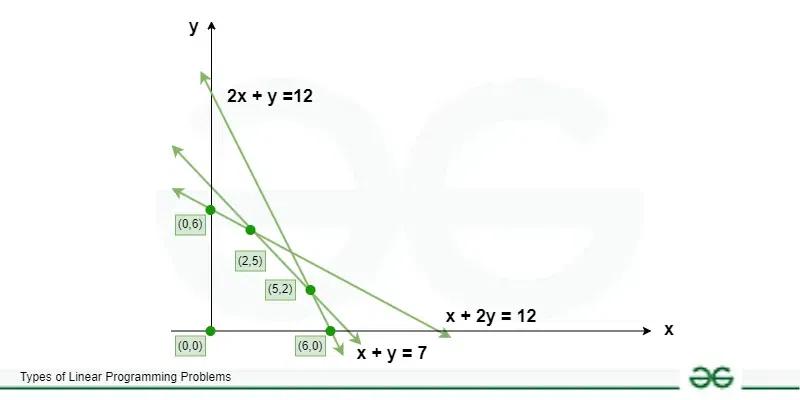

The problem of optimally assigning $ m $ individuals to $ m $ jobs. It can be formulated as a linear programming problem that is a special case of the transport problem :

maximize $ \sum _ {i,j } c _ {ij } x _ {ij } $

$$ \sum _ { j } x _ {ij } = a _ {i} , i = 1 \dots m $$

(origins or supply),

$$ \sum _ { i } x _ {ij } = b _ {j} , j = 1 \dots n $$

(destinations or demand), where $ x _ {ij } \geq 0 $ and $ \sum a _ {i} = \sum b _ {j} $, which is called the balance condition. The assignment problem arises when $ m = n $ and all $ a _ {i} $ and $ b _ {j} $ are $ 1 $.

If all $ a _ {i} $ and $ b _ {j} $ in the transposed problem are integers, then there is an optimal solution for which all $ x _ {ij } $ are integers (Dantzig's theorem on integral solutions of the transport problem).

In the assignment problem, for such a solution $ x _ {ij } $ is either zero or one; $ x _ {ij } = 1 $ means that person $ i $ is assigned to job $ j $; the weight $ c _ {ij } $ is the utility of person $ i $ assigned to job $ j $.

The special structure of the transport problem and the assignment problem makes it possible to use algorithms that are more efficient than the simplex method . Some of these use the Hungarian method (see, e.g., [a5] , [a1] , Chapt. 7), which is based on the König–Egervary theorem (see König theorem ), the method of potentials (see [a1] , [a2] ), the out-of-kilter algorithm (see, e.g., [a3] ) or the transportation simplex method.

In turn, the transportation problem is a special case of the network optimization problem.

A totally different assignment problem is the pole assignment problem in control theory.

- This page was last edited on 5 April 2020, at 18:48.

- Privacy policy

- About Encyclopedia of Mathematics

- Disclaimers

- Impressum-Legal

404 Not found

Operations Research by

Get full access to Operations Research and 60K+ other titles, with a free 10-day trial of O'Reilly.

There are also live events, courses curated by job role, and more.

Assignment Problem

5.1 introduction.

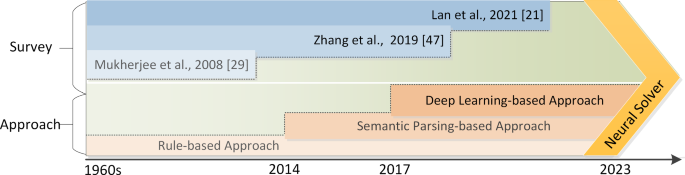

The assignment problem is one of the special type of transportation problem for which more efficient (less-time consuming) solution method has been devised by KUHN (1956) and FLOOD (1956). The justification of the steps leading to the solution is based on theorems proved by Hungarian mathematicians KONEIG (1950) and EGERVARY (1953), hence the method is named Hungarian.

5.2 GENERAL MODEL OF THE ASSIGNMENT PROBLEM

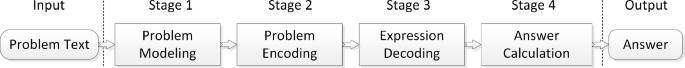

Consider n jobs and n persons. Assume that each job can be done only by one person and the time a person required for completing the i th job (i = 1,2,...n) by the j th person (j = 1,2,...n) is denoted by a real number C ij . On the whole this model deals with the assignment of n candidates to n jobs ...

Get Operations Research now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.

Don’t leave empty-handed

Get Mark Richards’s Software Architecture Patterns ebook to better understand how to design components—and how they should interact.

It’s yours, free.

Check it out now on O’Reilly

Dive in for free with a 10-day trial of the O’Reilly learning platform—then explore all the other resources our members count on to build skills and solve problems every day.

- Google OR-Tools

- Español – América Latina

- Português – Brasil

- Tiếng Việt

Solving an Assignment Problem

This section presents an example that shows how to solve an assignment problem using both the MIP solver and the CP-SAT solver.

In the example there are five workers (numbered 0-4) and four tasks (numbered 0-3). Note that there is one more worker than in the example in the Overview .

The costs of assigning workers to tasks are shown in the following table.

The problem is to assign each worker to at most one task, with no two workers performing the same task, while minimizing the total cost. Since there are more workers than tasks, one worker will not be assigned a task.

MIP solution

The following sections describe how to solve the problem using the MPSolver wrapper .

Import the libraries

The following code imports the required libraries.

Create the data

The following code creates the data for the problem.

The costs array corresponds to the table of costs for assigning workers to tasks, shown above.

Declare the MIP solver

The following code declares the MIP solver.

Create the variables

The following code creates binary integer variables for the problem.

Create the constraints

Create the objective function.

The following code creates the objective function for the problem.

The value of the objective function is the total cost over all variables that are assigned the value 1 by the solver.

Invoke the solver

The following code invokes the solver.

Print the solution

The following code prints the solution to the problem.

Here is the output of the program.

Complete programs

Here are the complete programs for the MIP solution.

CP SAT solution

The following sections describe how to solve the problem using the CP-SAT solver.

Declare the model

The following code declares the CP-SAT model.

The following code sets up the data for the problem.

The following code creates the constraints for the problem.

Here are the complete programs for the CP-SAT solution.

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License , and code samples are licensed under the Apache 2.0 License . For details, see the Google Developers Site Policies . Java is a registered trademark of Oracle and/or its affiliates.

Last updated 2023-01-02 UTC.

Principedia

Successful Strategies for Solving Problems on Assignments

Solving complex problems is a challenging task and warrants ongoing effort throughout your career. A number of approaches that expert problem-solvers find useful are summarized below, and you may find these strategies helpful in your own work. Any quantitative problem, whether in economics, science, or engineering, requires a two-step approach: analyze, then compute. Jumping directly to “number-crunching” without thinking through the logic of the problem is counter-productive. Conversely, analyzing a problem and then computing carelessly will not result in the right answer either. So, think first, calculate, and always check your results. And remember, attitude matters. Approach solving a problem as something that you know you can do, rather than something you think that you can’t do. Very few of us can see the answer to a problem without working through various approaches first.

Analysis Stage

- Read the problem carefully at least twice, aloud if possible, then restate the problem in your own words.

- Write down all the information that you know in the problem and separate, if necessary, the “givens” from the “constraints.”

- Think about what can be done with the information that is given. What are some relationships within the information given? What does this particular problem have in common conceptually with course material or other questions that you have solved?

- Draw pictures or graphs to help you sort through what’s really going on in the problem. These will help you recall related course material that will help you solve the problem. However, be sure to check that the assumptions underlying the picture or graph you have drawn are the same as the assumptions made in the problem. If they are not, you will need to take this into consideration when setting up your approach.

Computing Stage

- If the actual numbers involved in the problem are too large, small, or abstract and seem to be getting in the way of your thinking, substitute simple numbers and plan your approach. Then, once you get an understanding of the concepts in the problem, you can go back to the numbers given.

- Once you have a plan, do the necessary calculations. If you think of a simpler or more elegant approach, you can try it afterwards and use it as a check of your logic. Be careful about changing your approach in the middle of a problem. You can inadvertently include some incorrect or inapplicable assumptions from the prior plan.

- Throughout the computing stage, pause periodically to be sure that you understand the intuition behind each concept in the problem. Doing this will not only strengthen your understanding of the material, but it will also help you in solving other problems that also focus on those concepts.

- Resist the temptation to consult the answer key before you have finished the problem. Problems often look logical when someone else does them; that recognition does not require the same knowledge as solving the problem yourself. Likewise, when soliciting help from the AI or course head, ask for direction or a helpful tip only—avoid having them work the problem for you. This approach will help ensure that you really understand the problem—an essential prerequisite for successfully solving problems on exams and quizzes where no outside help is available.

- Check your results. Does the answer make sense given the information you have and the concepts involved? Does the answer make sense in the real world? Are the units reasonable? Are the units the ones specified in the problem? If you substitute your answer for the unknown in the problem, does it fit the criteria given? Does your answer fit within the range of an estimate that you made prior to calculating the result? One especially effective way to check your results is to work with a study partner or group. Discussing various options for a problem can help you uncover both computational errors and errors in your thinking about the problem. Before doing this, of course, make sure that working with someone else is acceptable to your course instructor.

- Ask yourself why this question is important. Lectures, precepts, problem sets, and exams are all intended to increase your knowledge of the subject. Thinking about the connection between a problem and the rest of the course material will strengthen your overall understanding.

If you get stuck, take a break. Research has shown that the brain works very productively on problems while we sleep—so plan your problem-solving sessions in such a way that you do a “first pass.” Then, get a night’s rest, return to the problem set the next day, and think about approaching the problem in an entirely different way.

References and Further Reading:

Adapted in part from Walter Pauk. How to Study in College , 7th edition, Houghton Mifflin Co., 2001

- ← Questions to Ask Yourself When Problem Solving

- Breaking Down Large Projects Into Manageable Pieces →

Procedure, Example Solved Problem | Operations Research - Solution of assignment problems (Hungarian Method) | 12th Business Maths and Statistics : Chapter 10 : Operations Research

Chapter: 12th business maths and statistics : chapter 10 : operations research.

Solution of assignment problems (Hungarian Method)

First check whether the number of rows is equal to the numbers of columns, if it is so, the assignment problem is said to be balanced.

Step :1 Choose the least element in each row and subtract it from all the elements of that row.

Step :2 Choose the least element in each column and subtract it from all the elements of that column. Step 2 has to be performed from the table obtained in step 1.

Step:3 Check whether there is atleast one zero in each row and each column and make an assignment as follows.

Step :4 If each row and each column contains exactly one assignment, then the solution is optimal.

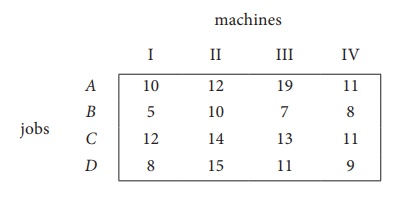

Example 10.7

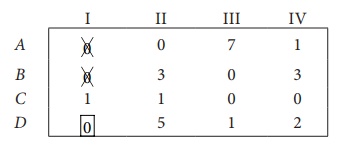

Solve the following assignment problem. Cell values represent cost of assigning job A, B, C and D to the machines I, II, III and IV.

Here the number of rows and columns are equal.

∴ The given assignment problem is balanced. Now let us find the solution.

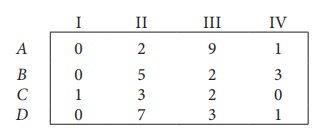

Step 1: Select a smallest element in each row and subtract this from all the elements in its row.

Look for atleast one zero in each row and each column.Otherwise go to step 2.

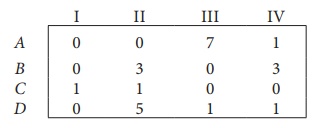

Step 2: Select the smallest element in each column and subtract this from all the elements in its column.

Since each row and column contains atleast one zero, assignments can be made.

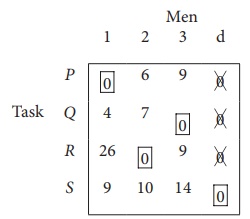

Step 3 (Assignment):

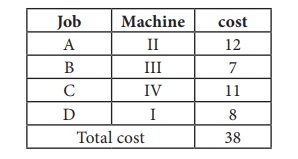

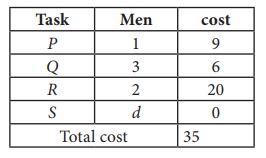

Thus all the four assignments have been made. The optimal assignment schedule and total cost is

The optimal assignment (minimum) cost

Example 10.8

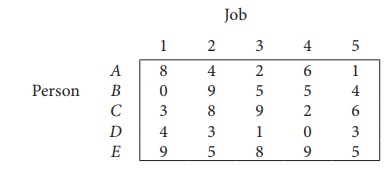

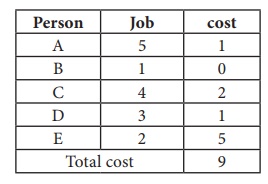

Consider the problem of assigning five jobs to five persons. The assignment costs are given as follows. Determine the optimum assignment schedule.

∴ The given assignment problem is balanced.

Now let us find the solution.

The cost matrix of the given assignment problem is

Column 3 contains no zero. Go to Step 2.

Thus all the five assignments have been made. The Optimal assignment schedule and total cost is

The optimal assignment (minimum) cost = ` 9

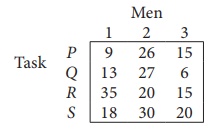

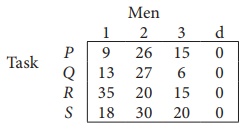

Example 10.9

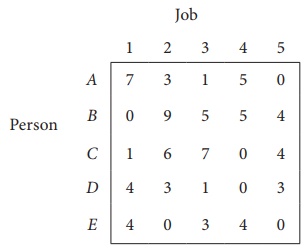

Solve the following assignment problem.

Since the number of columns is less than the number of rows, given assignment problem is unbalanced one. To balance it , introduce a dummy column with all the entries zero. The revised assignment problem is

Here only 3 tasks can be assigned to 3 men.

Step 1: is not necessary, since each row contains zero entry. Go to Step 2.

Step 3 (Assignment) :

Since each row and each columncontains exactly one assignment,all the three men have been assigned a task. But task S is not assigned to any Man. The optimal assignment schedule and total cost is

The optimal assignment (minimum) cost = ₹ 35

Related Topics

Privacy Policy , Terms and Conditions , DMCA Policy and Compliant

Copyright © 2018-2024 BrainKart.com; All Rights Reserved. Developed by Therithal info, Chennai.

Assignment Problem: Linear Programming

The assignment problem is a special type of transportation problem , where the objective is to minimize the cost or time of completing a number of jobs by a number of persons.

In other words, when the problem involves the allocation of n different facilities to n different tasks, it is often termed as an assignment problem.

The model's primary usefulness is for planning. The assignment problem also encompasses an important sub-class of so-called shortest- (or longest-) route models. The assignment model is useful in solving problems such as, assignment of machines to jobs, assignment of salesmen to sales territories, travelling salesman problem, etc.

It may be noted that with n facilities and n jobs, there are n! possible assignments. One way of finding an optimal assignment is to write all the n! possible arrangements, evaluate their total cost, and select the assignment with minimum cost. But, due to heavy computational burden this method is not suitable. This chapter concentrates on an efficient method for solving assignment problems that was developed by a Hungarian mathematician D.Konig.

"A mathematician is a device for turning coffee into theorems." -Paul Erdos

Formulation of an assignment problem

Suppose a company has n persons of different capacities available for performing each different job in the concern, and there are the same number of jobs of different types. One person can be given one and only one job. The objective of this assignment problem is to assign n persons to n jobs, so as to minimize the total assignment cost. The cost matrix for this problem is given below:

The structure of an assignment problem is identical to that of a transportation problem.

To formulate the assignment problem in mathematical programming terms , we define the activity variables as

for i = 1, 2, ..., n and j = 1, 2, ..., n

In the above table, c ij is the cost of performing jth job by ith worker.

Generalized Form of an Assignment Problem

The optimization model is

Minimize c 11 x 11 + c 12 x 12 + ------- + c nn x nn

subject to x i1 + x i2 +..........+ x in = 1 i = 1, 2,......., n x 1j + x 2j +..........+ x nj = 1 j = 1, 2,......., n

x ij = 0 or 1

In Σ Sigma notation

x ij = 0 or 1 for all i and j

An assignment problem can be solved by transportation methods, but due to high degree of degeneracy the usual computational techniques of a transportation problem become very inefficient. Therefore, a special method is available for solving such type of problems in a more efficient way.

Assumptions in Assignment Problem

- Number of jobs is equal to the number of machines or persons.

- Each man or machine is assigned only one job.

- Each man or machine is independently capable of handling any job to be done.

- Assigning criteria is clearly specified (minimizing cost or maximizing profit).

Share this article with your friends

Operations Research Simplified Back Next

Goal programming Linear programming Simplex Method Transportation Problem

35 problem-solving techniques and methods for solving complex problems

Design your next session with SessionLab

Join the 150,000+ facilitators using SessionLab.

Recommended Articles

A step-by-step guide to planning a workshop, how to create an unforgettable training session in 8 simple steps, 47 useful online tools for workshop planning and meeting facilitation.

All teams and organizations encounter challenges as they grow. There are problems that might occur for teams when it comes to miscommunication or resolving business-critical issues . You may face challenges around growth , design , user engagement, and even team culture and happiness. In short, problem-solving techniques should be part of every team’s skillset.

Problem-solving methods are primarily designed to help a group or team through a process of first identifying problems and challenges , ideating possible solutions , and then evaluating the most suitable .

Finding effective solutions to complex problems isn’t easy, but by using the right process and techniques, you can help your team be more efficient in the process.

So how do you develop strategies that are engaging, and empower your team to solve problems effectively?

In this blog post, we share a series of problem-solving tools you can use in your next workshop or team meeting. You’ll also find some tips for facilitating the process and how to enable others to solve complex problems.

Let’s get started!

How do you identify problems?

How do you identify the right solution.

- Tips for more effective problem-solving

Complete problem-solving methods

- Problem-solving techniques to identify and analyze problems

- Problem-solving techniques for developing solutions

Problem-solving warm-up activities

Closing activities for a problem-solving process.

Before you can move towards finding the right solution for a given problem, you first need to identify and define the problem you wish to solve.

Here, you want to clearly articulate what the problem is and allow your group to do the same. Remember that everyone in a group is likely to have differing perspectives and alignment is necessary in order to help the group move forward.

Identifying a problem accurately also requires that all members of a group are able to contribute their views in an open and safe manner. It can be scary for people to stand up and contribute, especially if the problems or challenges are emotive or personal in nature. Be sure to try and create a psychologically safe space for these kinds of discussions.

Remember that problem analysis and further discussion are also important. Not taking the time to fully analyze and discuss a challenge can result in the development of solutions that are not fit for purpose or do not address the underlying issue.

Successfully identifying and then analyzing a problem means facilitating a group through activities designed to help them clearly and honestly articulate their thoughts and produce usable insight.

With this data, you might then produce a problem statement that clearly describes the problem you wish to be addressed and also state the goal of any process you undertake to tackle this issue.

Finding solutions is the end goal of any process. Complex organizational challenges can only be solved with an appropriate solution but discovering them requires using the right problem-solving tool.

After you’ve explored a problem and discussed ideas, you need to help a team discuss and choose the right solution. Consensus tools and methods such as those below help a group explore possible solutions before then voting for the best. They’re a great way to tap into the collective intelligence of the group for great results!

Remember that the process is often iterative. Great problem solvers often roadtest a viable solution in a measured way to see what works too. While you might not get the right solution on your first try, the methods below help teams land on the most likely to succeed solution while also holding space for improvement.

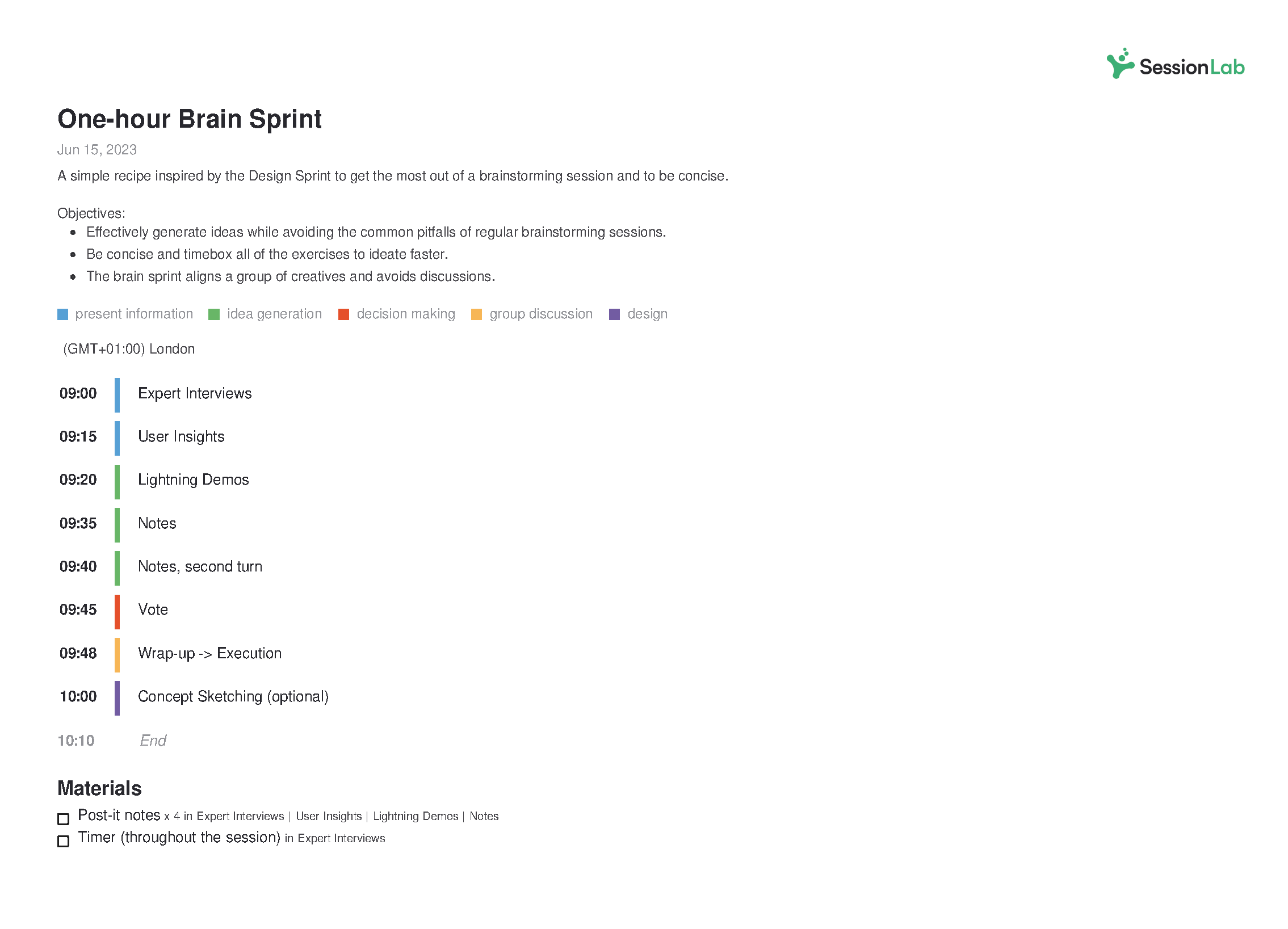

Every effective problem solving process begins with an agenda . A well-structured workshop is one of the best methods for successfully guiding a group from exploring a problem to implementing a solution.

In SessionLab, it’s easy to go from an idea to a complete agenda . Start by dragging and dropping your core problem solving activities into place . Add timings, breaks and necessary materials before sharing your agenda with your colleagues.

The resulting agenda will be your guide to an effective and productive problem solving session that will also help you stay organized on the day!

Tips for more effective problem solving

Problem-solving activities are only one part of the puzzle. While a great method can help unlock your team’s ability to solve problems, without a thoughtful approach and strong facilitation the solutions may not be fit for purpose.

Let’s take a look at some problem-solving tips you can apply to any process to help it be a success!

Clearly define the problem

Jumping straight to solutions can be tempting, though without first clearly articulating a problem, the solution might not be the right one. Many of the problem-solving activities below include sections where the problem is explored and clearly defined before moving on.

This is a vital part of the problem-solving process and taking the time to fully define an issue can save time and effort later. A clear definition helps identify irrelevant information and it also ensures that your team sets off on the right track.

Don’t jump to conclusions

It’s easy for groups to exhibit cognitive bias or have preconceived ideas about both problems and potential solutions. Be sure to back up any problem statements or potential solutions with facts, research, and adequate forethought.

The best techniques ask participants to be methodical and challenge preconceived notions. Make sure you give the group enough time and space to collect relevant information and consider the problem in a new way. By approaching the process with a clear, rational mindset, you’ll often find that better solutions are more forthcoming.

Try different approaches

Problems come in all shapes and sizes and so too should the methods you use to solve them. If you find that one approach isn’t yielding results and your team isn’t finding different solutions, try mixing it up. You’ll be surprised at how using a new creative activity can unblock your team and generate great solutions.

Don’t take it personally

Depending on the nature of your team or organizational problems, it’s easy for conversations to get heated. While it’s good for participants to be engaged in the discussions, ensure that emotions don’t run too high and that blame isn’t thrown around while finding solutions.

You’re all in it together, and even if your team or area is seeing problems, that isn’t necessarily a disparagement of you personally. Using facilitation skills to manage group dynamics is one effective method of helping conversations be more constructive.

Get the right people in the room

Your problem-solving method is often only as effective as the group using it. Getting the right people on the job and managing the number of people present is important too!

If the group is too small, you may not get enough different perspectives to effectively solve a problem. If the group is too large, you can go round and round during the ideation stages.

Creating the right group makeup is also important in ensuring you have the necessary expertise and skillset to both identify and follow up on potential solutions. Carefully consider who to include at each stage to help ensure your problem-solving method is followed and positioned for success.

Document everything

The best solutions can take refinement, iteration, and reflection to come out. Get into a habit of documenting your process in order to keep all the learnings from the session and to allow ideas to mature and develop. Many of the methods below involve the creation of documents or shared resources. Be sure to keep and share these so everyone can benefit from the work done!

Bring a facilitator

Facilitation is all about making group processes easier. With a subject as potentially emotive and important as problem-solving, having an impartial third party in the form of a facilitator can make all the difference in finding great solutions and keeping the process moving. Consider bringing a facilitator to your problem-solving session to get better results and generate meaningful solutions!

Develop your problem-solving skills

It takes time and practice to be an effective problem solver. While some roles or participants might more naturally gravitate towards problem-solving, it can take development and planning to help everyone create better solutions.

You might develop a training program, run a problem-solving workshop or simply ask your team to practice using the techniques below. Check out our post on problem-solving skills to see how you and your group can develop the right mental process and be more resilient to issues too!

Design a great agenda

Workshops are a great format for solving problems. With the right approach, you can focus a group and help them find the solutions to their own problems. But designing a process can be time-consuming and finding the right activities can be difficult.

Check out our workshop planning guide to level-up your agenda design and start running more effective workshops. Need inspiration? Check out templates designed by expert facilitators to help you kickstart your process!

In this section, we’ll look at in-depth problem-solving methods that provide a complete end-to-end process for developing effective solutions. These will help guide your team from the discovery and definition of a problem through to delivering the right solution.

If you’re looking for an all-encompassing method or problem-solving model, these processes are a great place to start. They’ll ask your team to challenge preconceived ideas and adopt a mindset for solving problems more effectively.

- Six Thinking Hats

- Lightning Decision Jam

- Problem Definition Process

- Discovery & Action Dialogue

Design Sprint 2.0

- Open Space Technology

1. Six Thinking Hats

Individual approaches to solving a problem can be very different based on what team or role an individual holds. It can be easy for existing biases or perspectives to find their way into the mix, or for internal politics to direct a conversation.

Six Thinking Hats is a classic method for identifying the problems that need to be solved and enables your team to consider them from different angles, whether that is by focusing on facts and data, creative solutions, or by considering why a particular solution might not work.

Like all problem-solving frameworks, Six Thinking Hats is effective at helping teams remove roadblocks from a conversation or discussion and come to terms with all the aspects necessary to solve complex problems.

2. Lightning Decision Jam

Featured courtesy of Jonathan Courtney of AJ&Smart Berlin, Lightning Decision Jam is one of those strategies that should be in every facilitation toolbox. Exploring problems and finding solutions is often creative in nature, though as with any creative process, there is the potential to lose focus and get lost.

Unstructured discussions might get you there in the end, but it’s much more effective to use a method that creates a clear process and team focus.

In Lightning Decision Jam, participants are invited to begin by writing challenges, concerns, or mistakes on post-its without discussing them before then being invited by the moderator to present them to the group.

From there, the team vote on which problems to solve and are guided through steps that will allow them to reframe those problems, create solutions and then decide what to execute on.

By deciding the problems that need to be solved as a team before moving on, this group process is great for ensuring the whole team is aligned and can take ownership over the next stages.

Lightning Decision Jam (LDJ) #action #decision making #problem solving #issue analysis #innovation #design #remote-friendly The problem with anything that requires creative thinking is that it’s easy to get lost—lose focus and fall into the trap of having useless, open-ended, unstructured discussions. Here’s the most effective solution I’ve found: Replace all open, unstructured discussion with a clear process. What to use this exercise for: Anything which requires a group of people to make decisions, solve problems or discuss challenges. It’s always good to frame an LDJ session with a broad topic, here are some examples: The conversion flow of our checkout Our internal design process How we organise events Keeping up with our competition Improving sales flow

3. Problem Definition Process

While problems can be complex, the problem-solving methods you use to identify and solve those problems can often be simple in design.

By taking the time to truly identify and define a problem before asking the group to reframe the challenge as an opportunity, this method is a great way to enable change.

Begin by identifying a focus question and exploring the ways in which it manifests before splitting into five teams who will each consider the problem using a different method: escape, reversal, exaggeration, distortion or wishful. Teams develop a problem objective and create ideas in line with their method before then feeding them back to the group.

This method is great for enabling in-depth discussions while also creating space for finding creative solutions too!

Problem Definition #problem solving #idea generation #creativity #online #remote-friendly A problem solving technique to define a problem, challenge or opportunity and to generate ideas.

4. The 5 Whys

Sometimes, a group needs to go further with their strategies and analyze the root cause at the heart of organizational issues. An RCA or root cause analysis is the process of identifying what is at the heart of business problems or recurring challenges.

The 5 Whys is a simple and effective method of helping a group go find the root cause of any problem or challenge and conduct analysis that will deliver results.

By beginning with the creation of a problem statement and going through five stages to refine it, The 5 Whys provides everything you need to truly discover the cause of an issue.

The 5 Whys #hyperisland #innovation This simple and powerful method is useful for getting to the core of a problem or challenge. As the title suggests, the group defines a problems, then asks the question “why” five times, often using the resulting explanation as a starting point for creative problem solving.

5. World Cafe

World Cafe is a simple but powerful facilitation technique to help bigger groups to focus their energy and attention on solving complex problems.

World Cafe enables this approach by creating a relaxed atmosphere where participants are able to self-organize and explore topics relevant and important to them which are themed around a central problem-solving purpose. Create the right atmosphere by modeling your space after a cafe and after guiding the group through the method, let them take the lead!

Making problem-solving a part of your organization’s culture in the long term can be a difficult undertaking. More approachable formats like World Cafe can be especially effective in bringing people unfamiliar with workshops into the fold.

World Cafe #hyperisland #innovation #issue analysis World Café is a simple yet powerful method, originated by Juanita Brown, for enabling meaningful conversations driven completely by participants and the topics that are relevant and important to them. Facilitators create a cafe-style space and provide simple guidelines. Participants then self-organize and explore a set of relevant topics or questions for conversation.

6. Discovery & Action Dialogue (DAD)

One of the best approaches is to create a safe space for a group to share and discover practices and behaviors that can help them find their own solutions.

With DAD, you can help a group choose which problems they wish to solve and which approaches they will take to do so. It’s great at helping remove resistance to change and can help get buy-in at every level too!

This process of enabling frontline ownership is great in ensuring follow-through and is one of the methods you will want in your toolbox as a facilitator.

Discovery & Action Dialogue (DAD) #idea generation #liberating structures #action #issue analysis #remote-friendly DADs make it easy for a group or community to discover practices and behaviors that enable some individuals (without access to special resources and facing the same constraints) to find better solutions than their peers to common problems. These are called positive deviant (PD) behaviors and practices. DADs make it possible for people in the group, unit, or community to discover by themselves these PD practices. DADs also create favorable conditions for stimulating participants’ creativity in spaces where they can feel safe to invent new and more effective practices. Resistance to change evaporates as participants are unleashed to choose freely which practices they will adopt or try and which problems they will tackle. DADs make it possible to achieve frontline ownership of solutions.

7. Design Sprint 2.0

Want to see how a team can solve big problems and move forward with prototyping and testing solutions in a few days? The Design Sprint 2.0 template from Jake Knapp, author of Sprint, is a complete agenda for a with proven results.

Developing the right agenda can involve difficult but necessary planning. Ensuring all the correct steps are followed can also be stressful or time-consuming depending on your level of experience.

Use this complete 4-day workshop template if you are finding there is no obvious solution to your challenge and want to focus your team around a specific problem that might require a shortcut to launching a minimum viable product or waiting for the organization-wide implementation of a solution.

8. Open space technology

Open space technology- developed by Harrison Owen – creates a space where large groups are invited to take ownership of their problem solving and lead individual sessions. Open space technology is a great format when you have a great deal of expertise and insight in the room and want to allow for different takes and approaches on a particular theme or problem you need to be solved.

Start by bringing your participants together to align around a central theme and focus their efforts. Explain the ground rules to help guide the problem-solving process and then invite members to identify any issue connecting to the central theme that they are interested in and are prepared to take responsibility for.

Once participants have decided on their approach to the core theme, they write their issue on a piece of paper, announce it to the group, pick a session time and place, and post the paper on the wall. As the wall fills up with sessions, the group is then invited to join the sessions that interest them the most and which they can contribute to, then you’re ready to begin!

Everyone joins the problem-solving group they’ve signed up to, record the discussion and if appropriate, findings can then be shared with the rest of the group afterward.

Open Space Technology #action plan #idea generation #problem solving #issue analysis #large group #online #remote-friendly Open Space is a methodology for large groups to create their agenda discerning important topics for discussion, suitable for conferences, community gatherings and whole system facilitation

Techniques to identify and analyze problems

Using a problem-solving method to help a team identify and analyze a problem can be a quick and effective addition to any workshop or meeting.

While further actions are always necessary, you can generate momentum and alignment easily, and these activities are a great place to get started.

We’ve put together this list of techniques to help you and your team with problem identification, analysis, and discussion that sets the foundation for developing effective solutions.

Let’s take a look!

- The Creativity Dice

- Fishbone Analysis

- Problem Tree

- SWOT Analysis

- Agreement-Certainty Matrix

- The Journalistic Six

- LEGO Challenge

- What, So What, Now What?

- Journalists

Individual and group perspectives are incredibly important, but what happens if people are set in their minds and need a change of perspective in order to approach a problem more effectively?

Flip It is a method we love because it is both simple to understand and run, and allows groups to understand how their perspectives and biases are formed.

Participants in Flip It are first invited to consider concerns, issues, or problems from a perspective of fear and write them on a flip chart. Then, the group is asked to consider those same issues from a perspective of hope and flip their understanding.

No problem and solution is free from existing bias and by changing perspectives with Flip It, you can then develop a problem solving model quickly and effectively.

Flip It! #gamestorming #problem solving #action Often, a change in a problem or situation comes simply from a change in our perspectives. Flip It! is a quick game designed to show players that perspectives are made, not born.

10. The Creativity Dice

One of the most useful problem solving skills you can teach your team is of approaching challenges with creativity, flexibility, and openness. Games like The Creativity Dice allow teams to overcome the potential hurdle of too much linear thinking and approach the process with a sense of fun and speed.

In The Creativity Dice, participants are organized around a topic and roll a dice to determine what they will work on for a period of 3 minutes at a time. They might roll a 3 and work on investigating factual information on the chosen topic. They might roll a 1 and work on identifying the specific goals, standards, or criteria for the session.

Encouraging rapid work and iteration while asking participants to be flexible are great skills to cultivate. Having a stage for idea incubation in this game is also important. Moments of pause can help ensure the ideas that are put forward are the most suitable.

The Creativity Dice #creativity #problem solving #thiagi #issue analysis Too much linear thinking is hazardous to creative problem solving. To be creative, you should approach the problem (or the opportunity) from different points of view. You should leave a thought hanging in mid-air and move to another. This skipping around prevents premature closure and lets your brain incubate one line of thought while you consciously pursue another.

11. Fishbone Analysis

Organizational or team challenges are rarely simple, and it’s important to remember that one problem can be an indication of something that goes deeper and may require further consideration to be solved.

Fishbone Analysis helps groups to dig deeper and understand the origins of a problem. It’s a great example of a root cause analysis method that is simple for everyone on a team to get their head around.

Participants in this activity are asked to annotate a diagram of a fish, first adding the problem or issue to be worked on at the head of a fish before then brainstorming the root causes of the problem and adding them as bones on the fish.

Using abstractions such as a diagram of a fish can really help a team break out of their regular thinking and develop a creative approach.

Fishbone Analysis #problem solving ##root cause analysis #decision making #online facilitation A process to help identify and understand the origins of problems, issues or observations.

12. Problem Tree

Encouraging visual thinking can be an essential part of many strategies. By simply reframing and clarifying problems, a group can move towards developing a problem solving model that works for them.

In Problem Tree, groups are asked to first brainstorm a list of problems – these can be design problems, team problems or larger business problems – and then organize them into a hierarchy. The hierarchy could be from most important to least important or abstract to practical, though the key thing with problem solving games that involve this aspect is that your group has some way of managing and sorting all the issues that are raised.

Once you have a list of problems that need to be solved and have organized them accordingly, you’re then well-positioned for the next problem solving steps.

Problem tree #define intentions #create #design #issue analysis A problem tree is a tool to clarify the hierarchy of problems addressed by the team within a design project; it represents high level problems or related sublevel problems.

13. SWOT Analysis

Chances are you’ve heard of the SWOT Analysis before. This problem-solving method focuses on identifying strengths, weaknesses, opportunities, and threats is a tried and tested method for both individuals and teams.

Start by creating a desired end state or outcome and bare this in mind – any process solving model is made more effective by knowing what you are moving towards. Create a quadrant made up of the four categories of a SWOT analysis and ask participants to generate ideas based on each of those quadrants.

Once you have those ideas assembled in their quadrants, cluster them together based on their affinity with other ideas. These clusters are then used to facilitate group conversations and move things forward.

SWOT analysis #gamestorming #problem solving #action #meeting facilitation The SWOT Analysis is a long-standing technique of looking at what we have, with respect to the desired end state, as well as what we could improve on. It gives us an opportunity to gauge approaching opportunities and dangers, and assess the seriousness of the conditions that affect our future. When we understand those conditions, we can influence what comes next.

14. Agreement-Certainty Matrix

Not every problem-solving approach is right for every challenge, and deciding on the right method for the challenge at hand is a key part of being an effective team.

The Agreement Certainty matrix helps teams align on the nature of the challenges facing them. By sorting problems from simple to chaotic, your team can understand what methods are suitable for each problem and what they can do to ensure effective results.

If you are already using Liberating Structures techniques as part of your problem-solving strategy, the Agreement-Certainty Matrix can be an invaluable addition to your process. We’ve found it particularly if you are having issues with recurring problems in your organization and want to go deeper in understanding the root cause.

Agreement-Certainty Matrix #issue analysis #liberating structures #problem solving You can help individuals or groups avoid the frequent mistake of trying to solve a problem with methods that are not adapted to the nature of their challenge. The combination of two questions makes it possible to easily sort challenges into four categories: simple, complicated, complex , and chaotic . A problem is simple when it can be solved reliably with practices that are easy to duplicate. It is complicated when experts are required to devise a sophisticated solution that will yield the desired results predictably. A problem is complex when there are several valid ways to proceed but outcomes are not predictable in detail. Chaotic is when the context is too turbulent to identify a path forward. A loose analogy may be used to describe these differences: simple is like following a recipe, complicated like sending a rocket to the moon, complex like raising a child, and chaotic is like the game “Pin the Tail on the Donkey.” The Liberating Structures Matching Matrix in Chapter 5 can be used as the first step to clarify the nature of a challenge and avoid the mismatches between problems and solutions that are frequently at the root of chronic, recurring problems.

Organizing and charting a team’s progress can be important in ensuring its success. SQUID (Sequential Question and Insight Diagram) is a great model that allows a team to effectively switch between giving questions and answers and develop the skills they need to stay on track throughout the process.

Begin with two different colored sticky notes – one for questions and one for answers – and with your central topic (the head of the squid) on the board. Ask the group to first come up with a series of questions connected to their best guess of how to approach the topic. Ask the group to come up with answers to those questions, fix them to the board and connect them with a line. After some discussion, go back to question mode by responding to the generated answers or other points on the board.

It’s rewarding to see a diagram grow throughout the exercise, and a completed SQUID can provide a visual resource for future effort and as an example for other teams.

SQUID #gamestorming #project planning #issue analysis #problem solving When exploring an information space, it’s important for a group to know where they are at any given time. By using SQUID, a group charts out the territory as they go and can navigate accordingly. SQUID stands for Sequential Question and Insight Diagram.

16. Speed Boat

To continue with our nautical theme, Speed Boat is a short and sweet activity that can help a team quickly identify what employees, clients or service users might have a problem with and analyze what might be standing in the way of achieving a solution.

Methods that allow for a group to make observations, have insights and obtain those eureka moments quickly are invaluable when trying to solve complex problems.

In Speed Boat, the approach is to first consider what anchors and challenges might be holding an organization (or boat) back. Bonus points if you are able to identify any sharks in the water and develop ideas that can also deal with competitors!

Speed Boat #gamestorming #problem solving #action Speedboat is a short and sweet way to identify what your employees or clients don’t like about your product/service or what’s standing in the way of a desired goal.

17. The Journalistic Six

Some of the most effective ways of solving problems is by encouraging teams to be more inclusive and diverse in their thinking.

Based on the six key questions journalism students are taught to answer in articles and news stories, The Journalistic Six helps create teams to see the whole picture. By using who, what, when, where, why, and how to facilitate the conversation and encourage creative thinking, your team can make sure that the problem identification and problem analysis stages of the are covered exhaustively and thoughtfully. Reporter’s notebook and dictaphone optional.

The Journalistic Six – Who What When Where Why How #idea generation #issue analysis #problem solving #online #creative thinking #remote-friendly A questioning method for generating, explaining, investigating ideas.

18. LEGO Challenge

Now for an activity that is a little out of the (toy) box. LEGO Serious Play is a facilitation methodology that can be used to improve creative thinking and problem-solving skills.

The LEGO Challenge includes giving each member of the team an assignment that is hidden from the rest of the group while they create a structure without speaking.

What the LEGO challenge brings to the table is a fun working example of working with stakeholders who might not be on the same page to solve problems. Also, it’s LEGO! Who doesn’t love LEGO!

LEGO Challenge #hyperisland #team A team-building activity in which groups must work together to build a structure out of LEGO, but each individual has a secret “assignment” which makes the collaborative process more challenging. It emphasizes group communication, leadership dynamics, conflict, cooperation, patience and problem solving strategy.

19. What, So What, Now What?

If not carefully managed, the problem identification and problem analysis stages of the problem-solving process can actually create more problems and misunderstandings.

The What, So What, Now What? problem-solving activity is designed to help collect insights and move forward while also eliminating the possibility of disagreement when it comes to identifying, clarifying, and analyzing organizational or work problems.

Facilitation is all about bringing groups together so that might work on a shared goal and the best problem-solving strategies ensure that teams are aligned in purpose, if not initially in opinion or insight.

Throughout the three steps of this game, you give everyone on a team to reflect on a problem by asking what happened, why it is important, and what actions should then be taken.

This can be a great activity for bringing our individual perceptions about a problem or challenge and contextualizing it in a larger group setting. This is one of the most important problem-solving skills you can bring to your organization.

W³ – What, So What, Now What? #issue analysis #innovation #liberating structures You can help groups reflect on a shared experience in a way that builds understanding and spurs coordinated action while avoiding unproductive conflict. It is possible for every voice to be heard while simultaneously sifting for insights and shaping new direction. Progressing in stages makes this practical—from collecting facts about What Happened to making sense of these facts with So What and finally to what actions logically follow with Now What . The shared progression eliminates most of the misunderstandings that otherwise fuel disagreements about what to do. Voila!

20. Journalists

Problem analysis can be one of the most important and decisive stages of all problem-solving tools. Sometimes, a team can become bogged down in the details and are unable to move forward.

Journalists is an activity that can avoid a group from getting stuck in the problem identification or problem analysis stages of the process.

In Journalists, the group is invited to draft the front page of a fictional newspaper and figure out what stories deserve to be on the cover and what headlines those stories will have. By reframing how your problems and challenges are approached, you can help a team move productively through the process and be better prepared for the steps to follow.

Journalists #vision #big picture #issue analysis #remote-friendly This is an exercise to use when the group gets stuck in details and struggles to see the big picture. Also good for defining a vision.

Problem-solving techniques for developing solutions

The success of any problem-solving process can be measured by the solutions it produces. After you’ve defined the issue, explored existing ideas, and ideated, it’s time to narrow down to the correct solution.

Use these problem-solving techniques when you want to help your team find consensus, compare possible solutions, and move towards taking action on a particular problem.

- Improved Solutions

- Four-Step Sketch

- 15% Solutions

- How-Now-Wow matrix

- Impact Effort Matrix

21. Mindspin

Brainstorming is part of the bread and butter of the problem-solving process and all problem-solving strategies benefit from getting ideas out and challenging a team to generate solutions quickly.

With Mindspin, participants are encouraged not only to generate ideas but to do so under time constraints and by slamming down cards and passing them on. By doing multiple rounds, your team can begin with a free generation of possible solutions before moving on to developing those solutions and encouraging further ideation.

This is one of our favorite problem-solving activities and can be great for keeping the energy up throughout the workshop. Remember the importance of helping people become engaged in the process – energizing problem-solving techniques like Mindspin can help ensure your team stays engaged and happy, even when the problems they’re coming together to solve are complex.

MindSpin #teampedia #idea generation #problem solving #action A fast and loud method to enhance brainstorming within a team. Since this activity has more than round ideas that are repetitive can be ruled out leaving more creative and innovative answers to the challenge.

22. Improved Solutions

After a team has successfully identified a problem and come up with a few solutions, it can be tempting to call the work of the problem-solving process complete. That said, the first solution is not necessarily the best, and by including a further review and reflection activity into your problem-solving model, you can ensure your group reaches the best possible result.

One of a number of problem-solving games from Thiagi Group, Improved Solutions helps you go the extra mile and develop suggested solutions with close consideration and peer review. By supporting the discussion of several problems at once and by shifting team roles throughout, this problem-solving technique is a dynamic way of finding the best solution.

Improved Solutions #creativity #thiagi #problem solving #action #team You can improve any solution by objectively reviewing its strengths and weaknesses and making suitable adjustments. In this creativity framegame, you improve the solutions to several problems. To maintain objective detachment, you deal with a different problem during each of six rounds and assume different roles (problem owner, consultant, basher, booster, enhancer, and evaluator) during each round. At the conclusion of the activity, each player ends up with two solutions to her problem.

23. Four Step Sketch

Creative thinking and visual ideation does not need to be confined to the opening stages of your problem-solving strategies. Exercises that include sketching and prototyping on paper can be effective at the solution finding and development stage of the process, and can be great for keeping a team engaged.

By going from simple notes to a crazy 8s round that involves rapidly sketching 8 variations on their ideas before then producing a final solution sketch, the group is able to iterate quickly and visually. Problem-solving techniques like Four-Step Sketch are great if you have a group of different thinkers and want to change things up from a more textual or discussion-based approach.

Four-Step Sketch #design sprint #innovation #idea generation #remote-friendly The four-step sketch is an exercise that helps people to create well-formed concepts through a structured process that includes: Review key information Start design work on paper, Consider multiple variations , Create a detailed solution . This exercise is preceded by a set of other activities allowing the group to clarify the challenge they want to solve. See how the Four Step Sketch exercise fits into a Design Sprint

24. 15% Solutions

Some problems are simpler than others and with the right problem-solving activities, you can empower people to take immediate actions that can help create organizational change.

Part of the liberating structures toolkit, 15% solutions is a problem-solving technique that focuses on finding and implementing solutions quickly. A process of iterating and making small changes quickly can help generate momentum and an appetite for solving complex problems.

Problem-solving strategies can live and die on whether people are onboard. Getting some quick wins is a great way of getting people behind the process.

It can be extremely empowering for a team to realize that problem-solving techniques can be deployed quickly and easily and delineate between things they can positively impact and those things they cannot change.

15% Solutions #action #liberating structures #remote-friendly You can reveal the actions, however small, that everyone can do immediately. At a minimum, these will create momentum, and that may make a BIG difference. 15% Solutions show that there is no reason to wait around, feel powerless, or fearful. They help people pick it up a level. They get individuals and the group to focus on what is within their discretion instead of what they cannot change. With a very simple question, you can flip the conversation to what can be done and find solutions to big problems that are often distributed widely in places not known in advance. Shifting a few grains of sand may trigger a landslide and change the whole landscape.

25. How-Now-Wow Matrix

The problem-solving process is often creative, as complex problems usually require a change of thinking and creative response in order to find the best solutions. While it’s common for the first stages to encourage creative thinking, groups can often gravitate to familiar solutions when it comes to the end of the process.

When selecting solutions, you don’t want to lose your creative energy! The How-Now-Wow Matrix from Gamestorming is a great problem-solving activity that enables a group to stay creative and think out of the box when it comes to selecting the right solution for a given problem.

Problem-solving techniques that encourage creative thinking and the ideation and selection of new solutions can be the most effective in organisational change. Give the How-Now-Wow Matrix a go, and not just for how pleasant it is to say out loud.

How-Now-Wow Matrix #gamestorming #idea generation #remote-friendly When people want to develop new ideas, they most often think out of the box in the brainstorming or divergent phase. However, when it comes to convergence, people often end up picking ideas that are most familiar to them. This is called a ‘creative paradox’ or a ‘creadox’. The How-Now-Wow matrix is an idea selection tool that breaks the creadox by forcing people to weigh each idea on 2 parameters.

26. Impact and Effort Matrix

All problem-solving techniques hope to not only find solutions to a given problem or challenge but to find the best solution. When it comes to finding a solution, groups are invited to put on their decision-making hats and really think about how a proposed idea would work in practice.

The Impact and Effort Matrix is one of the problem-solving techniques that fall into this camp, empowering participants to first generate ideas and then categorize them into a 2×2 matrix based on impact and effort.

Activities that invite critical thinking while remaining simple are invaluable. Use the Impact and Effort Matrix to move from ideation and towards evaluating potential solutions before then committing to them.

Impact and Effort Matrix #gamestorming #decision making #action #remote-friendly In this decision-making exercise, possible actions are mapped based on two factors: effort required to implement and potential impact. Categorizing ideas along these lines is a useful technique in decision making, as it obliges contributors to balance and evaluate suggested actions before committing to them.

27. Dotmocracy

If you’ve followed each of the problem-solving steps with your group successfully, you should move towards the end of your process with heaps of possible solutions developed with a specific problem in mind. But how do you help a group go from ideation to putting a solution into action?

Dotmocracy – or Dot Voting -is a tried and tested method of helping a team in the problem-solving process make decisions and put actions in place with a degree of oversight and consensus.

One of the problem-solving techniques that should be in every facilitator’s toolbox, Dot Voting is fast and effective and can help identify the most popular and best solutions and help bring a group to a decision effectively.

Dotmocracy #action #decision making #group prioritization #hyperisland #remote-friendly Dotmocracy is a simple method for group prioritization or decision-making. It is not an activity on its own, but a method to use in processes where prioritization or decision-making is the aim. The method supports a group to quickly see which options are most popular or relevant. The options or ideas are written on post-its and stuck up on a wall for the whole group to see. Each person votes for the options they think are the strongest, and that information is used to inform a decision.

All facilitators know that warm-ups and icebreakers are useful for any workshop or group process. Problem-solving workshops are no different.

Use these problem-solving techniques to warm up a group and prepare them for the rest of the process. Activating your group by tapping into some of the top problem-solving skills can be one of the best ways to see great outcomes from your session.

- Check-in/Check-out

- Doodling Together

- Show and Tell

- Constellations

- Draw a Tree

28. Check-in / Check-out

Solid processes are planned from beginning to end, and the best facilitators know that setting the tone and establishing a safe, open environment can be integral to a successful problem-solving process.

Check-in / Check-out is a great way to begin and/or bookend a problem-solving workshop. Checking in to a session emphasizes that everyone will be seen, heard, and expected to contribute.

If you are running a series of meetings, setting a consistent pattern of checking in and checking out can really help your team get into a groove. We recommend this opening-closing activity for small to medium-sized groups though it can work with large groups if they’re disciplined!

Check-in / Check-out #team #opening #closing #hyperisland #remote-friendly Either checking-in or checking-out is a simple way for a team to open or close a process, symbolically and in a collaborative way. Checking-in/out invites each member in a group to be present, seen and heard, and to express a reflection or a feeling. Checking-in emphasizes presence, focus and group commitment; checking-out emphasizes reflection and symbolic closure.

29. Doodling Together

Thinking creatively and not being afraid to make suggestions are important problem-solving skills for any group or team, and warming up by encouraging these behaviors is a great way to start.

Doodling Together is one of our favorite creative ice breaker games – it’s quick, effective, and fun and can make all following problem-solving steps easier by encouraging a group to collaborate visually. By passing cards and adding additional items as they go, the workshop group gets into a groove of co-creation and idea development that is crucial to finding solutions to problems.

Doodling Together #collaboration #creativity #teamwork #fun #team #visual methods #energiser #icebreaker #remote-friendly Create wild, weird and often funny postcards together & establish a group’s creative confidence.

30. Show and Tell

You might remember some version of Show and Tell from being a kid in school and it’s a great problem-solving activity to kick off a session.

Asking participants to prepare a little something before a workshop by bringing an object for show and tell can help them warm up before the session has even begun! Games that include a physical object can also help encourage early engagement before moving onto more big-picture thinking.

By asking your participants to tell stories about why they chose to bring a particular item to the group, you can help teams see things from new perspectives and see both differences and similarities in the way they approach a topic. Great groundwork for approaching a problem-solving process as a team!

Show and Tell #gamestorming #action #opening #meeting facilitation Show and Tell taps into the power of metaphors to reveal players’ underlying assumptions and associations around a topic The aim of the game is to get a deeper understanding of stakeholders’ perspectives on anything—a new project, an organizational restructuring, a shift in the company’s vision or team dynamic.

31. Constellations

Who doesn’t love stars? Constellations is a great warm-up activity for any workshop as it gets people up off their feet, energized, and ready to engage in new ways with established topics. It’s also great for showing existing beliefs, biases, and patterns that can come into play as part of your session.

Using warm-up games that help build trust and connection while also allowing for non-verbal responses can be great for easing people into the problem-solving process and encouraging engagement from everyone in the group. Constellations is great in large spaces that allow for movement and is definitely a practical exercise to allow the group to see patterns that are otherwise invisible.

Constellations #trust #connection #opening #coaching #patterns #system Individuals express their response to a statement or idea by standing closer or further from a central object. Used with teams to reveal system, hidden patterns, perspectives.

32. Draw a Tree

Problem-solving games that help raise group awareness through a central, unifying metaphor can be effective ways to warm-up a group in any problem-solving model.

Draw a Tree is a simple warm-up activity you can use in any group and which can provide a quick jolt of energy. Start by asking your participants to draw a tree in just 45 seconds – they can choose whether it will be abstract or realistic.

Once the timer is up, ask the group how many people included the roots of the tree and use this as a means to discuss how we can ignore important parts of any system simply because they are not visible.

All problem-solving strategies are made more effective by thinking of problems critically and by exposing things that may not normally come to light. Warm-up games like Draw a Tree are great in that they quickly demonstrate some key problem-solving skills in an accessible and effective way.

Draw a Tree #thiagi #opening #perspectives #remote-friendly With this game you can raise awarness about being more mindful, and aware of the environment we live in.

Each step of the problem-solving workshop benefits from an intelligent deployment of activities, games, and techniques. Bringing your session to an effective close helps ensure that solutions are followed through on and that you also celebrate what has been achieved.

Here are some problem-solving activities you can use to effectively close a workshop or meeting and ensure the great work you’ve done can continue afterward.

- One Breath Feedback

- Who What When Matrix

- Response Cards

How do I conclude a problem-solving process?

All good things must come to an end. With the bulk of the work done, it can be tempting to conclude your workshop swiftly and without a moment to debrief and align. This can be problematic in that it doesn’t allow your team to fully process the results or reflect on the process.

At the end of an effective session, your team will have gone through a process that, while productive, can be exhausting. It’s important to give your group a moment to take a breath, ensure that they are clear on future actions, and provide short feedback before leaving the space.

The primary purpose of any problem-solving method is to generate solutions and then implement them. Be sure to take the opportunity to ensure everyone is aligned and ready to effectively implement the solutions you produced in the workshop.

Remember that every process can be improved and by giving a short moment to collect feedback in the session, you can further refine your problem-solving methods and see further success in the future too.

33. One Breath Feedback

Maintaining attention and focus during the closing stages of a problem-solving workshop can be tricky and so being concise when giving feedback can be important. It’s easy to incur “death by feedback” should some team members go on for too long sharing their perspectives in a quick feedback round.

One Breath Feedback is a great closing activity for workshops. You give everyone an opportunity to provide feedback on what they’ve done but only in the space of a single breath. This keeps feedback short and to the point and means that everyone is encouraged to provide the most important piece of feedback to them.

One breath feedback #closing #feedback #action This is a feedback round in just one breath that excels in maintaining attention: each participants is able to speak during just one breath … for most people that’s around 20 to 25 seconds … unless of course you’ve been a deep sea diver in which case you’ll be able to do it for longer.

34. Who What When Matrix

Matrices feature as part of many effective problem-solving strategies and with good reason. They are easily recognizable, simple to use, and generate results.

The Who What When Matrix is a great tool to use when closing your problem-solving session by attributing a who, what and when to the actions and solutions you have decided upon. The resulting matrix is a simple, easy-to-follow way of ensuring your team can move forward.

Great solutions can’t be enacted without action and ownership. Your problem-solving process should include a stage for allocating tasks to individuals or teams and creating a realistic timeframe for those solutions to be implemented or checked out. Use this method to keep the solution implementation process clear and simple for all involved.

Who/What/When Matrix #gamestorming #action #project planning With Who/What/When matrix, you can connect people with clear actions they have defined and have committed to.

35. Response cards

Group discussion can comprise the bulk of most problem-solving activities and by the end of the process, you might find that your team is talked out!

Providing a means for your team to give feedback with short written notes can ensure everyone is head and can contribute without the need to stand up and talk. Depending on the needs of the group, giving an alternative can help ensure everyone can contribute to your problem-solving model in the way that makes the most sense for them.

Response Cards is a great way to close a workshop if you are looking for a gentle warm-down and want to get some swift discussion around some of the feedback that is raised.

Response Cards #debriefing #closing #structured sharing #questions and answers #thiagi #action It can be hard to involve everyone during a closing of a session. Some might stay in the background or get unheard because of louder participants. However, with the use of Response Cards, everyone will be involved in providing feedback or clarify questions at the end of a session.

Save time and effort discovering the right solutions

A structured problem solving process is a surefire way of solving tough problems, discovering creative solutions and driving organizational change. But how can you design for successful outcomes?

With SessionLab, it’s easy to design engaging workshops that deliver results. Drag, drop and reorder blocks to build your agenda. When you make changes or update your agenda, your session timing adjusts automatically , saving you time on manual adjustments.

Collaborating with stakeholders or clients? Share your agenda with a single click and collaborate in real-time. No more sending documents back and forth over email.

Explore how to use SessionLab to design effective problem solving workshops or watch this five minute video to see the planner in action!

Over to you

The problem-solving process can often be as complicated and multifaceted as the problems they are set-up to solve. With the right problem-solving techniques and a mix of creative exercises designed to guide discussion and generate purposeful ideas, we hope we’ve given you the tools to find the best solutions as simply and easily as possible.

Is there a problem-solving technique that you are missing here? Do you have a favorite activity or method you use when facilitating? Let us know in the comments below, we’d love to hear from you!

thank you very much for these excellent techniques

Certainly wonderful article, very detailed. Shared!

Your list of techniques for problem solving can be helpfully extended by adding TRIZ to the list of techniques. TRIZ has 40 problem solving techniques derived from methods inventros and patent holders used to get new patents. About 10-12 are general approaches. many organization sponsor classes in TRIZ that are used to solve business problems or general organiztational problems. You can take a look at TRIZ and dwonload a free internet booklet to see if you feel it shound be included per your selection process.

Leave a Comment Cancel reply

Your email address will not be published. Required fields are marked *

Going from a mere idea to a workshop that delivers results for your clients can feel like a daunting task. In this piece, we will shine a light on all the work behind the scenes and help you learn how to plan a workshop from start to finish. On a good day, facilitation can feel like effortless magic, but that is mostly the result of backstage work, foresight, and a lot of careful planning. Read on to learn a step-by-step approach to breaking the process of planning a workshop into small, manageable chunks. The flow starts with the first meeting with a client to define the purposes of a workshop.…

How does learning work? A clever 9-year-old once told me: “I know I am learning something new when I am surprised.” The science of adult learning tells us that, in order to learn new skills (which, unsurprisingly, is harder for adults to do than kids) grown-ups need to first get into a specific headspace. In a business, this approach is often employed in a training session where employees learn new skills or work on professional development. But how do you ensure your training is effective? In this guide, we'll explore how to create an effective training session plan and run engaging training sessions. As team leader, project manager, or consultant,…

Effective online tools are a necessity for smooth and engaging virtual workshops and meetings. But how do you choose the right ones? Do you sometimes feel that the good old pen and paper or MS Office toolkit and email leaves you struggling to stay on top of managing and delivering your workshop? Fortunately, there are plenty of online tools to make your life easier when you need to facilitate a meeting and lead workshops. In this post, we’ll share our favorite online tools you can use to make your job as a facilitator easier. In fact, there are plenty of free online workshop tools and meeting facilitation software you can…

Design your next workshop with SessionLab

Join the 150,000 facilitators using SessionLab

Sign up for free

- School Guide

- Mathematics

- Number System and Arithmetic

- Trigonometry

- Probability

- Mensuration

- Maths Formulas

- Class 8 Maths Notes

- Class 9 Maths Notes

- Class 10 Maths Notes

- Class 11 Maths Notes