- Review Article

- Open access

- Published: 11 January 2023

The effectiveness of collaborative problem solving in promoting students’ critical thinking: A meta-analysis based on empirical literature

- Enwei Xu ORCID: orcid.org/0000-0001-6424-8169 1 ,

- Wei Wang 1 &

- Qingxia Wang 1

Humanities and Social Sciences Communications, volume 10, Article number: 16 (2023)

- Science, technology and society

Collaborative problem-solving has been widely embraced in the classroom instruction of critical thinking, which is regarded as the core of curriculum reform based on key competencies in the field of education as well as a key competence for learners in the 21st century. However, the effectiveness of collaborative problem-solving in promoting students’ critical thinking remains uncertain. This research presents the major findings of a meta-analysis of 36 studies published in international educational periodicals during the 21st century to identify the effectiveness of collaborative problem-solving in promoting students’ critical thinking and to determine, based on evidence, whether and to what extent collaborative problem-solving can result in a rise or decrease in critical thinking. The findings show that (1) collaborative problem-solving is an effective teaching approach to foster students’ critical thinking, with a significant overall effect size (ES = 0.82, z = 12.78, P < 0.01, 95% CI [0.69, 0.95]); (2) with respect to the dimensions of critical thinking, collaborative problem-solving can significantly enhance students’ attitudinal tendencies (ES = 1.17, z = 7.62, P < 0.01, 95% CI [0.87, 1.47]); nevertheless, it is less effective at improving students’ cognitive skills, having only an upper-middle impact (ES = 0.70, z = 11.55, P < 0.01, 95% CI [0.58, 0.82]); and (3) the teaching type (χ² = 7.20, P < 0.05), intervention duration (χ² = 12.18, P < 0.01), subject area (χ² = 13.36, P < 0.05), group size (χ² = 8.77, P < 0.05), and learning scaffold (χ² = 9.03, P < 0.01) all have an impact on critical thinking, and they can be viewed as important moderating factors that affect how critical thinking develops. On the basis of these results, recommendations are made for further study and instruction to better support students’ critical thinking in the context of collaborative problem-solving.

Introduction

Although critical thinking has a long history in research, the concept of critical thinking, which is regarded as an essential competence for learners in the 21st century, has recently attracted more attention from researchers and teaching practitioners (National Research Council, 2012 ). Critical thinking should be the core of curriculum reform based on key competencies in the field of education (Peng and Deng, 2017 ) because students with critical thinking can not only understand the meaning of knowledge but also effectively solve practical problems in real life even after knowledge is forgotten (Kek and Huijser, 2011 ). The definition of critical thinking is not universal (Ennis, 1989 ; Castle, 2009 ; Niu et al., 2013 ). In general, the definition of critical thinking is a self-aware and self-regulated thought process (Facione, 1990 ; Niu et al., 2013 ). It refers to the cognitive skills needed to interpret, analyze, synthesize, reason, and evaluate information as well as the attitudinal tendency to apply these abilities (Halpern, 2001 ). The view that critical thinking can be taught and learned through curriculum teaching has been widely supported by many researchers (e.g., Kuncel, 2011 ; Leng and Lu, 2020 ), leading to educators’ efforts to foster it among students. In the field of teaching practice, there are three types of courses for teaching critical thinking (Ennis, 1989 ). The first is an independent curriculum in which critical thinking is taught and cultivated without involving the knowledge of specific disciplines; the second is an integrated curriculum in which critical thinking is integrated into the teaching of other disciplines as a clear teaching goal; and the third is a mixed curriculum in which critical thinking is taught in parallel to the teaching of other disciplines for mixed teaching training. 
Furthermore, numerous measuring tools have been developed by researchers and educators to measure critical thinking in the context of teaching practice. These include standardized measurement tools, such as WGCTA, CCTST, CCTT, and CCTDI, which have been verified by repeated experiments and are considered effective and reliable by international scholars (Facione and Facione, 1992 ). In short, descriptions of critical thinking, including its two dimensions of attitudinal tendency and cognitive skills, different types of teaching courses, and standardized measurement tools provide a complex normative framework for understanding, teaching, and evaluating critical thinking.

Cultivating critical thinking in curriculum teaching can start with a problem, and one of the most popular critical thinking instructional approaches is problem-based learning (Liu et al., 2020 ). Duch et al. ( 2001 ) noted that problem-based learning in group collaboration is progressive active learning, which can improve students’ critical thinking and problem-solving skills. Collaborative problem-solving is the organic integration of collaborative learning and problem-based learning, which takes learners as the center of the learning process and uses problems with poor structure in real-world situations as the starting point for the learning process (Liang et al., 2017 ). Students learn the knowledge needed to solve problems in a collaborative group, reach a consensus on problems in the field, and form solutions through social cooperation methods, such as dialogue, interpretation, questioning, debate, negotiation, and reflection, thus promoting the development of learners’ domain knowledge and critical thinking (Cindy, 2004 ; Liang et al., 2017 ).

Collaborative problem-solving has been widely used in the teaching practice of critical thinking, and several studies have attempted to conduct a systematic review and meta-analysis of the empirical literature on critical thinking from various perspectives. However, little attention has been paid to the impact of collaborative problem-solving on critical thinking. As a result, how best to implement critical thinking instruction within collaborative problem-solving remains largely unexplored, which leaves many teachers without clear guidance on teaching critical thinking (Leng and Lu, 2020; Niu et al., 2013). For example, Huber (2016) provided the meta-analysis findings of 71 publications on gaining critical thinking over various time frames in college with the aim of determining whether critical thinking was truly teachable. The analysis found that learners significantly improve their critical thinking while in college and that critical thinking differs with factors such as teaching strategies, intervention duration, subject area, and teaching type. However, that study did not determine the usefulness of collaborative problem-solving in fostering students’ critical thinking, nor did it reveal whether there were significant variations among the different factors. A meta-analysis of 31 pieces of educational literature was conducted by Liu et al. (2020) to assess the impact of problem-solving on college students’ critical thinking. These authors found that problem-solving could promote the development of critical thinking among college students and proposed establishing a reasonable group structure for problem-solving in a follow-up study to improve students’ critical thinking.
Additionally, previous empirical studies have reached inconclusive and even contradictory conclusions about whether and to what extent collaborative problem-solving increases or decreases critical thinking levels. As an illustration, Yang et al. ( 2008 ) carried out an experiment on the integrated curriculum teaching of college students based on a web bulletin board with the goal of fostering participants’ critical thinking in the context of collaborative problem-solving. These authors’ research revealed that through sharing, debating, examining, and reflecting on various experiences and ideas, collaborative problem-solving can considerably enhance students’ critical thinking in real-life problem situations. In contrast, collaborative problem-solving had a positive impact on learners’ interaction and could improve learning interest and motivation but could not significantly improve students’ critical thinking when compared to traditional classroom teaching, according to research by Naber and Wyatt ( 2014 ) and Sendag and Odabasi ( 2009 ) on undergraduate and high school students, respectively.

The above studies show that there is inconsistency regarding the effectiveness of collaborative problem-solving in promoting students’ critical thinking. Therefore, it is essential to conduct a thorough and trustworthy review to determine whether and to what degree collaborative problem-solving can result in a rise or decrease in critical thinking. Meta-analysis is a quantitative analysis approach that is used to examine quantitative data from various separate studies that are all focused on the same research topic. This approach characterizes the effectiveness of an intervention by averaging the effect sizes of numerous quantitative studies in an effort to reduce the uncertainty brought on by independent research and produce more conclusive findings (Lipsey and Wilson, 2001).

This paper used a meta-analytic approach and carried out a meta-analysis to examine the effectiveness of collaborative problem-solving in promoting students’ critical thinking in order to make a contribution to both research and practice. The following research questions were addressed by this meta-analysis:

What is the overall effect size of collaborative problem-solving in promoting students’ critical thinking and its impact on the two dimensions of critical thinking (i.e., attitudinal tendency and cognitive skills)?

If the effects across the experimental designs of the included studies are heterogeneous, how do the various moderating variables account for the disparities between the study conclusions?

This research followed the strict procedures (e.g., database searching, identification, screening, eligibility, merging, duplicate removal, and analysis of included studies) of Cooper’s ( 2010 ) proposed meta-analysis approach for examining quantitative data from various separate studies that are all focused on the same research topic. The relevant empirical research that appeared in worldwide educational periodicals within the 21st century was subjected to this meta-analysis using Rev-Man 5.4. The consistency of the data extracted separately by two researchers was tested using Cohen’s kappa coefficient, and a publication bias test and a heterogeneity test were run on the sample data to ascertain the quality of this meta-analysis.

Data sources and search strategies

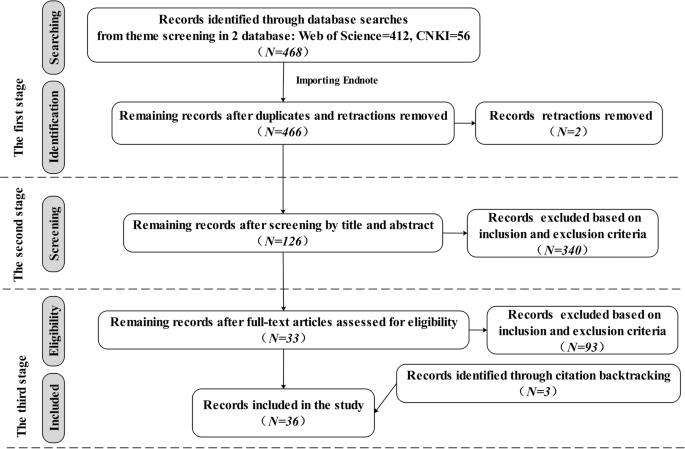

There were three stages to the data collection process for this meta-analysis, as shown in Fig. 1 , which shows the number of articles included and eliminated during the selection process based on the statement and study eligibility criteria.

This flowchart shows the number of records identified, included and excluded in the article.

First, the databases used to systematically search for relevant articles were the journal papers of the Web of Science Core Collection and the Chinese Core source journal, as well as the Chinese Social Science Citation Index (CSSCI) source journal papers included in CNKI. These databases were selected because they are credible platforms that are sources of scholarly and peer-reviewed information with advanced search tools and contain literature relevant to the subject of our topic from reliable researchers and experts. The search string with the Boolean operator used in the Web of Science was “TS = (((“critical thinking” or “ct” and “pretest” or “posttest”) or (“critical thinking” or “ct” and “control group” or “quasi experiment” or “experiment”)) and (“collaboration” or “collaborative learning” or “CSCL”) and (“problem solving” or “problem-based learning” or “PBL”))”. The research area was “Education Educational Research”, and the search period was “January 1, 2000, to December 30, 2021”. A total of 412 papers were obtained. The search string with the Boolean operator used in the CNKI was “SU = (‘critical thinking’*‘collaboration’ + ‘critical thinking’*‘collaborative learning’ + ‘critical thinking’*‘CSCL’ + ‘critical thinking’*‘problem solving’ + ‘critical thinking’*‘problem-based learning’ + ‘critical thinking’*‘PBL’ + ‘critical thinking’*‘problem oriented’) AND FT = (‘experiment’ + ‘quasi experiment’ + ‘pretest’ + ‘posttest’ + ‘empirical study’)” (translated into Chinese when searching). A total of 56 studies were found throughout the search period of “January 2000 to December 2021”. From the databases, all duplicates and retractions were eliminated before exporting the references into Endnote, a program for managing bibliographic references. In all, 466 studies were found.

Second, the studies that matched the inclusion and exclusion criteria for the meta-analysis were chosen by two researchers after they had reviewed the abstracts and titles of the gathered articles, yielding a total of 126 studies.

Third, two researchers thoroughly reviewed each included article’s whole text in accordance with the inclusion and exclusion criteria. Meanwhile, a snowball search was performed using the references and citations of the included articles to ensure complete coverage of the articles. Ultimately, 36 articles were kept.

Two researchers worked together to carry out this entire process, and a consensus rate of almost 94.7% was reached after discussion and negotiation to clarify any emerging differences.

Eligibility criteria

Since not all the retrieved studies matched the criteria for this meta-analysis, eligibility criteria for both inclusion and exclusion were developed as follows:

The publication language of the included studies was limited to English and Chinese, and the full text could be obtained. Articles that did not meet the publication language and articles not published between 2000 and 2021 were excluded.

The research design of the included studies must be empirical and quantitative studies that can assess the effect of collaborative problem-solving on the development of critical thinking. Articles that could not identify the causal mechanisms by which collaborative problem-solving affects critical thinking, such as review articles and theoretical articles, were excluded.

The research method of the included studies must feature a randomized control experiment or a quasi-experiment, or a natural experiment, which have a higher degree of internal validity with strong experimental designs and can all plausibly provide evidence that critical thinking and collaborative problem-solving are causally related. Articles with non-experimental research methods, such as purely correlational or observational studies, were excluded.

The participants of the included studies were only students in school, including K-12 students and college students. Articles in which the participants were non-school students, such as social workers or adult learners, were excluded.

The research results of the included studies must mention definite signs that may be utilized to gauge critical thinking’s impact (e.g., sample size, mean value, or standard deviation). Articles that lacked specific measurement indicators for critical thinking and could not calculate the effect size were excluded.

Data coding design

In order to perform a meta-analysis, it is necessary to collect the most important information from the articles, codify that information’s properties, and convert descriptive data into quantitative data. Therefore, this study designed a data coding template (see Table 1 ). Ultimately, 16 coding fields were retained.

The designed data-coding template consisted of three pieces of information. Basic information about the papers was included in the descriptive information: the publishing year, author, serial number, and title of the paper.

The variable information for the experimental design had three variables: the independent variable (instruction method), the dependent variable (critical thinking), and the moderating variable (learning stage, teaching type, intervention duration, learning scaffold, group size, measuring tool, and subject area). Depending on the topic of this study, the intervention strategy, as the independent variable, was coded into collaborative and non-collaborative problem-solving. The dependent variable, critical thinking, was coded as a cognitive skill and an attitudinal tendency. And seven moderating variables were created by grouping and combining the experimental design variables discovered within the 36 studies (see Table 1 ), where learning stages were encoded as higher education, high school, middle school, and primary school or lower; teaching types were encoded as mixed courses, integrated courses, and independent courses; intervention durations were encoded as 0–1 weeks, 1–4 weeks, 4–12 weeks, and more than 12 weeks; group sizes were encoded as 2–3 persons, 4–6 persons, 7–10 persons, and more than 10 persons; learning scaffolds were encoded as teacher-supported learning scaffold, technique-supported learning scaffold, and resource-supported learning scaffold; measuring tools were encoded as standardized measurement tools (e.g., WGCTA, CCTT, CCTST, and CCTDI) and self-adapting measurement tools (e.g., modified or made by researchers); and subject areas were encoded according to the specific subjects used in the 36 included studies.
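The coding scheme described above can be sketched as a small data structure. This is only an illustration: the names `CodedEffect`, `code_duration`, and `code_group_size` are hypothetical, and the handling of bin boundaries (for example, whether exactly 4 weeks counts as "1–4 weeks" or "4–12 weeks") is an assumption, since the text does not specify it.

```python
from dataclasses import dataclass


def code_duration(weeks: float) -> str:
    """Map an intervention length in weeks to one of the four duration codes.

    Assumption: each bin includes its upper bound (exactly 4 weeks -> "1-4 weeks").
    """
    if weeks < 0:
        raise ValueError("weeks must be non-negative")
    if weeks <= 1:
        return "0-1 weeks"
    if weeks <= 4:
        return "1-4 weeks"
    if weeks <= 12:
        return "4-12 weeks"
    return "more than 12 weeks"


def code_group_size(members: int) -> str:
    """Map a collaborative group's head count to one of the four group-size codes."""
    if members < 2:
        raise ValueError("a collaborative group needs at least 2 members")
    if members <= 3:
        return "2-3 persons"
    if members <= 6:
        return "4-6 persons"
    if members <= 10:
        return "7-10 persons"
    return "more than 10 persons"


@dataclass
class CodedEffect:
    """One coded effect: the dependent-variable dimension plus the seven moderators."""
    dimension: str          # "cognitive skill" or "attitudinal tendency"
    learning_stage: str     # e.g. "higher education", "high school"
    teaching_type: str      # "mixed", "integrated", or "independent" course
    duration: str           # output of code_duration
    group_size: str         # output of code_group_size
    learning_scaffold: str  # "teacher", "technique", or "resource" supported
    measuring_tool: str     # "standardized" or "self-adapting"
    subject_area: str


# Hypothetical record, not taken from any of the 36 included studies.
example = CodedEffect(
    dimension="cognitive skill",
    learning_stage="higher education",
    teaching_type="integrated",
    duration=code_duration(8),
    group_size=code_group_size(5),
    learning_scaffold="teacher",
    measuring_tool="standardized",
    subject_area="medical science",
)
```

Encoding each moderator as a fixed set of labels is what makes the later subgroup analysis possible, because effects can then be grouped by label.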

The data information contained three metrics for measuring critical thinking: sample size, average value, and standard deviation. It is vital to remember that studies with different experimental designs frequently adopt different formulas to determine the effect size. This paper used the standardized mean difference (SMD) formula proposed by Morris (2008, p. 369; see Supplementary Table S3).
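As a rough illustration of how an SMD can be obtained from sample sizes, means, and standard deviations, the sketch below implements the pretest-posttest-control estimator commonly attributed to Morris (2008), in which the difference in mean gains is divided by the pooled pretest standard deviation and multiplied by a small-sample correction. Whether this is exactly the variant listed in Supplementary Table S3 is an assumption, and the function name `smd_ppc` and the sample values are hypothetical.

```python
import math


def smd_ppc(n_t, pre_t, post_t, sd_pre_t, n_c, pre_c, post_c, sd_pre_c):
    """Standardized mean difference for a pretest-posttest-control design.

    d = c_p * [(post_t - pre_t) - (post_c - pre_c)] / sd_pre_pooled
    Assumption: this is the estimator the paper refers to; the source only
    cites Morris (2008, p. 369) without reproducing the formula.
    """
    if n_t < 2 or n_c < 2:
        raise ValueError("each group needs at least two participants")
    df = n_t + n_c - 2
    # Pooled standard deviation of the two groups' pretest scores.
    sd_pre_pooled = math.sqrt(
        ((n_t - 1) * sd_pre_t ** 2 + (n_c - 1) * sd_pre_c ** 2) / df
    )
    # Small-sample bias correction.
    c_p = 1 - 3 / (4 * df - 1)
    gain_difference = (post_t - pre_t) - (post_c - pre_c)
    return c_p * gain_difference / sd_pre_pooled


# Hypothetical numbers: the treatment group gains 10 points, the control group 4.
d = smd_ppc(n_t=20, pre_t=50, post_t=60, sd_pre_t=10,
            n_c=20, pre_c=50, post_c=54, sd_pre_c=10)
```

With these made-up inputs the uncorrected difference is 0.6 pooled pretest standard deviations, and the correction factor shrinks it slightly.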

Procedure for extracting and coding data

According to the data coding template (see Table 1 ), the 36 papers’ information was retrieved by two researchers, who then entered them into Excel (see Supplementary Table S1 ). The results of each study were extracted separately in the data extraction procedure if an article contained numerous studies on critical thinking, or if a study assessed different critical thinking dimensions. For instance, Tiwari et al. ( 2010 ) used four time points, which were viewed as numerous different studies, to examine the outcomes of critical thinking, and Chen ( 2013 ) included the two outcome variables of attitudinal tendency and cognitive skills, which were regarded as two studies. After discussion and negotiation during data extraction, the two researchers’ consistency test coefficients were roughly 93.27%. Supplementary Table S2 details the key characteristics of the 36 included articles with 79 effect quantities, including descriptive information (e.g., the publishing year, author, serial number, and title of the paper), variable information (e.g., independent variables, dependent variables, and moderating variables), and data information (e.g., mean values, standard deviations, and sample size). Following that, testing for publication bias and heterogeneity was done on the sample data using the Rev-Man 5.4 software, and then the test results were used to conduct a meta-analysis.
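The inter-coder consistency check can be illustrated with Cohen's kappa, which the paper names as its agreement statistic. The sketch below is a generic implementation for two coders assigning categorical labels; the labels and decisions are invented for illustration, and how the authors arrived at the percentage they report is not stated in the text.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty item list")
    n = len(rater_a)
    # Proportion of items on which the raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    labels = set(freq_a) | set(freq_b)
    expected = sum(freq_a[label] * freq_b[label] for label in labels) / n ** 2
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical screening decisions from two coders.
coder_1 = ["include", "include", "exclude", "exclude"]
coder_2 = ["include", "exclude", "exclude", "exclude"]
kappa = cohens_kappa(coder_1, coder_2)
```

Kappa discounts the agreement that would occur by chance, so it is typically lower than the raw percentage of matching decisions.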

Publication bias test

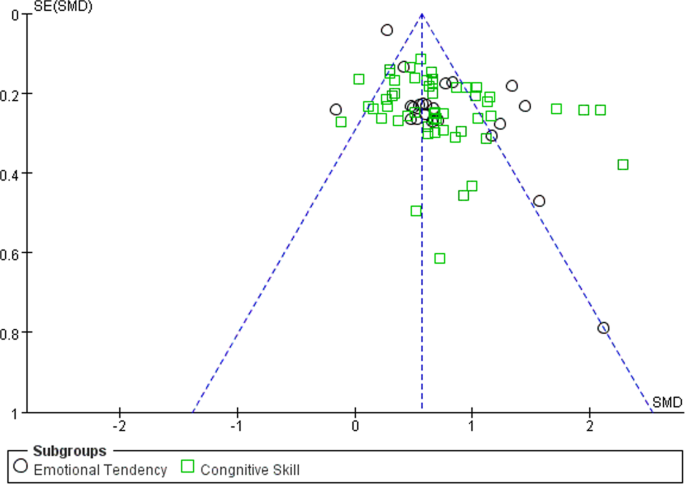

Publication bias arises when the sample of studies included in a meta-analysis does not accurately reflect the general status of research on the relevant subject, and it may undermine the reliability and accuracy of the meta-analysis. For this reason, the meta-analysis needs to check the sample data for publication bias (Stewart et al., 2006). A popular method to check for publication bias is the funnel plot: publication bias is unlikely when the data points are evenly dispersed on either side of the average effect size and concentrated in the upper region of the plot. In the funnel plot for this analysis (see Fig. 2), the data points are evenly dispersed within the upper portion of the effective zone, indicating that publication bias is unlikely in this situation.

This funnel plot shows the result of publication bias of 79 effect quantities across 36 studies.
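The visual reading of a funnel plot can be approximated numerically. The sketch below is not the authors' procedure: it assumes the usual pseudo 95% limits (pooled effect ± 1.96 × standard error) and simply reports how many effects fall inside the funnel and how they split around the pooled effect. The function names and the sample values are hypothetical.

```python
def funnel_limits(pooled, std_error, z_crit=1.96):
    """Pseudo 95% confidence limits of the funnel at a given standard error."""
    return pooled - z_crit * std_error, pooled + z_crit * std_error


def funnel_summary(effects, std_errors, pooled):
    """Summarize how effects are spread around the pooled effect."""
    if len(effects) != len(std_errors) or not effects:
        raise ValueError("effects and std_errors must be non-empty and aligned")
    inside = left = right = 0
    for effect, std_error in zip(effects, std_errors):
        lower, upper = funnel_limits(pooled, std_error)
        if lower <= effect <= upper:
            inside += 1
        if effect < pooled:
            left += 1
        elif effect > pooled:
            right += 1
    return {
        "share_inside": inside / len(effects),
        "left_of_pooled": left,
        "right_of_pooled": right,
    }


# Hypothetical effects and standard errors, not data from the 36 studies.
summary = funnel_summary(
    effects=[0.4, 0.6, 1.5],
    std_errors=[0.1, 0.1, 0.2],
    pooled=0.5,
)
```

A strongly lopsided left/right split or many points outside the limits would be a prompt to look more closely; a balanced split is only weak reassurance, since visual symmetry is a heuristic and not a formal test.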

Heterogeneity test

To select the appropriate effect model for the meta-analysis, one can use the results of a heterogeneity test on the effect sizes. In a meta-analysis, it is common practice to gauge the degree of heterogeneity using the I² value, and I² ≥ 50% is typically understood to denote medium-high heterogeneity, which calls for the adoption of a random effect model; otherwise, a fixed effect model ought to be applied (Lipsey and Wilson, 2001). The findings of the heterogeneity test in this paper (see Table 2) revealed that I² was 86%, indicating significant heterogeneity (P < 0.01). To ensure accuracy and reliability, the overall effect size was therefore calculated using the random effect model.
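To make the heterogeneity test and the model choice concrete, the sketch below computes Cochran's Q, I², and a DerSimonian-Laird random-effects pooled estimate from per-study effect sizes and variances. The paper ran its analysis in Rev-Man 5.4; treating DerSimonian-Laird as the estimator used there is an assumption, and the function name and input numbers are purely illustrative.

```python
import math


def random_effects_summary(effects, variances):
    """Cochran's Q, I-squared (%), tau-squared, and a random-effects pooled effect."""
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two effects with matching variances")
    # Fixed-effect (inverse-variance) weights and pooled estimate.
    weights = [1 / v for v in variances]
    weight_sum = sum(weights)
    fixed = sum(w * y for w, y in zip(weights, effects)) / weight_sum
    # Cochran's Q: weighted squared deviations from the fixed-effect estimate.
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, effects))
    df = k - 1
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    # DerSimonian-Laird estimate of the between-study variance.
    c = weight_sum - sum(w ** 2 for w in weights) / weight_sum
    tau_squared = max(0.0, (q - df) / c)
    # Random-effects weights add tau-squared to each study's variance.
    re_weights = [1 / (v + tau_squared) for v in variances]
    re_weight_sum = sum(re_weights)
    pooled = sum(w * y for w, y in zip(re_weights, effects)) / re_weight_sum
    std_error = math.sqrt(1 / re_weight_sum)
    return {
        "q": q,
        "i_squared": i_squared,
        "tau_squared": tau_squared,
        "pooled": pooled,
        "std_error": std_error,
        "z": pooled / std_error,
    }


# Two hypothetical studies with very different effects.
result = random_effects_summary(effects=[0.2, 0.8], variances=[0.04, 0.04])
```

In this toy case I² is about 78%, above the 50% threshold mentioned above, so the random effect model would be the one to report.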

The analysis of the overall effect size

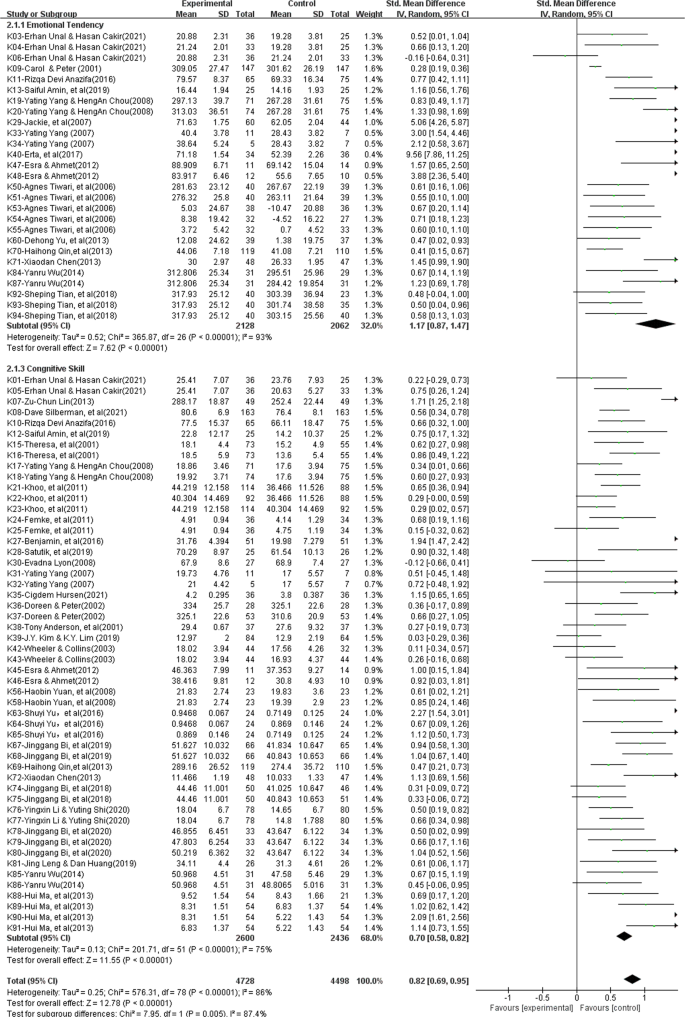

Because of the heterogeneity identified above, this meta-analysis used a random effect model to examine 79 effect quantities from 36 studies. In accordance with Cohen’s criterion (Cohen, 1992), the analysis results, which are shown in the forest plot of the overall effect (see Fig. 3), indicate that the overall effect size of collaborative problem-solving is 0.82, which is statistically significant (z = 12.78, P < 0.01, 95% CI [0.69, 0.95]), and that collaborative problem-solving can promote learners’ critical thinking.

This forest plot shows the analysis result of the overall effect size across 36 studies.

In addition, this study examined two distinct dimensions of critical thinking to better understand the precise contributions that collaborative problem-solving makes to the growth of critical thinking. The findings (see Table 3) indicate that collaborative problem-solving improves cognitive skills (ES = 0.70) and attitudinal tendency (ES = 1.17), with significant intergroup differences (χ² = 7.95, P < 0.01). Although collaborative problem-solving improves both dimensions of critical thinking, it is essential to point out that the improvements in students’ attitudinal tendency are much more pronounced and have a significant comprehensive effect (ES = 1.17, z = 7.62, P < 0.01, 95% CI [0.87, 1.47]), whereas the gains in learners’ cognitive skills are smaller, at an upper-middle level (ES = 0.70, z = 11.55, P < 0.01, 95% CI [0.58, 0.82]).
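The intergroup comparison reported above can be roughly reconstructed from the published effect sizes and confidence intervals. The sketch below backs out each subgroup's standard error from its 95% CI and computes a between-subgroup Q statistic, which for two subgroups follows a chi-square distribution with one degree of freedom. This is a back-of-the-envelope check under the assumption that the intervals are symmetric normal-theory intervals, not a reproduction of the Rev-Man computation; the function names are hypothetical.

```python
import math


def se_from_ci(lower, upper, z_crit=1.96):
    """Recover a standard error from a symmetric 95% confidence interval."""
    return (upper - lower) / (2 * z_crit)


def two_subgroup_test(est_a, se_a, est_b, se_b):
    """Between-subgroup Q for two subgroups and its p-value (chi-square, df = 1)."""
    q_between = (est_a - est_b) ** 2 / (se_a ** 2 + se_b ** 2)
    # For one degree of freedom, P(chi-square > q) equals erfc(sqrt(q / 2)).
    p_value = math.erfc(math.sqrt(q_between / 2))
    return q_between, p_value


# Values as reported in the text for the two dimensions of critical thinking.
se_cognitive = se_from_ci(0.58, 0.82)
se_attitudinal = se_from_ci(0.87, 1.47)
q_between, p_value = two_subgroup_test(0.70, se_cognitive, 1.17, se_attitudinal)
```

Run on the rounded published values, this gives a statistic of roughly 8.1 with a p-value below 0.01, in line with the reported 7.95; the small gap is presumably due to rounding of the inputs.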

The analysis of moderator effect size

The 79 effect quantities in the overall forest plot underwent a two-tailed test, which revealed significant heterogeneity (I² = 86%, z = 12.78, P < 0.01), indicating differences between effect sizes that may have been influenced by moderating factors rather than by sampling error alone. Therefore, subgroup analysis was used to explore the moderating factors that might produce this heterogeneity, namely the learning stage, learning scaffold, teaching type, group size, duration of the intervention, measuring tool, and subject area included in the 36 experimental designs, in order to identify the key factors that influence critical thinking. The findings (see Table 4) indicate that various moderating factors have advantageous effects on critical thinking. The subject area (χ² = 13.36, P < 0.05), group size (χ² = 8.77, P < 0.05), intervention duration (χ² = 12.18, P < 0.01), learning scaffold (χ² = 9.03, P < 0.01), and teaching type (χ² = 7.20, P < 0.05) are all significant moderators that can be applied to support the cultivation of critical thinking. However, since the learning stage and the measuring tool did not differ significantly between groups (χ² = 3.15, P = 0.21 > 0.05, and χ² = 0.08, P = 0.78 > 0.05), we cannot conclude that these two factors are crucial in supporting the cultivation of critical thinking in the context of collaborative problem-solving. The detailed results are as follows:

Various learning stages influenced critical thinking positively, without significant intergroup differences (χ² = 3.15, P = 0.21 > 0.05). High school was first on the list of effect sizes (ES = 1.36, P < 0.01), then higher education (ES = 0.78, P < 0.01), and middle school (ES = 0.73, P < 0.01). These results show that, although critical thinking improved at every learning stage, we cannot conclude that the learning stage is essential for cultivating critical thinking in the context of collaborative problem-solving.

Different teaching types had varying degrees of positive impact on critical thinking, with significant intergroup differences (χ² = 7.20, P < 0.05). The effect size was ranked as follows: mixed courses (ES = 1.34, P < 0.01), integrated courses (ES = 0.81, P < 0.01), and independent courses (ES = 0.27, P < 0.01). These results indicate that the most effective approach to cultivate critical thinking utilizing collaborative problem solving is through the teaching type of mixed courses.

Various intervention durations significantly improved critical thinking, and there were significant intergroup differences (χ² = 12.18, P < 0.01). The effect sizes related to this variable showed a tendency to increase with longer intervention durations. The improvement in critical thinking reached a significant level (ES = 0.85, P < 0.01) after more than 12 weeks of training. These findings indicate that intervention duration is positively associated with the effect on critical thinking, with longer interventions having a greater effect.

Different learning scaffolds influenced critical thinking positively, with significant intergroup differences (χ² = 9.03, P < 0.01). The resource-supported learning scaffold (ES = 0.69, P < 0.01) acquired a medium-to-higher level of impact, the technique-supported learning scaffold (ES = 0.63, P < 0.01) also attained a medium-to-higher level of impact, and the teacher-supported learning scaffold (ES = 0.92, P < 0.01) displayed a high level of significant impact. These results show that the learning scaffold with teacher support has the greatest impact on cultivating critical thinking.

Various group sizes influenced critical thinking positively, and the intergroup differences were statistically significant (χ² = 8.77, P < 0.05). Critical thinking showed a general declining trend with increasing group size. The overall effect size for groups of 2–3 people was the largest (ES = 0.99, P < 0.01), and when the group size was greater than 7 people, the improvement in critical thinking was at the lower-middle level (ES < 0.5, P < 0.01). These results show that the impact on critical thinking is negatively associated with group size: as group size grows, the overall impact declines.

Various measuring tools influenced critical thinking positively, without significant intergroup differences (χ² = 0.08, P = 0.78 > 0.05). The self-adapting measurement tools obtained an upper-medium level of effect (ES = 0.78), whereas the overall effect size of the standardized measurement tools was the largest, achieving a significant level of effect (ES = 0.84, P < 0.01). These results show that, although critical thinking improved under both kinds of measuring tool, we cannot conclude that the measuring tool is crucial in fostering the growth of critical thinking through collaborative problem-solving.

Different subject areas had varying degrees of positive impact on critical thinking, and the intergroup differences were statistically significant (χ² = 13.36, P < 0.05). Mathematics had the greatest overall impact, achieving a significant level of effect (ES = 1.68, P < 0.01), followed by science (ES = 1.25, P < 0.01) and medical science (ES = 0.87, P < 0.01), both of which also achieved a significant level of effect. Programming technology was the least effective (ES = 0.39, P < 0.01), only having a medium-low degree of effect compared to education (ES = 0.72, P < 0.01) and other fields (such as language, art, and social sciences) (ES = 0.58, P < 0.01). These results suggest that scientific fields (e.g., mathematics, science) may be the most effective subject areas for cultivating critical thinking utilizing the approach of collaborative problem-solving.

The effectiveness of collaborative problem solving with regard to teaching critical thinking

According to this meta-analysis, using collaborative problem-solving as an intervention strategy in critical thinking teaching has a considerable impact on cultivating learners’ critical thinking as a whole and has a favorable promotional effect on the two dimensions of critical thinking. According to certain studies, collaborative problem-solving, the most frequently used critical thinking teaching strategy in curriculum instruction, can considerably enhance students’ critical thinking (e.g., Liang et al., 2017; Liu et al., 2020; Cindy, 2004). This meta-analysis provides convergent data support for the above research views. Thus, the findings of this meta-analysis not only effectively address the first research question regarding the overall effect of collaborative problem-solving on critical thinking and its impact on the two dimensions of critical thinking (i.e., attitudinal tendency and cognitive skills), but also enhance our confidence in cultivating critical thinking by using the collaborative problem-solving intervention approach in the context of classroom teaching.

Furthermore, the associated improvements in attitudinal tendency are much stronger, whereas the corresponding improvements in cognitive skill are more modest. According to certain studies, cognitive skill differs from the attitudinal tendency in classroom instruction; the cultivation and development of the former as a key ability is a process of gradual accumulation, while the latter as an attitude is affected by the context of the teaching situation (e.g., a novel and exciting teaching approach, challenging and rewarding tasks) (Halpern, 2001; Wei and Hong, 2022). Collaborative problem-solving as a teaching approach is exciting and interesting, as well as rewarding and challenging, because it takes the learners as the focus and examines problems with poor structure in real situations; it can inspire students to fully realize their potential for problem-solving, which will significantly improve their attitudinal tendency toward solving problems (Liu et al., 2020). Similar to how collaborative problem-solving influences attitudinal tendency, attitudinal tendency impacts cognitive skill when attempting to solve a problem (Liu et al., 2020; Zhang et al., 2022), and stronger attitudinal tendencies are associated with improved learning achievement and cognitive ability in students (Sison, 2008; Zhang et al., 2022). It can be seen that the two specific dimensions of critical thinking as well as critical thinking as a whole are affected by collaborative problem-solving, and this study illuminates the nuanced links between cognitive skills and attitudinal tendencies with regard to these two dimensions of critical thinking. To fully develop students’ capacity for critical thinking, future empirical research should pay closer attention to cognitive skills.

The moderating effects of collaborative problem solving with regard to teaching critical thinking

To further explore the key factors that influence critical thinking, subgroup analysis was used to examine possible moderating effects that might produce considerable heterogeneity. The findings show that the moderating factors included in the 36 experimental designs, namely the teaching type, learning stage, group size, learning scaffold, duration of the intervention, measuring tool, and subject area, could all support the cultivation of critical thinking through collaborative problem-solving. Among them, the differences in effect size across the subgroups of the learning stage and of the measuring tool are not significant, so the analysis does not establish these two factors as crucial in supporting the cultivation of critical thinking through collaborative problem-solving.

In terms of the learning stage, all learning stages influenced critical thinking positively, without significant intergroup differences, so this analysis cannot establish the learning stage as a crucial factor in fostering the growth of critical thinking.

Although higher education accounts for 70.89% of all empirical studies performed by researchers, high school may be the most appropriate learning stage at which to foster students’ critical thinking through collaborative problem-solving, since it has the largest overall effect size. This phenomenon may be related to students’ cognitive development, which needs to be studied further in follow-up research.

With regard to teaching type, mixed course teaching may be the best teaching method to cultivate students’ critical thinking. Relevant studies have shown that, in the actual teaching process, if students are trained in thinking methods alone, the methods they learn are isolated and divorced from subject knowledge, which is not conducive to the transfer of those methods; conversely, if students’ thinking is trained only in subject teaching without systematic method training, it is challenging for them to apply it to real-world circumstances (Ruggiero, 2012; Hu and Liu, 2015). Teaching critical thinking as a mixed course in parallel with other subject teaching can achieve the best effect on learners’ critical thinking, and explicit critical thinking instruction is more effective than less explicit critical thinking instruction (Bensley and Spero, 2014).

In terms of the intervention duration, the overall effect size shows an upward tendency as intervention time increases. Thus, the intervention duration and the effect on critical thinking are positively correlated. Critical thinking, as a key competency for students in the 21st century, is difficult to improve meaningfully within a brief intervention. Instead, it is developed over a lengthy period of time through consistent teaching and the progressive accumulation of knowledge (Halpern, 2001; Hu and Liu, 2015). Therefore, future empirical studies ought to take this constraint into account and adopt a longer period of critical thinking instruction.

With regard to group size, a group size of 2–3 persons has the highest effect size, and the comprehensive effect size decreases with increasing group size in general. This outcome is in line with some research findings; as an example, a group composed of two to four members is most appropriate for collaborative learning (Schellens and Valcke, 2006 ). However, the meta-analysis results also indicate that once the group size exceeds 7 people, small groups cannot produce better interaction and performance than large groups. This may be because the learning scaffolds of technique support, resource support, and teacher support improve the frequency and effectiveness of interaction among group members, and a collaborative group with more members may increase the diversity of views, which is helpful to cultivate critical thinking utilizing the approach of collaborative problem-solving.

With regard to the learning scaffold, the three different kinds of learning scaffolds can all enhance critical thinking. Among them, the teacher-supported learning scaffold has the largest overall effect size, demonstrating the interdependence of effective learning scaffolds and collaborative problem-solving. This outcome is in line with some research findings; as an example, a successful strategy is to encourage learners to collaborate, come up with solutions, and develop critical thinking skills by using learning scaffolds (Reiser, 2004 ; Xu et al., 2022 ); learning scaffolds can lower task complexity and unpleasant feelings while also enticing students to engage in learning activities (Wood et al., 2006 ); learning scaffolds are designed to assist students in using learning approaches more successfully to adapt the collaborative problem-solving process, and the teacher-supported learning scaffolds have the greatest influence on critical thinking in this process because they are more targeted, informative, and timely (Xu et al., 2022 ).

With respect to the measuring tool, despite the fact that standardized measurement tools (such as the WGCTA, CCTT, and CCTST) have been acknowledged as trustworthy and effective by worldwide experts, only 54.43% of the research included in this meta-analysis adopted them for assessment, and the results indicated no intergroup differences. These results suggest that not all teaching circumstances are appropriate for measuring critical thinking using standardized measurement tools. According to Simpson and Courtney (2002, p. 91), “The measuring tools for measuring thinking ability have limits in assessing learners in educational situations and should be adapted appropriately to accurately assess the changes in learners’ critical thinking.” As a result, in order to gauge more fully and precisely how learners’ critical thinking has evolved, standardized measuring tools must be properly adapted to collaborative problem-solving learning contexts.

With regard to the subject area, the comprehensive effect size of science departments (e.g., mathematics, science, medical science) is larger than that of language arts and social sciences. Some recent international education reforms have noted that critical thinking is a basic part of scientific literacy. Students with scientific literacy can prove the rationality of their judgment according to accurate evidence and reasonable standards when they face challenges or poorly structured problems (Kyndt et al., 2013 ), which makes critical thinking crucial for developing scientific understanding and applying this understanding to practical problem solving for problems related to science, technology, and society (Yore et al., 2007 ).

Suggestions for critical thinking teaching

Other than those stated in the discussion above, the following suggestions are offered for critical thinking instruction utilizing the approach of collaborative problem-solving.

First, teachers should put a special emphasis on the two core elements, which are collaboration and problem-solving, to design real problems based on collaborative situations. This meta-analysis provides evidence to support the view that collaborative problem-solving has a strong synergistic effect on promoting students’ critical thinking. Asking questions about real situations and allowing learners to take part in critical discussions on real problems during class instruction are key ways to teach critical thinking rather than simply reading speculative articles without practice (Mulnix, 2012 ). Furthermore, the improvement of students’ critical thinking is realized through cognitive conflict with other learners in the problem situation (Yang et al., 2008 ). Consequently, it is essential for teachers to put a special emphasis on the two core elements, which are collaboration and problem-solving, and design real problems and encourage students to discuss, negotiate, and argue based on collaborative problem-solving situations.

Second, teachers should design and implement mixed courses to cultivate learners’ critical thinking, utilizing the approach of collaborative problem-solving. Critical thinking can be taught through curriculum instruction (Kuncel, 2011 ; Leng and Lu, 2020 ), with the goal of cultivating learners’ critical thinking for flexible transfer and application in real problem-solving situations. This meta-analysis shows that mixed course teaching has a highly substantial impact on the cultivation and promotion of learners’ critical thinking. Therefore, teachers should design and implement mixed course teaching with real collaborative problem-solving situations in combination with the knowledge content of specific disciplines in conventional teaching, teach methods and strategies of critical thinking based on poorly structured problems to help students master critical thinking, and provide practical activities in which students can interact with each other to develop knowledge construction and critical thinking utilizing the approach of collaborative problem-solving.

Third, teachers, particularly preservice teachers, should receive more training in critical thinking, and they should also be conscious of the ways in which teacher-supported learning scaffolds can promote critical thinking. The learning scaffold supported by teachers had the greatest impact on learners’ critical thinking, in addition to being more directive, targeted, and timely (Wood et al., 2006). Critical thinking can only be effectively taught when teachers recognize the significance of critical thinking for students’ growth and use the proper approaches while designing instructional activities (Forawi, 2016). Therefore, to enable teachers to create learning scaffolds that cultivate learners’ critical thinking through collaborative problem solving, it is essential to concentrate on teacher-supported learning scaffolds and to strengthen training in teaching critical thinking for teachers, especially preservice teachers.

Implications and limitations

This meta-analysis has certain limitations that future research can address. First, the search languages were restricted to English and Chinese, so pertinent studies written in other languages may have been overlooked, resulting in an inadequate number of articles for review. Second, the data provided by the included studies are partially missing, such as whether teachers were trained in the theory and practice of critical thinking, the average age and gender of learners, and the differences in critical thinking among learners of various ages and genders. Third, as is typical for review articles, more studies were released while this meta-analysis was being conducted; therefore, its coverage is limited in time. As relevant research develops, future studies focusing on these issues are highly relevant and needed.

Conclusions

This study addressed the question of how effective collaborative problem-solving is in promoting students’ critical thinking, and how large that effect is, a topic that had received little attention in earlier research. The following conclusions can be made:

Regarding the results obtained, collaborative problem solving is an effective teaching approach to foster learners’ critical thinking, with a significant overall effect size (ES = 0.82, z = 12.78, P < 0.01, 95% CI [0.69, 0.95]). With respect to the dimensions of critical thinking, collaborative problem-solving can significantly and effectively improve students’ attitudinal tendency, and the comprehensive effect is significant (ES = 1.17, z = 7.62, P < 0.01, 95% CI [0.87, 1.47]); nevertheless, it falls short in terms of improving students’ cognitive skills, having only an upper-middle impact (ES = 0.70, z = 11.55, P < 0.01, 95% CI [0.58, 0.82]).
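The relationship between the reported effect sizes, z values, and 95% confidence intervals can be checked with a short sketch. It assumes a symmetric normal-approximation interval (ES ± 1.96 × SE), which the article does not state explicitly; the function names `se_from_ci` and `z_statistic` are illustrative, not from the original study. Because the published bounds are rounded to two decimals, the recovered z values should only approximately match the reported ones.

```python
# Sketch: recover the standard error from a reported 95% CI and recompute z.
# Assumption (not stated in the article): CI = ES +/- 1.96 * SE.

Z_95 = 1.959964  # two-sided 95% critical value of the standard normal


def se_from_ci(lower, upper, z_crit=Z_95):
    """Standard error implied by a symmetric confidence interval."""
    return (upper - lower) / (2 * z_crit)


def z_statistic(effect_size, se):
    """Test statistic for H0: effect size = 0."""
    return effect_size / se


# (ES, CI lower, CI upper, reported z), as given in the conclusions
reported = {
    "overall": (0.82, 0.69, 0.95, 12.78),
    "attitudinal tendency": (1.17, 0.87, 1.47, 7.62),
    "cognitive skills": (0.70, 0.58, 0.82, 11.55),
}

results = {}
for name, (es, lo, hi, z_rep) in reported.items():
    se = se_from_ci(lo, hi)
    results[name] = (se, z_statistic(es, se))
    print(f"{name}: SE ~ {se:.3f}, recomputed z ~ {results[name][1]:.2f}, reported z = {z_rep}")
```

The small gaps between recomputed and reported z values are what one would expect from rounding of the interval bounds.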

As demonstrated by both the results and the discussion, all seven moderating factors, which were found across 36 studies, have beneficial effects of varying degrees on students’ critical thinking. In this context, the teaching type (χ² = 7.20, P < 0.05), intervention duration (χ² = 12.18, P < 0.01), subject area (χ² = 13.36, P < 0.05), group size (χ² = 8.77, P < 0.05), and learning scaffold (χ² = 9.03, P < 0.01) all have a positive impact on critical thinking, and they can be viewed as important moderating factors that affect how critical thinking develops. Since the learning stage (χ² = 3.15, P = 0.21 > 0.05) and measuring tools (χ² = 0.08, P = 0.78 > 0.05) did not demonstrate any significant intergroup differences, this study cannot establish these two factors as crucial in supporting the cultivation of critical thinking in the context of collaborative problem-solving.
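The two non-significant subgroup tests report exact P values, so they can be reproduced from the chi-square statistics. The sketch below assumes 2 degrees of freedom for the learning stage and 1 for the measuring tool; these values are inferred here (they reproduce the reported P values) and are not stated in this section. The helper `chi2_p_value` is a hypothetical name and uses the closed-form upper-tail probabilities that exist for df = 1 and df = 2.

```python
import math


def chi2_p_value(chi2, df):
    """Upper-tail p-value of a chi-square statistic (closed forms for df = 1, 2)."""
    if df == 1:
        # P(X > x) = erfc(sqrt(x / 2)) for one degree of freedom
        return math.erfc(math.sqrt(chi2 / 2))
    if df == 2:
        # P(X > x) = exp(-x / 2) for two degrees of freedom
        return math.exp(-chi2 / 2)
    raise ValueError("this sketch only supports df = 1 or df = 2")


# Degrees of freedom are assumptions, chosen as (number of subgroups - 1)
p_learning_stage = chi2_p_value(3.15, df=2)
p_measuring_tool = chi2_p_value(0.08, df=1)

print(f"learning stage: p ~ {p_learning_stage:.2f}")
print(f"measuring tool: p ~ {p_measuring_tool:.2f}")
```

Both values exceed 0.05, in line with the conclusion that these two moderators show no significant intergroup differences.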

Data availability

All data generated or analyzed during this study are included within the article and its supplementary information files, and the supplementary information files are available in the Dataverse repository: https://doi.org/10.7910/DVN/IPFJO6 .

Bensley DA, Spero RA (2014) Improving critical thinking skills and meta-cognitive monitoring through direct infusion. Think Skills Creat 12:55–68. https://doi.org/10.1016/j.tsc.2014.02.001

Castle A (2009) Defining and assessing critical thinking skills for student radiographers. Radiography 15(1):70–76. https://doi.org/10.1016/j.radi.2007.10.007

Chen XD (2013) An empirical study on the influence of PBL teaching model on critical thinking ability of non-English majors. J PLA Foreign Lang College 36(04):68–72

Cohen A (1992) Antecedents of organizational commitment across occupational groups: a meta-analysis. J Organ Behav. https://doi.org/10.1002/job.4030130602

Cooper H (2010) Research synthesis and meta-analysis: a step-by-step approach, 4th edn. Sage, London, England

Cindy HS (2004) Problem-based learning: what and how do students learn? Educ Psychol Rev 51(1):31–39

Duch BJ, Gron SD, Allen DE (2001) The power of problem-based learning: a practical “how to” for teaching undergraduate courses in any discipline. Stylus Educ Sci 2:190–198

Ennis RH (1989) Critical thinking and subject specificity: clarification and needed research. Educ Res 18(3):4–10. https://doi.org/10.3102/0013189x018003004

Facione PA (1990) Critical thinking: a statement of expert consensus for purposes of educational assessment and instruction. Research findings and recommendations. Eric document reproduction service. https://eric.ed.gov/?id=ed315423

Facione PA, Facione NC (1992) The California Critical Thinking Dispositions Inventory (CCTDI) and the CCTDI test manual. California Academic Press, Millbrae, CA

Forawi SA (2016) Standard-based science education and critical thinking. Think Skills Creat 20:52–62. https://doi.org/10.1016/j.tsc.2016.02.005

Halpern DF (2001) Assessing the effectiveness of critical thinking instruction. J Gen Educ 50(4):270–286. https://doi.org/10.2307/27797889

Hu WP, Liu J (2015) Cultivation of pupils’ thinking ability: a five-year follow-up study. Psychol Behav Res 13(05):648–654. https://doi.org/10.3969/j.issn.1672-0628.2015.05.010

Huber K (2016) Does college teach critical thinking? A meta-analysis. Rev Educ Res 86(2):431–468. https://doi.org/10.3102/0034654315605917

Kek MYCA, Huijser H (2011) The power of problem-based learning in developing critical thinking skills: preparing students for tomorrow’s digital futures in today’s classrooms. High Educ Res Dev 30(3):329–341. https://doi.org/10.1080/07294360.2010.501074

Kuncel NR (2011) Measurement and meaning of critical thinking (Research report for the NRC 21st Century Skills Workshop). National Research Council, Washington, DC

Kyndt E, Raes E, Lismont B, Timmers F, Cascallar E, Dochy F (2013) A meta-analysis of the effects of face-to-face cooperative learning. Do recent studies falsify or verify earlier findings? Educ Res Rev 10(2):133–149. https://doi.org/10.1016/j.edurev.2013.02.002

Leng J, Lu XX (2020) Is critical thinking really teachable?—A meta-analysis based on 79 experimental or quasi experimental studies. Open Educ Res 26(06):110–118. https://doi.org/10.13966/j.cnki.kfjyyj.2020.06.011

Liang YZ, Zhu K, Zhao CL (2017) An empirical study on the depth of interaction promoted by collaborative problem solving learning activities. J E-educ Res 38(10):87–92. https://doi.org/10.13811/j.cnki.eer.2017.10.014

Lipsey M, Wilson D (2001) Practical meta-analysis. International Educational and Professional, London, pp. 92–160

Liu Z, Wu W, Jiang Q (2020) A study on the influence of problem based learning on college students’ critical thinking-based on a meta-analysis of 31 studies. Explor High Educ 03:43–49

Morris SB (2008) Estimating effect sizes from pretest-posttest-control group designs. Organ Res Methods 11(2):364–386. https://doi.org/10.1177/1094428106291059

Mulnix JW (2012) Thinking critically about critical thinking. Educ Philos Theory 44(5):464–479. https://doi.org/10.1111/j.1469-5812.2010.00673.x

Naber J, Wyatt TH (2014) The effect of reflective writing interventions on the critical thinking skills and dispositions of baccalaureate nursing students. Nurse Educ Today 34(1):67–72. https://doi.org/10.1016/j.nedt.2013.04.002

National Research Council (2012) Education for life and work: developing transferable knowledge and skills in the 21st century. The National Academies Press, Washington, DC

Niu L, Behar HLS, Garvan CW (2013) Do instructional interventions influence college students’ critical thinking skills? A meta-analysis. Educ Res Rev 9(12):114–128. https://doi.org/10.1016/j.edurev.2012.12.002

Peng ZM, Deng L (2017) Towards the core of education reform: cultivating critical thinking skills as the core of skills in the 21st century. Res Educ Dev 24:57–63. https://doi.org/10.14121/j.cnki.1008-3855.2017.24.011

Reiser BJ (2004) Scaffolding complex learning: the mechanisms of structuring and problematizing student work. J Learn Sci 13(3):273–304. https://doi.org/10.1207/s15327809jls1303_2

Ruggiero VR (2012) The art of thinking: a guide to critical and creative thought, 4th edn. Harper Collins College Publishers, New York

Schellens T, Valcke M (2006) Fostering knowledge construction in university students through asynchronous discussion groups. Comput Educ 46(4):349–370. https://doi.org/10.1016/j.compedu.2004.07.010

Sendag S, Odabasi HF (2009) Effects of an online problem based learning course on content knowledge acquisition and critical thinking skills. Comput Educ 53(1):132–141. https://doi.org/10.1016/j.compedu.2009.01.008

Sison R (2008) Investigating Pair Programming in a Software Engineering Course in an Asian Setting. 2008 15th Asia-Pacific Software Engineering Conference, pp. 325–331. https://doi.org/10.1109/APSEC.2008.61

Simpson E, Courtney M (2002) Critical thinking in nursing education: literature review. Mary Courtney 8(2):89–98

Stewart L, Tierney J, Burdett S (2006) Do systematic reviews based on individual patient data offer a means of circumventing biases associated with trial publications? Publication bias in meta-analysis. John Wiley and Sons Inc, New York, pp. 261–286

Tiwari A, Lai P, So M, Yuen K (2010) A comparison of the effects of problem-based learning and lecturing on the development of students’ critical thinking. Med Educ 40(6):547–554. https://doi.org/10.1111/j.1365-2929.2006.02481.x

Wood D, Bruner JS, Ross G (2006) The role of tutoring in problem solving. J Child Psychol Psychiatry 17(2):89–100. https://doi.org/10.1111/j.1469-7610.1976.tb00381.x

Wei T, Hong S (2022) The meaning and realization of teachable critical thinking. Educ Theory Practice 10:51–57

Xu EW, Wang W, Wang QX (2022) A meta-analysis of the effectiveness of programming teaching in promoting K-12 students’ computational thinking. Educ Inf Technol. https://doi.org/10.1007/s10639-022-11445-2

Yang YC, Newby T, Bill R (2008) Facilitating interactions through structured web-based bulletin boards: a quasi-experimental study on promoting learners’ critical thinking skills. Comput Educ 50(4):1572–1585. https://doi.org/10.1016/j.compedu.2007.04.006

Yore LD, Pimm D, Tuan HL (2007) The literacy component of mathematical and scientific literacy. Int J Sci Math Educ 5(4):559–589. https://doi.org/10.1007/s10763-007-9089-4

Zhang T, Zhang S, Gao QQ, Wang JH (2022) Research on the development of learners’ critical thinking in online peer review. Audio Visual Educ Res 6:53–60. https://doi.org/10.13811/j.cnki.eer.2022.06.08

Acknowledgements

This research was supported by the graduate scientific research and innovation project of Xinjiang Uygur Autonomous Region named “Research on in-depth learning of high school information technology courses for the cultivation of computing thinking” (No. XJ2022G190) and the independent innovation fund project for doctoral students of the College of Educational Science of Xinjiang Normal University named “Research on project-based teaching of high school information technology courses from the perspective of discipline core literacy” (No. XJNUJKYA2003).

Author information

Authors and affiliations.

College of Educational Science, Xinjiang Normal University, 830017, Urumqi, Xinjiang, China

Enwei Xu, Wei Wang & Qingxia Wang

Corresponding authors

Correspondence to Enwei Xu or Wei Wang .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Ethical approval

This article does not contain any studies with human participants performed by any of the authors.

Informed consent

Additional information.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary tables

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article.

Xu, E., Wang, W. & Wang, Q. The effectiveness of collaborative problem solving in promoting students’ critical thinking: A meta-analysis based on empirical literature. Humanit Soc Sci Commun 10 , 16 (2023). https://doi.org/10.1057/s41599-023-01508-1

Received : 07 August 2022

Accepted : 04 January 2023

Published : 11 January 2023

DOI : https://doi.org/10.1057/s41599-023-01508-1

Collaborative Problem Solving: What It Is and How to Do It

- What is collaborative problem solving
- How to solve problems as a team
- Celebrating success as a team

Problems arise. That's a well-known fact of life and business. When they do, it may seem more straightforward to take individual ownership of the problem and immediately run with trying to solve it. However, the most effective problem-solving solutions often come through collaborative problem solving.

As defined by Webster's Dictionary, to collaborate is to work jointly with others or together, especially in an intellectual endeavor. Therefore, collaborative problem solving (CPS) is essentially solving problems by working together as a team. While problems can be and are solved individually, CPS often brings about the best resolution to a problem while also developing a team atmosphere and encouraging creative thinking.

Because collaborative problem solving involves multiple people and ideas, there are some techniques that can help you stay on track, engage efficiently, and communicate effectively during collaboration.

- Set Expectations. From the very beginning, expectations for openness and respect must be established for CPS to be effective. Everyone participating should feel that their ideas will be heard and valued.

- Provide Variety. This may mean involving various levels of leadership from the ground floor to the top of the organization. It may be that you involve someone from bookkeeping in a marketing problem-solving session. Another way of providing variety is to enlist individuals who are outside the organization but affected by the problem. A perspective from someone not involved in the day-to-day of the problem can often provide valuable insight.

- Communicate Clearly. If the problem is not well-defined, the solution can't be. By clearly defining the problem, the framework for collaborative problem solving is narrowed and more effective.

- Expand the Possibilities. Think beyond what is offered. Take a discarded idea and expand upon it. Turn it upside down and inside out. What is good about it? What needs improvement? Sometimes the best ideas are those that have been reworked rather than discarded.

- Encourage Creativity. Out-of-the-box thinking is one of the great benefits of collaborative problem-solving. This may mean that some proposed solutions have no way of working, but a small nugget from that creative thought may evolve into the perfect solution.

- Provide Positive Feedback. There are many reasons participants may hold back in a collaborative problem-solving meeting. Fear of performance evaluation, lack of confidence, lack of clarity, and hierarchy concerns are just a few of the reasons people may not initially participate in a meeting. Positive public feedback early on in the meeting will eliminate some of these concerns and create more participation and more possible solutions.

- Consider Solutions. Once several possible ideas have been identified, discuss the advantages and drawbacks of each one until a consensus is reached.

- Assign Tasks. A problem identified and a solution selected is not a problem solved. Once a solution is determined, assign tasks to work towards a resolution. A team that has been invested in the creation of the solution will be invested in its resolution. The best time to act is now.

- Evaluate the Solution. Reconnect as a team once the solution is implemented and the problem is solved. What went well? What didn't? Why? Collaboration doesn't necessarily end when the problem is solved. The solution to the problem is often the next step towards a new collaboration.

The burden that is lifted when a problem is solved is enough victory for some. However, a team that plays together should celebrate together. It's not only collaboration that brings unity to a team. It's also the combined celebration of a unified victory—the moment you look around and realize the collectiveness of your success.

How to ace collaborative problem solving

April 30, 2023

They say two heads are better than one, but is that true when it comes to solving problems in the workplace? To solve any problem, whether personal (eg, deciding where to live), business-related (eg, raising product prices), or societal (eg, reversing the obesity epidemic), it’s crucial to first define the problem. In a team setting, that translates to establishing a collective understanding of the problem, awareness of context, and alignment of stakeholders. “Both good strategy and good problem solving involve getting clarity about the problem at hand, being able to disaggregate it in some way, and setting priorities,” Rob McLean, McKinsey director emeritus, told McKinsey senior partner Chris Bradley in an Inside the Strategy Room podcast episode. Check out these insights to uncover how your team can come up with the best solutions for the most complex challenges by adopting a methodical and collaborative approach.

Want better strategies? Become a bulletproof problem solver

How to master the seven-step problem-solving process

Countering otherness: Fostering integration within teams

Psychological safety and the critical role of leadership development

If we’re all so busy, why isn’t anything getting done?

To weather a crisis, build a network of teams

Unleash your team’s full potential

Modern marketing: Six capabilities for multidisciplinary teams

Beyond collaboration overload

Psychiatry Academy

Parenting, teaching and treating challenging kids: the collaborative problem solving approach.

- CE Information

- Register/Take course

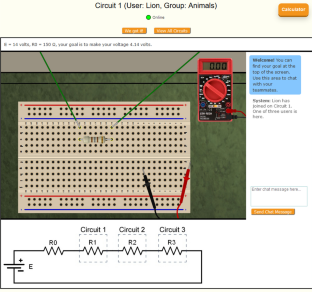

Think:Kids and the Department of Psychiatry at Massachusetts General Hospital are pleased to offer an online training program featuring Dr. J. Stuart Ablon. This introductory training provides a foundation for professionals and parents interested in learning the evidence-based approach to understanding and helping children and adolescents with behavioral challenges called Collaborative Problem Solving (CPS). This online training serves as the prerequisite for our professional intensive training.

The CPS approach provides a way of understanding and helping kids who struggle with behavioral challenges. Challenging behavior is often thought of as willful and goal-oriented, which has led to approaches that focus on motivating better behavior using reward and punishment programs. If you’ve tried these strategies and they haven’t worked, this online training is for you! At Think:Kids we have some very different ideas about why these kids struggle. Research over the past 30 years demonstrates that for the majority of these kids, their challenges result from a lack of crucial thinking skills when it comes to things like problem solving, frustration tolerance and flexibility. The CPS approach, therefore, focuses on helping adults teach the skills these children lack while resolving the chronic problems that tend to precipitate challenging behavior.

This training is designed to allow you to learn at your own pace. You must complete the modules sequentially, but you can take your time with the content as your schedule allows. Additional resources for each module provide you with the opportunity for further development. Discussion boards for each module allow you to discuss concepts and your own experiences with other participants. Faculty from the Think:Kids program monitor the boards and offer their point of view.

Registrants will have access to course materials from the date of their registration through the course expiration date.

All care providers: $149. Due to COVID-19, we are offering this course at the reduced rate of $99 for a limited time.

NOTE: If you are paying for your registration via Purchase Order, please send the PO to [email protected] . Our customer service agent will respond with further instructions.

Cancellation Policy

Refunds will be issued for requests received within 10 business days of purchase, but an administrative fee of $35 will be deducted from your refund. No refunds will be made thereafter. Additionally, no refunds will be made for individuals who claim CME or credit, regardless of when they request a refund.

Through the duration of the course, the faculty moderator will respond to any clinical questions that are submitted to the interactive discussion board. The faculty moderator for this course will be:

J. Stuart Ablon, PhD

*** Please note that discussion boards are reviewed on a regular basis, and responses to all questions will be posted within one week of receipt. ***

Target Audience

This program is intended for: Parents, clinicians, educators, allied mental health professionals, and direct care staff.

Learning Objectives

At the end of this program, participants will be able to:

- Shift thinking and approach to foster positive relationships with children

- Reduce challenging behavior

- Foster proactive, rather than reactive interventions

- Teach skills related to self-regulation, communication and problem solving

MaMHCA, and its agent, MMCEP, has been designated by the Board of Allied Mental Health and Human Service Professions to approve sponsors of continuing education for licensed mental health counselors in the Commonwealth of Massachusetts for licensure renewal, in accordance with the requirements of 262 CMR 3.00.

This program has been approved for 3.00 CE credit for Licensed Mental Health Counselors MaMHCA.

Authorization number: 17-0490

The Collaborative of NASW, Boston College, and Simmons College Schools of Social Work authorizes social work continuing education credits for courses, workshops, and educational programs that meet the criteria outlined in 258 CMR of the Massachusetts Board of Registration of Social Workers.

This program has been approved for 3.00 Social Work Continuing Education hours for relicensure, in accordance with 258 CMR. Collaborative of NASW and the Boston College and Simmons Schools of Social Work Authorization Number D 61675-E

This course allows other providers to claim a Participation Certificate upon successful completion of this course.

Participation Certificates will specify the title, location, type of activity, date of activity, and number of AMA PRA Category 1 Credit™ associated with the activity. Providers should check with their regulatory agencies to determine ways in which AMA PRA Category 1 Credit™ may or may not fulfill continuing education requirements. Providers should also consider saving copies of brochures, agenda, and other supporting documents.

The Massachusetts General Hospital Department of Psychiatry is approved by the American Psychological Association to sponsor continuing education for psychologists. The Massachusetts General Hospital Department of Psychiatry maintains responsibility for this program and its content.

This offering meets the criteria for 3.00 Continuing Education (CE) credits per presentation for psychologists.

Stuart Ablon, PhD


Collaborative Problem Solving in Schools

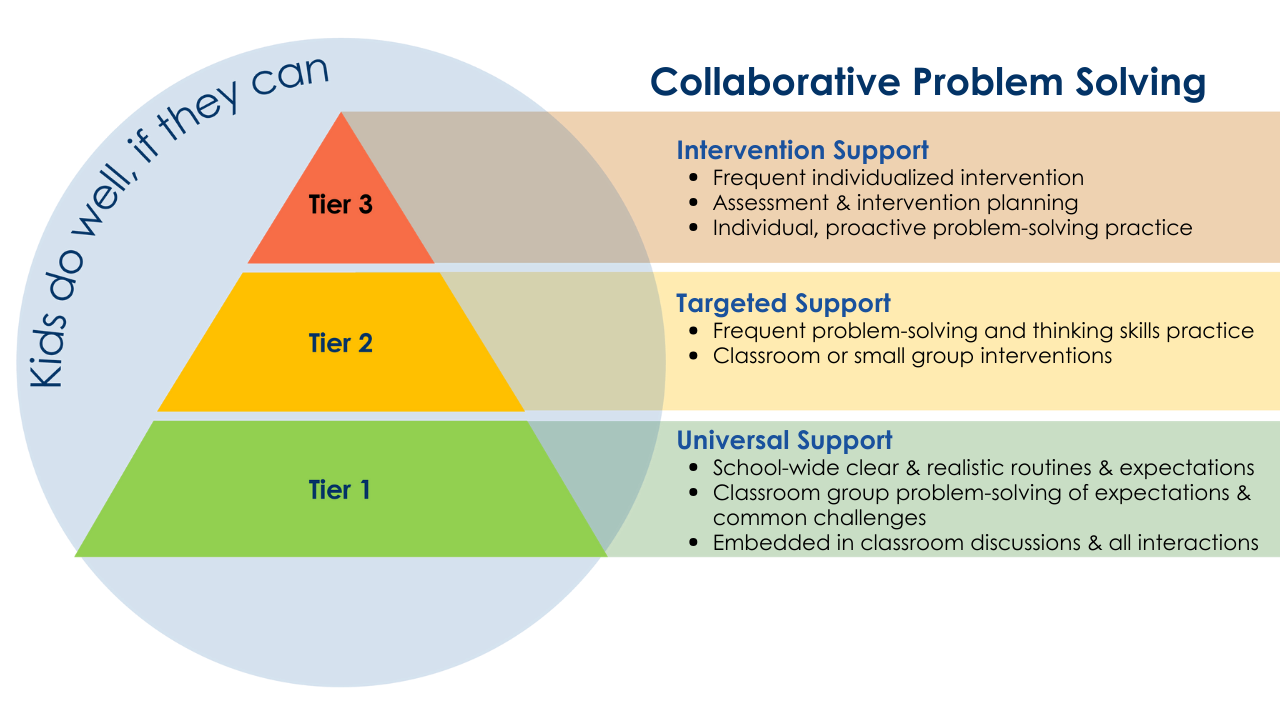

Collaborative Problem Solving ® (CPS) is an evidence-based, trauma-informed practice that helps students meet expectations, reduces concerning behavior, builds students’ skills, and strengthens their relationships with educators.

Collaborative Problem Solving is designed to meet the needs of all children, including those with social, emotional, and behavioral challenges. It promotes the understanding that students who have trouble meeting expectations or managing their behavior lack the skill—not the will—to do so. These students struggle with skills related to problem-solving, flexibility, and frustration tolerance. Collaborative Problem Solving has been shown to help build these skills.

Collaborative Problem Solving avoids using power, control, and motivational procedures. Instead, it focuses on collaborating with students to solve the problems leading to them not meeting expectations and displaying concerning behavior. This trauma-informed approach provides staff with actionable strategies for trauma-sensitive education and aims to mitigate implicit bias’s impact on school discipline. It integrates with MTSS frameworks, PBIS, restorative practices, and SEL approaches, such as RULER. Collaborative Problem Solving reduces challenging behavior and teacher stress while building future-ready skills and relationships between educators and students.

Transform School Discipline

Traditional school discipline is broken: it doesn’t result in improved behavior or improved relationships between educators and students. In addition, it has been shown to be disproportionately applied to students of color. The Collaborative Problem Solving approach is an equitable and effective form of relational discipline that reduces concerning behavior and teacher stress while building skills and relationships between educators and students.


Collaborative Problem Solving and SEL

Collaborative Problem Solving aligns with CASEL’s five core competencies by building relationships between teachers and students using everyday situations. Students develop the skills they need to prepare for the real world, including problem-solving, collaboration and communication, flexibility, perspective-taking, and empathy. Collaborative Problem Solving makes social-emotional learning actionable.

Collaborative Problem Solving and MTSS

The Collaborative Problem Solving approach integrates with Multi-Tiered Systems of Support (MTSS) in educational settings. CPS benefits all students and can be implemented across the three tiers of support within an MTSS framework to effectively identify and meet the diverse social, emotional, and behavioral needs of students in schools.

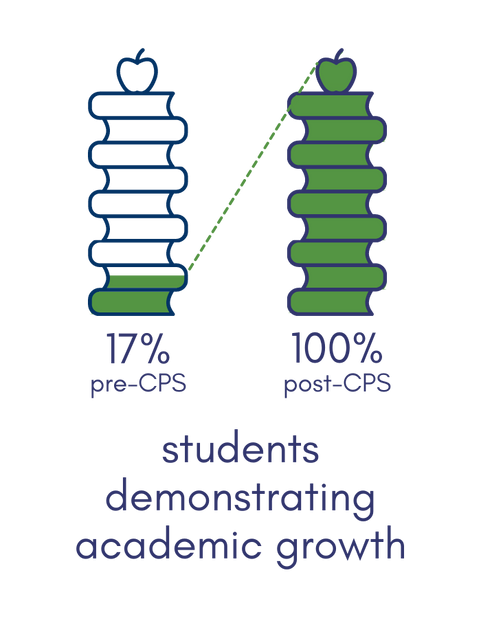

The Results

Our research has shown that the Collaborative Problem Solving approach helps kids and adults build crucial social-emotional skills and leads to dramatic decreases in behavior problems across various settings. Results in schools include remarkable reductions in time spent out of class, detentions, suspensions, injuries, teacher stress, and alternative placements as well as increases in emotional safety, attendance, academic growth, and family participation.

Educators, join us in this introductory course and develop your behavioral growth mindset!

This 2-hour, self-paced course introduces the principles of Collaborative Problem Solving ® while outlining how the approach is uniquely suited to the needs of today's educators and students. Tuition: $39.

Bring CPS to Your School

We can help you bring a more accurate, compassionate, and effective approach to working with children to your school or district.


Collaborative problem solvers are made not born – here’s what you need to know

Stephen M. Fiore, Professor of Cognitive Sciences, University of Central Florida

Disclosure statement

Stephen M. Fiore has received funding from federal agencies such as NASA, ONR, DARPA, and the NSF to study collaborative problem solving and teamwork. He is past president of the Interdisciplinary Network for Group Research, currently a board member of the International Network for the Science of Team Science, and a member of DARPA's Information Science and Technology working group.


Challenges are a fact of life. Whether it’s a high-tech company figuring out how to shrink its carbon footprint, or a local community trying to identify new revenue sources, people are continually dealing with problems that require input from others. In the modern world, we face problems that are broad in scope and great in scale of impact – think of trying to understand and identify potential solutions related to climate change, cybersecurity or authoritarian leaders.

But people usually aren’t born competent in collaborative problem-solving. In fact, a famous turn of phrase about teams is that a team of experts does not make an expert team. Just as troubling, the evidence suggests that, for the most part, people aren’t being taught this skill either. A 2012 survey by the American Management Association found that higher-level managers believed recent college graduates lack collaboration abilities.

Maybe even worse, college grads seem to overestimate their own competence. One 2015 survey found nearly two-thirds of recent graduates believed they can effectively work in a team, but only one-third of managers agreed. The tragic irony is that the less competent you are, the less accurate is your self-assessment of your own competence. It seems that this infamous Dunning-Kruger effect can also occur for teamwork.

Perhaps it’s no surprise that in a 2015 international assessment of hundreds of thousands of students, less than 10% performed at the highest level of collaboration. For example, the vast majority of students could not overcome teamwork obstacles or resolve conflict. They were not able to monitor group dynamics or to engage in the kind of actions needed to make sure the team interacted according to their roles. Given that all these students have had group learning opportunities in and out of school over many years, this points to a global deficit in the acquisition of collaboration skills.

How can this deficiency be addressed? What makes one team effective while another fails? How can educators improve training and testing of collaborative problem-solving? Drawing from disciplines that study cognition, collaboration and learning, my colleagues and I have been studying teamwork processes. Based on this research, we have three key recommendations.

How it should work

At the most general level, collaborative problem-solving requires team members to establish and maintain a shared understanding of the situation they’re facing and any relevant problem elements they’ve identified. At the start, there’s typically an uneven distribution of knowledge on a team. Members must maintain communication to help each other know who knows what, as well as help each other interpret elements of the problem and which expertise should be applied.

Then the team can get to work, laying out subtasks based upon member roles, or creating mechanisms to coordinate member actions. They’ll critique possible solutions to identify the most appropriate path forward.

Finally, at a higher level, collaborative problem-solving requires keeping the team organized – for example, by monitoring interactions and providing feedback to each other. Team members need, at least, basic interpersonal competencies that help them manage relationships within the team (like encouraging participation) and communication (like listening to learn). Even better is the more sophisticated ability to take others’ perspectives, in order to consider alternative views of problem elements.

Whether it is a team of professionals in an organization or a team of scientists solving complex scientific problems, communicating clearly, managing conflict, understanding roles on a team, and knowing who knows what are all collaboration skills related to effective teamwork.

What’s going wrong in the classroom?

When so many students are continually engaged in group projects, or collaborative learning, why are they not learning about teamwork? There are interrelated factors that may be creating graduates who collaborate poorly but who think they are quite good at teamwork.

I suggest students vastly overestimate their collaboration skills due to the dangerous combination of a lack of systematic instruction and inadequate feedback. On the one hand, students engage in a great deal of group work in high school and college. On the other hand, students rarely receive meaningful instruction, modeling and feedback on collaboration. Decades of research on learning show that explicit instruction and feedback are crucial for mastery.