8 Essential Qualitative Data Collection Methods

Qualitative data methods allow you to deep dive into the mindset of your audience to discover areas for growth, development, and improvement.

British mathematician and marketing mastermind Clive Humby once famously stated that “Data is the new oil.” He has a point. Without data, nonprofit organizations are left second-guessing what their clients and supporters think, how their brand compares to others in the market, whether their messaging is on-point, how their campaigns are performing, where improvements can be made, and how overall results can be optimized.

There are two primary data collection methodologies: qualitative data collection and quantitative data collection. At UpMetrics, we believe that relying on quantitative, static data alone is no longer an option for driving effective impact, particularly in the nonprofit sector, where financial gain is not the sole purpose of your organization’s existence. In this guide, we’ll focus on qualitative data collection methods and how they can help you gather, analyze, and collate information that can help drive your organization forward.

What is Qualitative Data?

Data collection in qualitative research focuses on gathering contextual information. Unlike quantitative data, which focuses primarily on numbers to establish ‘how many’ or ‘how much,’ qualitative data collection tools allow you to assess the ‘why’s’ and ‘how’s’ behind those statistics. This is vital for nonprofits as it enables organizations to determine:

- Existing knowledge surrounding a particular issue.

- How social norms and cultural practices impact a cause.

- What kind of experiences and interactions people have with your brand.

- Trends in the way people change their opinions.

- Whether meaningful relationships are being established between all parties.

In short, qualitative data collection methods collect perceptual and descriptive information that helps you understand the reasoning and motivation behind particular reactions and behaviors. For that reason, qualitative data methods are usually non-numerical and center around spoken and written words rather than data extrapolated from a spreadsheet or report.

Qualitative vs. Quantitative Data

Quantitative and qualitative data are two sides of the same coin. There will always be some degree of debate over the importance of quantitative vs. qualitative research, data, and collection. However, successful organizations should strive to achieve a balance between the two.

Organizations can track their performance by collecting quantitative data based on metrics including dollars raised, membership growth, number of people served, overhead costs, etc. This is all essential information to have. However, the data lacks value without the additional details provided by qualitative research because it doesn’t tell you anything about how your target audience thinks, feels, and acts.

Qualitative data collection is particularly relevant in the nonprofit sector as the relationships people have with the causes they support are fundamentally personal and cannot be expressed numerically. Qualitative data methods allow you to deep dive into the mindset of your audience to discover areas for growth, development, and improvement.

8 Types of Qualitative Data Collection Methods

As we have firmly established the need for qualitative data, it’s time to answer the next big question: how to collect qualitative data.

Here is a list of the most common qualitative data collection methods. You don’t need to use them all in your quest for gathering information. However, a foundational understanding of each will help you refine your research strategy and select the methods that are likely to provide the highest quality business intelligence for your organization.

1. Interviews

One-on-one interviews are one of the most commonly used data collection methods in qualitative research because they allow you to collect highly personalized information directly from the source. Interviews explore participants' opinions, motivations, beliefs, and experiences and are particularly beneficial in gathering data on sensitive topics because respondents are more likely to open up in a one-on-one setting than in a group environment.

Interviews can be conducted in person or by online video call. Typically, they are separated into three main categories:

- Structured Interviews – Structured interviews consist of predetermined (and usually closed) questions with little or no variation between interviewees. There is generally no scope for elaboration or follow-up questions, making them better suited to researching specific topics.

- Unstructured Interviews – Conversely, unstructured interviews have little to no organization or preconceived topics and include predominantly open questions. As a result, the discussion will flow in completely different directions for each participant and can be very time-consuming. For this reason, unstructured interviews are generally only used when little is known about the subject area or when in-depth responses are required on a particular subject.

- Semi-Structured Interviews – A combination of the two interviews mentioned above, semi-structured interviews comprise several scripted questions but allow both interviewers and interviewees the opportunity to diverge and elaborate so more in-depth reasoning can be explored.

While each approach has its merits, semi-structured interviews are typically favored as a way to uncover detailed information in a timely manner while highlighting areas that may not have been considered relevant in previous research efforts. Whichever type of interview you utilize, participants must be fully briefed on the format, purpose, and what you hope to achieve. With that in mind, here are a few tips to follow:

- Give them an idea of how long the interview will last

- If you plan to record the conversation, ask permission beforehand

- Provide the opportunity to ask questions before you begin and again at the end.

2. Focus Groups

Focus groups share much in common with less structured interviews, the key difference being that the goal is to collect data from several participants simultaneously. Focus groups are effective in gathering information based on collective views and are one of the most popular data collection instruments in qualitative research when a series of one-on-one interviews proves too time-consuming or difficult to schedule.

Focus groups are most helpful in gathering data from a specific group of people, such as donors or clients from a particular demographic. The discussion should be focused on a specific topic and carefully guided and moderated by the researcher to determine participant views and the reasoning behind them.

Feedback in a group setting can provide richer data than one-on-one interviews, as participants are generally more open to sharing when others are sharing too. Plus, input from one participant may spark insight from another that would not have come to light otherwise. However, here are a couple of potential downsides:

- If participants are uneasy with each other, they may not be at ease openly discussing their feelings or opinions.

- If the topic is not of interest or does not focus on something participants are willing to discuss, data will lack value.

The size of the group should be carefully considered. Research suggests over-recruiting to avoid risking cancellation, even if that means moderators have to manage more participants than anticipated. The optimum group size is generally between six and eight for all participants to be granted ample opportunity to speak. However, focus groups can still be successful with as few as three or as many as fourteen participants.

3. Observation

Observation is one of the ultimate data collection tools in qualitative research for gathering information through subjective methods. A technique used frequently by modern-day marketers, qualitative observation is also favored by psychologists, sociologists, behavior specialists, and product developers.

The primary purpose is to gather information that cannot be measured or easily quantified. It involves virtually no cognitive input from the participants themselves. Researchers simply observe subjects and their reactions during the course of their regular routines and take detailed field notes from which to draw information.

Observational techniques vary in terms of contact with participants. Some qualitative observations involve the complete immersion of the researcher over a period of time, for example, by attending the same church, clinic, society meetings, or volunteer organizations as the participants. Under these circumstances, researchers will likely witness the most natural responses rather than relying on behaviors elicited in a simulated environment. Depending on the study and intended purpose, they may or may not choose to identify themselves as researchers during the process.

Regardless of whether you take a covert or overt approach, remember that because each researcher is as unique as every participant, they will have their own inherent biases. Therefore, observational studies are prone to a high degree of subjectivity. For example, one researcher’s notes on the behavior of donors at a society event may vary wildly from the next. So, each qualitative observational study is unique in its own right.

4. Open-Ended Surveys and Questionnaires

Open-ended surveys and questionnaires allow organizations to collect views and opinions from respondents without meeting in person. They can be sent electronically and are considered one of the most cost-effective qualitative data collection tools. Unlike closed question surveys and questionnaires that limit responses, open-ended questions allow participants to provide lengthy and in-depth answers from which you can extrapolate large amounts of data.

The findings of open-ended surveys and questionnaires can be challenging to analyze because there are no uniform answers. A popular approach is to record sentiments as positive, negative, and neutral and further dissect the data from there. To gather the best business intelligence, carefully consider the presentation and length of your survey or questionnaire. Here is a list of essential considerations:

- Number of questions: Too many can feel intimidating, and you’ll experience low response rates. Too few can feel like it’s not worth the effort. Plus, the data you collect will have limited actionability. The consensus on how many questions to include varies depending on which sources you consult. However, 5-10 is a good benchmark for shorter surveys that take around 10 minutes and 15-20 for longer surveys that take approximately 20 minutes to complete.

- Personalization: Your response rate will be higher if you greet respondents by name and demonstrate a historical knowledge of their interactions with your brand.

- Visual elements: Recipients can be easily turned off by poorly designed questionnaires. Besides, it’s a good idea to customize your survey template to include brand assets like colors, logos, and fonts to increase brand loyalty and recognition.

- Reminders: Sending survey reminders is the best way to improve your response rate. You don’t want to hassle respondents too soon, nor do you want to wait too long. Sending a follow-up at around the 3-7 day mark is usually the most effective.

- Building a feedback loop: Adding a tick-box requesting permission for further follow-ups is a proven way to elicit more in-depth feedback. Plus, it gives respondents a voice and makes their opinion feel valued.
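To make the positive, negative, and neutral approach to open-ended answers more concrete, here is a minimal Python sketch. The word lists and sample responses are hypothetical and far too small for real use; they only illustrate the idea of labeling each response and tallying the results before dissecting the data further.

```python
from collections import Counter

# Hypothetical mini word lists, for illustration only.
# A real project would use a fuller lexicon or a dedicated NLP tool.
POSITIVE = {"love", "great", "helpful", "grateful", "excellent"}
NEGATIVE = {"confusing", "slow", "frustrating", "poor", "difficult"}


def label_sentiment(response: str) -> str:
    """Label a response by counting positive and negative word hits."""
    words = [w.strip(".,!?;:").lower() for w in response.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Hypothetical open-ended survey answers.
responses = [
    "The volunteers were great and so helpful!",
    "Signing up online was confusing and slow.",
    "I attended the event in March.",
]

labels = [label_sentiment(r) for r in responses]
tally = Counter(labels)
print(tally)
```

Simple word counting misses sarcasm, negation, and context, so treat a tally like this as a first pass to be checked against the responses themselves.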

5. Case Studies

Case studies are often a preferred method of qualitative research data collection for organizations looking to generate incredibly detailed and in-depth information on a specific topic. Case studies are usually a deep dive into one specific case or a small number of related cases. As a result, they work well for organizations that operate in niche markets.

Case studies typically involve several qualitative data collection methods, including interviews, focus groups, surveys, and observation. The idea is to cast a wide net to obtain a rich picture comprising multiple views and responses. When conducted correctly, case studies can generate vast bodies of data that can be used to improve processes at every client and donor touchpoint.

The best way to demonstrate the purpose and value of a case study is with an example: A Longitudinal Qualitative Case Study of Change in Nonprofits – Suggesting A New Approach to the Management of Change.

The researchers established that while change management had already been widely researched in commercial and for-profit settings, little reference had been made to the unique challenges in the nonprofit sector. The case study examined change and change management at a single nonprofit hospital from the viewpoint of all those who witnessed and experienced it. To gain a holistic view of the entire process, research included interviews with employees at every level, from nursing staff to CEOs, to identify the direct and indirect impacts of change. Results were collated based on detailed responses to questions about preparing for change, experiencing change, and reflecting on change.

6. Text Analysis

Text analysis has long been used in political and social science spheres to gain a deeper understanding of behaviors and motivations by gathering insights from human-written texts. By analyzing the flow of text and word choices, relationships between other texts written by the same participant can be identified so that researchers can draw conclusions about the mindset of their target audience. Though technically a qualitative data collection method, the process can involve some quantitative elements, as often, computer systems are used to scan, extract, and categorize information to identify patterns, sentiments, and other actionable information.

You might be wondering how to collect written information from your research subjects. There are many different options, and approaches can be overt or covert.

Examples include:

- Investigating how often certain cause-related words and phrases are used in client and donor social media posts.

- Asking participants to keep a journal or diary.

- Analyzing existing interview transcripts and survey responses.

By conducting a detailed analysis, you can connect elements of written text to specific issues, causes, and cultural perspectives, allowing you to draw empirical conclusions about personal views, behaviors, and social relations. With small studies focusing on participants' subjective experience on a specific theme or topic, diaries and journals can be particularly effective in building an understanding of underlying thought processes and beliefs.
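As a rough illustration of the first example above, counting how often cause-related words and phrases appear in a set of posts, here is a short Python sketch. The phrases and posts are invented for the example, and collecting real social media content would be a separate step subject to each platform's terms.

```python
import re
from collections import Counter

# Hypothetical cause-related terms and sample posts.
CAUSE_TERMS = ["food insecurity", "volunteer", "donate"]

posts = [
    "Proud to volunteer this weekend. Food insecurity affects so many families.",
    "Please donate if you can! Every volunteer hour counts.",
    "Great weather today.",
]


def count_terms(texts, terms):
    """Count whole-word, case-insensitive occurrences of each term."""
    counts = Counter({term: 0 for term in terms})
    for text in texts:
        lowered = text.lower()
        for term in terms:
            # \b keeps "volunteer" from matching inside "volunteering".
            pattern = r"\b" + re.escape(term) + r"\b"
            counts[term] += len(re.findall(pattern, lowered))
    return counts


term_counts = count_terms(posts, CAUSE_TERMS)
print(term_counts)
```

Exact matching is deliberately strict here. Whether variants such as "volunteering" or "donation" should count is a research decision to make before running the analysis.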

7. Audio and Video Recordings

Similarly to how data is collected from a person’s writing, you can draw valuable conclusions by observing someone’s speech patterns, intonation, and body language when you watch or listen to them interact in a particular environment or within specific surroundings.

Video and audio recordings are helpful in circumstances where researchers predict better results by having participants be in the moment rather than having them think about what to write down or how to formulate an answer to an email survey.

You can collect audio and video materials for analysis from multiple sources, including:

- Previously filmed records of events

- Interview recordings

- Video diaries

Utilizing audio and video footage allows researchers to revisit key themes, and it's possible to use the same analytical sources in multiple studies – providing that the scope of the original recording is comprehensive enough to cover the intended theme in adequate depth.

It can be challenging to present the results of audio and video analysis in a quantifiable form that helps you gauge campaign and market performance. However, results can be used to effectively design concept maps that extrapolate central themes that arise consistently. Concept Mapping offers organizations a visual representation of thought patterns and how ideas link together between different demographics. This data can prove invaluable in identifying areas for improvement and change across entire projects and organizational processes.

8. Hybrid Methodologies

It is often possible to utilize data collection methods in qualitative research that provide quantitative facts and figures. So if you’re struggling to settle on an approach, a hybrid methodology may be a good starting point. For instance, a survey format that asks closed and open questions can collect and collate quantitative and qualitative data.

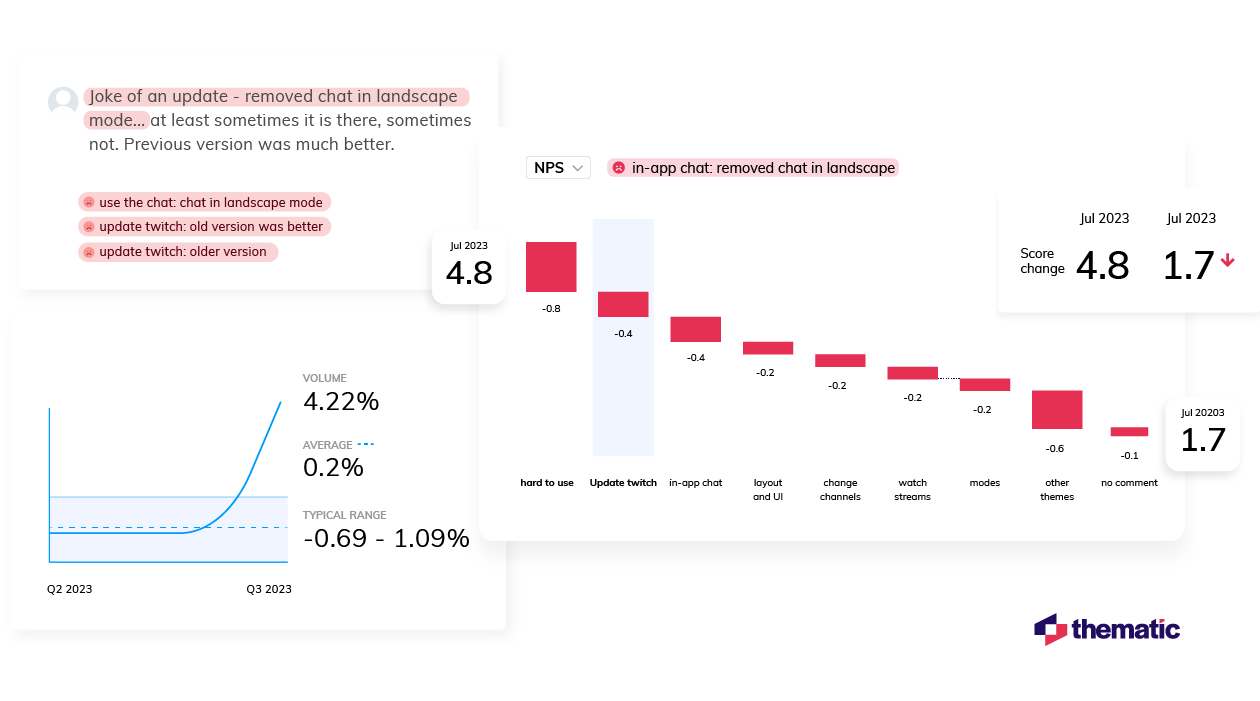

A Net Promoter Score (NPS) survey is a great example. The primary goal of an NPS survey is to collect a quantitative rating, on a scale of 0-10, of how likely respondents are to recommend your organization. However, NPS surveys also use open-ended follow-up questions to collect qualitative data that helps identify insights into the trends, thought processes, reasoning, and behaviors behind the initial scoring.
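The sketch below shows how the two halves of a hybrid survey can sit side by side. It assumes the standard NPS convention: ratings on a 0-10 scale, promoters scoring 9-10, passives 7-8, and detractors 0-6, with the score calculated as the percentage of promoters minus the percentage of detractors. The responses are hypothetical.

```python
def nps_segment(rating: int) -> str:
    """Map a 0-10 rating to its standard NPS segment."""
    if rating >= 9:
        return "promoter"
    if rating >= 7:
        return "passive"
    return "detractor"


def net_promoter_score(ratings):
    """Return % promoters minus % detractors, from -100 to 100."""
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if nps_segment(r) == "promoter")
    detractors = sum(1 for r in ratings if nps_segment(r) == "detractor")
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical responses: (rating, open-ended follow-up comment).
responses = [
    (10, "The mentoring program changed my career path."),
    (9, "Staff always respond quickly."),
    (7, "Good events, but hard to find parking."),
    (4, "I never heard back after donating."),
]

# Quantitative half: a single score.
score = net_promoter_score([rating for rating, _ in responses])

# Qualitative half: comments grouped by segment, ready for closer reading.
comments_by_segment = {}
for rating, comment in responses:
    comments_by_segment.setdefault(nps_segment(rating), []).append(comment)

print(score)
print(comments_by_segment["detractor"])
```

Grouping the comments by segment is one simple way to start asking why detractors scored the way they did.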

Collect and Collate Actionable Data with UpMetrics

Most nonprofits believe data is strategically important. It has been statistically proven that organizations with advanced data insights achieve their missions more efficiently. Yet, studies show that despite 90% of organizations collecting data, only 5% believe internal decision-making is data-driven. At UpMetrics, we’re here to help you change that.

UpMetrics specializes in bringing technology and humanity together to serve social good. Our unique social impact software combines quantitative and qualitative data collection methods and analysis techniques, enabling social impact organizations to gain insights, drive action, and inspire change. By reporting and analyzing quantitative and qualitative data in one intuitive platform, your impact organization gains the understanding it needs to identify the drivers of positive outcomes, achieve transparency, and increase knowledge sharing across stakeholders.

Contact us today to learn more about our nonprofit impact measurement solutions and discover the power of a partnership with UpMetrics.

Qualitative Data Analysis: Step-by-Step Guide (Manual vs. Automatic)

When we conduct qualitative research, need to explain changes in metrics, or want to understand people's opinions, we turn to qualitative data. Qualitative data is typically generated through:

- Interview transcripts

- Surveys with open-ended questions

- Contact center transcripts

- Texts and documents

- Audio and video recordings

- Observational notes

Compared to quantitative data, which captures structured information, qualitative data is unstructured and has more depth. It can answer our questions, help formulate hypotheses, and build understanding.

It's important to understand the differences between quantitative data and qualitative data. Unfortunately, analyzing qualitative data is difficult. While tools like Excel, Tableau and PowerBI crunch and visualize quantitative data with ease, there are a limited number of mainstream tools for analyzing qualitative data. The majority of qualitative data analysis still happens manually.

That said, there are two new trends that are changing this. First, there are advances in natural language processing (NLP) which is focused on understanding human language. Second, there is an explosion of user-friendly software designed for both researchers and businesses. Both help automate the qualitative data analysis process.

In this post, we want to teach you how to conduct a successful qualitative data analysis. There are two primary approaches: manual and automatic. We’ll guide you through the steps of a manual analysis, then look at what is involved in automating the process with software solutions powered by NLP.

More businesses are switching to fully automated analysis of qualitative customer data because it can be cheaper and faster while remaining comparably accurate. Primarily, businesses purchase subscriptions to feedback analytics platforms so that they can understand customer pain points and sentiment.

We’ll take you through 5 steps to conduct a successful qualitative data analysis. Within each step, we will highlight the key differences between the manual and automated approaches. Here's an overview of the steps:

The 5 steps to doing qualitative data analysis

- Gathering and collecting your qualitative data

- Connecting and organizing your qualitative data

- Coding your qualitative data

- Analyzing the qualitative data for insights

- Reporting on the insights derived from your analysis

What is Qualitative Data Analysis?

Qualitative data analysis is a process of gathering, structuring and interpreting qualitative data to understand what it represents.

Qualitative data is non-numerical and unstructured. Qualitative data generally refers to text, such as open-ended responses to survey questions or user interviews, but also includes audio, photos and video.

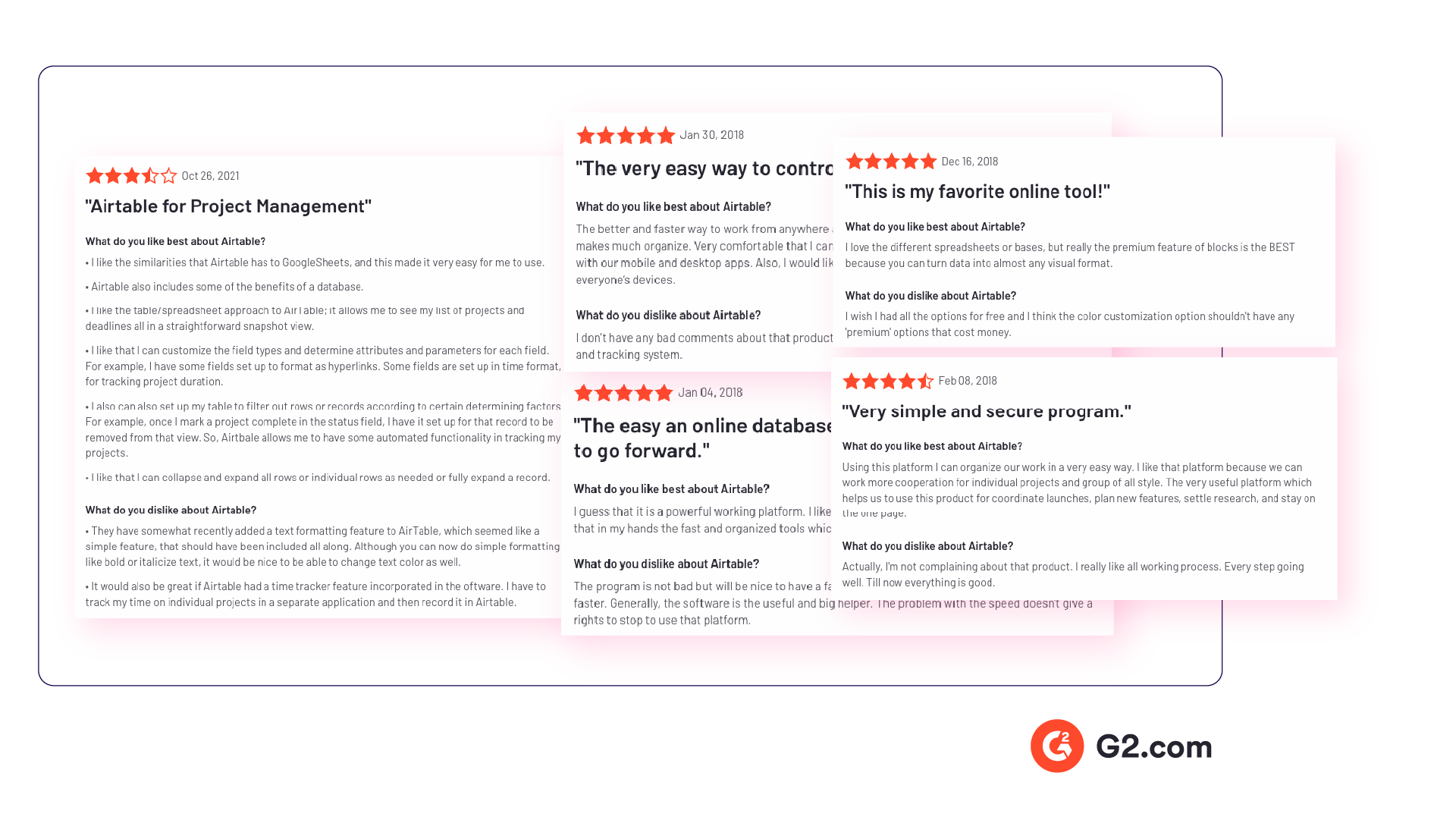

Businesses often perform qualitative data analysis on customer feedback. And within this context, qualitative data generally refers to verbatim text data collected from sources such as reviews, complaints, chat messages, support centre interactions, customer interviews, case notes or social media comments.

How is qualitative data analysis different from quantitative data analysis?

Understanding the differences between quantitative & qualitative data is important. When it comes to analyzing data, Qualitative Data Analysis serves a very different role to Quantitative Data Analysis. But what sets them apart?

Qualitative Data Analysis dives into the stories hidden in non-numerical data such as interviews, open-ended survey answers, or notes from observations. It uncovers the ‘whys’ and ‘hows’, giving a deep understanding of people’s experiences and emotions.

Quantitative Data Analysis, on the other hand, deals with numerical data, using statistics to measure differences, identify preferred options, and pinpoint root causes of issues. It steps back to address questions like "how many" or "what percentage" to offer broad insights we can apply to larger groups.

In short, Qualitative Data Analysis is like a microscope, helping us understand specific detail. Quantitative Data Analysis is like the telescope, giving us a broader perspective. Both are important, working together to decode data for different objectives.

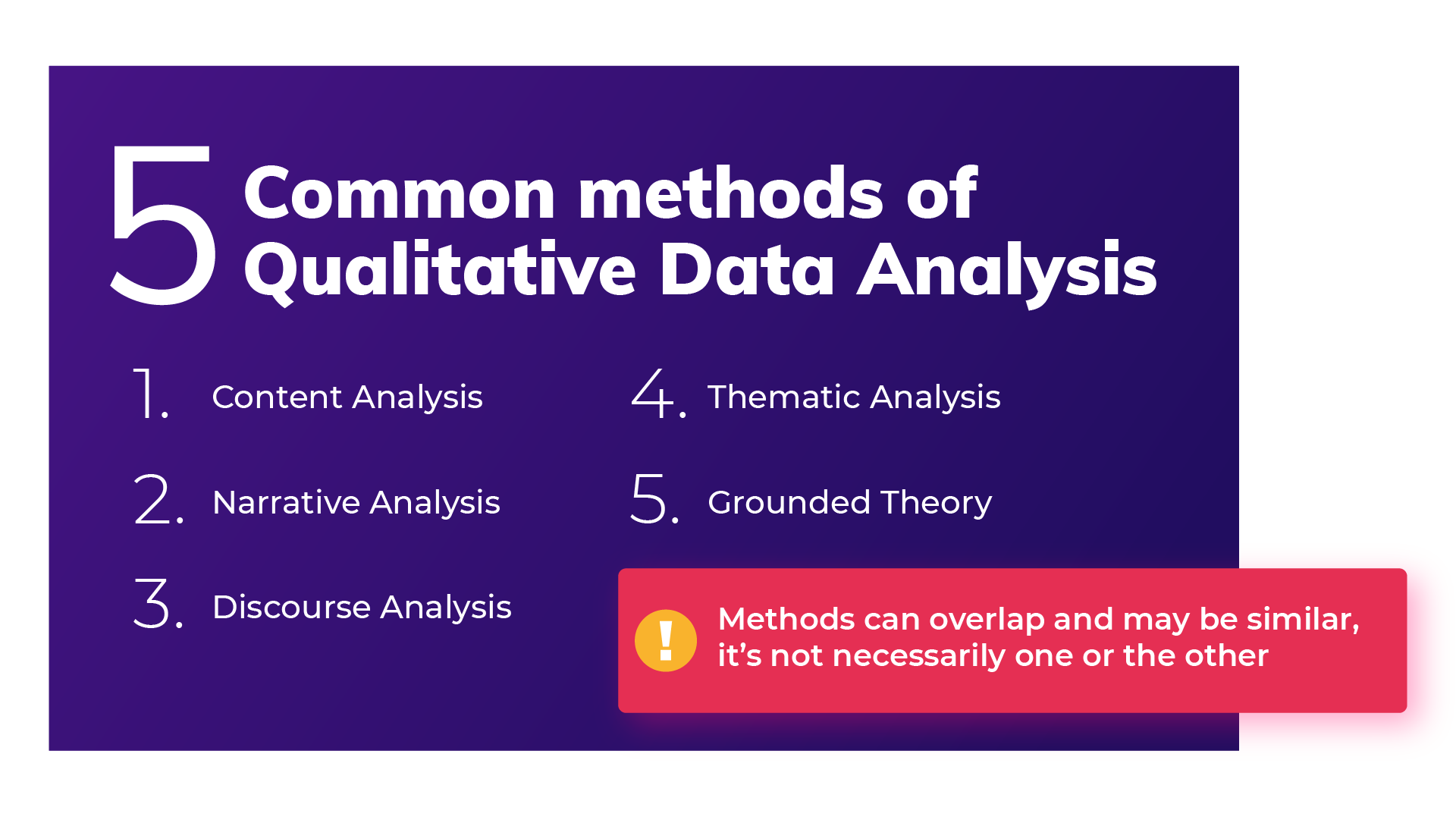

Qualitative Data Analysis methods

Once all the data has been captured, there are a variety of analysis techniques available and the choice is determined by your specific research objectives and the kind of data you’ve gathered. Common qualitative data analysis methods include:

Content Analysis

This is a popular approach to qualitative data analysis, and other qualitative analysis techniques, such as thematic analysis, may fit within its broad scope. Content analysis identifies the patterns that emerge from text by grouping content into words, concepts, and themes. It is also useful for quantifying the relationships between the grouped content. The Columbia School of Public Health has a detailed breakdown of content analysis.
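One way to picture grouping content into concepts and quantifying the relationships between them is to count how often concepts appear, and how often they appear together in the same response. The Python sketch below assumes a small, hypothetical concept dictionary and sample responses; it is an illustration of the idea rather than a complete content analysis.

```python
from collections import Counter
from itertools import combinations

# Hypothetical concept dictionary: concept -> words that signal it.
CONCEPTS = {
    "pricing": {"price", "cost", "expensive"},
    "support": {"support", "agent", "helpdesk"},
    "usability": {"easy", "confusing", "interface"},
}


def concepts_in(text):
    """Return the sorted list of concepts whose signal words appear in the text."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    return sorted(c for c, vocab in CONCEPTS.items() if words & vocab)


# Hypothetical responses.
responses = [
    "The interface is confusing and support took days.",
    "Too expensive for what it does.",
    "Support agent was friendly, but the price keeps rising.",
    "Easy to use, and support was quick.",
]

concept_counts = Counter()
pair_counts = Counter()
for response in responses:
    found = concepts_in(response)
    concept_counts.update(found)
    # Each pair of concepts found in the same response counts as one co-occurrence.
    pair_counts.update(combinations(found, 2))

print(concept_counts)
print(pair_counts)
```

Here a high co-occurrence count between two concepts is only a prompt to go back and read the responses that mention both; it does not explain the relationship on its own.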

Narrative Analysis

Narrative analysis focuses on the stories people tell and the language they use to make sense of them. It is particularly useful in qualitative research methods where customer stories are used to get a deep understanding of customers’ perspectives on a specific issue. A narrative analysis might enable us to summarize the outcomes of a focused case study.

Discourse Analysis

Discourse analysis is used to get a thorough understanding of the political, cultural and power dynamics that exist in specific situations. The focus of discourse analysis here is on the way people express themselves in different social contexts. Discourse analysis is commonly used by brand strategists who hope to understand why a group of people feel the way they do about a brand or product.

Thematic Analysis

Thematic analysis is used to deduce the meaning behind the words people use. This is accomplished by discovering repeating themes in text. These meaningful themes reveal key insights into data and can be quantified, particularly when paired with sentiment analysis. Often, the outcome of thematic analysis is a code frame that captures themes in terms of codes, also called categories. So the process of thematic analysis is also referred to as “coding”. A common use-case for thematic analysis in companies is analysis of customer feedback.
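To show what a code frame might look like in practice, here is a minimal Python sketch. The codes, cue words, and feedback comments are all hypothetical, and the matching is simple substring search, which stands in for the judgment a human coder or dedicated software would apply.

```python
from collections import Counter

# Hypothetical code frame: code (theme) -> cue substrings that suggest it.
CODE_FRAME = {
    "delivery speed": ["late", "delay", "slow shipping"],
    "staff friendliness": ["friendly", "rude", "polite"],
    "app stability": ["crash", "bug", "freez"],
}


def apply_codes(comment, code_frame):
    """Return every code whose cues appear in the comment (substring match)."""
    text = comment.lower()
    # Substring matching is crude: "late" would also match "later".
    return [code for code, cues in code_frame.items()
            if any(cue in text for cue in cues)]


# Hypothetical customer feedback.
feedback = [
    "My order arrived late, but the driver was friendly.",
    "The app keeps crashing on checkout.",
    "Checkout asked for my address twice.",
]

coded = {comment: apply_codes(comment, CODE_FRAME) for comment in feedback}
theme_counts = Counter(code for codes in coded.values() for code in codes)

# Comments with no code are candidates for a new code in the frame.
uncoded = [comment for comment, codes in coded.items() if not codes]

print(theme_counts)
print(uncoded)
```

The uncoded list matters as much as the counts: comments that fit no existing code are often where a code frame needs to grow.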

Grounded Theory

Grounded theory is a useful approach when little is known about a subject. Grounded theory starts by formulating a theory around a single data case. This means that the theory is “grounded” in actual data rather than being entirely speculative. Then additional cases can be examined to see if they are relevant and can add to the original grounded theory.

Challenges of Qualitative Data Analysis

While Qualitative Data Analysis offers rich insights, it comes with its challenges. Each QDA method has its own hurdles. Let’s take a look at the challenges researchers and analysts might face, depending on the chosen method.

- Time and Effort (Narrative Analysis): Narrative analysis, which focuses on personal stories, demands patience. Sifting through lengthy narratives to find meaningful insights can be time-consuming and requires dedicated effort.

- Being Objective (Grounded Theory): Grounded theory, which builds theories from data, faces the challenge of personal bias. Staying objective while interpreting data is crucial to ensure that conclusions are rooted in the data itself.

- Complexity (Thematic Analysis): Thematic analysis involves identifying themes within data, a process that can be intricate. Categorizing and understanding themes can be complex, especially when each piece of data varies in context and structure. Thematic Analysis software can simplify this process.

- Generalizing Findings (Narrative Analysis): Narrative analysis, dealing with individual stories, makes drawing broad conclusions challenging. Extending findings from a single narrative to a broader context requires careful consideration.

- Managing Data (Thematic Analysis): Thematic analysis involves organizing and managing vast amounts of unstructured data, like interview transcripts. Managing this can be a hefty task, requiring effective data management strategies.

- Skill Level (Grounded Theory): Grounded theory demands specific skills to build theories from the ground up. Finding or training analysts with these skills poses a challenge, requiring investment in building expertise.

Benefits of qualitative data analysis

Qualitative Data Analysis (QDA) is like a versatile toolkit, offering a tailored approach to understanding your data. The benefits it offers are as diverse as the methods. Let’s explore why choosing the right method matters.

- Tailored Methods for Specific Needs: QDA isn't one-size-fits-all. Depending on your research objectives and the type of data at hand, different methods offer unique benefits. If you want emotive customer stories, narrative analysis paints a strong picture. When you want to explain a score, thematic analysis reveals insightful patterns.

- Flexibility with Thematic Analysis: Thematic analysis is like a chameleon in the toolkit of QDA. It adapts well to different types of data and research objectives, making it a top choice for any qualitative analysis.

- Deeper Understanding, Better Products: QDA helps you dive into people's thoughts and feelings. This deep understanding helps you build products and services that truly match what people want, ensuring satisfied customers.

- Finding the Unexpected: Qualitative data often reveals surprises that we miss in quantitative data. QDA offers us new ideas and perspectives that numbers alone would not surface.

- Building Effective Strategies: Insights from QDA are like strategic guides. They help businesses in crafting plans that match people’s desires.

- Creating Genuine Connections: Understanding people’s experiences lets businesses connect on a real level. This genuine connection helps build trust and loyalty, priceless for any business.

How to do Qualitative Data Analysis: 5 steps

Now we are going to show how you can do your own qualitative data analysis. We will guide you through this process step by step. As mentioned earlier, you will learn how to do qualitative data analysis manually, and also automatically using modern qualitative data and thematic analysis software.

To get the best value from the research and analysis process, it’s important to be clear about the nature and scope of the question that’s being researched. This will help you select the data collection channels that are most likely to help you answer your question.

Whether you are a business looking to understand customer sentiment or an academic surveying a school, your approach to qualitative data analysis will differ.

Once you’re clear, there’s a sequence to follow. And, though there are differences in the manual and automatic approaches, the process steps are mostly the same.

The use case for our step-by-step guide is a company looking to collect and analyze customer feedback in order to improve customer experience. By analyzing the customer feedback, the company derives insights about its business and its customers. You can follow these same steps regardless of the nature of your research. Let’s get started.

Step 1: Gather your qualitative data and conduct research

The first step of qualitative research is data collection. Put simply, data collection means gathering all of your data for analysis. Commonly, qualitative data is spread across various sources.

Classic methods of gathering qualitative data

Most companies use traditional methods for gathering qualitative data: conducting interviews with research participants, running surveys, and running focus groups. This data is typically stored in documents, CRMs, databases and knowledge bases. It’s important to examine which data is available and needs to be included in your research project, based on its scope.

Using your existing qualitative feedback

As it becomes easier for customers to engage across a range of different channels, companies are gathering increasingly large amounts of both solicited and unsolicited qualitative feedback.

Most organizations have now invested in Voice of Customer programs and support ticketing systems, and collect feedback through chatbot and support conversations, emails, and even customer Slack chats.

These new channels provide companies with new ways of getting feedback, and also allow the collection of unstructured feedback data at scale.

The great thing about this data is that it contains a wealth of valuable insights and that it’s already there! When you have a new question about user behavior or your customers, you don’t need to create a new research study or set up a focus group. You can find most answers in the data you already have.

Typically, this data is stored in third-party solutions or a central database, but there are ways to export it or connect to a feedback analysis solution through integrations or an API.

Utilize untapped qualitative data channels

There are many online qualitative data sources you may not have considered. For example, you can find useful qualitative data in social media channels like Twitter or Facebook. Online forums, review sites, and online communities such as Discourse or Reddit also contain valuable data about your customers, or research questions.

If you are considering performing a qualitative benchmark analysis against competitors - the internet is your best friend. Gathering feedback in competitor reviews on sites like Trustpilot, G2, Capterra, Better Business Bureau or on app stores is a great way to perform a competitor benchmark analysis.

Customer feedback analysis software often has integrations into social media and review sites, or you could use a solution like DataMiner to scrape the reviews.

Step 2: Connect & organize all your qualitative data

Now you have all this qualitative data, but there’s a problem: the data is unstructured. Before feedback can be analyzed and assigned any value, it needs to be organized in a single place. Why is this important? Consistency!

If all data is easily accessible in one place and analyzed in a consistent manner, you will have an easier time summarizing and making decisions based on this data.
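As a minimal sketch of what "one place, one consistent format" can mean in practice, the Python below maps feedback from two sources onto a single record shape. The field names (`submitted_at`, `comment`, `created`, `body`) and the sample rows are assumptions made up for illustration; real exports will differ.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class FeedbackRecord:
    source: str      # where the feedback came from, e.g. "survey"
    received: date   # when it was received
    text: str        # the verbatim comment


def from_survey(row: dict) -> FeedbackRecord:
    # Hypothetical survey export with "submitted_at" and "comment" fields.
    return FeedbackRecord(
        "survey", date.fromisoformat(row["submitted_at"]), row["comment"].strip()
    )


def from_ticket(row: dict) -> FeedbackRecord:
    # Hypothetical support ticket export with "created" and "body" fields.
    return FeedbackRecord(
        "support_ticket", date.fromisoformat(row["created"]), row["body"].strip()
    )


# Invented sample rows, one per source.
survey_rows = [{"submitted_at": "2024-03-01", "comment": " Checkout was confusing. "}]
ticket_rows = [{"created": "2024-03-02", "body": "I waited two days for a reply."}]

# Every source ends up in the same list, in the same shape, ordered by date.
records = [from_survey(r) for r in survey_rows] + [from_ticket(r) for r in ticket_rows]
records.sort(key=lambda r: r.received)

for r in records:
    print(r.source, r.received.isoformat(), r.text)
```

Once every source is converted to the same shape, the later coding and counting steps can treat all feedback identically.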

The manual approach to organizing your data

The classic method of structuring qualitative data is to plot all the raw data you’ve gathered into a spreadsheet.

Typically, research and support teams would share large Excel sheets and different business units would make sense of the qualitative feedback data on their own. Each team collects and organizes the data in a way that best suits them, which means the feedback tends to be kept in separate silos.

An alternative and more robust solution is to store feedback in a central database, like Snowflake or Amazon Redshift.

Keep in mind that when you organize your data in this way, you are often preparing it to be imported into other software. If you go the route of a database, you would need to use an API to push the feedback into third-party software.

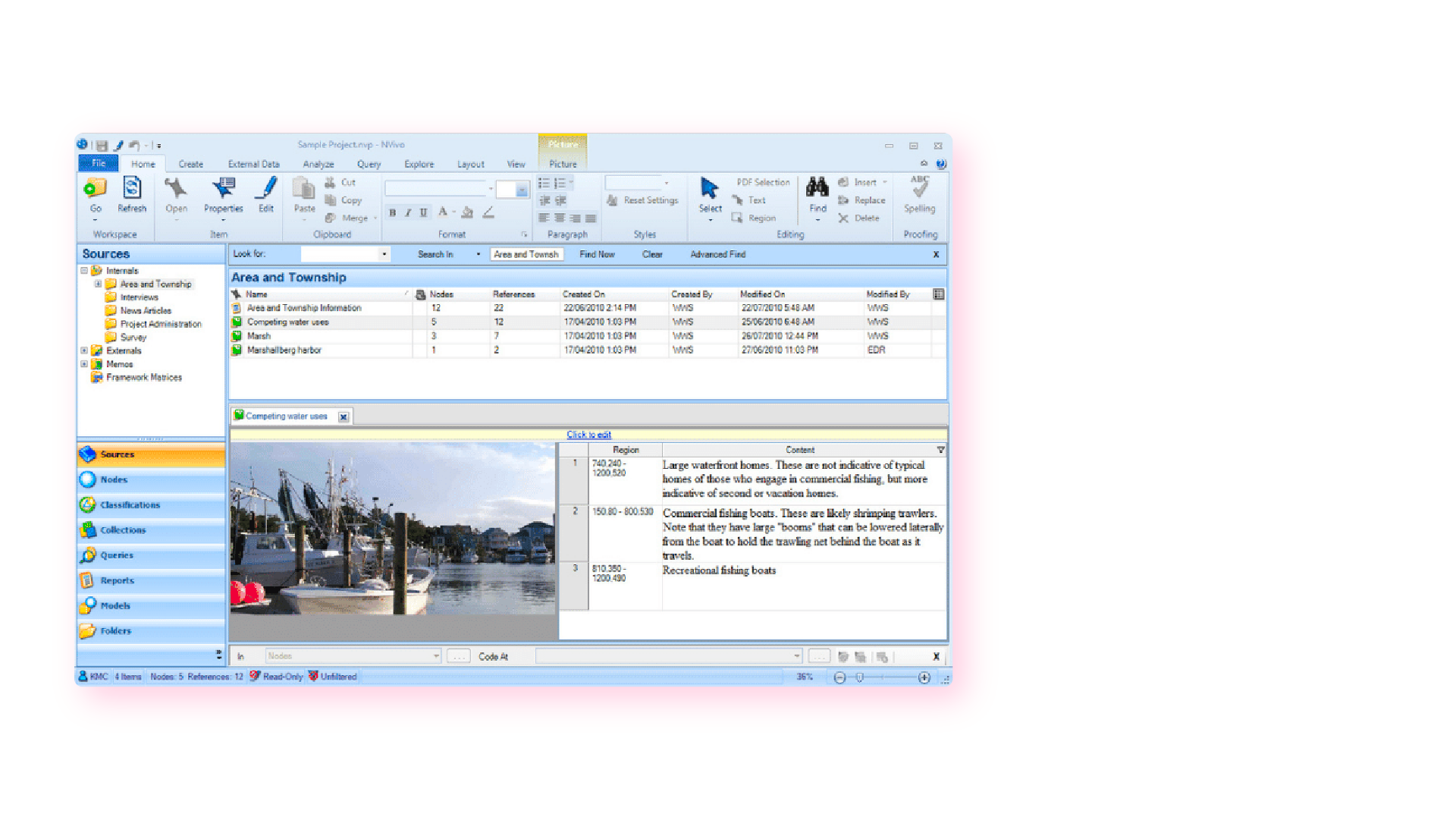

Computer-assisted qualitative data analysis software (CAQDAS)

Traditionally within the manual analysis approach (but not always), qualitative data is imported into CAQDAS software for coding.

In the early 2000s, CAQDAS software was popularised by developers such as ATLAS.ti, NVivo and MAXQDA and eagerly adopted by researchers to assist with the organizing and coding of data.

The benefits of using computer-assisted qualitative data analysis software:

- Assists in the organizing of your data

- Opens you up to exploring different interpretations of your data analysis

- Allows you to share your dataset more easily and collaborate as a group (which also allows for secondary analysis)

However, you still need to code the data, uncover the themes and do the analysis yourself, so it is still a manual approach.

Organizing your qualitative data in a feedback repository

Another solution to organizing your qualitative data is to upload it into a feedback repository where it can be unified with your other data, and easily searched and tagged. There are a number of software solutions that act as a central repository for your qualitative research data. Here are a couple of solutions that you could investigate:

- Dovetail: Dovetail is a research repository with a focus on video and audio transcriptions. You can tag your transcriptions within the platform for theme analysis. You can also upload your other qualitative data such as research reports, survey responses, support conversations, and customer interviews. Dovetail acts as a single, searchable repository and makes it easier to collaborate with other people around your qualitative research.

- EnjoyHQ: EnjoyHQ is another research repository with similar functionality to Dovetail. It boasts a more sophisticated search engine, but it has a higher starting subscription cost.

Organizing your qualitative data in a feedback analytics platform

If you have a lot of qualitative customer or employee feedback, from the likes of customer surveys or employee surveys, you will benefit from a feedback analytics platform. A feedback analytics platform is software that automates the process of both sentiment analysis and thematic analysis. Companies use the integrations offered by these platforms to directly tap into their qualitative data sources (review sites, social media, survey responses, etc.). The data collected is then organized and analyzed consistently within the platform.

If you have data prepared in a spreadsheet, it can also be imported into feedback analytics platforms.

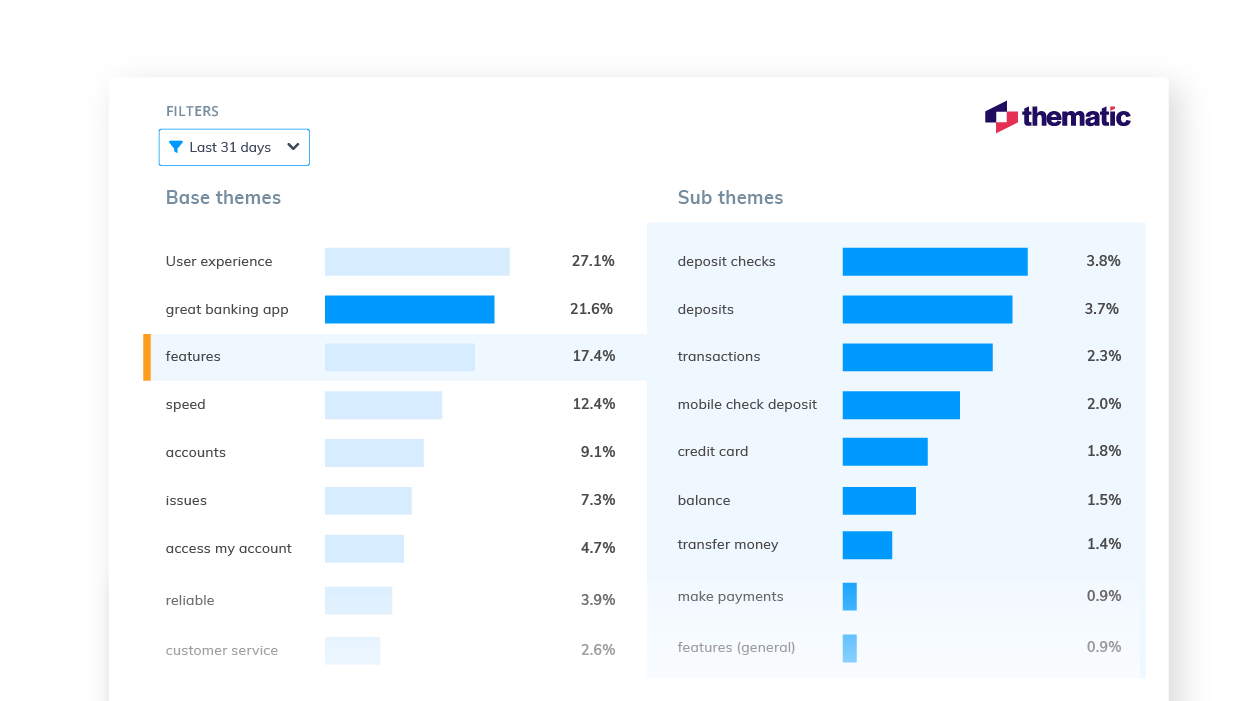

Once all this rich data has been organized within the feedback analytics platform, it is ready to be coded and themed, within the same platform. Thematic is a feedback analytics platform that offers one of the largest libraries of integrations with qualitative data sources.

Step 3: Coding your qualitative data

Your feedback data is now organized in one place, whether in a spreadsheet, CAQDAS, a feedback repository or a feedback analytics platform. The next step is to code your feedback data so that you can extract meaningful insights from it in step 4.

Coding is the process of labelling and organizing your data in such a way that you can then identify themes in the data, and the relationships between these themes.

To simplify the coding process, you will take small samples of your customer feedback data, come up with a set of codes, or categories capturing themes, and label each piece of feedback, systematically, for patterns and meaning. Then you will take a larger sample of data, revising and refining the codes for greater accuracy and consistency as you go.

If you choose to use a feedback analytics platform, much of this process will be automated and accomplished for you.

The terms to describe different categories of meaning (‘theme’, ‘code’, ‘tag’, ‘category’ etc) can be confusing as they are often used interchangeably. For clarity, this article will use the term ‘code’.

To code means to identify key words or phrases and assign them to a category of meaning. “I really hate the customer service of this computer software company” would be coded as “poor customer service”.
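To make the idea concrete, here is a deliberately naive Python sketch of assigning a code by keyword matching. The keyword lists and the `assign_codes` helper are hypothetical; a human coder (or thematic analysis software) reads for meaning rather than matching substrings, so treat this only as an illustration of what "assigning a category of meaning" looks like as data.

```python
# Hypothetical cue words and topic keywords, chosen for this example only.
NEGATIVE_CUES = ("hate", "terrible", "awful", "slow", "rude")
TOPIC_KEYWORDS = {
    "customer service": ("customer service", "support team", "help desk"),
}


def assign_codes(text):
    """Return the codes whose keywords appear in the text.

    A topic mentioned together with a negative cue is coded as "poor <topic>";
    otherwise the neutral topic name is used.
    """
    lowered = text.lower()
    negative = any(cue in lowered for cue in NEGATIVE_CUES)
    codes = []
    for topic, keywords in TOPIC_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            codes.append(f"poor {topic}" if negative else topic)
    return codes


feedback = "I really hate the customer service of this computer software company"
print(assign_codes(feedback))
```

Substring matching like this will miss sarcasm, negation ("not slow at all") and synonyms, which is exactly why codes are usually revised over several passes.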

How to manually code your qualitative data

- Decide whether you will use deductive or inductive coding. Deductive coding is when you create a list of predefined codes, and then assign them to the qualitative data. Inductive coding is the opposite: you create codes based on the data itself. Codes arise directly from the data and you label them as you go. You need to weigh up the pros and cons of each coding method and select the most appropriate.

- Read through the feedback data to get a broad sense of what it reveals. Now it’s time to start assigning your first set of codes to statements and sections of text.

- Keep repeating the previous step (reading the feedback and assigning codes), adding new codes and revising the code description as often as necessary. Once it has all been coded, go through everything again, to be sure there are no inconsistencies and that nothing has been overlooked.

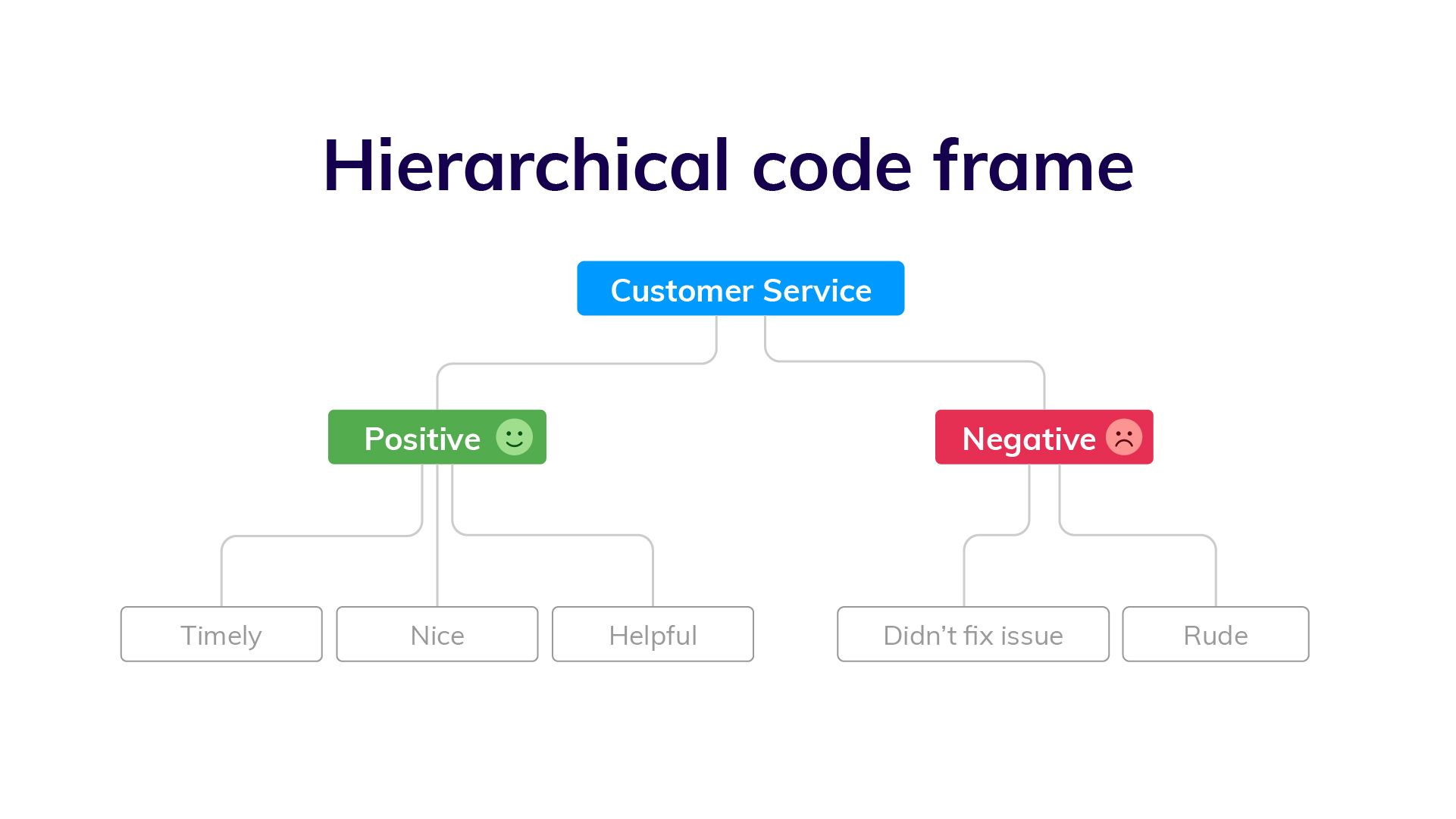

- Create a code frame to group your codes. The code frame is the organizational structure of all your codes. There are two commonly used types of code frame: flat and hierarchical. A hierarchical code frame will make it easier for you to derive insights from your analysis.

- Based on the number of times a particular code occurs, you can now see the common themes in your feedback data. This is insightful! If ‘bad customer service’ is a common code, it’s time to take action.
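The last step above, counting how often each code occurs, can be sketched in a few lines of Python. The coded responses here are invented sample data; each inner list holds the codes assigned to one piece of feedback.

```python
from collections import Counter

# Invented sample: the codes assigned to each of four pieces of feedback.
coded_feedback = [
    ["bad customer service"],
    ["bad customer service", "pricing"],
    ["ease of use"],
    ["bad customer service"],
]

# Flatten the per-response lists and count every code occurrence.
code_counts = Counter(code for codes in coded_feedback for code in codes)
total = len(coded_feedback)

# Most frequent codes first, with the share of responses mentioning each.
for code, count in code_counts.most_common():
    print(f"{code}: {count} of {total} responses ({count / total:.0%})")
```

The same count is what a spreadsheet pivot table would give you; the point is simply that themes become visible once codes are tallied.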

We have a detailed guide dedicated to manually coding your qualitative data.

Using software to speed up manual coding of qualitative data

An Excel spreadsheet is still a popular method for coding. But various software solutions can help speed up this process. Here are some examples.

- CAQDAS / NVivo - CAQDAS software has built-in functionality that allows you to code text within their software. You may find the interface the software offers easier for managing codes than a spreadsheet.

- Dovetail/EnjoyHQ - You can tag transcripts and other textual data within these solutions. As they are also repositories you may find it simpler to keep the coding in one platform.

- IBM SPSS - SPSS is a statistical analysis software that may make coding easier than in a spreadsheet.

- Ascribe - Ascribe’s ‘Coder’ is a coding management system. Its user interface will make it easier for you to manage your codes.

Automating the qualitative coding process using thematic analysis software

In solutions which speed up the manual coding process, you still have to come up with valid codes and often apply codes manually to pieces of feedback. But there are also solutions that automate both the discovery and the application of codes.

Advances in machine learning have now made it possible to read, code and structure qualitative data automatically. This type of automated coding is offered by thematic analysis software.

Automation makes it far simpler and faster to code the feedback and group it into themes. By incorporating natural language processing (NLP) into the software, the AI looks across sentences and phrases to identify common themes and meaningful statements. Some automated solutions detect repeating patterns and assign codes to them, others make you train the AI by providing examples. You could say that the AI learns the meaning of the feedback on its own.
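As a rough intuition for "detecting repeating patterns", the Python sketch below counts two-word phrases that recur across comments and proposes them as candidate codes. This is a toy stand-in, not how Thematic or any other product works; real thematic analysis software uses far richer NLP. The stopword list, the sample comments and the threshold of two comments are all assumptions.

```python
import re
from collections import Counter

# Small, hypothetical stopword list; real tools use much larger ones.
STOPWORDS = {"the", "a", "an", "is", "was", "to", "and", "of", "i", "it", "my", "for"}


def bigrams(text):
    """Return adjacent word pairs after lowercasing and dropping stopwords."""
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]
    return list(zip(words, words[1:]))


comments = [
    "The delivery time was too long",
    "Delivery time is getting worse",
    "Great app, but delivery time is slow",
]

# Count each phrase at most once per comment, then keep phrases seen in 2+ comments.
phrase_counts = Counter(bg for comment in comments for bg in set(bigrams(comment)))
candidates = [" ".join(bg) for bg, n in phrase_counts.most_common() if n >= 2]
print(candidates)
```

A person would still need to review such candidates, merge near-duplicates and decide which ones deserve to become codes.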

Thematic automates the coding of qualitative feedback regardless of source. There’s no need to set up themes or categories in advance. Simply upload your data and wait a few minutes. You can also manually edit the codes to further refine their accuracy. Experiments conducted indicate that Thematic’s automated coding is just as accurate as manual coding.

Paired with sentiment analysis and advanced text analytics - these automated solutions become powerful for deriving quality business or research insights.

You could also build your own, if you have the resources!

The key benefits of using an automated coding solution

Automated analysis can often be set up fast and there’s the potential to uncover things that would never have been revealed if you had given the software a prescribed list of themes to look for.

Because the model applies a consistent rule to the data, it captures phrases or statements that a human eye might have missed.

Complete and consistent analysis of customer feedback enables more meaningful findings, which leads us into step 4.

Step 4: Analyze your data: Find meaningful insights

Now we are going to analyze our data to find insights. This is where we start to answer our research questions. Keep in mind that step 4 and step 5 (tell the story) have some overlap. This is because creating visualizations is part of both the analysis process and reporting.

The task of uncovering insights is to scour through the codes that emerge from the data and draw meaningful correlations from them. It is also about making sure each insight is distinct and has enough data to support it.

Part of the analysis is to establish how much each code relates to different demographics and customer profiles, and identify whether there’s any relationship between these data points.

Manually create sub-codes to improve the quality of insights

If your code frame only has one level, you may find that your codes are too broad to be able to extract meaningful insights. This is where it is valuable to create sub-codes to your primary codes. This process is sometimes referred to as meta coding.

Note: If you take an inductive coding approach, you can create sub-codes as you are reading through your feedback data and coding it.

While time-consuming, this exercise will improve the quality of your analysis. Here is an example of what sub-codes could look like.

You need to carefully read your qualitative data to create quality sub-codes. But as you can see, the depth of analysis is greatly improved. By calculating the frequency of these sub-codes you can get insight into which customer service problems you can immediately address.
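A hierarchical code frame with sub-code frequencies could be represented as follows. This is a sketch in Python with invented labels: each piece of coded feedback is stored as a (primary code, sub-code) pair and tallied at both levels.

```python
from collections import defaultdict

# Invented (primary code, sub-code) pairs, one per coded statement.
coded = [
    ("customer service", "long wait times"),
    ("customer service", "unhelpful answers"),
    ("customer service", "long wait times"),
    ("pricing", "unexpected fees"),
]

# frame[primary][sub] holds how often each sub-code occurs under its primary code.
frame = defaultdict(lambda: defaultdict(int))
for primary, sub in coded:
    frame[primary][sub] += 1

for primary, subs in frame.items():
    print(primary, sum(subs.values()))
    # List sub-codes under each primary code, most frequent first.
    for sub, n in sorted(subs.items(), key=lambda kv: -kv[1]):
        print("  ", sub, n)
```

With this structure, a broad code such as "customer service" breaks down into specific problems that can be prioritized by frequency.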

Correlate the frequency of codes to customer segments

Many businesses use customer segmentation, and you may have your own respondent segments that you can apply to your qualitative analysis. Segmentation is the practice of dividing customers or research respondents into subgroups.

Segments can be based on:

- Demographic

- And any other data type that you care to segment by

It is particularly useful to see the occurrence of codes within your segments. If one of your customer segments is considered unimportant to your business, but they are the cause of nearly all customer service complaints, it may be in your best interest to focus attention elsewhere. This is a useful insight!
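One simple way to see the occurrence of codes within segments is a cross-tabulation. The Python sketch below uses invented segments and codes, and a hypothetical `share_of_code` helper that reports what fraction of a code's mentions come from one segment.

```python
from collections import Counter

# Invented sample responses, each with a segment and its assigned codes.
responses = [
    {"segment": "enterprise", "codes": ["pricing"]},
    {"segment": "free tier", "codes": ["bad customer service"]},
    {"segment": "free tier", "codes": ["bad customer service", "pricing"]},
    {"segment": "enterprise", "codes": ["ease of use"]},
]

# Count occurrences of every (segment, code) combination.
by_segment = Counter((r["segment"], code) for r in responses for code in r["codes"])


def share_of_code(code, segment):
    """Fraction of all mentions of `code` that come from `segment`."""
    total = sum(n for (_, c), n in by_segment.items() if c == code)
    return by_segment[(segment, code)] / total if total else 0.0


print(share_of_code("bad customer service", "free tier"))
print(share_of_code("pricing", "enterprise"))
```

In this made-up sample, every customer service complaint comes from one segment, which is the kind of pattern the paragraph above describes.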

Manually visualizing coded qualitative data

There are formulas you can use to visualize key insights in your data. The formulas we will suggest are imperative if you are measuring a score alongside your feedback.

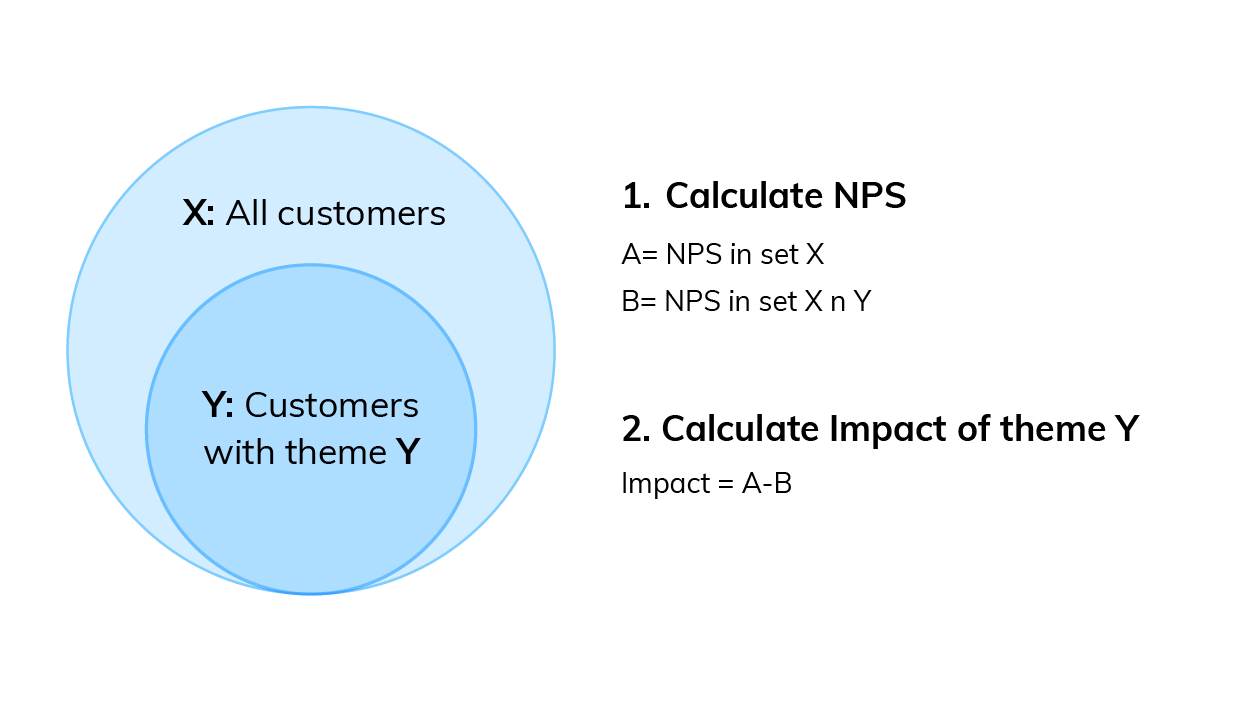

If you are collecting a metric alongside your qualitative data, this is a key visualization. Impact answers the question: “What’s the impact of a code on my overall score?” Using Net Promoter Score (NPS) as an example, first you need to:

- A: Calculate overall NPS

- B: Calculate NPS in the subset of responses that do not contain that code

- Subtract B from A

The result, A minus B, is the impact of that code on NPS.

You can then visualize this data using a bar chart.
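The impact calculation described above can be sketched in Python. The sketch assumes the standard NPS definition (percentage of promoters scoring 9 or 10 minus percentage of detractors scoring 0 to 6); the sample responses and the `code_impact` helper name are invented for illustration.

```python
def nps(scores):
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one score")
    promoters = sum(s >= 9 for s in scores)
    detractors = sum(s <= 6 for s in scores)
    return 100 * (promoters - detractors) / len(scores)


# Invented sample: (NPS score, codes assigned to the accompanying comment).
responses = [
    (10, []),
    (9, []),
    (3, ["bad customer service"]),
    (2, ["bad customer service"]),
    (8, ["pricing"]),
]


def code_impact(responses, code):
    """Overall NPS (A) minus NPS of responses without the code (B)."""
    overall = nps([score for score, _ in responses])
    without = nps([score for score, codes in responses if code not in codes])
    return overall - without


print(round(code_impact(responses, "bad customer service"), 1))
```

A negative result means the score would be higher without the responses carrying that code, so the code is dragging NPS down. Note that `nps` raises an error if every response contains the code, because the "without" subset is then empty.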

You can download our CX toolkit - it includes a template to recreate this.

Trends over time

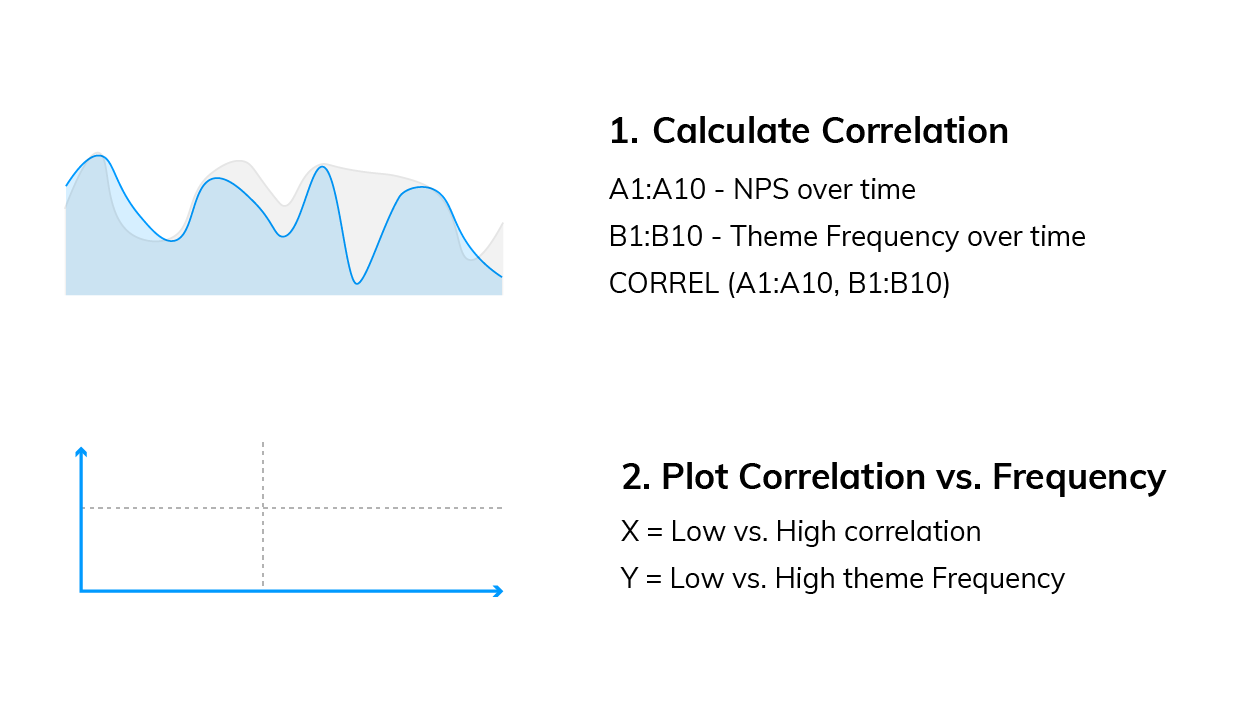

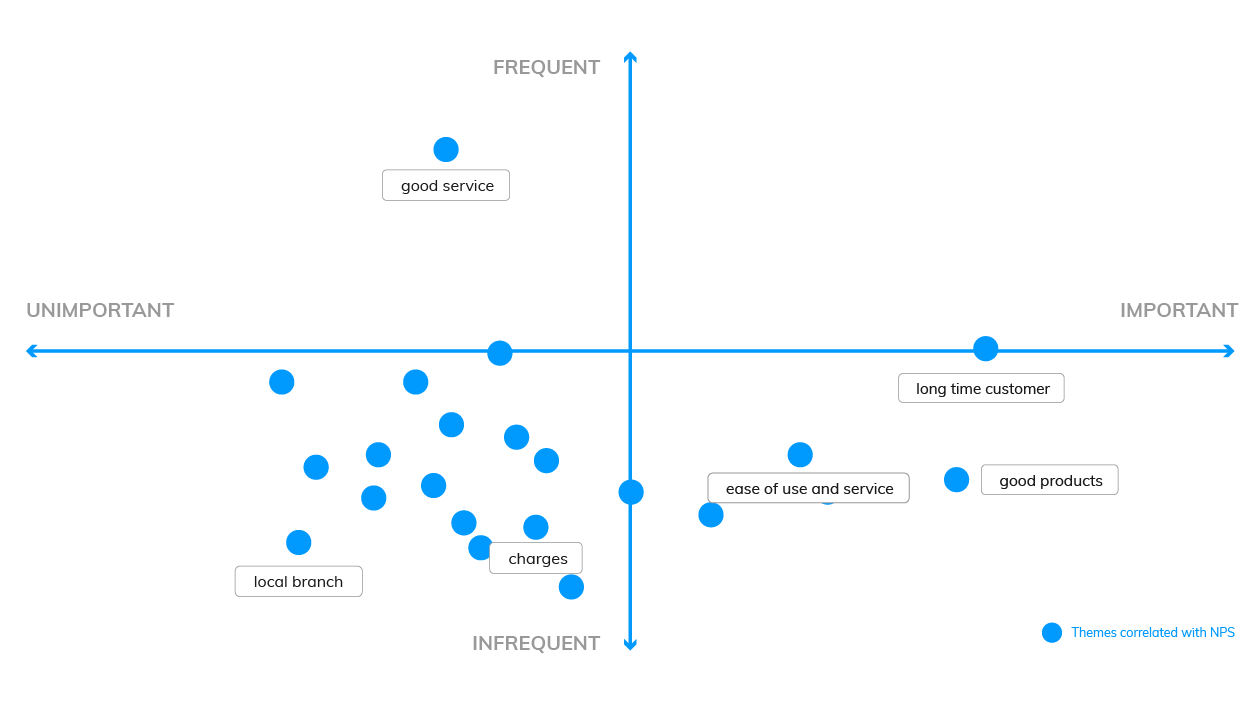

This analysis can help you answer questions like: “Which codes are linked to decreases or increases in my score over time?”

We need to compare two sequences of numbers: NPS over time and code frequency over time. Using Excel, calculate the correlation between the two sequences, which can be either positive (the more often the code occurs, the higher the NPS) or negative (the more often the code occurs, the lower the NPS).
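If you prefer a script to a spreadsheet, the same correlation can be computed with a few lines of Python. This sketch implements the Pearson correlation coefficient, which is what Excel's CORREL function returns; the monthly figures are invented sample data.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("sequences must have the same length (at least 2)")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return covariance / (spread_x * spread_y)


# Invented sample: four months of NPS and of mentions of one code.
monthly_nps = [40, 35, 30, 25]
code_frequency = [5, 10, 15, 20]

r = pearson(code_frequency, monthly_nps)
print(f"correlation: {r:.2f}")
```

Here the code is mentioned more often each month while NPS falls, so the correlation is strongly negative. Correlation alone does not prove the code caused the drop, but it tells you where to look first.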

Now you need to plot code frequency against the absolute value of code correlation with NPS. Here is the formula:

The visualization could look like this:

These are two examples, but there are more. For a third manual formula, and to learn why word clouds are not an insightful form of analysis, read our visualizations article.

Using a text analytics solution to automate analysis

Automated text analytics solutions enable codes and sub-codes to be pulled out of the data automatically. This makes it far faster and easier to identify what’s driving negative or positive results, to pick up emerging trends and to find all manner of rich insights in the data.

Another benefit of AI-driven text analytics software is its built-in capability for sentiment analysis, which provides the emotive context behind your feedback and other qualitative text data.

Thematic provides text analytics that goes further by allowing users to apply their expertise on business context to edit or augment the AI-generated outputs.

Since the move away from manual research is generally about reducing the human element, adding human input to the technology might sound counter-intuitive. However, this is mostly to make sure important business nuances in the feedback aren’t missed during coding. The result is a higher accuracy of analysis. This is sometimes referred to as augmented intelligence.

Step 5: Report on your data: Tell the story

The last step of analyzing your qualitative data is to report on it, to tell the story. At this point, the codes are fully developed and the focus is on communicating the narrative to the audience.

A coherent outline of the qualitative research, the findings and the insights is vital for stakeholders to discuss and debate before they can devise a meaningful course of action.

Creating graphs and reporting in PowerPoint

Typically, qualitative researchers take the tried and tested approach of distilling their report into a series of charts, tables and other visuals which are woven into a narrative for presentation in PowerPoint.

Using visualization software for reporting

With data transformation and APIs, the analyzed data can be shared with data visualisation software such as Power BI, Tableau, Google Data Studio or Looker. Power BI and Tableau are among the most preferred options.

Visualizing your insights inside a feedback analytics platform

Feedback analytics platforms, like Thematic, incorporate visualisation tools that intuitively turn key data and insights into graphs. This removes the time-consuming work of constructing charts to visually identify patterns and creates more time to focus on building a compelling narrative that highlights the insights, in bite-size chunks, for executive teams to review.

Using a feedback analytics platform with visualization tools means you don’t have to use a separate product for visualizations. You can export graphs into PowerPoint straight from the platform.

Conclusion - Manual or Automated?

There are those who remain deeply invested in the manual approach - because it’s familiar, because they’re reluctant to spend money and time learning new software, or because they’ve been burned by the overpromises of AI.

For projects that involve small datasets, manual analysis makes sense, for example when the objective is simply to answer a simple question like “Do customers prefer X concepts to Y?”. If the findings are being extracted from a small set of focus groups and interviews, sometimes it’s easier to just read them.

However, as new generations come into the workplace, it’s technology-driven solutions that feel more comfortable and practical. And the merits are undeniable. Especially if the objective is to go deeper and understand the ‘why’ behind customers’ preference for X or Y. And even more especially if time and money are considerations.

The ability to collect a free flow of qualitative feedback data at the same time as the metric means AI can cost-effectively scan, crunch, score and analyze a ton of feedback from one system in one go. And time-intensive processes like focus groups, or coding, that used to take weeks, can now be completed in a matter of hours or days.

But aside from the ever-present business case to speed things up and keep costs down, there are also powerful research imperatives for automated analysis of qualitative data: namely, accuracy and consistency.

Finding insights hidden in feedback requires consistency, especially in coding. Not to mention catching all the ‘unknown unknowns’ that can skew research findings and steering clear of cognitive bias.

Some say without manual data analysis researchers won’t get an accurate “feel” for the insights. However, the larger data sets are, the harder it is to sort through the feedback and organize feedback that has been pulled from different places. And the more difficult it is to stay on course, the greater the risk of drawing incorrect or incomplete conclusions.

Though the process steps for qualitative data analysis have remained pretty much unchanged since psychologist Paul Felix Lazarsfeld paved the path a hundred years ago, the impact digital technology has had on types of qualitative feedback data and the approach to the analysis is profound.

If you want to try an automated feedback analysis solution on your own qualitative data, you can get started with Thematic.

Community & Marketing

Tyler manages our community of CX, insights & analytics professionals. Tyler's goal is to help unite insights professionals around common challenges.


What Is Qualitative Research? | Methods & Examples

Published on 4 April 2022 by Pritha Bhandari. Revised on 30 January 2023.

Qualitative research involves collecting and analysing non-numerical data (e.g., text, video, or audio) to understand concepts, opinions, or experiences. It can be used to gather in-depth insights into a problem or generate new ideas for research.

Qualitative research is the opposite of quantitative research, which involves collecting and analysing numerical data for statistical analysis.

Qualitative research is commonly used in the humanities and social sciences, in subjects such as anthropology, sociology, education, health sciences, and history.

- How does social media shape body image in teenagers?

- How do children and adults interpret healthy eating in the UK?

- What factors influence employee retention in a large organisation?

- How is anxiety experienced around the world?

- How can teachers integrate social issues into science curriculums?

Table of contents

- Approaches to qualitative research

- Qualitative research methods

- Qualitative data analysis

- Advantages of qualitative research

- Disadvantages of qualitative research

- Frequently asked questions about qualitative research

Approaches to qualitative research

Qualitative research is used to understand how people experience the world. While there are many approaches to qualitative research, they tend to be flexible and focus on retaining rich meaning when interpreting data.

Common approaches include grounded theory, ethnography, action research, phenomenological research, and narrative research. They share some similarities, but emphasise different aims and perspectives.


Qualitative research methods

Each of the research approaches involves using one or more data collection methods. These are some of the most common qualitative methods:

- Observations: recording what you have seen, heard, or encountered in detailed field notes.

- Interviews: personally asking people questions in one-on-one conversations.

- Focus groups: asking questions and generating discussion among a group of people.

- Surveys: distributing questionnaires with open-ended questions.

- Secondary research: collecting existing data in the form of texts, images, audio or video recordings, etc.

- You take field notes with observations and reflect on your own experiences of the company culture.

- You distribute open-ended surveys to employees across all the company’s offices by email to find out if the culture varies across locations.

- You conduct in-depth interviews with employees in your office to learn about their experiences and perspectives in greater detail.

Qualitative researchers often consider themselves ‘instruments’ in research because all observations, interpretations and analyses are filtered through their own personal lens.

For this reason, when writing up your methodology for qualitative research, it’s important to reflect on your approach and to thoroughly explain the choices you made in collecting and analysing the data.

Qualitative data analysis

Qualitative data can take the form of texts, photos, videos and audio. For example, you might be working with interview transcripts, survey responses, fieldnotes, or recordings from natural settings.

Most types of qualitative data analysis share the same five steps:

- Prepare and organise your data. This may mean transcribing interviews or typing up fieldnotes.

- Review and explore your data. Examine the data for patterns or repeated ideas that emerge.

- Develop a data coding system. Based on your initial ideas, establish a set of codes that you can apply to categorise your data.

- Assign codes to the data. For example, in qualitative survey analysis, this may mean going through each participant’s responses and tagging them with codes in a spreadsheet. As you go through your data, you can create new codes to add to your system if necessary.

- Identify recurring themes. Link codes together into cohesive, overarching themes.

There are several specific approaches to analysing qualitative data. Although these methods share similar processes, they emphasise different concepts.

Advantages of qualitative research

Qualitative research often tries to preserve the voice and perspective of participants and can be adjusted as new research questions arise. Qualitative research is good for:

- Flexibility

The data collection and analysis process can be adapted as new ideas or patterns emerge. They are not rigidly decided beforehand.

- Natural settings

Data collection occurs in real-world contexts or in naturalistic ways.

- Meaningful insights

Detailed descriptions of people’s experiences, feelings and perceptions can be used in designing, testing or improving systems or products.

- Generation of new ideas

Open-ended responses mean that researchers can uncover novel problems or opportunities that they wouldn’t have thought of otherwise.

Disadvantages of qualitative research

Researchers must consider practical and theoretical limitations in analysing and interpreting their data. Qualitative research suffers from:

- Unreliability

The real-world setting often makes qualitative research unreliable because of uncontrolled factors that affect the data.

- Subjectivity

Due to the researcher’s primary role in analysing and interpreting data, qualitative research cannot be replicated. The researcher decides what is important and what is irrelevant in data analysis, so interpretations of the same data can vary greatly.

- Limited generalisability

Small samples are often used to gather detailed data about specific contexts. Despite rigorous analysis procedures, it is difficult to draw generalisable conclusions because the data may be biased and unrepresentative of the wider population.

- Labour-intensive

Although software can be used to manage and record large amounts of text, data analysis often has to be checked or performed manually.

Frequently asked questions about qualitative research

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to test a hypothesis by systematically collecting and analysing data, while qualitative methods allow you to explore ideas and experiences in depth.

There are five common approaches to qualitative research:

- Grounded theory involves collecting data in order to develop new theories.

- Ethnography involves immersing yourself in a group or organisation to understand its culture.

- Narrative research involves interpreting stories to understand how people make sense of their experiences and perceptions.

- Phenomenological research involves investigating phenomena through people’s lived experiences.

- Action research links theory and practice in several cycles to drive innovative changes.

Data collection is the systematic process by which observations or measurements are gathered in research. It is used in many different contexts by academics, governments, businesses, and other organisations.

There are various approaches to qualitative data analysis, but they all share five steps in common:

- Prepare and organise your data.

- Review and explore your data.

- Develop a data coding system.

- Assign codes to the data.

- Identify recurring themes.

The specifics of each step depend on the focus of the analysis. Some common approaches include textual analysis, thematic analysis, and discourse analysis.

Cite this Scribbr article


Bhandari, P. (2023, January 30). What Is Qualitative Research? | Methods & Examples. Scribbr. Retrieved 22 April 2024, from https://www.scribbr.co.uk/research-methods/introduction-to-qualitative-research/



- Philosophy of Law

- Philosophy of Religion

- Philosophy of Mathematics and Logic

- Practical Ethics

- Social and Political Philosophy

- Browse content in Religion

- Biblical Studies

- Christianity

- East Asian Religions

- History of Religion

- Judaism and Jewish Studies

- Qumran Studies

- Religion and Education

- Religion and Health

- Religion and Politics

- Religion and Science

- Religion and Law

- Religion and Art, Literature, and Music

- Religious Studies

- Browse content in Society and Culture

- Cookery, Food, and Drink

- Cultural Studies

- Customs and Traditions

- Ethical Issues and Debates

- Hobbies, Games, Arts and Crafts

- Lifestyle, Home, and Garden

- Natural world, Country Life, and Pets

- Popular Beliefs and Controversial Knowledge

- Sports and Outdoor Recreation

- Technology and Society

- Travel and Holiday

- Visual Culture
