
What Is Selection Bias? | Definition & Examples

Published on September 30, 2022 by Kassiani Nikolopoulou. Revised on May 1, 2023.

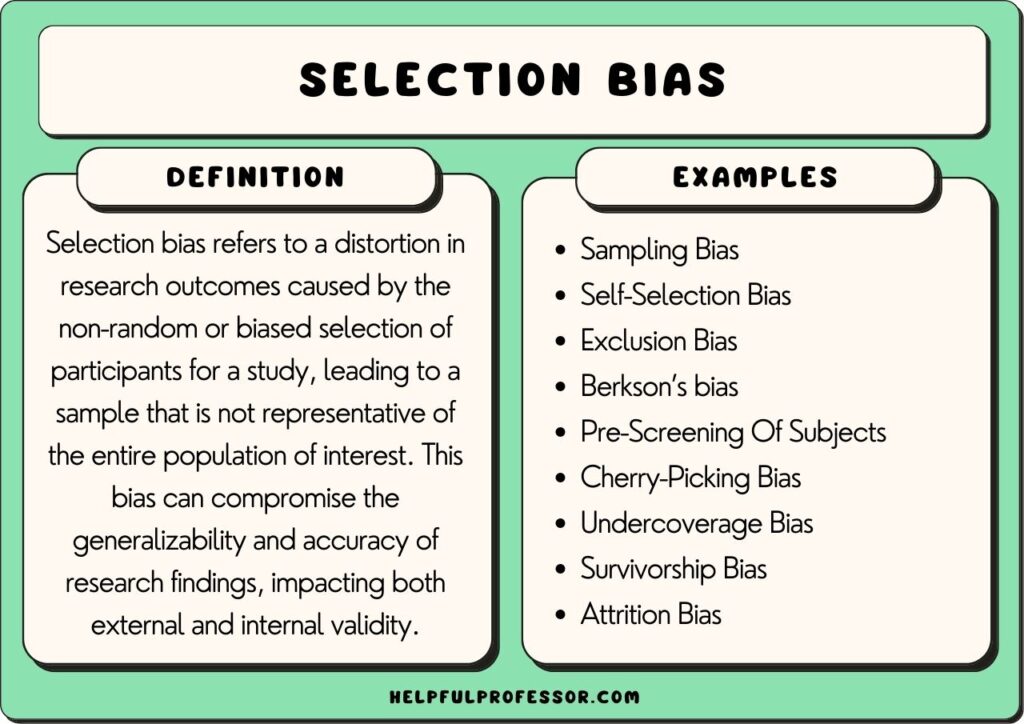

Selection bias refers to situations where research bias is introduced due to factors related to the study’s participants. Selection bias can be introduced via the methods used to select the population of interest, the sampling methods, or the recruitment of participants. It is also known as the selection effect.

Selection bias may threaten the validity of your research, as the study population is not representative of the target population.

Table of contents

- What is selection bias

- Types of selection bias

- Examples of selection bias

- How to avoid selection bias

- Other types of research bias

- Frequently asked questions

Selection bias occurs when the selection of subjects into a study (or their likelihood of remaining in the study) leads to a result that differs systematically from what would be found in the target population.

Selection bias often occurs in observational studies where the selection of participants isn’t random, such as cohort studies, case-control studies, and cross-sectional studies. It also occurs in interventional studies or clinical trials due to poor randomization.

Selection bias is a form of systematic error. Systematic differences between participants and non-participants or between treatment and control groups can limit your ability to compare the groups and arrive at unbiased conclusions.

There are several potential sources of selection bias that can affect the study, either during the recruitment of participants or in the process of ensuring they remain in the study. These can include:

- Flawed procedure used to select participants, such as poorly defined inclusion and exclusion criteria

- External reasons that could explain why some participants want to participate in the study while others don’t

- Whether some participants are more likely to be selected than others

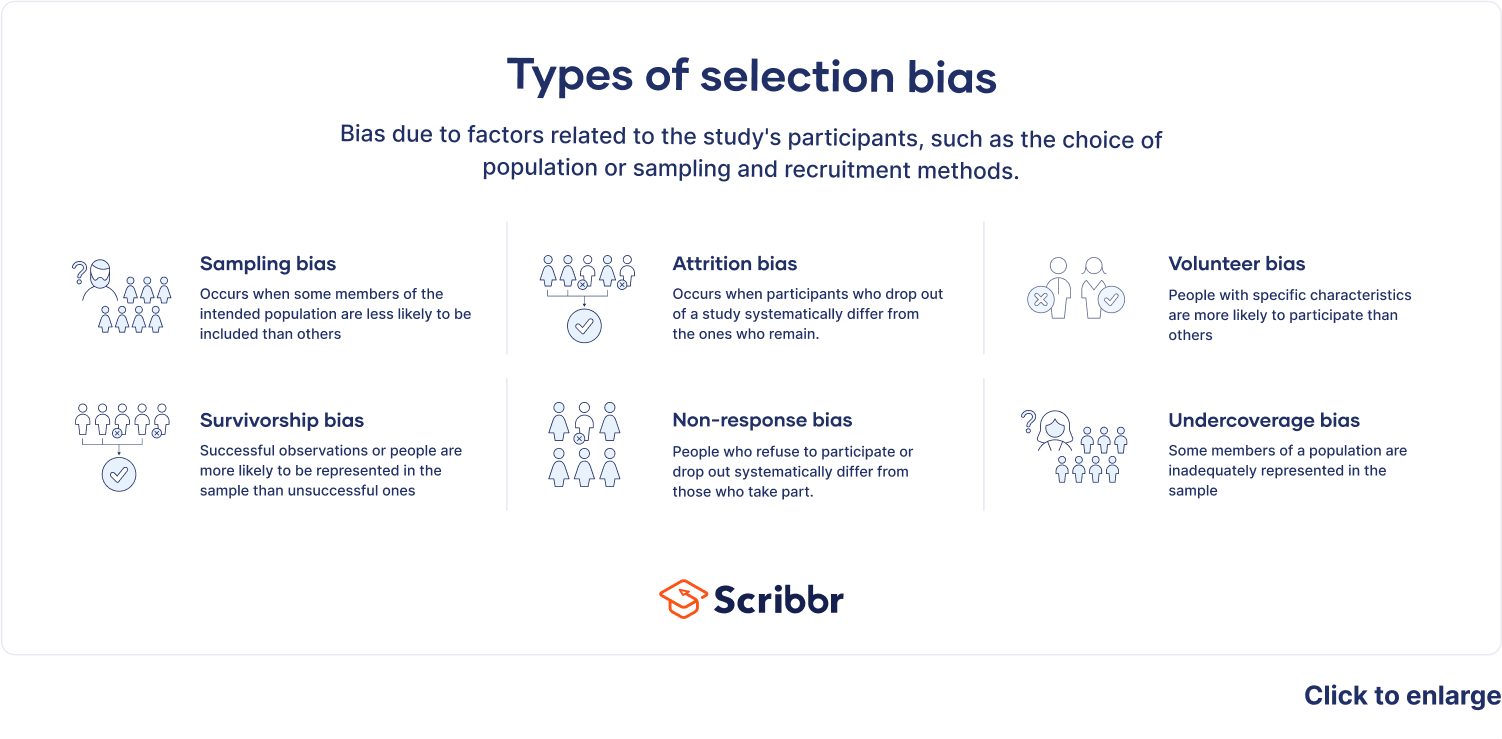

Selection bias is a general term describing errors arising from factors related to the population being studied, but there are several types of selection bias:

- Sampling bias or ascertainment bias occurs when some members of the intended population are less likely to be included than others. As a result, your sample is not representative of your population.

- Attrition bias occurs when participants who drop out of a study are systematically different from those who remain.

- Self-selection bias (or volunteer bias) arises when individuals decide entirely for themselves whether or not they want to participate in the study. Due to this, participants may differ from those who don’t—for example, in terms of motivation.

- Survivorship bias is a form of logical error that leads researchers who study a group to draw conclusions by only focusing on examples of successful individuals (the “survivors”) rather than the group as a whole.

- Nonresponse bias is observed when people who don’t respond to a survey are different in significant ways from those who do. Non-respondents may be unwilling or unable to participate, leading to their under-representation in the study.

- Undercoverage bias occurs when some members of your population are not represented in the sample. It is common in convenience sampling, where you recruit a sample that’s easy to obtain.
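To make the idea of a non-representative sample concrete, here is a minimal Python sketch. The population, the satisfaction scores, and the inclusion probabilities are all invented for illustration and do not come from any real study. It compares a simple random sample with a sample in which one subgroup is much less likely to be included.

```python
import random


def simulate_sampling_bias(seed=0, population_size=10_000):
    """Compare a simple random sample with a sample that under-covers a subgroup.

    Every number here is hypothetical and chosen only for illustration.
    """
    rng = random.Random(seed)

    # Hypothetical population: half "buyers" (scores near 8),
    # half "non-buyers" (scores near 4).
    population = []
    for i in range(population_size):
        is_buyer = i % 2 == 0
        score = rng.gauss(8.0 if is_buyer else 4.0, 1.0)
        population.append((is_buyer, score))

    true_mean = sum(score for _, score in population) / population_size

    # Simple random sample: every member has the same chance of selection.
    random_sample = rng.sample(population, 500)
    random_mean = sum(score for _, score in random_sample) / len(random_sample)

    # Biased selection: non-buyers are ten times less likely to be included.
    biased_sample = [
        (is_buyer, score)
        for is_buyer, score in population
        if rng.random() < (0.10 if is_buyer else 0.01)
    ]
    biased_mean = sum(score for _, score in biased_sample) / len(biased_sample)

    return true_mean, random_mean, biased_mean


true_mean, random_mean, biased_mean = simulate_sampling_bias()
print(f"population mean:    {true_mean:.2f}")
print(f"random sample mean: {random_mean:.2f}")
print(f"biased sample mean: {biased_mean:.2f}")
```

Under these assumptions, the random sample should land close to the population mean, while the biased sample drifts toward the over-represented subgroup.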

Selection bias is introduced when data collection or data analysis is biased toward a specific subgroup of the target population.

If you only focus on current customers, their feedback is more likely to be positive than if you also included those who stopped shopping prior to checkout. While current customers had a positive enough experience to ultimately buy something, those who stopped shopping will have different insights. For example, they may be disappointed in the lack of service or the overall web design.

Because of selection bias, study findings do not reflect the target population as a whole.

The cases consist of 200 women with cervical cancer who were referred to Mass General Hospital for treatment. The women were referred to from different parts of the state of Massachusetts. The cases were given a questionnaire asking about their income, education level, employment status, etc.

Control subjects were recruited by interviewers going door to door in the area around the hospital between 9:00 a.m. and 5:00 p.m.

Selection bias can be avoided as you recruit and retain your sample population.

- For non-probability sampling designs, such as observational studies, try to make the control group as comparable as possible to the treatment group. This method is called matching. Researchers match each treated unit with a non-treated unit of similar characteristics. This helps estimate the impact of a program or event for which it is not ethically or logistically feasible to randomize.

- In experimental research, selection bias can be minimized by proper use of random assignment, ensuring that neither researchers nor participants know which group each participant is assigned to. Otherwise, knowledge of group assignment can taint the data.

- Sampling bias can be avoided by carefully defining the target population and using probability sampling whenever possible. This ensures that every eligible participant has a known, nonzero chance of being included in the sample (an equal chance, in the case of simple random sampling).
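The matching idea from the list above can be sketched in a few lines of Python. This is a simplified illustration, not a recommended procedure: the function name `match_nearest`, the single covariate `age`, and the sample records are all hypothetical, and real studies usually match on several characteristics or on a summary score such as a propensity score.

```python
def match_nearest(treated, controls, key):
    """Greedily pair each treated unit with the closest unused control.

    Matching is done on a single numeric covariate (`key`) and without
    replacement, so each control is used at most once.
    """
    available = list(controls)
    pairs = []
    for unit in treated:
        if not available:
            break  # no controls left to match
        best = min(available, key=lambda c: abs(c[key] - unit[key]))
        available.remove(best)
        pairs.append((unit, best))
    return pairs


# Hypothetical records, invented for illustration.
treated = [{"id": "T1", "age": 34}, {"id": "T2", "age": 58}]
controls = [
    {"id": "C1", "age": 60},
    {"id": "C2", "age": 21},
    {"id": "C3", "age": 35},
]

pairs = match_nearest(treated, controls, key="age")
for t, c in pairs:
    print(f"{t['id']} (age {t['age']}) matched with {c['id']} (age {c['age']})")
```

Greedy matching depends on the order in which treated units are processed, which is one reason this should be read as a sketch rather than a complete method.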

Cognitive bias

- Confirmation bias

- Baader–Meinhof phenomenon

Selection bias

- Sampling bias

- Ascertainment bias

- Attrition bias

- Self-selection bias

- Survivorship bias

- Nonresponse bias

- Undercoverage bias

- Hawthorne effect

- Observer bias

- Omitted variable bias

- Publication bias

- Pygmalion effect

- Recall bias

- Social desirability bias

- Placebo effect

Common types of selection bias are:

- Sampling bias or ascertainment bias

- Volunteer or self-selection bias

Sampling bias occurs when some members of a population are systematically more likely to be selected in a sample than others.


Nikolopoulou, K. (2023, May 01). What Is Selection Bias? | Definition & Examples. Scribbr. Retrieved April 9, 2024, from https://www.scribbr.com/research-bias/selection-bias/


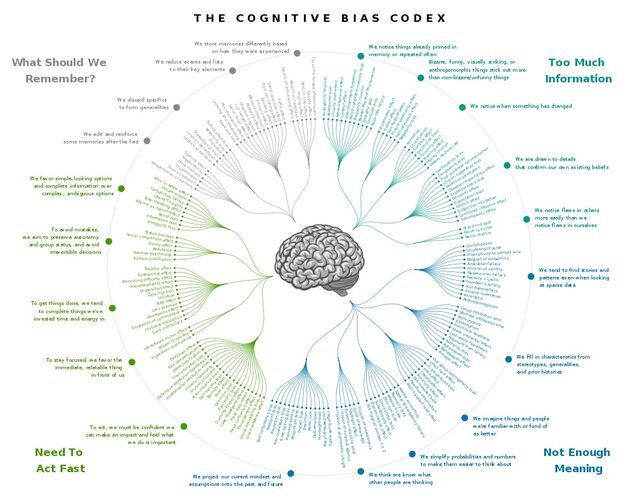

Cognitive Bias: How We Are Wired to Misjudge

Charlotte Ruhl

Research Assistant & Psychology Graduate

BA (Hons) Psychology, Harvard University

Charlotte Ruhl, a psychology graduate from Harvard College, boasts over six years of research experience in clinical and social psychology. During her tenure at Harvard, she contributed to the Decision Science Lab, administering numerous studies in behavioral economics and social psychology.


Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


Have you ever been so busy talking on the phone that you don’t notice the light has turned green and it is your turn to cross the street?

Have you ever shouted, “I knew that was going to happen!” after your favorite baseball team gave up a huge lead in the ninth inning and lost?

Or have you ever found yourself only reading news stories that further support your opinion?

These are just a few of the many instances of cognitive bias that we experience every day of our lives. But before we dive into these different biases, let’s backtrack first and define what bias is.

What is Cognitive Bias?

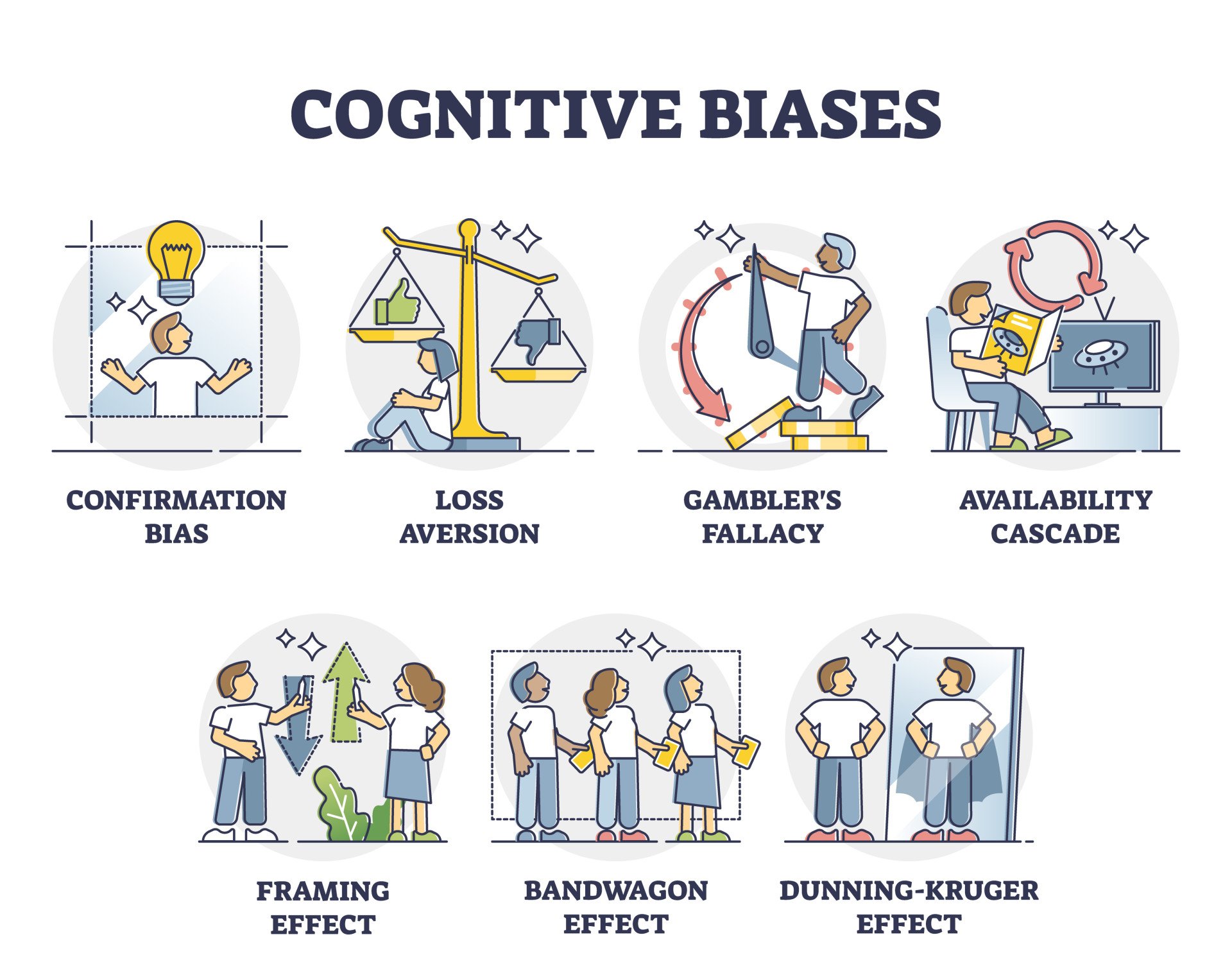

Cognitive bias is a systematic error in thinking, affecting how we process information, perceive others, and make decisions. It can lead to irrational thoughts or judgments and is often based on our perceptions, memories, or individual and societal beliefs.

Biases are unconscious and automatic processes designed to make decision-making quicker and more efficient. Cognitive biases can be caused by many things, such as heuristics (mental shortcuts), social pressures, and emotions.

Broadly speaking, bias is a tendency to lean in favor of or against a person, group, idea, or thing, usually in an unfair way. Biases are natural — they are a product of human nature — and they don’t simply exist in a vacuum or in our minds — they affect the way we make decisions and act.

In psychology, there are two main branches of biases: conscious and unconscious. Conscious or explicit bias is intentional — you are aware of your attitudes and the behaviors resulting from them (Lang, 2019).

Explicit bias can be good because it helps provide you with a sense of identity and can lead you to make good decisions (for example, being biased towards healthy foods).

However, these biases can often be dangerous when they take the form of conscious stereotyping.

On the other hand, unconscious bias, or cognitive bias, represents a set of unintentional biases: you are unaware of your attitudes and the behaviors resulting from them (Lang, 2019).

Cognitive bias is often a result of your brain’s attempt to simplify information processing: we receive roughly 11 million bits of information per second but can process only about 40 bits per second (Orzan et al., 2012).

Therefore, we often rely on mental shortcuts (called heuristics) to help make sense of the world with relative speed. As such, these errors tend to arise from problems related to thinking: memory, attention, and other mental mistakes.

Cognitive biases can be beneficial because they do not require much mental effort and can allow you to make decisions relatively quickly, but like conscious biases, unconscious biases can also take the form of harmful prejudice that serves to hurt an individual or a group.

Although it may feel like there has been a recent rise of unconscious bias, especially in the context of police brutality and the Black Lives Matter movement, this is not a new phenomenon.

Thanks to Tversky and Kahneman (and several other psychologists who have paved the way), we now have an existing dictionary of our cognitive biases.

Again, these biases occur as an attempt to simplify the complex world and make information processing faster and easier. This section will dive into some of the most common forms of cognitive bias.

Confirmation Bias

Confirmation bias is the tendency to interpret new information as confirmation of your preexisting beliefs and opinions while giving disproportionately less consideration to alternative possibilities.

Real-World Examples

Since Wason’s 1960 experiment, real-world examples of confirmation bias have gained attention.

This bias often seeps into the research world when psychologists selectively interpret data or ignore unfavorable data to produce results that support their initial hypothesis.

Confirmation bias is also incredibly pervasive on the internet, particularly with social media. We tend to read online news articles that support our beliefs and fail to seek out sources that challenge them.

Various social media platforms, such as Facebook, help reinforce our confirmation bias by feeding us stories that we are likely to agree with – further pushing us down these echo chambers of political polarization.

Some examples of confirmation bias are especially harmful, specifically in the context of the law. For example, a detective may identify a suspect early in an investigation, seek out confirming evidence, and downplay falsifying evidence.

Experiments

The confirmation bias dates back to 1960 when Peter Wason challenged participants to identify a rule applying to triples of numbers.

People were first told that the sequence 2, 4, 6 fits the rule. They then had to generate triples of their own and were told whether each one fit the rule. The rule was simple: any ascending sequence.

Participants not only had an unusually difficult time discovering this rule, devising overly complicated hypotheses instead, but they also generated only triples that confirmed their preexisting hypothesis (Wason, 1960).
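A short Python sketch can show why testing only confirming triples is uninformative in this task. The participant's guess below ("each number is 2 more than the last") is a hypothetical example of an overly narrow hypothesis, and "ascending" is assumed to mean strictly ascending; neither detail is taken from the original study.

```python
def true_rule(triple):
    """The experimenter's rule: any (strictly) ascending sequence."""
    a, b, c = triple
    return a < b < c


def hypothesis(triple):
    """A hypothetical participant's narrower guess: steps of exactly 2."""
    a, b, c = triple
    return b - a == 2 and c - b == 2


def informative(tests):
    """Return the tests on which the guess and the true rule disagree."""
    return [t for t in tests if hypothesis(t) != true_rule(t)]


# Triples chosen because they fit the participant's own guess.
confirming_tests = [(2, 4, 6), (10, 12, 14), (1, 3, 5)]
# Triples chosen because they violate the participant's guess.
disconfirming_tests = [(1, 2, 3), (5, 10, 100), (6, 4, 2)]

print(informative(confirming_tests))     # []
print(informative(disconfirming_tests))  # [(1, 2, 3), (5, 10, 100)]
```

Every confirming triple receives a "yes" from both rules, so it can never reveal that the guess is too narrow. Only triples that break the guess can expose the difference.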

Explanations

But why does confirmation bias occur? It’s partially due to the effect of desire on our beliefs. In other words, certain desired conclusions (ones that support our beliefs) are more likely to be processed by the brain and labeled as true (Nickerson, 1998).

This motivational explanation is often coupled with a more cognitive theory.

The cognitive explanation argues that because our minds can only focus on one thing at a time, it is hard to parallel process (see information processing for more information) alternate hypotheses, so, as a result, we only process the information that aligns with our beliefs (Nickerson, 1998).

Another theory explains confirmation bias as a way of enhancing and protecting our self-esteem.

As with the self-serving bias (see more below), our minds choose to reinforce our preexisting ideas because being right helps preserve our sense of self-esteem, which is important for feeling secure in the world and maintaining positive relationships (Casad, 2019).

Although confirmation bias has obvious consequences, you can still work towards overcoming it by being open-minded and willing to look at situations from a different perspective than you might be used to (Luippold et al., 2015).

Even though this bias is unconscious, training your mind to become more flexible in its thought patterns will help mitigate the effects of this bias.

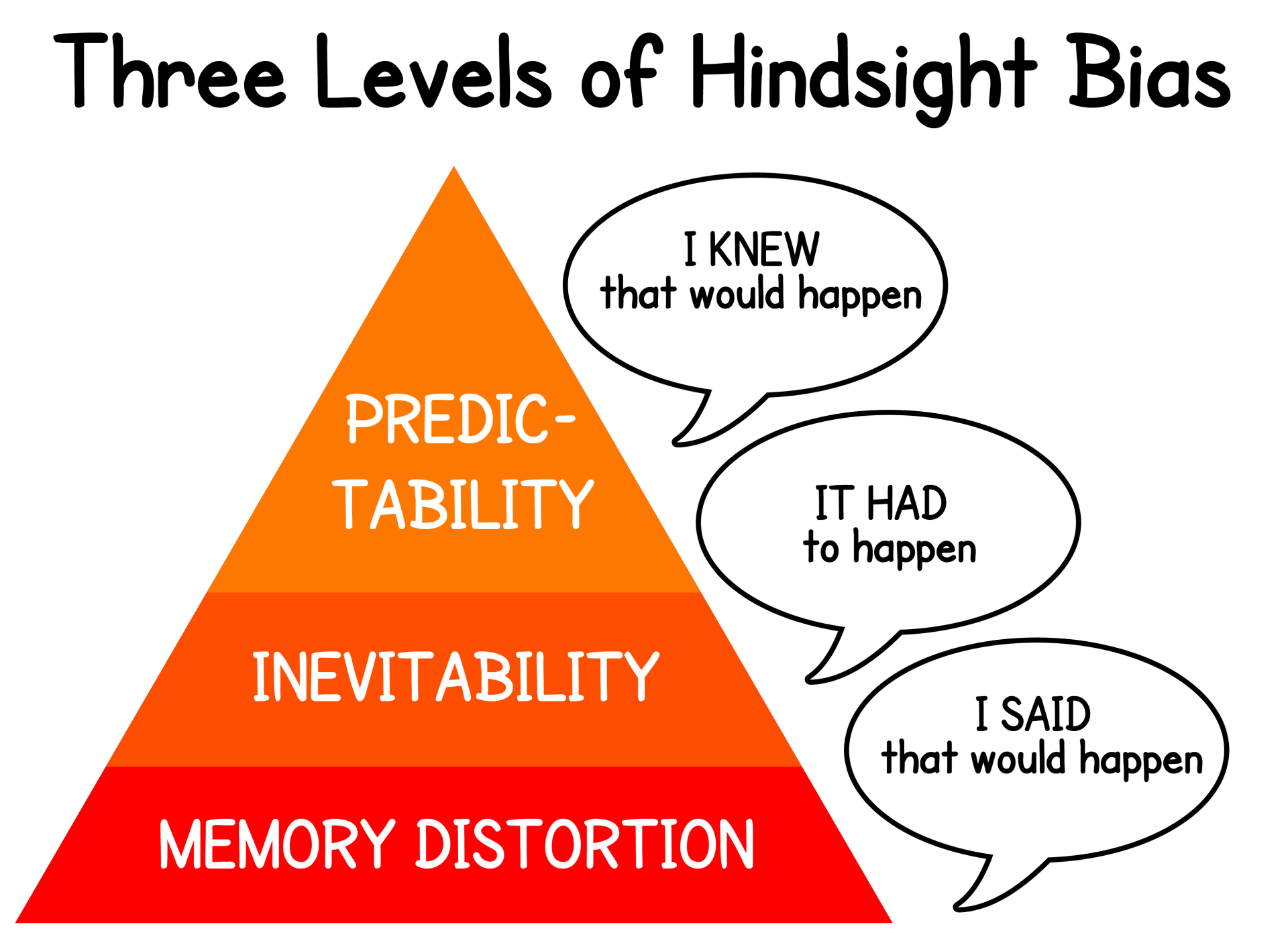

Hindsight Bias

Hindsight bias refers to the tendency to perceive past events as more predictable than they actually were (Roese & Vohs, 2012). There are cognitive and motivational explanations for why we ascribe so much certainty to knowing the outcome of an event only once the event is completed.

When sports fans know the outcome of a game, they often question certain decisions coaches make that they otherwise would not have questioned or second-guessed.

And fans are also quick to remark that they knew their team was going to win or lose, but, of course, they only make this statement after their team actually did win or lose.

Research has shown that merely recognizing hindsight bias does not necessarily mitigate it (Pohl & Hell, 1996). Still, you can make a conscious effort to remind yourself that you can’t predict the future and motivate yourself to consider alternate explanations.

It’s important to do all we can to reduce this bias because when we are overly confident about our ability to predict outcomes, we might make future risky decisions that could have potentially dangerous outcomes.

Building on Tversky and Kahneman’s growing list of heuristics, researchers Baruch Fischhoff and Ruth Beyth-Marom (1975) were the first to directly investigate the hindsight bias in the empirical setting.

The team asked participants to judge the likelihood of several different outcomes of former U.S. president Richard Nixon’s visit to Beijing and Moscow.

After Nixon returned to the States, participants were asked to recall the likelihood of each outcome they had initially assigned.

Fischhoff and Beyth found that for events that actually occurred, participants greatly overestimated the initial likelihood they assigned to those events.

That same year, Fischhoff (1975) introduced a new method for testing the hindsight bias – one that researchers still use today.

Participants are given a short story with four possible outcomes, and they are told that one is true. When they are then asked to assign the likelihood of each specific outcome, they regularly assign a higher likelihood to whichever outcome they have been told is true, regardless of how likely it actually is.

But hindsight bias does not only exist in artificial settings. In 1993, Dorothee Dietrich and Matthew Olsen asked college students to predict how the U.S. Senate would vote on the confirmation of Supreme Court nominee Clarence Thomas.

Before the vote, 58% of participants predicted that he would be confirmed, but after his actual confirmation, 78% of students said that they thought he would be approved – a prime example of the hindsight bias. And this form of bias extends beyond the research world.

From the cognitive perspective, hindsight bias may result from distortions of memories of what we knew or believed to know before an event occurred (Inman, 2016).

It is easier to recall information that is consistent with our current knowledge, so our memories become warped in a way that agrees with what actually did happen.

Motivational explanations of the hindsight bias point to the fact that we are motivated to live in a predictable world (Inman, 2016).

When surprising outcomes arise, our expectations are violated, and we may experience negative reactions as a result. Thus, we rely on the hindsight bias to avoid these adverse responses to certain unanticipated events and reassure ourselves that we actually did know what was going to happen.

Self-Serving Bias

Self-serving bias is the tendency to take personal responsibility for positive outcomes and blame external factors for negative outcomes.

You would be right to ask how this is similar to the fundamental attribution error (Ross, 1977), which identifies our tendency to overemphasize internal factors for other people’s behavior while attributing external factors to our own.

The distinction is that the self-serving bias is concerned with valence, that is, how good or bad an event or situation is. It is also concerned only with events for which you are the actor.

In other words, if a driver cuts in front of you as the light turns green, the fundamental attribution error might cause you to think that they are a bad person and not consider the possibility that they were late for work.

On the other hand, the self-serving bias is exercised when you are the actor. In this example, you would be the driver cutting in front of the other car, which you would tell yourself is because you are late (an external attribution to a negative event) as opposed to it being because you are a bad person.

From sports to the workplace, self-serving bias is incredibly common. For example, athletes are quick to take responsibility for personal wins, attributing their successes to their hard work and mental toughness, but point to external factors, such as unfair calls or bad weather, when they lose (Allen et al., 2020).

In the workplace, people attribute internal factors when they are hired for a job but external factors when they are fired (Furnham, 1982). And in the office itself, workplace conflicts are given external attributions, and successes, whether a persuasive presentation or a promotion, are awarded internal explanations (Walther & Bazarova, 2007).

Additionally, self-serving bias is more prevalent in individualistic cultures, which place emphasis on self-esteem levels and individual goals, and it is less prevalent among individuals with depression (Mezulis et al., 2004), who are more likely to take responsibility for negative outcomes.

Overcoming this bias can be difficult because it is at the expense of our self-esteem. Nevertheless, practicing self-compassion – treating yourself with kindness even when you fall short or fail – can help reduce the self-serving bias (Neff, 2003).

The leading explanation for the self-serving bias is that it is a way of protecting our self-esteem (similar to one of the explanations for the confirmation bias).

We are quick to take credit for positive outcomes and divert the blame for negative ones to boost and preserve our individual ego, which is necessary for confidence and healthy relationships with others (Heider, 1982).

Another theory argues that self-serving bias occurs when surprising events arise. When certain outcomes run counter to our expectations, we ascribe external factors, but when outcomes are in line with our expectations, we attribute internal factors (Miller & Ross, 1975).

An extension of this theory asserts that we are naturally optimistic, so negative outcomes come as a surprise and receive external attributions as a result.

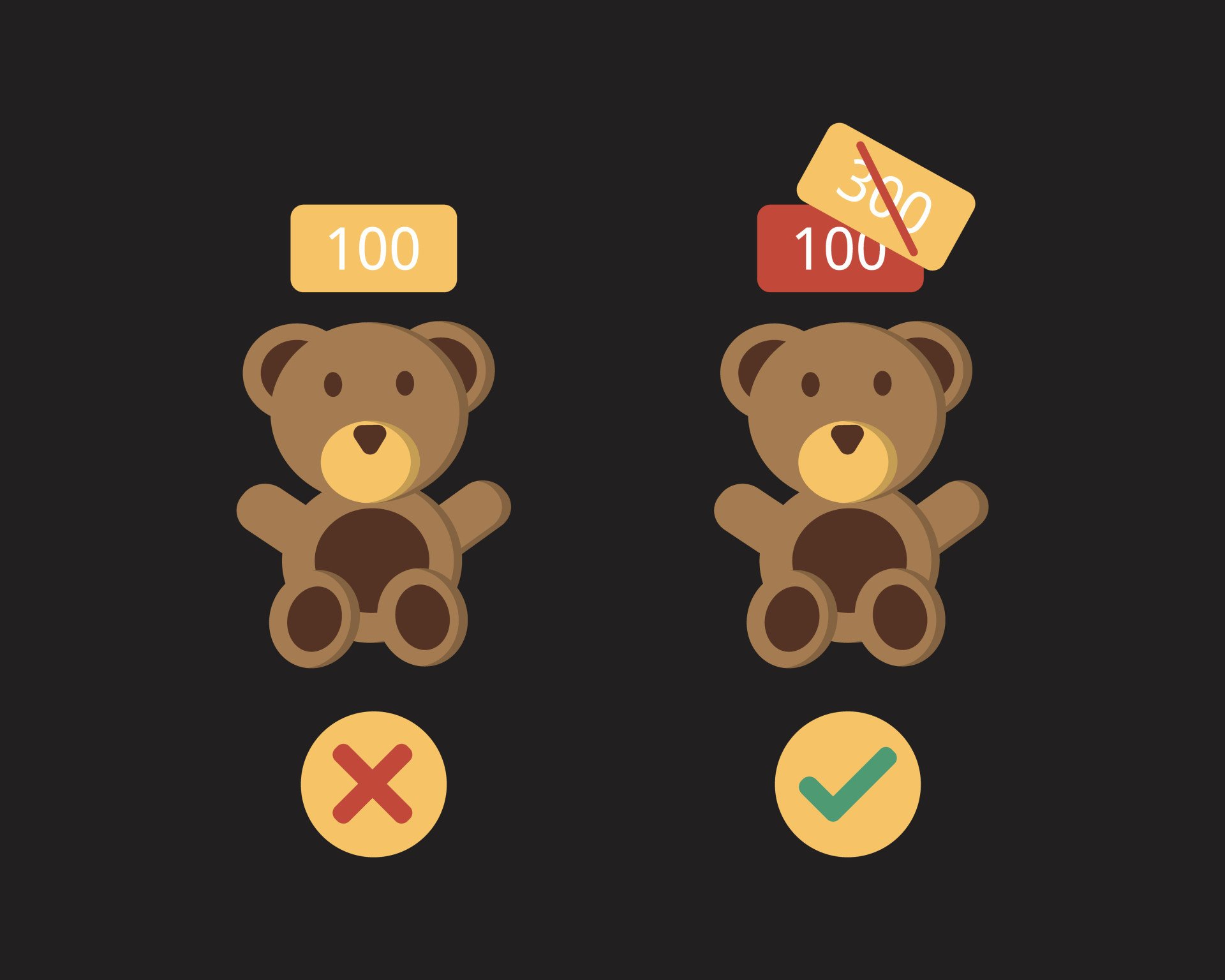

Anchoring Bias

Anchoring bias is closely related to the decision-making process. It occurs when we rely too heavily on either pre-existing information or the first piece of information (the anchor) when making a decision.

For example, if you first see a T-shirt that costs $1,000 and then see a second one that costs $100, you’re more likely to see the second shirt as cheap than you would if the first shirt you saw was $120. Here, the price of the first shirt influences how you view the second.

Sarah is looking to buy a used car. The first dealership she visits has a used sedan listed for $19,000. Sarah takes this initial listing price as an anchor and uses it to evaluate prices at other dealerships.

When she sees another similar used sedan priced at $18,000, that price seems like a good bargain compared to the $19,000 anchor price she saw first, even though the actual market value is closer to $16,000.

When Sarah finds a comparable used sedan priced at $15,500, she continues perceiving that price as cheap compared to her anchored reference price.

Ultimately, Sarah purchases the $18,000 sedan, overlooking that all of the prices seemed like bargains only in relation to the initial high anchor price.

The key elements that demonstrate anchoring bias here are:

- Sarah establishes an initial reference price based on the first listing she sees ($19k)

- She uses that initial price as her comparison/anchor for evaluating subsequent prices

- This biases her perception of the market value of the cars she looks at after the initial anchor is set

- She makes a purchase decision aligned with her anchored expectations rather than a more objective market value

Multiple theories seek to explain the existence of this bias.

One theory, known as anchoring and adjustment, argues that once an anchor is established, people insufficiently adjust away from it to arrive at their final answer, and so their final guess or decision is closer to the anchor than it otherwise would have been (Tversky & Kahneman, 1992).

And when people experience a greater cognitive load (the amount of information the working memory can hold at any given time; for example, a difficult decision as opposed to an easy one), they are more susceptible to the effects of anchoring.

Another theory, selective accessibility, holds that although we assume that the anchor is not a suitable answer (or a suitable price going back to the initial example) when we evaluate the second stimulus (or second shirt), we look for ways in which it is similar or different to the anchor (the price being way different), resulting in the anchoring effect (Mussweiler & Strack, 1999).

A final theory posits that providing an anchor changes someone’s attitudes to be more favorable to the anchor, which then biases future answers to have similar characteristics as the initial anchor.

Although there are many different theories for why we experience anchoring bias, they all agree that it affects our decisions in real ways (Wegner et al., 2001).

This bias was first brought to light in one of Tversky and Kahneman’s (1974) initial experiments. They asked participants to compute the product of the numbers 1 through 8 in five seconds, presented either as 1x2x3… or as 8x7x6…

Participants did not have enough time to calculate the answer, so they had to estimate based on their first few calculations.

They found that those who computed the small multiplications first (i.e., 1x2x3…) gave a median estimate of 512, but those who computed the larger multiplications first gave a median estimate of 2,250 (although the actual answer is 40,320).

This demonstrates how the initial few calculations influenced the participants’ final answers.
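The arithmetic behind this task is easy to reproduce. The Python sketch below computes the running products for both orderings, which shows how different the first few intermediate results are even though the final product is identical. The median estimates are the figures quoted above; the variable names are just for illustration.

```python
from itertools import accumulate
from operator import mul

ascending = list(range(1, 9))   # 1, 2, ..., 8
descending = ascending[::-1]    # 8, 7, ..., 1

# Running products after each multiplication step.
asc_partial = list(accumulate(ascending, mul))
desc_partial = list(accumulate(descending, mul))

print("ascending: ", asc_partial)
print("descending:", desc_partial)

true_product = asc_partial[-1]

# Median estimates reported in the text above.
median_estimates = {"ascending": 512, "descending": 2250}
for order, estimate in median_estimates.items():
    share = estimate / true_product
    print(f"{order}: median estimate is {share:.1%} of the true product")
```

After three steps the ascending sequence has reached only 6, while the descending sequence has already reached 336, which is consistent with the higher estimates in the descending condition. Both estimates still fall far short of 40,320.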

Availability Bias

Availability bias (also commonly referred to as the availability heuristic) refers to the tendency to think that examples of things that readily come to mind are more common than what is actually the case.

In other words, information that comes to mind faster influences the decisions we make about the future. And just like with the hindsight bias, this bias is related to an error of memory.

But instead of being a memory fabrication, it is an overemphasis on a certain memory.

In the workplace, if someone is being considered for a promotion but their boss recalls one bad thing that happened years ago but left a lasting impression, that one event might have an outsized influence on the final decision.

Another common example is buying lottery tickets because the lifestyle and benefits of winning are more readily available in mind (and the potential emotions associated with winning or seeing other people win) than the complex probability calculation of actually winning the lottery (Cherry, 2019).

A final common example that is used to demonstrate the availability heuristic describes how seeing several television shows or news reports about shark attacks (or anything that is sensationalized by the news, such as serial killers or plane crashes) might make you think that this incident is relatively common even though it is not at all.

Regardless, this thinking might make you less inclined to go in the water the next time you go to the beach (Cherry, 2019).

As with most cognitive biases, the best way to overcome them is by recognizing the bias and being more cognizant of your thoughts and decisions.

And because we fall victim to this bias when our brain relies on quick mental shortcuts in order to save time, slowing down our thinking and decision-making process is a crucial step to mitigating the effects of the availability heuristic.

Researchers think this bias occurs because the brain is constantly trying to minimize the effort necessary to make decisions, and so we rely on certain memories – ones that we can recall more easily – instead of having to endure the complicated task of calculating statistical probabilities.

Two main types of memories are easier to recall: 1) those that more closely align with the way we see the world and 2) those that evoke more emotion and leave a more lasting impression.

This first type of memory was identified in 1973, when Tversky and Kahneman, our cognitive bias pioneers, conducted a study in which they asked participants if more words begin with the letter K or if more words have K as their third letter.

Although many more words have K as their third letter, 70% of participants said that more words begin with K. Words that begin with K are not only easier to recall, but recalling words by their first letter also aligns more closely with the way people see the world (we think of a word’s first letter far more often than its third).

In terms of the second type of memory, the same duo ran an experiment in 1983, 10 years later, where half the participants were asked to guess the likelihood that a massive flood would occur somewhere in North America, and the other half had to guess the likelihood of a flood occurring due to an earthquake in California.

Although the latter is much less likely, participants still said that this would be much more common because they could recall specific, emotionally charged events of earthquakes hitting California, largely due to the news coverage they receive.
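The reason the more specific scenario cannot be the more likely one is that every flood caused by a California earthquake is also a flood somewhere in North America. The Python sketch below illustrates this with a small simulation. The yearly probabilities are made up purely for illustration and say nothing about real flood or earthquake risk.

```python
import random


def simulate_flood_years(n_years=100_000, seed=1):
    """Estimate how often each scenario occurs in simulated years.

    The probabilities are hypothetical and chosen only for illustration.
    """
    rng = random.Random(seed)
    any_flood = 0
    quake_flood = 0
    for _ in range(n_years):
        # Specific scenario: a California earthquake causes a flood.
        flood_from_quake = rng.random() < 0.002
        # Any other massive flood somewhere in North America.
        flood_other_cause = rng.random() < 0.010

        if flood_from_quake:
            quake_flood += 1
        # The general scenario counts floods from any cause,
        # including the earthquake-caused ones.
        if flood_from_quake or flood_other_cause:
            any_flood += 1
    return any_flood / n_years, quake_flood / n_years


p_any_flood, p_quake_flood = simulate_flood_years()
print(f"any massive flood:         {p_any_flood:.4f}")
print(f"earthquake-caused flood:   {p_quake_flood:.4f}")
```

Whatever probabilities are plugged in, the earthquake-caused flood can never come out more frequent than floods overall, because it is counted inside the broader category.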

Together, these studies highlight how memories that are easier to recall greatly influence our judgments and perceptions about future events.

Inattentional Blindness

A final popular form of cognitive bias is inattentional blindness. This occurs when a person fails to notice a stimulus that is in plain sight because their attention is directed elsewhere.

For example, while driving a car, you might be so focused on the road ahead of you that you completely fail to notice a car swerve into your lane of traffic.

Because your attention is directed elsewhere, you aren’t able to react in time, potentially leading to a car accident. Experiencing inattentional blindness has its obvious consequences (as illustrated by this example), but, like all biases, it is not impossible to overcome.

Many theories seek to explain why we experience this form of cognitive bias. In reality, it is probably some combination of these explanations.

Conspicuity holds that certain sensory stimuli (such as bright colors) and cognitive stimuli (such as something familiar) are more likely to be processed, and so stimuli that don’t fit into one of these two categories might be missed.

The mental workload theory describes how when we focus a lot of our brain’s mental energy on one stimulus, we are using up our cognitive resources and won’t be able to process another stimulus simultaneously.

Similarly, some psychologists explain how we attend to different stimuli with varying levels of attentional capacity, which might affect our ability to process multiple stimuli simultaneously.

In other words, an experienced driver might be able to see that car swerve into the lane because they are using fewer mental resources to drive, whereas a beginner driver might be using more resources to focus on the road ahead and unable to process that car swerving in.

A final explanation argues that because our attentional and processing resources are limited, our brain dedicates them to what fits into our schemas or our cognitive representations of the world (Cherry, 2020).

Thus, when an unexpected stimulus comes into our line of sight, we might not be able to process it on the conscious level. The following example illustrates how this might happen.

The most famous study to demonstrate the inattentional blindness phenomenon is the invisible gorilla study (Most et al., 2001). This experiment asked participants to watch a video of two groups passing a basketball and count how many times the white team passed the ball.

Participants are able to accurately report the number of passes, but what they fail to notice is a gorilla walking directly through the middle of the circle.

Because this would not be expected, and because our brain is using up its resources to count the number of passes, we completely fail to process something right before our eyes.

A real-world example of inattentional blindness occurred in 1995 when Boston police officer Kenny Conley was chasing a suspect and ran by a group of officers who were mistakenly holding down an undercover cop.

Conley was convicted of perjury and obstruction of justice because he supposedly saw the fight between the undercover cop and the other officers and lied about it to protect the officers, but he stood by his word that he really hadn’t seen it (due to inattentional blindness) and was ultimately exonerated (Pickel, 2015).

The key to overcoming inattentional blindness is to maximize your attention by avoiding distractions such as checking your phone. And it is also important to pay attention to what other people might not notice (if you are that driver, don’t always assume that others can see you).

By working on expanding your attention and minimizing unnecessary distractions that will use up your mental resources, you can work towards overcoming this bias.

Preventing Cognitive Bias

As we know, recognizing these biases is the first step to overcoming them. But there are other small strategies we can follow in order to train our unconscious mind to think in different ways.

From strengthening our memory and minimizing distractions to slowing down our decision-making and improving our reasoning skills, we can work towards overcoming these cognitive biases.

An individual can evaluate his or her own thought process, also known as metacognition (“thinking about thinking”), which provides an opportunity to combat bias (Flavell, 1979).

This multifactorial process involves (Croskerry, 2003):

(a) acknowledging the limitations of memory, (b) seeking perspective while making decisions, (c) being able to self-critique, (d) choosing strategies to prevent cognitive error.

Many strategies used to avoid bias that we describe are also known as cognitive forcing strategies, which are mental tools used to force unbiased decision-making.

The History of Cognitive Bias

The term cognitive bias was first coined in the 1970s by Israeli psychologists Amos Tversky and Daniel Kahneman, who used this phrase to describe people’s flawed thinking patterns in response to judgment and decision problems (Tversky & Kahneman, 1974).

Tversky and Kahneman’s research program, the heuristics and biases program, investigated how people make decisions given limited resources (for example, limited time to decide which food to eat or limited information to decide which house to buy).

As a result of these limited resources, people are forced to rely on heuristics or quick mental shortcuts to help make their decisions.

Tversky and Kahneman wanted to understand the biases associated with this judgment and decision-making process.

To do so, the two researchers relied on a research paradigm that presented participants with some type of reasoning problem with a computed normative answer (they used probability theory and statistics to compute the expected answer).

Participants’ responses were then compared with the predetermined solution to reveal the systematic deviations in the mind.

After running several experiments with countless reasoning problems, the researchers were able to identify numerous norm violations that result when our minds rely on these cognitive biases to make decisions and judgments (Wilke & Mata, 2012).

Key Takeaways

- Cognitive biases are unconscious errors in thinking that arise from problems related to memory, attention, and other mental mistakes.

- These biases result from our brain’s efforts to simplify the incredibly complex world in which we live.

- Confirmation bias, hindsight bias, mere exposure effect, self-serving bias, base rate fallacy, anchoring bias, availability bias, the framing effect, inattentional blindness, and the ecological fallacy are some of the most common examples of cognitive bias. Another example is the false consensus effect.

- Cognitive biases directly affect our safety, interactions with others, and how we make judgments and decisions in our daily lives.

- Although these biases are unconscious, there are small steps we can take to train our minds to adopt a new pattern of thinking and mitigate the effects of these biases.

Allen, M. S., Robson, D. A., Martin, L. J., & Laborde, S. (2020). Systematic review and meta-analysis of self-serving attribution biases in the competitive context of organized sport. Personality and Social Psychology Bulletin, 46 (7), 1027-1043.

Casad, B. (2019). Confirmation bias. Retrieved from https://www.britannica.com/science/confirmation-bias

Cherry, K. (2019). How the availability heuristic affects your decision-making. Retrieved from https://www.verywellmind.com/availability-heuristic-2794824

Cherry, K. (2020). Inattentional blindness can cause you to miss things in front of you. Retrieved from https://www.verywellmind.com/what-is-inattentional-blindness-2795020

Dietrich, D., & Olson, M. (1993). A demonstration of hindsight bias using the Thomas confirmation vote. Psychological Reports, 72 (2), 377-378.

Fischhoff, B. (1975). Hindsight is not equal to foresight: The effect of outcome knowledge on judgment under uncertainty. Journal of Experimental Psychology: Human Perception and Performance, 1 (3), 288.

Fischhoff, B., & Beyth, R. (1975). I knew it would happen: Remembered probabilities of once—future things. Organizational Behavior and Human Performance, 13 (1), 1-16.

Furnham, A. (1982). Explanations for unemployment in Britain. European Journal of Social Psychology, 12 (4), 335-352.

Heider, F. (1982). The psychology of interpersonal relations. Psychology Press.

Inman, M. (2016). Hindsight bias. Retrieved from https://www.britannica.com/topic/hindsight-bias

Lang, R. (2019). What is the difference between conscious and unconscious bias?: Faqs. Retrieved from https://engageinlearning.com/faq/compliance/unconscious-bias/what-is-the-difference-between-conscious-and-unconscious-bias/

Luippold, B., Perreault, S., & Wainberg, J. (2015). Auditor’s pitfall: Five ways to overcome confirmation bias. Retrieved from https://www.babson.edu/academics/executive-education/babson-insight/finance-and-accounting/auditors-pitfall-five-ways-to-overcome-confirmation-bias/

Mezulis, A. H., Abramson, L. Y., Hyde, J. S., & Hankin, B. L. (2004). Is there a universal positivity bias in attributions? A meta-analytic review of individual, developmental, and cultural differences in the self-serving attributional bias. Psychological Bulletin, 130 (5), 711.

Miller, D. T., & Ross, M. (1975). Self-serving biases in the attribution of causality: Fact or fiction?. Psychological Bulletin, 82 (2), 213.

Most, S. B., Simons, D. J., Scholl, B. J., Jimenez, R., Clifford, E., & Chabris, C. F. (2001). How not to be seen: The contribution of similarity and selective ignoring to sustained inattentional blindness. Psychological Science, 12 (1), 9-17.

Mussweiler, T., & Strack, F. (1999). Hypothesis-consistent testing and semantic priming in the anchoring paradigm: A selective accessibility model. Journal of Experimental Social Psychology, 35 (2), 136-164.

Neff, K. (2003). Self-compassion: An alternative conceptualization of a healthy attitude toward oneself. Self and Identity, 2 (2), 85-101.

Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2 (2), 175-220.

Orzan, G., Zara, I. A., & Purcarea, V. L. (2012). Neuromarketing techniques in pharmaceutical drugs advertising. A discussion and agenda for future research. Journal of Medicine and Life, 5 (4), 428.

Pickel, K. L. (2015). Eyewitness memory. The handbook of attention, 485-502.

Pohl, R. F., & Hell, W. (1996). No reduction in hindsight bias after complete information and repeated testing. Organizational Behavior and Human Decision Processes, 67 (1), 49-58.

Roese, N. J., & Vohs, K. D. (2012). Hindsight bias. Perspectives on Psychological Science, 7 (5), 411-426.

Ross, L. (1977). The intuitive psychologist and his shortcomings: Distortions in the attribution process. In Advances in experimental social psychology (Vol. 10, pp. 173-220). Academic Press.

Tversky, A., & Kahneman, D. (1973). Availability: A heuristic for judging frequency and probability. Cognitive Psychology, 5 (2), 207-232.

Tversky, A., & Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science, 185 (4157), 1124-1131.

Tversky, A., & Kahneman, D. (1983). Extensional versus intuitive reasoning: The conjunction fallacy in probability judgment. Psychological Review, 90 (4), 293.

Tversky, A., & Kahneman, D. (1992). Advances in prospect theory: Cumulative representation of uncertainty. Journal of Risk and Uncertainty, 5 (4), 297-323.

Walther, J. B., & Bazarova, N. N. (2007). Misattribution in virtual groups: The effects of member distribution on self-serving bias and partner blame. Human Communication Research, 33 (1), 1-26.

Wason, P. C. (1960). On the failure to eliminate hypotheses in a conceptual task. Quarterly Journal of Experimental Psychology, 12 (3), 129-140.

Wegener, D. T., Petty, R. E., Detweiler-Bedell, B. T., & Jarvis, W. B. G. (2001). Implications of attitude change theories for numerical anchoring: Anchor plausibility and the limits of anchor effectiveness. Journal of Experimental Social Psychology, 37 (1), 62-69.

Wilke, A., & Mata, R. (2012). Cognitive bias. In Encyclopedia of human behavior (pp. 531-535). Academic Press.

Further Information

Test yourself for bias.

- Project Implicit (IAT Test) From Harvard University

- Implicit Association Test From the Social Psychology Network

- Test Yourself for Hidden Bias From Teaching Tolerance

- How The Concept Of Implicit Bias Came Into Being With Dr. Mahzarin Banaji, Harvard University. Author of Blindspot: Hidden Biases of Good People. 5:28 minutes; includes transcript

- Understanding Your Racial Biases With John Dovidio, PhD, Yale University. From the American Psychological Association. 11:09 minutes; includes transcript

- Talking Implicit Bias in Policing With Jack Glaser, Goldman School of Public Policy, University of California Berkeley. 21:59 minutes

- Implicit Bias: A Factor in Health Communication With Dr. Winston Wong, Kaiser Permanente. 19:58 minutes

- Bias, Black Lives and Academic Medicine Dr. David Ansell on Your Health Radio (August 1, 2015). 21:42 minutes

- Uncovering Hidden Biases Google talk with Dr. Mahzarin Banaji, Harvard University

- Impact of Implicit Bias on the Justice System 9:14 minutes

- Students Speak Up: What Bias Means to Them 2:17 minutes

- Weight Bias in Health Care From Yale University. 16:56 minutes

- Gender and Racial Bias In Facial Recognition Technology 4:43 minutes

Journal Articles

- An implicit bias primer Mitchell, G. (2018). An implicit bias primer. Virginia Journal of Social Policy & the Law, 25, 27–59.

- Implicit Association Test at age 7: A methodological and conceptual review Nosek, B. A., Greenwald, A. G., & Banaji, M. R. (2007). The Implicit Association Test at age 7: A methodological and conceptual review. Automatic processes in social thinking and behavior, 4, 265-292.

- Implicit Racial/Ethnic Bias Among Health Care Professionals and Its Influence on Health Care Outcomes: A Systematic Review Hall, W. J., Chapman, M. V., Lee, K. M., Merino, Y. M., Thomas, T. W., Payne, B. K., … & Coyne-Beasley, T. (2015). Implicit racial/ethnic bias among health care professionals and its influence on health care outcomes: a systematic review. American journal of public health, 105 (12), e60-e76.

- Reducing Racial Bias Among Health Care Providers: Lessons from Social-Cognitive Psychology Burgess, D., Van Ryn, M., Dovidio, J., & Saha, S. (2007). Reducing racial bias among health care providers: lessons from social-cognitive psychology. Journal of general internal medicine, 22 (6), 882-887.

- Integrating implicit bias into counselor education Boysen, G. A. (2010). Integrating Implicit Bias Into Counselor Education. Counselor Education & Supervision, 49 (4), 210–227.

- Cognitive Biases and Errors as Cause—and Journalistic Best Practices as Effect Christian, S. (2013). Cognitive Biases and Errors as Cause—and Journalistic Best Practices as Effect. Journal of Mass Media Ethics, 28 (3), 160–174.

- Empathy intervention to reduce implicit bias in pre-service teachers Whitford, D. K., & Emerson, A. M. (2019). Empathy Intervention to Reduce Implicit Bias in Pre-Service Teachers. Psychological Reports, 122 (2), 670–688.

2.2 Overcoming Cognitive Biases and Engaging in Critical Reflection

Learning Objectives

By the end of this section, you will be able to:

- Label the conditions that make critical thinking possible.

- Classify and describe cognitive biases.

- Apply critical reflection strategies to resist cognitive biases.

To resist the potential pitfalls of cognitive biases, we have taken some time to recognize why we fall prey to them. Now we need to understand how to resist easy, automatic, and error-prone thinking in favor of more reflective, critical thinking.

Critical Reflection and Metacognition

To promote good critical thinking, put yourself in a frame of mind that allows critical reflection. Recall from the previous section that rational thinking requires effort and takes longer. However, it will likely result in more accurate thinking and decision-making. As a result, reflective thought can be a valuable tool in correcting cognitive biases. The critical aspect of critical reflection involves a willingness to be skeptical of your own beliefs, your gut reactions, and your intuitions. Additionally, the critical aspect engages in a more analytic approach to the problem or situation you are considering. You should assess the facts, consider the evidence, try to employ logic, and resist the quick, immediate, and likely conclusion you want to draw. By reflecting critically on your own thinking, you can become aware of the natural tendency for your mind to slide into mental shortcuts.

This process of critical reflection is often called metacognition in the literature of pedagogy and psychology. Metacognition means thinking about thinking and involves the kind of self-awareness that engages higher-order thinking skills. Cognition, or the way we typically engage with the world around us, is first-order thinking, while metacognition is higher-order thinking. From a metacognitive frame, we can critically assess our thought process, become skeptical of our gut reactions and intuitions, and reconsider our cognitive tendencies and biases.

To improve metacognition and critical reflection, we need to encourage the kind of self-aware, conscious, and effortful attention that may feel unnatural and may be tiring. Typical activities associated with metacognition include checking, planning, selecting, inferring, self-interrogating, interpreting an ongoing experience, and making judgments about what one does and does not know (Hacker, Dunlosky, and Graesser 1998). By practicing metacognitive behaviors, you are preparing yourself to engage in the kind of rational, abstract thought that will be required for philosophy.

Good study habits, including managing your workspace, giving yourself plenty of time, and working through a checklist, can promote metacognition. When you feel stressed out or pressed for time, you are more likely to make quick decisions that lead to error. Stress and lack of time also discourage critical reflection because they rob your brain of the resources necessary to engage in rational, attention-filled thought. By contrast, when you relax and give yourself time to think through problems, you will be clearer, more thoughtful, and less likely to rush to the first conclusion that leaps to mind. Similarly, background noise, distracting activity, and interruptions will prevent you from paying attention. You can use this checklist to try to encourage metacognition when you study:

- Check your work.

- Plan ahead.

- Select the most useful material.

- Infer from your past grades to focus on what you need to study.

- Ask yourself how well you understand the concepts.

- Check your weaknesses.

- Assess whether you are following the arguments and claims you are working on.

Cognitive Biases

In this section, we will examine some of the most common cognitive biases so that you can be aware of traps in thought that can lead you astray. Cognitive biases are closely related to informal fallacies. Both fallacies and biases provide examples of the ways we make errors in reasoning.

Connections

See the chapter on logic and reasoning for an in-depth exploration of informal fallacies.

Watch the video to orient yourself before reading the text that follows.

Cognitive Biases 101, with Peter Bauman

Confirmation Bias

One of the most common cognitive biases is confirmation bias, which is the tendency to search for, interpret, favor, and recall information that confirms or supports your prior beliefs. Like all cognitive biases, confirmation bias serves an important function. For instance, one of the most reliable forms of confirmation bias is the belief in our shared reality. Suppose it is raining. When you first hear the patter of raindrops on your roof or window, you may think it is raining. You then look for additional signs to confirm your conclusion, and when you look out the window, you see rain falling and puddles of water accumulating. Most likely, you will not be looking for irrelevant or contradictory information. You will be looking for information that confirms your belief that it is raining. Thus, you can see how confirmation bias, which rests on the idea that the world does not change dramatically over time, is an important tool for navigating in our environment.

Unfortunately, as with most heuristics, we tend to apply this sort of thinking inappropriately. One example that has recently received a lot of attention is the way in which confirmation bias has increased political polarization. When searching for information on the internet about an event or topic, most people look for information that confirms their prior beliefs rather than what undercuts them. The pervasive presence of social media in our lives is exacerbating the effects of confirmation bias since the computer algorithms used by social media platforms steer people toward content that reinforces their current beliefs and predispositions. These multimedia tools are especially problematic when our beliefs are incorrect (for example, they contradict scientific knowledge) or antisocial (for example, they support violent or illegal behavior). Thus, social media and the internet have created a situation in which confirmation bias can be “turbocharged” in ways that are destructive for society.

Confirmation bias is a result of the brain’s limited ability to process information. Peter Wason (1960) conducted early experiments identifying this kind of bias. He asked subjects to identify the rule that applies to a sequence of numbers (for instance, 2, 4, 6). Subjects were told to generate examples to test their hypothesis. What he found is that once a subject settled on a particular hypothesis, they were much more likely to select examples that confirmed their hypothesis rather than negated it. As a result, they were unable to identify the real rule (any ascending sequence of numbers) and failed to “falsify” their initial assumptions. Falsification is an important tool in the scientist’s toolkit when they are testing hypotheses and is an effective way to avoid confirmation bias.
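
The difference between confirming and falsifying tests can be sketched in a few lines of Python. This is only an illustration, not Wason's procedure: the subject's hypothesis ("ascending even numbers") and the test triples are assumptions made up for the example. A test is informative only when the subject's hypothesis and the real rule give different answers.

```python
def true_rule(triple):
    """The real rule in the task: any strictly ascending sequence."""
    a, b, c = triple
    return a < b < c


def hypothesis(triple):
    """A narrower guess a subject might settle on (hypothetical):
    ascending numbers that are all even."""
    return true_rule(triple) and all(n % 2 == 0 for n in triple)


def informative(tests):
    """Keep only the tests where the guess and the real rule disagree."""
    return [t for t in tests if hypothesis(t) != true_rule(t)]


# Triples chosen because they fit the guess (confirming tests).
confirming_tests = [(4, 6, 8), (10, 12, 14), (2, 8, 20)]
# Triples chosen because they break the guess (falsifying tests).
falsifying_tests = [(1, 2, 3), (3, 7, 100), (8, 4, 2)]

print(informative(confirming_tests))  # []
print(informative(falsifying_tests))  # [(1, 2, 3), (3, 7, 100)]
```

Every confirming test gets a "yes" under both the guess and the real rule, so it cannot tell them apart. Only triples that violate the guess, such as (1, 2, 3), reveal that the real rule is broader.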

In philosophy, you will be presented with different arguments on issues, such as the nature of the mind or the best way to act in a given situation. You should take your time to reason through these issues carefully and consider alternative views. What you believe to be the case may be right, but you may also fall into the trap of confirmation bias, seeing confirming evidence as better and more convincing than evidence that calls your beliefs into question.

Anchoring Bias

Confirmation bias is closely related to another bias known as anchoring. Anchoring bias refers to our tendency to rely on initial values, prices, or quantities when estimating the actual value, price, or quantity of something. If you are presented with a quantity, even if that number is clearly arbitrary, you will have a hard time discounting it in your subsequent calculations; the initial value “anchors” subsequent estimates. For instance, Tversky and Kahneman (1974) reported an experiment in which subjects were asked to estimate the number of African nations in the United Nations. First, the experimenters spun a wheel of fortune in front of the subjects that produced a random number between 0 and 100. Let’s say the wheel landed on 79. Subjects were asked whether the number of nations was higher or lower than the random number. Subjects were then asked to estimate the real number of nations. Even though the initial anchoring value was random, people in the study found it difficult to deviate far from that number. For subjects receiving an initial value of 10, the median estimate of nations was 25, while for subjects receiving an initial value of 65, the median estimate was 45.

In the same paper, Tversky and Kahneman described the way that anchoring bias interferes with statistical reasoning. In a number of scenarios, subjects made irrational judgments about statistics because of the way the question was phrased (i.e., they were tricked when an anchor was inserted into the question). Instead of expending the cognitive energy needed to solve the statistical problem, subjects were much more likely to “go with their gut,” or think intuitively. That type of reasoning generates anchoring bias. When you do philosophy, you will be confronted with some formal and abstract problems that will challenge you to engage in thinking that feels difficult and unnatural. Resist the urge to latch on to the first thought that jumps into your head, and try to think the problem through with all the cognitive resources at your disposal.

Availability Heuristic

The availability heuristic refers to the tendency to evaluate new information based on the most recent or most easily recalled examples. The availability heuristic occurs when people take easily remembered instances as being more representative than they objectively are (i.e., based on statistical probabilities). In very simple situations, the availability of instances is a good guide to judgments. Suppose you are wondering whether you should plan for rain. It may make sense to anticipate rain if it has been raining a lot in the last few days since weather patterns tend to linger in most climates. More generally, scenarios that are well-known to us, dramatic, recent, or easy to imagine are more available for retrieval from memory. Therefore, if we easily remember an instance or scenario, we may incorrectly think that the chances are high that the scenario will be repeated. For instance, people in the United States estimate the probability of dying by violent crime or terrorism much more highly than they ought to. In fact, these are extremely rare occurrences compared to death by heart disease, cancer, or car accidents. But stories of violent crime and terrorism are prominent in the news media and fiction. Because these vivid stories are dramatic and easily recalled, we have a skewed view of how frequently violent crime occurs.

Tribalism

Another more loosely defined category of cognitive bias is the tendency for human beings to align themselves with groups with whom they share values and practices. The tendency toward tribalism is an evolutionary advantage for social creatures like human beings. By forming groups to share knowledge and distribute work, we are much more likely to survive. Not surprisingly, human beings with pro-social behaviors persist in the population at higher rates than human beings with antisocial tendencies. Pro-social behaviors, however, go beyond wanting to communicate and align ourselves with other human beings; we also tend to see outsiders as a threat. As a result, tribalistic tendencies both reinforce allegiances among in-group members and increase animosity toward out-group members.

Tribal thinking makes it hard for us to objectively evaluate information that either aligns with or contradicts the beliefs held by our group or tribe. This effect can be demonstrated even when in-group membership is not real or is based on some superficial feature of the person, such as the way they look or an article of clothing they are wearing. A related bias is called the bandwagon fallacy. The bandwagon fallacy can lead you to conclude that you ought to do something or believe something because many other people do or believe the same thing. While other people can provide guidance, they are not always reliable. Furthermore, just because many people believe something doesn’t make it true. Watch the video below to improve your “tribal literacy” and understand the dangers of this type of thinking.

The Dangers of Tribalism, Kevin deLaplante

Sunk Cost Fallacy

Sunk costs refer to the time, energy, money, or other costs that have been paid in the past. These costs are “sunk” because they cannot be recovered. The sunk cost fallacy is thinking that attaches a value to things in which you have already invested resources that is greater than the value those things have today. Human beings have a natural tendency to hang on to whatever they invest in and are loath to give something up even after it has been proven to be a liability. For example, a person may have sunk a lot of money into a business over time, and the business may clearly be failing. Nonetheless, the businessperson will be reluctant to close shop or sell the business because of the time, money, and emotional energy they have spent on the venture. This is the behavior of “throwing good money after bad” by continuing to irrationally invest in something that has lost its worth because of emotional attachment to the failed enterprise. People will engage in this kind of behavior in all kinds of situations and may continue a friendship, a job, or a marriage for the same reason—they don’t want to lose their investment even when they are clearly headed for failure and ought to cut their losses.

A similar type of faulty reasoning leads to the gambler’s fallacy, in which a person reasons that future chance events will be more likely if they have not happened recently. For instance, if I flip a fair coin many times in a row, I may get a string of heads. But even if I flip several heads in a row, that does not make it more likely I will flip tails on the next coin flip. Each coin flip is statistically independent, and there is an equal chance of turning up heads or tails. The gambler, like the reasoner from sunk costs, is tied to the past when they should be reasoning about the present and future.
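
The independence of coin flips can be checked with a short simulation. The sketch below is an illustration only: the function name, the streak length of three, the number of flips, and the random seed are all assumptions chosen for the example. It counts how often tails comes up immediately after a run of at least three heads.

```python
import random


def tails_rate_after_streak(n_flips=200_000, streak=3, seed=0):
    """Share of flips that land tails right after `streak` or more heads."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    run = 0            # length of the current run of heads
    after_streak = 0   # flips that follow a long enough run of heads
    tails_after = 0    # how many of those flips came up tails
    for _ in range(n_flips):
        is_heads = rng.random() < 0.5  # simulated fair coin
        if run >= streak:
            after_streak += 1
            if not is_heads:
                tails_after += 1
        run = run + 1 if is_heads else 0
    return tails_after / after_streak


rate = tails_rate_after_streak()
print(f"Tails rate after three heads in a row: {rate:.3f}")
```

If the gambler's intuition were right, the printed rate would sit well above one half. For a fair, independent coin it should stay close to 0.5 no matter how long the preceding streak is.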

There are important social and evolutionary purposes for past-looking thinking. Sunk-cost thinking keeps parents engaged in the growth and development of their children after they are born. Sunk-cost thinking builds loyalty and affection among friends and family. More generally, a commitment to sunk costs encourages us to engage in long-term projects, and this type of thinking has the evolutionary purpose of fostering culture and community. Nevertheless, it is important to periodically reevaluate our investments in both people and things.

In recent ethical scholarship, there is some debate about how to assess the sunk costs of moral decisions. Consider the case of war. Just-war theory dictates that wars may be justified in cases where the harm imposed on the adversary is proportional to the good gained by the act of defense or deterrence. It may be that, at the start of the war, those costs seemed proportional. But after the war has dragged on for some time, it may seem that the objective cannot be obtained without a greater quantity of harm than had been initially imagined. Should the evaluation of whether a war is justified estimate the total amount of harm done or prospective harm that will be done going forward (Lazar 2018)? Such questions do not have easy answers.

Table 2.1 summarizes these common cognitive biases.

Think Like a Philosopher

As we have seen, cognitive biases are built into the way human beings process information. They are common to us all, and it takes self-awareness and effort to overcome the tendency to fall back on biases. Consider a time when you have fallen prey to one of the five cognitive biases described above. What were the circumstances? Recall your thought process. Were you aware at the time that your thinking was misguided? What were the consequences of succumbing to that cognitive bias?

Write a short paragraph describing how that cognitive bias allowed you to make a decision you now realize was irrational. Then write a second paragraph describing how, with the benefit of time and distance, you would have thought differently about the incident that triggered the bias. Use the tools of critical reflection and metacognition to improve your approach to this situation. What might have been the consequences of behaving differently? Finally, write a short conclusion describing what lesson you take from reflecting back on this experience. Does it help you understand yourself better? Will you be able to act differently in the future? What steps can you take to avoid cognitive biases in your thinking today?

This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/introduction-philosophy/pages/1-introduction

- Authors: Nathan Smith

- Publisher/website: OpenStax

- Book title: Introduction to Philosophy

- Publication date: Jun 15, 2022

- Location: Houston, Texas

- Book URL: https://openstax.org/books/introduction-philosophy/pages/1-introduction

- Section URL: https://openstax.org/books/introduction-philosophy/pages/2-2-overcoming-cognitive-biases-and-engaging-in-critical-reflection

© Dec 19, 2023 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.

Identifying and Avoiding Bias in Research

This narrative review provides an overview on the topic of bias as part of Plastic and Reconstructive Surgery's series of articles on evidence-based medicine. Bias can occur in the planning, data collection, analysis, and publication phases of research. Understanding research bias allows readers to critically and independently review the scientific literature and avoid treatments which are suboptimal or potentially harmful. A thorough understanding of bias and how it affects study results is essential for the practice of evidence-based medicine.

The British Medical Journal recently called evidence-based medicine (EBM) one of the fifteen most important milestones since the journal's inception [1]. The concept of EBM was created in the early 1980s as clinical practice became more data-driven and literature based [1, 2]. EBM is now an essential part of medical school curriculum [3]. For plastic surgeons, the ability to practice EBM is limited. Too frequently, published research in plastic surgery demonstrates poor methodologic quality, although a gradual trend toward higher level study designs has been noted over the past ten years [4, 5]. In order for EBM to be an effective tool, plastic surgeons must critically interpret study results and must also evaluate the rigor of study design and identify study biases. As the leadership of Plastic and Reconstructive Surgery seeks to provide higher quality science to enhance patient safety and outcomes, a discussion of the topic of bias is essential for the journal's readers. In this paper, we will define bias and identify potential sources of bias which occur during study design, study implementation, and during data analysis and publication. We will also make recommendations on avoiding bias before, during, and after a clinical trial.

I. Definition and scope of bias

Bias is defined as any tendency which prevents unprejudiced consideration of a question 6 . In research, bias occurs when “systematic error [is] introduced into sampling or testing by selecting or encouraging one outcome or answer over others” 7 . Bias can occur at any phase of research, including study design or data collection, as well as in the process of data analysis and publication ( Figure 1 ). Bias is not a dichotomous variable. Interpretation of bias cannot be limited to a simple inquisition: is bias present or not? Instead, reviewers of the literature must consider the degree to which bias was prevented by proper study design and implementation. As some degree of bias is nearly always present in a published study, readers must also consider how bias might influence a study's conclusions 8 . Table 1 provides a summary of different types of bias, when they occur, and how they might be avoided.

Figure 1. Major sources of bias in clinical research.

Table 1. Tips to avoid different types of bias during a trial.

Chance and confounding can be quantified and/or eliminated through proper study design and data analysis. However, only the most rigorously conducted trials can completely exclude bias as an alternate explanation for an association. Unlike random error, which results from sampling variability and which decreases as sample size increases, bias is independent of both sample size and statistical significance. Bias can cause estimates of association to be either larger or smaller than the true association. In extreme cases, bias can cause a perceived association which is directly opposite of the true association. For example, prior to 1998, multiple observational studies demonstrated that hormone replacement therapy (HRT) decreased risk of heart disease among post-menopausal women 8 , 9 . However, more recent studies, rigorously designed to minimize bias, have found the opposite effect (i.e., an increased risk of heart disease with HRT) 10 , 11 .
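
The contrast between random error and bias can be illustrated with a small simulation. The sketch below is not drawn from any study; the true value, the size of the systematic offset, and the noise level are all hypothetical numbers chosen for illustration. It shows the standard error shrinking as the sample grows while the estimate stays offset from the truth by roughly the same amount.

```python
import random
import statistics


def simulate_estimate(n, true_value=10.0, bias=2.0, noise_sd=5.0, seed=0):
    """Return (sample mean, standard error) for n biased, noisy measurements.

    All parameter values are hypothetical. Each measurement equals the true
    value plus a fixed systematic offset (bias) plus random noise.
    """
    rng = random.Random(seed)
    measurements = [
        true_value + bias + rng.gauss(0.0, noise_sd) for _ in range(n)
    ]
    mean = statistics.fmean(measurements)
    standard_error = statistics.stdev(measurements) / n ** 0.5
    return mean, standard_error


if __name__ == "__main__":
    for n in (100, 10_000, 100_000):
        mean, se = simulate_estimate(n)
        # The standard error falls with n; the distance from the true
        # value (10.0) does not, because the offset is systematic.
        print(f"n={n:>7}  mean={mean:.2f}  standard error={se:.3f}")
```

A larger sample makes the estimate more precise, but under these assumptions it becomes precisely wrong: the narrow confidence interval is centered near 12 rather than 10.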

II. Pre-trial bias

Sources of pre-trial bias include errors in study design and in patient recruitment. These errors can cause fatal flaws in the data which cannot be compensated during data analysis. In this section, we will discuss the importance of clearly defining both risk and outcome, the necessity of standardized protocols for data collection, and the concepts of selection and channeling bias.

Bias during study design

Risk and outcome should be clearly defined prior to study implementation. Subjective measures, such as the Baker grade of capsular contracture, can have high inter-rater variability and the arbitrary cutoffs may make distinguishing between groups difficult 12 . This can inflate the observed variance seen with statistical analysis, making a statistically significant result less likely. Objective, validated risk stratification models such as those published by Caprini 13 and Davison 14 for venous thromboembolism, or standardized outcomes measures such as the Breast-Q 15 should have lower inter-rater variability and are more appropriate for use. When risk or exposure is retrospectively identified via medical chart review, it is prudent to cross-reference data sources for confirmation. For example, a chart reviewer should confirm a patient-reported history of sacral pressure ulcer closure with physical exam findings and by review of an operative report; this will decrease discrepancies when compared to using a single data source.

Data collection methods may include questionnaires, structured interviews, physical exam, laboratory or imaging data, or medical chart review. Standardized protocols for data collection, including training of study personnel, can minimize inter-observer variability when multiple individuals are gathering and entering data. Blinding of study personnel to the patient's exposure and outcome status, or if not possible, having different examiners measure the outcome than those who evaluated the exposure, can also decrease bias. Due to the presence of scars, patients and those directly examining them cannot be blinded to whether or not an operation was received. For comparisons of functional or aesthetic outcomes in surgical procedures, an independent examiner can be blinded to the type of surgery performed. For example, a hand surgery study comparing lag screw versus plate and screw fixation of metacarpal fractures could standardize the surgical approach (and thus the surgical scar) and have functional outcomes assessed by a blinded examiner who had not viewed the operative notes or x-rays. Blinded examiners can also review imaging and confirm diagnoses without examining patients 16 , 17 .

Selection bias

Selection bias may occur during identification of the study population. The ideal study population is clearly defined, accessible, reliable, and at increased risk to develop the outcome of interest. When a study population is identified, selection bias occurs when the criteria used to recruit and enroll patients into separate study cohorts are inherently different. This can be a particular problem with case-control and retrospective cohort studies where exposure and outcome have already occurred at the time individuals are selected for study inclusion 18 . Prospective studies (particularly randomized, controlled trials) where the outcome is unknown at time of enrollment are less prone to selection bias.

Channeling bias

Channeling bias occurs when patient prognostic factors or degree of illness dictates the study cohort into which patients are placed. This bias is more likely in non-randomized trials when patient assignment to groups is performed by medical personnel. Channeling bias is commonly seen in pharmaceutical trials comparing old and new drugs to one another 19 . In surgical studies, channeling bias can occur if one intervention carries a greater inherent risk 20 . For example, hand surgeons managing fractures may be more aggressive with operative intervention in young, healthy individuals with low perioperative risk. Similarly, surgeons might tolerate imperfect reduction in the elderly, a group at higher risk for perioperative complications and with decreased need for perfect hand function. Thus, a selection bias exists for operative intervention in young patients. Now imagine a retrospective study of operative versus non-operative management of hand fractures. In this study, young patients would be channeled into the operative study cohort and the elderly would be channeled into the non-operative study cohort.
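
The hand fracture scenario can be sketched as a simulation. Everything in the block below is hypothetical: the function scores, the channeling probabilities, and the assumption that surgery has no effect at all on outcome. Under those assumptions, a naive comparison of cohorts still favors surgery, simply because young patients with better baseline function were channeled into the operative group. Comparing within age strata removes most of the apparent effect.

```python
import random
import statistics


def simulate_channeling(n=20_000, seed=1):
    """Simulate (young, operative, score) tuples with NO true treatment effect.

    Hypothetical assumptions: half the patients are young, young patients are
    channeled to surgery 80% of the time and elderly patients 20% of the time,
    and the function score depends only on age group plus random noise.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        young = rng.random() < 0.5
        operative = rng.random() < (0.8 if young else 0.2)
        baseline = 80.0 if young else 60.0
        score = baseline + rng.gauss(0.0, 10.0)  # surgery adds nothing
        rows.append((young, operative, score))
    return rows


def mean_difference(rows):
    """Mean score of operative patients minus mean score of non-operative."""
    operative = [score for _, op, score in rows if op]
    non_operative = [score for _, op, score in rows if not op]
    return statistics.fmean(operative) - statistics.fmean(non_operative)


rows = simulate_channeling()
naive = mean_difference(rows)
young_only = mean_difference([r for r in rows if r[0]])
elderly_only = mean_difference([r for r in rows if not r[0]])

if __name__ == "__main__":
    print(f"naive difference:        {naive:.1f}")
    print(f"difference, young only:  {young_only:.1f}")
    print(f"difference, elderly only:{elderly_only:.1f}")
```

Stratification only corrects for prognostic factors that were measured. Randomization remains the more general safeguard because it also balances factors the investigators did not record.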

III. Bias during the clinical trial

Information bias is a blanket classification of error in which bias occurs in the measurement of an exposure or outcome. Thus, the information obtained and recorded from patients in different study groups is unequal in some way 18 . Many subtypes of information bias can occur, including interviewer bias, chronology bias, recall bias, patient loss to follow-up, bias from misclassification of patients, and performance bias.

Interviewer bias

Interviewer bias refers to a systematic difference between how information is solicited, recorded, or interpreted 18 , 21 . Interviewer bias is more likely when disease status is known to the interviewer. An example of this would be a patient with Buerger's disease enrolled in a case-control study which attempts to retrospectively identify risk factors. If the interviewer is aware that the patient has Buerger's disease, he/she may probe for risk factors, such as smoking, more extensively (“Are you sure you've never smoked? Never? Not even once?”) than in control patients. Interviewer bias can be minimized or eliminated if the interviewer is blinded to the outcome of interest or if the outcome of interest has not yet occurred, as in a prospective trial.

Chronology bias

Chronology bias occurs when historic controls are used as a comparison group for patients undergoing an intervention. Secular trends within the medical system could affect how disease is diagnosed, how treatments are administered, or how preferred outcome measures are obtained 20 . Each of these differences could act as a source of inequality between the historic controls and intervention groups. For example, many microsurgeons currently use preoperative imaging to guide perforator flap dissection. Imaging has been shown to significantly reduce operative time 40 . A retrospective study of flap dissection time might conclude that dissection time decreases as surgeon experience improves. More likely, the use of preoperative imaging caused a notable reduction in dissection time. Thus, chronology bias is present. Chronology bias can be minimized by conducting prospective cohort or randomized controlled trials, or by using historic controls from only the very recent past.

Recall bias

Recall bias refers to the phenomenon in which the outcomes of treatment (good or bad) may color subjects' recollections of events prior to or during the treatment process. One common example is the perceived association between autism and the MMR vaccine. This vaccine is given to children during a prominent period of language and social development. As a result, parents of children with autism are more likely to recall immunization administration during this developmental regression, and a causal relationship may be perceived 22 . Recall bias is most likely when exposure and disease status are both known at time of study, and can also be problematic when patient interviews (or subjective assessments) are used as a primary data source. When patient-report data are used, some investigators recommend that the trial design mask the intent of questions in structured interviews or surveys and/or use only validated scales for data acquisition 23 .

Transfer bias

In almost all clinical studies, subjects are lost to follow-up. In these instances, investigators must consider whether these patients are fundamentally different from those retained in the study. Researchers must also consider how to treat patients lost to follow-up in their analysis. Well-designed trials usually have protocols in place to attempt telephone or mail contact for patients who miss clinic appointments. Transfer bias can occur when study cohorts have unequal losses to follow-up. This is particularly relevant in surgical trials when study cohorts are expected to require different follow-up regimens. Consider a study evaluating outcomes in inferior pedicle Wise pattern versus vertical scar breast reductions. Because the Wise pattern patients often have fewer contour problems in the immediate postoperative period, they may be less likely to return for long-term follow-up. By contrast, patient concerns over resolving skin redundancies in the vertical reduction group may make these individuals more likely to return for postoperative evaluations by their surgeons. Some authors suggest that patient loss to follow-up can be minimized by offering convenient office hours, personalized patient contact via phone or email, and physician visits to the patient's home 20 , 24 .
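
The arithmetic behind transfer bias can be worked through directly. The sketch below is illustrative only: the complication rate and the follow-up probabilities are hypothetical, and the cohorts are labeled A and B rather than tied to either breast reduction technique. Both cohorts have the same true rate of problems, but in cohort A patients without problems are less likely to return, so the rate observed among returning patients is inflated.

```python
def observed_rate(true_rate, p_return_if_problem, p_return_if_no_problem):
    """Problem rate seen among patients who return for follow-up.

    true_rate: actual proportion of patients with a problem (hypothetical).
    p_return_if_problem / p_return_if_no_problem: probability that a patient
    with / without a problem comes back for evaluation (hypothetical).
    """
    returning_with_problem = true_rate * p_return_if_problem
    returning_without_problem = (1.0 - true_rate) * p_return_if_no_problem
    return returning_with_problem / (
        returning_with_problem + returning_without_problem
    )


# Both cohorts share a true problem rate of 20%.
# Cohort A: patients who are doing well often skip follow-up.
cohort_a = observed_rate(0.20, p_return_if_problem=0.9, p_return_if_no_problem=0.4)
# Cohort B: follow-up does not depend on how the patient is doing.
cohort_b = observed_rate(0.20, p_return_if_problem=0.9, p_return_if_no_problem=0.9)

if __name__ == "__main__":
    print(f"cohort A observed rate: {cohort_a:.2f}")  # about 0.36
    print(f"cohort B observed rate: {cohort_b:.2f}")  # about 0.20
```

In this sketch the cohorts appear to differ by 16 percentage points even though their true rates are identical, which is why unequal and outcome-dependent losses to follow-up deserve scrutiny.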

Bias from misclassification of exposure or outcome

Misclassification of exposure can occur if the exposure itself is poorly defined or if proxies of exposure are utilized. For example, this might occur in a study evaluating efficacy of becaplermin (Regranex, Systagenix Wound Management) versus saline dressings for management of diabetic foot ulcers. Significantly different results might be obtained if the becaplermin cohort of patients included those prescribed the medication, rather than patients directly observed to be applying the medication. Similarly, misclassification of outcome can occur if non-objective measures are used. For example, clinical signs and symptoms are notoriously unreliable indicators of venous thromboembolism. Patients are accurately diagnosed by physical exam less than 50% of the time 25 . Thus, using Homans' sign (calf pain elicited by extreme dorsiflexion) or pleuritic chest pain as study measures for deep venous thrombosis or pulmonary embolus would be inappropriate. Venous thromboembolism is appropriately diagnosed using objective tests with high sensitivity and specificity, such as duplex ultrasound or spiral CT scan 26 - 28 .
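
The effect of an unreliable outcome measure on a study's result can be sketched numerically. In the block below, the true risks in the two cohorts and the sensitivity and specificity assigned to each diagnostic approach are hypothetical values chosen for illustration, not figures from the cited studies. When the same imperfect measure is applied to both cohorts, a true doubling of risk is blurred toward no difference.

```python
def observed_risk(true_risk, sensitivity, specificity):
    """Proportion of patients labeled positive by an imperfect test.

    True cases are detected with probability `sensitivity`; non-cases are
    falsely labeled positive with probability (1 - specificity).
    """
    true_positives = sensitivity * true_risk
    false_positives = (1.0 - specificity) * (1.0 - true_risk)
    return true_positives + false_positives


def observed_risk_ratio(risk_exposed, risk_unexposed, sensitivity, specificity):
    """Risk ratio a study would report when using the imperfect test."""
    return observed_risk(risk_exposed, sensitivity, specificity) / observed_risk(
        risk_unexposed, sensitivity, specificity
    )


# Hypothetical true risks: 10% versus 5%, a true risk ratio of 2.0.
TRUE_EXPOSED, TRUE_UNEXPOSED = 0.10, 0.05

# Hypothetical test characteristics for each diagnostic approach.
rr_clinical_exam = observed_risk_ratio(TRUE_EXPOSED, TRUE_UNEXPOSED, 0.50, 0.80)
rr_objective_test = observed_risk_ratio(TRUE_EXPOSED, TRUE_UNEXPOSED, 0.95, 0.98)

if __name__ == "__main__":
    print(f"risk ratio with clinical signs: {rr_clinical_exam:.2f}")   # roughly 1.07
    print(f"risk ratio with objective test: {rr_objective_test:.2f}")  # roughly 1.70
```

Under these assumed values, the study relying on clinical signs would report almost no association, while the study using an objective test would recover most of the true effect.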

Performance bias

In surgical trials, performance bias may complicate efforts to establish a cause-effect relationship between procedures and outcomes. As plastic surgeons, we are all aware that surgery is rarely standardized and that technical variability occurs between surgeons and among a single surgeon's cases. Variations by surgeon commonly occur in surgical plan, flow of operation, and technical maneuvers used to achieve the desired result. The surgeon's experience may have a significant effect on the outcome. To minimize or avoid performance bias, investigators can consider cluster stratification of patients, in which all patients having an operation by one surgeon or at one hospital are placed into the same study group, as opposed to placing individual patients into groups. This will minimize performance variability within groups and decrease performance bias. Cluster stratification of patients may allow surgeons to perform only the surgery with which they are most comfortable or experienced, providing a more valid assessment of the procedures being evaluated. If the operation in question has a steep learning curve, cluster stratification may make generalization of study results to the everyday plastic surgeon difficult.

IV. Bias after a trial

Bias after a trial's conclusion can occur during data analysis or publication. In this section, we will discuss citation bias, evaluate the role of confounding in data analysis, and provide a brief discussion of internal and external validity.

Citation bias