Vol. 6(11), November 2020

Multimedia tools in the teaching and learning processes: A systematic review

M.D. Abdulrahaman

a Department of Information and Communication Science, University of Ilorin, Ilorin, Nigeria

b Department of Telecommunication Science, University of Ilorin, Ilorin, Nigeria

A.A. Oloyede

N.T. Surajudeen-Bakinde

c Department of Electrical and Electronics Engineering, University of Ilorin, Ilorin, Nigeria

L.A. Olawoyin

O.V. Mejabi, Y.O. Imam-Fulani

d Department of Religions, Faculty of Arts, University of Ilorin, Ilorin, Nigeria

e Department of Mass Communication, University of Ilorin, Ilorin, Nigeria

Access to quality education is still a major bottleneck in developing countries. Efforts to open access to a large majority of citizens in developing nations have explored different strategies, including the use of multimedia technology. This paper provides a systematic review of different multimedia tools in the teaching and learning processes, with a view to examining how multimedia technologies have proven to be a veritable strategy for bridging the gap in the provision of unrestricted access to quality education and for improving learners' performance. The review process includes an extensive search of relevant scientific literature, selection of relevant studies using predetermined inclusion criteria, literature analysis, and synthesis of the findings of the various studies that have investigated how multimedia has been used for learning and teaching. The review examines various case study reports of multimedia tools, their success and limiting factors, application areas, evaluation methodologies, technology components, and the age groups targeted by the tools. Future research directions are also provided. Apart from text and images, existing tools were found to have multimedia components such as audio, video, animation and 3-D. The study concluded that most of the multimedia solutions deployed for teaching and learning are tailored to the pedagogical content of the subject of interest and to their user audience, while the success of the different multimedia tools used with the various target groups and subjects can be attributed to the technologies and components embedded in their development.

Education, Media in education, Teaching/learning strategies, Pedagogical issues, Systematic review

1. Introduction

Multimedia is a combination of more than one media type, such as text (alphabetic or numeric), symbols, images, pictures, audio, video and animations, usually delivered with the aid of technology to enhance understanding or memorization (Guan et al., 2018). It supports verbal instruction with static and dynamic images, in the form of visualization technology, for better expression and comprehension (Alemdag and Cagiltay, 2018; Chen and Liu, 2008). The hardware and software used to create and run multimedia applications are collectively known as multimedia technology (Kapi et al., 2017). Multimedia technology has characteristics such as integration, diversity and interaction that enable people to communicate information or ideas with digital and print elements. In this context, digital and print elements refer to the multimedia-based applications or tools used to deliver information to people for a better understanding of concepts.

Indeed, various aspects of human endeavour, especially the educational sector, are being transformed by the advent of Information and Communication Technology (ICT). ICT involves the use of hardware and software to collect, process, store, present and share information, mostly in digital form. Multimedia technology is an important aspect of ICT that deals with how information can be represented and presented digitally using different media such as text, audio and video, among others (Guan et al., 2018). It combines several technologies to provide information in the best possible formats, packages and sizes.

However, when multimedia applications are used in the classroom or for other educational purposes, their design quality and sophistication must be high enough to combine the different elements of the cognitive process so as to mimic the teacher as closely as possible. Many types of multimedia applications are available on the market today, and they have been deployed for different educational purposes, including Mathematics classes, Social Sciences, Sciences, Physiology, Physics and Physical Education (Al-Hariri and Al-Hattami, 2017; Anderson, 1993; Chen and Liu, 2008; Chen and Xia, 2012; Ilhan and Oruc, 2016; Jian-hua & Hong, 2012; Milovanović et al., 2013; Shah and Khan, 2015).

The central problem, however, remains the same: how to use these applications to give students a stimulating experience by delivering information for a better understanding of concepts. While it is important to develop various applications for effective teaching delivery, each application has its own focus area, peculiarities, target age, merits and demerits. Thus, the taxonomy and component synthesis involved in developing multimedia applications need to be investigated extensively, as these affect teaching delivery, learning and wider applicability. Some multimedia solutions have been deployed and tested and have recorded significant success, while others have not recorded even marginal success.

The success stories also vary with location, target age and deployment purpose. Therefore, the aim of this paper is to provide a systematic review of published scientific studies that examined different multimedia tools in the teaching and learning process. In other words, through a systematic review of the literature, the study aims to identify the existing multimedia-based tools for teaching and learning, and to understand their usage and limiting factors, application areas, evaluation methodologies, technology component synthesis and impacts on the education system.

To this end, the study is guided by the following research questions:

- (1) What are the existing multimedia tools in teaching and learning?

- (2) What type of multimedia component fits an audience?

- (3) What types of multimedia components are adopted in the existing tools?

- (4) What evaluation methodologies are useful for successful outcome?

- (5) What factors aid success or failure in the use of multimedia tools for teaching and learning?

The outcome of this study is intended to serve as a guide for teachers and education administrators when selecting multimedia tools and applications for teaching in schools. To this end, the study provides the taxonomy and component synthesis of some widely cited multimedia applications and examines various case studies and their results. Furthermore, barriers limiting the use of ICT and multimedia in teaching and learning are identified, and some unresolved issues and future research directions are outlined.

The rest of this paper is organised as follows: Section 2 reviews the literature on multimedia technology and its place in teaching and learning; Section 3 describes the research methodology; Section 4 presents the results; Section 5 discusses the findings; and Section 6 presents the conclusion, recommendations and suggestions for future work.

2. Literature review

2.1. Multimedia learning and teaching: concepts and resources

Multimedia or digital learning resources help learners build mental representations through different media elements that support information processing. Digital learning resources present information, made up of content and sometimes learning activities, using a combination of text, images, video and audio. Research on multimedia learning has shown that learners who combine pictures and words achieve more positive results than those who use words only (Chen and Liu, 2008; Mayer, 2008). As stated in Eady and Lockyer (2013), different pedagogical methods can be implemented with digital resources; their paper shows how the authors were able to introduce topics to students, demonstrate to them, stimulate a group, make different text types available and engage students interactively.

Generally speaking, multimedia technology for educational purposes can be categorised according to whether it is used for teaching or for learning. Some of the different multimedia or digital learning resources are listed in Eady and Lockyer (2013). Furthermore, according to Guan et al. (2018), several studies have established the importance of multimedia technologies to education and the widespread adoption of multimedia tools. Multimedia generally involves the use of technology, and its widespread adoption in education is a result of its many benefits (Almara'beh et al., 2015). Some of the benefits of multimedia application tools for teaching and learning are summarised as follows:

- (1) Ability to turn abstract concepts into concrete content

- (2) Ability to present large volumes of information within a limited time and with less effort

- (3) Ability to stimulate students' interest in learning

- (4) Ability to give teachers insight into students' progress in learning

Multimedia learning refers to the process of building mental representations from words and pictures in different contexts. Multimedia designed for learning assists this process through tools that can be used in presentations, classroom or laboratory learning, simulations, e-learning, computer games and virtual reality, allowing learners to process information in both verbal and pictorial forms (Alemdag and Cagiltay, 2018). Designing multimedia for learning requires an understanding of theories such as the cognitive theory of multimedia learning, which rests on three assumptions about how people learn from instructional multimedia materials: dual channels, limited capacity and active processing (Alemdag and Cagiltay, 2018). The dual-channel assumption holds that learners have separate channels for processing visual and auditory information. The limited-capacity assumption holds that there is a limit to the amount of information that can be processed in each channel at one time. Understanding this helps teachers avoid overwhelming learners with too much information; likewise, learners can become aware of their own information-processing limitations and capabilities. The active-processing assumption holds that human beings are active agents in selecting, organising and integrating information, and are capable of managing the forms of information they interact with.

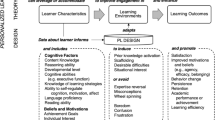

The appropriate use of ICT in teaching transforms the learning environment from teacher-centred to learner-centred (Coleman et al., 2016), just as ICT is transforming all aspects of human life (Guan et al., 2018). Coleman et al. (2016) emphasised that the shift from teaching to learning creates student-centred learning in which teachers act as facilitators rather than "sages on the stage", changing the teacher's role from knowledge transmitter to facilitator, knowledge navigator and co-learner. Keengwe et al. (2008a) concluded that the application of multimedia technologies ensures productive, interesting, motivating, interactive and high-quality delivery of classroom instruction while addressing diverse learners' needs.

2.2. Role of multimedia technology in teaching and learning

Technology is evolving, and scholars in Information Technology (IT) and educational technology continue to study how multimedia technologies can be harnessed to enhance teaching and learning. Software tools can extend teaching and learning in various fields, which matters because most fields of learning require students to gain practical experience.

The importance of multimedia technologies and applications as teaching and learning tools cannot be overemphasized, and it has been confirmed by several studies that investigated the impact of multimedia technology on the education system. Milovanović et al. (2013) demonstrated the importance of using multimedia tools in Mathematics classes and found that the multimedia tool greatly enhanced students' learning. Several other works show that multimedia enhances students' learning (Aloraini, 2012; Al-Hariri and Al-Hattami, 2017; Barzegar et al., 2012; Chen and Xia, 2012; Dalacosta et al., 2009; Jian-hua & Hong, 2012; Janda, 1992; Keengwe et al., 2008b; Kingsley and Boone, 2008; Shah and Khan, 2015; Taradi et al., 2005; Zin et al., 2013).

Multimedia communication closely resembles face-to-face communication: it is less restricted than text and ensures better understanding (Pea, 1991). Multimedia technology helps simplify abstract content, accommodates individual differences and allows diverse representations to be coordinated from different perspectives. Computer-based techniques, with suitable fonts and design, can be a very valuable interface between students and what they are learning.

Multimedia technology certainly improves teaching and learning; however, it has a number of limitations for educational purposes. These include unfriendly programming or user interfaces, limited resources, lack of the required knowledge and skills, limited time and high maintenance costs, among others (Al-Ajmi and Aljazzaf, 2020; Putra, 2018).

2.3. Multimedia evaluation techniques

Evaluation entails assessing whether a multimedia programme fulfils its intended purposes, including being useful to its target audience. Kennedy and Judd (2007) point out that developers of multimedia tools have expectations about how the tools will be used, which could be functional (focused on the interface) or educational (involving the learning designs, processes and outcomes). Different methods are used to evaluate multimedia, and most evaluations involve experiments, comparisons and surveys. The primary goal is to balance the validity of the assessment with the efficiency of the evaluation process (Mayer, 2005).

Survey research has two common key features, questionnaires (or interviews) and sampling. It is well suited to collecting data from a population too large to observe directly, and it is economical in researcher time, cost and effort compared with experimental research. However, survey research is subject to biases arising from questionnaire design and sampling, including non-response, social desirability and recall bias, and it may not give researchers an in-depth understanding of the underlying reasons for respondents' behaviour (West, 2019; Kelley et al., 2003).

Generally, comparison studies compare the outcomes of an experimental group that uses the multimedia being evaluated against those of a control group. This method has been criticised for, among other things, inadequate treatment definition, failure to specify all treatment dimensions and failure to measure treatment implementation (Yildiz and Atkins, 1992).
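To make the comparison format concrete, here is a minimal Python sketch that contrasts an experimental group with a control group, assuming hypothetical post-test scores. Welch's t statistic is our own choice of summary, not a method prescribed by the studies cited here, and it does nothing to address the treatment-definition criticisms raised by Yildiz and Atkins (1992).

```python
from math import sqrt
from statistics import mean, variance


def welch_t(experimental, control):
    """Welch's t statistic for the difference between two group means.

    Uses sample variances (n - 1 denominator) and does not assume
    that the two groups have equal variances.
    """
    se = sqrt(variance(experimental) / len(experimental)
              + variance(control) / len(control))
    return (mean(experimental) - mean(control)) / se


# Hypothetical post-test scores, not data from any reviewed study.
experimental_scores = [72, 75, 78, 80, 85]
control_scores = [65, 70, 68, 74, 73]

mean_difference = mean(experimental_scores) - mean(control_scores)
t_statistic = welch_t(experimental_scores, control_scores)
print(f"mean difference = {mean_difference}, t = {t_statistic:.2f}")
```

Turning t into a p-value would also require the Welch–Satterthwaite degrees of freedom, which this sketch deliberately omits.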

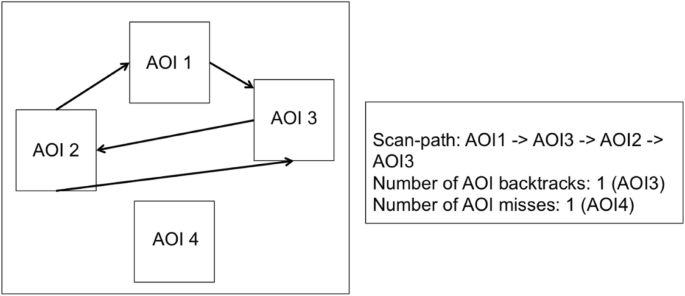

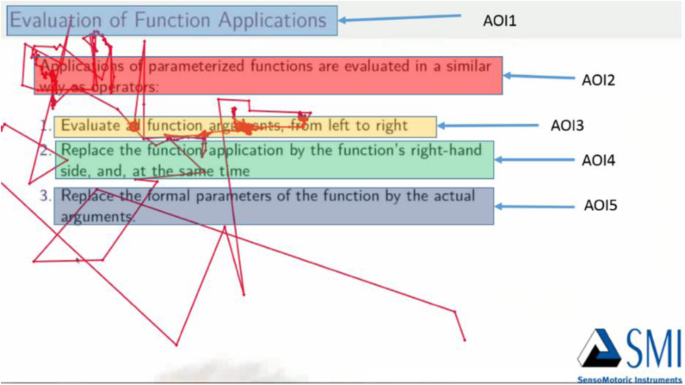

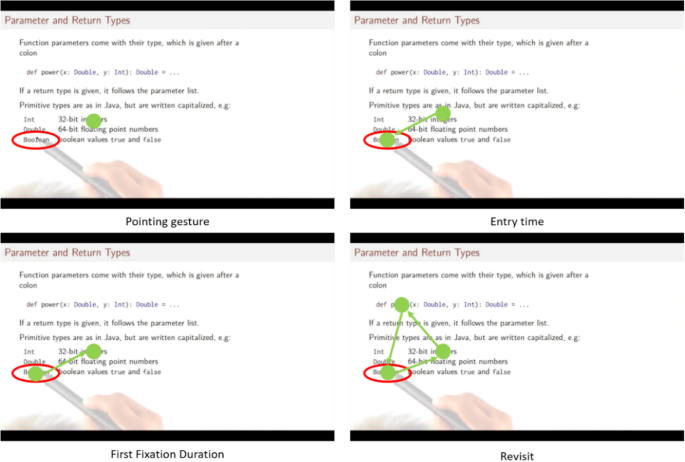

Given the subjective nature of surveys and the limitations of comparison studies, eye tracking and other measures of student behaviour, such as emotional response, are used as objective data-gathering techniques because they provide information that is not consciously controlled by the student or the researcher. Eye-tracking research is a multidisciplinary field that tracks eye movements in response to visual stimuli (Horsley et al., 2014). Eye-tracking data allow researchers to validate, empirically and objectively, how learners comprehend multimedia content, where the learner's attention goes while analysing the content, and the cognitive demand of the content (Molina et al., 2018). Eye tracking is particularly useful with children, because gathering information about children's interests and preferences with traditional techniques is more difficult (Molina et al., 2018).

Earlier attempts at analysing student behaviour while engaging with online material included analysing students' computer access logs and the frequency and duration of their participation (Morris et al., 2005). Nie and Zhe (2020) demonstrated that the conventional method of manually analysing student behaviour is becoming less effective than online classroom visual tracking. They found that online classroom visual tracking of behaviour can be divided into several components (selection, presentation, mapping, analysis and collection), together with analysis of students' facial expressions.
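The log-based measures mentioned above (frequency and duration of participation) can be sketched in a few lines of Python. The log format below, one `(student_id, session_start, session_end)` tuple per session with ISO-8601 timestamps, is a hypothetical assumption; real learning management systems export logs in many different shapes.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical access log: (student_id, session_start, session_end), ISO-8601.
ACCESS_LOG = [
    ("s01", "2019-05-01T09:00", "2019-05-01T09:45"),
    ("s02", "2019-05-01T10:00", "2019-05-01T10:20"),
    ("s01", "2019-05-02T14:00", "2019-05-02T14:30"),
]


def participation_summary(log):
    """Return {student_id: (number_of_sessions, total_minutes)}."""
    sessions = defaultdict(int)
    minutes = defaultdict(float)
    for student, start, end in log:
        duration = datetime.fromisoformat(end) - datetime.fromisoformat(start)
        sessions[student] += 1  # frequency of participation
        minutes[student] += duration.total_seconds() / 60  # duration in minutes
    return {s: (sessions[s], minutes[s]) for s in sessions}


for student, (count, total) in participation_summary(ACCESS_LOG).items():
    print(f"{student}: {count} sessions, {total:.0f} minutes")
```

Such counts say nothing about attention or comprehension, which is one reason the studies above moved towards visual tracking.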

Several works use student behaviour tracking to examine how students interact with multimedia learning tools. For instance, Agulla et al. (2009) incorporated student behaviour tracking into a learning management system (LMS) to record how much time each student spent in front of the computer examining the contents, using face tracking, fingerprint and speaker verification. Alemdag and Cagiltay (2018) conducted a systematic review of eye-tracking research on multimedia learning and found that, while this research method was on the rise, it was mainly used to understand the effects of multimedia use among higher education students. They also found that although eye movements were linked to how students select, organise and integrate information presented through multimedia technologies, metacognition and emotions were rarely investigated with eye movements.

Molina et al. (2018) used eye tracking to evaluate multimedia use by primary school children. Some studies have combined eye-tracking data with verbal data to gain insight into learners' cognition during learning and how they perceived the learning material (Stark et al., 2018).

Although eye tracking and other behavioural research methods offer an opportunity for objective evaluation, eye-movement data can be difficult to interpret (Miller, 2015), so it is not surprising that traditional evaluation methods such as questionnaires and surveys are still commonly used.

3. Research methodology

This study adopted a research design that involves a search method for identifying the articles to be reviewed to address a specific research problem, followed by a systematic review of the article contents for analysis and synthesis. The systematic review follows the procedure outlined in the Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols (PRISMA-P) 2015 guideline, as provided in Moher et al. (2015), an extension of Liberati et al. (2009). The guideline supports a carefully planned and documented systematic review that promotes consistency, transparency, accountability and integrity. Although it was originally developed for health-related studies, it is now widely adopted in other fields. The study protocol identifies the data sources for the search, the search keywords and the inclusion criteria. To aid synthesis, key points from the identified articles are summarised in tables and quantifiable components are analysed.

3.1. Data sources

The quality of a systematic review starts with the data sources used to identify the articles selected for review. This requires a thorough search and scrutiny of existing literature from a variety of academic databases and journals. The academic databases and journals considered for this review include Science Direct, IEEE Xplore, ACM Digital Library, Google Scholar, Springer, Wiley Online Library, Taylor & Francis, EBSCOhost, Web of Science and Scopus. These databases are reputable bibliographic sources, and journal or conference papers indexed in them are generally regarded as reputable and of good quality.

3.2. Search keywords

To ensure that appropriate primary search terms were used and relevant papers carefully selected, the literature search method of Kitchenham et al. (2009) was adopted. Although searching on a main string might be expected to return all related papers, this is not always the case; hence substrings were also included. Some problems associated with the databases used for the study are:

- Some do not have automatic root recognition.

- Some limit the number of words in a query (e.g., 15 words in IEEE).

- Some offer advanced or expert search.

- ACM, IEEE and some others offer none of these facilities, not even a precedence rule for operators.

The search terms used for relevant literature in the academic databases and journals specified in Section 3.1 are: “multimedia”, “multimedia technology”, “multimedia technology + Education”, “ICT impact + Education”, “multimedia tools + Education”, “multimedia + Teaching”, “multimedia + Learning”, “Application Software + Education” and “Digital + Education”.
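The search strings above can be turned into database queries mechanically. The following Python sketch assumes that “+” means Boolean AND with each side searched as a phrase, and that a word limit such as the 15-word IEEE example counts operators as words; neither convention is stated in the source, so both are assumptions. It shows why a single combined query would not fit such a limit, which is consistent with the use of separate substrings.

```python
SEARCH_TERMS = [
    "multimedia",
    "multimedia technology",
    "multimedia technology + Education",
    "ICT impact + Education",
    "multimedia tools + Education",
    "multimedia + Teaching",
    "multimedia + Learning",
    "Application Software + Education",
    "Digital + Education",
]


def to_boolean_query(term):
    """Turn 'a b + c' into '"a b" AND "c"' (assumed reading of '+')."""
    parts = [part.strip() for part in term.split("+")]
    return " AND ".join(f'"{part}"' for part in parts)


def word_count(query):
    """Count words, treating quotes and parentheses as separators (assumed rule)."""
    for char in '"()':
        query = query.replace(char, " ")
    return len(query.split())


def fits_limit(query, max_words=15):
    """Check a query against a per-database word limit (15 mirrors the IEEE example)."""
    return word_count(query) <= max_words


queries = [to_boolean_query(term) for term in SEARCH_TERMS]
combined = " OR ".join(f"({q})" for q in queries)
for q in queries:
    print(word_count(q), q)
print("combined query fits:", fits_limit(combined))
```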

3.3. Inclusion and exclusion criteria

For this purpose, each article from the consulted academic databases and libraries had an equal chance of being selected. To avoid bias in the selection, a clear principle was set and adopted as the criteria for including papers. These criteria are presented in Table 1.

Table 1

Inclusion criteria of articles.

The queries using the stated search strings produced a pool of 10,972 articles in the subjects of interest that were available online and written in English. All publications found at the time of the search (May 2019) were included; no publication-date constraint was applied. The process of screening this pool of 10,972 articles to meet the purpose of the study is outlined in the next section.

3.4. Exclusion from pooled articles

The number of articles from the database keyword search was reduced using the following elimination procedure:

- i. elimination of papers with unrelated titles and of duplicates from different sources, reducing the pool from 10,972 to 1,403;

- ii. examination of the abstracts of the 1,403 articles, reducing the pool from 1,403 to 505;

- iii. elimination based on the direction of each article after a full reading, reducing the pool from 505 to 78.
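The screening arithmetic can be checked with a short Python sketch that derives how many records each stage removed, in the style of a PRISMA flow diagram. The stage labels are our paraphrases of steps i to iii; the counts are the ones reported in the text.

```python
# Record counts remaining after each screening stage, as reported in the text.
STAGES = [
    ("Records identified", 10_972),
    ("Title screening and de-duplication", 1_403),
    ("Abstract screening", 505),
    ("Full-text reading", 78),
]


def exclusions_per_stage(stages):
    """Return (stage, records removed) for every stage after the first."""
    return [
        (label, previous - current)
        for (_, previous), (label, current) in zip(stages, stages[1:])
    ]


for label, removed in exclusions_per_stage(STAGES):
    print(f"{label}: {removed} excluded")
print("total excluded:", STAGES[0][1] - STAGES[-1][1])
```

Run as written, it reports 9,569, 898 and 427 exclusions, which together account for the 10,894 records removed between the initial pool and the final 78.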

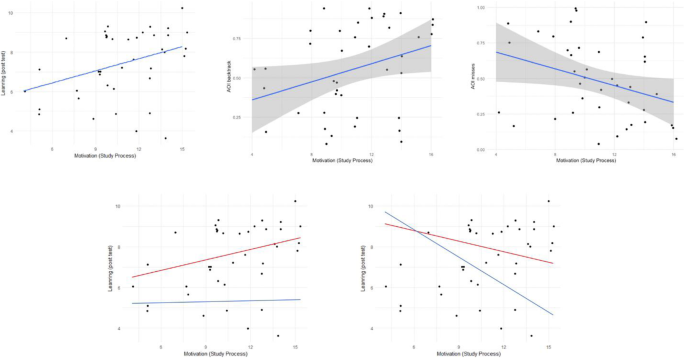

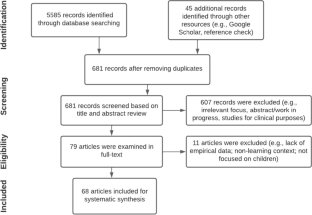

The elimination procedure is represented in Figure 1, which shows the flow of the procedure used to screen the articles for the study.

Figure 1. Literature elimination process.

Table 2 provides a summary of the databases visited and the respective number of articles (from the final 78) that were obtained from that source.

Table 2

Search databases and number of articles.

Table 2 shows the percentage of articles sourced from each academic database. Science Direct accounts for the highest number of related articles, with 25 (32%) papers, followed closely by Google Scholar with 20 (26%) and IEEE Xplore with 12 (15%). Springer accounts for 8 articles (10%), while ACM Digital Library, Taylor and Francis, Web of Science and EBSCOhost contribute 4 (5%), 2 (3%), 4 (5%) and 2 (3%), respectively. Wiley Online Library contributes the fewest, with one paper (1%).
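As a quick arithmetic check of the shares quoted above, this Python sketch recomputes each database's percentage from the reported counts, rounding to the nearest whole percent.

```python
# Article counts per database for the final 78 papers, as reported in the text.
COUNTS = {
    "Science Direct": 25,
    "Google Scholar": 20,
    "IEEE Xplore": 12,
    "Springer": 8,
    "ACM Digital Library": 4,
    "Web of Science": 4,
    "Taylor and Francis": 2,
    "EBSCOhost": 2,
    "Wiley Online Library": 1,
}


def percentage_shares(counts):
    """Each source's share of the total, rounded to the nearest whole percent."""
    total = sum(counts.values())
    return {name: round(100 * n / total) for name, n in counts.items()}


shares = percentage_shares(COUNTS)
print("total articles:", sum(COUNTS.values()))
for name, pct in shares.items():
    print(f"{name}: {COUNTS[name]} ({pct}%)")
```

The counts sum to 78 and the rounded shares happen to sum to 100% here; in general, rounding each share independently can leave the total at 99% or 101%.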

3.5. Data collection and synthesis of results

Based on the selection mechanism, 78 articles were shortlisted for analysis. Each article was reviewed, and the following information was extracted from it for tabulation: the type of multimedia tool used, the focus area of the tool, the technology deployed, the multimedia components used within the tool, how the tool was applied (for teaching, learning or both), the location where the tool was tested and the target age on which it was tested. The researchers also tabulated impressions gleaned from the review in a “comments” column. If the tool was evaluated, the evaluation methodology, target group, sample size, outcome, limitations of the methodology and whether the outcome could be generalised were also recorded.
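The extraction form described above maps naturally onto a typed record. The Python sketch below is one possible layout, assuming our own field names; the `component_counts` helper illustrates how such records could be tallied to answer a question like “which multimedia components are adopted?”. The two example records are entirely hypothetical and do not describe any reviewed study.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Use(Enum):
    """How the tool was applied."""
    TEACHING = "teaching"
    LEARNING = "learning"
    BOTH = "both"


@dataclass
class Evaluation:
    """Evaluation details, recorded only when a study evaluated its tool."""
    methodology: str
    target_group: str
    sample_size: Optional[int]     # None when the study does not report it
    outcome: str
    limitations: str
    generalizable: Optional[bool]  # None when the reviewers could not judge


@dataclass
class ExtractionRecord:
    """One row of the data-extraction table for a reviewed article."""
    tool_type: str
    focus_area: str
    technology: str
    components: List[str]
    use: Use
    location: str
    target_age: str
    comments: str = ""
    evaluation: Optional[Evaluation] = None


def component_counts(records):
    """Tally how often each multimedia component appears across records."""
    return Counter(c for r in records for c in r.components)


# Hypothetical records for illustration only.
records = [
    ExtractionRecord("tool A", "mathematics", "desktop software",
                     ["video", "audio", "text"], Use.LEARNING,
                     "country X", "secondary school"),
    ExtractionRecord("tool B", "biology", "web application",
                     ["text", "image", "animation"], Use.BOTH,
                     "country Y", "university",
                     evaluation=Evaluation("questionnaire", "students", None,
                                           "positive", "self-report", None)),
]
evaluated = [r for r in records if r.evaluation is not None]
print(len(evaluated), "of", len(records), "records evaluated")
print(component_counts(records).most_common())
```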

In the next section, the insights from the reviewed articles are presented, with some of the findings summarised in tables for ease of analysis and synthesis.

4. Results

After the selection procedures outlined in Section 3 were applied, each of the 78 shortlisted articles was subjected to a systematic review that involved extracting the information itemised in Section 3.5. This information was tabulated for further analysis. Not all the articles were empirically based or contained the desired data items. Nineteen articles based on experimental work reported the details of the multimedia tool developed or deployed, and 13 articles detailed the evaluation of multimedia tools in teaching and learning. Barriers to the use of multimedia were also identified. The findings from the systematic review are presented in this section.

The articles reviewed clearly emphasised the importance of multimedia technology for improving the teaching and learning environment. Several studies that investigated the impact of ICT on education stated that multimedia technology has a positive impact on the way teachers impart knowledge and the way learners comprehend subject matter. The review also revealed that many multimedia-based tools exist, most of which are designed around a particular subject, field, age or level at various institutions of learning. In addition, some of the reviewed papers investigated the impact of teaching and/or learning with multimedia-based instructional materials using descriptive, qualitative and quantitative research methods, with different focus groups under both pre-test and post-test conditions.

Nevertheless, despite the positive impact of multimedia tools on teaching and learning activities, they could be counterproductive if the computer-based tools are not properly designed or the instructional materials are not well composed. The review showed that multimedia adoption in education requires an adequate understanding of the technology and of the multimedia types or components required to properly represent concepts or ideas. This implies that a teacher must understand the learners and know which technology or tool to adopt at a given time for a given target group. According to the review, the target groups determine the type of multimedia components employed in preparing instructional materials and the ways they are delivered. To provide context, a review of some of the analysed case studies is presented next.

Huang et al. (2017) explored the use of multimedia-based teaching materials, including three-view diagrams (3D) and tangible 3D materials, to teach a 3D modelling course, with the aim of determining the influence of multimedia technology on students' meta-cognitive behaviour. The authors employed lag sequential analysis and interviews to examine how the patterns of students' meta-cognitive behaviour changed while they solved problem tasks. The evaluation results show that different teaching methods and materials produce different meta-cognitive behaviours in students. The results further revealed that, compared with traditional instructional materials, using tangible 3D objects in cognitive apprenticeship instruction stimulates more meta-cognitive behaviour.

To teach an introductory course on control theory and programming in MATLAB, Karel and Tomas (2015) created a video-based multimedia guide for distance learning students using Camtasia Studio 7, a program that can record the screen, edit video and create DVD menus. Based on student feedback, the tool's impact on students was judged to be positive.
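Lag sequential analysis, the technique Huang et al. (2017) applied to coded behaviour, asks whether one behaviour follows another more often than chance would predict. The Python sketch below implements one common formulation (lag-1 transitions scored with adjusted residuals, where a z-score above 1.96 is usually read as significant at the .05 level). The behaviour codes P, M and E are hypothetical, and we do not know the exact coding scheme or software Huang et al. used.

```python
from collections import Counter
from math import sqrt


def lag1_adjusted_residuals(sequence):
    """Adjusted residuals (z-scores) for lag-1 transitions between codes.

    Expected count e = row_total * col_total / N and
    z = (observed - e) / sqrt(e * (1 - row_total / N) * (1 - col_total / N)),
    where N is the number of transitions. Zero-variance cells are skipped.
    """
    pairs = list(zip(sequence, sequence[1:]))
    n = len(pairs)
    if n == 0:
        return {}
    observed = Counter(pairs)
    row_totals = Counter(a for a, _ in pairs)  # how often each code is followed
    col_totals = Counter(b for _, b in pairs)  # how often each code follows
    codes = sorted(set(sequence))
    residuals = {}
    for a in codes:
        for b in codes:
            r, c = row_totals[a], col_totals[b]
            expected = r * c / n
            var = expected * (1 - r / n) * (1 - c / n)
            if var > 0:
                residuals[(a, b)] = (observed[(a, b)] - expected) / sqrt(var)
    return residuals


# Hypothetical coded sequence: P = planning, M = monitoring, E = evaluating.
sequence = list("PMEPMEPM")
for (a, b), z in sorted(lag1_adjusted_residuals(sequence).items()):
    flag = "significant" if z > 1.96 else ""
    print(f"{a}->{b}: z = {z:+.2f} {flag}")
```

In this toy sequence, planning is always followed by monitoring, so the P to M transition gets a large positive residual, while transitions that never occur receive negative ones.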

Zhang (2012) created an online teaching and learning resource platform with interactive and integrated features. The platform was built with Macromedia Flash 8.0, an easy-to-use multimedia authoring tool. To test students' professional cognition, operational skill cognition and learning satisfaction during the learning phase, a non-equivalent pre-test/post-test control group design was adopted. The evaluation revealed no significant difference between the groups in professional cognition or operational skill cognition. However, there was a significant difference in learning satisfaction: students were more satisfied with the coursework delivered with multimedia Flash than with the traditional learning method.

Web-based multimedia software is another popular educational tool designed to enhance teaching and learning, although a major constraint of web-based learning is its limited ability to provide personalised learning materials. Hwang et al. (2007) presented a web-based tool for creating and sharing annotations and investigated its effect on learning, using college students as a case study, after four months of use. The study concluded that there is value in understanding the use of collaborative learning through shared annotation. The authors also administered the Group Embedded Figures Test (GEFT) to the students and found no significant difference in the quantity of annotation between field-dependent and field-independent students. They further concluded that the tool strongly motivated students to study for their final examinations.

Similarly, Bánsági and Rodgers (2018) developed a graphical web-based educational application for liquid–liquid extraction using ternary phase diagrams. The application allows chemical engineering students at the University of Manchester to draw liquid–liquid two-phase equilibrium curves and calculate the phase separation of mixtures, among other tasks. During testing, student usage figures and opinions were sampled for both full-time taught and distance learning courses. The application, built with HTML5, JavaScript and Cascading Style Sheets (CSS), is interactive and easy to use. To analyse the application further, an iTeach questionnaire assessing the efficiency of the individual pedagogical approach was administered to students. The study revealed that students found the application useful, as it increased their understanding of the course.

To teach students how to compose and continue to deliver text-based information in various media forms for current and emerging technologies, Blevins (2018) had students search for and analyse various multimedia technologies used in new media and reflect on their current and future work through scaffolded, project-based activities. The students were taught Augmented Reality (AR) software in a specific way, on the assumption that this approach would change how they worked on their next AR project. Student evaluations showed that this expectation was met and even exceeded.

Ertugrul (2000) provided an overview of some LabVIEW application software for teaching. The review focused on the user-friendliness and compatibility issues faced by users and recommended criteria for selecting such software. The applications were found very useful and could complement conventional practical teaching, particularly where laboratory facilities are scarce. However, they are not suitable for engineering courses that require intensive hands-on practical work.

Davies and Cormican (2013) identified the fundamental principles to follow when designing multimedia training tools or materials for effective teaching and learning, considering both students' and instructors' perspectives. Experiments were conducted in Ireland using a computer-aided design (CAD) training environment. Data were collected using mixed methods (interviews, surveys and a group discussion), and the findings showed that computer-based material is the most effective and popular way to learn. However, cost, a perceived lack of skill and insufficient support could be hindering factors.

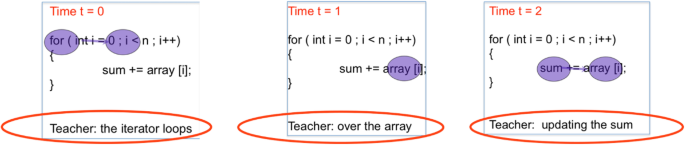

The Department of Computer Science at UiTM Negeri Sembilan developed three applications: Greenfoot, Visualization makes Array Easy (VAE) and e-Tajweed Yaasin. Greenfoot, used as a teaching tool for object-oriented programming (OOP), creates scenarios that make interactions between 2D objects easier to visualize. In Greenfoot, the term "scenario" means a project, and the tool has been used as a teaching aid in an introductory OOP language course. To ensure a standard, quality application, the teaching aid was developed using the System Development Life Cycle (SDLC). The Greenfoot-based scenario greatly improved the visualization of object interaction and produced impressive student engagement during the learning process. It also gave students clear illustrations of object-oriented concepts and enabled them to develop a game-like application from the scenario provided.

Visualization makes Array Easy (VAE), on the other hand, was created using the ADDIE instructional design model, which consists of Analysis, Design, Development, Implementation and Evaluation. The analysis stage identified visualization as a key technique for enhancing students' understanding of programming concepts. Creating a storyboard took about a week in the design stage. MS PowerPoint with iSpring and VideoScribe then served as the principal software for developing the application, with the storyboard as a guide. VAE was instrumental in teaching students difficult programming concepts such as arrays. The results of a simple test with 60 students showed VAE's simulation technique to be effective in helping students learn these concepts. To determine the effectiveness of the VAE prototype, students' learnability, efficiency, memorability, accuracy and satisfaction were examined.

The e-Tajweed Yaasin software, an e-learning application also developed using the ADDIE model, was intended to help students master tajweed and avoid the common mistakes made by previous students of the course. During the analysis stage, visualization and interactive techniques were recognised as helpful in ensuring that students understand tajweed properly and can study with ease. The design stage involved designing the application layout with a focus on easy accessibility, and the user interface imitates the traditional teaching method called syafawiah. The development stage used MS PowerPoint with iSpring features. The combination of audio, video and animation was more effective than text alone in promoting learning. A sample of 51 students was selected to use the system and was later evaluated on their ability to read Surah Yaasin. A great improvement was observed: the number of mistakes decreased for all the rules, as students were better able to recognise and practise the tajweed for Surah Yaasin (Kapi et al., 2017).

Kapi et al. (2017) compared the effectiveness of three multimedia applications for teaching and learning: the Greenfoot tool for programming, Visualization makes Array Easy (VAE) and e-Tajweed Yaasin. The comparison examined the design models used to meet the desired instructional needs. The findings showed improved student performance and learning, and a better understanding of the subjects taught.

Jian-hua & Hong (2012) enumerate the advantages of using multimedia tools to teach Physics, a subject most students consider difficult. They established that effective application of multimedia technology in university physics teaching can change how information is presented by integrating graphs, text, sound and images on a PC. This makes the teaching content more expressive, so that students can actively participate in multimedia activities through multiple senses. High-quality university physics multimedia courseware is the best means of providing a variety of audio-visual images. Such images can vividly show many physical processes and phenomena that are difficult to present by conventional means. In particular, the tool combines the advantages of multimedia courseware for university physics with those of traditional physics teaching, and it greatly improved the results of physics teaching (Jian-hua & Hong, 2012).

Two researchers developed a culturally responsive Visual Art Education module at the secondary level to help teachers integrate multicultural education into their teaching and learning practices. The aim was to enhance students' knowledge and awareness of the elements of art and culture inherited by each race that makes up Malaysia's multiracial society. Microsoft PowerPoint was used as the authoring tool to combine visual art materials, including images and text, into multimedia interactive teaching material for 60 secondary school students. This accelerated the teaching and learning processes and greatly improved the teachers' IT skills (Maaruf and Siraj, 2013).

A developed multimedia tool was implemented over 20 weeks in a pre-test/post-test design with two groups, an experimental group and a control group. The tool, multimedia aided teaching (MAT), combined text, audio, video and animation and was applied to 60 science students under 15 years of age. Valid and reliable questionnaires were used for data collection. The Attitude Towards Science Scale (ATSS) measured the attitude of both groups before and after treatment, and the data were analysed with an independent-samples t-test. The results indicated that MAT is more effective than traditional teaching: students' attitude towards science improved more with MAT than with the traditional method (Shah and Khan, 2015).
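An independent-samples t-test like the one mentioned above compares the mean scores of an experimental group and a control group. The following minimal sketch computes Student's pooled-variance t statistic using only Python's standard library. The function name and the score lists are hypothetical illustrative values, not data from Shah and Khan (2015). Turning the statistic into a p-value also requires the t distribution, which SciPy provides through `scipy.stats.ttest_ind`.

```python
import math
from statistics import mean, variance


def pooled_t_test(group_a: list[float], group_b: list[float]) -> tuple[float, int]:
    """Student's independent two-sample t statistic assuming equal variances.

    Returns (t, degrees_of_freedom). statistics.variance uses the n - 1
    (sample) denominator, which the pooled-variance formula requires.
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two scores")
    # Pool the two sample variances, weighted by their degrees of freedom.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    std_err = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t_stat = (mean(group_a) - mean(group_b)) / std_err
    return t_stat, n_a + n_b - 2


if __name__ == "__main__":
    # Hypothetical post-test attitude scores (not real study data).
    experimental = [4, 5, 5, 6]
    control = [3, 3, 4, 4]
    t_stat, df = pooled_t_test(experimental, control)
    print(f"t = {t_stat:.2f}, df = {df}")  # t = 3.00, df = 6
```

The pooled form assumes the two groups have similar variances. Welch's unequal-variance t-test is a common alternative when that assumption is doubtful.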

The effect of multimedia tools on the social studies performance of 67 grade 4 students in Kayseri, Turkey, was also examined. A teaching tool based on computer representation, with text, audio, video and animation components, was evaluated using a control group and an experimental group. The study concluded that students' academic performance in social studies improved greatly when the multimedia technique was applied, compared with the traditional classroom (Ilhan and Oruc, 2016).

In the research by Akinoso, two samples of 60 Senior Secondary School II students from two different schools in Lagos State, Nigeria, were selected for a pre-test/post-test control group quasi-experimental design. The instrument was a Mathematics Achievement Test (MAT) with twenty-five questions from four topics: logarithms; percentage error; range, variance and standard deviation; and circle theorems. Students in the experimental group, taught with the multimedia tool, performed better than those in the control group. Observations by the researcher and the experimental group's teacher also indicated that students' interest, motivation and participation increased (Akinoso, 2018).

In engineering specifically, laboratory software applications can provide students with practical alternatives suited to their requirements. Ertugrul (2000) reviewed LabVIEW software applications and described laboratory software tools used in engineering. The paper concluded that computer-based technology had advanced to the point where it could aid engineering education at significantly low cost. It also highlighted challenges institutions face in selecting and using such software, such as the need to upgrade it as the curriculum changes, and outlined some future trends.

Zulkifli et al. (2008) demonstrated a self-calibrating automated system for depressed cladding applications using Laboratory Virtual Instrument Engineering Workbench (LabVIEW) software and a General-Purpose Interface Bus (GPIB) interface. The system reduced the overall experiment time by 80% and produced more accurate data than carrying out the experiment manually. Similarly, Teng et al. (2000) presented LabVIEW as a teaching aid for use as a power analyzer, showing that it accelerates development because it links different workbench instruments.

The structured information extracted from the relevant reviewed articles is presented in the following sections. These sections also report the results of the evaluation studies described in the articles and the barriers to multimedia use that the articles identify.

4.1. Multimedia tools, technology, components and applications

The systematic review enabled us to extract the following information from each reviewed article: the type of multimedia tool described, the technology the tool deployed, the multimedia components it used, and whether the tool applied to teaching, learning or both. The results are presented in Table 3.

Table 3

Summary of multimedia tools, technology, components and applications for education.

Various multimedia tools were identified in the research papers reviewed. Perhaps owing to advances in multimedia technology, several applications have been developed and deployed to enhance teaching skills and the learning environment in many fields of study. These include subject-specific tools for teaching and learning Mathematics (Akinoso, 2018), the Chinese language (Wu and Chen, 2018) and Physics (Jian-hua & Hong, 2012), and for teaching Social Studies (Ilhan and Oruc, 2016). All the multimedia tools were developed for teaching except the CENTRA tool (Eady and Lockyer, 2013) and the e-Tajweed Yaasin tool (Kapi et al., 2017). Likewise, all the tools supported learning except the web-based application reported by Bánsági and Rodgers (2018) and the multimedia interactive teaching material (Maaruf and Siraj, 2013).

The tools fell into two categories: standalone or web-based. About one-third (36%) were web-based, while 64% were standalone.

The technologies identified varied widely. They included advanced technologies such as computer representation (Akinoso, 2018; Aloraini, 2012; Ilhan and Oruc, 2016; Milovanovic et al., 2013) and augmented reality (Blevins, 2018). High-level web design and programming software was also used. For instance, Bánsági and Rodgers (2018) and Hwang et al. (2007) used HTML 5, JavaScript and Cascading Style Sheets (CSS), which are commonly used for website programming. Camtasia Studio 7 software was used to develop a video-based multimedia guide for teaching and learning (Karel and Tomas, 2015).

A commonly used web design and animation software, Macromedia Flash, was also identified (Zhang, 2012). Kapi et al. (2017) reported the use of object-oriented programming software in their Greenfoot multimedia tool. Some low-end technologies such as word processing (Eady and Lockyer, 2013) and presentation software (Kapi et al., 2017) were also used. Other technologies reported include e-books (Wu and Chen, 2018), computer-aided design (CAD) (Davies and Cormican, 2013) and YouTube (Shoufan, 2019).

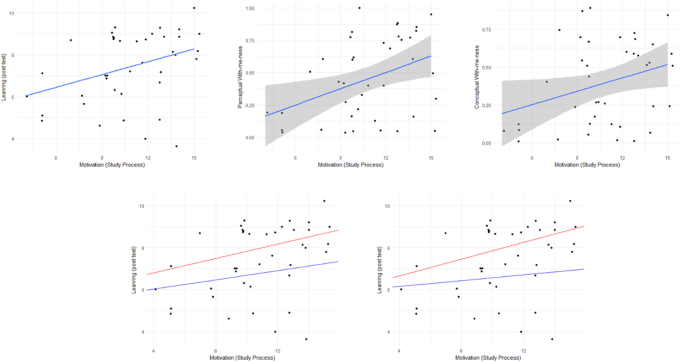

As shown in Table 3, several multimedia components were identified. These included text, audio, video, image, animation, annotation and 3D, and several of the multimedia tools combined two or more components. However, only Huang et al. (2017) reported incorporating 3D. All the analysed papers incorporated text in the multimedia tool reported, except for the CENTRA tool (Eady and Lockyer, 2013). Animation was also embedded in the following tools:

- the multimedia tool developed for visualisation (Kapi et al., 2017)
- the tool for teaching Social Studies (Ilhan and Oruc, 2016)
- an engineering virtual learning tool (Ertugrul, 2000)
- CAD (Davies and Cormican, 2013)
- augmented reality (Blevins, 2018)
- the tool for teaching Mathematics (Akinoso, 2018)

Figure 2 shows the trend in educational technology by year of publication of the reviewed articles. Audio and video became common from 2012, while 3D first appeared in 2017. This suggests that as new ICTs emerge, educators are likely to try them in the quest for the best possible learning experience.

Figure 2

Educational technology trend based on year of publication.

4.2. Multimedia tools test location and target age

This section presents information on where the multimedia tools were tested and on the target ages of the study groups, as summarised in Table 4. The table also includes comments on aspects of the articles that could not be captured under any of the other table headings.

Table 4

Summary of multimedia tools for education study locations.

The multimedia tools were tested in studies from various countries, including Nigeria (Akinoso, 2018), Saudi Arabia (Aloraini, 2012), England (Bánsági and Rodgers, 2018), Ireland (Davies and Cormican, 2013), Australia and Canada (Eady and Lockyer, 2013), Taiwan (Huang et al., 2017), Turkey (Ilhan and Oruc, 2016), the Czech Republic (Karel and Tomas, 2015), Malaysia (Maaruf and Siraj, 2013), Serbia (Milovanovic et al., 2013), Pakistan (Shah and Khan, 2015) and China (Wu and Chen, 2018).

Various age groups were targeted by the multimedia tool tests. A considerable proportion involved university students, with ages starting from 16 or 18 years as specified in the articles (Bánsági and Rodgers, 2018; Huang et al., 2017; Hwang et al., 2007; Jian-hua & Hong, 2012; Kapi et al., 2017; Karel and Tomas, 2015). Secondary school students were another target group (Akinoso, 2018; Maaruf and Siraj, 2013), including vocational school students (Wu and Chen, 2018). Shah and Khan (2015) reported testing their multimedia tool on children below the age of 15 years.

4.3. Evaluation methods of multimedia technology tools in education

The articles involving evaluation were examined to identify the evaluation methodologies used, the target groups and samples, and the evaluation outcomes. The limitations of each evaluation were also identified, including whether the study outcome could be generalized. Thirteen articles were found, and the results are presented in Table 5.

Table 5

Summary of evaluation methods of multimedia technology tools in education.

Evaluating multimedia technology used for teaching and learning is important for establishing the efficacy of the tool. To determine the impact of a developed tool, an experimental evaluation is more meaningful than a survey. However, the analysis showed that the survey method was used nearly as often as the experimental design.

Akinoso (2018), Aloraini (2012), Ilhan and Oruc (2016) and Shah and Khan (2015) conducted experimental evaluations to determine the effectiveness of the multimedia tools they developed. Another group of experimental evaluations compared teaching with and without multimedia aids that the research team had not necessarily developed. These studies exposed 10–11-year-olds (Dalacosta et al., 2009) and elementary school students (Kaptan and İzgi, 2014) to animated cartoons. In another such evaluation, Milovanovic et al. (2013) used an experimental group and a control group to evaluate the impact of teaching university students with multimedia.

In contrast, the survey method was used to elicit respondents' opinions on the impact of multimedia in teaching and learning. The target groups were university students (Al-Hariri and Al-Hattami, 2017; Barzegar et al., 2012) and secondary school students (Akinoso, 2018; Maaruf and Siraj, 2013). One study involved interviewing professors (Chen and Xia, 2012), another involved 4–10-year-olds (Manca and Ranieri, 2016), and another used a sample of 272 students whose ages were not specified (Ocepek et al., 2013).

The focus areas of the evaluations ranged from the sciences, including mathematics (Akinoso, 2018; Al-Hariri and Al-Hattami, 2017; Dalacosta et al., 2009; Kaptan and İzgi, 2014; Milovanovic et al., 2013), to the social sciences (Ilhan and Oruc, 2016) and the arts (Maaruf and Siraj, 2013). Some evaluations also focused on education as a subject (Aloraini, 2012; Chen and Xia, 2012; Maaruf and Siraj, 2013; Manca and Ranieri, 2016). While positive outcomes were generally reported, Ocepek et al. (2013) reported that students in their evaluation study preferred structured texts with colour discrimination.

Sample sizes varied widely. At one end, Maaruf and Siraj (2013) based their conclusions on an in-depth interview of teachers. At the other, Manca and Ranieri (2016) surveyed a sample of 6,139 academic staff, although they reported a low response rate of 10.5%. One notable weakness was that the findings of all but one of the studies could not be generalized. Reasons included inadequate sample size, exposure limited to a single lesson, and sampling methods or experiment durations that were not explicitly stated.

4.4. Identified barriers to multimedia use in teaching and learning

The review revealed some challenges that could be barriers to the use of multimedia tools in teaching and learning. Some of these barriers, as found in the reviewed articles, are highlighted as follows:

- Attitudes and beliefs towards the use of technology in education. Findings from the literature and surveys have shown strong resistance to change and negative attitudes towards the adoption and use of ICT in education (Cuban et al., 2001; Said et al., 2009; Snoeyink and Ertmer, 2001). In some studies, respondents perceived no benefits (Mumtaz, 2000; Snoeyink and Ertmer, 2001; Yuen and Ma, 2002).

- Lack of teachers' confidence in the use of technology, and resistance to change (Bosley and Moon, 2003; Fabry and Higgs, 1997; Said et al., 2009).

- Lack of basic knowledge and ICT skills for adopting and using multimedia tools (Akbaba-Altun, 2006; Bingimlas, 2009; Cagiltay et al., 2001).

- Lack of access to computing resources such as hardware and software (Akbaba-Altun, 2006; Bosley and Moon, 2003; Cinar, 2002; Mumtaz, 2000; Taylor and Todd, 1995).

- Lack of technical, administrative and financial support (Akbaba-Altun, 2006; Cinar, 2002; Said et al., 2009; Goktas et al., 2013).

- Others include a lack of instructional content, basic knowledge and skills, a suitable physical environment, and time to learn new technologies (Akbaba-Altun, 2006; Cinar, 2002; Said et al., 2009).

5. Discussion

This section discusses the findings from the systematic review with a view to answering the research questions posed. The questions concerned:

- the existing multimedia tools for teaching and learning, and the multimedia components adopted in them
- the type of audience best suited to a given multimedia component
- the methods used to evaluate multimedia in teaching and learning
- the success or failure factors to consider

5.1. Multimedia tools in teaching and learning

The review revealed that multimedia tools have been developed to enhance teaching and learning for various fields of study. The review also shows that multimedia tools are delivered using different technologies and multimedia components, and can be broadly categorized as web-based or standalone.

The review found nearly twice as many standalone multimedia tools (64%) as web-based ones (36%). Standalone tools are teaching and learning aids that are not delivered or used over the internet. Instead, they are authored to be installed, copied, loaded and used on teachers' or students' personal computers (PCs) or workstations. They are especially useful for teaching and practising new concepts such as 3D modelling and printing (Huang et al., 2017) or understanding augmented reality (AR) software (Blevins, 2018). Microsoft PowerPoint, a presentation tool used in some of the reviewed articles, is usually run on standalone systems.

Standalone tools were probably favoured over web-based tools because they do not require internet access, which makes them deployable in almost any setting. Teachers and students in digitally excluded suburban and rural areas can therefore benefit from such tools. Such a system is considered most useful because a majority of the populace in most developing countries is socially and educationally excluded owing to a lack of the necessary resources for teaching and learning. Running an online learning environment sustainably may be difficult in these settings, so standalone tools provide a better fit. However, standalone applications are platform-dependent; for instance, a Windows-based application can only run on a Windows platform. Updates are also slow to propagate when the curriculum or modules change. Each system runs offline, so it must be updated manually or completely replaced at every location where the tool is deployed.

Web-based multimedia tools, the other category, are authored using web authoring tools and delivered online for teaching and learning. About one-third of the tools identified were web-based, and they were used largely in university teaching and learning. Examples include an online teaching and learning resource platform (Zhang, 2012), a graphical web-based application (Bánsági and Rodgers, 2018), a multimedia tool for teaching optimization (Jian-hua & Hong, 2012) and educational videos on YouTube (Shoufan, 2019).

A key benefit of web-based multimedia solutions is that they are online and centralized, which makes them easier to update and deploy than standalone systems. Teachers and learners need only an installed web browser and an internet connection. Web-based multimedia applications are also platform-independent: they need no special operating system, and learners can open the same application in a web browser whatever operating system they use. However, when many people access the resource at the same time, congestion, packet loss and retransmission can occur, as often happens when large classes take online examinations simultaneously. In addition, applications that combine video, audio and text typically have much higher data requirements than those built with only pictures and text. Hence, web-based systems can only be run sustainably with stable, high-speed internet access.

A major weakness of web-based multimedia tools is the challenge they pose for communities with low internet penetration and the cost of bandwidth for low-income groups. As internet access becomes more widely available, the advantages of deploying web-based multimedia solutions are expected to far outweigh the disadvantages, and more such tools are likely to be web-based.

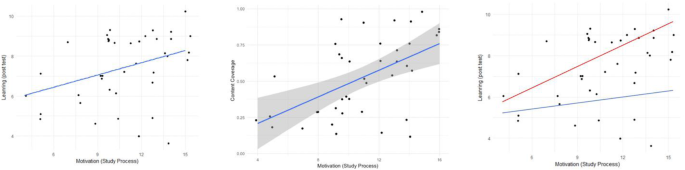

5.2. Components, technology and applications of multimedia tools in education

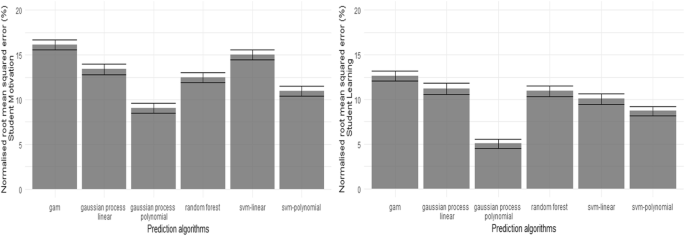

The results from the review revealed that most existing multimedia tools in education consist of components such as text, symbols, images, audio, video and animation. These components are combined using technologies such as the following:

- 3D (Huang et al., 2017)
- Camtasia Studio 7 software (Karel and Tomas, 2015)
- Macromedia Flash (Zhang, 2012)
- HTML5, JavaScript and CSS (Bánsági and Rodgers, 2018; Eady and Lockyer, 2013; Chen and Liu, 2008; Shah and Khan, 2015; Shoufan, 2019)

As shown in Figure 3, text (26.8%) is the predominant component in most of the educational materials. Other components are used moderately: video (19.5%), audio (18.3%), images (18.3%) and animation (11.0%). Annotation and 3D are the least incorporated.

Figure 3

Proportion of multimedia components in reviewed articles.

How these components are combined is shown in Figure 4. Perhaps the combination of the four major components (text, video, audio and image) provides the best outcome for learners, and this underscores the place of text as the most desired multimedia component. The components used also reflect the subject matter being addressed. For instance, audio is important for language classes, while video and images are stimulating in Biology classes because learners rely on visual perception. The choice of component combination can therefore have variable impacts on learners. Hence, the studies suggest that most tools are applied as teaching aids, learning aids or both, depending on the nature of the audience and the teacher.

Figure 4

Use of various multimedia combinations.

Figure 4 presents our analysis of the component combinations in the reviewed data set. The number of components combined ranges from two to six, and the tools were grouped by the combination each one employed. For example:

- Group 1 (G1) represents the number of multimedia applications combining text, image, audio, video and 3D.
- G2 consists of video and audio.
- G13 combines all the multimedia components except 3D.

Furthermore, most of the multimedia applications combined either four or two components in their design, as shown in Figure 5. Very few tools used five or six components, and, as the figure reveals, every tool used at least two.

Figure 5

Multimedia tools and the number of components utilized.
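Summaries like those in Figures 3 to 5 can all be derived from a single mapping of each reviewed tool to the set of components it uses. These summaries are the share of each component, the tools grouped by component combination, and the number of components per tool. The following minimal sketch shows one way to do this tally. The tool names and component sets are hypothetical placeholders, not the review's data. The counting rule is also an assumption about how the proportions were computed: each tool contributes one count per component it uses.

```python
from collections import Counter

# Hypothetical data: each tool mapped to the multimedia components it uses.
TOOLS = {
    "tool_1": {"text", "image", "audio", "video"},
    "tool_2": {"audio", "video"},
    "tool_3": {"text", "image", "audio", "video"},
    "tool_4": {"text", "animation"},
    "tool_5": {"text", "image", "audio", "video", "animation"},
}


def component_shares(tools):
    """Percentage of all component occurrences contributed by each component."""
    counts = Counter(c for components in tools.values() for c in components)
    total = sum(counts.values())
    return {c: 100 * n / total for c, n in counts.items()}


def combination_groups(tools):
    """Number of tools that use each distinct combination of components."""
    return Counter(frozenset(components) for components in tools.values())


def components_per_tool(tools):
    """Number of tools that use 2, 3, 4, ... components."""
    return Counter(len(components) for components in tools.values())


if __name__ == "__main__":
    for component, share in sorted(component_shares(TOOLS).items(), key=lambda kv: -kv[1]):
        print(f"{component}: {share:.1f}%")
    for combo, n in combination_groups(TOOLS).most_common():
        print(sorted(combo), n)
    print(dict(sorted(components_per_tool(TOOLS).items())))  # {2: 2, 4: 2, 5: 1}
```

Using `frozenset` as the grouping key means that two tools with the same components fall into the same group, whatever order the components were recorded in.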

These findings stress that the application of multimedia tools in education, and the components incorporated, are specific to the audience, subject, curriculum and teacher. Each tool therefore needs to be well articulated and structured to achieve its goals.

5.3. Targeted multimedia solutions

Our systematic review also revealed that most multimedia solutions deployed for teaching and learning are tailored to the pedagogical content of the subject of interest (see Table 4) and to the user audience of the solution (Table 5). Several studies highlighted in Tables 4 and 5 showcase multimedia tools used in the following subjects:

- mathematics classes (Akinoso, 2018; Milovanovic et al., 2013)
- social science (Ilhan and Oruc, 2016)
- physiology (Al-Hariri and Al-Hattami, 2017)
- physics (Jian-hua & Hong, 2012)
- chemical engineering (Bánsági and Rodgers, 2018)
- the teaching of the Chinese language (Wu and Chen, 2018)

Multimedia tools were also used to teach specific principles, such as control theory (Karel and Tomas, 2015) and arrays (Kapi et al., 2017). That multimedia solutions are subject-based is not surprising, given that multimedia involves relaying information through different forms of communication. It follows that developers of multimedia solutions need to incorporate text, video, audio, still photographs, sound, animation, images and interactive content in the manner that best conveys the desired content for teaching or best aids learning.

As stated earlier, the review revealed a variety of user types for the multimedia solutions reported. Notably, in a large proportion of the studies whose target audience was university students, a mixture of graphics, text, audio, video and sometimes animation was used (Aloraini, 2012; Blevins, 2018; Huang et al., 2017; Shah and Khan, 2015). A sizeable number of solutions targeted secondary school students (such as Maaruf and Siraj, 2013; Kapi et al., 2017; and Ilhan and Oruc, 2016). In contrast, very few studies targeted students younger than 15 years. Shah and Khan (2015) targeted this group with a multimedia teaching aid that incorporated text, audio, video and animation. Perhaps the scarcity of multimedia tools targeted at very young children results from the inclusion criteria used to identify articles for the review.

5.4. Success factors

The success of the different multimedia tools used on the various target groups and subjects can be attributed to the technologies and components embedded in them, as shown in Tables 4 and 5. In most cases where text, audio, video, graphics and animation were the components of choice, the studies reviewed reported significant improvements in teaching and learning (Blevins, 2018; Huang et al., 2017; Zhang, 2012).

These studies implemented augmented reality (AR) software, 3D modelling and printing, and Macromedia Flash version 8.0, respectively. Notably, all of these tools were applicable in both teaching and learning. Another set of tools used text, audio, video and animation (excluding graphics), was likewise applied in both teaching and learning, and adopted computer representation as its technology (Aloraini, 2012; Ilhan and Oruc, 2016; Milovanovic et al., 2013). Teaching and learning also improved greatly in these cases.

5.5. Evaluation methodologies

Our systematic review included a synthesis of the methodologies that the reviewed articles describe for evaluating their multimedia tools, as summarised in Table 5. The evaluation methodologies appeared to vary with the type of multimedia tool, technology components, deployment strategies, application area and target groups. However, two main evaluation methods were identified: experimental investigation and survey.

The experimental approach used an experimental group and a control group: the performance of students in the experimental group, who were taught with the multimedia tool, was compared with that of the control group, who were taught the same content without it. This approach is widely practised and has proven effective. It was used by Aloraini (2012), Milovanovic et al. (2013), Kaptan and İzgi (2014), Shah and Khan (2015), Ilhan and Oruc (2016) and Akinoso (2018) in education, social sciences, general science, science, education and mathematics classes, respectively.
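The comparison at the heart of the experimental approach can be sketched in a few lines. The reviewed articles are not summarised here in terms of the statistical test each one applied, so this sketch assumes Welch's t statistic (which does not require equal group variances) and Cohen's d with a pooled standard deviation as one common way to quantify the difference. The function name `compare_groups` and the scores are hypothetical and are not data from any reviewed study.

```python
from math import sqrt
from statistics import mean, variance


def compare_groups(experimental, control):
    """Compare post-test scores of an experimental group (taught with the
    multimedia tool) with a control group (taught the same content without it).

    Returns the mean difference, Welch's t statistic and Cohen's d.
    Hypothetical helper for illustration only.
    """
    n1, n2 = len(experimental), len(control)
    m1, m2 = mean(experimental), mean(control)
    v1, v2 = variance(experimental), variance(control)  # sample variances (n - 1)

    diff = m1 - m2
    # Welch's t: standard error built from each group's own variance
    t = diff / sqrt(v1 / n1 + v2 / n2)
    # Pooled standard deviation gives a standardised effect size
    pooled_sd = sqrt(((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2))
    d = diff / pooled_sd
    return diff, t, d


# Hypothetical post-test scores out of 20 (illustrative, not from any study)
experimental_scores = [15, 17, 14, 18, 16, 15, 17]
control_scores = [12, 14, 13, 11, 15, 12, 14]

diff, t, d = compare_groups(experimental_scores, control_scores)
print(f"mean difference = {diff:.2f}, Welch t = {t:.2f}, Cohen's d = {d:.2f}")
```

With these illustrative scores the experimental group averages 3 points higher; a p-value would additionally require the t distribution with Welch–Satterthwaite degrees of freedom, which is omitted here to keep the sketch dependency-free.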

The survey approach, used in 46% of the studies reviewed, relied on questionnaires administered to a targeted group of respondents to gather their opinions on the perceived impact of the multimedia tool. The review also found that the mode of questionnaire administration varied: data were collected either through face-to-face interviews (Al-Hariri and Al-Hattami, 2017; Barzegar et al., 2012; Chen and Xia, 2012) or through online surveys (Armenteros et al., 2013; Wang et al., 2020).

Determining impact from a survey is difficult because of weaknesses in instrument design and sampling bias. In our view, a survey cannot ascertain the impact of the technology components used to develop a multimedia tool as accurately as the experimental approach, in which the tool is actually deployed and tested. Moreover, survey judgments rest solely on respondents' perceptions. On the other hand, the simplicity of the survey method makes it a good option for evaluating larger target groups, and its findings can be generalised when the sample is large enough to satisfy the required statistical conditions (Krejcie and Morgan, 1970).
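Krejcie and Morgan (1970) derive their sample-size table from the formula s = χ²NP(1 − P) / (d²(N − 1) + χ²P(1 − P)), where N is the population size, χ² is the chi-square value for one degree of freedom at the desired confidence level (3.841 for 95%), P is the assumed population proportion and d is the degree of accuracy. The sketch below evaluates that formula, assuming the defaults behind their published table (95% confidence, P = 0.5, d = 0.05); the function name is hypothetical.

```python
import math


def krejcie_morgan_sample_size(population, chi_sq=3.841, p=0.5, d=0.05):
    """Required sample size s for a finite population N (Krejcie and Morgan, 1970).

    s = chi_sq * N * p * (1 - p) / (d**2 * (N - 1) + chi_sq * p * (1 - p))

    chi_sq: chi-square value for 1 degree of freedom (3.841 at 95% confidence)
    p:      assumed population proportion (0.5 yields the largest sample)
    d:      degree of accuracy (margin of error) expressed as a proportion
    Hypothetical helper name; the formula itself is from the 1970 paper.
    """
    numerator = chi_sq * population * p * (1 - p)
    denominator = d ** 2 * (population - 1) + chi_sq * p * (1 - p)
    # Round up so the sample is never smaller than the formula requires
    return math.ceil(numerator / denominator)


for n in (100, 1000, 10000):
    print(n, krejcie_morgan_sample_size(n))
```

For populations of 100, 1,000 and 10,000 this gives samples of 80, 278 and 370, matching the corresponding entries in Krejcie and Morgan's table. Note how slowly the required sample grows with the population, which is why surveys scale well to larger target groups.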

Although some of the evaluation studies analysed were published as recently as 2015 to 2018, none reported collecting objective data, such as eye-tracking or other behavioural data. This may be because our search terms were not broad enough to identify multimedia evaluation studies that gathered objective data. It could also be that the cost, time and effort needed to collect objective data deter many evaluation studies from taking this route.

5.6. Barriers to multimedia use in teaching and learning

The review revealed several barriers to multimedia use in teaching and learning. These include resistance to the adoption of ICT, teachers' lack of confidence in using technology, teachers' resistance to change, a lack of ICT skills and a lack of access to ICT resources. Other barriers identified were a lack of support, a lack of time to learn new technologies, a lack of instructional content, and the physical environment in which multimedia delivery took place. Some studies reported respondents who perceived no benefit from the use of multimedia. These barriers affect both the integration of multimedia into teaching and learning and the uptake of multimedia tools.

Most of the barriers identified could be classified into three groups, the major one being fear of, or resistance to, change. Change management must therefore be an integral part of developing and deploying multimedia tools if the desired goal is to be achieved. Barriers such as lack of time and lack of resources should also not be underestimated. Some of the studies reported providing the hardware needed to run the multimedia application, an approach worth considering. Because most multimedia tools are ICT-driven, the lack of ICT skills is an important barrier that must be addressed. This can be done as part of the change process and would also boost teachers' confidence in incorporating multimedia into their teaching.

It is important that a multimedia tool is designed and developed with the end goal in mind. As noted above, some recipients of multimedia applications saw no benefit in their use. The multimedia tool should therefore be designed to provide an experience that is worth teachers' and students' time, attention and effort.

6. Conclusions and future research direction

A substantial body of work has highlighted the effectiveness of multimedia as a teaching and learning aid. This paper has presented a systematic review of the extant literature on the use of multimedia in education, in order to identify the multimedia tools commonly used to aid teaching and learning, the extent to which multimedia has succeeded in improving both teaching and learning, and the challenges of using multimedia for teaching and learning.

We note, however, that our review, especially of the studies on the evaluation of multimedia, focused more on the outcomes of the studies than on the process. Information not captured includes how the classroom teacher's mastery of the technology influences the attractiveness of the tool to the learner, both visually and through its content, and whether the multimedia tool allowed for learner participation. Also, although studies on multimedia evaluation were of interest to us, this phrase was not among the search phrases used. A future review could incorporate these aspects for a richer perspective.

The review shows that researchers have explored a range of multimedia components to develop web-based or standalone teaching and learning tools using different technologies. Many multimedia tools exist in education, and their proliferation can be attributed to the evolution of technology over the years and to teachers' continuous efforts to improve knowledge delivery for particular subject areas and target audiences. The review also shows that most multimedia solutions deployed for teaching and learning are tailored to the pedagogical content of the subject of interest and to the intended users of the solution. The success of the different multimedia tools used with the various target groups and subjects can likewise be attributed to the technologies and components embedded in them.

Furthermore, the evaluation methodologies and learning outcomes of multimedia tool deployments varied with the type of multimedia tool, technology components, deployment strategy, application area and target group. The two main evaluation methodologies identified in the reviewed articles were experimental investigation and survey.

The barriers identified in the reviewed articles include attitudes and beliefs towards the use of technology in education, teachers' lack of confidence and resistance to change, a lack of basic knowledge and ICT skills, a lack of technical, administrative and financial support, and an inadequate physical environment. These barriers affect the integration of multimedia into education.

For future work, efforts should be made to explore mobile technology with multiple multimedia components in order to enhance teaching and learning for a diverse range of learners in primary, secondary, vocational and higher institutions of learning. Such research could contribute significantly to increasing inclusiveness and narrowing the educational divide. Research into the change management process for overcoming the barriers to multimedia adoption would also be of interest.

Declarations

Author contribution statement

All authors listed have significantly contributed to the development and the writing of this article.

Funding statement

This work was supported by Tertiary Education Trust Fund (TetFund), Ministry of Education, Federal Government of Nigeria 2016–2017 Institutional Based Research Grant.

Competing interest statement

The authors declare no conflict of interest.

Additional information

No additional information is available for this paper.

- Agulla E.G., Rúa E.A., Castro J.L.A., Jiménez D.G., Rifón L.A. 2009 11th IEEE International Symposium on Multimedia. 2009. Multimodal biometrics-based student attendance measurement in learning management systems; pp. 699–704.

- Akbaba-Altun S. Complexity of integrating computer technologies into education in Turkey. Educ. Technol. Soc. 2006;9(1):176–187.

- Akinoso O. Effect of the use of multimedia on students' performance in secondary school mathematics. Global Media J. 2018;16(30):1–8.

- Al-Ajmi N.A.H., Aljazzaf Z.M. Factors influencing the use of multimedia technologies in teaching English language in Kuwait. Int. J. Emerg. Technol. Learn. 2020;15(5):212–234.

- Alemdag E., Cagiltay K. A systematic review of eye tracking research on multimedia learning. Comput. Educ. 2018;125:413–428.

- Al-Hariri M.T., Al-Hattami A.A. Impact of students' use of technology on their learning achievements in physiology courses at the University of Dammam. J. Taibah Univ. Med. Sci. 2017;12(1):82–85.

- Almara'beh H., Amer E.F., Sulieman A. The effectiveness of multimedia learning tools in education. Int. J. Adv. Res. Comput. Sci. Software Eng. 2015;5(12):761–764.

- Aloraini S. The impact of using multimedia on students’ academic achievement in the College of Education at King Saud University. J. King Saud Univ. Lang. Transl. 2012;24:75–82.

- Anderson R.E. IEA Computers in Education Study, Department of Sociology, University of Minnesota. 1993. Computers in American schools 1992: an overview: a national report from the international IEA computers in education study.

- Armenteros M., Liaw S.S., Fernández M., Díaz R.F., Sánchez R.A. Surveying FIFA instructors' behavioral intention toward the multimedia teaching materials. Comput. Educ. 2013;61:91–104.

- Bánsági T., Jr., Rodgers T.L. Graphic web–apps for teaching ternary diagrams and liquid–liquid extraction. Educ. Chem. Eng. 2018;22:27–34.

- Barzegar N., Farjad S., Hosseini N. The effect of teaching model based on multimedia and network on the student learning (case study: guidance schools in Iran). Procedia Soc. Behav. Sci. 2012;47:1263–1267.

- Bingimlas K. Barriers to the successful integration of ICT in teaching and learning environments: a review of the literature. Eurasia J. Math. Sci. Technol. Educ. 2009;5(3):235–245.

- Blevins B. Teaching digital literacy composing concepts: focusing on the layers of augmented reality in an era of changing technology. Comput. Compos. 2018;50:21–38.

- Bosley C., Moon S. Centre for Guidance Studies, University of Derby; 2003. Review of Existing Literature on the Use of Information and Communication Technology within an Educational Context.

- Cagiltay K., Cakiroglu J., Cagiltay N., Cakiroglu E. Teachers’ perspectives about the use of computer in education. H. U. J. Educ. 2001;21(1):19–28.

- Chen H.Y., Liu K.Y. Web-based synchronized multimedia lecture system design for teaching/learning Chinese as second language. Comput. Educ. 2008;50(3):693–702.

- Chen S., Xia Y. Research on application of multimedia technology in college physical education. Procedia Eng. 2012;29:4213–4217.

- Cinar A. METU; Ankara, Turkey: 2002. Teachers’ Computer Use at Basic Education Schools: Identifying Contributing Factors. Unpublished master’s thesis.

- Coleman L.O., Gibson P., Cotten S.R., Howell-Moroney M., Stringer K. Integrating computing across the curriculum: the impact of internal barriers and training intensity on computer integration in the elementary school classroom. J. Educ. Comput. Res. 2016;54(2):275–294.

- Cuban L., Kirkpatrick H., Peck C. High access and low use of technology in high school classrooms: explaining an apparent paradox. Am. Educ. Res. J. 2001;38(4):813–834.

- Dalacosta K., Kamariotaki-Paparrigopoulou M., Palyvos J.A., Spyrellis N. Multimedia application with animated cartoons for teaching science in elementary education. Comput. Educ. 2009;52(4):741–748.

- Davies W., Cormican K. An analysis of the use of multimedia technology in computer aided design training: towards effective design goals. Procedia Technol. 2013;9:200–208.

- Eady M.J., Lockyer L. Queensland University of Technology; Australia: 2013. “Tools for Learning: Technology and Teaching Strategies,” Learning to Teach in the Primary School; p. 71.

- Ertugrul N. Towards virtual laboratories: a survey of LabVIEW-based teaching/learning tools and future trends. Int. J. Eng. Educ. 2000;16(3):171–180.

- Fabry D., Higgs J. Barriers to the effective use of technology in education. J. Educ. Comput. 1997;17(4):385–395.

- Goktas Y., Gedik N., Baydas O. Enablers and barriers to the use of ICT in primary schools in Turkey: a comparative study of 2005–2011. Comput. Educ. 2013;68:211–222.

- Guan N., Song J., Li D. On the advantages of computer multimedia-aided English teaching. Procedia Comput. Sci. 2018;131:727–732.

- Horsley M., Eliot M., Knight B.A., Reilly R. Springer; Cham, Switzerland: 2014. Current Trends in Eye Tracking Research.

- Huang T.C., Chen M.Y., Lin C.Y. Exploring the behavioral patterns transformation of learners in different 3D modeling teaching strategies. Comput. Hum. Behav. 2017;92:670–678.

- Hwang W.Y., Wang C.Y., Sharples M. A study of multimedia annotation of Web-based materials. Comput. Educ. 2007;48(4):680–699.

- Ilhan G.O., Oruc S. Effect of the use of multimedia on students' performance: a case study of social studies class. Educ. Res. Rev. 2016;11(8):877–882.